A Deep Learning Framework for Intelligent Fault Diagnosis Using AutoML-CNN and Image-like Data Fusion

Abstract

1. Introduction

2. Related Works

2.1. IFD with Traditional Machine Learning

2.2. IFD with Deep Learning

- (1) Volume: the volume of collected data grows continuously during long-term operation and maintenance (O&M).

- (2) Quality: a portion of poor-quality data is mixed into the massive data.

- (3) Variety: data are collected from multiple sources (different sensors) and have heterogeneous structures.

- (4) Velocity: fast transmission can be enabled in situ via fieldbus cables or remotely via high-speed communication such as 5G, which promises near-real-time response and decision-making for digital twins (DTs).

2.2.1. DL with 1D Time Series

2.2.2. DL with 2D Synthetic Images

2.3. IFD with Data Fusion

3. Proposed IFD via AutoML-CNN and Image-like Fusion

3.1. Problem Statement

3.2. Pseudo-Image Reconstruction and Data Fusion

3.3. Automated Machine Learning

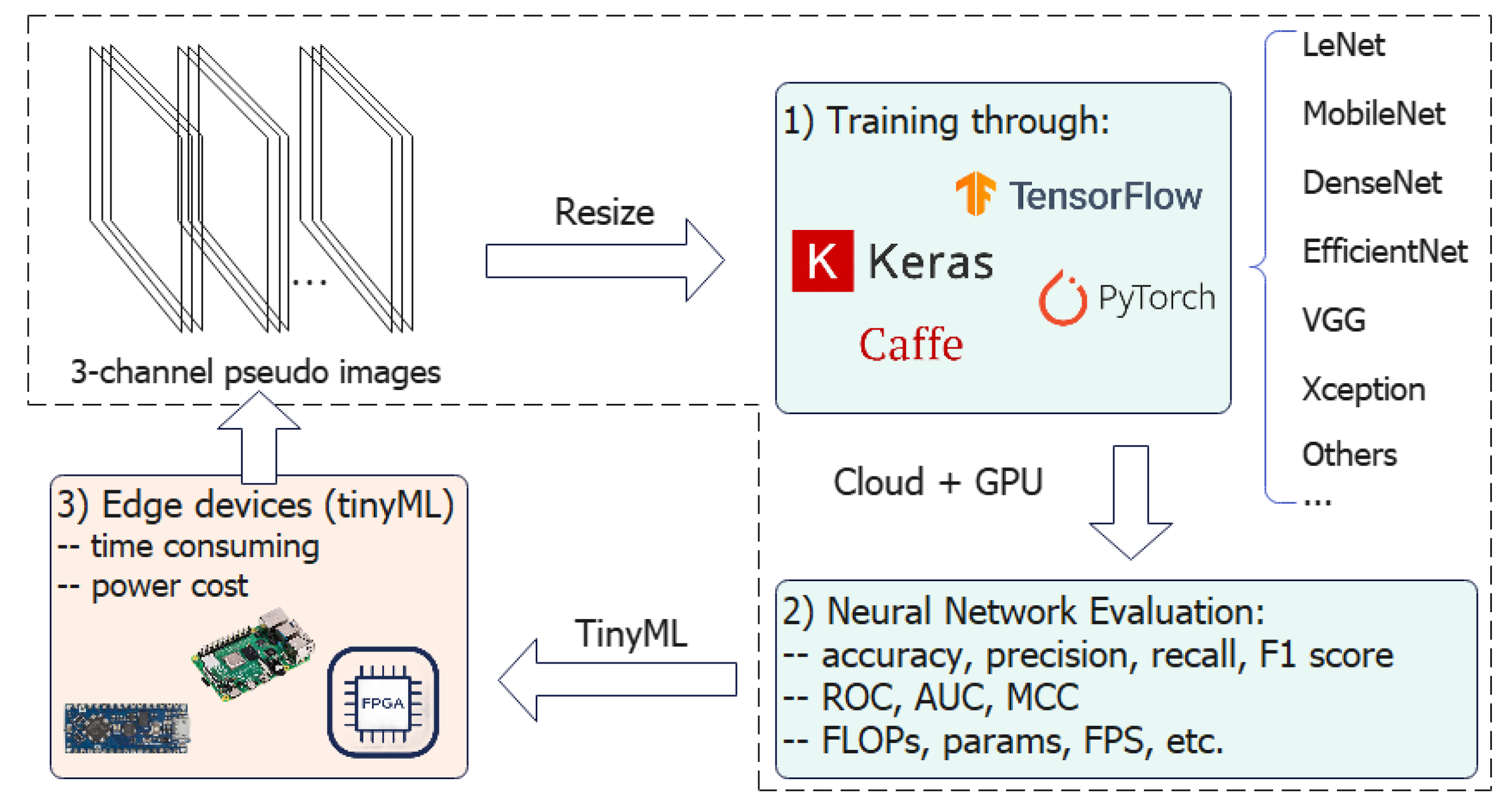
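Automated machine learning typically includes hyperparameter search under a limited training budget. As a hedged illustration of one budget-aware strategy, the sketch below implements successive halving, the inner routine of Hyperband (Li et al., 2018). It is not the authors' AutoML pipeline, and full Hyperband additionally runs several successive-halving brackets with different numbers of configurations. The `evaluate(config, budget)` callback (where the budget might stand for training epochs) and the toy learning-rate objective are hypothetical.

```python
import math


def successive_halving(configs, evaluate, min_budget=1, eta=3):
    """Return the best configuration found by successive halving.

    evaluate(config, budget) must return a loss (lower is better); budget could
    be, e.g., the number of training epochs. Each round keeps the best
    1/eta of the survivors and multiplies the budget by eta."""
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        # Pair each loss with the survivor's index so ties never compare configs.
        ranked = sorted((evaluate(c, budget), i) for i, c in enumerate(survivors))
        n_keep = max(1, len(survivors) // eta)
        survivors = [survivors[i] for _, i in ranked[:n_keep]]
        budget *= eta
    return survivors[0]


# Hypothetical toy objective: best at lr = 1e-3; a larger budget lowers the loss.
def toy_loss(cfg, budget):
    return (math.log10(cfg["lr"]) + 3) ** 2 + 1.0 / budget


configs = [{"lr": 10.0 ** -k} for k in range(1, 7)]
best = successive_halving(configs, toy_loss)  # the lr = 1e-3 configuration
```

Because weak configurations are discarded after cheap, short evaluations, most of the total budget is spent on the few promising candidates.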

3.4. Proposed Framework and Workflow

4. Framework Validation

4.1. Experiment Preparation

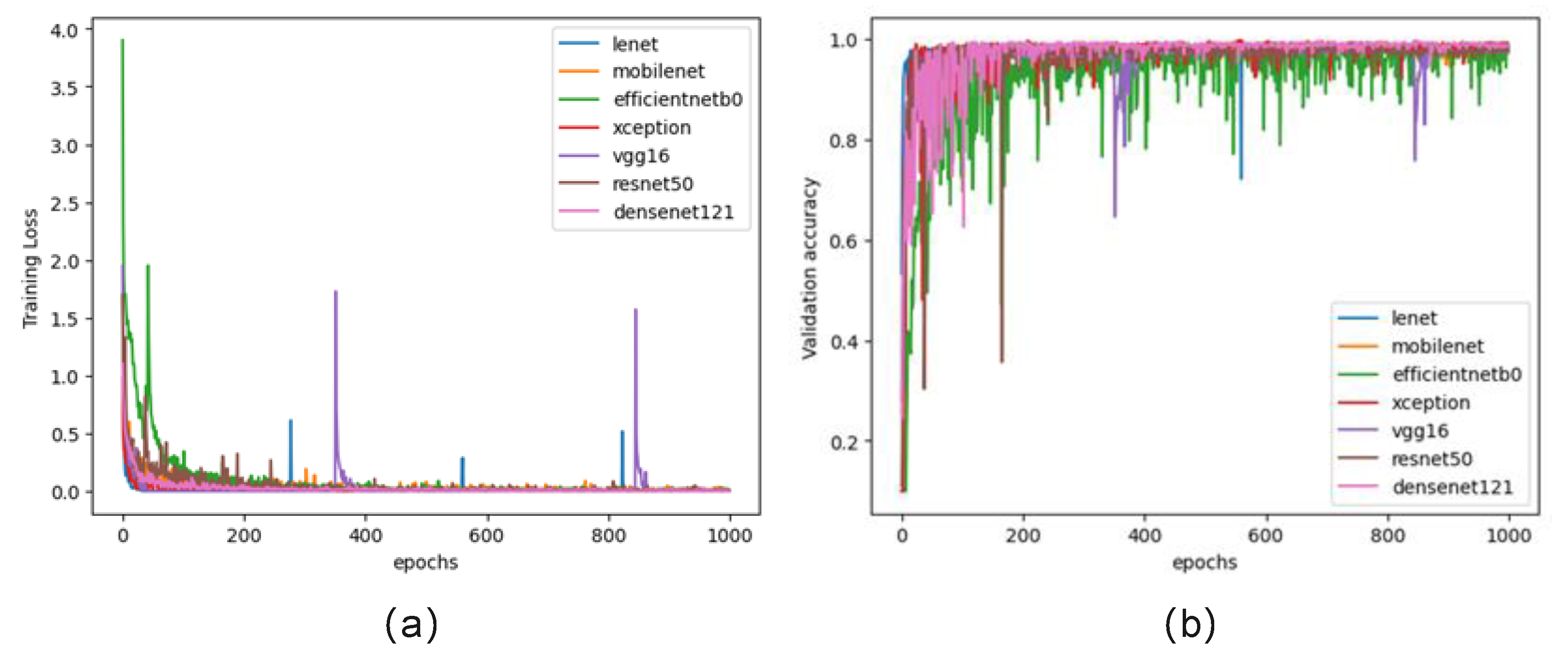

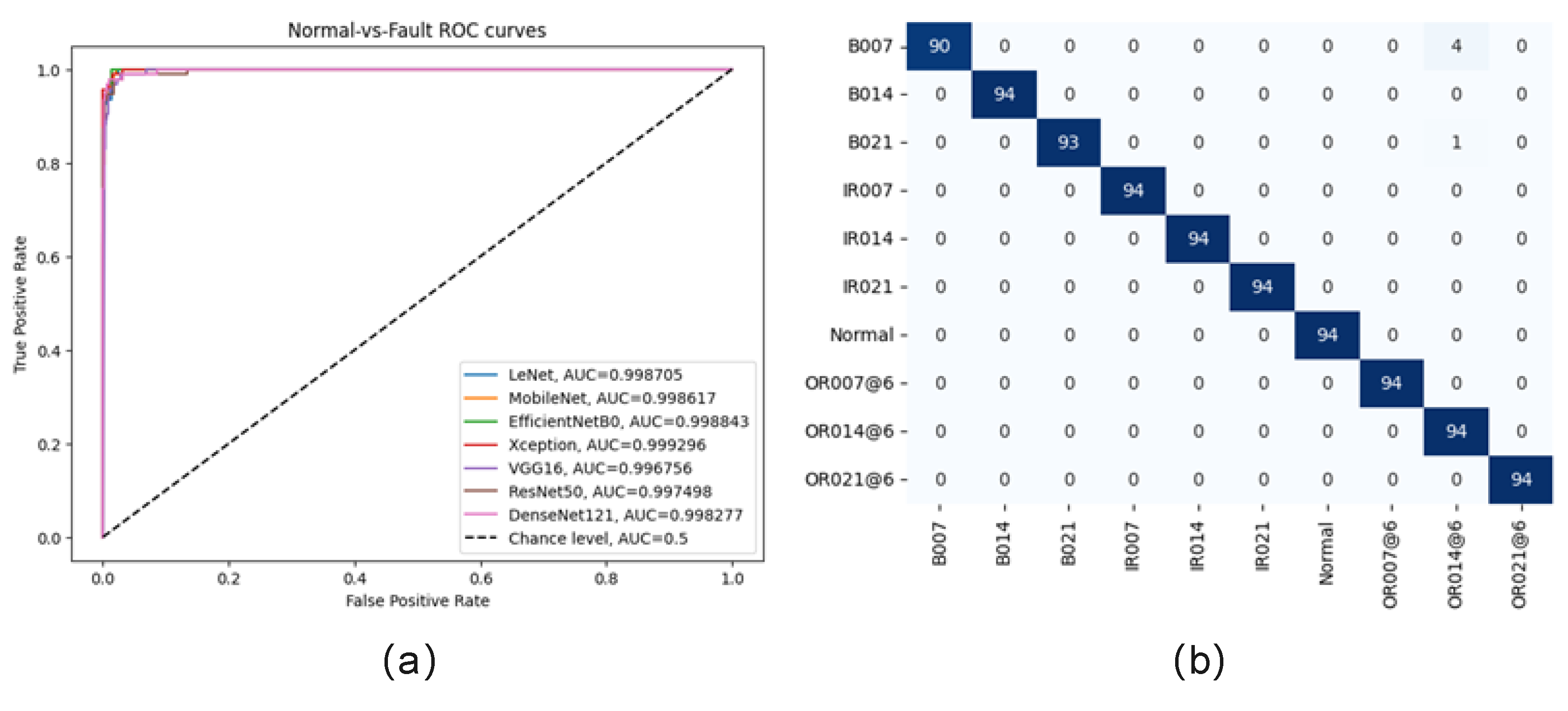

4.2. Case 1—CWRU Dataset (Uniaxial Signals)

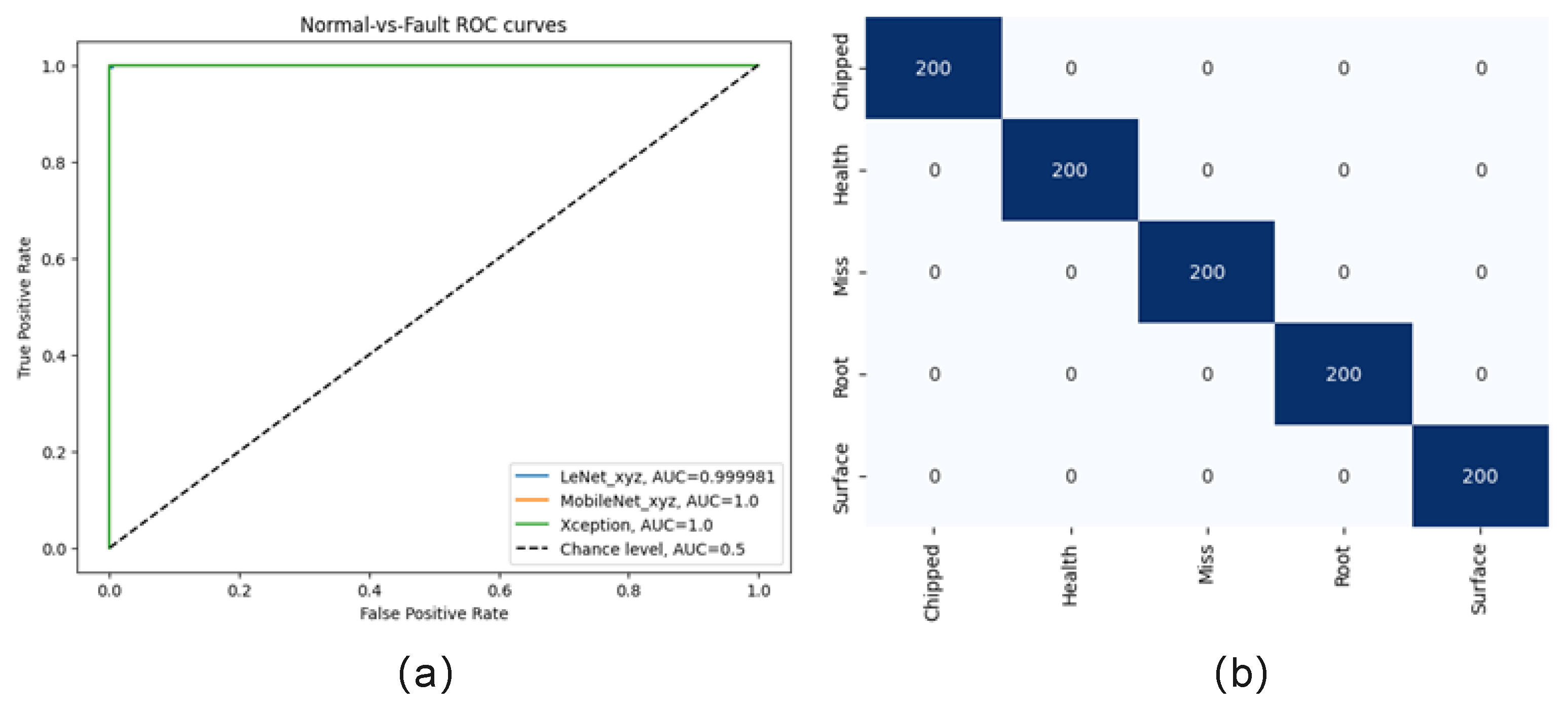

4.3. Case 2—SEU Dataset (Triaxial Signals)

5. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Machine Learning | Handcrafted Feature Extraction | Approaches |
|---|---|---|
| Traditional ML | Time domain: statistical features, zero-crossing rate, wavelet, fractal features, etc. | KNN, SVM, Naïve Bayes classifier, decision tree, random forest, etc. |
| | Frequency domain: DFT, PSD, etc. | |
| | Time–frequency domain: STFT, WT, WPT, EMD, HHT, etc. | |
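The handcrafted features in the table above are standard signal statistics. The following NumPy sketch computes several of them for one vibration segment: time-domain statistics (mean, standard deviation, RMS, peak, crest factor, kurtosis), the zero-crossing rate, and a one-sided periodogram estimate of the PSD obtained from the DFT. The feature selection and the function names are illustrative assumptions, not the set used by any cited study.

```python
import numpy as np


def time_domain_features(x):
    """Common statistical features of a 1D vibration segment."""
    x = np.asarray(x, dtype=float)
    mean = x.mean()
    std = x.std()
    rms = np.sqrt(np.mean(x ** 2))
    peak = np.max(np.abs(x))
    # Non-excess kurtosis: 3.0 for Gaussian noise; impulsive faults raise it.
    kurtosis = np.mean((x - mean) ** 4) / std ** 4
    # Zero-crossing rate: fraction of consecutive sample pairs whose sign differs.
    signs = np.signbit(x)
    zcr = np.count_nonzero(signs[1:] != signs[:-1]) / (len(x) - 1)
    return {"mean": mean, "std": std, "rms": rms, "peak": peak,
            "crest_factor": peak / rms, "kurtosis": kurtosis, "zcr": zcr}


def power_spectral_density(x, fs):
    """One-sided periodogram PSD estimate computed from the real DFT."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    psd = np.abs(np.fft.rfft(x)) ** 2 / (fs * n)
    # Double every bin except DC (and Nyquist for even n) to fold in negative frequencies.
    if n % 2 == 0:
        psd[1:-1] *= 2
    else:
        psd[1:] *= 2
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, psd


# Usage on a synthetic 50 Hz sine sampled at 1 kHz for one second.
fs = 1000.0
t = np.arange(1000) / fs
x = np.sin(2 * np.pi * 50 * t)
features = time_domain_features(x)
freqs, psd = power_spectral_density(x, fs)
```

A feature vector assembled this way would then be passed to one of the classifiers in the right-hand column, such as an SVM or a random forest.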

| Pipeline | Approaches |
|---|---|
| Deep Learning | 1D time series: RNN (including GRU and LSTM), 1D-CNN, etc. |
| | 2D synthetic images: (1) Imaging: GAF, wavelet transform, S-transform, phase space reconstruction, etc.; (2) Models: shallow single-channel CNNs and classical three-channel deep CNNs applied to the resulting images. |
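GAF, listed under 2D imaging in the table above, maps a 1D series to a 2D matrix. A minimal NumPy sketch of the standard formulation: rescale the series to [-1, 1], encode each value as the angle phi = arccos(x_tilde), and form either the summation field cos(phi_i + phi_j) or the difference field sin(phi_i - phi_j). The function name is hypothetical, and the piecewise aggregate approximation (PAA) step often used to shrink the image beforehand is omitted.

```python
import numpy as np


def gramian_angular_field(x, kind="summation"):
    """Encode a 1D series as a Gramian Angular Field (GASF or GADF)."""
    x = np.asarray(x, dtype=float)
    x_min, x_max = x.min(), x.max()
    if x_max == x_min:
        raise ValueError("constant series cannot be rescaled to [-1, 1]")
    # 1) Min-max rescale to [-1, 1] so that arccos is defined.
    x_tilde = (2.0 * x - x_max - x_min) / (x_max - x_min)
    x_tilde = np.clip(x_tilde, -1.0, 1.0)  # guard against rounding just outside the range
    # 2) Polar encoding: each value becomes an angle in [0, pi].
    phi = np.arccos(x_tilde)
    # 3) Pairwise angular sum (GASF, symmetric) or difference (GADF, antisymmetric).
    if kind == "summation":
        return np.cos(phi[:, None] + phi[None, :])
    if kind == "difference":
        return np.sin(phi[:, None] - phi[None, :])
    raise ValueError("kind must be 'summation' or 'difference'")
```

For a segment of n samples the output is n × n, so downsampling (for example with PAA) before encoding keeps the image small enough for a CNN input.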

| Input Shape | Split | Epochs | Optimiser | Batch Size | Learning Rate |
|---|---|---|---|---|---|
| 32 × 32 × 3 or 75 × 75 × 3 | 60%:20%:20% | 1000 | Adam | 128 | 0.001 |
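The settings above can be gathered in one place. The sketch below records them in a plain dictionary and shows a shuffled 60%:20%:20% index split plus the resulting number of mini-batches per epoch. It assumes the three parts are training, validation and test, in that order, and uses a simple random split rather than a class-stratified one; `CONFIG`, `split_60_20_20` and `steps_per_epoch` are hypothetical names.

```python
import math

import numpy as np

# Training settings transcribed from the table above.
CONFIG = {
    "input_shape": (32, 32, 3),  # the table also lists 75 x 75 x 3
    "split": (0.6, 0.2, 0.2),    # assumed order: train, validation, test
    "epochs": 1000,
    "optimiser": "adam",
    "batch_size": 128,
    "learning_rate": 1e-3,
}


def split_60_20_20(n_samples, seed=0):
    """Shuffle sample indices and split them 60% / 20% / 20%."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_train = n_samples * 60 // 100  # integer arithmetic avoids float rounding
    n_val = n_samples * 20 // 100
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


def steps_per_epoch(n_train, batch_size):
    """Mini-batches per epoch; the last batch may be partial."""
    return math.ceil(n_train / batch_size)


train_idx, val_idx, test_idx = split_60_20_20(1000)
print(len(train_idx), len(val_idx), len(test_idx))                # 600 200 200
print(steps_per_epoch(len(train_idx), CONFIG["batch_size"]))      # 5
```

Fixing the random seed keeps the three subsets identical across the model comparisons that follow.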

| Models | LeNet | EfficientNetB0 | MobileNet | DenseNet-121 | ResNet50 | Xception | VGG16 |
|---|---|---|---|---|---|---|---|
| FLOPs | 6.58 × 10⁵ | 8.66 × 10⁶ | 1.16 × 10⁷ | 5.79 × 10⁷ | 7.89 × 10⁷ | 5.62 × 10⁸ | 3.32 × 10⁸ |
| Params | 6.16 × 10⁴ | 4.06 × 10⁶ | 3.23 × 10⁶ | 7.05 × 10⁶ | 2.36 × 10⁷ | 2.09 × 10⁷ | 3.36 × 10⁷ |
| FPS | 5449 | 2374 | 4058 | 1464 | 2463 | 1128 | 2760 |
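The Params row counts trainable weights and biases. As a hedged order-of-magnitude check for LeNet, the sketch below counts parameters for the common modern LeNet-5 layout (two 5 × 5 convolutions with 6 and 16 filters, each followed by 2 × 2 pooling, then dense layers of 120 and 84 units). The exact variant, number of input channels and number of classes used in the paper are assumptions here, so these totals need not equal the tabulated 6.16 × 10⁴.

```python
def conv2d_params(in_ch, out_ch, k):
    """Weights (k * k * in_ch per filter) plus one bias per filter."""
    return out_ch * (k * k * in_ch + 1)


def dense_params(n_in, n_out):
    """Weight matrix plus one bias per output unit."""
    return n_out * (n_in + 1)


def lenet5_params(in_ch=1, n_classes=10, input_hw=32):
    """Trainable parameters of an assumed LeNet-5-style network:
    conv5x5(6) -> pool2 -> conv5x5(16) -> pool2 -> dense(120) -> dense(84) -> dense(n_classes).
    Pooling layers have no parameters."""
    total = conv2d_params(in_ch, 6, 5)
    hw = (input_hw - 4) // 2   # 'valid' 5x5 conv then 2x2 pool: 32 -> 28 -> 14
    total += conv2d_params(6, 16, 5)
    hw = (hw - 4) // 2         # 14 -> 10 -> 5
    flat = hw * hw * 16        # 5 * 5 * 16 = 400 features after flattening
    total += dense_params(flat, 120) + dense_params(120, 84) + dense_params(84, n_classes)
    return total


print(lenet5_params())         # 61706 (32 x 32 x 1 input, 10 classes)
print(lenet5_params(in_ch=3))  # 62006 (32 x 32 x 3 input, 10 classes)
```

Most of the parameters sit in the first dense layer (48,120 of 61,706), which is why such a shallow network stays two to three orders of magnitude smaller than the deep CNNs in the table.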

| Models | LeNet_x | LeNet_y | LeNet_z | LeNet_xyz | MobileNet_xyz | Xception_xyz |
|---|---|---|---|---|---|---|
| FLOPs | 6.58 × 10⁵ | 8.66 × 10⁶ | 6.58 × 10⁵ | 6.58 × 10⁵ | 6.58 × 10⁵ | 5.62 × 10⁸ |
| Params | 6.16 × 10⁴ | 4.06 × 10⁶ | 6.16 × 10⁴ | 6.16 × 10⁴ | 6.16 × 10⁴ | 2.09 × 10⁷ |
| FPS | 5778 | 5585 | 5726 | 6003 | 3493 | 1161 |
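The `_x`, `_y`, `_z` and `_xyz` suffixes suggest single-axis inputs versus a fused triaxial input. One plausible way to build such an image-like sample, assuming each axis contributes a 1024-sample segment that is reshaped row by row into 32 × 32 and min-max scaled to [0, 1], is to stack the three axes as the channels of a 32 × 32 × 3 array. This is a sketch under those assumptions and may differ from the reconstruction in Section 3.2; the function names are hypothetical.

```python
import numpy as np


def segment_to_pseudo_image(segment, side=32):
    """Reshape a 1D segment of side * side samples, row by row, into a
    side x side array min-max scaled to [0, 1] (assumed reconstruction)."""
    segment = np.asarray(segment, dtype=float)
    if segment.size != side * side:
        raise ValueError(f"expected {side * side} samples, got {segment.size}")
    lo, hi = segment.min(), segment.max()
    scaled = (segment - lo) / (hi - lo) if hi > lo else np.zeros_like(segment)
    return scaled.reshape(side, side)


def fuse_triaxial(x, y, z, side=32):
    """Stack the x, y and z pseudo-images as the three channels (H x W x 3)."""
    return np.stack([segment_to_pseudo_image(a, side) for a in (x, y, z)], axis=-1)


# Usage with random stand-in signals (not real bearing data).
rng = np.random.default_rng(0)
x, y, z = (rng.standard_normal(1024) for _ in range(3))
sample = fuse_triaxial(x, y, z)
print(sample.shape)  # (32, 32, 3)
```

Stacking axes as channels lets a standard three-channel CNN consume all three signals at once, whereas the single-axis models would each see only one channel's information.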

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, Y.; Chai, C.; Li, H.; Fu, W. A Deep Learning Framework for Intelligent Fault Diagnosis Using AutoML-CNN and Image-like Data Fusion. Machines 2023, 11, 932. https://doi.org/10.3390/machines11100932
