Abstract

Most of the current studies on autonomous vehicle decision-making and control based on reinforcement learning are conducted in simulated environments. The training and testing of these studies are carried out under the condition of rule-based microscopic traffic flow, with little consideration regarding migrating them to real or near-real environments. This may lead to performance degradation when the trained model is tested in more realistic traffic scenes. In this study, we propose a method to randomize the driving behavior of surrounding vehicles by randomizing certain parameters of the car-following and lane-changing models of rule-based microscopic traffic flow. We trained policies with deep reinforcement learning algorithms under the domain-randomized rule-based microscopic traffic flow in freeway and merging scenes and then tested them separately in rule-based and high-fidelity microscopic traffic flows. The results indicate that the policies trained under domain-randomized traffic flow have significantly better success rates and episodic rewards compared to those trained under non-randomized traffic flow.

1. Introduction

In recent years, autonomous vehicles have received increasing attention as they have the potential to free drivers from the fatigue of driving and facilitate efficient road traffic [1]. With the development of machine learning, rapid progress has been achieved in the development of autonomous vehicles. In particular, reinforcement learning, which enables vehicles to learn driving tasks through trial and error, continuously improves the learned policies. Compared to supervised learning, reinforcement learning does not require the manual labeling or supervision of sample data [2,3,4,5]. However, reinforcement learning models require tens of thousands of trial-and-error iterations for policy learning, and real vehicles on the road can hardly withstand so many trials. Therefore, the current mainstream research on autonomous driving with reinforcement learning focuses on using virtual driving simulators for training.

Lin et al. [6] utilized deep reinforcement learning within a driving simulator, Simulation of Urban Mobility (SUMO), to train autonomous vehicles, enabling them to merge safely and smoothly at on-ramps. Peng et al. [7] also employed deep reinforcement learning algorithms within a SUMO to train a model for lane changing and car following. They tested the model by reconstructing scenes using NGSIM data, and the results indicate that the models based on reinforcement learning demonstrate higher efficacy than those based on rule-based approaches. Mirchevska et al. [8] used fitted Q-learning for high-level decisionmaking on a busy simulated highway. However, the microscopic traffic flows of these studies are based on rule-based models, such as the Intelligent Driver Model (IDM) [9,10,11] and the Minimize Overall Braking Induced by Lane Change (MOBIL) model. These are mathematical models based on traffic flow theory [12]. They tend to simplify vehicle motion behavior and do not consider the interaction of multiple vehicles. Autonomous vehicles trained with reinforcement learning in such microscopic traffic flows may perform exceptionally well when tested in the same environments. However, when the trained model is applied to more realistic or real-world traffic flows, their performance may significantly deteriorate, and they could even cause traffic accidents. This is due to the discrepancies between simulated and real-world traffic flows.

For research on sim-to-real transfer, numerous methods have been proposed to date. For instance, robust reinforcement learning has been explored to develop strategies that account for the mismatch between simulated and real-world scenes [13]. Meta-learning is another approach that seeks to learn adaptability to potential test tasks from multiple training tasks [14]. Additionally, the domain randomization method used in this article is acknowledged as one of the most extensively used techniques to improve the adaptability to real-world scenes [15]. Domain randomization relies on randomized parameters aimed at encompassing the true distribution of real-world data. Sheckells et al. [16] applied domain randomization to vehicle dynamics, using stochastic dynamic models to optimize the control strategies for vehicles maneuvering on elliptical tracks. Real-world experiments indicated that the strategy was able to maintain performance levels similar to those achieved in simulations. However, few studies have applied domain randomization to microscopic traffic flows and investigated its efficacy.

In recent years, many driving simulators have been moving towards more realistic scenes. One type includes data-based driving simulators (InterSim [17] and TrafficGen [18]), which train neural network models by extracting vehicle motion characteristics from real-world traffic datasets, resulting in interactive microscopic traffic flows. However, the simulation time is much longer than for most rule-based driving simulators due to the complexity of the models. The other kind includes theory-based interactive traffic simulators, which can generate long-term interactive high-fidelity traffic flows by combining multiple modules (LimSim [19]). The traffic flow generated by LimSim closely resembles an actual dataset with a normal distribution, sharing similar means and standard deviations [20].

This paper proposes a domain randomization method for rule-based microscopic traffic flows for reinforcement learning-based decision and control. The parameters of the car-following and lane-changing models are randomized with Gaussian distributions, making the microscopic traffic flows more random and behaviorally uncertain, thus exposing the agent to a more complex and variable driving environment during training. To investigate the impact of domain randomization, this paper will train and test agents using microscopic traffic flow without randomization, high-fidelity microscopic traffic flow, and domain-randomized traffic flow for freeway and merging scenes.

The rest of this paper is structured as follows: Section 2 introduces the relevant microscopic traffic flows. Section 3 describes the proposed domain randomization method. Section 4 presents the simulation experiments and the analysis of the results for the freeway and merging scenes. Finally, the conclusions are drawn in Section 5.

2. Microscopic Traffic Flow

Microscopic traffic flow models take individual vehicles as the research subject and mathematically describe the driving behaviors of the vehicles, such as acceleration, overtaking, and lane changing.

2.1. Rule-Based Microscopic Traffic Flow

This paper utilizes IDM and SL2015 as the default car-following and lane-changing models, respectively. The following is a detailed introduction to them.

2.1.1. IDM Car-Following Model

IDM was originally proposed by Treiber in [9], capable of describing various traffic states from free flow to complete congestion with a unified formulaic approach. The model takes the preceding vehicle’s speed, the ego vehicle’s speed, and the distance to the preceding vehicle as inputs to output the ego vehicle’s safe acceleration. The acceleration of the ego vehicle at each timestep is

where a represents the maximum acceleration of the ego vehicle, is the current speed of the ego vehicle, is the desired speed of the ego vehicle, is the acceleration exponent, is the speed difference between the ego vehicle and the preceding vehicle, s is the current distance between the ego vehicle and the preceding vehicle, and is the desired following distance. The desired distance is defined as follows:

where is the minimum gap, T is the bumper-to-bumper time gap, and b represents the maximum deceleration.

2.1.2. SL2015 Lane-Changing Model

The safety distance required for the lane-changing process is calculated as follows:

where denotes the safety distance required for lane changing, represents the velocity of the vehicle at time t, is the length of the vehicle, and are safety factors, and the threshold speed differentiates between urban roads and highways.

The profit at time t for changing lanes is calculated as follows:

where is the velocity of the vehicle in the target lane at the next timestep, is the safe velocity in the current lane, and is the maximum velocity allowed in the current lane. The goal here is to maximize the velocity difference, thereby increasing the benefit of changing lanes.

If the profit for the current timestep is greater than zero, then this profit will be added to the cumulative profit. Conversely, if the profit for the current timestep is less than zero, the cumulative profit will be halved to moderate the desire to change to the target lane. If the cumulative profit is larger than a threshold, lane change can be initiated.

2.2. LimSim High-Fidelity Microscopic Traffic Flow

The study employs the LimSim driving simulation platform’s high-fidelity microscopic traffic flow. The high-fidelity microscopic traffic flow in LimSim is based on optimal trajectory in the Frenet frame [21]. Within the circular area around the ego vehicle, the microscopic traffic flow is updated based on each optimal trajectory.

2.2.1. Trajectory Generation

In the Frenet coordinate system, the motion state of a vehicle can be described by the tuple , where s represents the longitudinal displacement, the longitudinal velocity, the lateral acceleration, d represents the lateral displacement, the lateral velocity, and the latitudinal acceleration.

Lateral Trajectory Generation

The lateral trajectory curve can be expressed by the following fifth-order polynomial:

The trajectory start point is known as , and a complete polynomial trajectory can be determined once the end point is specified. As vehicles travel on the road, they use the road centerline as the reference line for navigation, and the optimal state should be moving parallel to the centerline, which means the end point would be . Equidistant sampling points are selected between the start point and end point, and the multiple polynomial segments are connected to form many complete lateral trajectories.

Longitudinal Trajectory Generation

Longitudinal trajectory curve can be expressed with a fourth-degree polynomial:

is the start point and is the end point. Equidistant sampling points are selected between the start point and end point, and the multiple polynomial segments are connected to form many complete longitudinal trajectories.

2.2.2. Optimal Trajectory Selection

The trajectory selection process involves evaluating a cost function that includes key components: trajectory smoothness, which is determined by the heading and curvature differences between the actual and reference trajectories; vehicle stability, indicated by the differences in acceleration and jerk between the actual and reference trajectories; collision risk, assessed by the risk level of collision with surrounding vehicles; speed deviation, gauged by the velocity difference between the actual trajectory and the reference speed; and lateral trajectory deviation, measured by the lateral distance difference between the actual trajectory and the reference trajectory.

The total cost function is utilized to evaluate the set of candidate trajectories in Section 2.2.1, followed by an assessment of their compliance with vehicle dynamics constraints, such as turning radius and speed/acceleration limits. The trajectory that not only satisfies the vehicle dynamics constraints but also incurs the minimum cost is selected as the final valid trajectory.

Vehicles within a 50 m perception range of the ego vehicle will be subject to the Frenet optimal trajectory control, with a trajectory being planned every 0.5 s and having a duration of 5 s.

3. Domain Randomization for Rule-Based Microscopic Traffic Flow

The domain randomization method is based on randomizing the model parameters in the IDM car-following model and the SL2015 lane-changing model. The randomized parameters are shown in Table 1 and are described below.

Table 1.

Domain randomization parameters.

There are five randomized parameters in the IDM model. “” is the acceleration exponent and “T” is the time gap in the IDM model, respectively. “”, “”, and “” are the upper and lower limits of vehicle acceleration and the upper limit of vehicle speed, respectively.

There are two randomized parameters in the SL2015 model. “lcSpeedGain” indicates the degree to which a vehicle is eager to change lanes to gain speed; the larger the value, the more inclined the vehicle is to change lanes. “lcAssertive” is another parameter that significantly influences the driver’s lane-changing model [22]; a lower “lcAssertive” value makes the vehicle more inclined to accept smaller lane-changing gaps, leading to more aggressive lane-changing behavior.

Ref. [23] found that the parameters , T, , , and are close to Gaussian distributions. Consequently, we adopt Gaussian distributions for all the domain-randomized parameters. All the randomized parameters follow Gaussian distributions within the interval , with distribution s. Here, and are the upper and lower bounds of the randomization interval. is set to be , and is set to be . Thus, when a vehicle is generated, the probability that its randomized parameter value will fall within is 99.73%.

When each vehicle is initialized on the road for each episode, these randomized parameters are generated and assigned to it.

4. Simulation Experiment

In this section, we create freeway and merging environments in the open-source SUMO driving simulator [24] and establish the communication between SUMO and the reinforcement learning algorithm via TraCI [25]. The timestep for the agent to select actions and observe environment state is set at 0.1 s. We create non-randomized microscopic traffic flow, the high-fidelity microscopic traffic flow of LimSim, and the domain-randomized microscopic traffic flows. We train the reinforcement learning-based autonomous vehicles under different microscopic traffic flows in freeway and merging scenes, respectively.

4.1. Merging

4.1.1. Merging Environment

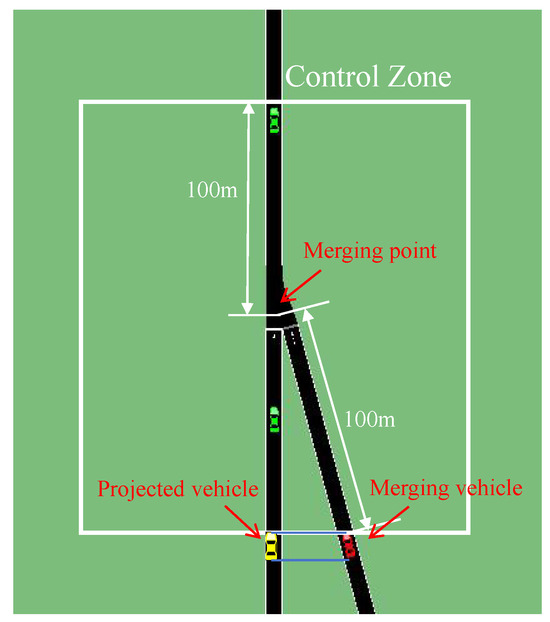

We establish the merging environment inspired by Lin et al. [6]. A control zone for the merging vehicle is established, spanning 100 m to the rear of the on-ramp’s merging point and 100 m to the front of the merging point, as depicted in Figure 1. The red vehicle, operating under reinforcement learning control, is tasked with executing smooth and safe merging within the designated control area.

Figure 1.

Merging in SUMO.

State

In defining the state of the reinforcement learning environment, the merging vehicle is projected onto the main road to produce the projected vehicle, and then a total of five vehicles are considered: two vehicles before the projected vehicle, two vehicles after the projected vehicle, and the projected vehicle. In order to utilize the observable information reasonably, the distance () of these five vehicles to the merging point, as well as their velocities (), are included in the state representation. These parameters form a state representation with eleven variables, defined as

Action

The action space we have defined is a continuous variable: acceleration within m/s2. This range is consistent with the normal acceleration range of surrounding vehicles.

Reward

We aim for the merging vehicle to maintain a safe distance from the preceding and following vehicles, ensure comfort, and avoid coming to a stop or making the following vehicle brake sharply. Therefore, the reward function is expressed as follows:

After merging, the merging vehicle is safer when its position is in the middle between the preceding and following vehicles. The corresponding penalizing reward is defined as

where represents the weight factor, and is the maximum allowable speed difference. The variable w is defined to measure the distance gap among the merging vehicle, its first preceding vehicle, and its first following vehicle. The details are as follows:

where and represent the lengths of the first preceding vehicle and the merging vehicle, both measuring at 5 m. When the first following vehicle performs braking in the control zone, a penalizing reward is defined as

where is the weight and is acceleration of the first following vehicle. In order to improve the comfort level of the merging vehicle, we define a penalizing reward for jerk:

where is the weight, is maximum allowed jerk, and is jerk of the merging vehicle.

In addition, if the merging vehicle comes to a stop, a penalty of is imposed. When a merged vehicle collides with any vehicle, a penalty of is applied. Conversely, if the merging vehicle successfully reaches its destination, a reward of is granted. Table 2 shows the values of the above-mentioned parameters of the merging vehicle.

Table 2.

Parameter values for the merging vehicle.

4.1.2. Soft Actor–Critic

SAC is the reinforcement learning algorithm used for training in merging scenes [26]. The SAC algorithm uses the classical framework of reinforcement learning, actor–critic, which helps to optimize the value function and the policy at the same time, and it consists of a parameterized soft-Q function and a tractable policy . The parameters of these networks are and . This approach considers a more general maximum entropy objective that not only seeks to maximize rewards but also maintains a degree of randomness in action selection, as follows:

where denotes the state–action distribution under the policy , while signifies the entropy of the policy at state , thereby enhancing the unpredictability of the chosen actions. The temperature parameter plays a pivotal role as it calibrates the balance between entropy and reward within the objective function, subsequently influencing the formulation of the optimal policy. The hyperparameters of SAC are the same as in Ref. [6].

4.1.3. Results under Different Microscopic Traffic Flows

Training

In the merging environment, we trained 200,000 timesteps in each of three different microscopic traffic flows. The training was carried out on an NVIDIA RTX 3060 graphics card paired with an Intel i7-12700F processor. It required approximately 1 h to complete the training using both SUMO’s default non-randomized and domain-randomized traffic flows. In contrast, the training under the condition of high-fidelity traffic flow took 3.5 h. Vehicle generation probability is 0.56, and the traffic density on the main road was approximately 16 vehicles per kilometer.

Testing

The trained policy was tested with 1000 episodes in the merging environment. We evaluated the trained policy based on the merging vehicle’s success rate defined by the completion of an episode without any collisions and the average reward value over the entire testing period.

Comparison and Analysis

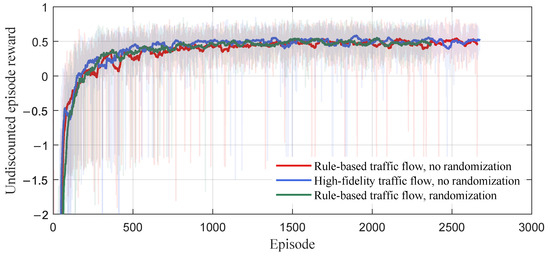

The training curves depicted in Figure 2 suggest that there is minimal visible difference in the rate of convergence and the rewards achieved by strategies trained under different microscopic traffic flows.

Figure 2.

Undiscounted episode reward during training under three traffic flows.

Table 3 shows that the policy trained under rule-based traffic flow without randomization and high-fidelity microscopic traffic flow yields poor results when adapted to domain-randomized rule-based traffic flow. Conversely, the policy trained under domain-randomized rule-based traffic flows consistently achieves success rates above when tested across all three traffic flows.

Table 3.

The results of testing the trained policies regarding merging.

4.1.4. Generalization Results for Increased Traffic Densities

High-fidelity microscopic traffic flows closely resemble actual traffic scenes, so we used them as the test traffic flow with increased traffic densities. The impact of changes in traffic density is shown in Table 4. It can be observed that the policy trained under rule-based traffic flow without randomization experiences a gradual decline in success rates and rewards as traffic density increases. In contrast, the policy trained under domain-randomized rule-based traffic flow consistently maintains a higher success rate.

Table 4.

The impact of traffic densities on three trained policies with high-fidelity traffic flow.

4.1.5. Ablation Study

In order to strengthen the understanding of individual domain-randomized parameters’ role in the model’s performance, we analyzed their individual impact on the training outcomes through an ablation study. For the ablation study, we separately ablated each of the domain-randomized parameters. Subsequently, policies were individually trained under the traffic flows with domain-randomized parameter ablation. Finally, the trained policies were tested under both the domain-randomized (all parameters randomized) and high-fidelity traffic flows. The results of the ablation study are shown in Table 5.

Table 5.

The results of the ablation study.

It can be observed that a decline occurs in the performance of the policies trained under the traffic flows with ablations when tested under the high-fidelity traffic flow. Moreover, the ablation of significantly affects performance.

4.2. Freeway

4.2.1. Freeway Environment

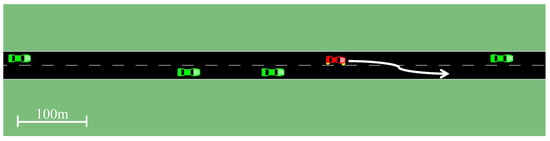

We used a straight two-lane freeway measuring 1000 m in length, inspired by Lin et al. [27]. Figure 3 depicts a standard lane-changing scenario in SUMO, where the ego vehicle is indicated by the red car and the surrounding vehicles are represented by the green cars.

Figure 3.

The ego vehicle overtakes along the arrow trajectory in the freeway.

State

The state of the environment is centered on the ego vehicle and four nearby vehicles: one directly in the front and one directly behind it in the same lane, and two similarly positioned vehicles in the adjacent lane. At time t, the state is defined by the longitudinal distance () of these four vehicles from the ego vehicle, their respective speeds (), and the speed and acceleration () of the ego vehicle. These parameters form a state representation with ten variables as follows:

Action

The action space is defined as follows:

where is a continuous action that indicates the acceleration of ego vehicle. Meanwhile, the discrete actions ‘0’ and ‘1’ dictate lane-changing behavior. The ‘0’ means to keep the current lane and the ‘1’ means an instantaneous lane change to the other lane.

Reward

We have formulated a reward function aligned with practical driving objectives, incentivizing behaviors such as avoiding collisions, obeying speed limits, preserving comfortable driving conditions, and maintaining a safe following distance. The total reward is expressed as follows:

In order to penalize frequent lane changes, the penalty is defined as follows:

where is ego vehicle’s lateral position and < . If the vehicle changes lanes within the safety distance , it incurs a penalty of . Alternatively, changing lanes outside results in a penalty of .

It is essential to ensure that the ego vehicle maintains a safe following distance from the preceding vehicle, and the corresponding penalizing reward is defined as

The objective of is to ensure driving comfort. It is defined as

where and denote the acceleration at the current and previous moments, respectively.

In order to promote the ego vehicle speed that enables overtaking, the penalty is defined as follows:

When there is no opportunity to overtake the vehicle ahead, the ego vehicle should travel at a steady speed similar to that of the preceding vehicle. Consequently, we introduce a threshold . As long as , the ego vehicle will not incur penalties of or .

In the equations presented above, denotes the corresponding weights. The key parameters for the freeway scene are presented in Table 6.

Table 6.

Parameters for freeway simulation.

4.2.2. Parameterized Soft Actor–Critic

The SAC algorithm in Section 4.1.2 can only solve continuous-action space problems. When dealing with continuous-discrete hybrid action space for freeway lane change, we adopt the Parameterized SAC (PASAC) algorithm, inspired by Lin et al. [27].

PASAC is based on SAC. The actor network produces continuous outputs, which include both continues actions and the weights for the discrete actions. An argmax function is utilized to select the discrete action associated with the maximum weight.

The freeway environment, having a hybrid continuous-discrete action space, requires the agent to be trained with the PASAC algorithm. The hyperparameters of PASAC are the same as SAC.

4.2.3. Results under Different Microscopic Traffic Flows

Training

In the freeway environment, we trained 400,000 timesteps in each of three different microscopic traffic flows. It required 1.5 h to complete the training using both rule-based traffic flows without randomization and with domain randomization. In contrast, the training under the condition of high-fidelity traffic flow took 5 h. Vehicle generation probability is 0.14 vehicles per second, and the traffic density on the main road was approximately 11 vehicles per kilometer on each straightaway.

Testing

The trained policy was tested with 1000 episodes in the freeway environment. We evaluated the trained policy based on the ego vehicle’s success rate defined by the completion of an episode without any collisions, and the average reward value over the entire testing period.

Comparison and Analysis

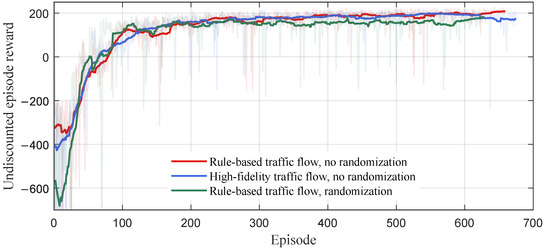

In Figure 4, it can be observed that the policies all tend to converge around 200 episodes. Throughout the training process, aside from the initially lower reward of the domain-randomized traffic flows, the convergence rates and final rewards of the three curves are closely aligned.

Figure 4.

Undiscounted episode reward during training under three traffic flows.

The results of testing are shown in Table 7. It can be observed that the policy trained under domain-randomized rule-based traffic flows has the highest success rates when tested under different microscopic traffic flows. The policy trained under rule-based and high-fidelity traffic flows without randomization cannot adapt to domain-randomized rule-based traffic flow.

Table 7.

The results of testing the trained policies regarding freeway condition.

4.2.4. Generalization Results for Increased Traffic Densities

The impact of changes in high-fidelity traffic density is shown in Table 8. It can be observed that the success rate and reward of the policy trained under microscopic traffic flow without randomization decreases significantly as traffic density increases. In contrast, the policy trained under domain-randomized traffic flow maintains a success rate near .

Table 8.

The impact of changes in traffic density on three trained policies under high-fidelity traffic flow.

4.2.5. Ablation Study

In the freeway environment, we also conducted an ablation study to enhance our understanding of the role that an individual domain-randomized parameter plays in the model’s performance. The results of the ablation study are shown in Table 9.

Table 9.

The results of the ablation study.

In the freeway environment, the collision rates of the different policies are close to zero, so we mainly compare the average rewards of the different policies. It can be found that the conclusions are similar to those of merging, where the performance of the policies trained under traffic flows with domain-randomized parameter ablation declines to varying degrees. The ablation of has a large impact on the performance.

5. Conclusions

In this study, we introduce a method for randomizing lane-changing and car-following model parameters to generate randomized microscopic traffic flows, and we evaluate and compare the policies trained by reinforcement learning algorithms in freeway and merging environments. The results show that

- The policy trained under the condition of domain-randomized rule-based microscopic traffic flow is able to maintain high rewards and success rates when tested with different microscopic traffic flows. However, the policy trained under the condition of microscopic traffic flow without randomization or high-fidelity microtraffic flow performs significantly worse when tested under microscopic traffic flows that are different from those of training. This indicates that domain randomization enables reinforcement learning agents to adapt to different types of traffic flow.

- The policy trained under the condition of domain-randomized rule-based microscopic traffic flow performs well when tested under high-fidelity microscopic traffic flow with different traffic densities. The policy trained under microscopic traffic flow without randomization decreases significantly with increasing traffic density. This indicates that the domain-randomized traffic flow possesses strong generalization to changes in traffic density.

- Although high-fidelity microscopic traffic flow is close to real microscopic traffic flows, the results show that not only does it considerably increase simulation time but policies trained under the condition of microscopic traffic flow also do not generalize well to different microscopic flows. Therefore, high-fidelity microscopic traffic flow is more suitable for testing rather than training.

In summary, the policies trained under domain-randomized rule-based microscopic traffic flow demonstrate robust performance when transferred to environments that closely resemble real-world traffic conditions. The future work includes testing a policy trained under the condition of domain-randomized rule-based microscopic traffic flow on a real autonomous vehicle.

Author Contributions

Conceptualization, Y.L.; methodology, A.X., Y.L. and X.L.; formal analysis, A.X. and Y.L.; investigation, A.X. and Y.L.; data curation, A.X.; writing—original draft preparation, A.X.; writing—review and editing, A.X.; supervision, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by Guangzhou Basic and Applied Basic Research Program under Grant 2023A04J1688, and in part by South China University of Technology faculty start-up fund.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data can be obtained upon reasonable request from the corresponding author.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Le Vine, S.; Zolfaghari, A.; Polak, J. Autonomous cars: The tension between occupant experience and intersection capacity. Transp. Res. Part C Emerg. Technol. 2015, 52, 1–14. [Google Scholar] [CrossRef]

- Sallab, A.E.; Abdou, M.; Perot, E.; Yogamani, S. Deep reinforcement learning framework for autonomous driving. arXiv 2017, arXiv:1704.02532. [Google Scholar] [CrossRef]

- Hoel, C.J.; Wolff, K.; Laine, L. Automated speed and lane change decision making using deep reinforcement learning. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 2148–2155. [Google Scholar]

- Ye, Y.; Zhang, X.; Sun, J. Automated vehicle’s behavior decision making using deep reinforcement learning and high-fidelity simulation environment. Transp. Res. Part C Emerg. Technol. 2019, 107, 155–170. [Google Scholar] [CrossRef]

- Ye, F.; Cheng, X.; Wang, P.; Chan, C.Y.; Zhang, J. Automated lane change strategy using proximal policy optimization-based deep reinforcement learning. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 1746–1752. [Google Scholar]

- Lin, Y.; McPhee, J.; Azad, N.L. Anti-jerk on-ramp merging using deep reinforcement learning. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 7–14. [Google Scholar]

- Peng, J.; Zhang, S.; Zhou, Y.; Li, Z. An Integrated Model for Autonomous Speed and Lane Change Decision-Making Based on Deep Reinforcement Learning. IEEE Trans. Intell. Transp. Syst. 2022, 23, 21848–21860. [Google Scholar] [CrossRef]

- Mirchevska, B.; Blum, M.; Louis, L.; Boedecker, J.; Werling, M. Reinforcement learning for autonomous maneuvering in highway scenarios. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium, Los Angeles, CA, USA, 11–14 June 2017. [Google Scholar]

- Treiber, M.; Hennecke, A.; Helbing, D. Congested traffic states in empirical observations and microscopic simulations. Phys. Rev. E 2000, 62, 1805. [Google Scholar] [CrossRef] [PubMed]

- Liu, J.; Zeng, W.; Urtasun, R.; Yumer, E. Deep structured reactive planning. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 4897–4904. [Google Scholar]

- Huang, X.; Rosman, G.; Jasour, A.; McGill, S.G.; Leonard, J.J.; Williams, B.C. TIP: Task-informed motion prediction for intelligent vehicles. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 11432–11439. [Google Scholar]

- Punzo, V.; Simonelli, F. Analysis and comparison of microscopic traffic flow models with real traffic microscopic data. Transp. Res. Rec. 2005, 1934, 53–63. [Google Scholar] [CrossRef]

- Tessler, C.; Efroni, Y.; Mannor, S. Action robust reinforcement learning and applications in continuous control. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6215–6224. [Google Scholar]

- Wang, J.X.; Kurth-Nelson, Z.; Tirumala, D.; Soyer, H.; Leibo, J.Z.; Munos, R.; Blundell, C.; Kumaran, D.; Botvinick, M. Learning to reinforcement learn. arXiv 2016, arXiv:1611.05763. [Google Scholar]

- Andrychowicz, O.M.; Baker, B.; Chociej, M.; Jozefowicz, R.; McGrew, B.; Pachocki, J.; Petron, A.; Plappert, M.; Powell, G.; Ray, A.; et al. Learning dexterous in-hand manipulation. Int. J. Robot. Res. 2020, 39, 3–20. [Google Scholar] [CrossRef]

- Sheckells, M.; Garimella, G.; Mishra, S.; Kobilarov, M. Using data-driven domain randomization to transfer robust control policies to mobile robots. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 3224–3230. [Google Scholar]

- Sun, Q.; Huang, X.; Williams, B.C.; Zhao, H. InterSim: Interactive traffic simulation via explicit relation modeling. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 11416–11423. [Google Scholar]

- Feng, L.; Li, Q.; Peng, Z.; Tan, S.; Zhou, B. Trafficgen: Learning to generate diverse and realistic traffic scenarios. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 3567–3575. [Google Scholar]

- Wenl, L.; Fu, D.; Mao, S.; Cai, P.; Dou, M.; Li, Y.; Qiao, Y. LimSim: A long-term interactive multi-scenario traffic simulator. In Proceedings of the 2023 IEEE 26th International Conference on Intelligent Transportation Systems (ITSC), Bilbao, Spain, 24–28 September 2023; pp. 1255–1262. [Google Scholar]

- Zheng, O.; Abdel-Aty, M.; Yue, L.; Abdelraouf, A.; Wang, Z.; Mahmoud, N. CitySim: A drone-based vehicle trajectory dataset for safety oriented research and digital twins. arXiv 2022, arXiv:2208.11036. [Google Scholar] [CrossRef]

- Werling, M.; Ziegler, J.; Kammel, S.; Thrun, S. Optimal trajectory generation for dynamic street scenarios in a frenet frame. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, Alaska, 3–8 May 2010; pp. 987–993. [Google Scholar]

- Berrazouane, M.; Tong, K.; Solmaz, S.; Kiers, M.; Erhart, J. Analysis and initial observations on varying penetration rates of automated vehicles in mixed traffic flow utilizing sumo. In Proceedings of the 2019 IEEE International Conference on Connected Vehicles and Expo (ICCVE), Graz, Austria, 4–8 November 2019; pp. 1–7. [Google Scholar]

- Kusari, A.; Li, P.; Yang, H.; Punshi, N.; Rasulis, M.; Bogard, S.; LeBlanc, D.J. Enhancing SUMO simulator for simulation based testing and validation of autonomous vehicles. In Proceedings of the 2022 IEEE Intelligent Vehicles Symposium (IV), Aachen, Germany, 5–9 June 2022; pp. 829–835. [Google Scholar]

- Krajzewicz, D.; Erdmann, J.; Behrisch, M.; Bieker, L. Recent development and applications of SUMO-Simulation of Urban MObility. Int. J. Adv. Syst. Meas. 2012, 5, 128–138. [Google Scholar]

- Wegener, A.; Piórkowski, M.; Raya, M.; Hellbrück, H.; Fischer, S.; Hubaux, J.P. TraCI: An interface for coupling road traffic and network simulators. In Proceedings of the 11th Communications and Networking Simulation Symposium, Ottawa, ON, Canada, 14–17 April 2008; pp. 155–163. [Google Scholar]

- Haarnoja, T.; Zhou, A.; Hartikainen, K.; Tucker, G.; Ha, S.; Tan, J.; Kumar, V.; Zhu, H.; Gupta, A.; Abbeel, P.; et al. Soft actor-critic algorithms and applications. arXiv 2018, arXiv:1812.05905. [Google Scholar]

- Lin, Y.; Liu, X.; Zheng, Z.; Wang, L. Discretionary Lane-Change Decision and Control via Parameterized Soft Actor-Critic for Hybrid Action Space. arXiv 2024, arXiv:2402.15790. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).