Deep Learning Multi-Domain Model Provides Accurate Detection and Grading of Mucosal Ulcers in Different Capsule Endoscopy Types

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design

2.2. Neural Network Model

- For ulcer identification: 3 epochs; batch size 12; Adam optimizer with a learning rate of 5 × 10⁻⁵. The network output was a binary classification layer: non-ulcerated mucosa versus ulcerated mucosa images.

- For ulcer grading: 3 epochs; batch size 12; Adam optimizer with a learning rate of 5 × 10⁻⁵. The network output was a binary classification layer: grade 1 (mild) ulcerations versus grade 3 (severe) ulcerations.
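The two training setups listed above share every hyperparameter and differ only in their output classes. A minimal sketch of how they might be recorded as a configuration follows; the names `TrainingConfig`, `ULCER_IDENTIFICATION`, `ULCER_GRADING`, and `steps_per_epoch` are hypothetical, and treating the ulcerated (or more severe) class as the positive label is an assumption rather than something stated here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Hyperparameters for one binary classification task (illustrative only)."""

    task: str
    negative_class: str  # assumed to map to label 0
    positive_class: str  # assumed to map to label 1
    epochs: int = 3
    batch_size: int = 12
    optimizer: str = "adam"
    learning_rate: float = 5e-5

    def steps_per_epoch(self, num_images: int) -> int:
        # Ceiling division: a final partial batch still counts as one step.
        return -(-num_images // self.batch_size)


# Ulcer identification: non-ulcerated versus ulcerated mucosa.
ULCER_IDENTIFICATION = TrainingConfig(
    task="ulcer_identification",
    negative_class="non-ulcerated mucosa",
    positive_class="ulcerated mucosa",
)

# Ulcer grading: grade 1 (mild) versus grade 3 (severe) ulcerations.
ULCER_GRADING = TrainingConfig(
    task="ulcer_grading",
    negative_class="grade 1 (mild)",
    positive_class="grade 3 (severe)",
)
```

Keeping the shared values as defaults makes it visible that only the class pair changes between the two tasks.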

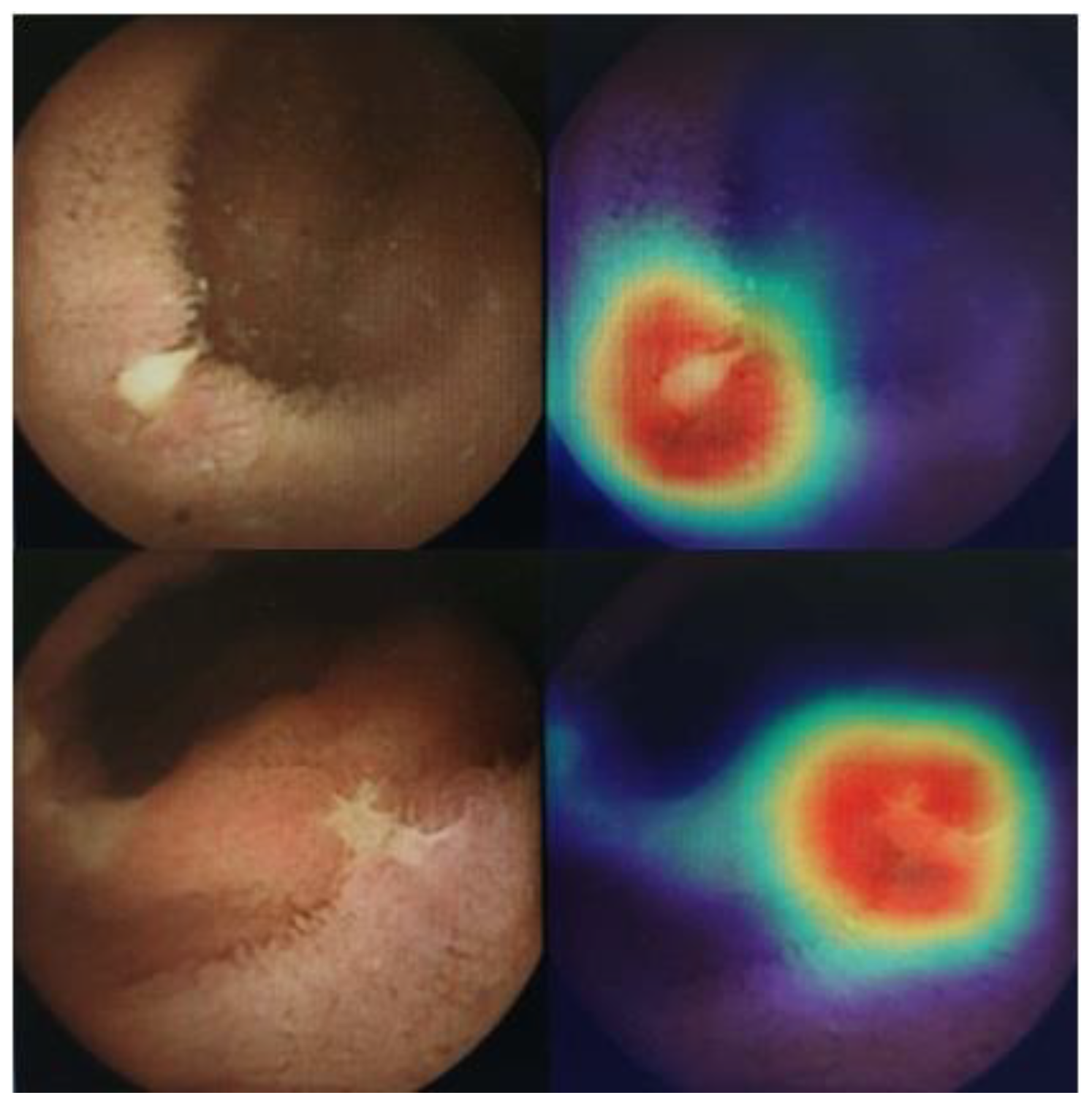

2.3. Class Activation Maps

2.4. Metrics
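
The per-fold result tables report accuracy, mean patient accuracy, and ROC AUC. The sketch below shows one way these three quantities could be computed in plain Python. It assumes that "mean patient accuracy" means accuracy computed separately for each patient and then averaged over patients; that definition, and the function names, are assumptions for illustration and not the authors' code.

```python
from collections import defaultdict


def accuracy(y_true, y_pred):
    """Fraction of images whose predicted label matches the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def mean_patient_accuracy(patient_ids, y_true, y_pred):
    """Average of per-patient accuracies (assumed definition)."""
    per_patient = defaultdict(lambda: [0, 0])  # patient -> [correct, total]
    for pid, t, p in zip(patient_ids, y_true, y_pred):
        per_patient[pid][0] += int(t == p)
        per_patient[pid][1] += 1
    return sum(c / n for c, n in per_patient.values()) / len(per_patient)


def roc_auc(y_true, scores):
    """Probability that a random positive scores above a random negative.

    Ties count as half a win. This pairwise form is quadratic in the number
    of images, which is fine for a sketch but slow on large datasets.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("ROC AUC needs at least one positive and one negative")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))
```

Averaging per patient rather than per image keeps patients with many frames from dominating the score, which may explain why the two accuracy columns differ within a fold.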

3. Results

3.1. Study Population

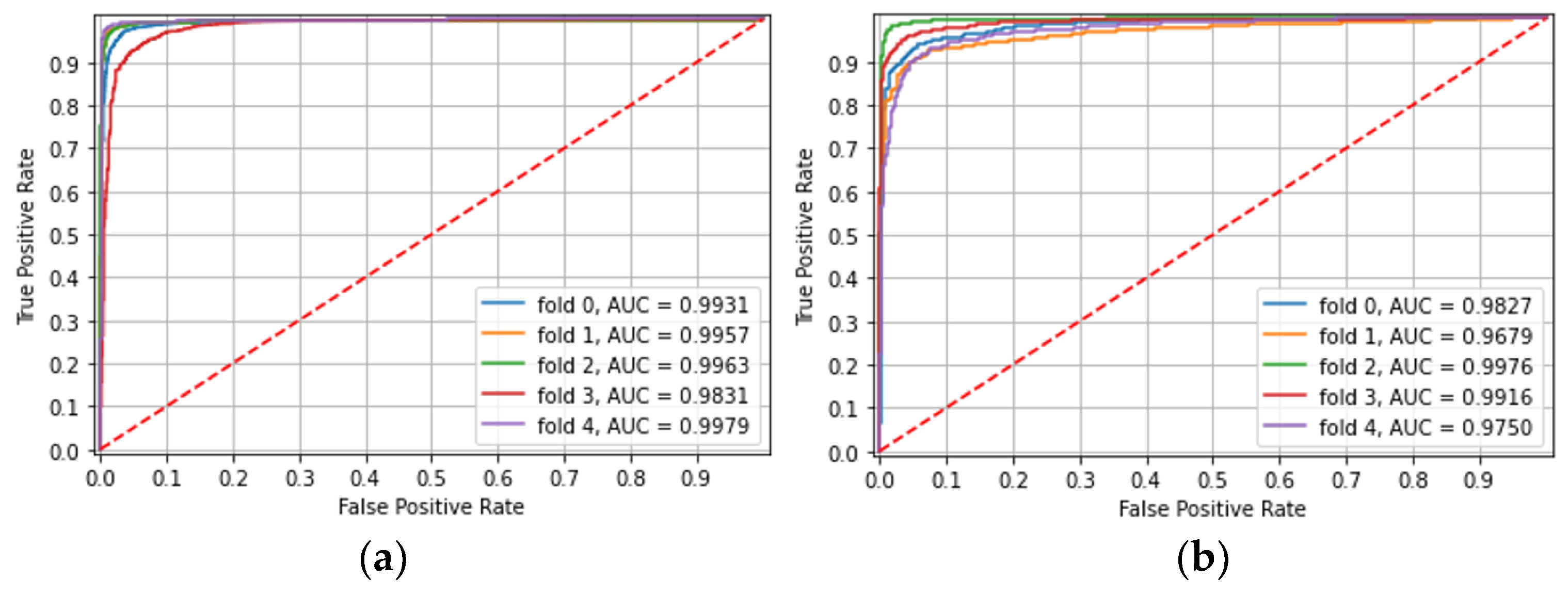

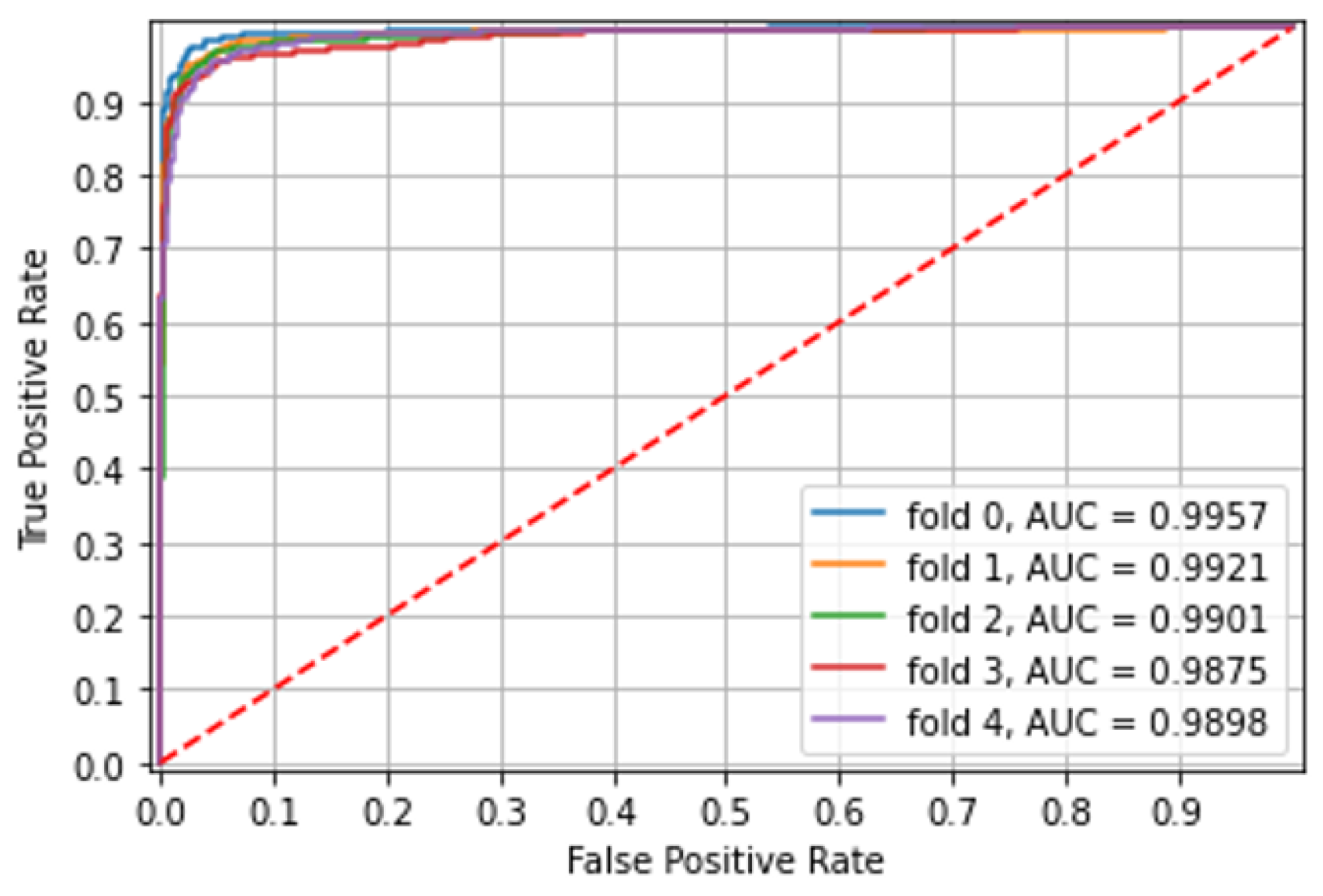

3.2. Identification of Small Bowel Ulcerated Mucosa (Experiments 1–3)

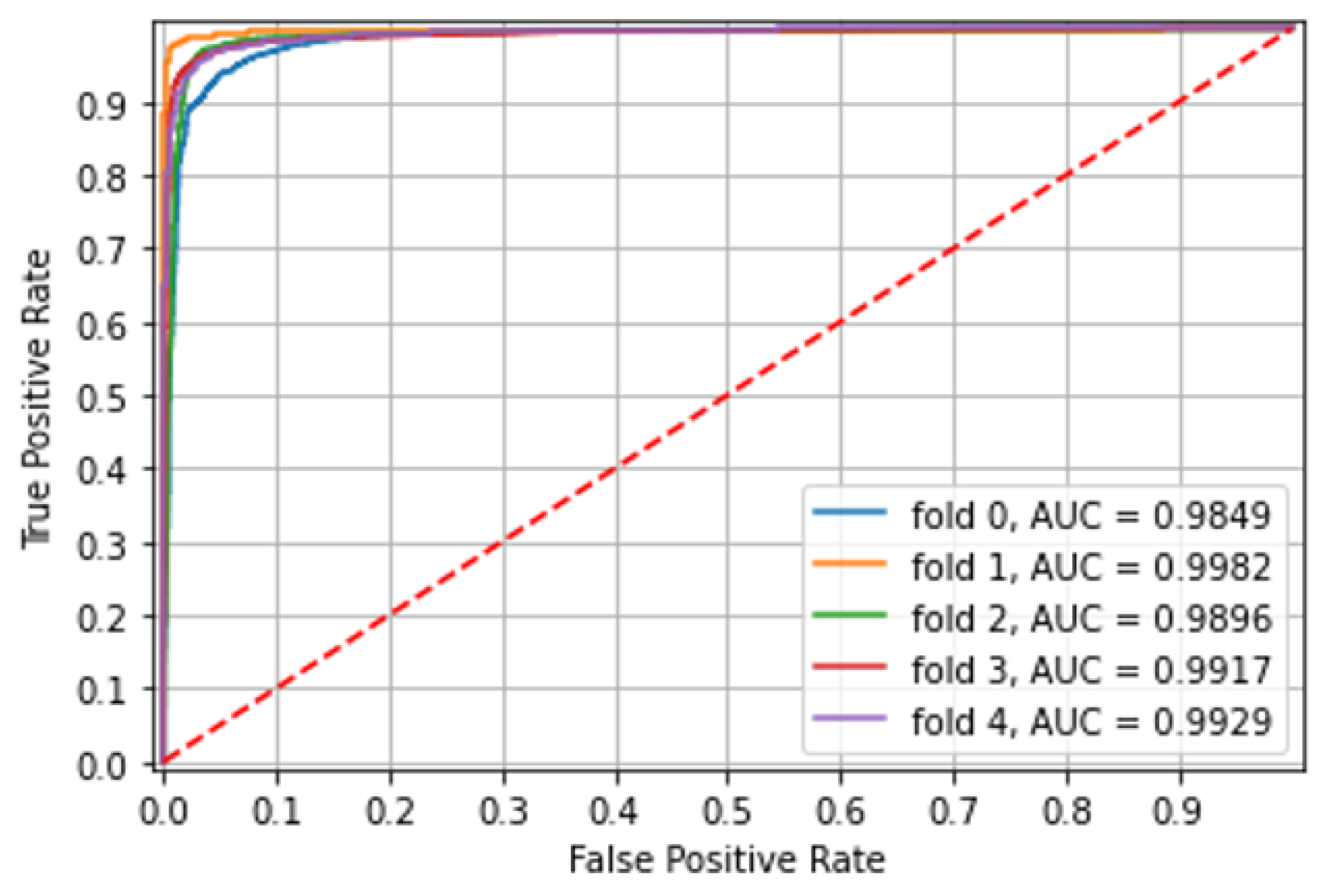

3.3. Identification of Colonic Ulcerated Mucosa (Experiment 4)

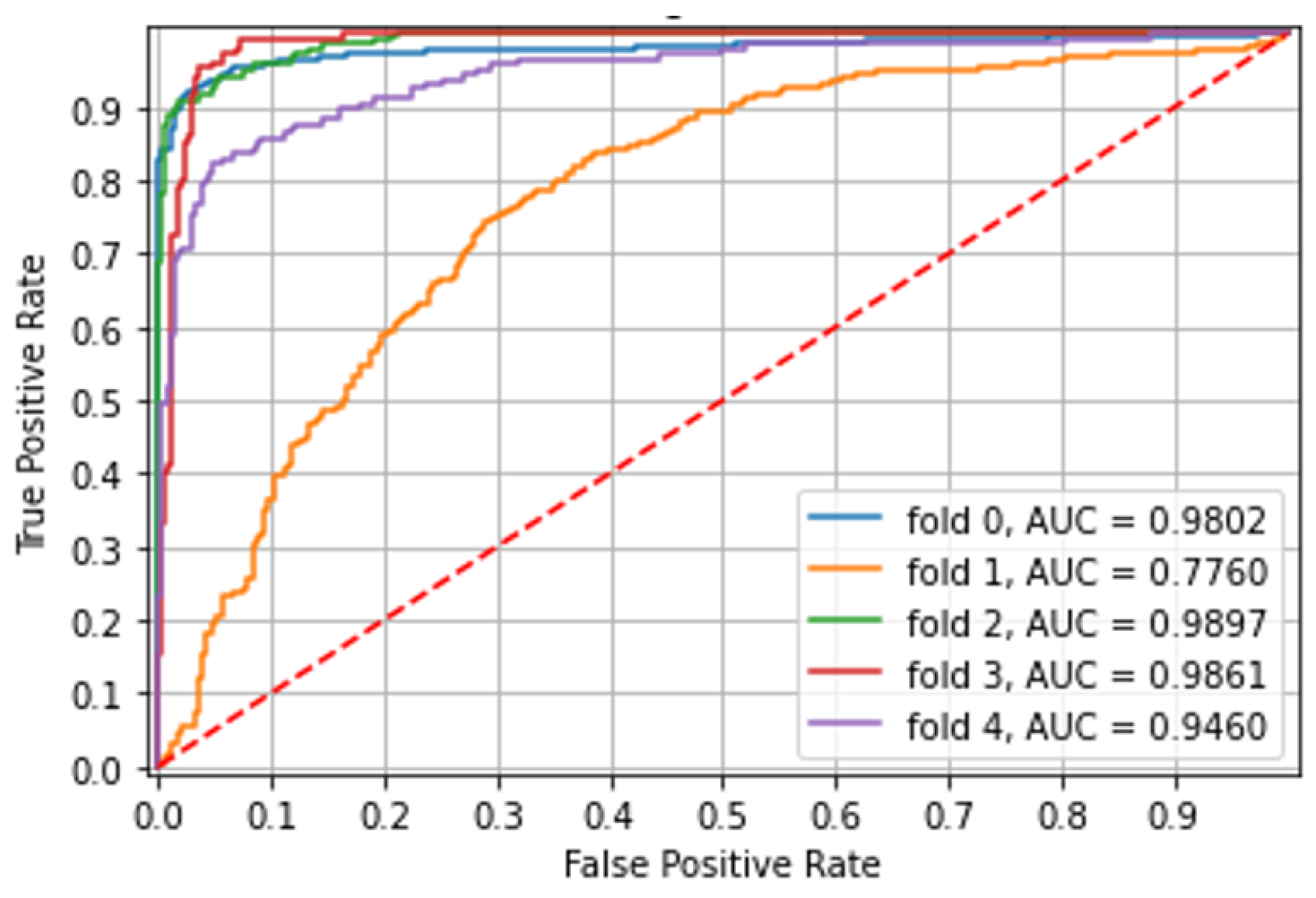

3.4. Ulcer Grading (Experiment 5)

3.5. Class Activation Map

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | PillCam SB3 | PillCam Crohn | Total |
|---|---|---|---|
| Normal | 10,248 | 3839 | 14,087 |
| Ulcer grade 1 | 5398 | 2147 | 7545 |
| Ulcer grade 2 | 3006 | 1527 | 4533 |
| Ulcer grade 3 | 1969 | 1384 | 3353 |
| Total | 20,621 | 8897 | 29,518 |

| PillCam SB3 CE Images | | | | PillCam Crohn CE Images | | | |
|---|---|---|---|---|---|---|---|
| Fold | Accuracy | Mean Patient Accuracy | ROC_AUC | Fold | Accuracy | Mean Patient Accuracy | ROC_AUC |
| 0 | 0.966 | 0.977 | 0.993 | 0 | 0.935 | 0.893 | 0.982 |
| 1 | 0.978 | 0.976 | 0.995 | 1 | 0.915 | 0.908 | 0.967 |
| 2 | 0.974 | 0.985 | 0.996 | 2 | 0.978 | 0.977 | 0.997 |
| 3 | 0.935 | 0.98 | 0.983 | 3 | 0.954 | 0.954 | 0.991 |
| 4 | 0.975 | 0.982 | 0.997 | 4 | 0.924 | 0.918 | 0.974 |

| Fold | Accuracy | Mean Patient Accuracy | ROC_AUC |
|---|---|---|---|
| 0 | 0.941 | 0.978 | 0.984 |
| 1 | 0.975 | 0.975 | 0.998 |
| 2 | 0.958 | 0.982 | 0.989 |
| 3 | 0.963 | 0.967 | 0.991 |
| 4 | 0.963 | 0.968 | 0.992 |

| Fold | Accuracy | Mean Patient Accuracy | ROC_AUC |
|---|---|---|---|
| 0 | 0.896 | 0.951 | 0.98 |
| 1 | 0.478 | 0.74 | 0.77 |
| 2 | 0.937 | 0.948 | 0.989 |
| 3 | 0.931 | 0.905 | 0.986 |
| 4 | 0.828 | 0.795 | 0.946 |
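
Because fold 1 in the table above falls well below the other folds, a cross-fold summary is a useful sanity check. The following sketch copies the five rows verbatim and computes the mean, sample standard deviation, and lowest-scoring fold for each metric; the names `FOLDS`, `METRICS`, and `summarize` are hypothetical.

```python
from statistics import mean, stdev

# Per-fold values copied from the table above:
# fold -> (accuracy, mean patient accuracy, ROC AUC)
FOLDS = {
    0: (0.896, 0.951, 0.980),
    1: (0.478, 0.740, 0.770),
    2: (0.937, 0.948, 0.989),
    3: (0.931, 0.905, 0.986),
    4: (0.828, 0.795, 0.946),
}
METRICS = ("accuracy", "mean_patient_accuracy", "roc_auc")


def summarize(folds):
    """Return mean, sample standard deviation, and worst fold per metric."""
    summary = {}
    for i, name in enumerate(METRICS):
        values = [row[i] for row in folds.values()]
        summary[name] = {
            "mean": mean(values),
            "stdev": stdev(values),
            # Fold index with the lowest value for this metric.
            "worst_fold": min(folds, key=lambda k: folds[k][i]),
        }
    return summary


SUMMARY = summarize(FOLDS)
```

On these values the mean accuracy works out to 0.814, and fold 1 is the lowest fold on all three metrics, so the average alone understates how well the other four folds performed.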

| Fold | Accuracy | Mean Patient Accuracy | ROC_AUC |
|---|---|---|---|
| 0 | 0.972 | 0.979 | 0.995 |
| 1 | 0.965 | 0.954 | 0.992 |
| 2 | 0.960 | 0.925 | 0.990 |
| 3 | 0.950 | 0.916 | 0.950 |
| 4 | 0.948 | 0.932 | 0.989 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kratter, T.; Shapira, N.; Lev, Y.; Mauda, O.; Moshkovitz, Y.; Shitrit, R.; Konyo, S.; Ukashi, O.; Dar, L.; Shlomi, O.; et al. Deep Learning Multi-Domain Model Provides Accurate Detection and Grading of Mucosal Ulcers in Different Capsule Endoscopy Types. Diagnostics 2022, 12, 2490. https://doi.org/10.3390/diagnostics12102490
