Clearing the Fog: A Scoping Literature Review on the Ethical Issues Surrounding Artificial Intelligence-Based Medical Devices

Abstract

1. Introduction

2. Evolution of AI Regulatory Frameworks

3. Methods

3.1. Search Strategy

3.2. Study Eligibility

3.3. Study Selection

3.4. Data Extraction

3.5. Data Synthesis

4. Results

4.1. Study Selection

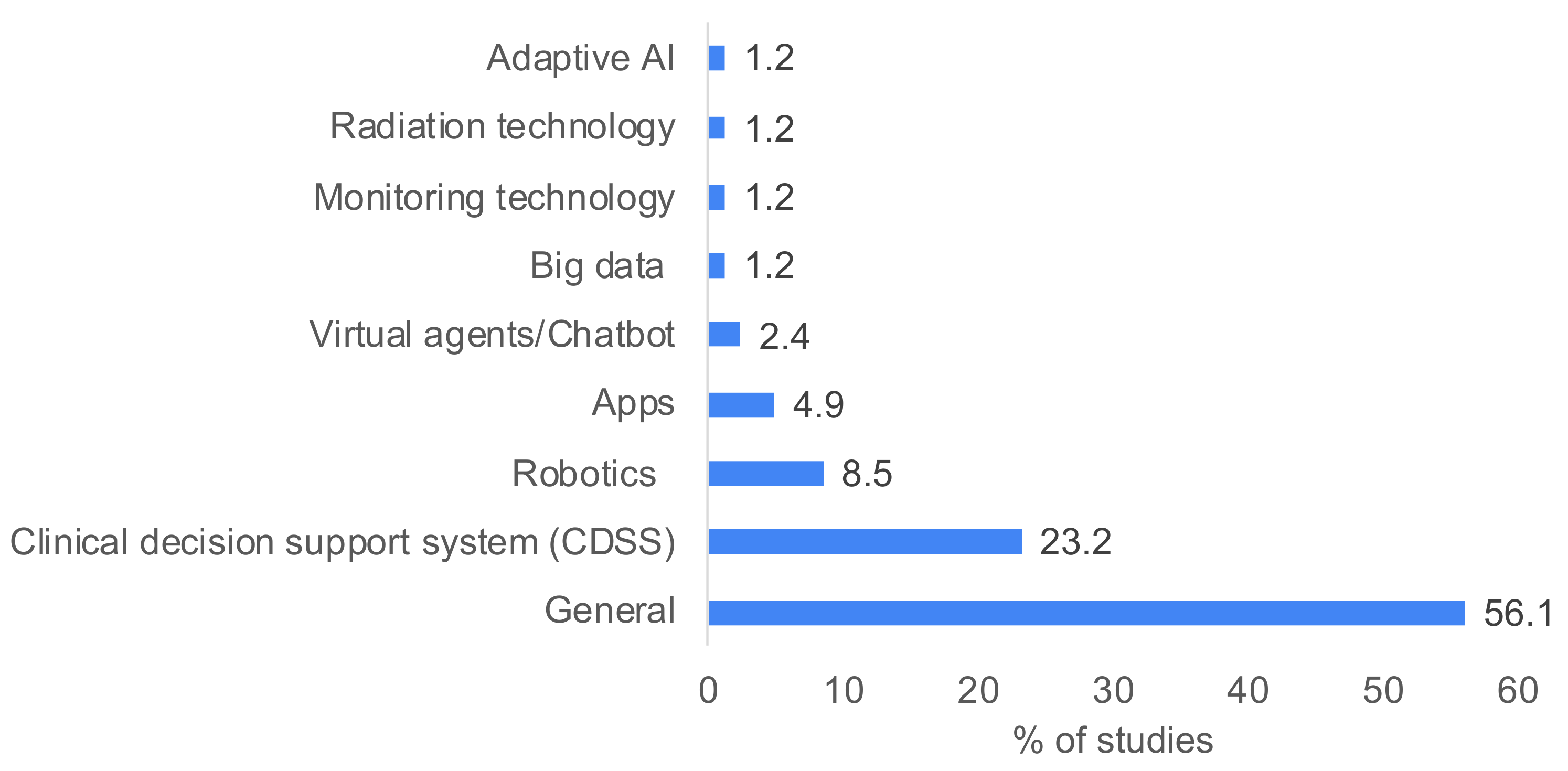

4.2. Study Characteristics

4.3. Transparency

4.4. Accountability

4.5. Confidentiality

4.6. Autonomy

4.7. Algorithmic Bias in AI-Based Medical Devices

4.8. Informed Consent in the Era of AI

4.9. Intersection of Algorithmic Bias and Informed Consent

4.10. Trust

4.11. Fairness

5. Discussion

- The lack of transparency regarding data collection, the use of personal data and the explainability of AI, and its effects on the relationship between users and service providers;

- The challenge of identifying who is responsible for medical AI technology. As AI systems become increasingly advanced and autonomous, there are questions about the level of agency and control that should be afforded to them and about how to ensure that this technology acts in the best interests of human beings;

- The pervasiveness, invasiveness and intrusiveness of technology that is difficult for users to understand, which challenges the process of obtaining fully informed consent;

- The lack of an established trust framework that ensures the protection and security of shared personal data, enhanced privacy and usable security countermeasures for the exchange of personal and sensitive data among IoT systems;

- The difficulty of creating fair and equitable technology free from algorithmic bias;

- The difficulty of respecting autonomy, privacy and confidentiality, particularly when third parties may have a strong interest in getting access to electronically recorded and stored personal data.

6. Conclusions

- Clarifying the ethical debate on AI-based solutions and identifying key issues;

- Fostering the ethical competence of biomedical engineering students, who are coauthors of this paper, by introducing them to interdisciplinary research as good practice;

- Enriching our existing framework with consideration of the ethical–legal aspects of AI-based medical device solutions, awareness of the current debates and an innovative, interdisciplinary approach. Such a framework could support the design and regulation of AI-based medical devices at an international level.

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Area | Search string |
|---|---|
| Ethics terms | Ethic* OR bioethic* OR ((cod*) AND (ethic*)) OR ((ethical OR moral) AND (value* OR principle*)) OR deontolog* OR (meta-ethics) AND (issue or problem or challenge) |
| Artificial intelligence terms | ("artificial intelligence") OR ("neural network") OR ("deep learning") OR ("deep-learning") OR ("machine learning") OR ("machine-learning") OR AI OR iot OR ("internet of things") OR (expert system) |
| Healthcare technology terms | Health OR healthcare OR (health care) OR (medical device*) OR (medical technolog*) OR (medical equipment) OR ((healthcare OR (health care)) AND (technolog*)) |
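
The table lists the three term blocks but does not say how they were combined. A common approach, assumed here rather than confirmed by the source, is to wrap each block in parentheses and join the blocks with AND, so that a record must match at least one term from every area. Explicit grouping matters because operator precedence differs between bibliographic databases, and the ethics block mixes OR and AND without grouping its final clause. The Python sketch below assembles the combined string and checks that its parentheses are balanced; the helper names `build_query` and `parentheses_balanced` are hypothetical.

```python
# Term blocks transcribed from the search-string table above.
# ASSUMPTION: the three blocks are combined with AND; the table does not state this.
ETHICS_TERMS = (
    'Ethic* OR bioethic* OR ((cod*) AND (ethic*)) OR '
    '((ethical OR moral) AND (value* OR principle*)) OR deontolog* OR '
    '(meta-ethics) AND (issue or problem or challenge)'
)
AI_TERMS = (
    '("artificial intelligence") OR ("neural network") OR ("deep learning") OR '
    '("deep-learning") OR ("machine learning") OR ("machine-learning") OR '
    'AI OR iot OR ("internet of things") OR (expert system)'
)
HEALTHCARE_TERMS = (
    'Health OR healthcare OR (health care) OR (medical device*) OR '
    '(medical technolog*) OR (medical equipment) OR '
    '((healthcare OR (health care)) AND (technolog*))'
)


def parentheses_balanced(text: str) -> bool:
    """Return True if every '(' has a matching ')' and no ')' comes first."""
    depth = 0
    for ch in text:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:  # a closing bracket with no opener
                return False
    return depth == 0


def build_query(*blocks: str) -> str:
    """Hypothetical helper: wrap each block in parentheses and AND them together."""
    return " AND ".join(f"({block})" for block in blocks)


query = build_query(ETHICS_TERMS, AI_TERMS, HEALTHCARE_TERMS)
print(query)
```

Wrapping whole blocks keeps each area self-contained, although it does not resolve the ungrouped AND inside the ethics block itself; how that clause is read still depends on the database.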

| Ethical Themes | Number of Studies (%) | Proposed Solution(s) |
|---|---|---|
| Transparency | 40 (17) | |
| Algorithmic bias | 30 (13) | |
| Confidentiality | 33 (14) | |
| Fairness | 24 (10) | |
| Trust | 34 (15) | |
| Autonomy | 24 (10) | |
| Informed consent | 13 (6) | |
| Accountability | 25 (11) | |
| Other | 11 (5) | |
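
The table does not state what the percentages are relative to. A quick arithmetic check, offered as an interpretation rather than the authors' stated method, shows that they match whole-number shares of 234, the sum of all theme counts; this suggests that the percentages describe theme mentions and that a single study may have been counted under more than one theme. The Python sketch below recomputes them from the counts; the function name `theme_percentages` is hypothetical.

```python
# Theme counts transcribed from the ethical-themes table above.
THEME_COUNTS = {
    "Transparency": 40,
    "Algorithmic bias": 30,
    "Confidentiality": 33,
    "Fairness": 24,
    "Trust": 34,
    "Autonomy": 24,
    "Informed consent": 13,
    "Accountability": 25,
    "Other": 11,
}


def theme_percentages(counts: dict[str, int]) -> dict[str, int]:
    """Express each count as a whole-number percentage of all theme mentions.

    ASSUMPTION: the denominator is the sum of the counts, not the number
    of distinct included studies.
    """
    total = sum(counts.values())
    return {theme: round(100 * n / total) for theme, n in counts.items()}


print(sum(THEME_COUNTS.values()))  # 234
for theme, pct in theme_percentages(THEME_COUNTS).items():
    print(f"{theme}: {THEME_COUNTS[theme]} ({pct})")
```

Under this assumption every reported percentage is reproduced, and the rounded values sum to 101 rather than 100, which is ordinary rounding drift rather than an error in the table.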

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Maccaro, A.; Stokes, K.; Statham, L.; He, L.; Williams, A.; Pecchia, L.; Piaggio, D. Clearing the Fog: A Scoping Literature Review on the Ethical Issues Surrounding Artificial Intelligence-Based Medical Devices. J. Pers. Med. 2024, 14, 443. https://doi.org/10.3390/jpm14050443
