Development of UAV Tracing and Coordinate Detection Method Using a Dual-Axis Rotary Platform for an Anti-UAV System

Abstract

1. Introduction

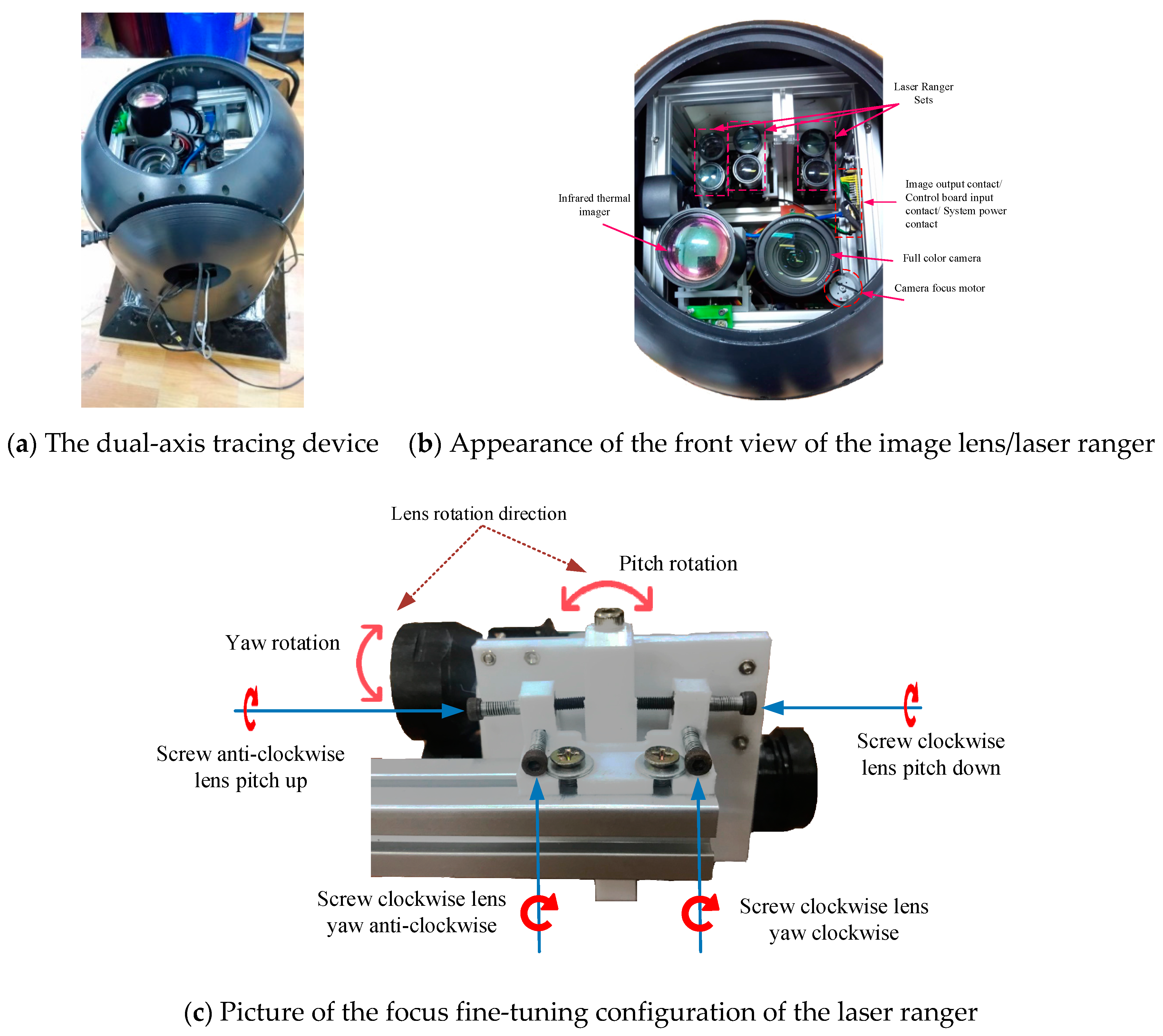

2. System Architecture

2.1. The Dual-Axis Rotary Platform Mechanism

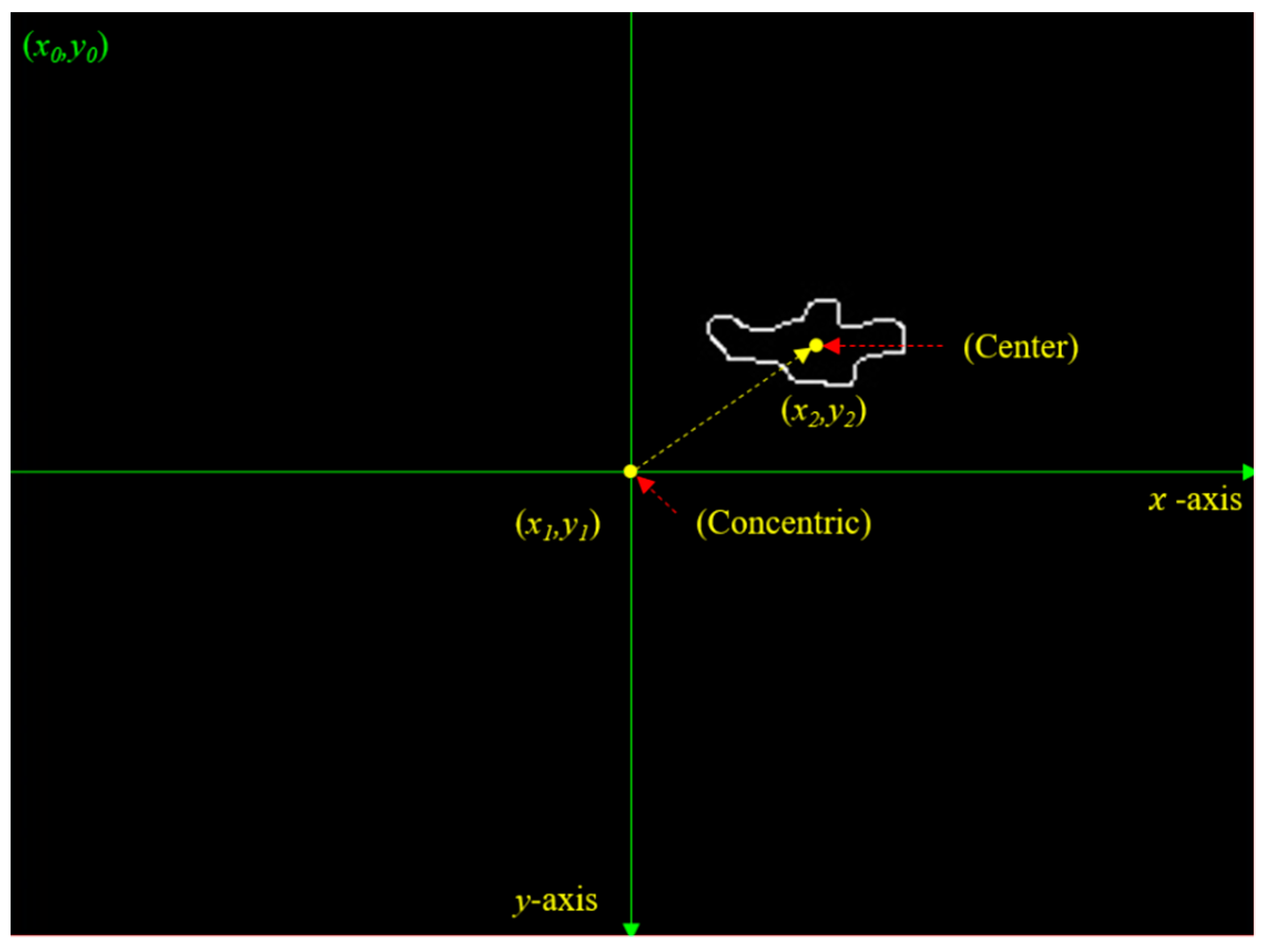

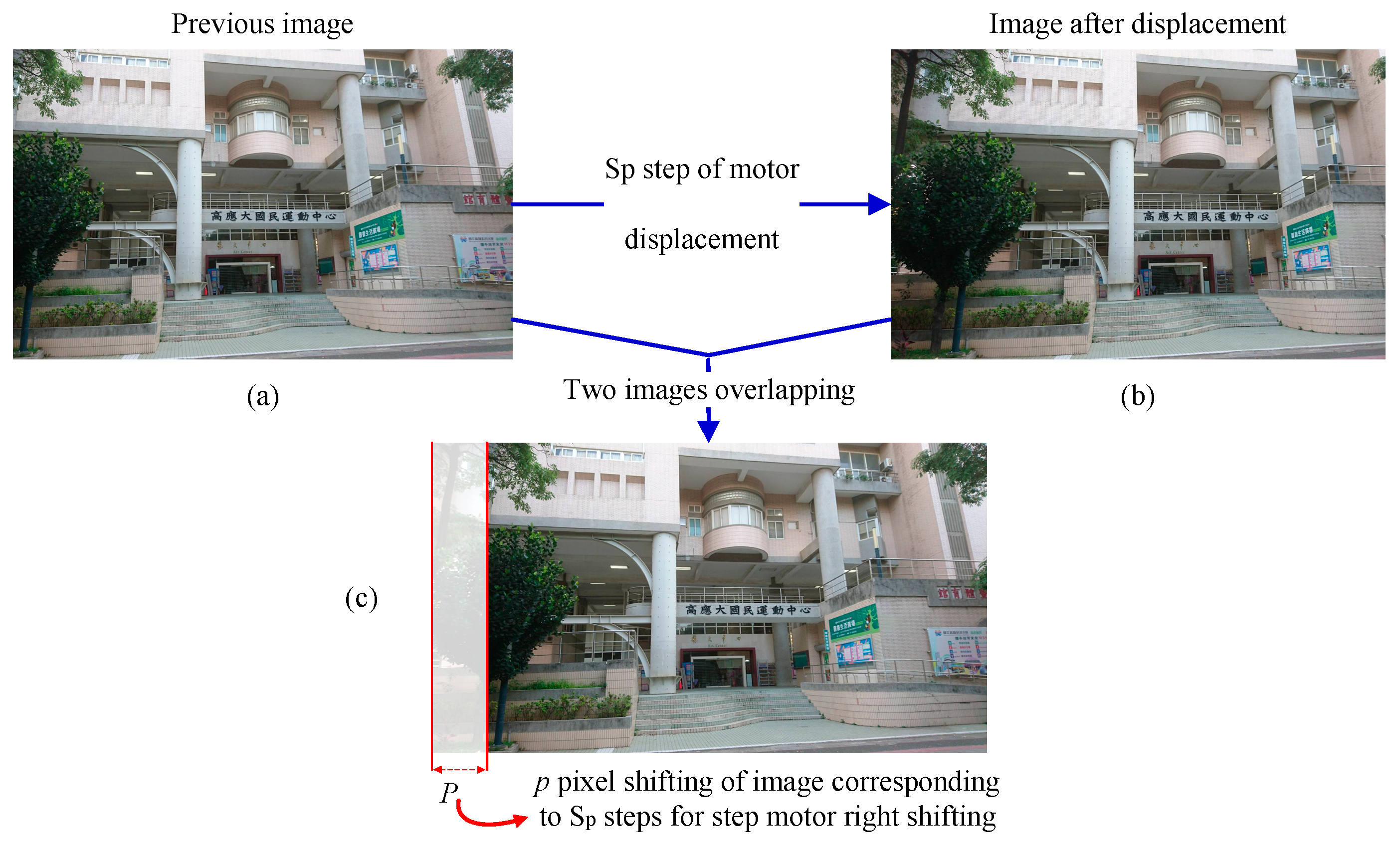

2.2. Drone Visual Image Tracing Method

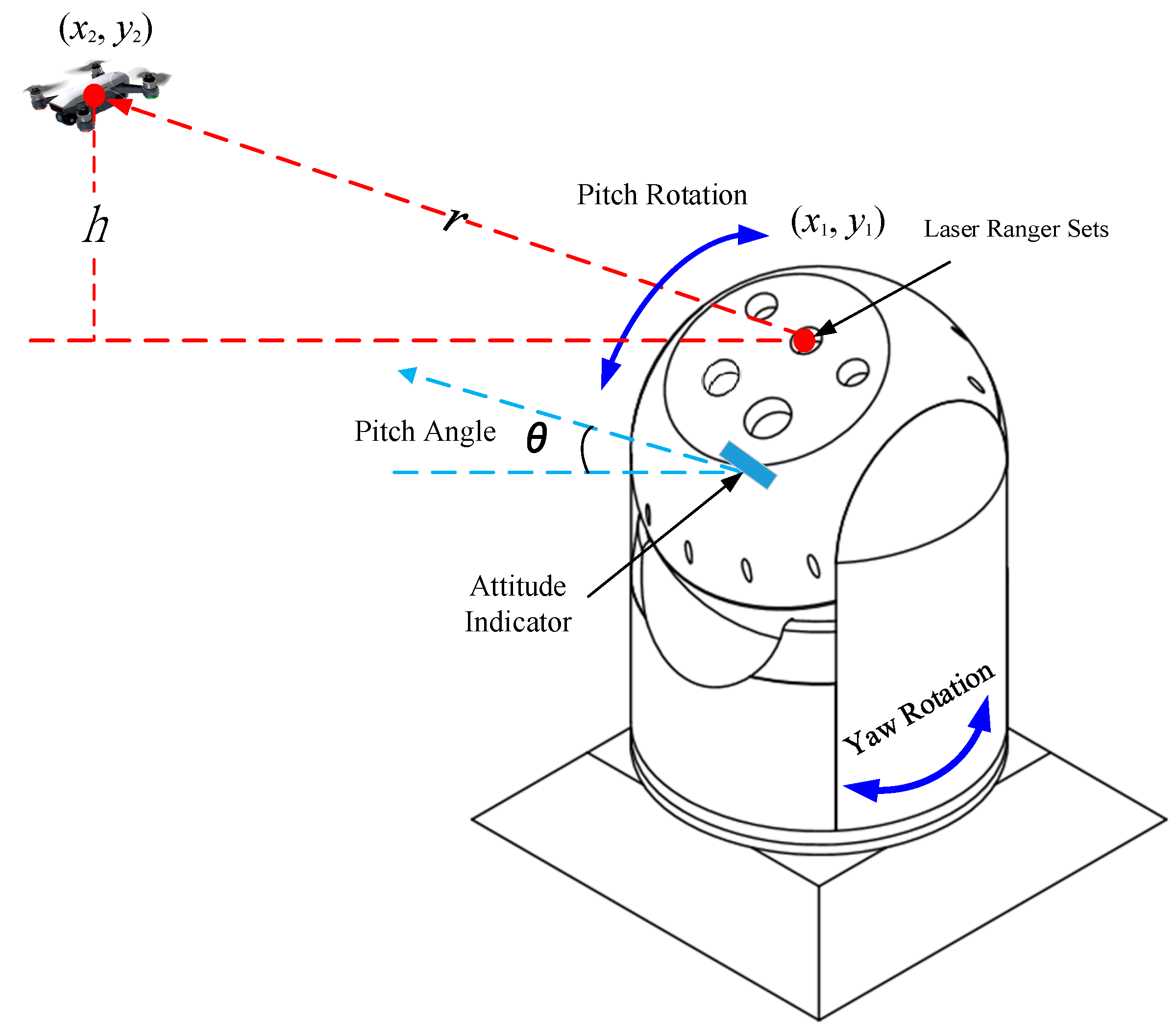

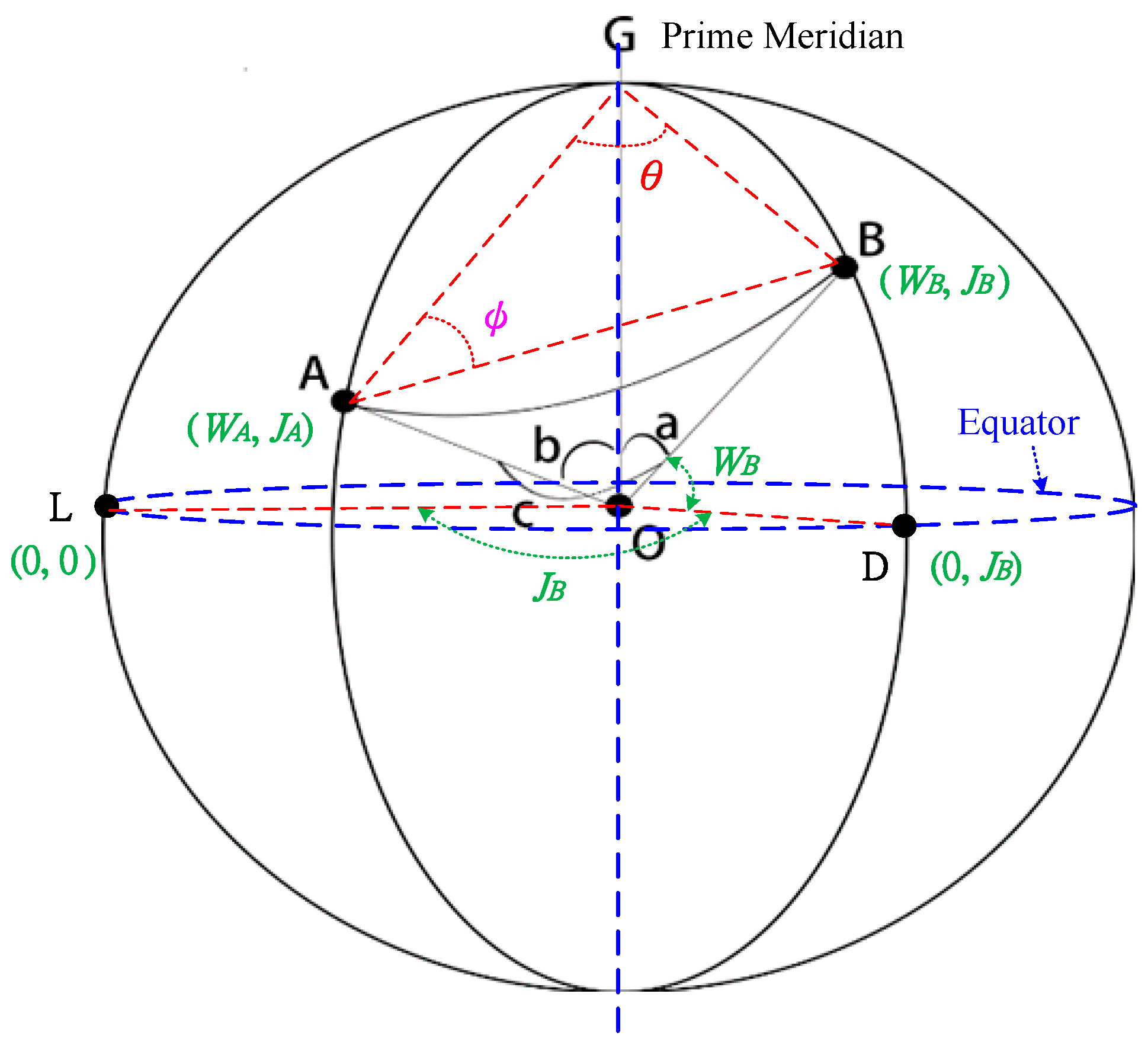

2.3. Drone Three-Dimensional Coordinate Calculation

3. Experimental Results and Discussion

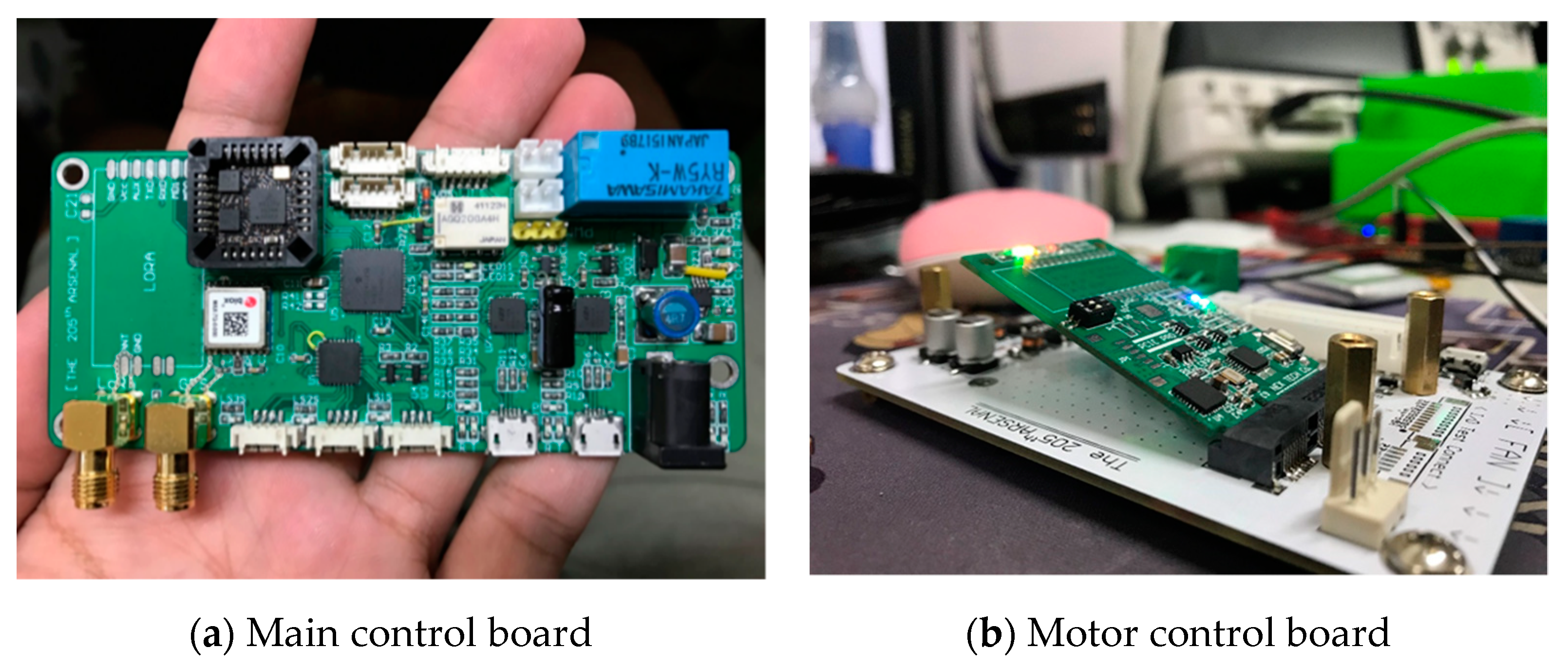

3.1. Development Environment of the Software and Hardware

- Infrared thermal imaging: As shown in Figure 8, this paper uses a mid-range infrared thermal imaging camera (TE–EQ1), which is equipped with a 50 mm fixed-focus lens and has a resolution of 384 × 288 pixels. It is suitable for mobile tracing tests with the drone flying at an altitude of about 100 m. The TE–EQ1 can observe the distribution of heat sources on a drone, which allows the drone to be traced using computer vision techniques.

- Full-color single-lens reflex camera: This study uses a Sony α7SII (Japan) with a Sony SEL24240 lens, as shown in Figure 9. The Sony α7SII features ultra-high sensitivity and an ultra-wide dynamic range. It has a 35 mm full-frame 12.2-megapixel sensor with an ISO range of 50 to 409,600 and a BIONZ X processor, which optimizes sensor performance, brings out detail, and reduces noise. It records 4K (QFHD: 3840 × 2160) movies with full-frame readout and no pixel binning, which effectively suppresses edge aliasing and moiré. The main reason for choosing this camera is that it outputs video over HDMI, giving high-quality real-time image transmission with less delay than other cameras of a similar price, so that images can be acquired and processed immediately. Additionally, the Sony SEL24240 telephoto lens has a focal length of up to 240 mm, allowing drones at short distances (<100 m) to be clearly displayed on the tracing screen.

- Thermal image acquisition: The UPG311 UVC image acquisition device is used to read the images from the infrared thermal camera, whose output interface is NTSC or PAL. The device is compatible with multiple operating systems and supports plug and play through UVC (USB video device class). The protocol supports an image resolution of up to 640 × 480 pixels.

- Frame grabber for full-color camera: We used the Magewell USB Capture HDMI Gen2 capture card, which allows several units to be connected to a single machine at the same time and is compatible with Windows, Linux, and OS X. It is a true plug-and-play device that requires no driver installation, and it supports a fully standard development interface. Its input and output interfaces are HDMI and USB 3.0, respectively. It supports an input image resolution of up to 2048 × 2160 and a frame rate of up to 120 fps, and it automatically selects the aspect ratio that is most appropriate for the picture.

- Laser ranger: This article uses the LRF 28-2000 semiconductor laser ranger, as shown in Figure 10. The main reason for using this laser ranger is that it operates in the 900–908 nm wavelength band to protect the human eye, with a range of 3.5 m to as long as 2.0 km. Its measurement resolution and measurement accuracy are 0.1 m and 1.0 m, respectively. These specifications are suitable for measuring the flight altitude of the short-range drones in this study, and its distance measurement accuracy is sufficient to serve as a reference when testing the drone flight altitude.

- Multirotor: At present, the multirotor market is dominated by DJI (Da-Jiang Innovations). Its drones are lightweight, portable, compact, and affordable, which makes them popular with the general public. Additionally, the news media have reported that most drone incidents have involved DJI drones, so this study uses the DJI Mavic Pro multirotor as the main tracing target of the experiment.
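The working distances quoted above for the two cameras can be sanity-checked with a simple pinhole-camera estimate of how many pixels a drone covers on each sensor. The following is only a rough sketch and not part of the authors' method. The focal lengths and pixel counts are taken from the equipment list; the 0.3 m target width, the nominal 36 mm full-frame sensor width, and the 17 µm thermal pixel pitch are assumptions introduced here for illustration and are not stated in the paper.

```python
import math


def pixels_on_target(target_width_m, distance_m, focal_length_mm,
                     sensor_width_mm, horizontal_pixels):
    """Approximate number of horizontal pixels covered by a target.

    Uses the pinhole model: the image of the target on the sensor has
    width f * W / D (similar triangles), which is divided by the pixel pitch.
    """
    pixel_pitch_mm = sensor_width_mm / horizontal_pixels
    image_width_mm = focal_length_mm * target_width_m / distance_m
    return image_width_mm / pixel_pitch_mm


def horizontal_fov_deg(focal_length_mm, sensor_width_mm):
    """Horizontal field of view of a pinhole camera, in degrees."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


# Assumed values (hypothetical, not from the paper).
TARGET_WIDTH_M = 0.3          # rough width of a small multirotor
FULL_FRAME_WIDTH_MM = 36.0    # nominal 35 mm full-frame sensor width
THERMAL_PITCH_MM = 0.017      # assumed 17 um thermal pixel pitch

# Values from the equipment list.
DISTANCE_M = 100.0
color_px = pixels_on_target(TARGET_WIDTH_M, DISTANCE_M, 240.0,
                            FULL_FRAME_WIDTH_MM, 3840)
thermal_px = pixels_on_target(TARGET_WIDTH_M, DISTANCE_M, 50.0,
                              384 * THERMAL_PITCH_MM, 384)

print(f"color camera:   {color_px:.1f} px, "
      f"FOV {horizontal_fov_deg(240.0, FULL_FRAME_WIDTH_MM):.2f} deg")
print(f"thermal camera: {thermal_px:.1f} px, "
      f"FOV {horizontal_fov_deg(50.0, 384 * THERMAL_PITCH_MM):.2f} deg")
```

Under these assumptions, a 0.3 m target at 100 m spans roughly 77 pixels in the 4K color image but only about 9 pixels in the thermal image, which gives a sense of how much less detail the thermal channel provides at that distance.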

3.2. Development of Hardware for the Dual-Axis Device

3.3. Drone Image Identification and Tracing Test in Different Weather Environments

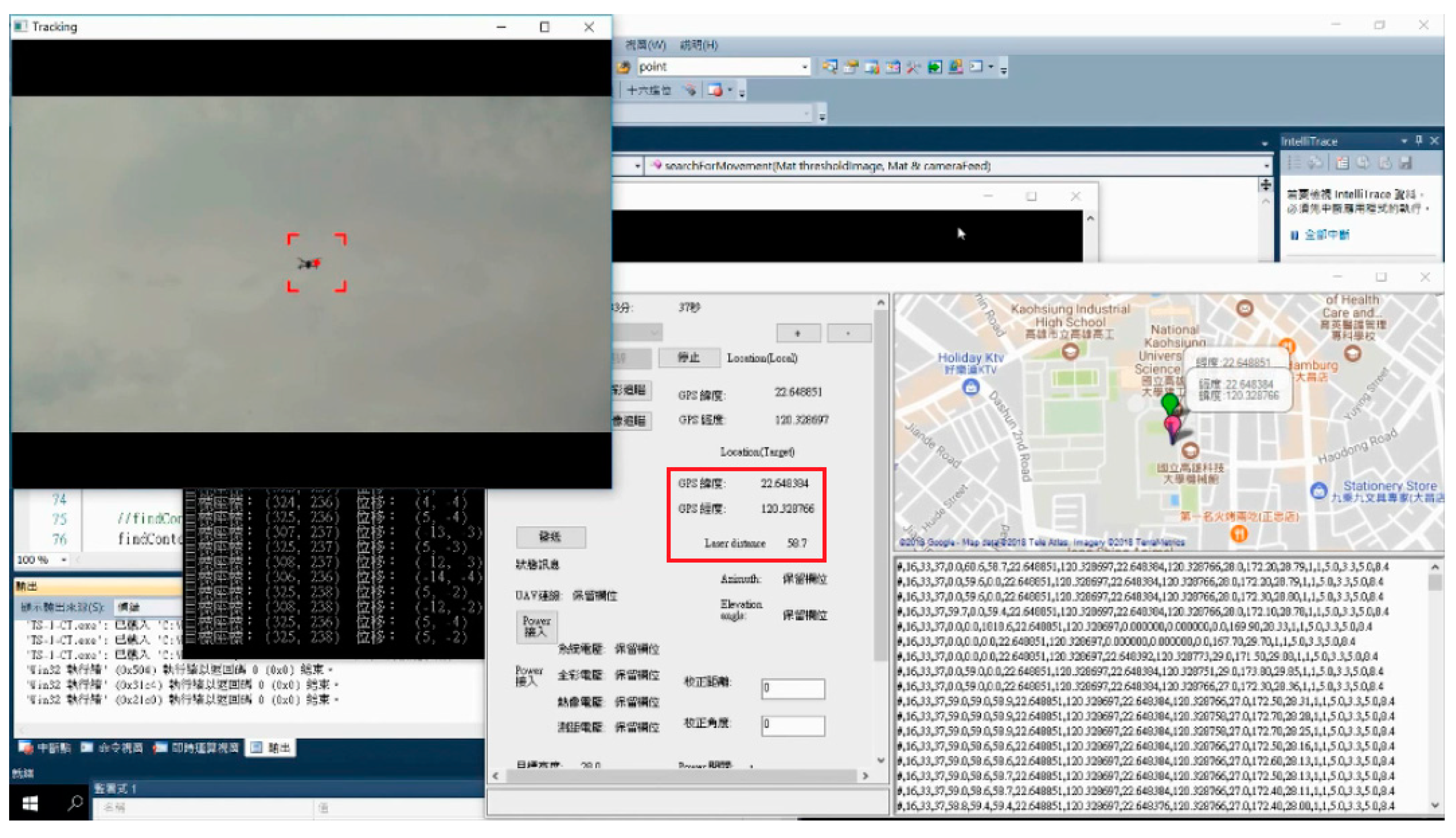

3.4. Test of Drone Tracing and Longitude and Latitude Coordinates

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sheu, B.-H.; Chiu, C.-C.; Lu, W.-T.; Huang, C.-I.; Chen, W.-P. Development of UAV Tracing and Coordinate Detection Method Using a Dual-Axis Rotary Platform for an Anti-UAV System. Appl. Sci. 2019, 9, 2583. https://doi.org/10.3390/app9132583
