Full-Color Imaging System Based on the Joint Integration of a Metalens and Neural Network

Abstract

1. Introduction

2. Theoretical Analyses

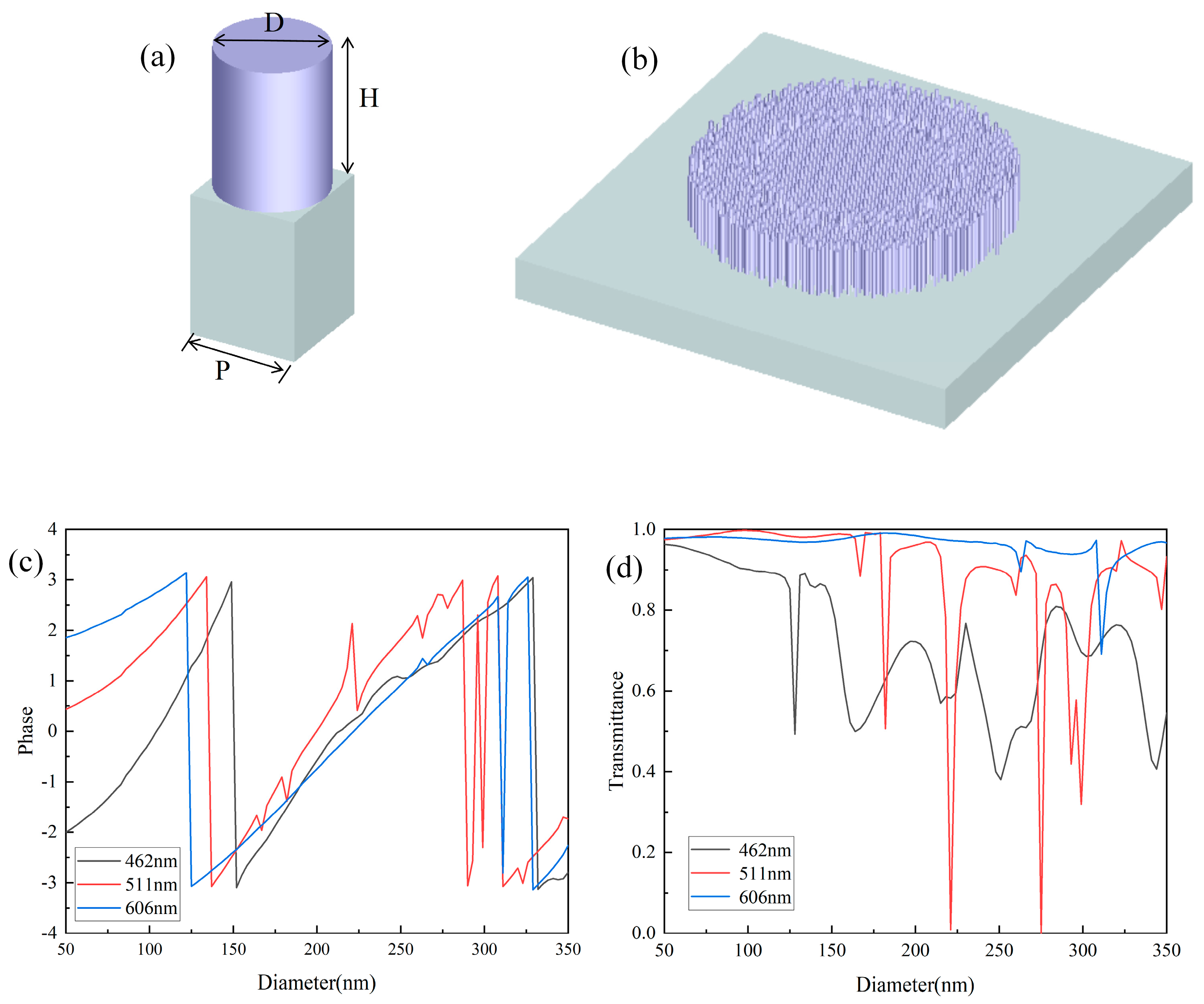

2.1. Metalens Design

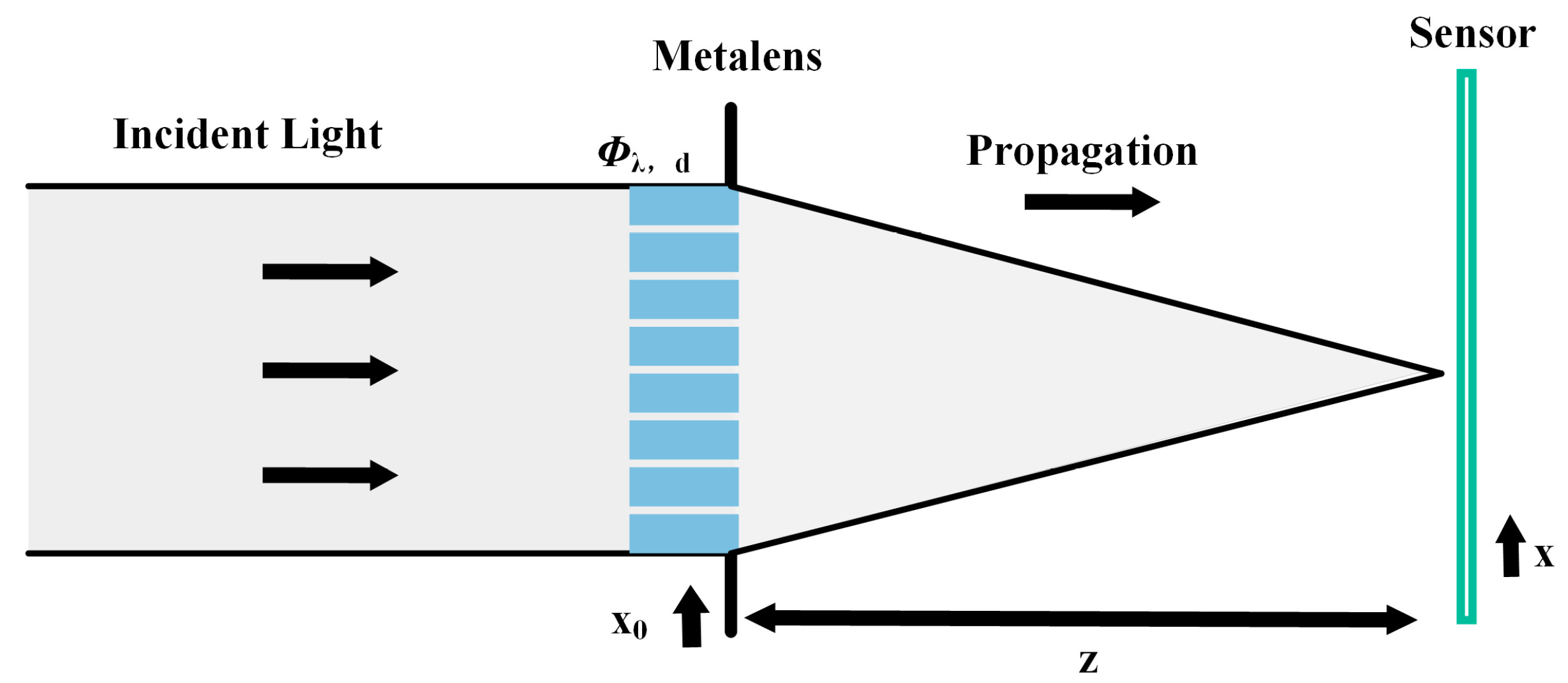

2.2. PSF Calculation

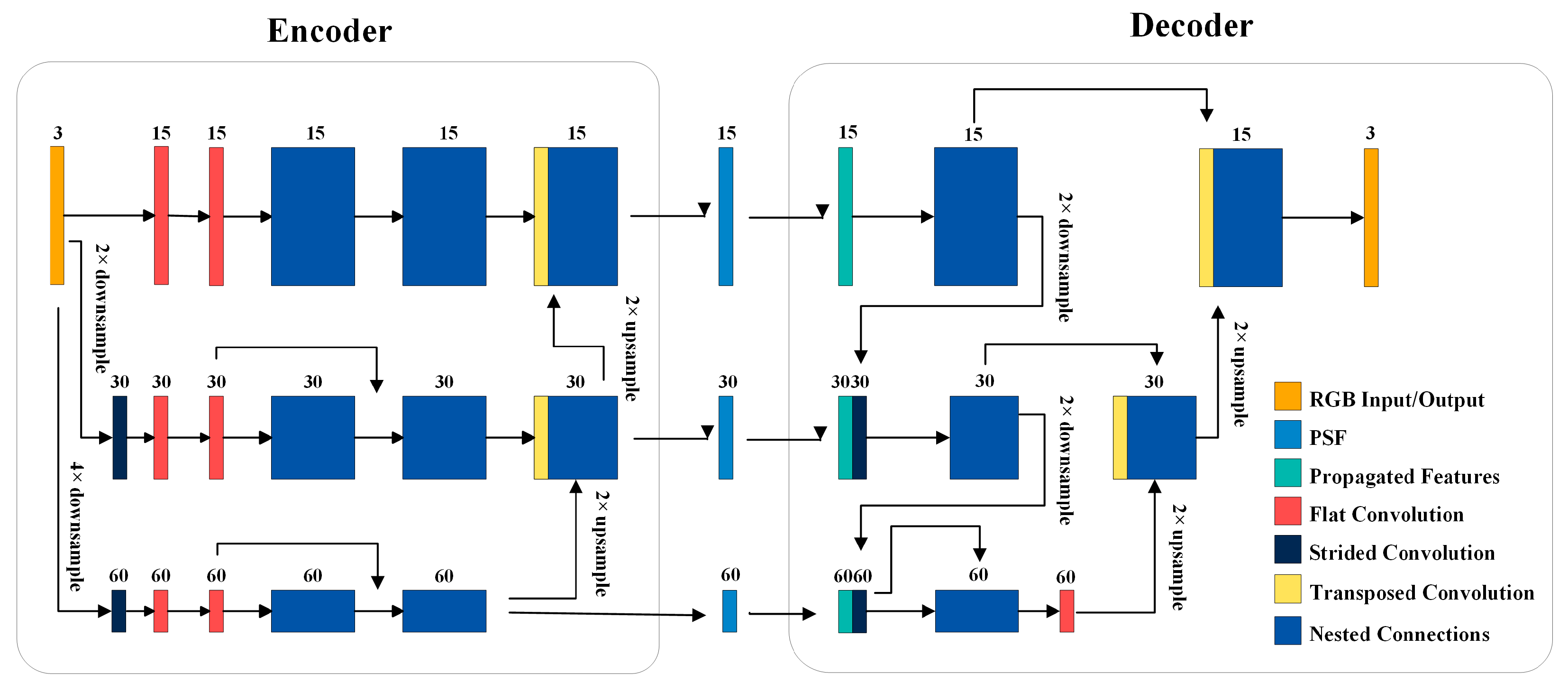

2.3. Network Architecture

2.4. Loss Definition

3. Results and Discussion

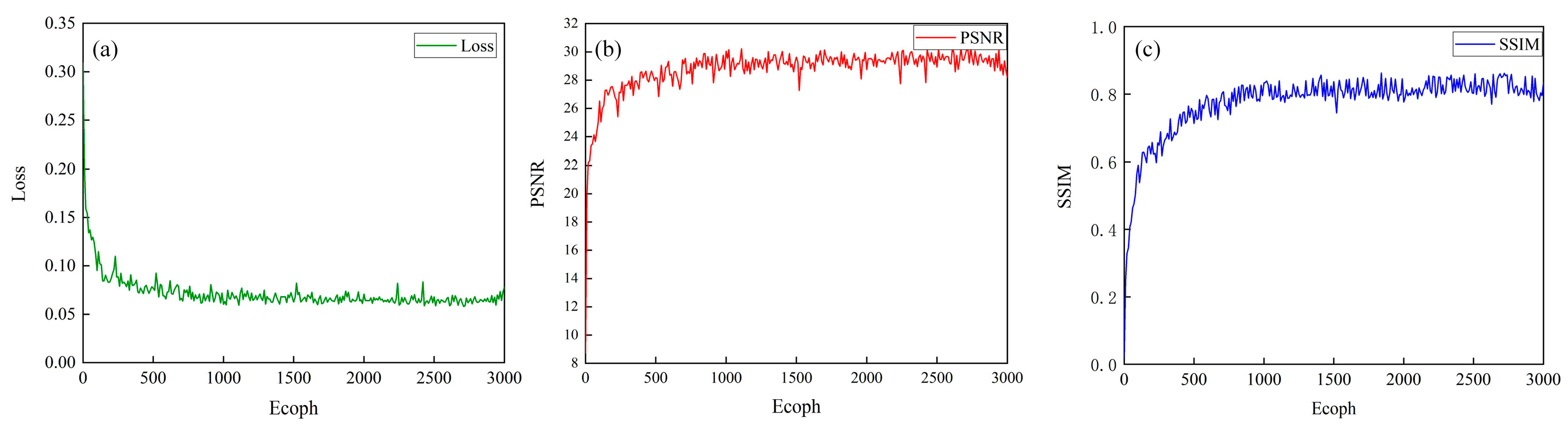

3.1. Experimental Details

3.2. Results

3.3. Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Li, L.; Wang, Q.H. Zoom lens design using liquid lenses for achromatic and spherical aberration corrected target. Opt. Eng. 2012, 51, 043001.

- Feng, B.; Shi, Z.L.; Zhao, Y.H.; Liu, H.Z.; Liu, L. A wide-FoV athermalized infrared imaging system with a two-element lens. Infrared Phys. Technol. 2017, 87, 11–21.

- Arbabi, A.; Horie, Y.; Bagheri, M.; Faraon, A. Dielectric metasurfaces for complete control of phase and polarization with subwavelength spatial resolution and high transmission. Nat. Nanotechnol. 2015, 10, 937–943.

- Cencillo-Abad, P.; Ou, J.Y.; Plum, E.; Zheludev, N.I. Electro-mechanical light modulator based on controlling the interaction of light with a metasurface. Sci. Rep. 2017, 7, 5405.

- Overvig, A.C.; Shrestha, S.; Malek, S.C.; Lu, M.; Stein, A.; Zheng, C.X.; Yu, N.F. Dielectric metasurfaces for complete and independent control of the optical amplitude and phase. Light Sci. Appl. 2019, 8, 92.

- Chen, K.; Feng, Y.J.; Monticone, F.; Zhao, J.M.; Zhu, B.; Jiang, T.; Zhang, L.; Kim, Y.; Ding, X.M.; Zhang, S.; et al. A reconfigurable active Huygens’ metalens. Adv. Mater. 2017, 29, 1606422.

- Yoshikawa, H. Computer-generated holograms for 3D displays. In Proceedings of the 1st International Conference on Photonics Solutions (ICPS), Pattaya, Thailand, 26–28 May 2013.

- Khorasaninejad, M.; Aieta, F.; Kanhaiya, P.; Kats, M.A.; Genevet, P.; Rousso, D.; Capasso, F. Achromatic Metasurface Lens at Telecommunication Wavelengths. Nano Lett. 2015, 15, 5358–5362.

- Avayu, O.; Almeida, E.; Prior, Y.; Ellenbogen, T. Composite functional metasurfaces for multispectral achromatic optics. Nat. Commun. 2017, 8, 14992.

- Arbabi, E.; Arbabi, A.; Kamali, S.M.; Horie, Y.; Faraon, A. Multiwavelength metasurfaces through spatial multiplexing. Sci. Rep. 2016, 6, 32803.

- Shrestha, S.; Overvig, A.C.; Lu, M.; Stein, A.; Yu, N.F. Broadband achromatic dielectric metalenses. Light Sci. Appl. 2018, 7, 85.

- Fan, Z.B.; Qiu, H.Y.; Zhang, H.L.; Pang, X.N.; Zhou, L.D.; Liu, L.; Ren, H.; Wang, Q.H.; Dong, J.W. A broadband achromatic metalens array for integral imaging in the visible. Light Sci. Appl. 2019, 8, 67.

- Jaffari, Z.H.; Abbas, A.; Kim, C.M.; Shin, J.; Kwak, J.; Son, C.; Lee, Y.G.; Kim, S.; Chon, K.; Cho, K.H. Transformer-based deep learning models for adsorption capacity prediction of heavy metal ions toward biochar-based adsorbents. J. Hazard. Mater. 2024, 462, 132773.

- Jaffari, Z.H.; Abbas, A.; Umer, M.; Kim, E.-S.; Cho, K.H. Crystal graph convolution neural networks for fast and accurate prediction of adsorption ability of Nb2CTx towards Pb(II) and Cd(II) ions. J. Mater. Chem. A 2023, 11, 9009–9018.

- Iftikhar, S.; Zahra, N.; Rubab, F.; Sumra, R.A.; Khan, M.B.; Abbas, A.; Jaffari, Z.H. Artificial neural networks for insights into adsorption capacity of industrial dyes using carbon-based materials. Sep. Purif. Technol. 2023, 326, 124891.

- An, S.S.; Fowler, C.; Zheng, B.W.; Shalaginov, M.Y.; Tang, H.; Li, H.; Zhou, L.; Ding, J.; Agarwal, A.M.; Rivero-Baleine, C.; et al. A deep learning approach for objective-driven all-dielectric metasurface design. ACS Photonics 2019, 6, 3196–3207.

- Liu, Z.; Zhu, D.; Rodrigues, S.P.; Lee, K.-T.; Cai, W. Generative model for the inverse design of metasurfaces. Nano Lett. 2018, 18, 6570–6576.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems 27: Annual Conference on Neural Information Processing Systems 2014, Montreal, QC, Canada, 8–13 December 2014; pp. 1–27.

- Lin, Z.; Roques-Carmes, C.; Pestourie, R.; Soljačić, M.; Majumdar, A.; Johnson, S.G. End-to-end nanophotonic inverse design for imaging and polarimetry. Nanophotonics 2021, 10, 1177–1187.

- Mansouree, M.; Kwon, H.; Arbabi, E.; McClung, A.; Faraon, A.; Arbabi, A. Multifunctional 2.5D metastructures enabled by adjoint optimization. Optica 2020, 7, 77–84.

- Chung, H.; Miller, O.D. High-NA achromatic metalenses by inverse design. Opt. Express 2020, 28, 6945–6965.

- Barbastathis, G.; Ozcan, A.; Situ, G. On the use of deep learning for computational imaging. Optica 2019, 6, 921–943.

- Sitzmann, V.; Diamond, S.; Peng, Y.F.; Dun, X.; Boyd, S.; Heidrich, W.; Heide, F.; Wetzstein, G. End-to-end Optimization of Optics and Image Processing for Achromatic Extended Depth of Field and Super-resolution Imaging. ACM Trans. Graph. 2018, 37, 1–13.

- Chang, J.; Wetzstein, G. Deep optics for monocular depth estimation and 3D object detection. In Proceedings of the IEEE/CVF ICCV, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10192–10201.

- Wu, Y.C.; Boominathan, V.; Chen, H.J.; Sankaranarayanan, A.; Veeraraghavan, A. PhaseCam3D: Learning phase masks for passive single view depth estimation. In Proceedings of the 2019 IEEE International Conference on Computational Photography (ICCP), Tokyo, Japan, 15–17 May 2019.

- Metzler, C.A.; Ikoma, H.; Peng, Y.F.; Wetzstein, G. Deep optics for single-shot high-dynamic-range imaging. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1372–1382.

- Chang, J.; Sitzmann, V.; Dun, X.; Heidrich, W.; Wetzstein, G. Hybrid optical-electronic convolutional neural networks with optimized diffractive optics for image classification. Sci. Rep. 2018, 8, 12324.

- Dun, X.; Ikoma, H.; Wetzstein, G.; Wang, Z.S.; Cheng, X.B.; Peng, Y.F. Learned rotationally symmetric diffractive achromat for full-spectrum computational imaging. Optica 2020, 7, 913–922.

- Tseng, E.; Colburn, S.; Whitehead, J.; Huang, L.; Baek, S.-H.; Majumdar, A.; Heide, F. Neural nano-optics for high-quality thin lens imaging. Nat. Commun. 2021, 12, 6493–6503.

- Colburn, S.; Zhan, A.; Majumdar, A. Metasurface optics for full-color computational imaging. Sci. Adv. 2018, 4, 2114.

- Guo, Q.; Shi, Z.J.; Huang, Y.W.; Alexander, E.; Qiu, C.W.; Capasso, F.; Zickler, T. Compact single-shot metalens depth sensors inspired by eyes of jumping spiders. Proc. Natl. Acad. Sci. USA 2019, 116, 22959–22965.

- Tan, S.Y.; Yang, F.; Boominathan, V.; Veeraraghavan, A.; Naik, G. 3D imaging using extreme dispersion in optical metasurfaces. ACS Photonics 2021, 8, 1421–1429.

- Fan, Q.B.; Xu, W.Z.; Hu, X.M.; Zhu, W.Q.; Yue, T.; Zhang, C.; Yan, F.; Chen, L.; Lezec, H.J.; Lu, Y.Q.; et al. Trilobite-inspired neural nanophotonic light-field camera with extreme depth-of-field. Nat. Commun. 2022, 13, 2130.

- Hua, X.; Wang, Y.J.; Wang, S.M.; Zou, X.J.; Zhou, Y.; Li, L.; Yan, F.; Cao, X.; Xiao, S.M.; Tsai, D.P.; et al. Ultra-compact snapshot spectral light-field imaging. Nat. Commun. 2022, 13, 30439–30448.

- Yu, Z.Q.; Zhang, Q.B.; Tao, X.; Li, Y.; Tao, C.N.; Wu, F.; Wang, C.; Zheng, Z.R. High-performance full-color imaging system based on end-to-end joint optimization of computer-generated holography and metalens. Opt. Express 2022, 30, 40871–40883.

- Zhang, Q.B.; Yu, Z.Q.; Liu, X.Y.; Wang, C.; Zheng, Z.R. End-to-end joint optimization of metasurface and image processing for compact snapshot hyperspectral imaging. Opt. Commun. 2023, 530, 129154.

- Li, H.M.; Xiao, X.J.; Fang, B.; Gao, S.L.; Wang, Z.Z.; Chen, C.; Zhao, Y.W.; Zhu, S.N.; Li, T. Bandpass-filter-integrated multiwavelength achromatic metalens. Photonics Res. 2021, 9, 1384–1390.

- Khorasaninejad, M.; Shi, Z.; Zhu, A.Y.; Chen, W.T.; Sanjeev, V.; Zaidi, A.; Capasso, F. Achromatic metalens over 60 nm bandwidth in the visible and metalens with reverse chromatic dispersion. Nano Lett. 2017, 17, 1819–1824.

- Khorasaninejad, M.; Capasso, F. Metalenses: Versatile multifunctional photonic components. Science 2017, 358, 358–366.

- Fan, S.; Joannopoulos, J.D. Analysis of guided resonances in photonic crystal slabs. Phys. Rev. B 2002, 65, 235112.

- Wang, S.; Magnusson, R. Theory and applications of guided-mode resonance filters. Appl. Opt. 1993, 32, 2606–2613.

- Shi, R.X.; Hu, S.L.; Sun, C.Q.; Wang, B.; Cai, Q.Z. Broadband achromatic metalens in the visible light spectrum based on Fresnel zone spatial multiplexing. Nanomaterials 2022, 12, 4298.

- Khare, K.; Butola, M.; Rajora, S. Fourier Optics and Computational Imaging; Springer: Berlin/Heidelberg, Germany, 2015.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Agustsson, E.; Timofte, R. NTIRE 2017 challenge on single image super-resolution: Dataset and study. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 126–135.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, S.; Shi, R.; Wang, B.; Wei, Y.; Qi, B.; Zhou, P. Full-Color Imaging System Based on the Joint Integration of a Metalens and Neural Network. Nanomaterials 2024, 14, 715. https://doi.org/10.3390/nano14080715


Chicago/Turabian style: Hu, Shuling, Ruixue Shi, Bin Wang, Yuan Wei, Binzhi Qi, and Peng Zhou. 2024. "Full-Color Imaging System Based on the Joint Integration of a Metalens and Neural Network." Nanomaterials 14, no. 8: 715. https://doi.org/10.3390/nano14080715