1. Introduction

For numerous animals, vision plays a critical role in receiving information from the outside world [1]. In recent decades, scientists have discovered separate visual processing systems in different animal species, each dedicated to processing its own features of interest [2,3,4]. Among these functional processing systems, motion perception is considered the most basic visual capability because it is widespread in visual systems, ranging from vertebrates to invertebrates [5]. Research on motion perception dates back to 1894, when Exner first presented a drawing of a motion detection neural network [6]. In 1912, Wertheimer introduced the phi phenomenon and showed that motion can be perceived from two stationary flashes [7]. The discovery that the activity of optic nerve fibers can be recorded as discharges of impulses made it possible for biologists to study single ganglion cells [8,9,10,11]. After many retinal ganglion cells with light-specific responses within their receptive fields had been observed [12,13], neurons that respond to movement in a direction-selective way were found in both the mammalian retina (direction-selective ganglion cells, DSGCs) and the insect optic lobe (lobula plate tangential cells, LPTCs) [14,15].

To understand the mechanism underlying signal processing in direction-selective neurons (DSNs), scientists have proposed a variety of computational models [16,17,18,19,20]. Borst and Egelhaaf summarized these models and divided them into two main categories: correlation-type models and gradient-type models [21,22]. Gradient-type models, which originated in computer vision, compute motion from the spatial and temporal gradients of the moving image. In contrast, biological motion detectors correlate the brightness values measured at two adjacent image points with each other after one of them has been filtered in time. In 1956, Hassenstein and Reichardt proposed the first biologically based correlation-type model, the so-called HRC model [16]. The HRC model is considered the modern theoretical framework for motion direction detection because it encourages us to understand motion direction selectivity from the perspective of neural computation [23,24]. As experimental techniques have advanced, more details of the neuronal circuits of DSNs have been investigated [25,26,27,28,29,30,31,32,33,34,35,36]. There is now evidence that motion detection in flies can be computed by preferred direction enhancement, and that the direction selectivity of the fly's neural circuits can be well explained by the HRC model [37].
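To make the correlation-type computation concrete, the following is a minimal Python sketch of a textbook opponent Hassenstein–Reichardt correlator acting on two sampled photoreceptor signals. It is an illustration only, not the implementation used in this paper: the function name `hrc_response` is hypothetical, and a pure delay of one sample is swapped in for the temporal filter of the original model.

```python
import numpy as np

def hrc_response(s1, s2, delay=1):
    """Opponent Hassenstein-Reichardt correlator output over time (sketch).

    s1, s2: luminance samples from two neighbouring photoreceptors, with
    receptor 2 placed to the right of receptor 1. A pure delay of `delay`
    samples (delay >= 1) stands in for the temporal filter.
    Positive output = motion from receptor 1 towards receptor 2 (rightward).
    """
    s1 = np.asarray(s1, dtype=float)
    s2 = np.asarray(s2, dtype=float)
    d1 = np.concatenate([np.zeros(delay), s1[:-delay]])  # delayed arm of receptor 1
    d2 = np.concatenate([np.zeros(delay), s2[:-delay]])  # delayed arm of receptor 2
    # Each half-detector multiplies one delayed signal by the other, undelayed
    # signal; subtracting the mirror-symmetric half yields direction selectivity.
    return d1 * s2 - d2 * s1

# A bright spot passes receptor 1 and then receptor 2 (rightward), and vice versa
spot_right = hrc_response([0, 0, 1, 0, 0, 0], [0, 0, 0, 1, 0, 0])
spot_left = hrc_response([0, 0, 0, 1, 0, 0], [0, 0, 1, 0, 0, 0])
print(spot_right.sum(), spot_left.sum())  # 1.0 -1.0
```

The sign of the summed output encodes the direction, which is the property the correlation-type models share; gradient-type models would instead estimate velocity from the ratio of temporal to spatial brightness derivatives.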

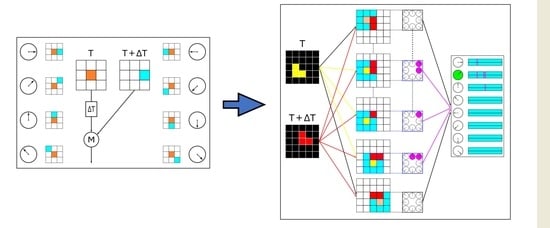

Although direction selectivity in visual pathways has been studied for decades, the exact mechanism of global motion direction detection remains a puzzle [31,38]. To address this issue, we propose an artificial visual system (AVS) to further understand the mechanism of motion direction detection. First, we assume that motion can be observed in two temporally consecutive stationary images. Based on the research of Adelson and Bergen [39], we build our dataset with a sampling frequency high enough that the step size of the object's movement is fixed. Then, we adopt the concept of simple cells [40] and propose a local motion-sensitive directionally detective neuron to detect motion in a particular direction. In our previous works, we studied the motion direction detection mechanism and the orientation detection mechanism that may exist in the biological visual system [41,42,43,44,45,46]. In studies of mammalian motion vision, we relied on the dendritic computation of direction-selective ganglion cells and on Barlow's retinal inhibitory scheme in direction-selective ganglion cells, respectively, to successfully detect the global motion direction using the multi-neurons scheme scanning mechanism [41,42]. In this paper, we build our neurons to respond to ON motion signals with high pattern contrast. The proposed neuron, based on the core computation of the HRC model, detects the motion direction with two spatiotemporally separated photoreceptors. Furthermore, the proposed neuron can easily be extended to two-dimensional multi-direction detection; for simplicity, eight neurons are employed to detect eight potential directions of motion. Finally, we use the full-neurons scheme motion direction detection mechanism to detect the global direction of motion. Inspired by the fly's visual system, we assume that each light spot in the visual field provides signals to an array of local motion-sensitive directionally detective neurons, and we introduce the activation strength to obtain the global direction of motion. Similar to the way LPTCs sum signals from medulla columns to generate a wide-field response [33], we sum the number of activated neurons that detect the same direction and take the direction with the maximum value as the global motion direction. To verify the reliability of our AVS, we conducted a series of experiments. The experimental results show that our AVS not only detects the global motion direction regardless of object size and shape, but also performs well in noisy conditions. Moreover, we compare the system's performance with that of a time-considered CNN and EfficientNetB0 under the same conditions. The results show that our AVS outperforms both the CNN and EfficientNetB0 on motion direction detection, not only in learning cost but also in noise resistance.
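The local neuron and the full-neurons readout described above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' code: it assumes binary frames at T and T + ∆T, a one-pixel step, a local neuron that computes the AND (product) of the delayed signal at one pixel with the current signal at its neighbor in the preferred direction, and a direction convention with 0° pointing right and angles increasing counterclockwise. The names `OFFSETS`, `local_activations`, and `global_direction` are hypothetical.

```python
import numpy as np

# Assumed convention: 0 deg = rightward, angles grow counterclockwise,
# and image row indices grow downward (so "up" means row - 1).
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1),
           180: (0, -1), 225: (1, -1), 270: (1, 0), 315: (1, 1)}

def local_activations(frame_t, frame_t_dt):
    """Count activated local neurons for each of the eight directions.

    Hypothetical HRC-style neuron: the neuron at pixel (r, c) tuned to angle a
    fires when (r, c) is ON at time T (delayed photoreceptor) AND the pixel one
    step away in direction a is ON at time T + dT (undelayed photoreceptor).
    """
    frame_t = np.asarray(frame_t, dtype=bool)
    padded = np.pad(np.asarray(frame_t_dt, dtype=bool), 1)  # off-image pixels read as OFF
    h, w = frame_t.shape
    counts = {}
    for angle, (dr, dc) in OFFSETS.items():
        # neighbour[r, c] == frame_t_dt[r + dr, c + dc]
        neighbour = padded[1 + dr:1 + dr + h, 1 + dc:1 + dc + w]
        counts[angle] = int(np.count_nonzero(frame_t & neighbour))
    return counts

def global_direction(frame_t, frame_t_dt):
    """Full-neurons scheme: the direction with the most activated neurons wins."""
    counts = local_activations(frame_t, frame_t_dt)
    return max(counts, key=counts.get), counts

# Usage: a 4 x 4 square moved one pixel to the upper left between T and T + dT
a = np.zeros((32, 32), dtype=bool)
a[10:14, 10:14] = True
b = np.zeros_like(a)
b[9:13, 9:13] = True
direction, counts = global_direction(a, b)
print(direction, counts[135])  # 135 16
```

Under this assumed rule, a rigidly moving object activates the neurons tuned to its true direction at every object pixel, so that direction's count equals the object size; this is consistent with the counts of 16, 32, and 64 reported for the 16-, 32-, and 64-pixel examples in Section 3. Ties are broken arbitrarily here (the first maximum wins), a case the text does not address.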

3. Results and Discussion

To validate the reliability of our AVS, we conduct a series of experiments on datasets with a 32 × 32 (1024-pixel) background. The objects in all experiments have random sizes ranging from 1 to 128 pixels, and their shapes and positions are arbitrary.
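A dataset of this kind can be sketched as follows. The generator below is hypothetical and is not the procedure used to build the datasets in this paper: it assumes that an arbitrary shape can be approximated by growing a random 8-connected blob of the requested size, and that each pair of frames differs by a one-pixel move in one of eight directions, in line with the fixed step size assumed in the Introduction. `random_object`, `make_pair`, and `OFFSETS` are illustrative names.

```python
import numpy as np

# Assumed convention: 0 deg = rightward, counterclockwise, rows grow downward.
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1),
           180: (0, -1), 225: (1, -1), 270: (1, 0), 315: (1, 1)}

def random_object(size, shape=(32, 32), rng=None):
    """Grow a random 8-connected binary object with exactly `size` pixels.

    The object is kept one pixel away from the border so that any one-step
    move stays inside the background.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = shape
    img = np.zeros(shape, dtype=bool)
    seed = (int(rng.integers(1, h - 1)), int(rng.integers(1, w - 1)))
    img[seed] = True
    pixels = [seed]
    while len(pixels) < size:
        # Pick a random object pixel and one of its 8 neighbours
        r, c = pixels[int(rng.integers(len(pixels)))]
        dr, dc = OFFSETS[int(rng.choice(list(OFFSETS)))]
        nr, nc = r + dr, c + dc
        if 1 <= nr < h - 1 and 1 <= nc < w - 1 and not img[nr, nc]:
            img[nr, nc] = True
            pixels.append((nr, nc))
    return img

def make_pair(size, angle, rng=None):
    """Return the frames at T and T + dT for a one-pixel move in `angle`."""
    frame_t = random_object(size, rng=rng)
    dr, dc = OFFSETS[angle]
    # np.roll cannot wrap around here because the object never touches the border
    frame_t_dt = np.roll(frame_t, shift=(dr, dc), axis=(0, 1))
    return frame_t, frame_t_dt

rng = np.random.default_rng(0)
f0, f1 = make_pair(16, 135, rng)
print(f0.sum(), f1.sum())  # 16 16
```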

In the first series of experiments, we test our AVS on a noise-free background. The dataset contains a total of 192,000 images (24,000 images for each object size), and the detection results are presented in Table 1. The activation plots of the AVS for detecting the motion direction of a 16-pixel object on a noise-free background are shown in Figure 5. The activation numbers of the eight local motion-sensitive directionally detective neurons are: 0°-detective neurons 5, 45°-detective neurons 5, 90°-detective neurons 9, 135°-detective neurons 16, 180°-detective neurons 8, 225°-detective neurons 7, 270°-detective neurons 6, and 315°-detective neurons 5. Since the 135°-detective neurons have the highest activation number, the global direction of this 16-pixel object's motion is upper leftward.
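The readout for this example amounts to picking the largest of the eight reported activation numbers. The small Python sketch below does this with the Figure 5 numbers quoted above. The names for 0°, 45°, 90°, 180°, and 315° follow the same convention as the names used in the text but are our own labels, and `share` (the winner's fraction of all activations) is only an illustrative margin, not a quantity defined in this paper.

```python
# Activation numbers reported for the 16-pixel object (Figure 5)
fig5 = {0: 5, 45: 5, 90: 9, 135: 16, 180: 8, 225: 7, 270: 6, 315: 5}

# Direction names (0 deg = rightward, angles increase counterclockwise)
NAMES = {0: "rightward", 45: "upper rightward", 90: "upward",
         135: "upper leftward", 180: "leftward", 225: "lower leftward",
         270: "downward", 315: "lower rightward"}

def decode(counts):
    """Return the winning angle, its name, and its share of all activations."""
    winner = max(counts, key=counts.get)
    share = counts[winner] / sum(counts.values())
    return winner, NAMES[winner], share

angle, name, share = decode(fig5)
print(angle, name, round(share, 3))  # 135 upper leftward 0.262
```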

In the second series of experiments, we test our AVS on backgrounds with 1% to 10% separated noise. The dataset contains a total of 192,000 images (24,000 images for each object size), and the detection results are presented in Table 2. Separated noise has no effect on the detection results because the noise remains stationary between time T and time T + ∆T. Therefore, no local motion-sensitive directionally detective neurons are activated by the noise.

Figure 6 shows an example of the activation plots of the AVS for detecting the motion direction of a 32-pixel object on a background with 10% separated noise. The activation numbers of the eight local motion-sensitive directionally detective neurons are: 0°-detective neurons 17, 45°-detective neurons 14, 90°-detective neurons 15, 135°-detective neurons 14, 180°-detective neurons 15, 225°-detective neurons 18, 270°-detective neurons 32, and 315°-detective neurons 18. Thus, the global direction of this 32-pixel object's motion is downward.

In the third series of experiments, we test our AVS on backgrounds with 1% to 10% connected noise. The dataset contains a total of 192,000 images (24,000 images for each object size), and the detection results are presented in Table 3. The results show that connected noise does affect detection, especially when the object is small. However, as the object size increases, detection accuracy returns to a high level. The activation plots of the AVS for detecting the motion direction of a 64-pixel object on a background with 2% connected noise are presented in Figure 7, where the error activations caused by noise are represented by purple dots. The activation numbers of the eight local motion-sensitive directionally detective neurons are: 0°-detective neurons 33, 45°-detective neurons 31, 90°-detective neurons 35, 135°-detective neurons 38, 180°-detective neurons 43, 225°-detective neurons 64, 270°-detective neurons 41, and 315°-detective neurons 34. Thus, the global direction of this 64-pixel object's motion is lower leftward.
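The difference between the two noise types can be illustrated by applying an assumed local-neuron rule (the AND of pixel (r, c) at time T with its neighbor in the preferred direction at time T + ∆T) to noise alone. In this hypothetical sketch, stationary noise pixels activate a neuron only when an adjacent pixel is also noise. That would be consistent with separated noise leaving the results unchanged while connected noise adds error activations, which matter most when the object, and hence its true-direction count, is small. The pixel positions are arbitrary examples, not taken from the datasets, and `activations` is an illustrative name.

```python
import numpy as np

# Assumed convention: 0 deg = rightward, counterclockwise, rows grow downward.
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1),
           180: (0, -1), 225: (1, -1), 270: (1, 0), 315: (1, 1)}

def activations(frame_t, frame_t_dt):
    """Count activated local neurons per direction under the assumed AND rule."""
    padded = np.pad(frame_t_dt, 1)  # pixels outside the image read as OFF
    h, w = frame_t.shape
    return {a: int(np.count_nonzero(frame_t & padded[1 + dr:1 + dr + h, 1 + dc:1 + dc + w]))
            for a, (dr, dc) in OFFSETS.items()}

separated = np.zeros((32, 32), dtype=bool)
separated[[5, 12, 20], [7, 25, 14]] = True  # isolated pixels with no noise neighbours
connected = np.zeros((32, 32), dtype=bool)
connected[10, 10:12] = True                 # two horizontally adjacent noise pixels

# Noise is stationary, so the same noise frame is seen at T and T + dT
print(sum(activations(separated, separated).values()))  # 0
print(activations(connected, connected))
# {0: 1, 45: 0, 90: 0, 135: 0, 180: 1, 225: 0, 270: 0, 315: 0}
```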

In the last series of experiments, we compare the performance of the AVS, the time-considered CNN, and EfficientNetB0. Recent research has shown that convolutional architectures perform well on tasks such as audio synthesis, and convolutional networks are regarded as a natural starting point for sequence modeling tasks [58]. Therefore, we propose a time-considered CNN and train it to detect the global direction of motion. The time-considered CNN consists of eight convolution kernels (3 × 3), eight feature maps (32 × 32), and eight outputs. Its structure is shown in Figure 8. First, we used the noise-free dataset and randomly picked 3000 of the 24,000 images for each object size to form a new dataset. Then, we used 15,000 images from the new dataset to train the CNN and EfficientNetB0, and 5000 of the remaining images to form the test dataset. Finally, we saved the CNN and EfficientNetB0 once their training accuracy reached 100% and compared their performance with that of the proposed AVS. The accuracy rates on the test dataset are: AVS 100%, time-considered CNN 63.6%, and EfficientNetB0 100%. Furthermore, we compared the three models on the dataset with separated noise and the dataset with connected noise. These detection results are shown in Table 4, Table 5, Table 6, and Table 7. These tables show that our AVS performs better than both the time-considered CNN and EfficientNetB0. Furthermore, because our AVS adopts the spatial structure of the basic form of the HRC model, it requires almost no training data, whereas the CNN and EfficientNetB0 need a large amount of data for training.
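For comparison, the time-considered CNN described in this paragraph can be sketched as a NumPy forward pass. The text specifies only eight 3 × 3 kernels, eight 32 × 32 feature maps, and eight outputs, so everything else here is an assumption: the two frames are stacked as a two-channel input, the convolution uses zero padding so the maps stay 32 × 32, a ReLU follows it, and a single dense layer with softmax maps the flattened maps to the eight directions. Training is omitted, and `TimeConsideredCNN` and `conv3x3_same` are hypothetical names.

```python
import numpy as np

def conv3x3_same(x, kernels, bias):
    """Cross-correlate x (C, H, W) with kernels (K, C, 3, 3) using zero 'same' padding."""
    _, h, w = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((kernels.shape[0], h, w))
    for i in range(3):
        for j in range(3):
            # Contribution of kernel tap (i, j), summed over input channels
            out += np.einsum("kc,chw->khw", kernels[:, :, i, j], xp[:, i:i + h, j:j + w])
    return out + bias[:, None, None]

class TimeConsideredCNN:
    """Hypothetical reading of the time-considered CNN (forward pass only)."""

    def __init__(self, rng, in_channels=2, n_kernels=8, size=32, n_out=8):
        self.kernels = rng.normal(0.0, 0.1, (n_kernels, in_channels, 3, 3))
        self.kernel_bias = np.zeros(n_kernels)
        self.weights = rng.normal(0.0, 0.01, (n_out, n_kernels * size * size))
        self.bias = np.zeros(n_out)

    def forward(self, frame_t, frame_t_dt):
        x = np.stack([frame_t, frame_t_dt]).astype(float)               # (2, 32, 32)
        maps = np.maximum(conv3x3_same(x, self.kernels, self.kernel_bias), 0.0)  # (8, 32, 32)
        logits = self.weights @ maps.ravel() + self.bias                # (8,)
        e = np.exp(logits - logits.max())                               # numerically stable softmax
        return e / e.sum()

    def n_params(self):
        return sum(p.size for p in (self.kernels, self.kernel_bias, self.weights, self.bias))

rng = np.random.default_rng(0)
model = TimeConsideredCNN(rng)
a = np.zeros((32, 32))
a[10:14, 10:14] = 1.0
b = np.roll(a, shift=(-1, -1), axis=(0, 1))  # one-pixel move to the upper left
probs = model.forward(a, b)
print(probs.shape, model.n_params())  # (8,) 65696
```

Under these assumptions the network has 65,696 trainable parameters (152 in the convolution layer and 65,544 in the dense layer), all of which must be learned from data, whereas the AVS's local neurons are fixed by design.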

4. Conclusions

In this paper, we proposed an artificial visual system that uses the full-neurons scheme motion direction detection mechanism to detect the global direction of motion. With reference to the ON-edge motion pathway in flies, we assumed that each light spot in the visual field is received by eight local motion-sensitive directionally detective neurons. The detection in each local motion-sensitive directionally detective neuron is based on the core computation of the HRC model, and the global motion direction is inferred from the maximum activation strength across the eight directions. Through three types of experiments, we demonstrated that the proposed AVS not only detects motion direction accurately but also resists noise well. Additionally, by comparing our AVS with the time-considered CNN and EfficientNetB0, we demonstrated the potential of the proposed AVS for engineering applications. Although our AVS is still at an early stage of design, it already exhibits three notable advantages: low learning cost, noise resistance, and structural extensibility. By incorporating neurons with different functions, the AVS could be applied to more complex detection tasks. For example, in subsequent experiments, we preliminarily confirmed that applying the concept of horizontal cells allows our AVS to detect the direction of motion in grayscale images. We will continue to explore our artificial visual system in conjunction with findings from biological research.