1. Introduction

Affective computing is an interdisciplinary research and engineering field that spans many disciplines and applications. It focuses mainly on human–computer interaction, with the aim of enabling natural emotional communication between humans and computers. Affective computing has a wide range of applications in areas such as art, business, education, finance, medicine, and security. In 1997, Picard of the MIT Media Lab predicted about 50 possible application scenarios for affective computing in the book Affective Computing [1], which remains of significant research value. Currently, researchers focus mainly on single-modal affective computing, such as text semantic and sentiment analysis, speech transcription and emotion recognition, and facial expression recognition. However, single-modal analysis has limitations: many factors affect human emotions, and their internal connections are complex and changeable, so unimodal analysis cannot fully reflect human emotions. Consequently, researchers are turning to bimodal and multimodal analysis. Multimodal deep learning, which offers high generalization ability and good recognition performance, is developed from multimodal machine learning and mainly employs deep-learning methods to address problems such as the low recognition rate and poor robustness of single-modal affective computing. Multimodal analysis can leverage both the correlation and the independence between modalities to exploit the information in each modality and increase the confidence level in emotion recognition.

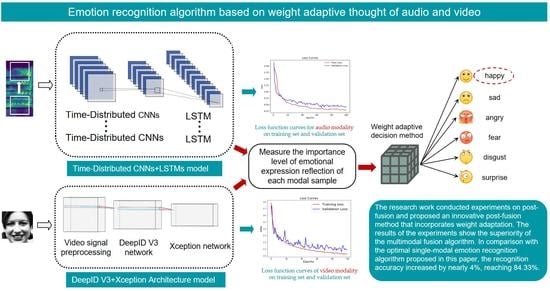

This paper explores multimodal emotion recognition using deep learning and proposes two model designs, one for the audio modality and one for the video modality. It also improves on existing multimodal fusion techniques and performs the fusion at the decision level.

The main research work comprises three aspects:

Firstly, for audio-modal emotion recognition, this paper studies audio-signal preprocessing methods and feature-extraction algorithms. The log-mel spectrogram feature [2,3] is used, and the “time-distributed CNNs + LSTMs” network [4,5] is designed to make full use of the time-domain information in the audio. In comparative experiments against other common network models, the proposed algorithm shows improved accuracy, and the model training speed is also significantly improved.

Secondly, for video-modal emotion recognition, this paper studies image-preprocessing methods and feature-extraction algorithms. HOG feature extraction [6,7] is used in the image preprocessing, and the main framework of the video-modal emotion feature-extraction network is built on an optimized Xception architecture [8,9]. At the same time, the DeepID V3 network [10,11] is introduced to extract facial feature points, so that the extracted video emotion features are more comprehensive and effective. The experiments show that the proposed algorithm improves the accuracy rate by 5% compared with existing common algorithms.

Lastly, for multimodal fusion [12], choosing an appropriate fusion method is particularly important. No common fusion method has an absolute advantage, and in practice various factors need to be weighed together. This paper mainly studies the multimodal deep-learning emotion-recognition algorithm and its application. Given the progress of previous research, the needs of later system expansion, and the asynchronous nature of the dataset used in this study, the late fusion method, which is more flexible when modalities are added, is chosen for the research on multimodal emotion recognition. The American psychologist Mehrabian proposed a formula: emotional information expressed during communication = 7% words + 38% voice + 55% facial expression [13]. Voice data and facial-expression data therefore account for 93% of the emotional information expressed in human communication. On this theoretical basis, this paper drops the poorly performing text modality, weighs the emotional expressiveness of each modality against the need for subsequent modality expansion, and adopts a late-fusion decision method based on the idea of weight self-adaptation to realize the decision-level fusion of the audio and video modalities. In short, the model and method proposed in this paper perform well on multiple datasets and provide new ideas and methods for the in-depth exploration of multimodal emotion-recognition problems.

2. Related Work

With the development of affective computing and deep-learning technology, researchers began to pay attention to multimodal affective computing. In 2017, researchers from the University of Stirling (School of Natural Sciences) and Nanyang Technological University (Temasek Laboratories) in Singapore conducted a collaborative study in which Soujanya Poria and colleagues produced the first comprehensive literature review of the different fields of affective computing [14]. After describing the results of various single-modality analyses, the review outlines the existing methods of information fusion across modalities. It is the first to review the basic stages of the multimodal emotion-recognition framework: the available benchmark datasets are discussed first, followed by an overview of recent advances in audio-, video-, and text-based emotion-recognition research. The article also reports findings by other researchers that multimodal classifiers far outperform unimodal classifiers, and that deep learning has clear advantages in multimodal tasks. Therefore, multimodal fusion for affective computing combined with deep learning will be an important research direction in this field.

The model proposed by Deepak Kumar Jain et al. is based on a single deep convolutional neural network [15] that contains convolutional layers and deep residual blocks. Image labels for all faces are first set up for training, and the images are then passed through the proposed DNN model, which classifies each image into one of six facial emotion categories. Balaji Balasubramanian et al. present datasets and algorithms for facial emotion recognition (FER) [16]. The algorithms range from simple support vector machines (SVM) to complex convolutional neural networks (CNN); they are explained through fundamental research papers and applied to the FER task. Dhwani Mehta et al. focus on identifying emotional intensity using machine-learning algorithms in a comparative study [17]. For feature extraction, the study uses Gabor filters, the histogram of oriented gradients (HOG), and local binary patterns (LBP); for classification, it uses support vector machines (SVM), random forests (RF), and k-nearest neighbors (kNN). The study implements emotion recognition and intensity estimation for each recognized emotion. Yang Liu et al. conduct the first survey of graph-based facial affect analysis (FAA) methods [18]. Their survey summarizes a performance comparison of state-of-the-art graph-based FAA methods, discusses the challenges and potential directions for future development, and can serve as a reference for future research in this area.

Multimodal affective computing has developed rapidly and has solved many previously raised problems, but researchers continue to identify new challenges, and many problems in this field still urgently need to be solved. The core issues of current research are how to efficiently extract effective features from multimodal datasets, eliminate redundant and invalid information, and achieve effective multimodal fusion, thereby improving classification accuracy and optimizing system performance. To address these core problems, researchers are paying more attention to the application of deep-learning algorithms and the design of more complete multimodal deep-learning models. Research combining multimodal affective computing with deep learning has made great progress and produced remarkable results.

4. Experiment and Results

4.1. Training and Evaluation of Audio-Modal Emotion-Recognition Models

For the audio modality, this paper employs the “time-distributed CNNs + LSTMs” approach and trains the model for 100 epochs while continuously tuning its parameters. To avoid overfitting and improve the generalization ability of the model, the dataset is divided into a training set and a test set in the ratio of 8:2, and cross-validation is used to assist in adjusting the model parameters, resulting in a more stable and better-performing model. The loss and accuracy values on the training and validation sets at the end of the 1st and the 100th training epochs are compared in Table 5.
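The 8:2 split and cross-validation described above can be illustrated with a minimal NumPy sketch. The function names, the stratification by class, the fold count `k=5`, and the fixed seed are assumptions made for illustration; the paper does not state how the split or the folds were generated.

```python
import numpy as np

def stratified_split(labels, test_ratio=0.2, seed=0):
    """Return (train_idx, test_idx), holding out about test_ratio of each class."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    train_idx, test_idx = [], []
    for cls in np.unique(labels):
        # Shuffle the indices of this class, then cut off the test share.
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_test = int(round(len(idx) * test_ratio))
        test_idx.extend(idx[:n_test])
        train_idx.extend(idx[n_test:])
    return np.sort(np.array(train_idx)), np.sort(np.array(test_idx))

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx

# Hypothetical toy data: six emotion classes with 50 samples each.
labels = np.repeat(np.arange(6), 50)
train_idx, test_idx = stratified_split(labels, test_ratio=0.2)
folds = list(k_fold_indices(len(train_idx), k=5))
```

In this sketch, cross-validation is run only on the training portion, so the held-out 20% is never used for parameter tuning.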

Table 5 shows that after 100 epochs of training, the model’s loss on the training set decreases from 1.9524 to 0.2774, and the accuracy increases from 0.1696 to 0.9036. The loss on the validation set also decreases from 2.1361 to 0.3986, with the accuracy improving from 0.1450 to 0.8254. This demonstrates that the generalization ability of the model has been improved while an appropriate model complexity is maintained. To better illustrate the trends on the two sets during training, Figure 10 displays the loss and accuracy curves for the audio-modality training and validation sets for epochs 1–100.

Upon completion of the training process, the “time-distributed CNNs + LSTMs”-based audio-modality emotion-recognition model is obtained. The model file can then be used for prediction, and the detailed parameters of each layer within the network are recorded. The network parameters are listed in Table 6.

In the test phase, the performance of the trained model is evaluated on the RAVDESS dataset by classifying and predicting six emotions. The “time-distributed CNNs + LSTMs” scheme is compared with the “SVM on global statistical features” scheme [49] and the “hybrid LSTM-transformer model” [50] in a comparative experiment. The results are shown in Table 7.

The results show that combining the “time-distributed CNNs + LSTMs” network with the log-mel spectrogram features of the audio samples significantly improves performance over the traditional SVM with low-level statistical features. A six-class accuracy of 80.4% is achieved, a 12% improvement over the traditional scheme. It is also 4.8% more accurate than the well-performing hybrid LSTM-transformer model.

To further verify the generalization ability of the model, the IEMOCAP dataset, which has a larger data volume and longer audio durations, is used in the verification stage. Six emotions are selected from the audio part of the IEMOCAP dataset and classified with the scheme proposed in this paper, the six-class accuracy is calculated, and the result is compared with that of the attention-oriented parallel CNN encoders network [51] on the same dataset. The results are shown in Table 8.

The results show a slight decrease in accuracy on IEMOCAP compared with RAVDESS, probably due to the greater complexity of the IEMOCAP dataset and its longer sample durations. Nevertheless, the proposed model is still 2.5% more accurate than the well-performing attention-oriented parallel CNN encoders.

In addition, the model complexity is kept within a reasonable range, so the model can be quickly deployed for emotion prediction. Our method meets the criteria for practical application, and in the field of affective computing, using audio to analyze human emotions has clear advantages. Therefore, the audio modality should be given a higher voting weight when the models are fused.

4.2. Training and Evaluation of Video-Modality Emotion-Recognition Model

For the video modality, this paper adopts the “DeepID V3 + Xception architecture” scheme and trains the model for 100 epochs on the experimental platform, again using cross-validation. As mentioned earlier, the Xception structure has well-suited working principles and features. To further improve the performance of Xception in emotion-recognition tasks, optimization strategies such as data augmentation, early stopping, learning rate decay, L2 regularization, and class weight balancing are applied and tuned.
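Two of the strategies named above, class weight balancing and early stopping, can be sketched in a few lines. This is an illustrative sketch, not the configuration used in the experiments: the inverse-frequency weighting formula, the `patience` value, and both function names are assumptions, since the paper does not give these details.

```python
import numpy as np

def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * count_of_class)."""
    labels = np.asarray(labels)
    classes, counts = np.unique(labels, return_counts=True)
    weights = len(labels) / (len(classes) * counts)
    return {int(c): float(w) for c, w in zip(classes, weights)}

def should_stop_early(val_losses, patience=10):
    """True if the last `patience` epochs did not beat the best earlier loss."""
    if len(val_losses) <= patience:
        return False
    best_before = min(val_losses[:-patience])
    return min(val_losses[-patience:]) >= best_before

# Hypothetical imbalanced label set: class 0 is three times as frequent as class 1.
weights = balanced_class_weights([0, 0, 0, 1])

# Hypothetical validation-loss history with no improvement in the last two epochs.
stop = should_stop_early([1.0, 0.9, 0.8, 0.85, 0.9], patience=2)
```

Rare classes receive larger weights, so their misclassifications contribute more to the loss, and training halts once the validation loss stops improving for `patience` consecutive epochs.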

With these optimization strategies, the loss on the training set drops from 1.7515 to 1.0031 over the whole training process, and the accuracy increases from 0.2968 to 0.6453. On the validation set, the loss drops from 2.3387 to 1.0921, and the accuracy increases from 0.2644 to 0.5965. The loss and accuracy curves of the video-modality training and validation sets for epochs 1–100 are shown in Figure 11.

In addition to the above analysis, this paper conducts comparative experiments with several schemes proposed by other researchers: SVM on HOG features, SVM on facial landmark features, SVM on facial landmarks and HOG features, SVM on sliding-window landmarks and HOG, and the Inception architecture. The first four schemes use an SVM classifier with HOG features, facial feature points, their combination, and a sliding-window variant, respectively; the last uses the Inception network. Table 9 compares the experimental results of the scheme proposed in this paper with those of the comparison schemes.

For this comparison, the DeepID V3 + Xception architecture is tested on the CK+ dataset using a combination of HOG features and facial feature points. The results show that the model achieves an accuracy of 64.5%, a 5% improvement over the Inception architecture, with a model size of only 15 MB. It should be noted that the proposed scheme still has much room for improvement in the video-modal emotion-recognition task, owing to the quality of the dataset among other reasons. Therefore, when the models are fused, the video modality should be assigned a smaller weight than the audio modality, consistent with the higher voting weight given to audio above.

4.3. Modal Experiment Results Comparison and Evaluation

The late-fusion decision method based on the idea of weight adaptation proposed in this paper is verified through experiments. The experimental results are presented in Table 10.

Table 10 reveals that after incorporating individual differences and the significance level of each modality in emotional expression, the model’s performance improves substantially, with the average six-class accuracy reaching 0.8433. The results obtained by all the algorithms proposed in this paper are compared in Figure 12.

The above figure reveals that in the single-modal emotion-recognition task, the accuracy of the algorithm presented in this paper is as follows: 80.40% for the audio mode and 64.50% for the video mode. The results indicate that the proposed algorithm utilizing audio modality performs better.

This paper further explores multimodal late fusion by integrating the audio and video modalities. Two late-fusion techniques, one based on averaging and one based on adaptive weights, are designed and evaluated separately. According to the experimental results, the weight-adaptive algorithm yields a prediction accuracy of 84.33%, surpassing all single-modal models. Compared with the best single-modal model, the accuracy improves by approximately 4 percentage points (from 80.40% to 84.33%), which demonstrates the feasibility and effectiveness of multimodal fusion in emotion-recognition tasks.
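The difference between the two late-fusion ideas can be sketched as follows. This is a minimal illustration under stated assumptions: the exact weight-adaptation rule is not given in this section, so the sketch simply makes each modality's weight proportional to its single-modal accuracy (80.40% for audio and 64.50% for video, as reported above). The probability vectors and function names are hypothetical.

```python
import numpy as np

def average_fusion(p_audio, p_video):
    """Decision-level fusion with equal weights for both modalities."""
    return (np.asarray(p_audio) + np.asarray(p_video)) / 2.0

def weighted_fusion(p_audio, p_video, acc_audio, acc_video):
    """Decision-level fusion with weights proportional to single-modal accuracy.

    Assumption: accuracy-proportional weights stand in for the paper's
    weight-adaptive rule, which is not specified in this section.
    """
    w_audio = acc_audio / (acc_audio + acc_video)
    w_video = 1.0 - w_audio
    return w_audio * np.asarray(p_audio) + w_video * np.asarray(p_video)

# Hypothetical six-class probability outputs of the two single-modal models.
p_audio = [0.50, 0.30, 0.20, 0.0, 0.0, 0.0]
p_video = [0.30, 0.52, 0.18, 0.0, 0.0, 0.0]

fused_avg = average_fusion(p_audio, p_video)
fused_weighted = weighted_fusion(p_audio, p_video, acc_audio=0.804, acc_video=0.645)

pred_avg = int(np.argmax(fused_avg))
pred_weighted = int(np.argmax(fused_weighted))
```

In this toy case the two modalities disagree: averaging follows the video model's choice, while the weighted variant follows the more accurate audio model. Because fusion acts only on the output probabilities, a further modality can be added without retraining the existing models.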

To better verify the advantages of the proposed algorithm, the model training results obtained in this paper are compared with the experimental results of deep convolutional neural networks [52] and an audio–visual and emotion-centered network [53]. The experimental results are shown in Table 11.

The comparison shows that the multimodal emotion-recognition results of this paper are the highest: the accuracy of the proposed model reaches 84.33%, which is better than that of the other network models and reflects the superior performance of the proposed algorithm.

5. Conclusions

This paper addresses emotion recognition within the field of affective computing through a detailed study of multimodal emotion recognition using audio and video data and deep-learning-based methods. We first describe the construction process of the emotion-recognition model for each modality. To validate the proposed model construction schemes, the model results are compared experimentally with those obtained by previous researchers.

To this end, the paper introduces the comparative experimental methodology and analyzes and evaluates the outcomes. Finally, we provide a brief overview of the three fusion methods, i.e., early fusion, late fusion, and hybrid fusion, and realize the late fusion of the audio and video modalities based on two distinct ideas. The specific contributions and innovations of this paper are as follows:

(1) For the task of audio-modal emotion recognition, this paper determines the model construction scheme of “time-distributed CNNs + LSTMs”. First, audio-signal preprocessing is carried out on the audio-modality data, and log-mel spectrogram features are then extracted from the audio sequence. Next, a time-distributed framing operation is performed on each data sample to adapt it to the subsequent network. Model training and the related comparative experiments demonstrate the superior performance of the network model.

(2) For the video-modal emotion-recognition task, the model construction scheme of “DeepID V3 + Xception architecture” is determined. In the experiment, image preprocessing of the video-modality data is performed first, followed by HOG feature extraction. The roles of DeepID V3 and the Xception architecture in the video feature-extraction network are then introduced. At the same time, a residual network design is introduced, and the network structure is designed and the related optimization strategies are tuned.

(3) To verify the proposed audio- and video-modality emotion-recognition model construction schemes, the scheme for each modality is compared experimentally with existing common emotion-recognition algorithms. The results show that the emotion-recognition accuracy of the two modalities is increased by 12% and 5%, respectively, confirming the advantages of the proposed algorithms. A late-fusion method based on the idea of weight self-adaptation is also implemented; it improves the recognition accuracy by nearly 4% over the best single-modal emotion-recognition algorithm proposed in this paper and also outperforms the other multimodal network models compared, which confirms the advantages of the multimodal fusion algorithm.
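The time-distributed framing operation mentioned in contribution (1) can be illustrated with a short NumPy sketch that cuts a log-mel spectrogram into overlapping windows, each of which would then be passed through the same CNN before the sequence of CNN outputs is fed to the LSTM. The window size, hop length, spectrogram shape, and function name are hypothetical values chosen for illustration; they are not taken from the paper.

```python
import numpy as np

def frame_spectrogram(log_mel, win_size=128, hop=64):
    """Split a (n_mels, n_frames) spectrogram into overlapping windows.

    Returns an array of shape (n_windows, n_mels, win_size, 1), i.e. a
    sequence of single-channel images suitable for a time-distributed CNN.
    """
    log_mel = np.asarray(log_mel)
    n_mels, n_frames = log_mel.shape
    if n_frames < win_size:
        raise ValueError("spectrogram is shorter than one window")
    starts = range(0, n_frames - win_size + 1, hop)
    windows = np.stack([log_mel[:, s:s + win_size] for s in starts])
    return windows[..., np.newaxis]  # add the channel axis

# Hypothetical log-mel spectrogram: 128 mel bands by 448 time frames.
spec = np.arange(128 * 448, dtype=float).reshape(128, 448)
windows = frame_spectrogram(spec, win_size=128, hop=64)
```

Overlapping windows preserve the temporal order of the audio, which is what allows the recurrent layers to model how the emotional content evolves over the utterance.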

This paper evaluates the proposed multimodal model primarily by recognition accuracy, comparing the results of different schemes and the accuracy on different datasets to verify the reliability and generalization of the scheme. It does not report other metrics for evaluating performance reliability, such as G-Mean, precision, recall, F1 value, Matthews correlation coefficient (MCC), and the area under the precision-recall curve (PR AUC). Although accuracy alone cannot fully reflect the performance of our model, it is one of the most basic and simplest evaluation indicators, and in many cases it remains one of the main indicators for evaluating classifiers and retains great reference value. It is also helpful when only limited information is available. At the same time, the comparisons across datasets and against other network models show that the proposed scheme is effective and improves accuracy to varying degrees.
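For reference, most of the metrics listed above can be computed directly from a confusion matrix, as the following sketch shows. It uses the standard definitions (macro averaging, G-Mean as the geometric mean of per-class recall, and the multiclass form of MCC); the function name and the example matrix are hypothetical and are not results from this paper. PR AUC is omitted because it requires per-sample scores rather than a confusion matrix.

```python
import numpy as np

def classification_metrics(cm):
    """Compute summary metrics from a confusion matrix (rows: true, cols: predicted)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    true_counts = cm.sum(axis=1)   # samples per true class
    pred_counts = cm.sum(axis=0)   # samples per predicted class
    total = cm.sum()
    with np.errstate(divide="ignore", invalid="ignore"):
        precision = np.where(pred_counts > 0, tp / pred_counts, 0.0)
        recall = np.where(true_counts > 0, tp / true_counts, 0.0)
        f1 = np.where(precision + recall > 0,
                      2 * precision * recall / (precision + recall), 0.0)
    # G-Mean: geometric mean of the per-class recalls.
    g_mean = float(np.prod(recall) ** (1.0 / len(recall)))
    # Multiclass Matthews correlation coefficient.
    mcc_num = tp.sum() * total - np.dot(pred_counts, true_counts)
    mcc_den = np.sqrt((total ** 2 - np.dot(pred_counts, pred_counts))
                      * (total ** 2 - np.dot(true_counts, true_counts)))
    mcc = float(mcc_num / mcc_den) if mcc_den > 0 else 0.0
    return {
        "accuracy": float(tp.sum() / total),
        "macro_precision": float(precision.mean()),
        "macro_recall": float(recall.mean()),
        "macro_f1": float(f1.mean()),
        "g_mean": g_mean,
        "mcc": mcc,
    }

# Hypothetical two-class confusion matrix, for illustration only.
metrics = classification_metrics([[8, 2], [1, 9]])
```

Unlike accuracy, G-Mean and MCC drop sharply when one class is recognized poorly, which makes them informative on imbalanced emotion datasets.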

Future prospects: The research on the multimodal emotion-recognition algorithm in this paper is based on the two modalities of audio and video. In view of the shortcomings of certain existing algorithms, corresponding improvement strategies and the algorithm scheme of this paper are proposed, and the experiments support the validity and feasibility of the algorithm. However, accuracy is only one of the main indicators for evaluating a classifier. Although the reliability of the scheme is verified through comparative experiments, follow-up research should consider further evaluation indicators to verify the reliability of the model. Many problems are also not covered by this paper, and many problems in the field of multimodal emotion recognition still urgently require further study. Effective multimodal fusion is the core issue in this field and has always been a popular research direction, because the fusion results determine the upper limit of emotion-recognition performance. Breakthroughs in multimodal fusion methods would therefore give a huge boost to the development of the field, while also posing a huge challenge to researchers. In the next stage of research, we will focus on innovations in multimodal fusion methods.

Emotion-recognition technology has a wide range of applications in the field of driver safety. In subsequent in-depth research, the driver’s emotion will be identified with the emotion-recognition algorithm proposed in this paper, an attention mechanism will be used to weight the integrated feature vector, and the emotion-classification results of multiple modalities will be combined to obtain the driver’s overall emotional state. Early warnings will then be issued according to the driver’s emotion classification, thus improving driver safety and promoting the development of intelligent transportation.