Abstract

This study proposes a mobile positioning method that uses recurrent neural network algorithms to analyze the received signal strength indications from heterogeneous networks (e.g., cellular networks and Wi-Fi networks) and estimate the locations of mobile stations. Recurrent neural networks with multiple consecutive timestamps can extract the features of time series data and thereby improve location estimation. In practical experiments at Fuzhou University in China, 4525 records, 59 different base stations, and 582 different Wi-Fi access points were detected. Lower location errors were obtained by the recurrent neural networks with multiple consecutive timestamps (e.g., two timestamps and three timestamps); the average error of location estimation was 9.19 m for the proposed mobile positioning method with two timestamps.

1. Introduction

With the development of wireless networks and mobile networks, location-based services (LBS) can provide services to users according to their current locations. LBS, which play an important role in many fields, require highly accurate positioning technology [1–22].

For LBS in outdoor environments, the global positioning system (GPS) and assisted GPS (A-GPS) are popular techniques that meet most positioning requirements. However, these techniques may no longer be applicable when wireless signals suffer from multi-path propagation [13]. One study indicated that the availability of GPS may be lower on urban roads, where GPS modules may fail to work [9]. Furthermore, these techniques require higher power consumption [1]. Therefore, some studies proposed cellular-based positioning methods that analyze the signals of cellular networks to reliably estimate the locations of mobile stations [1,5,9,10].

For LBS in indoor environments, Wi-Fi-based positioning methods are popular techniques that detect and analyze the received signal strength indications (RSSIs) from Wi-Fi access points (APs) [5,8,10,11,12,13,14,15,16,18,19,20,21]. Fingerprinting positioning methods based on machine learning algorithms were proposed to learn the relationships between locations and RSSIs for the estimation of locations. Although these methods can estimate the locations of mobile stations without GPS modules, they may fail in outdoor environments if the transmission coverage of Wi-Fi APs is not sufficient.

Some deep learning methods (e.g., neural networks, convolutional neural networks, and recurrent neural networks) have been applied to improve the accuracy of location estimation [8,11,12,13,14,15]. For instance, a modified probability neural network was used for indoor positioning, and the locations estimated by this method were more accurate than those estimated by the triangulation technique [11]. An improved neural network was trained with the correlation of the initial parameters to achieve the highest possible accuracy of the Wi-Fi-based positioning method in indoor environments [8].

Although cellular-based positioning methods can obtain estimated locations in outdoor environments, the errors of the estimated locations may be larger. Wi-Fi-based positioning methods can obtain more precise locations, but these methods may not be applicable in outdoor environments. Therefore, this study proposes a mobile positioning method that analyzes the network signals from heterogeneous networks (e.g., cellular networks and Wi-Fi networks) for LBS in outdoor environments. Furthermore, recurrent neural networks [23] are applied in the proposed mobile positioning method to analyze consecutive locations and network signals (i.e., time series data).

The remainder of the paper is organized as follows. Section 2 provides the overview of mobile positioning methods and fingerprinting positioning methods. Section 3 presents the proposed mobile positioning system and method based on recurrent neural networks. The practical experimental results and discussions are illustrated in Section 4. Finally, conclusions and future work are given in Section 5.

2. Related Work

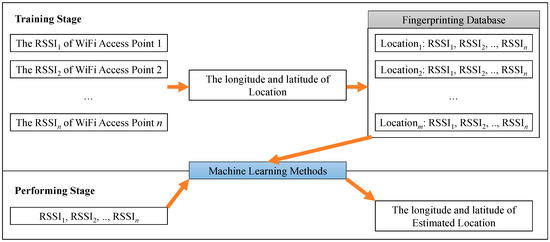

Mobile positioning and fingerprinting positioning methods include two stages: the training stage and performing stage (shown in Figure 1). In the training stage, the RSSIs and locations measured by the mobile stations are matched and stored in a fingerprinting database for training. Machine learning methods can be performed to learn the relationships among RSSIs and locations for the establishment of mobile positioning models. In the performing stage, mobile stations can detect the RSSIs of neighbor base stations and Wi-Fi APs, which can be adopted in the trained models to estimate the locations of these mobile stations.

Figure 1.

Fingerprinting positioning method. RSSI—received signal strength indication.
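The two stages can be sketched in a few lines of Python. This is only a minimal illustration and not the method proposed in this study: it uses a nearest-neighbor lookup in place of a trained model, and the transmitter names, RSSI values, and coordinates are hypothetical.

```python
import math

# Training stage: each fingerprint pairs an RSSI reading with the location
# where it was measured. All values below are made-up illustration data.
fingerprint_db = [
    ({"BS1": -71.0, "AP1": -55.0}, (119.2000, 26.0600)),
    ({"BS1": -80.0, "AP1": -67.0}, (119.2010, 26.0605)),
    ({"BS1": -92.0, "AP1": -83.0}, (119.2020, 26.0610)),
]

def rssi_distance(a, b):
    """Euclidean distance between two RSSI dictionaries over their shared transmitters."""
    shared = set(a) & set(b)
    if not shared:
        return math.inf
    return math.sqrt(sum((a[k] - b[k]) ** 2 for k in shared))

def estimate_location(observed, database):
    """Performing stage: return the stored location whose fingerprint is closest."""
    _, location = min(database, key=lambda entry: rssi_distance(observed, entry[0]))
    return location

print(estimate_location({"BS1": -79.0, "AP1": -66.0}, fingerprint_db))
```

The observed reading is closest to the second fingerprint, so that fingerprint's location is returned. A trained model replaces the lookup when the database is large or when interpolation between fingerprints is wanted.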

For training the mobile positioning models, some studies used k-nearest neighbors, Bayesian theory, support vector machines, neural networks, convolutional neural networks, or recurrent neural networks to estimate locations in accordance with RSSIs. For instance, a probabilistic positioning algorithm was proposed to store the probability distribution of RSSIs during a certain time in the fingerprinting database, and the probable locations of mobile stations were calculated by a Bayesian theory system [10]. However, the inputs were assumed to be independent, so large errors of estimated locations may be obtained if the inputs are not independent. Some mobile positioning methods based on k-nearest neighbor algorithms can estimate locations more accurately, but these methods require more computation time in the performing stage. Some neural networks have been proposed to analyze the interrelated influences of inputs for the improvement of location estimation [8,11,12,13], and convolutional neural networks were applied to extract the features of spatial metrics with convolutional layers [14,18,19,20,21]. Although spatial metrics may be analyzed by neural networks and convolutional neural networks, these methods do not analyze temporal data. Therefore, this study applies recurrent neural networks to analyze the temporal data and improve the accuracy of location estimation.

3. Mobile Positioning System and Method

The architecture of the proposed mobile positioning system is presented in Section 3.1, and the concepts of the proposed mobile positioning method are illustrated in Section 3.2.

3.1. Mobile Positioning System

The proposed mobile positioning system includes (1) mobile stations, (2) a mobile positioning server, (3) a database server, and (4) a model server (shown in Figure 2). Each component in the proposed system is presented in the following subsections.

Figure 2.

The proposed mobile positioning system.

3.1.1. Mobile Stations

In the training stage, mobile stations can detect and receive the RSSIs of neighbor base stations and Wi-Fi APs from heterogeneous networks. GPS modules can be equipped in the mobile stations and estimate the locations of mobile stations (i.e., coordinates). Then, the mobile stations can send the vectors of GPS coordinates (i.e., longitudes and latitudes) and RSSIs to the mobile positioning server for the collection of network signals. In the performing stage, mobile stations can send the detected RSSIs of neighbor base stations and Wi-Fi APs to the mobile positioning server for location estimation.

3.1.2. Mobile Positioning Server

In the training stage, the mobile positioning server can receive GPS coordinates and network signals (i.e., the RSSIs of base stations and Wi-Fi APs) from mobile stations. These GPS coordinates and network signals can be sent to the database server for storing. The mobile positioning server can execute the proposed mobile positioning method to train RNN models. The network signals can be used as the input layer of the RNN models, and the GPS coordinates can be used as the output layer of the RNN models. Once the RNN models have been trained, these models can be sent to the model server for saving. In the performing stage, the mobile positioning server can load the trained RNN models from the model server. When the mobile positioning server receives network signals from mobile stations, these network signals can be adopted in the trained RNN models for estimating the locations of mobile stations.

3.1.3. Database Server

The database server can store the vectors of coordinates (i.e., longitudes and latitudes) and RSSIs from mobile stations via the mobile positioning server. These vectors can be queried and used to train RNN models.

3.1.4. Model Server

The model server can save the trained RNN models from the mobile positioning server in the training stage, and the saved RNN models can be loaded by the mobile positioning server for location estimation.

3.2. Mobile Positioning Method

The proposed mobile positioning method includes (1) collection and normalization, (2) the execution of mobile positioning method based on recurrent neural networks, and (3) de-normalization and estimation. Each step in the proposed method is presented in the following subsections.

3.2.1. Collection and Normalization

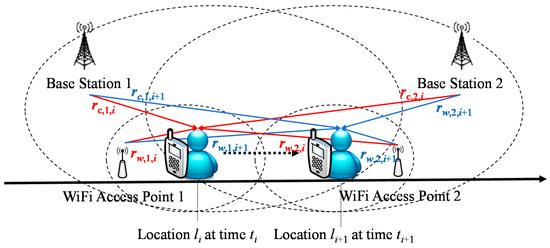

For the collection of network signals and GPS coordinates, the RSSIs of base stations from cellular networks (Equation (1)), the RSSIs of Wi-Fi APs from Wi-Fi networks (Equation (2)), and the GPS coordinates (Equation (3)) can be detected and collected by the mobile station at each collection time (shown in Figure 3). The RSSI of the j-th base station from a cellular network at the i-th collection time is defined as cj,i, and the RSSI of the k-th Wi-Fi AP from a Wi-Fi network at the i-th collection time is defined as wk,i. The RSSI dataset of heterogeneous networks (i.e., cellular networks and Wi-Fi networks) at each collection time combines these cellular and Wi-Fi RSSIs (shown in Equation (4)). Furthermore, each location (i.e., a GPS coordinate) includes a longitude and a latitude. There are m locations, n1 different base stations, and n2 different Wi-Fi APs detected in the experiments. If the RSSI of a base station or Wi-Fi AP cannot be detected, its value is encoded as null. For instance, the mobile station cannot detect the RSSI of Wi-Fi AP2 at one of the collection times in Figure 3, so the corresponding RSSI value is encoded as null.

Figure 3.

The scenario of network signal and global positioning system (GPS) coordinate collection.
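The collection step can be pictured as turning each scan into a fixed-length vector with one slot per known transmitter. The sketch below assumes a hypothetical list of transmitter identifiers and represents the null encoding with Python's `None`; the identifiers and RSSI values are illustrative only.

```python
# Hypothetical transmitter identifiers; the experiments in this study observed
# n1 = 59 base stations and n2 = 582 Wi-Fi APs.
BASE_STATIONS = ["BS1", "BS2"]
WIFI_APS = ["AP1", "AP2", "AP3"]

def to_rssi_vector(detected):
    """Order detected RSSIs as [base stations..., Wi-Fi APs...]; undetected ones become None."""
    return [detected.get(name) for name in BASE_STATIONS + WIFI_APS]

# One scan at a given time: AP2 is out of range, so it is absent from the scan.
scan = {"BS1": -85.0, "BS2": -97.0, "AP1": -60.0, "AP3": -72.0}
vector = to_rssi_vector(scan)
print(vector)  # [-85.0, -97.0, -60.0, None, -72.0]
```

Keeping a fixed slot order means every record has the same length, which is what a fixed-size input layer needs.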

For the normalization of network signals and GPS coordinates, the minimum values and maximum values of RSSIs and coordinates are considered and adopted in Equations (5)–(8). The normalized RSSI of the j-th base station from a cellular network is computed from its raw RSSI and the minimum and maximum values of the RSSIs from cellular networks (Equation (5)); the normalized RSSI of the k-th Wi-Fi AP from a Wi-Fi network is computed from its raw RSSI and the minimum and maximum values of the RSSIs from Wi-Fi networks (Equation (6)). Furthermore, the normalized longitude is computed in accordance with the minimum and maximum values of the longitudes from GPS coordinates (Equation (7)), and the normalized latitude is computed in accordance with the minimum and maximum values of the latitudes from GPS coordinates (Equation (8)).
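A minimal sketch of this step follows, assuming that the normalization is the usual min-max scaling to the range [0, 1]. How null RSSIs are treated during normalization is not specified here, so mapping them to 0.0 is purely an assumption of the sketch, as are the function names and sample values.

```python
def min_max_normalize(value, minimum, maximum):
    """Scale value into [0, 1] using the observed minimum and maximum."""
    return (value - minimum) / (maximum - minimum)

def normalize_rssis(rssis, null_value=0.0):
    """Normalize a list of RSSIs; None entries (undetected) become null_value (an assumption)."""
    detected = [r for r in rssis if r is not None]
    lo, hi = min(detected), max(detected)
    return [null_value if r is None else min_max_normalize(r, lo, hi) for r in rssis]

# The list stands in for all cellular RSSIs in the dataset, so that the
# minimum and maximum are taken over every record rather than one scan.
cellular = [-110.0, -95.0, None, -80.0]
print(normalize_rssis(cellular))  # [0.0, 0.5, 0.0, 1.0]
```

With this choice an undetected transmitter is indistinguishable from the weakest detected one; a separate indicator value could be used instead if that distinction matters.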

3.2.2. Mobile Positioning Method Based on Recurrent Neural Network

The proposed mobile positioning method adopts recurrent neural network algorithms to estimate the locations of mobile stations. The recurrent neural networks can be applied to extract the features of time series data, so this study considers and analyzes the normalized RSSIs with multiple consecutive timestamps. Section “Recurrent Neural Networks with One Timestamp” presents recurrent neural networks with one timestamp, and Section “Two Timestamps for Recurrent Neural Network” describes recurrent neural networks with multiple consecutive timestamps.

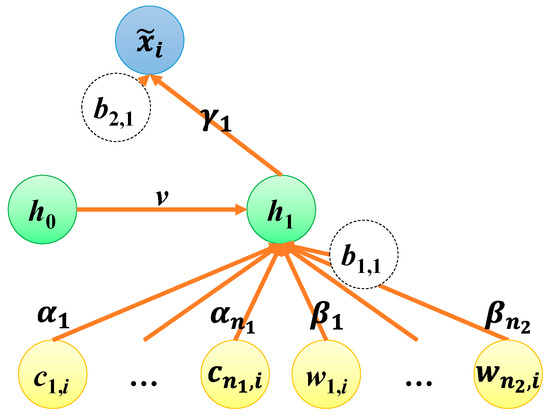

Recurrent Neural Networks with One Timestamp

This subsection shows the design and optimization of recurrent neural networks with one timestamp. A case study of a recurrent neural network with one timestamp is illustrated in Figure 4. The recurrent neural network is constructed with an input layer, a recurrent hidden layer, and an output layer. The input layer includes the normalized RSSIs of n1 base stations and n2 Wi-Fi APs, and the output layer includes the estimated normalized longitude and latitude. The recurrent hidden layer includes a neuron, and the initial value of the neuron in the recurrent hidden layer is defined as h0. The value of the neuron in the recurrent hidden layer is updated to h1 after the RSSIs in the first timestamp are processed. Each input cj,i and wk,i has its own weight, and the weight of h0 is v; two further weights connect h1 to the longitude output and the latitude output, respectively. The biases of the neurons in the hidden layer and the output layer are defined as b1,1, b2,1, and b3,1. The sigmoid function is selected as the activation function of each neuron, so the values of h0, h1, the estimated normalized longitude, and the estimated normalized latitude can be calculated by Equations (9)–(12), respectively. Furthermore, the loss function is defined in Equation (13) in accordance with squared errors.

Figure 4.

A recurrent neural network with one timestamp.
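The forward computation described above can be sketched as follows. This is an illustration under stated assumptions rather than a transcription of Equations (9)–(13): the parameter names are hypothetical, h0 is passed in as a given value, and the factor 1/2 in the loss is a common convention that is assumed here.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward_one_timestamp(x, u, v, h0, q_lon, q_lat, b_h, b_lon, b_lat):
    """One hidden neuron: x are normalized RSSIs, u their weights, v the weight of h0."""
    h1 = sigmoid(sum(ui * xi for ui, xi in zip(u, x)) + v * h0 + b_h)
    lon_hat = sigmoid(q_lon * h1 + b_lon)  # estimated normalized longitude
    lat_hat = sigmoid(q_lat * h1 + b_lat)  # estimated normalized latitude
    return h1, lon_hat, lat_hat

def squared_error_loss(lon_hat, lat_hat, lon, lat):
    """Half the sum of squared errors over the two outputs (the 1/2 is an assumption)."""
    return 0.5 * ((lon_hat - lon) ** 2 + (lat_hat - lat) ** 2)

# Three normalized RSSIs (two base stations and one Wi-Fi AP) with made-up weights.
h1, lon_hat, lat_hat = forward_one_timestamp(
    x=[0.9, 0.0, 0.4], u=[0.2, -0.1, 0.4], v=0.3, h0=0.5,
    q_lon=0.5, q_lat=-0.6, b_h=0.0, b_lon=0.1, b_lat=-0.2,
)
print(h1, lon_hat, lat_hat, squared_error_loss(lon_hat, lat_hat, 0.25, 0.75))
```

Because both outputs pass through a sigmoid, they always lie between 0 and 1, which matches the range of the normalized coordinates.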

For the optimization of the recurrent neural network, a gradient descent method with a learning rate is applied to update each weight and bias. The update rules for the input weights of the base stations and Wi-Fi APs, the recurrent weight v, the two output weights, and the three biases are derived in Equations (14)–(21).

Furthermore, the number of neurons in the recurrent hidden layer can be extended for the extraction of features from time series data. The weight between each pair of neurons can be updated by the gradient descent method.
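One gradient descent update for the single-neuron network can be sketched as below. The gradients follow from applying the chain rule to a half-sum-of-squares loss with sigmoid activations; this loss scaling, the parameter names, and the sample values are assumptions of the sketch and are not taken from Equations (14)–(21).

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss_and_grads(p, x, h0, target):
    """Return the squared-error loss and its gradients for a one-neuron recurrent layer.

    p holds "u" (input weights), "v" (weight of h0), "q" (two output weights),
    "b_h" (hidden bias), and "b_o" (two output biases). All names are hypothetical.
    """
    h1 = sigmoid(sum(ui * xi for ui, xi in zip(p["u"], x)) + p["v"] * h0 + p["b_h"])
    y = [sigmoid(p["q"][k] * h1 + p["b_o"][k]) for k in range(2)]
    loss = 0.5 * sum((y[k] - target[k]) ** 2 for k in range(2))
    # Chain rule through the output sigmoids, then through the hidden sigmoid.
    d_out = [(y[k] - target[k]) * y[k] * (1.0 - y[k]) for k in range(2)]
    d_hid = sum(d_out[k] * p["q"][k] for k in range(2)) * h1 * (1.0 - h1)
    grads = {
        "u": [d_hid * xi for xi in x],
        "v": d_hid * h0,
        "q": [d_out[k] * h1 for k in range(2)],
        "b_h": d_hid,
        "b_o": d_out,
    }
    return loss, grads

def gradient_step(p, grads, learning_rate):
    """Plain gradient descent: parameter <- parameter - learning_rate * gradient."""
    def step(a, g):
        if isinstance(a, list):
            return [ai - learning_rate * gi for ai, gi in zip(a, g)]
        return a - learning_rate * g
    return {name: step(p[name], grads[name]) for name in p}

params = {"u": [0.2, -0.1, 0.4], "v": 0.3, "q": [0.5, -0.6], "b_h": 0.0, "b_o": [0.1, -0.2]}
x, h0, target = [0.9, 0.0, 0.4], 0.5, [0.25, 0.75]
loss_before, grads = loss_and_grads(params, x, h0, target)
params = gradient_step(params, grads, learning_rate=0.1)
loss_after, _ = loss_and_grads(params, x, h0, target)
print(loss_before, loss_after)
```

Repeating the step over all training records for many epochs is what training amounts to; the loss printed after the step is slightly smaller than the loss before it.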

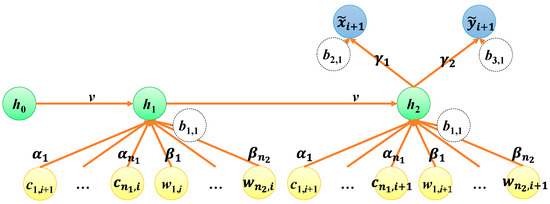

Two Timestamps for Recurrent Neural Network

This subsection illustrates the design and optimization of recurrent neural networks with two consecutive timestamps. A case study of a recurrent neural network with two consecutive timestamps is shown in Figure 5. In this case, the recurrent neural network is constructed with an input layer, a recurrent hidden layer, and an output layer. The input layer includes (n1 + n2) normalized RSSIs in the first timestamp and (n1 + n2) normalized RSSIs in the second timestamp; the output layer includes the estimated normalized longitude and latitude in the second timestamp. The recurrent hidden layer includes a neuron, and the initial value of the neuron in the recurrent hidden layer is defined as h0 (shown in Equation (9)). The value of the neuron in the recurrent hidden layer is updated to h1 in the first timestamp and to h2 in the second timestamp. Base station j and Wi-Fi AP k each have an input weight in each timestamp; two further weights connect h2 to the longitude output and the latitude output, respectively. Furthermore, the weight applied to the value of the hidden neuron from the previous timestamp is defined as v. In this case, the biases of the neurons in the hidden layer and the output layer are defined as b1,1, b2,1, and b3,1. The sigmoid function is selected as the activation function of each neuron, so the values of h1, h2, the estimated normalized longitude, and the estimated normalized latitude can be calculated by Equations (22)–(25), respectively. Furthermore, the loss function is defined in Equation (26) in accordance with squared errors.

Figure 5.

A recurrent neural network with two consecutive timestamps.
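The unrolled computation over consecutive timestamps can be sketched as follows. The sketch assumes that the same input weights and the same recurrent weight v are reused at every timestamp, which is the usual recurrent design; the function and parameter names are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward_sequence(xs, u, v, h0, q, b_h, b_o):
    """Unroll a one-neuron recurrent layer over consecutive timestamps.

    xs is a list of normalized RSSI vectors, oldest first. The same input
    weights u and recurrent weight v are reused at every timestamp (assumed).
    """
    h = h0
    for x in xs:
        h = sigmoid(sum(ui * xi for ui, xi in zip(u, x)) + v * h + b_h)
    # Only the last hidden value feeds the longitude and latitude outputs.
    return [sigmoid(qk * h + bk) for qk, bk in zip(q, b_o)]

# The same two scans in opposite order give different estimates, because the
# first scan reaches the outputs only through the hidden value.
forward = forward_sequence([[0.0], [1.0]], [1.0], 1.0, 0.0, [1.0, -1.0], 0.0, [0.0, 0.0])
backward = forward_sequence([[1.0], [0.0]], [1.0], 1.0, 0.0, [1.0, -1.0], 0.0, [0.0, 0.0])
print(forward, backward)
```

Passing a list with a single RSSI vector reduces the function to the one-timestamp case, and a list of three vectors gives the three-timestamp case.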

For the optimization of the recurrent neural network with two consecutive timestamps, a gradient descent method with a learning rate is applied to update each weight and bias. The update rules for the input weights of the base stations and Wi-Fi APs, the recurrent weight v, the two output weights, and the three biases are derived in Equations (27)–(34).

Furthermore, the recurrent neural network can analyze more consecutive timestamps, and the number of neurons in the recurrent hidden layer of the recurrent neural network can be extended for the extraction of features from time series data. The weight between each pair of neurons can be updated by the gradient descent method.
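To feed more than one timestamp into the network, the collected records have to be grouped into runs of consecutive records. A minimal sketch is given below; it assumes that the records are already ordered in time and that each window is labeled with the location of its last record. The function name and sample values are hypothetical.

```python
def make_windows(rssi_vectors, locations, timestamps=2):
    """Pair each run of `timestamps` consecutive RSSI vectors with the location at its last step."""
    samples = []
    for end in range(timestamps, len(rssi_vectors) + 1):
        window = rssi_vectors[end - timestamps:end]
        samples.append((window, locations[end - 1]))
    return samples

rssis = [[0.1], [0.2], [0.3], [0.4]]
places = ["p1", "p2", "p3", "p4"]
for window, place in make_windows(rssis, places, timestamps=2):
    print(window, place)
```

Four records yield three windows of length two. When a route is traversed several times, the windows should be built per traversal so that no window mixes records from two different passes.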

3.2.3. De-Normalization and Estimation

For de-normalization and estimation, the estimated normalized longitude and latitude are adopted in Equations (35) and (36) to retrieve the estimated longitude and latitude, respectively.
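Assuming that the normalization is min-max scaling, de-normalization is its inverse. The sketch below uses hypothetical coordinate bounds chosen only to keep the arithmetic easy to check.

```python
def denormalize(normalized, minimum, maximum):
    """Invert min-max normalization: map a value in [0, 1] back to its original range."""
    return normalized * (maximum - minimum) + minimum

# Hypothetical bounds of the longitudes seen in training.
LON_MIN, LON_MAX = 119.0, 120.0
print(denormalize(0.25, LON_MIN, LON_MAX))  # 119.25
```

The minimum and maximum must be the same values that were used to normalize the training coordinates; otherwise the estimate is shifted or stretched.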

4. Practical Experimental Results and Discussion

This section presents and discusses the practical experimental results. Practical experimental environments are illustrated in Section 4.1, and practical experimental results are shown in Section 4.2. Section 4.3 discusses the results of different recurrent neural networks.

4.1. Practical Experimental Environments

In the practical experimental environments, an Android application was implemented and installed on mobile stations (e.g., Redmi 5 running Android platform 7.1.2). The Android application collected the coordinates of the GPS module and the RSSIs from cellular networks and Wi-Fi networks every second. The mobile stations were carried along a 5.6 km long road segment at Fuzhou University in China (shown in Figure 6). The segment was traversed eight times by the same mobile station to collect GPS coordinates and network signals (i.e., the RSSIs of base stations and Wi-Fi APs). There were 4525 records (i.e., m = 4525), 59 different base stations (i.e., n1 = 59) in long term evolution (LTE) networks, and 582 different Wi-Fi APs (i.e., n2 = 582) detected in the experiments. The availability of the positioning method based on cellular networks was 100%, but the availability of the positioning method based on Wi-Fi networks was about 96%. Some road segments in the experimental environments were not covered by Wi-Fi networks. Therefore, the proposed method based on Wi-Fi network signals can be used outdoors only within range of nearby Wi-Fi networks. This study selected 2263 records including GPS coordinates and RSSIs as training data, and the other 2262 records were selected as testing data. The mean and median of distances between each of the two measurement locations along the test route were 2.8 and 2.6 m, respectively.

Figure 6.

Practical experimental environments.

4.2. Practical Experimental Results

For the evaluation of the proposed mobile positioning method, nine experimental cases with different timestamp numbers (i.e., one timestamp, two timestamps, and three timestamps) and with different mobile networks (i.e., only cellular networks, only Wi-Fi networks, and cellular and Wi-Fi networks) were designed and performed. There were 30 neurons in the recurrent hidden layer of the recurrent neural network for each experimental case. The practical experimental results are shown in Table 1 and in Figures 7–10. Table 1 and Figure 7 illustrate that more precise locations can be estimated by the proposed method with heterogeneous networks (i.e., long term evolution networks and Wi-Fi networks). Higher location errors were obtained by the recurrent neural networks with one timestamp (i.e., traditional neural networks), which cannot extract the features of time series data (shown in Table 1 and Figure 8). Lower location errors were obtained by the recurrent neural networks with multiple consecutive timestamps (e.g., two timestamps and three timestamps); the average error of location estimation was 9.19 m for the proposed mobile positioning method with two timestamps.
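The average error in meters can be computed from pairs of estimated and GPS coordinates. How the distances were computed is not stated here, so the haversine great-circle formula used in the sketch below is an assumption, as are the function names and the sample coordinates.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius; a common approximation

def haversine_m(lon1, lat1, lon2, lat2):
    """Great-circle distance in meters between two (longitude, latitude) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def average_error_m(estimated, actual):
    """Mean distance in meters between paired estimated and GPS locations."""
    distances = [haversine_m(*e, *g) for e, g in zip(estimated, actual)]
    return sum(distances) / len(distances)

# Made-up (longitude, latitude) pairs: one exact estimate and one that is
# 0.0001 degrees of latitude (roughly 11 m) away from the GPS fix.
estimated = [(119.2000, 26.0600), (119.2000, 26.0601)]
gps = [(119.2000, 26.0600), (119.2000, 26.0600)]
print(average_error_m(estimated, gps))
```

Sorting the individual distances instead of averaging them gives the data for a cumulative distribution function of location errors.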

Table 1.

The average errors of estimated locations by the proposed mobile positioning method (unit: meters).

Figure 7.

The estimated locations by the proposed mobile positioning method with different mobile networks. G: GPS (a red point); C: cellular networks (a green point); W: Wi-Fi networks (a blue point); CW: cellular and Wi-Fi networks (a yellow point).

Figure 8.

The cumulative distribution function (CDF) of location errors by the proposed mobile positioning method with one timestamp.

Figure 9.

The cumulative distribution function of location errors by the proposed mobile positioning method with two timestamps.

Figure 10.

The cumulative distribution function of location errors by the proposed mobile positioning method with three timestamps.

4.3. Discussions

This section discusses the structure of neural networks, loss function, computation time, and power consumption in different cases.

4.3.1. The Structure of Neural Networks

In this subsection, the different structures of neural networks were constructed and performed for the evaluation of neural networks. The 641 RSSIs from cellular networks (i.e., n1 = 59) and Wi-Fi networks (i.e., n2 = 582) were considered as the neurons in the input layer of neural networks. One hidden layer was constructed in neural networks, and the output layer of neural networks included two neurons (i.e., longitude and latitude). When the hidden layer included 10 neurons, the structure of neural network was expressed as 641-10-2. This study considered four structures of hidden layers in neural networks, which included 10, 20, 30, and 40 neurons. Table 2 shows that the average location errors were lower in the case of 641-30-2. Therefore, this study adopted the structure of 641-30-2 for the proposed mobile positioning method.

Table 2.

The average errors of estimated locations by different structures of neural networks (unit: meters).

4.3.2. The Loss Function of Deep Learning Models

The proposed mobile positioning method used one trained recurrent neural network to simultaneously estimate longitudes and latitudes; in this recurrent neural network, the estimated longitudes and latitudes were determined by the same weights in the input layer and hidden layers. In addition, this study also considered separately training two recurrent neural networks for estimating longitudes and latitudes (shown in Figure 11 and Figure 12); the estimated longitudes and latitudes were then determined by different weights in these recurrent neural networks. When the method only analyzed the RSSIs from cellular networks, the structure of the neural network was expressed as 59-30-1; when the method only analyzed the RSSIs from Wi-Fi networks, the structure was expressed as 582-30-1. Furthermore, the structure was expressed as 641-30-1 when the RSSIs from both cellular networks and Wi-Fi networks were analyzed. The practical experimental results indicated that more precise locations may be obtained by the recurrent neural networks with one timestamp (i.e., traditional neural networks) (shown in Table 3). However, large errors of estimated locations may be obtained by the recurrent neural networks with multiple consecutive timestamps. The estimated location is a two-dimensional output, so overfitting problems may exist if longitudes and latitudes are estimated by different recurrent neural networks with multiple consecutive timestamps. Therefore, the interaction effects of longitudes and latitudes should be analyzed, and longitudes and latitudes should be estimated by the same recurrent neural network to determine more precise locations.

Figure 11.

A recurrent neural network with one timestamp for estimating longitudes.

Figure 12.

A recurrent neural network with one timestamp for estimating latitudes.

Table 3.

The average errors of estimated locations by the proposed mobile positioning method (unit: meters).

4.3.3. Computation Time

For the analyses of computation time, a server with a GPU module (i.e., GeForce GTX 1080) was selected and used. The deep learning models were implemented and executed in TensorFlow and Keras libraries.

In the training stage, the number of epochs was 20,000 for each case. Table 4 shows that the lowest computation time was needed for the proposed method with cellular networks (i.e., 59 base stations), and the highest computation time was required in the case of cellular and Wi-Fi networks (i.e., 59 base stations and 582 Wi-Fi APs). The computation times of the proposed method with one timestamp (i.e., a neural network) in the cases of cellular networks, Wi-Fi networks, and cellular and Wi-Fi networks were 3022 s, 6928 s, and 7302 s, respectively. In recurrent neural networks, more computation time was required for the analyses of time series data. The experimental results showed that the computation times of the proposed method with two and three timestamps in the case of cellular and Wi-Fi networks were 14,533 s and 20,485 s, respectively.

Table 4.

The computation time of estimated locations by the proposed mobile positioning method in training stage (unit: seconds).

In the performing stage, Table 5 showed that the computation times of the proposed method with one, two, and three timestamps in the case of cellular and Wi-Fi networks were 0.37 s, 0.73 s, and 1.02 s, respectively. The precise location information can be quickly obtained by the proposed method with two timestamps.

Table 5.

The computation time of estimated locations by the proposed mobile positioning method in performing stage (unit: seconds).

4.3.4. Power Consumption

For the analyses of power consumption, an Android phone (i.e., Redmi 5 running Android platform 7.1.2) was selected, and its battery life was measured as an indicator. In the experiments, an Android application was implemented on the phone to periodically obtain the location by GPS or by the proposed mobile positioning method. The cellular network module was always enabled because data communications via LTE networks were needed. The experimental results are summarized in Table 6. Suppose the battery has a capacity of J Joules. The baseline lifetime with an enabled cellular network module is 254,500 s, so the baseline power consumption is B = J/254,500 Watts. For the analysis of Wi-Fi power consumption W, the lifetime with an enabled Wi-Fi module is 175,450 s, so W + B = J/175,450. Furthermore, the lifetime with an enabled GPS module is 81,000 s, so G + B = J/81,000 for the analysis of GPS power consumption G. Subtracting B gives W = J/175,450 − J/254,500 and G = J/81,000 − J/254,500, so J cancels in every ratio. For the comparison of power consumption, the values of G/B, G/W, and G/(W + B) can be measured as 2.14, 4.75, and 1.47, respectively. Because the GPS module consumes more power than the cellular and Wi-Fi modules combined, the proposed method can obtain location information with lower power consumption.

Table 6.

The power consumption comparisons. GPS—global positioning system.
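The ratios can be checked directly from the three battery lifetimes given above. The sketch expresses each power in units of the battery capacity J, which cancels in every ratio; the variable names are illustrative.

```python
# Battery lifetimes in seconds, taken from the text above.
T_CELL, T_WIFI, T_GPS = 254_500, 175_450, 81_000

# Power in units of J (the battery capacity), so J cancels in every ratio.
B = 1 / T_CELL        # baseline: cellular module only
W = 1 / T_WIFI - B    # Wi-Fi module alone
G = 1 / T_GPS - B     # GPS module alone

print(round(G / B, 2), round(G / W, 2), round(G / (W + B), 2))
```

With these lifetimes the script prints 2.14, 4.75, and 1.48; the last ratio is about 1.4767 before rounding, which the text reports as 1.47.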

5. Conclusions and Future Work

This section summarizes and describes the contributions of this study in Section 5.1. The limitations of the proposed method and future work are presented in Section 5.2.

5.1. Conclusions

In previous studies, cellular-based positioning methods could estimate the locations of mobile stations in outdoor environments, but the accuracy of the estimated locations may have been lower. Moreover, Wi-Fi-based positioning methods can precisely estimate the locations of mobile stations, but the transmission coverage of Wi-Fi APs is not sufficient in outdoor environments. Although some studies used convolutional neural networks to extract the features of spatial metrics, the temporal data of signals from cellular and Wi-Fi networks were not analyzed to reduce location errors. Therefore, a mobile positioning system and a mobile positioning method based on recurrent neural networks are proposed to analyze the RSSIs from heterogeneous networks, which include cellular networks and Wi-Fi networks. The network signals from heterogeneous networks can be analyzed to improve the accuracy of location estimation. Furthermore, the RSSIs in multiple consecutive timestamps can be adopted in recurrent neural networks for the analyses of time series data and location estimation. In practical experimental environments, the results showed that the average error of location estimation was 9.19 m for the proposed mobile positioning method with two timestamps. Therefore, the proposed system and method can be applied to provide LBS in outdoor environments.

5.2. Future Work

Although higher accuracy of location estimation can be obtained by recurrent neural networks with multiple consecutive timestamps, some overfitting problems may exist. For instance, higher errors of estimated locations were obtained by recurrent neural networks with three timestamps. Therefore, solutions to the overfitting of time series data [24] can be investigated to improve the accuracy of estimated locations in the future.

Author Contributions

L.W. and C.-H.C. proposed and implemented the methodology. C.-H.C. analyzed and discussed the practical experimental results. L.W., C.-H.C., and Q.Z. wrote the manuscript.

Funding

The research was funded by the Natural Science Foundation of China under the project of 61300104, the Fujian Industry-Academy Cooperation Project under Grant No. 2017H6008, and the Natural Science Foundation of Fujian Province of China under the project of 2018J01791 and 2017J01752. This research was also funded by Fuzhou University, grant number 510730/XRC-18075.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Chen, C.H.; Lin, B.Y.; Lin, C.H.; Liu, Y.S.; Lo, C.C. A green positioning algorithm for Campus Guidance System. Int. J. Mob. Commun. 2012, 10, 119–131.

2. Wu, C.; Yang, Z.; Xu, Y.; Zhao, Y.; Liu, Y. Human mobility enhances global positioning accuracy for mobile phone localization. IEEE Trans. Parallel Distrib. Syst. 2015, 26, 131–141.

3. Thejaswini, M.; Rajalakshmi, P.; Desai, U.B. Novel sampling algorithm for human mobility-based mobile phone sensing. IEEE Internet Things J. 2015, 2, 210–220.

4. Molina, B.; Olivares, E.; Palau, C.E.; Esteve, M. A multimodal fingerprint-based indoor positioning system for airports. IEEE Access 2018, 6, 10092–10106.

5. Chen, K.; Wang, C.; Yin, Z.; Jiang, H.; Tan, G. Slide: Towards fast and accurate mobile fingerprinting for Wi-Fi indoor positioning systems. IEEE Sens. J. 2018, 18, 1213–1223.

6. Liu, D.; Sheng, B.; Hou, F.; Rao, W.; Liu, H. From wireless positioning to mobile positioning: An overview of recent advances. IEEE Syst. J. 2014, 8, 1249–1259.

7. Taniuchi, D.; Liu, X.; Nakai, D.; Maekawa, T. Spring model based collaborative indoor position estimation with neighbor mobile devices. IEEE J. Sel. Top. Signal Process. 2015, 9, 268–277.

8. Mok, E.; Cheung, B.K.S. An improved neural network training algorithm for Wi-Fi fingerprinting positioning. ISPRS Int. J. Geo-Inf. 2013, 2, 854–868.

9. Chen, C.H.; Lin, J.H.; Kuan, T.S.; Lo, K.R. A high-efficiency method of mobile positioning based on commercial vehicle operation data. ISPRS Int. J. Geo-Inf. 2016, 5, 82.

10. Xia, S.; Liu, Y.; Yuan, G.; Zhu, M.; Wang, Z. Indoor fingerprint positioning based on Wi-Fi: An overview. ISPRS Int. J. Geo-Inf. 2017, 6, 135.

11. Chen, C.Y.; Yin, L.P.; Chen, Y.J.; Hwang, R.C. A modified probability neural network indoor positioning technique. In Proceedings of the 2012 IEEE International Conference on Information Security and Intelligent Control, Yunlin, Taiwan, 14–16 August 2012.

12. Xu, Y.; Sun, Y. Neural network-based accuracy enhancement method for WLAN indoor positioning. In Proceedings of the 2012 IEEE Vehicular Technology Conference, Quebec City, QC, Canada, 3–6 September 2012.

13. Zhang, T.; Man, Y. The enhancement of WiFi fingerprint positioning using convolutional neural network. In Proceedings of the 2018 International Conference on Computer, Communication and Network Technology, Wuzhen, China, 29–30 June 2018.

14. Zhu, J.Y.; Xu, J.; Zheng, A.X.; He, J.; Wu, C.; Li, V.O.K. WIFI fingerprinting indoor localization system based on spatio-temporal (S-T) metrics. In Proceedings of the 2014 IEEE International Conference on Indoor Positioning and Indoor Navigation, Busan, Korea, 27–30 October 2014.

15. Lukito, Y.; Chrismanto, A.R. Recurrent neural networks model for WiFi-based indoor positioning system. In Proceedings of the 2017 IEEE International Conference on Smart Cities, Automation & Intelligent Computing Systems, Yogyakarta, Indonesia, 8–10 November 2017.

16. Wang, X.; Gao, L.; Mao, S.; Pandey, S. DeepFi: Deep learning for indoor fingerprinting using channel state information. In Proceedings of the 2015 IEEE Wireless Communications and Networking Conference, New Orleans, LA, USA, 9–12 March 2015.

17. 3rd Generation Partnership Project. Technical Specification Group Services and System Aspects. Location Services (LCS); Service Description; Stage 1 (Release 15). 2018. Available online: https://portal.3gpp.org/desktopmodules/Specifications/SpecificationDetails.aspx?specificationId=584 (accessed on 23 November 2018).

18. Khatab, Z.E.; Hajihoseini, A.; Ghorashi, S.A. A fingerprint method for indoor localization using autoencoder based deep extreme learning machine. IEEE Sens. Lett. 2018, 2, 6000204.

19. Wang, X.; Gao, L.; Mao, S.; Pandey, S. CSI-based fingerprinting for indoor localization: A deep learning approach. IEEE Trans. Veh. Technol. 2017, 66, 763–776.

20. Wang, X.; Gao, L.; Mao, S. CSI phase fingerprinting for indoor localization with a deep learning approach. IEEE Internet Things J. 2016, 3, 135.

21. Shao, W.; Luo, H.; Zhao, F.; Ma, Y.; Zhao, Z.; Crivello, A. Indoor positioning based on fingerprint-image and deep learning. IEEE Access 2018.

22. Xiao, C.; Yang, D.; Chen, Z.; Tan, G. 3-D BLE Indoor localization based on denoising autoencoder. IEEE Access 2017, 5, 12751–12760.

23. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

24. Chen, C.H. Reducing the dimensionality of time-series data with deep learning techniques. Science 2018. Available online: http://science.sciencemag.org/content/313/5786/504/tab-e-letters (accessed on 10 October 2018).

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).