Detection of Moving Ships in Sequences of Remote Sensing Images

Abstract

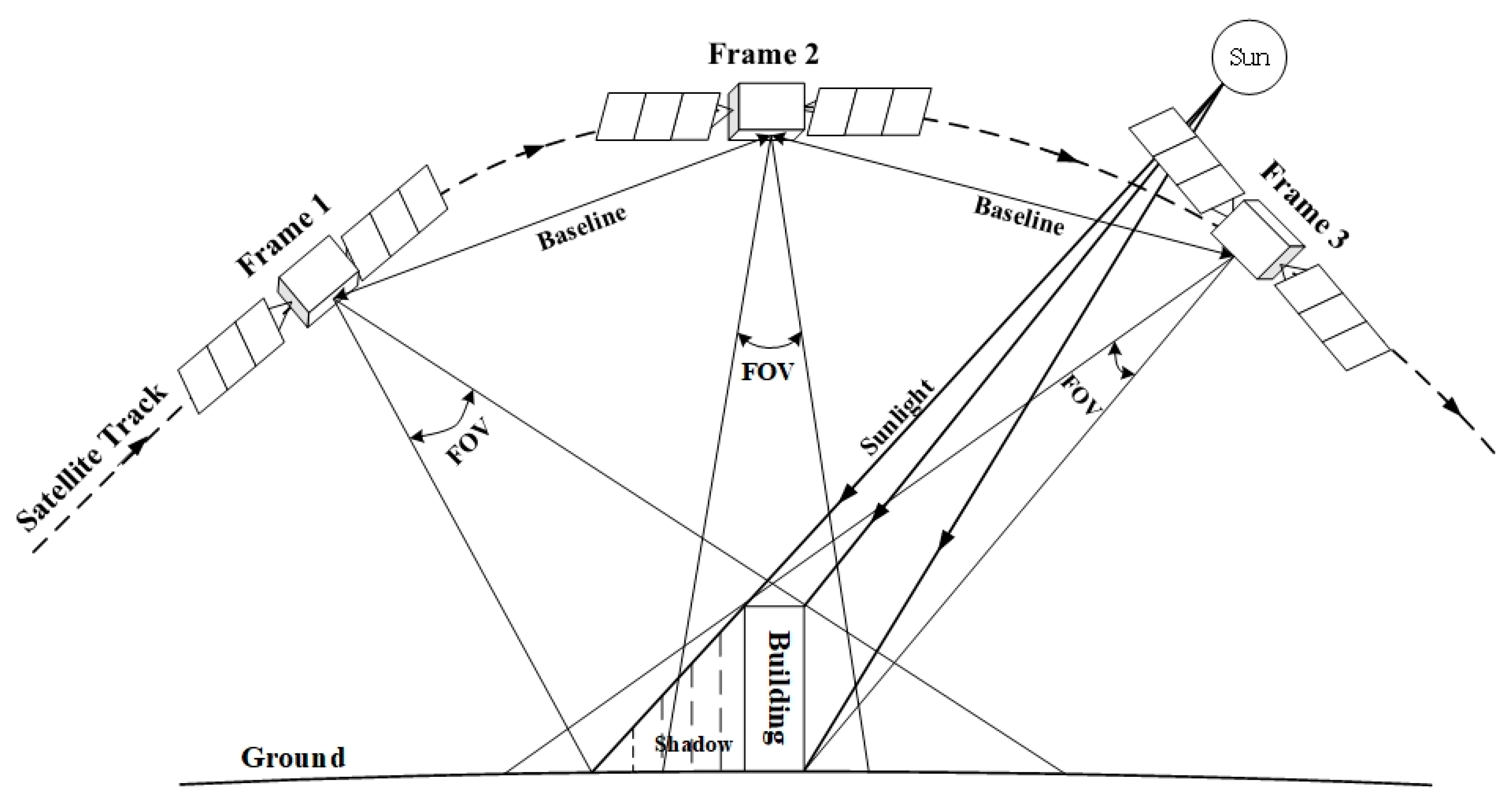

1. Introduction

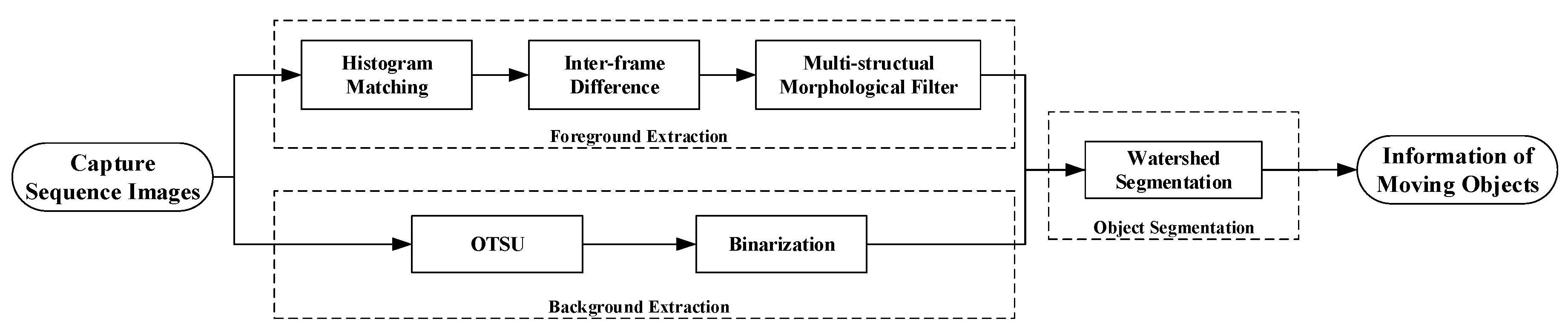

2. Methods

2.1. Methods of Foreground Extraction

2.1.1. Inter-Frame Difference Algorithm

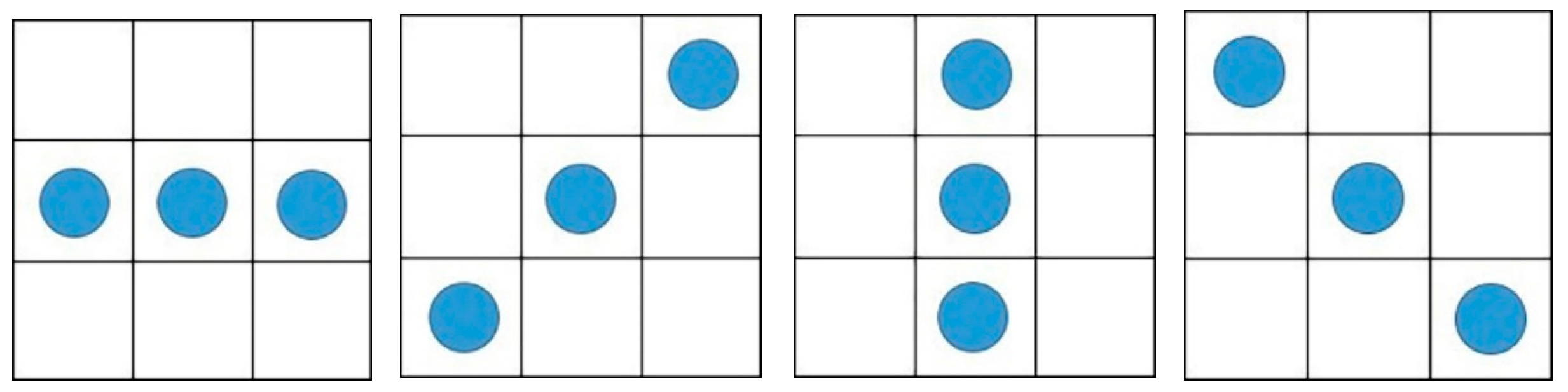

2.1.2. Multi-Structuring Element Morphological Filtering

2.2. Methods of Background Extraction and Segmentation

2.2.1. Otsu’s Method

2.2.2. Marker-Based Watershed Segmentation Algorithm

- (1) Different markers are given different labels, and the marker pixels are the starting points of the immersion.

- (2) The pixels neighboring the markers are inserted into a priority queue, with a priority level corresponding to their gradient magnitude. Here $g(x, y)$ denotes the gradient magnitude of the pixel located at $(x, y)$, computed from the pixel values $f(x, y)$.

- (3) The pixel with the lowest priority level (the smallest gradient magnitude) is extracted from the priority queue. If all of its already-labeled neighbors share the same label, the pixel receives that label. All unlabeled neighbors that are not yet in the priority queue are then added to it.

- (4) Step 3 is repeated until the priority queue is empty.
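The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names `gradient_magnitude` and `marker_watershed`, the central-difference gradient, the 4-connected neighborhood, and the convention that label 0 means "unlabeled" are all assumptions made for the example. As a small tightening of step 3, only pixels that actually receive a label queue their neighbors, so every queued pixel has at least one labeled neighbor.

```python
import heapq


def gradient_magnitude(img):
    """Gradient magnitude of a 2-D list of numbers (assumed central differences)."""
    h, w = len(img), len(img[0])
    grad = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Neighbour indices are clamped at the image border.
            gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
            gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
            grad[y][x] = (gx * gx + gy * gy) ** 0.5
    return grad


def marker_watershed(grad, markers):
    """Flood `grad` from labelled markers; 0 in the result marks watershed pixels."""
    h, w = len(grad), len(grad[0])
    labels = [row[:] for row in markers]  # step 1: markers keep their labels
    queued = [[False] * w for _ in range(h)]
    heap = []
    counter = 0  # tie-breaker: equal gradients are served first-in, first-out

    def neighbours(y, x):
        for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w:
                yield ny, nx

    def push_unlabelled_neighbours(y, x):
        nonlocal counter
        for ny, nx in neighbours(y, x):
            if labels[ny][nx] == 0 and not queued[ny][nx]:
                queued[ny][nx] = True
                heapq.heappush(heap, (grad[ny][nx], counter, ny, nx))
                counter += 1

    # Step 2: queue the pixels that border a marker, keyed by gradient magnitude.
    for y in range(h):
        for x in range(w):
            if labels[y][x] != 0:
                push_unlabelled_neighbours(y, x)

    # Steps 3 and 4: pop the lowest-gradient pixel until the queue is empty.
    while heap:
        _, _, y, x = heapq.heappop(heap)
        seen = {labels[ny][nx] for ny, nx in neighbours(y, x) if labels[ny][nx] != 0}
        if len(seen) == 1:
            labels[y][x] = seen.pop()
            push_unlabelled_neighbours(y, x)
        # Otherwise the labelled neighbours disagree: the pixel stays 0 (watershed line).
    return labels
```

For example, `marker_watershed([[0, 1, 5, 1, 0]], [[1, 0, 0, 0, 2]])` grows label 1 from the left and label 2 from the right, and leaves the high-gradient pixel in the middle unlabeled as the boundary between the two regions.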

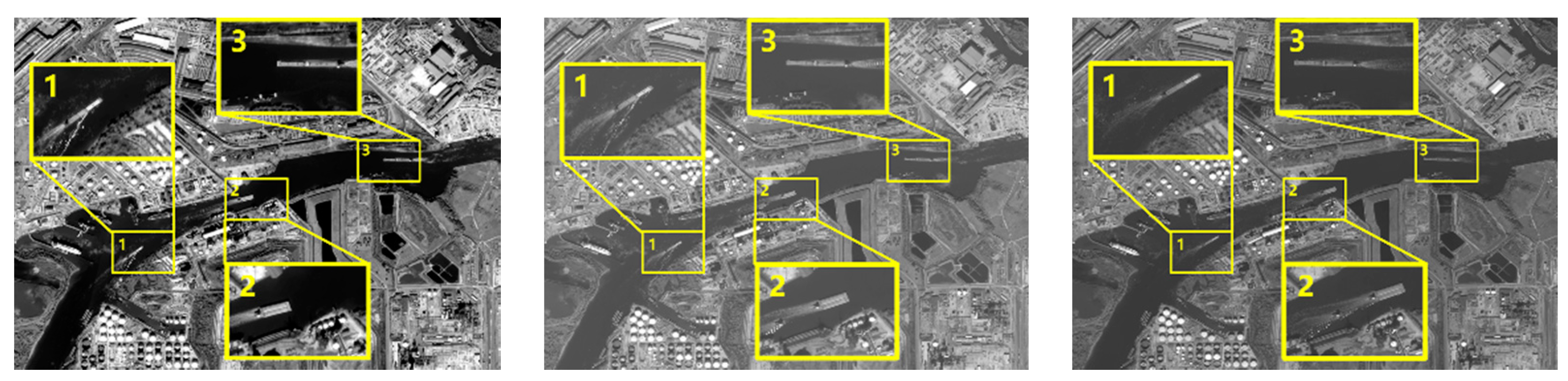

3. Experiments

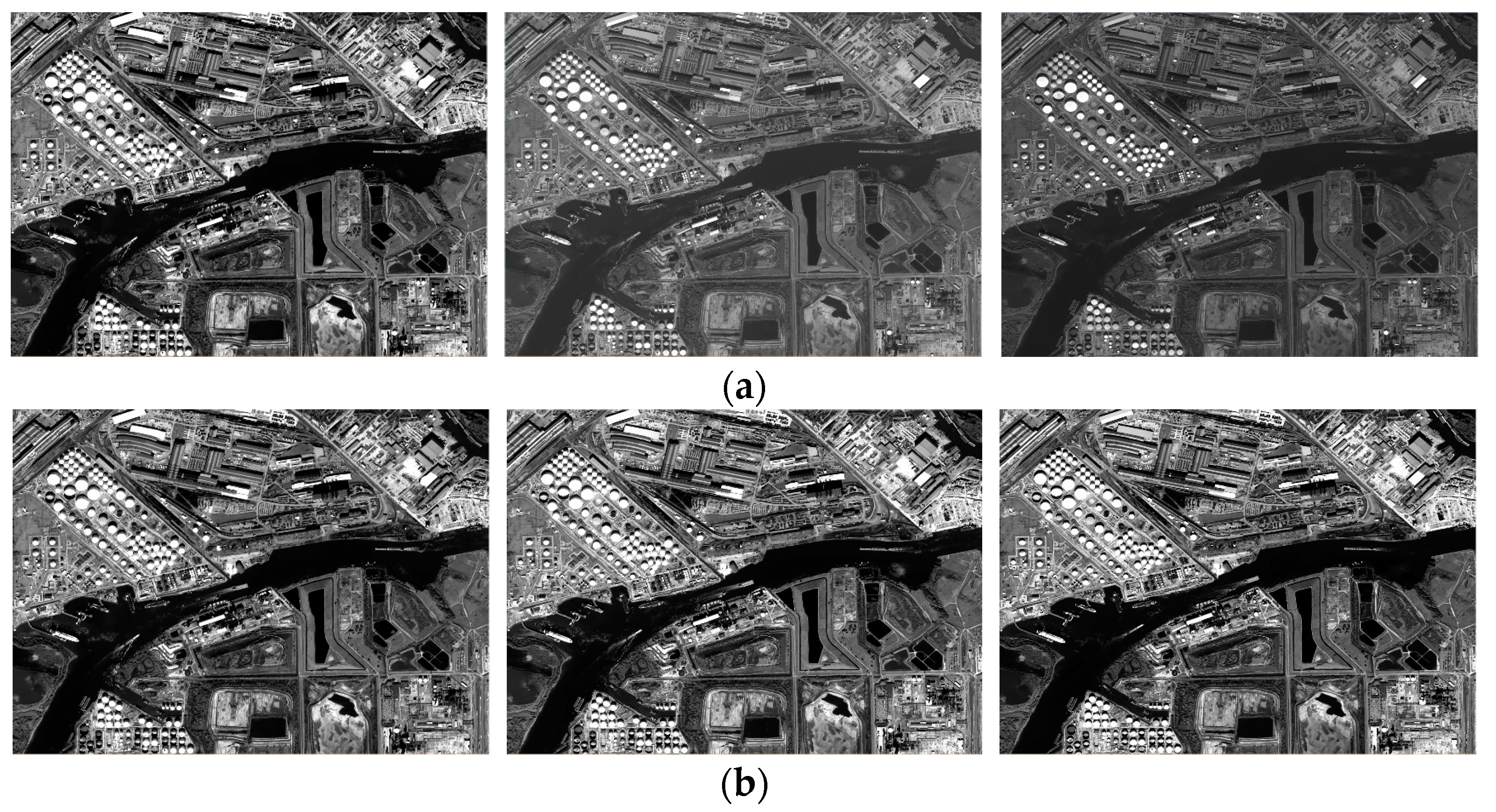

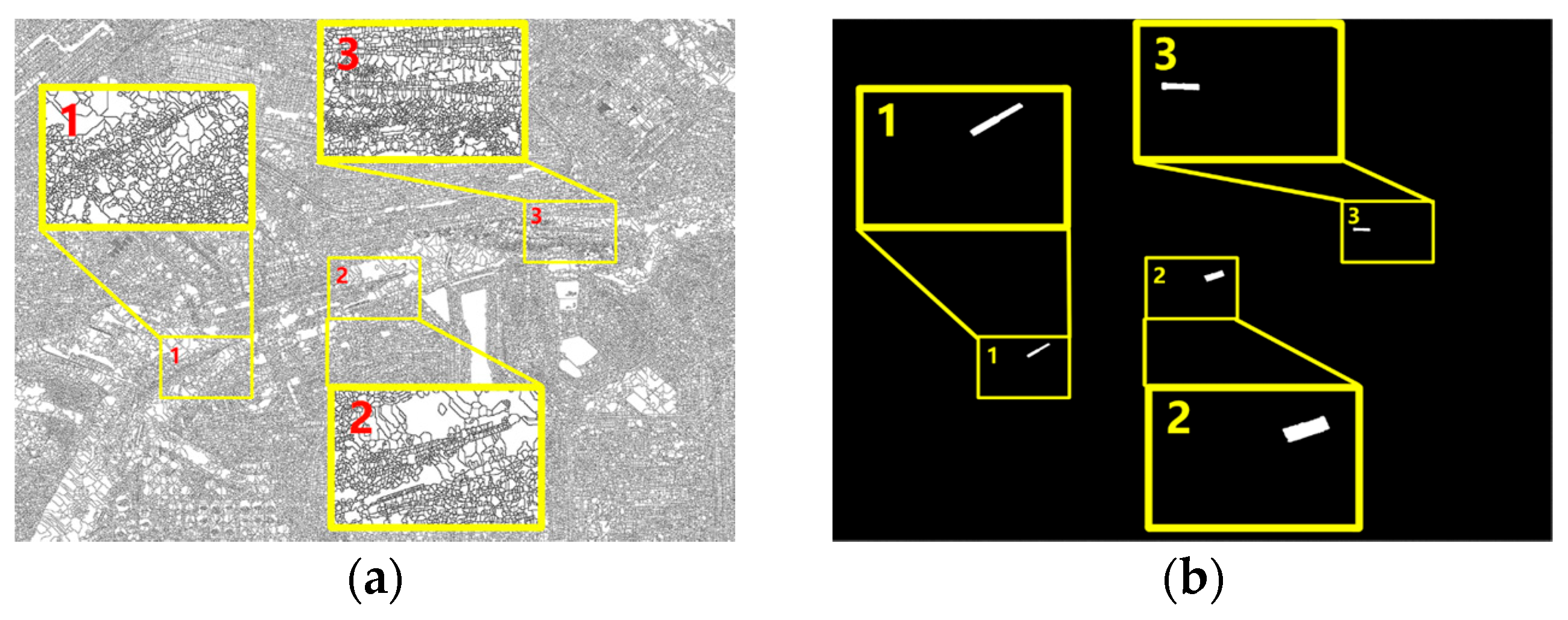

3.1. Data

3.2. Results of Foreground Extraction

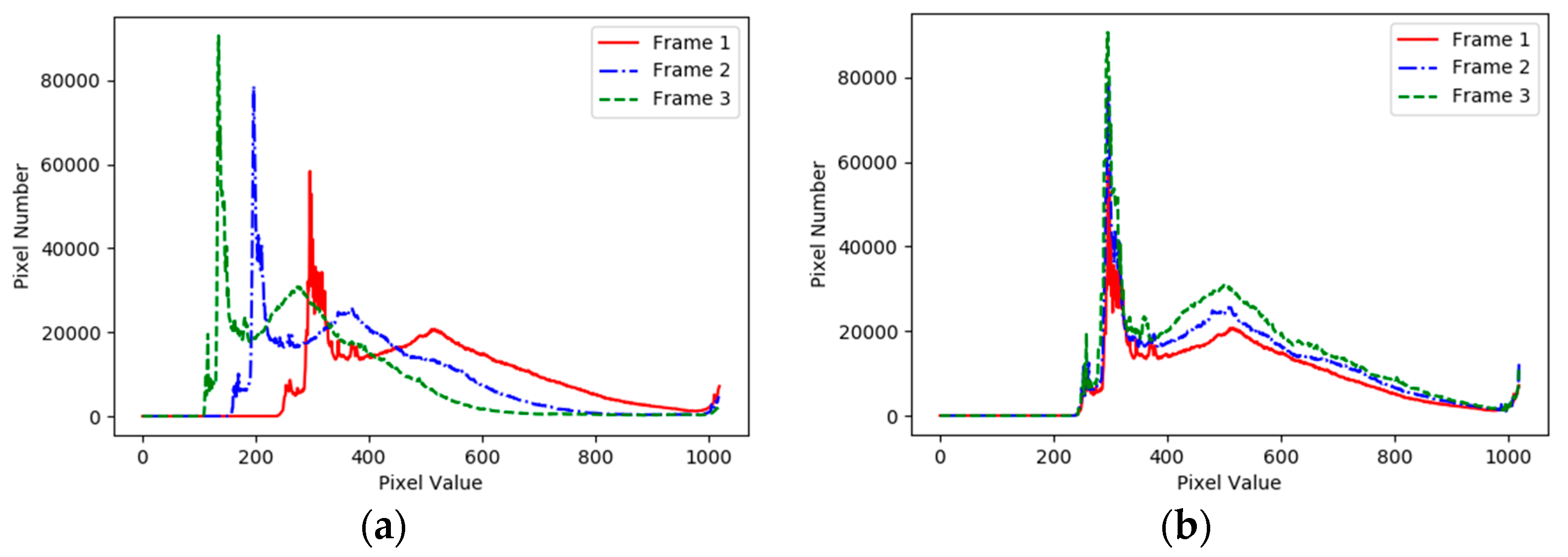

3.2.1. Histogram Matching

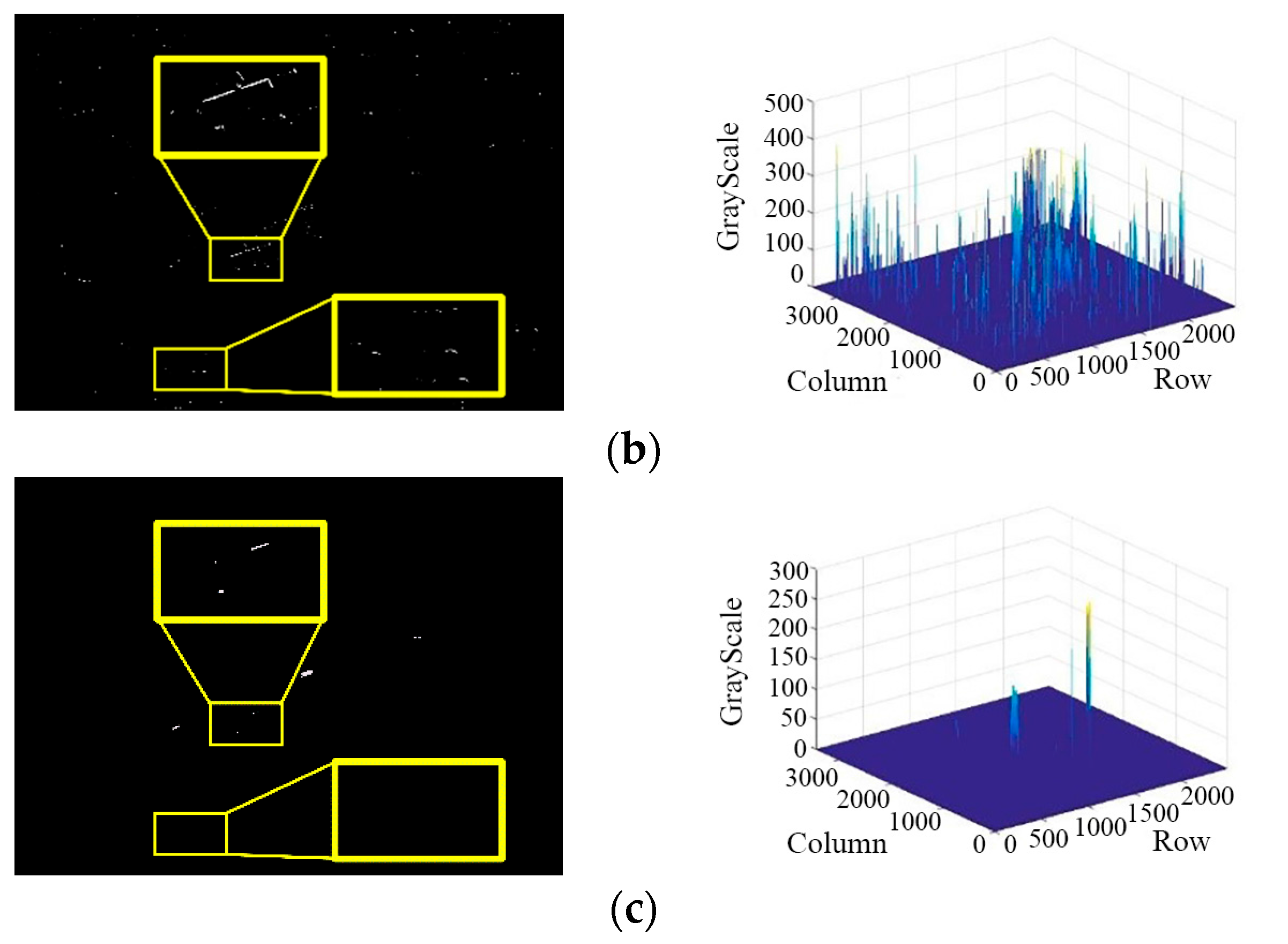

3.2.2. Inter-Frame Difference

3.2.3. Multi-Structuring Element Morphological Filter

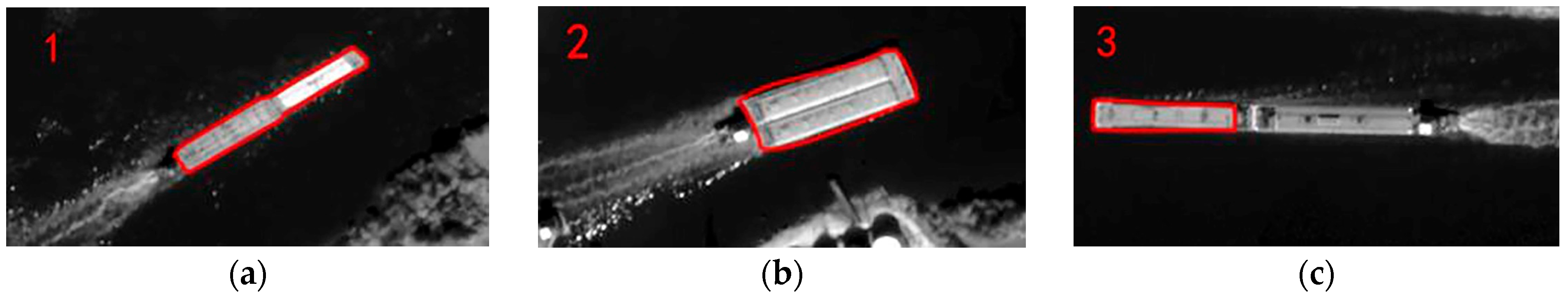

3.3. Results of Background Extraction and Segmentation

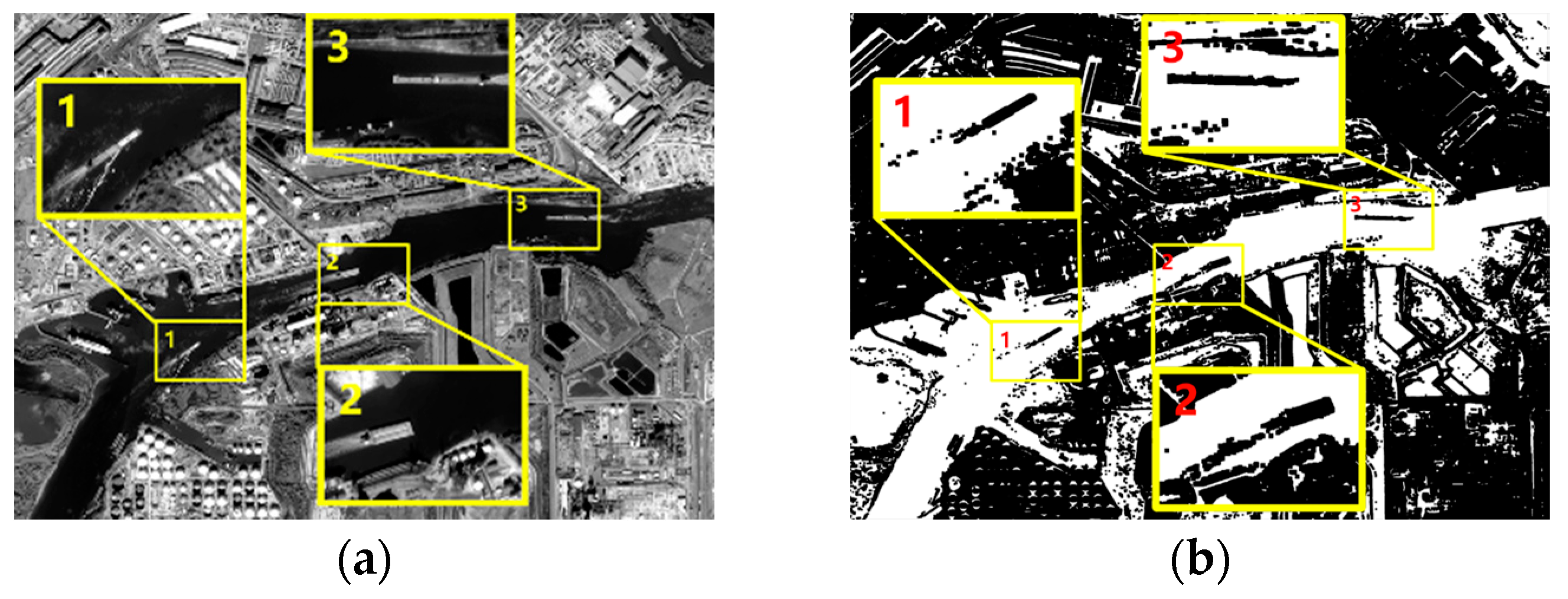

3.3.1. Otsu’s Method

3.3.2. Marker-Based Watershed Segmentation

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Frame | 1–2 | 2–3 |
|---|---|---|
| Interval Time | 22 s | 23 s |
| Base-height Ratio | 0.02643 | 0.02655 |

| Measure | Frames 1–2, Before | Frames 1–2, After | Frames 1–3, Before | Frames 1–3, After |
|---|---|---|---|---|
| Correlation | 0.4330 | 0.8130 | 0.1281 | 0.7050 |
| Chi-Square | 256,590.2673 | 29.4254 | 79,456.7418 | 64.9714 |
| Intersection | 77.0942 | 106.2336 | 48.8889 | 67.6905 |
| Bhattacharyya Distance | 0.4009 | 0.3118 | 0.5704 | 0.4224 |
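The table above compares histograms using four measures without stating their formulas. The sketch below assumes the definitions commonly used for these names (the same ones OpenCV's `compareHist` documents for its correlation, chi-square, intersection, and Bhattacharyya methods); the function name `compare_histograms` is hypothetical, and the bin count and normalization behind the table's values are not known, so the code is not expected to reproduce those numbers.

```python
import math


def compare_histograms(h1, h2):
    """Return four similarity measures for two equal-length histograms."""
    if len(h1) != len(h2) or not h1:
        raise ValueError("histograms must be non-empty and of equal length")
    n = len(h1)
    m1, m2 = sum(h1) / n, sum(h2) / n

    # Correlation: Pearson correlation of the bin values (1 = identical shape).
    cov = sum((a - m1) * (b - m2) for a, b in zip(h1, h2))
    var1 = sum((a - m1) ** 2 for a in h1)
    var2 = sum((b - m2) ** 2 for b in h2)
    # Undefined when either histogram is flat.
    correlation = cov / math.sqrt(var1 * var2) if var1 * var2 > 0 else float("nan")

    # Chi-square: 0 for identical histograms; bins with h1 == 0 are skipped.
    chi_square = sum((a - b) ** 2 / a for a, b in zip(h1, h2) if a > 0)

    # Intersection: shared mass, larger means more similar.
    intersection = sum(min(a, b) for a, b in zip(h1, h2))

    # Bhattacharyya distance (Hellinger form): 0 = identical, 1 = no overlap.
    total = math.sqrt(sum(h1) * sum(h2))
    overlap = sum(math.sqrt(a * b) for a, b in zip(h1, h2)) / total if total > 0 else 0.0
    bhattacharyya = math.sqrt(max(0.0, 1.0 - overlap))

    return {
        "correlation": correlation,
        "chi_square": chi_square,
        "intersection": intersection,
        "bhattacharyya": bhattacharyya,
    }
```

Under these definitions, a better match shows up as a higher correlation and intersection and a lower chi-square and Bhattacharyya distance, which is the direction of change between the "Before" and "After" columns.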

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yao, S.; Chang, X.; Cheng, Y.; Jin, S.; Zuo, D. Detection of Moving Ships in Sequences of Remote Sensing Images. ISPRS Int. J. Geo-Inf. 2017, 6, 334. https://doi.org/10.3390/ijgi6110334
