Abstract

The European Space Agency (ESA) defines Earth observation (EO) Level 2 information product the stack of: (i) a single-date multi-spectral (MS) image, radiometrically corrected for atmospheric, adjacency and topographic effects, with (ii) its data-derived scene classification map (SCM), whose thematic map legend includes quality layers cloud and cloud–shadow. Never accomplished to date in an operating mode by any EO data provider at the ground segment, systematic ESA EO Level 2 product generation is an inherently ill-posed computer vision (CV) problem (chicken-and-egg dilemma) in the multi-disciplinary domain of cognitive science, encompassing CV as subset-of artificial general intelligence (AI). In such a broad context, the goal of our work is the research and technological development (RTD) of a “universal” AutoCloud+ software system in operating mode, capable of systematic cloud and cloud–shadow quality layers detection in multi-sensor, multi-temporal and multi-angular EO big data cubes characterized by the five Vs, namely, volume, variety, veracity, velocity and value. For the sake of readability, this paper is divided in two. Part 1 highlights why AutoCloud+ is important in a broad context of systematic ESA EO Level 2 product generation at the ground segment. The main conclusions of Part 1 are both conceptual and pragmatic in the definition of remote sensing best practices, which is the focus of efforts made by intergovernmental organizations such as the Group on Earth Observations (GEO) and the Committee on Earth Observation Satellites (CEOS). First, the ESA EO Level 2 product definition is recommended for consideration as state-of-the-art EO Analysis Ready Data (ARD) format. Second, systematic multi-sensor ESA EO Level 2 information product generation is regarded as: (a) necessary-but-not-sufficient pre-condition for the yet-unaccomplished dependent problems of semantic content-based image retrieval (SCBIR) and semantics-enabled information/knowledge discovery (SEIKD) in multi-source EO big data cubes, where SCBIR and SEIKD are part-of the GEO-CEOS visionary goal of a yet-unaccomplished Global EO System of Systems (GEOSS). (b) Horizontal policy, the goal of which is background developments, in a “seamless chain of innovation” needed for a new era of Space Economy 4.0. In the subsequent Part 2 (proposed as Supplementary Materials), the AutoCloud+ software system requirements specification, information/knowledge representation, system design, algorithm, implementation and preliminary experimental results are presented and discussed.

Keywords:

artificial intelligence; color naming; color constancy; cognitive science; computer vision; object-based image analysis (OBIA); physical and statistical data models; radiometric calibration; semantic content-based image retrieval; spatial topological and spatial non-topological information components 1. Introduction

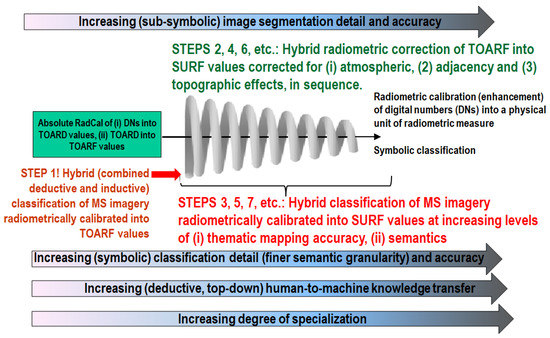

Radiometric calibration (Cal) is the process of transforming remote sensing (RS) sensory data, consisting of non-negative dimensionless digital numbers (DNs, where DN ≥ 0), provided with no physical meaning, i.e., featuring no radiometric unit of measure, into a physical variable provided with a community-agreed radiometric unit of measure, such as top-of-atmosphere reflectance (TOARF), surface reflectance (SURF) or surface albedo values belonging to the physical domain of change 0.0–1.0 [1,2,3].

To cope with the five Vs characterizing big data analytics, specifically, volume, variety, veracity, velocity and value [4], radiometric Cal of Earth observation (EO) big data is considered mandatory by the intergovernmental Group on Earth Observations (GEO)-Committee on Earth Observation Satellites (CEOS) Quality Accuracy Framework for Earth Observation (QA4EO) Calibration/Validation (Cal/Val) guidelines [3]. In agreement with the visionary goal of a GEO’s implementation plan for years 2005-2015 of a Global Earth Observation System of Systems (GEOSS) [5], unaccomplished to date, the ambitious goal of the GEO-CEOS QA4EO Cal/Val guidelines is systematic transformation of EO big data cubes into timely, comprehensive and operational EO value-adding information products and services (VAPS). Despite being considered a well-known “prerequisite for physical model-based analysis of airborne and satellite sensor measurements in the optical domain” [1], EO data radiometric Cal is largely oversighted in the RS common practice and existing literature. For example, in a pair of recent surveys about EO image classification systems published in the RS literature in years 2014 and 2016, the word “calibration” is absent [6,7], whereas radiometric calibration preprocessing issues are barely mentioned in a survey dating back to year 2007 [8]. A lack of EO input data Cal requirements means that statistical model-based data analytics and inductive learning-from-data algorithms are dominant in the RS community, including (geographic) object-based image analysis (GEOBIA) applications [9,10] in the domain of geographic information science (GIScience). On the one hand, statistical model-based and inductive learning-from-data algorithms require as input DNs provided with no physical meaning. On the other hand, inductive learning-from-data algorithms are inherently semi-automatic and site-specific [2]. In practice, they require no radiometric Cal data pre-processing, but they typically gain in robustness when input with radiometrically calibrated data.

In compliance with the GEO-CEOS QA4EO Cal/Val requirements and with the GEO’s visionary goal of a GEOSS, aiming at harmonization between missions acquiring EO data across time and geographic space, the European Space Agency (ESA) has recently defined an ESA EO Level 2 information product as follows [11,12]:

- (i)

- a single-date multi-spectral (MS) image, radiometrically corrected for atmospheric, adjacency and topographic effects,

- (ii)

- stacked with its data-derived scene classification map (SCM), whose general-purpose, user- and application-independent thematic map legend includes quality layers cloud and cloud–shadow,

- (iii)

- to be systematically generated at the ground segment, automatically (without human–machine interaction) and in near real-time.

Unlike the non-standard ESA EO Level 2 SCM legend adopted by the Sentinel 2 imaging sensor-specific (atmospheric, adjacency and topographic) Correction Prototype Processor (Sen2Cor), developed by ESA and distributed free-of-cost to be run on the user side [11,12], see Table 1, an alternative ESA EO Level 2 SCM legend, proposed in [13,14,15] and shown in Table 2, consists of an “augmented” fully-nested 3-level 9-class Dichotomous Phase (DP) taxonomy of land cover (LC) classes in the 4D geospatial-temporal scene-domain. It comprises: (i) a standard 3-level 8-class DP taxonomy of the Food and Agriculture Organization of the United Nations (FAO) Land Cover Classification System (LCCS) [16], see Figure 1, augmented with (ii) a thematic layer explicitly identified as class “others”, synonym for class “unknown” or “rest of the world”, which includes quality layers cloud and cloud–shadow. It is noteworthy that in traditional EO image classification system design and implementation requirements [17], the presence of an output class “unknown” was considered mandatory, to cope with uncertainty in inherently equivocal information-as-data-interpretation (classification) tasks [18].

Table 1.

Non-standard general-purpose, user- and application-independent European Space Agency (ESA) Earth observation (EO) Level 2 scene classification map (SCM) legend adopted by the sensor-specific Sentinel 2 (atmospheric, adjacency and topographic) Correction (Sen2Cor) Prototype Processor [11,12], developed and distributed free-of-cost by ESA to be run on the user side.

Table 2.

General-purpose, user- and application-independent ESA Level 2 SCM legend proposed in [13,14,15], consistent with the standard 3-level 8-class Food and Agriculture Organization (FAO) Land Cover Classification System (LCCS) Dichotomous Phase (DP) taxonomy [16]. The “augmented” standard taxonomy consists of the standard 3-level 8-class FAO LCCS-DP taxonomy (identified as classes A11 to B48) + quality layers Cloud and Cloud–shadow + class Others (Unknown) = 8 land cover (LC) classes + 2 LC classes (Cloud–shadow, Others) + 1 non-LC class (Cloud).

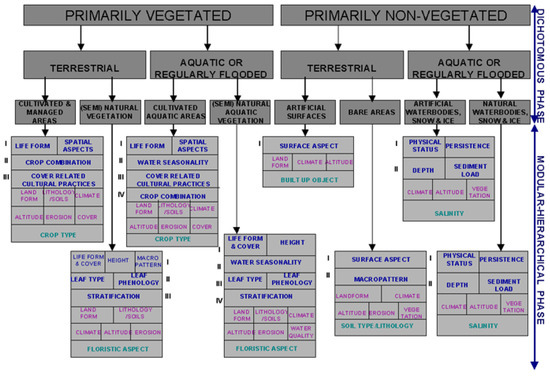

Figure 1.

As in [16], courtesy of the Food and Agriculture Organization (FAO of the United Nations (UN). Two-stage fully-nested FAO Land Cover Classification System (LCCS) taxonomy. The first-stage fully-nested 3-level 8-class FAO LCCS Dichotomous Phase (DP) taxonomy is general-purpose, user- and application-independent. It consists of a sorted set of three dichotomous layers: (i) vegetation versus non-vegetation, (ii) terrestrial versus aquatic, and (iii) managed versus natural or semi-natural. These three dichotomous layers deliver as output the following 8-class FAO LCCS-DP taxonomy. (A11) Cultivated and Managed Terrestrial (non-aquatic) Vegetated Areas. (A12) Natural and Semi-Natural Terrestrial Vegetation. (A23) Cultivated Aquatic or Regularly Flooded Vegetated Areas. (A24) Natural and Semi-Natural Aquatic or Regularly Flooded Vegetation. (B35) Artificial Surfaces and Associated Areas. (B36) Bare Areas. (B47) Artificial Waterbodies, Snow and Ice. (B48) Natural Waterbodies, Snow and Ice. The general-purpose user- and application-independent 3-level 8-class FAO LCCS-DP taxonomy is preliminary to a second-stage FAO LCCS Modular Hierarchical Phase (MHP) taxonomy, consisting of a battery of user- and application-specific one-class classifiers, equivalent to one-class grammars (syntactic classifiers) [19].

Figure 1 shows that the standard two-phase fully-nested FAO LCCS hierarchy consists of a first-stage fully-nested general-purpose, user- and application-independent 3-level 8-class FAO LCCS-DP legend, preliminary to a second-stage application-dependent and user-specific FAO LCCS Modular Hierarchical Phase (MHP) taxonomy, consisting of a hierarchical (deep) battery of one-class classifiers [16]. The standard first-stage 3-level 8-class FAO LCCS-DP hierarchy is “fully nested”. It comprises three dichotomous LC class-specific information layers, equivalent to a world ontology, world model or mental model of the real-world [13,16,19,20,21,22,23,24]: DP Level 1—Vegetation versus non-vegetation, DP Level 2—Terrestrial versus aquatic and DP Level 3—Managed versus natural or semi-natural. In recent years, the two-phase FAO LCCS taxonomy has become increasingly popular [25]. One reason for its popularity is that the FAO LCCS hierarchy is “fully nested” while alternative LC class hierarchies, such as the Coordination of Information on the Environment (CORINE) Land Cover (CLC) taxonomy [26], the U.S. Geological Survey (USGS) Land Cover Land Use (LCLU) taxonomy by J. Anderson [27], the International Global Biosphere Programme (IGBP) DISCover Data Set Land Cover Classification System [28] and the EO Image Librarian LC class legend [29], start from a Level 1 taxonomy which is already multi-class. In a hierarchical EO image understanding (EO-IU) system architecture submitted to a garbage in, garbage out (GIGO) information principle, synonym for error propagation through an information processing chain, the fully-nested two-phase FAO LCCS hierarchy makes explicit the full dependence of high-level LC class estimates, performed by any high-level (deep) LCCS-MHP data processing module, on the operational quality (in accuracy, efficiency, robustness, etc.) of lower-level LCCS modules, starting from the initial FAO LCCS-DP Level 1 vegetation/non-vegetation information layer whose relevance in thematic mapping accuracy (vice versa, in error propagation) becomes paramount for all subsequent LCCS layers.

The GIGO commonsense principle, intuitive to understand in general terms as error propagation through an information processing chain, becomes neither trivial nor obvious to understand when applied to a hierarchical LC class taxonomy, starting from a FAO LCCS-DP Level 1 vegetation/non-vegetation and featuring inter-layer semantic dependencies, equivalent to transmission lines where semantic error can propagate, from low-level (coarse) to high-level (fine) semantics [30]. On the one hand, an inherently difficult image classification scenario into vegetated/non-vegetated LC classes agrees with a minor portion of the RS literature where supervised data learning classification of EO image datasets at continental or global spatial extent into binary LC class vegetation/non-vegetation is considered very challenging [31]. On the other hand, it is at odd with the RS mainstream, where the semantic information gap from sub-symbolic EO data to multi-class LC taxonomies, where target LC classes are far deeper in semantics than the initial FAO LCCS-DP Level 1 vegetation/non-vegetation information layer, is typically filled in one conceptual stage, highly informative, but opaque (mysterious, unfathomable) in nature. This one-stage mapping from sub-symbolic sensory data to high-level (symbolic) concepts is typically implemented as a supervised data learning classification stage [32,33], e.g., a support vector machine, random forest or deep convolutional neural network (DCNN) [34,35,36,37,38], which is equivalent to a black box learned from supervised (labeled) data based on heuristics (e.g., architectural metaparameters are typically user-defined by trial-and-error) [30], whose opacity contradicts the well-known engineering principles of modularity, regularity and hierarchy typical of scalable systems [39]. In addition, inductive algorithms, capable of learning from either supervised (labeled) or unsupervised (unlabeled) data, are inherently semi-automatic and site-specific [2]. In general, "No Free Lunch” theorems have shown that inductive learning-from-data algorithms cannot be universally good [40,41].

Over land surfaces of the Earth, the global cloud cover is approximately 66% [42]. In the ESA EO Level 2 product definition, cloud and cloud–shadow quality layer requirements specification accounts for a well-known prerequisite of clear-sky multi-temporal EO image compositing and understanding (classification) solutions proposed by the RS community, where accurate masking of cloud and cloud–shadow phenomena is considered necessary, but not sufficient pre-condition [12,13,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63]. Intuitively, in single-date and multi-temporal MS image analysis, cloud and cloud–shadow preliminary detection is a relevant problem, because unflagged cloud and cloud–shadow phenomena may be mapped onto erroneous LC classes or false LC change (LCC) occurrences.

It is noteworthy that joint (combined) cloud and cloud–shadow detection is a typical example of physical model-based cause–effect relationship, expected to be very difficult to solve by inductive machine learning-from-data algorithms, such as increasingly popular DCNNs [34], with special regard to DCNNs designed and trained end-to-end for semantic segmentation [37] and instance segmentation [38] tasks, whereas DCNNs trained for object detection, such as [36], where image-objects are localized with bounding boxes and categorized into one-of-many categories, are inapplicable to the cloud/cloud–shadow instance segmentation problem of interest. In general, inductive supervised data learning algorithms are capable of learning complex correlations between input and output features, but unsuitable for inherent representations of causality [30,64], in agreement with the well-known dictum that correlation does not imply causation and vice versa [13,19,30,33,64,65].

In the last decade, many different cloud/cloud–shadow detection algorithms have been presented in the RS literature to run either on a single-date MS image or on an MS image time-series, typically acquired by either one EO spaceborne/airborne MS imaging sensor or a single family (e.g., Landsat) of MS imaging sensors [12,13,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60]. To be accomplished in operating mode at the ground segment (midstream) by EO data providers in support of the downstream sector within a “seamless chain of innovation” needed for a new era of Space Economy 4.0 [66], systematic radiometric Cal of multi-source multi-angular multi-temporal MS big image data cubes [1,3,13,67,68,69], encompassing either single-date or multi-temporal cloud and cloud–shadow detection as a necessary-but-not-sufficient pre-condition, is regarded by the RS community as an open problem to date [61,62,63].

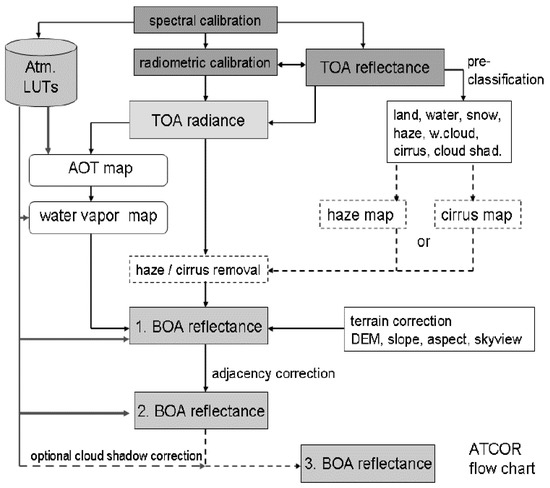

In agreement with the GEO-CEOS QA4EO Cal/Val requirements [3], this work presents an innovative AutoCloud+ computer vision (CV) software system for cloud and cloud–shadow quality layer detection. To be eligible for systematic ESA EO Level 2 product generation at the ground segment [43,67,70], AutoCloud+ must overcome conceptual (structural) limitations and well-known failure modes of standard cloud and cloud–shadow detection algorithms [44,47,61,62,63], such as the single-date multi-sensor Function of Mask (FMask) open source algorithm [58,59], the single-date single-sensor ESA Sen2Cor software toolbox [11,12,44], to be run free-of-cost on the user side, and the multi-date Multisensor Atmospheric Correction and Cloud Screening (MACCS)-Atmospheric/Topographic Correction (ATCOR) Joint Algorithm (MAJA) developed and run by the Centre national d’études spatiales (CNES)/Centre d’Etudes Spatiales de la Biosphère (CESBIO)/Deutsches Zentrum für Luft- und Raumfahrt (German Aerospace Center, DLR) [46,47,48], which incorporates capabilities of the ATCOR commercial software toolbox [71,72,73,74].

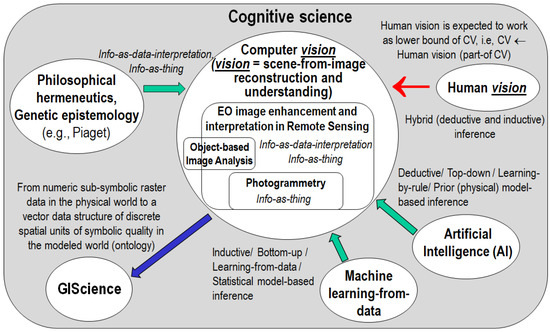

A synonym for inherently ill-posed scene-from-image reconstruction and understanding [13,23,75,76], vision is a cognitive (information-as-data-interpretation) process [18], encompassing both biological vision and CV, where CV is subset-of artificial general intelligence (AI) [77,78,79,80,81], i.e., AI ⊃ CV, in the multi-disciplinary domain of cognitive science [18,77,78,79,80,81], see Figure 2. In vision, spatial information dominates color information [23]. This unquestionable true fact is familiar to all human beings wearing sunglasses: in perceptual terms, human panchromatic and chromatic visions are nearly as effective [13].

Figure 2.

Multi-disciplinary cognitive science domain, adapted from [18,77,78,79,80,81], where it is postulated that ‘Human vision → computer vision (CV)’, where symbol ‘→’ denotes relationship part-of pointing from the supplier to the client, not to be confused with relationship subset-of, ‘⊃’, meaning specialization with inheritance from the superset to the subset, in agreement with the standard Unified Modeling Language (UML) for graphical modeling of object-oriented software [86]. The working hypothesis ‘Human vision → CV’ means that human vision is expected to work as lower bound of CV, i.e., a CV system is required to include as part-of a computational model of human vision [13,76,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104]. In practice, to become better conditioned for numerical solution, an inherently ill-posed CV system is required to comply with human visual perception phenomena in the multi-disciplinary domain of cognitive science. Cognitive science is the interdisciplinary scientific study of the mind and its processes. It examines what cognition (learning, adaptation, self-organization) is, what it does and how it works [18,77,78,79,80,81]. It especially focuses on how information/knowledge is represented, acquired, processed and transferred either in the neuro-cerebral apparatus of living organisms or in machines, e.g., computers. Like engineering, remote sensing (RS) is a meta-science [105], the goal of which is to transform knowledge of the world, provided by other scientific disciplines, into useful user- and context-dependent solutions in the world. Neuroscience, in particular neurophysiology, studies the neuro-cerebral apparatus of living organisms. Neural network (NN) is synonymous with distributed processing system, consisting of neurons as elementary processing elements and synapses as lateral connections. Is it possible and even convenient to mimic biological mental functions, e.g., human reasoning, by means of an artificial mind whose physical support is not an electronic brain implemented as an artificial NN (ANN)? The answer is no according to the “connectionists approach” promoted by traditional cybernetics, where a complex system always comprises an “artificial mind-electronic brain” combination. This is alternative to a traditional approach to artificial intelligence (AI), whose so-called symbolic approach investigates an artificial mind independently of its physical support [77].

Starting from this simple, yet not trivial, observation about human visual perception, in order to outperform standard CV software toolboxes in operating mode for cloud and cloud–shadow detection in EO big data cubes, such as the single-date sensor-specific ESA Sen2Cor [11,12,44] and the multi-date multi-sensor CESBIO/CNES/DLR MAJA software [46,47,48], degrees of novelties of an innovative “universal” AutoCloud+ CV software system are expected to encompass the Marr five levels of understanding of an information processing system, specifically [13,19,76,82,83]:

- outcome and process requirements specification, including computational complexity estimation,

- information/knowledge representation,

- system design (architecture),

- algorithm, and

- implementation.

Among these five levels, the three more abstract ones, namely, outcome and process requirements specification, information/knowledge representation and system design, are typically considered the linchpin of success of an information processing system, rather than algorithm and implementation [13,19,76,82,83].

To be considered “universal” and in operating mode, the AutoCloud+ software system’s outcome and process requirements were specified as follows.

- (i)

- “Fully automated”, i.e., no human–machine interaction and no labeled data set for supervised inductive learning-from-data are required by the system to run, which reduces timeliness, which is the time span from EO data acquisition to EO data-derived VAPS generation, as well as costs in manpower (e.g., to collect training data) and computer power (no training time is required).

- (ii)

- Near real-time, e.g., computational complexity increases linearly with image size.

- (iii)

- Robust to changes in input sensory data acquired across space, time and sensors.

- (iv)

- Scalable to changes in MS imaging sensor’s spatial and spectral resolution specifications.

- (v)

- Last but not least, AutoCloud+ must be eligible for use in multi-sensor, multi-temporal and multi-angular EO big data cubes, either radiometrically uncalibrated, such as MS images typically acquired without radiometric Cal metadata files by small satellites [84] or small unmanned aerial vehicles (UAVs) [85], or radiometrically calibrated into TOARF, SURF or surface albedo values in agreement with the GEO-CEOS QA4EO Cal/Val requirements [3].

For the sake of readability this paper is divided in two. The present Part 1 highlights why AutoCloud+ is important in a broad context of systematic ESA EO Level 2 product generation at the ground segment within a “seamless innovation chain” needed for a new era of Space 4.0 [66]. Heavily referenced, this in-depth problem background discussion can be skipped by expert readers. In the subsequent Part 2 (see Supplementary Materials), first, a “universal” AutoCloud+ CV software system is instantiated at the Marr five levels of understanding of an information processing system (refer to this Section above) [13,19,76,82,83]. Second, preliminary experimental results, collected from an AutoCloud+ prototypical implementation and integration, are presented and discussed.

The rest of the present Part 1 is organized as follows. Section 2 critically reviews the cognitive (information-as-data-interpretation) problem of systematic ESA EO Level 2 product generation, whose necessary-but-not-sufficient pre-condition is cloud and cloud–shadow quality layers detection. Section 3 surveys standard algorithms for cloud and cloud–shadow quality layers detection, available either open source or free-of-cost. Conclusions are reported in Section 4.

2. Systematic ESA EO Level 2 Information Product Generation as a Broad Context of Cloud/Cloud–Shadow Quality Layers Detection in a Cognitive Science Domain

Systematic ESA EO Level 2 product generation at the ground segment in multi-source EO big data cubes [11,12] is an inherently ill-posed CV problem [13,23,75,76]; the necessary-but-not-sufficient pre-condition of this CV problem is the inherently ill-posed CV sub-problem of cloud and cloud–shadow quality layers detection. The former is regarded as a broad context where the importance and degree of complexity of the latter are highlighted.

Featuring a relevant survey value in the multidisciplinary domain of cognitive science [18,77,78,79,80,81], encompassing AI ⊃ CV (see Figure 2), this section provides a critical review of ESA EO Level 2 product generation strategies. Expert readers non-interested in the broad context of cloud and cloud shadow quality layers detection can skip this review section and move directly to either Section 3 in the present Part 1 or the Part 2 (proposed as Supplementary Materials) of this paper.

In this review section, the Marr two lower levels of abstraction of an information processing system, identified as algorithm and implementation (see Section 1), are ignored. Rather, it focuses on the Marr (three more abstract) levels of understanding known as outcome and process requirements specification, information/knowledge representation and system design (see Section 1), because they are typically considered the cornerstone of success of an information processing system [13,19,76,82,83]. Hence, this critical review is not alternative, but complementary to surveys on EO image understanding systems typically proposed in the RS literature, such as [6,7,8], focused exclusively on the two lower levels of abstraction, specifically, algorithm and implementation. In more detail, among the three aforementioned surveys, EO image preprocessing requirements, such as radiometric Cal, atmospheric correction and topographic correction, although considered mandatory by the GEO-CEOS QA4EO Cal/Val guidelines [3], are totally ignored in surveys [6,7], published in year 2014 and 2016 respectively. In contrast, EO image pre-processing issues are briefly taken into account by the third survey [8], dating back to year 2007. This observation supports the thesis that, in more recent years, when computational power has been exponentially increasing according to the Moore law of productivity [106], statistical model-based (inductive) image analysis algorithms have been dominating the RS literature, whereas physical model-based or hybrid (combined statistical and physical model-based) inference algorithms, which require as input sensory data provided with a physical meaning, specifically, EO data provided with a physical unit of radiometric measure in agreement with the GEO-CEOS QA4EO Cal/Val requirements [3], have been increasingly oversighted.

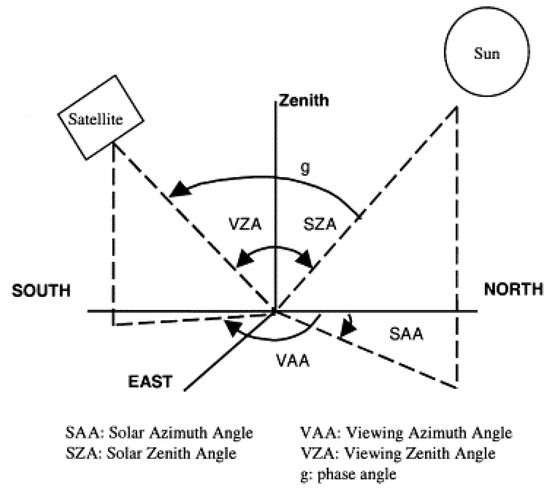

To successfully cope with the five Vs of EO big data analytics, specifically, volume, variety, veracity, velocity and value [4], multi-sensor analysis of multi-temporal multi-angular EO sensory data cubes depends upon the ability to distinguish between relevant changes and no-changes occurring at the Earth surface through time [68,69]. A necessary-but-not-sufficient pre-condition for EO big data transformation into timely, comprehensive and operational EO data-derived VAPS, expected by GEO to be pursued by a GEOSS never accomplished to date [5], is radiometric Cal, considered mandatory by the GEO-CEOS QA4EO Cal/Val requirements [3], but largely oversighted in the RS common practice and literature, e.g., see [6,7]. In short, radiometric Cal guarantees EO sensory data interoperability (consistency, harmonization, reconciliation, “normalization”) across time, geographic space and sensors. In greater detail, the capability to detect and quantify change/no-change in terms of either qualitative (nominal, categorical) Earth surface variables, such as LC classes belonging to a finite and discrete LC class taxonomy (legend), or quantitative (numeric) Earth surface variables, such as biophysical variables, e.g., leaf area index (LAI), biomass, etc., depends on the radiometric Cal of EO sensory data, equivalent to non-negative dimensionless DNs ≥ 0, provided with no physical meaning and typically affected at sensor-level by ever-varying atmospheric conditions, solar illumination conditions, spaceborne/airborne viewing geometries and Earth surface topography, into a physical variable provided with a community-agreed radiometric unit of measure, such as TOARF, SURF or surface albedo values in range 0.0–1.0 [1,2,3]. Solar illumination conditions are typically parameterized by metadata Cal parameters, such as image acquisition time, solar exo-atmospheric irradiance, solar zenith angle and solar azimuth angle, see Figure 3. Sensor viewing characteristics are typical metadata Cal parameters, such as sensor zenith angle and sensor azimuth angle, see Figure 3. Atmospheric conditions are described by categorical variables, such as aerosol type, haze, cloud and cloud–shadow, and by numeric variables, such as water vapor, temperature and aerosol optical thickness (AOT) [68,69]. Finally, Earth surface geometries must be inferred from ancillary data, such as a digital elevation model (DEM), in combination with solar and viewing conditions [107], see Figure 3.

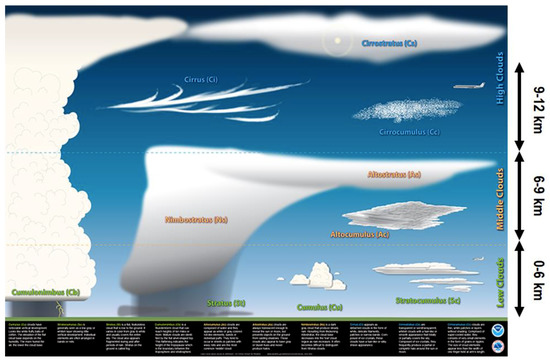

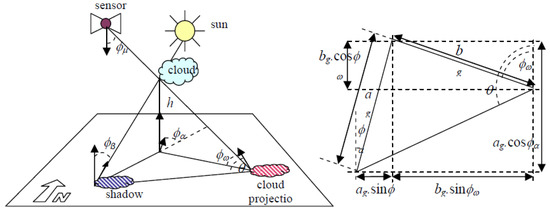

Figure 3.

Solar illumination geometries and viewpoint geometries in spaceborne and airborne EO image acquisition.

Adopted by different scientific disciplines, such as inductive machine learning-from-data [32,33], AI as superset-of CV [77], i.e., AI ⊃ CV (see Figure 2), and RS [2,13], popular synonyms for deductive inference are top-down inference, prior knowledge-based inference, learning-by-rule inference and physical model-based inference. Synonyms for inductive inference are bottom-up inference, learning-from-data inference, learning-from-examples inference and statistical model-based inference [82,83].

On the one hand, non-calibrated sensory data, provided with no physical meaning, can be investigated by statistical data models and inductive inference algorithms, exclusively. On the other hand, although they do not require physical variables as input, statistical data models and inductive learning-from-data algorithms can benefit from input data Cal in terms of augmented robustness to changes in the input data set acquired through time, space and sensors. In contrast, radiometrically calibrated data, provided with a physical meaning, can be interpreted by either inductive, deductive (physical model-based) or hybrid (combined deductive and inductive) inference algorithms. Although it is considered a well-known “prerequisite for physical model-based (and hybrid) analysis of airborne and satellite sensor measurements in the optical domain” [1,13,67,68,69], EO data Cal is largely neglected in the RS common practice. For example, in major portions of the RS literature, including the GEOBIA sub-domain of GIScience [9,10], no reference to radiometric Cal issues is found. This lack of input EO data Cal requirements proves that, to date, EO image analytics mainly consists of inductive learning-from-data algorithms, starting from scratch because no a priori physical knowledge is exploited in addition to data. This is in contrast with biological cognitive systems, where “there is never an absolute beginning” [108], because a priori genotype provides initial conditions (that reflect properties of the world, embodied through evolution, based on evolutionary experience) to learning-from-examples phenotype, according to a hybrid inference paradigm, where phenotype explores the neighborhood of genotype in a solution space [13,78]. Hybrid inference combines deductive and inductive inference to take advantage of each and overcome their shortcomings [2]. Inductive inference is typically semi-automatic and site-specific [2]. Deductive inference is static (non-adaptive to data) and typically lacks flexibility to transform ever-varying sensory data (sensations) into stable percepts (concepts) in a world model [13,23,82,83].

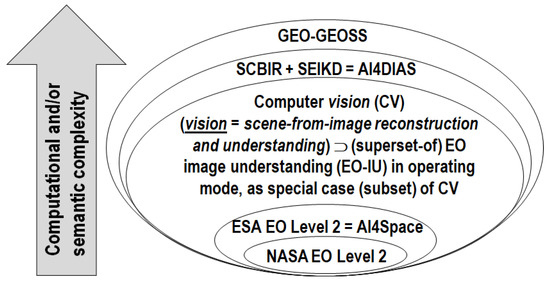

In compliance with the GEO-CEOS QA4EO Cal/Val requirements and the visionary goal of a GEOSS [5], ESA has recently provided an original ESA EO Level 2 information product definition, refer to Section 1 [11,12]. The ESA EO Level 2 product definition is non-trivial. Notably, it is more restrictive than the National Aeronautics and Space Administration (NASA) EO Level 2 product definition of “a data-derived geophysical variable at the same resolution and location as Level 1 source data” [109]. According to the standard Unified Modeling Language (UML) for graphical modeling of object-oriented software [86], where symbol ‘→’ denotes relationship part-of pointing from the supplier to the client (vice versa, it would denote relationship depend-on), not to be confused with relationship subset-of, whose symbol is ‘⊃’, meaning specialization with inheritance from the superset to the subset, the following dependence relationship holds true:

Depicted in Figure 4, this dependence relationship implies that a NASA EO Level 2 product can be accomplished although no ESA EO Level 2 product exists, whereas the vice versa does not hold.

‘NASA EO Level 2 product → ESA EO Level 2 product’.

Figure 4.

In agreement with the standard Unified Modeling Language (UML) for graphical modeling of object-oriented software [86], relationship part-of, denoted with symbol ‘→’ pointing from the supplier to the client, should not to be confused with relationship subset-of, ‘⊃’, meaning specialization with inheritance from the superset to the subset. A National Aeronautics and Space Administration (NASA) EO Level 2 product is defined as “a data-derived geophysical variable at the same resolution and location as Level 1 source data” [109]. Herein, it is considered part-of an ESA EO Level 2 product defined as [11,12]: (a) a single-date multi-spectral (MS) image whose digital numbers (DNs) are radiometrically corrected into surface reflectance (SURF) values for atmospheric, adjacency and topographic effects, stacked with (b) its data-derived general-purpose, user- and application-independent scene classification map (SCM), whose thematic map legend includes quality layers cloud and cloud–shadow. In this paper, ESA EO Level 2 product is regarded as an information primitive to be accomplished by Artificial Intelligence for the Space segment (AI4Space), such as in future intelligent small satellite constellations, rather than at the ground segment in an AI for data and information access services (AI4DIAS) framework. In this graphical representation, additional acronyms of interest are computer vision (CV), whose special case is EO image understanding (EO-IU) in operating mode, semantic content-based image retrieval (SCBIR) [13,110,111,112,113,114,115], semantics-enabled information/knowledge discovery (SEIKD), where SCIR + SEIKD is considered synonym for AI4DIAS, and Global Earth Observation System of Systems (GEOSS), defined by the Group on Earth Observations [5]. Our working hypothesis postulates that the following dependence relationship holds true. ‘NASA EO Level 2 product → ESA EO Level 2 product = AI4Space ⊂ EO-IU in operating mode ⊂ CV → [EO-SCBIR + SEIKD = AI4DIAS] → GEO-GEOSS’. This equation means that GEOSS, whose part-of are the still-unsolved (open) problems of SCBIR and SEIKD, cannot be achieved until the necessary-but-not-sufficient pre-condition of CV in operating mode, specifically, systematic ESA EO Level 2 product generation, is accomplished in advance. Encompassing both biological vision and CV, vision is synonym for scene-from-image reconstruction and understanding. Vision is a cognitive (information-as-data-interpretation) problem [18] very difficult to solve because: (i) non-deterministic polynomial (NP)-hard in computational complexity [87,116], (ii) inherently ill-posed in the Hadamard sense [23,75,117], because affected by: (I) a 4D-to-2D data dimensionality reduction from the 4D geospatial-temporal scene-domain to the (2D, planar) image-domain, e.g., responsible of occlusion phenomena, and (II) a semantic information gap from ever-varying sub-symbolic sensory data (sensations) in the physical world to stable symbolic percepts in the mental model of the physical world (modeled world, world ontology, real-world model) [13,18,19,20,21,22,23,24]. Since it is inherently ill-posed, vision requires a priori knowledge in addition to sensory data to become better posed for numerical solution [32,33]. If the aforementioned working hypothesis holds true, then the complexity of SCBIR + SEIKD is not inferior to the complexity of vision, acknowledged to be inherently ill-posed and NP-hard. To make the inherently-ill-posed CV problem better conditioned for numerical solution, a CV system is required to comply with human visual perception. In other words, a CV system is constrained to include a computational model of human vision [13,76,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104], i.e., ‘Human vision → CV’. Hence, dependence relationship: ‘Human vision → CV ⊃ EO-IU in operating mode ⊃ NASA EO Level 2 product → ESA EO Level 2 product → [EO-SCBIR + SEIKD = AI4DIAS] → GEO-GEOSS’ becomes our working hypothesis (to be duplicated in the body text). Equivalent to a first principle (axiom, postulate), This equation can be considered the first original contribution, conceptual in nature, of this research and technological development (RTD) study.

An improved (“augmented”) and more restrictive ESA EO Level 2 product definition could be defined to account for bidirectional reflectance distribution function (BRDF) effect correction, in addition to atmospheric, topographic and adjacency effects correction, to model surface anisotropy in multi-temporal multi-angular EO image data cubes [2,68,69,74,118,119,120,121]. A surface that reflects the incident energy equally in all directions is said to be Lambertian, where reflectance is invariant with respect to illumination and viewing conditions, see Figure 3. On the contrary, a surface is said to be anisotropic when its reflectance varies with respect to illumination and/or viewing geometries. These changes are driven by the optical and structural properties of the surface material. In other words, in EO image pre-processing (enhancement) for radiometric Cal, BRDF effect correction is LC class-specific [68,69,74,118,119,120,121]. The LC class-specific task of BRDF correction is to derive, for non-Lambertian surfaces, spectral albedo (bi-hemispherical reflectance, BHR) values, defined over all directions [2,74,118,119,120,121], from either SURF or TOARF values where the Lambertian surface assumption holds [71,72,73,74,118].

Our working hypothesis, depicted in Figure 4, when translated into symbols of the standard UML for graphical modeling of object-oriented software [86] can be formulated as follows (refer to the caption of Figure 4):

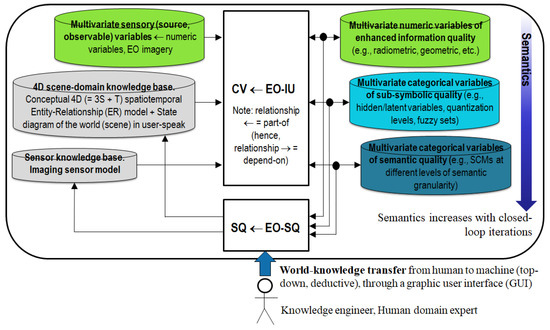

Duplicated from the caption of Figure 4 and regarded as the first original contribution of this research and technological development (RTD) study, Equation (1) shows our working hypothesis as dependence relationship, equivalent to a first principle (axiom, postulate). In more detail, Equation (1) postulates that systematic ESA EO Level 2 product generation is an inherently ill-posed CV problem, where CV ⊃ EO-IU, whose solution in operating mode is necessary-but-not-sufficient pre-condition for the yet-unaccomplished dependent problems of semantic content-based image retrieval (SCBIR) [13,110,111,112,113,114,115] and semantics-enabled information/knowledge discovery (SEIKD) in large-scale EO image data cubes, with SCBIR + SEIKD considered synonym for AI for Data and Information Access Services (AI4DIAS), where AI4DIAS is part-of a yet-unaccomplished GEOSS. The closed-loop AI4DIAS system architecture, suitable for semantics-enabled incremental learning [13,115], is sketched in Figure 5 [13].

‘Human vision → CV ⊃ EO-IU in operating mode ⊃ NASA EO Level 2 product → ESA EO Level 2 product → [EO-SCBIR + SEIKD = AI4DIAS] → GEO-GEOSS’.

Figure 5.

Artificial intelligence (AI) for Data and Information Access Services (AI4DIAS), synonym for semantics-enabled DIAS or closed-loop EO image understanding (EO-IU) for semantic querying (EO-IU4SQ) system architecture. At the Marr level of system understanding known as system design (architecture) [76], AI4DIAS is sketched as a closed-loop EO-IU4SQ system architecture, suitable for incremental semantic learning. It comprises a primary (dominant, necessary-but-not-sufficient) hybrid (combined deductive and inductive) feedback (provided with feedback loops) EO-IU subsystem in closed-loop with a secondary (dominated) hybrid feedback EO-SQ subsystem. Subset-of a computer vision (CV) system, where CV ⊃ EO-IU, the EO-IU subsystem is required to be automatic (no human–machine interaction is required by the CV system to run) and near real-time to provide the EO-SQ subsystem with useful information products, including thematic maps of symbolic quality, such as single-date ESA EO Level 2 Scene Classification Map (SCM) considered a necessary-but-not-sufficient pre-condition to semantic querying, synonym for semantics-enabled information/knowledge discovery (SEIKD) in massive multi-source EO image databases. The EO-SQ subsystem is provided with a graphic user interface (GUI) to streamline: (i) top-down knowledge transfer from-human-to-machine of an a priori mental model of the 4D geospatial-temporal real-world, (ii) high-level user- and application-specific EO semantic content-based image retrieval (SCBIR) operations. Output products generated by the closed-loop EO-IU4SQ system are expected to monotonically increase their value-added with closed-loop iterations, according to Bayesian updating where Bayesian inference is applied iteratively [122,123]: after observing some evidence, the resulting posterior probability can be treated as a prior probability and a new posterior probability computed from new evidence. One of Marr’s legacies is the notion of computational constraints required to make the typically ill-posed non-deterministic polynomial (NP)-hard problem of intelligence, encompassing vision [87], better conditioned for numerical solution [32,33]. Marr’s computational constraints reflecting properties of the world are embodied through evolution, equivalent to genotype [78], into the human visual complex system, structured as a hierarchical network of networks with feedback loops [87,88,89,90,91,92,93,96,97,98]. Marr’s computational constraints are Bayesian priors in a Bayesian inference approach to vision [76,122], where ever-varying sensations (sensory data) are transformed into stable percepts (concepts) about the world in a world model [23], to perform successfully in the world [18].

The working hypothesis (1) regards the ESA EO Level 2 information product as baseline information unit (information primitive) whose systematic generation is of paramount importance to contribute toward filling an analytic and pragmatic information gap from multi-sensor, multi-temporal and multi-angular EO big image data cubes into timely, comprehensive and operational EO data-derived VAPS, in compliance with the visionary goal of a GEOSS [3,5], unaccomplished to date. To justify our working hypothesis (1), let us introduce, first, the definition proposed for an EO-IU system to be considered in operating mode and, second, the background knowledge of vision stemming from the multidisciplinary domain of cognitive science [18,77,78,79,80,81].

Based on scientific literature [13,14,15,67,82,83,124], a CV ⊃ EO-IU system is defined in operating mode if and only if it scores “high” in every index of a minimally dependent and maximally informative (mDMI) set of EO outcome and process (OP) quantitative quality indicators (Q2Is), to be community-agreed upon for use by members of the RS community, in agreement with the GEO-CEOS QA4EO Cal/Val guidelines [3]. A proposed instantiation of an mDMI set of EO OP-Q2Is includes the following.

- (i)

- Degree of automation, inversely related to human–machine interaction, e.g., inversely related to the number of system’s free-parameters to be user-defined based on heuristics.

- (ii)

- Effectiveness, e.g., thematic mapping accuracy.

- (iii)

- Efficiency in computation time and in run-time memory occupation.

- (iv)

- Robustness (vice versa, sensitivity) to changes in input data.

- (v)

- Robustness to changes in input parameters to be user-defined.

- (vi)

- Scalability to changes in user requirements and in sensor specifications.

- (vii)

- Timeliness from data acquisition to information product generation.

- (viii)

- Costs in manpower and computer power.

- (ix)

- Value, e.g., semantic value of output products, economic value of output services, etc.

According to the Pareto formal analysis of multi-objective optimization problems, optimization of an mDMI set of OP-Q2Is is an inherently-ill posed problem in the Hadamard sense [117], where many Pareto optimal solutions lying on the Pareto efficient frontier can be considered equally good [125]. Any EO-IU system solution lying on the Pareto efficient frontier can be considered in operating mode, therefore suitable to cope with the five Vs of spatial-temporal EO big data, namely, volume, variety, veracity, velocity and value [4].

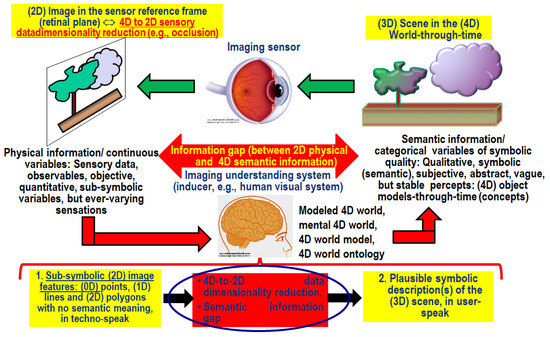

In the multidisciplinary domain of cognitive science (see Figure 2), vision is synonym for scene-from-image reconstruction and understanding [23], see Figure 6. Encompassing both biological vision and CV, vision is a cognitive (information-as-data-interpretation) problem [18], very difficult to solve because: (i) non-deterministic polynomial (NP)-hard in computational complexity [87,116], (ii) inherently ill-posed in the Hadamard sense [117], i.e., vision admits no solution, multiple solutions or, if the solution exists, the solution’s behavior changes continuously with the initial conditions [23,75]. Vision is inherently ill-posed because affected by: (I) a 4D-to-2D data dimensionality reduction, from the geospatial-temporal scene-domain to the (2D, planar) image-domain, e.g., responsible of occlusion phenomena, and (II) a semantic information gap, from ever-varying sub-symbolic sensory data (sensations) in the physical world to stable symbolic percepts in the mental model of the physical world (modeled world, world ontology, real-world model) [12,18,19,20,21,22,23,24], see Figure 6. Since it is inherently ill-posed, vision requires a priori knowledge in addition to sensory data to become better posed for numerical solution [32,33]. For example, in inherently ill-posed CV systems, a valuable source of a priori knowledge is reverse engineering primate visual perception [87,88,89,90,91,92,93], so that a CV system is constrained to include a computational model of human vision [13,76,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104], i.e., ‘Human vision → CV’, see Figure 2. According to cognitive science, AI ⊃ CV is not a problem in statistics [125], which is tantamount to saying there is no (qualitative, equivocal, nominal) semantics in (quantitative, unequivocal, numeric) sensory data [13]. These principles are implicit in the dual meaning of the word “information”, either quantitative (unequivocal) information-as-thing, typical of the Shannon data communication/transmission theory [126], or qualitative (equivocal) information-as-data-interpretation, typical of AI ⊃ CV tasks investigated by philosophical hermeneutics [18].

Figure 6.

Synonym for scene-from-image reconstruction and understanding, vision is a cognitive (information-as-data-interpretation) problem [18] very difficult to solve because: (i) non-deterministic polynomial (NP)-hard in computational complexity [87,116], and (ii) inherently ill-posed [23,75] in the Hadamard sense [117]. Vision is inherently ill-posed because affected by: (I) a 4D-to-2D data dimensionality reduction from the 4D geospatial-temporal scene-domain to the (2D, planar) image-domain, e.g., responsible of occlusion phenomena, and (II) a semantic information gap from ever-varying sub-symbolic sensory data (sensations) in the physical world-domain to stable symbolic percepts in the mental model of the physical world (modeled world, world ontology, real-world model) [13,18,19,20,21,22,23,24]. Since it is inherently ill-posed, vision requires a priori knowledge in addition to sensory data to become better posed for numerical solution [32,33].

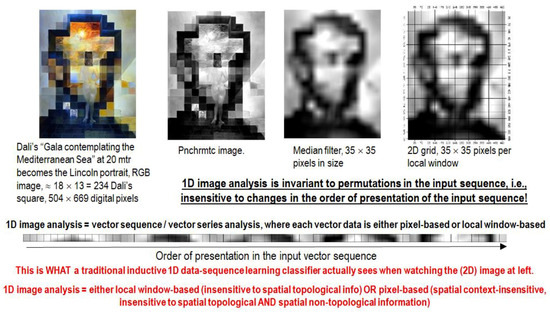

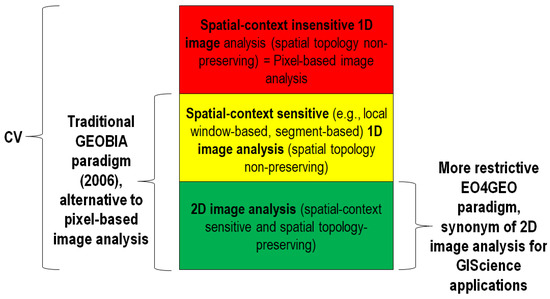

Largely oversighted by the RS and CV literature, an undisputable observation (true-fact) is that, in general, spatial information dominates color information in vision [23]. This commonsense knowledge is obvious, but not trivial. On the one hand, it may sound awkward to many readers, including RS experts and CV practitioners. On the other hand, it is acknowledged implicitly by all human beings wearing sunglasses: human panchromatic vision is nearly as effective as chromatic vision in scene-from-image reconstruction and understanding [13]. This true fact means that spatial information dominates both the 4D geospatial-temporal scene-domain and the (2D) image-domain involved with the cognitive task of vision, see Figure 6. This evidence is also acknowledged by the Tobler’s first law (TFL) of geography, familiar to geographers working in the real-world domain. The TFL of geography states that “all things are related, but nearby things are more related than distant things” [127], although certain phenomena clearly constitute exceptions [128]. Obscure to many geographers familiar with the TFL formulation, the statistical concept of spatial autocorrelation is the quantitative counterpart of the qualitative TFL of geography [13]. The relevance of spatial autocorrelation in both the 4D geospatial-temporal scene-domain and the (2D) image domain involved with vision is at the very foundation of the (GE)OBIA approach to CV, originally conceived around year 2000 by the GIScience community as a viable alternative to traditional 1D spatial-context insensitive (pixel-based) image analysis [9,10]. Unfortunately, rather than starting with background knowledge in the multi-disciplinary domain of cognitive science, the GEOBIA approach was started from scratch by a self-referencing GEOBIA sub-community within the GIScience domain, see Figure 2 [9,10,129,130]. As a consequence of its lack of interdisciplinarity, the GEOBIA community showed an increasing tendency to “re-invent the wheel” in ever-varying implementations of the same sub-optimal EO-IU system architecture, although the CV ⊂ AI communities clearly acknowledge that the key of success of an information processing system lies on outcome and process requirements specification, information/knowledge representation and system design, rather than algorithm or implementation (refer to Section 1) [13,19,76,82,83]. Based on these observations, to enforce a ‘Human vision → CV’ paradigm, see Figure 2, the following original constraint can be adopted to make an inherently ill-posed CV system better conditioned for numerical solution [13].

If a chromatic CV ⊃ EO-IU system does not down-scale seamlessly to achromatic image analysis, then it tends to ignore the paramount spatial information in favor of subordinate (secondary) spatial context-insensitive color information, such as MS signatures typically investigated in traditional pixel-based single-date or multi-temporal EO-IU algorithms. In other words, a necessary and sufficient condition for a CV ⊃ EO-IU system to fully exploit primary spatial topological information (e.g., adjacency, inclusion, etc.) and spatial non-topological information (e.g., spatial distance, angle distance) components, in addition to secondary colorimetric information, is to perform nearly as well when input with either panchromatic or color imagery.[13]

Underpinned by this background knowledge about the cognitive process of vision, an undisputable true fact is that ESA EO Level 2 product generation is an inherently ill-posed CV problem (chicken-and-egg dilemma), whose inherently ill-posed CV sub-problem is cloud and cloud–shadow quality layers detection. Since it is inherently ill-posed, ESA EO Level 2 information product generation is, first, very difficult to solve; in fact, no ESA EO Level 2 product has ever been accomplished in an operating mode by any EO data provider at the ground segment to date. Second, it requires a priori knowledge in addition to sensory data to become better conditioned for numerical solution [32,33].

Our conclusion is that systematic ESA EO Level 2 product generation, at the core of the present work, is of potential interest to relevant portions of the RS community, involved with EO big data transformation into timely, comprehensive and operational EO data-derived VAPS [3,5]. Regarded as necessary-but-not-sufficient pre-condition for a GEOSS to cope with the five Vs of EO big data analytics, see Figure 4, systematic ESA EO Level 2 product generation is still open for solution in operating mode.

As the second original contribution of this review section, the several degrees of novelty of the ESA EO Level 2 product definition (refer to Section 1) are described below.

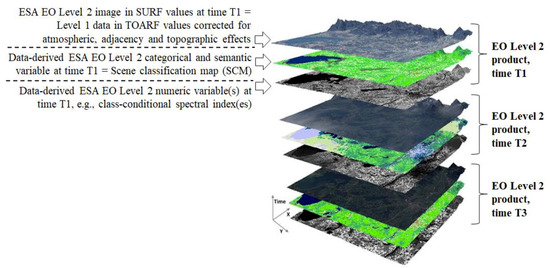

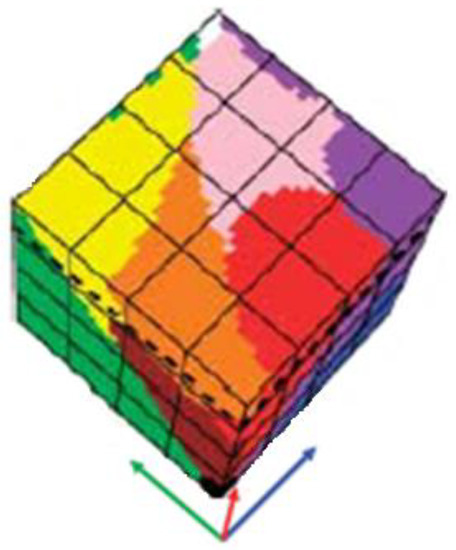

First, the ESA EO Level 2 product definition is innovative because it overtakes the traditional concept of EO data cube with an innovative EO data cube stacked with its data-derived value-adding information cube, synonym for semantics-enabled EO data cube or AI4DIAS, see Figure 7. A semantics-enabled EO data cube is alternative to existing EO data cubes, affected by the so-called data-rich information-poor (DRIP) syndrome [135], such as the existing first generation of the European Commission (EC) Data and Information Access Services (DIAS) [136,137]. Intuitively, EC-DIAS is affected by the DRIP syndrome because it is provided with no CV system in operating mode as inference engine, capable of transforming geospatial-temporal EO big data, characterized by the five Vs of volume, variety, veracity, velocity and value [4], into VAPS, starting from semantic information products, such as the ESA EO Level 2 SCM baseline product. Sketched in Figure 5, AI4DIAS complies with the Marr’s intuition that “vision goes symbolic almost immediately without loss of information” [76] (p. 343).

Figure 7.

Semantics-enabled EO big data cube, synonym for artificial intelligence (AI) for Data and Information Access Services (AI4DIAS). Each single-date EO Level 1 source image, radiometrically calibrated into top-of-atmosphere reflectance (TOARF) values and stored in the database, is automatically transformed into an ESA EO Level 2 product comprising: (i) a single-date multi-spectral (MS) image radiometrically calibrated from TOARF into surface reflectance (SURF) values, corrected for atmospheric, adjacency and topographic effects, stacked with (ii) its EO data-derived value-adding scene classification map (SCM), equivalent to a sensory data-derived categorical/nominal/qualitative variable of semantic quality, where the thematic map legend is general-purpose, user- and application-independent and comprises quality layers, such as cloud and cloud–shadow. It is eventually stacked with (iii) its EO data-derived value-adding numeric variables, such as biophysical variables, e.g., leaf area index (LAI) [2,131], class-conditional spectral indexes, e.g., vegetation class-conditional greenness index [132,133], categorical variables of sub-symbolic quality (geographic field-objects), e.g., fuzzy sets/discretization levels low/medium/high of a numeric variable, etc. [134].

Second, in our understanding the ESA EO Level 2 product definition is the new standard of EO Analysis Ready Data (ARD) format. This ARD definition is in contrast with the Committee on Earth Observation Satellites (CEOS) ARD for Land (CARD4L) product definition [138], where atmospheric effect removal is required exclusively, i.e., adjacency topographic and BRDF effect corrections are oversighted, and no EO data-derived SCM is expected as additional output product, which includes quality layers cloud and cloud–shadow. It is also in contrast with the U.S. Landsat ARD format [139,140,141,142,143], where atmospheric effect removal is required exclusively, i.e., adjacency topographic and BRDF effect corrections are omitted, and where quality layers cloud and cloud–shadow are required, but no EO data-derived SCM is provided as any additional output product. Finally, it is in contrast with the NASA Harmonized Landsat/Sentinel-2 (HLS) Project [142,143] where, first, atmospheric and BRDF effect corrections are required, but adjacency and topographic effect corrections are omitted, and, second, quality layers cloud and cloud–shadow are required, but no EO data-derived SCM is generated as additional output product. In practice, the CARD4L and U.S. Landsat ARD definitions are part-of the ESA EO Level 2 product definition. The latter encapsulates the former. When the latter is accomplished, so is the former; however, the vice versa does not hold.

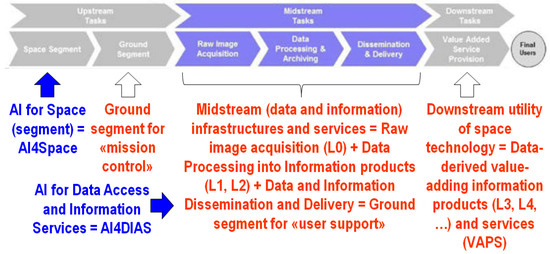

Third, we consider ESA EO Level 2 product generation a horizontal policy for background developments in support of a new era of Space Economy 4.0 [66], see Figure 8. In the notion of Space 4.0, global value chains will require both vertical and horizontal policies. Vertical policies are more directional and ‘active’, focusing on directing change, often through mission-oriented policies that require the active creation and shaping of markets. Horizontal policies are more focused on the background conditions necessary for innovation, correcting for different types of market and system failures [66].

Figure 8.

Definitions adopted in the notion of Space Economy 4.0: space segment, ground segment for «mission control» = upstream, ground segment for «user support» = midstream (infrastructures and services), downstream utility of space technology [66] (pp. 6, 57). Cable of transforming quantitative (unequivocal) big data into qualitative (equivocal) data-derived value-adding information and knowledge, AI technologies should be applied as early as possible to the “seamless innovation chain” needed for a new era of Space 4.0, starting from AI4Space applications at the space segment, which include the notion of future intelligent EO satellites (FIEOS) [144,145], and AI4DIAS applications at midstream, such as systematic ESA EO Level 2 product generation, considered synonym for Analysis Ready Data (ARD) eligible for use at downstream.

Fourth, in an “old” mission-oriented (vertical) space economy [66], the ground segment is typically divided into upstream and midstream, which are defined as the portion of ground segment for mission support and user support respectively, see Figure 8. ESA expects systematic ESA EO Level 2 product generation to be accomplished by EO data providers at midstream. Actually, systematic ESA EO Level 2 product generation should occur as early as possible in the information processing chain, e.g., in the space segment preliminary to the ground segment, in compliance with the Marr’s intuition that “vision goes symbolic almost immediatel without loss of information” [76] (p. 343). Hence, in Figure 4, ESA EO Level 2 product generation becomes synonym for AI applications for the space segment, AI4Space, where AI ⊃ CV. AI4Space comes before the application of AI techniques to the ground segment, specifically, AI4DIAS. If a CV ⊂ AI application in operating mode is implemented on-board a spaceborne platform of an EO imaging sensor to provide imagery with intelligence (semantics), then Future Intelligent EO imaging Satellites (FIEOS), conceived in the early 2000s [144], become realistic, such as future intelligent EO small satellite constellations. In EO small satellite constellations provided with no on-board radiometric Cal subsystem, improved time resolution is counterbalanced by inferior radiometric Cal capabilities, considered mandatory by the GEO-CEOS QA4EO Cal/Val guidelines [3] to guarantee interoperability of multiple platforms and sensors within and across constellations. The visionary goal of AI4Space is realistic, based on the recent announcement of an RTD project focused on future intelligent small satellite constellations. The quote is: “an Earth-i led consortium will develop a number of new Earth Observation technologies that will enable processes, such as the enhancement of image resolution, cloud-detection, change detection and video compression, to take place on-board a small satellite rather than on the ground. This will accelerate the delivery of high-quality images, video and information-rich analytics to end-users. On-board cloud detection will make the tasking of satellites more efficient and increase the probability of capturing a usable and useful image or video. To achieve these goals, ‘Project OVERPaSS‘ will implement, test and demonstrate very high-resolution optical image analysis techniques, involving both new software and dedicated hardware installed on-board small satellites to radically increase their ability to process data in space. The project will also determine the extent to which these capabilities could be routinely deployed on-board British optical imaging satellites in the future” [145].

Fifth, in the RS common practice, the potential impact of the ESA EO Level 2 product definition is relevant because it makes explicit, once and for all, the undisputable true fact that atmospheric effect correction [12,56,68,69,71,72,73,74,146], adjacency and topographic effect corrections [107,147,148,149,150,151], BRDF effect correction [68,69,74,118,119,120,121], in addition to cloud/cloud shadow quality layer detection [12,13,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63], are inherently ill-posed CV problems [23,75] in the Hadamard sense [117], whose solution does not exist or, if it exists, it is not unique or it is not robust to small changes in the initial condition, including changes to the input dataset. Since they are inherently ill-posed, atmospheric, topographic, adjacency and BRDF effect corrections, in addition to cloud/cloud shadow quality layer mapping, require a priori knowledge in addition to sensory data to become better posed for numerical solution [32,33]. This is tantamount to saying that radiometric Cal of EO imagery, encompassing ESA EO Level 2 product generation, is a chicken-and-egg dilemma [13,147]. On the one hand, no EO image understanding (classification) into a finite and discrete taxonomy of LC classes, in addition to categorical layers cloud and cloud–shadow, is possible in operating mode if radiometric Cal is not accomplished in advance, where dimensionless DNs are transformed into a physical unit of radiometric measure to guarantee data interoperability through space, time and sensors, in agreement with the GEO QA4EO Cal/Val requirements [5]. On the other hand, no radiometric Cal of EO imagery is possible without knowing in advance LC classes and nominal quality layers, such as cloud and cloud–shadow masks, since atmospheric, topographic and BRDF effect corrections are LC class-dependent. This is tantamount to saying that, to become better posed for automatic numerical solution (requiring no human–machine interaction), an inherently ill-conditioned CV algorithm for EO image correction from atmospheric, topographic, adjacency and BRDF effects needs to be run on a stratified (masked, layered, class-conditional, driven-by-prior-knowledge) basis, i.e., it should run separately on informative EO image strata (masks, layers). A stratified (masked, layered, class-conditional, driven-by-prior-knowledge) approach to CV complies with well-known criteria in the equivocal domain of information-as-data-interpretation [18].

- Well-known in statistics, the principle of statistic stratification states that “stratification will always achieve greater precision provided that the strata have been chosen so that members of the same stratum are as similar as possible in respect of the characteristic of interest” [152].

- The popular problem solving criterion known as divide-and-conquer (dividi-et-impera) [32], to be accomplished in agreement with the engineering principles of modularity, hierarchy and regularity considered necessary for scalability in structured system design [39].

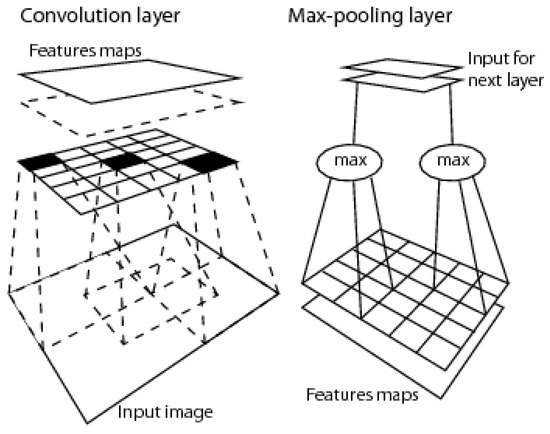

- A Bayesian approach to CV, where driven-without-knowledge (unconditional) data analytics is replaced by driven-by-(prior) knowledge (class-conditional, masked) data analytics [76,122,153,154]. In the words of Quinlan: “one of David Marr’s key is the notion of constraints. The idea that the human visual system embodies constraints that reflect properties of the world is foundational. Indeed, this general view seemed (to me) to provide a sensible way of thinking about Bayesian approaches to vision. Accordingly, Bayesian priors are Marr’s constraints. The priors/constraints have been incorporated into the human visual system over the course of its evolutionary history (according to the “levels of understanding of an information processing system” manifesto proposed by Marr and extended by Tomaso Poggio in 2012)” [153,154]. In agreement with a Bayesian approach to CV, our working hypothesis, shown in Figure 4, postulates that CV includes a computational model of human vision [13,76,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104], i.e., ‘Human vision → CV’. In practice, a CV system is constrained to comply with human visual perception. This CV requirement agrees with common sense, although it is largely oversighted in the RS and CV literature. In the words of Marcus: “there is no need for machines to literally replicate the human mind, which is, after all, deeply error prone, and far from perfect. But there remain many areas, from natural language understanding to commonsense reasoning, in which humans still retain a clear advantage. Learning the mechanisms underlying those human strengths could lead to advances in AI, even if the goal is not, and should not be, an exact replica of human brain. For many people, learning from humans means neuroscience; in my view, that may be premature. We do not yet know enough about neuroscience to literally reverse engineer the brain, per se, and may not for several decades, possibly until AI itself gets better. AI can help us to decipher the brain, rather than the other way around. Either way, in the meantime, it should certainly be possible to use techniques and insights drawn from cognitive and developmental psychology, now, in order to build more robust and comprehensive AI, building models that are motivated not just by mathematics but also by clues from the strengths of human psychology” [30]. According to Iqbal and Aggarwal: “frequently, no claim is made about the pertinence or adequacy of the digital models as embodied by computer algorithms to the proper model of human visual perception... This enigmatic situation arises because research and development in computer vision is often considered quite separate from research into the functioning of human vision. A fact that is generally ignored, is that biological vision is currently the only measure of the incompleteness of the current stage of computer vision, and illustrates that the problem is still open to solution” [155]. For example, according to Pessoa, “if we require that a CV system should be able to predict perceptual effects, such as the well-known Mach bands illusion where bright and dark bands are seen at ramp edges, then the number of published vision models becomes surprisingly small” [156], see Figure 9. In the words of Serre, “there is growing consensus that optical illusions are not a bug but a feature. I think they are a feature. They may represent edge cases for our visual system, but our vision is so powerful in day-to-day life and in recognizing objects" [97,98]. For example, to account for contextual optical illusions, Serre introduced innovative feedback connections between neurons within a layer [97,98], whereas typical DCNNs [34,35,36,37,38] feature feedforward connections exclusively. In the CV and RS common practice, constraint ‘Human vision → CV’ is a viable alternative to heuristics typically adopted to constrain inherently ill-posed inductive learning-from-data algorithms, where a priori knowledge is typically encoded by design based on empirical criteria [30,33,64]. For example, designed and trained end-to-end for either object detection [36], semantic segmentation [37] or instance segmentation [38], state-of-the-art DCNNs [34] encode a priori knowledge by design, where architectural metaparameters must be user-defined based on heuristics. In inductive DCNNs trained end-to-end, number of layers, number of filters per layer, spatial filter size, inter-filter spatial stride, local filter size for spatial pooling, spatial pooling filter stride, etc., are typically user-defined based on empirical trial-and-error strategies. As a result, inductive DCNNs work as heuristic black boxes [30,64], whose opacity contradicts the well-known engineering principles of modularity, regularity and hierarchy typical of scalable systems [39]. In general, inductive learning-from-data algorithms are inherently semi-automatic (requiring system’s free-parameters to be user-defined based on heuristics, including architectural metaparameters) and site-specific (data-dependent) [2]. "No Free Lunch” theorems have shown that inductive learning-from-data algorithms cannot be universally good [40,41].

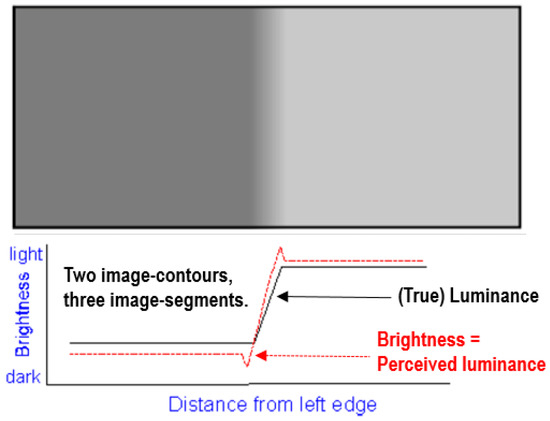

Figure 9. Mach bands illusion [13,156]. In black: Ramp in luminance units across space. In red: Brightness (perceived luminance) across space. One of the best-known brightness illusions, where brightness is defined as a subjective aspect of vision, i.e., brightness is the perceived luminance of a surface, is the psychophysical phenomenon of the Mach bands: where a luminance (radiance, intensity) ramp meets a plateau, there are spikes of brightness, although there is no discontinuity in the luminance profile. Hence, human vision detects two boundaries, one at the beginning and one at the end of the ramp in luminance. Since there is no discontinuity in luminance where brightness is spiking, the Mach bands effect is called a visual “illusion”. Along a ramp, no image-contour is perceived by human vision, irrespective of the ramp’s local contrast (gradient) in range (0, +∞). In the words of Pessoa, “if we require that a brightness model should at least be able to predict Mach bands, the bright and dark bands which are seen at ramp edges, the number of published models is surprisingly small” [156]. In 2D signal (image) processing, the important lesson to be learned from the Mach bands illusion is that local variance, contrast and first-order derivative (gradient) are statistical features (data-derived numeric variables) computed locally in the (2D) image-domain not suitable to detect image-objects (segments, closed contours) required to be perceptually “uniform” (“homogeneous”) in agreement with human vision. In other words, these popular local statistics, namely, local variance, contrast and first-order derivative (gradient), are not suitable visual features if detected image-segments/image-contours are required to be consistent with human visual perception, including ramp-edge detection. This straightforward (obvious), but not trivial observation is at odd with a large portion of the existing computer vision (CV) and remote sensing (RS) literature, where many semi-automatic image segmentation/image-contour detection algorithms are based on thresholding the local variance, contrast or first-order gradient, e.g., [157,158,159], where a system’s free-parameter for thresholding image-objects or image-contours must be user-defined in range ∈ (0, +∞) based on heuristics.

Figure 9. Mach bands illusion [13,156]. In black: Ramp in luminance units across space. In red: Brightness (perceived luminance) across space. One of the best-known brightness illusions, where brightness is defined as a subjective aspect of vision, i.e., brightness is the perceived luminance of a surface, is the psychophysical phenomenon of the Mach bands: where a luminance (radiance, intensity) ramp meets a plateau, there are spikes of brightness, although there is no discontinuity in the luminance profile. Hence, human vision detects two boundaries, one at the beginning and one at the end of the ramp in luminance. Since there is no discontinuity in luminance where brightness is spiking, the Mach bands effect is called a visual “illusion”. Along a ramp, no image-contour is perceived by human vision, irrespective of the ramp’s local contrast (gradient) in range (0, +∞). In the words of Pessoa, “if we require that a brightness model should at least be able to predict Mach bands, the bright and dark bands which are seen at ramp edges, the number of published models is surprisingly small” [156]. In 2D signal (image) processing, the important lesson to be learned from the Mach bands illusion is that local variance, contrast and first-order derivative (gradient) are statistical features (data-derived numeric variables) computed locally in the (2D) image-domain not suitable to detect image-objects (segments, closed contours) required to be perceptually “uniform” (“homogeneous”) in agreement with human vision. In other words, these popular local statistics, namely, local variance, contrast and first-order derivative (gradient), are not suitable visual features if detected image-segments/image-contours are required to be consistent with human visual perception, including ramp-edge detection. This straightforward (obvious), but not trivial observation is at odd with a large portion of the existing computer vision (CV) and remote sensing (RS) literature, where many semi-automatic image segmentation/image-contour detection algorithms are based on thresholding the local variance, contrast or first-order gradient, e.g., [157,158,159], where a system’s free-parameter for thresholding image-objects or image-contours must be user-defined in range ∈ (0, +∞) based on heuristics.

Although it is largely ignored by large portions of the RS community, the unequivocal true fact that inherently ill-posed CV ⊃ EO-IU algorithms for radiometric Cal of DNs into SURF or surface albedo values do require EO image classification to be performed in advance is implicitly confirmed by existing open source or commercial software toolboxes for EO image enhancement (pre-processing), critically reviewed hereafter.

Supported by NASA, the baseline of the U.S. Landsat ARD format [139,140,141,142,143] is atmospheric effect removal by the open source Landsat-4/5/7 Ecosystem Disturbance Adaptive Processing System (LEDAPS). In LEDAPS, exclusion masks for water, cloud, shadow and snow surface types were detected by an over-simplistic set of prior knowledge-based spectral decision rules applied per pixel [146]. Quantitative analyses of LEDAPS products led by its authors revealed that these exclusion masks were prone to errors, to be corrected in future LEDAPS releases [146]. The same considerations hold for the Landsat 8 OLI/TIRS-specific Landsat Surface Reflectance Code (LaSRC) [160] adopted by the U.S. Landsat ARD format [139,140,141,142,143]. Unfortunately, suitable for testing or Val purposes, a multi-level image consisting of exclusion masks has never been generated as standard output by either LEDAPS or LaSRC. To detect quality layers cloud and cloud–shadow, in addition to snow/ice pixels, recent versions of LEDAPS and LaSRC adopted the open source C Function of Mask (CFMask) algorithm [139,140]. CFMask was derived from the open source Function of Mask (FMask) algorithm [58,59], translated into the C programming language to facilitate its implementation in a production environment. Unfortunately, to date, in a recent comparison of cloud and cloud–shadow detectors, those implemented in LEDAPS scored low among alternative solutions [62]. By the way, potential users of U.S. Landsat ARD imagery are informed by USGS in advance about typical CFMask artifacts [63]. Like other cloud detection algorithms [61,62], CFMask may have difficulties over bright surface types such as building tops, beaches, snow/ice, sand dunes, and salt lakes. Optically thin clouds will always be challenging to identify and have a higher probability of being omitted by the U.S. Landsat ARD algorithm. In addition, the algorithm performance has only been validated for cloud detection, and to a lesser extent for cloud shadows. No rigorous evaluation of the snow/ice detection has ever been performed [63].

Transcoded into CFMask by the U.S. Landsat ARD processor, the open source Fmask algorithm for cloud, cloud–shadow and snow/ice detection was originally developed for single-date 30 m resolution 7-band (from visible blue, B, to thermal InfraRed, TIR) Landsat-5/7/8 MS imagery, which includes a thermal band as key input data requirement [58]. In recent years, FMask was extended to 10 m/20 m resolution Sentinel-2 MS imagery [59], featuring no thermal band, and to Landsat image time-series (multiTemporal Mask, TMask) [60]. For more details about the FMask software design and implementation, refer to the further Section 3.

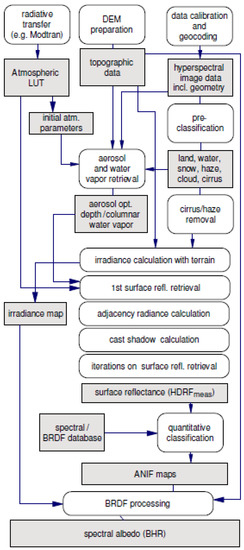

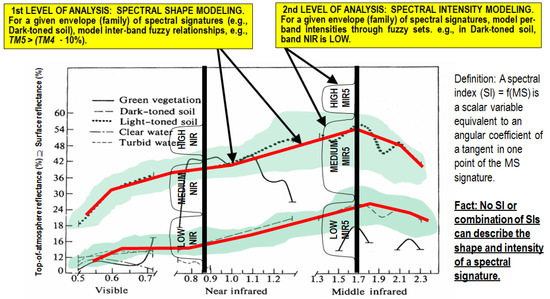

In the Atmospheric/Topographic Correction for Satellite Imagery (ATCOR) commercial software product, several per-pixel (spatial context-insensitive) deductive spectral rule-based decision trees are implemented for use in different stages of an EO image enhancement pipeline [71,72,73,74,161,162], see Figure 10. According to Richter and Schläpfer [71,72], “pre-classification as part of the atmospheric correction has a long history, e.g., in the NASA’s processing chain for MODIS” [57], also refer to [12,56]. One of the ATCOR’s prior knowledge-based per-pixel decision trees delivers as output a haze/cloud/water (and snow) classification mask file (“image_hcw.bsq”), see Table 3. In addition, ATCOR includes a so-called prior knowledge-based decision tree for Spectral Classification of surface reflectance signatures (SPECL) [73], see Table 4. Unfortunately, SPECL has never been tested by its authors in the RS literature, although it has been validated by independent means [161,162].

Figure 10.