Towards HD Maps from Aerial Imagery: Robust Lane Marking Segmentation Using Country-Scale Imagery

Abstract

1. Introduction

1.1. HD Maps for Ego Positioning

1.2. HD Maps for Scene Understanding

1.3. Experiments on Public Roads Using HD Maps

1.4. Descriptive Parameters, Metrics and Content of HD Maps

1.5. HD Maps and Aerial/Satellite Imagery, Literature Review

1.6. Aim of This Paper

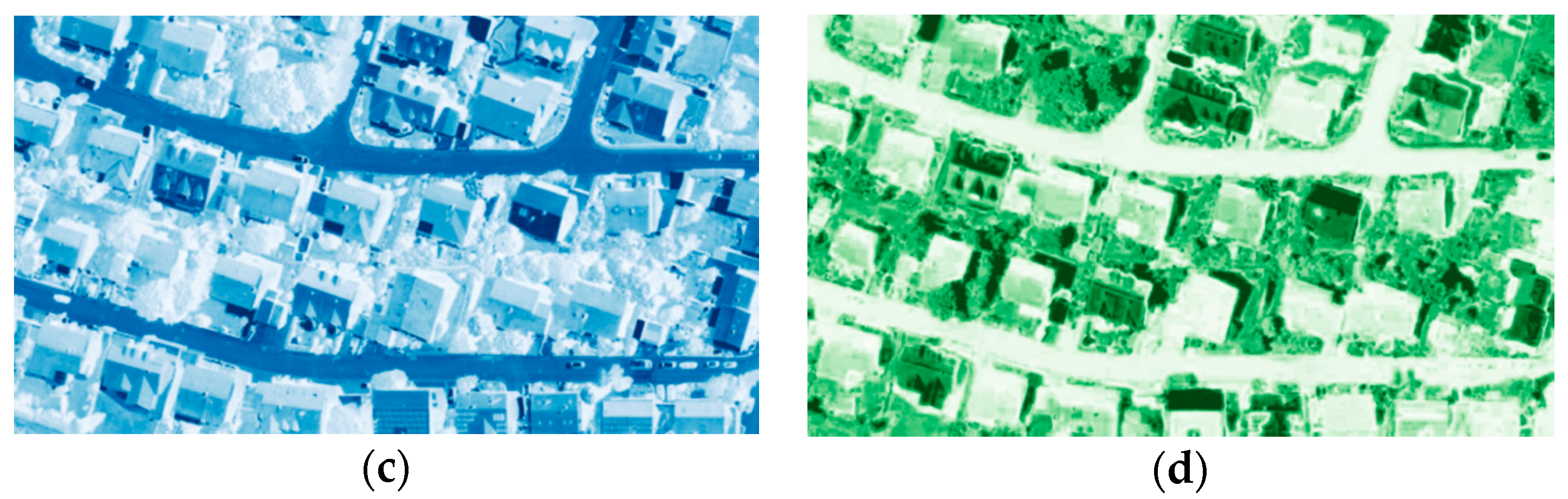

2. Materials/Image Data

3. Methodology

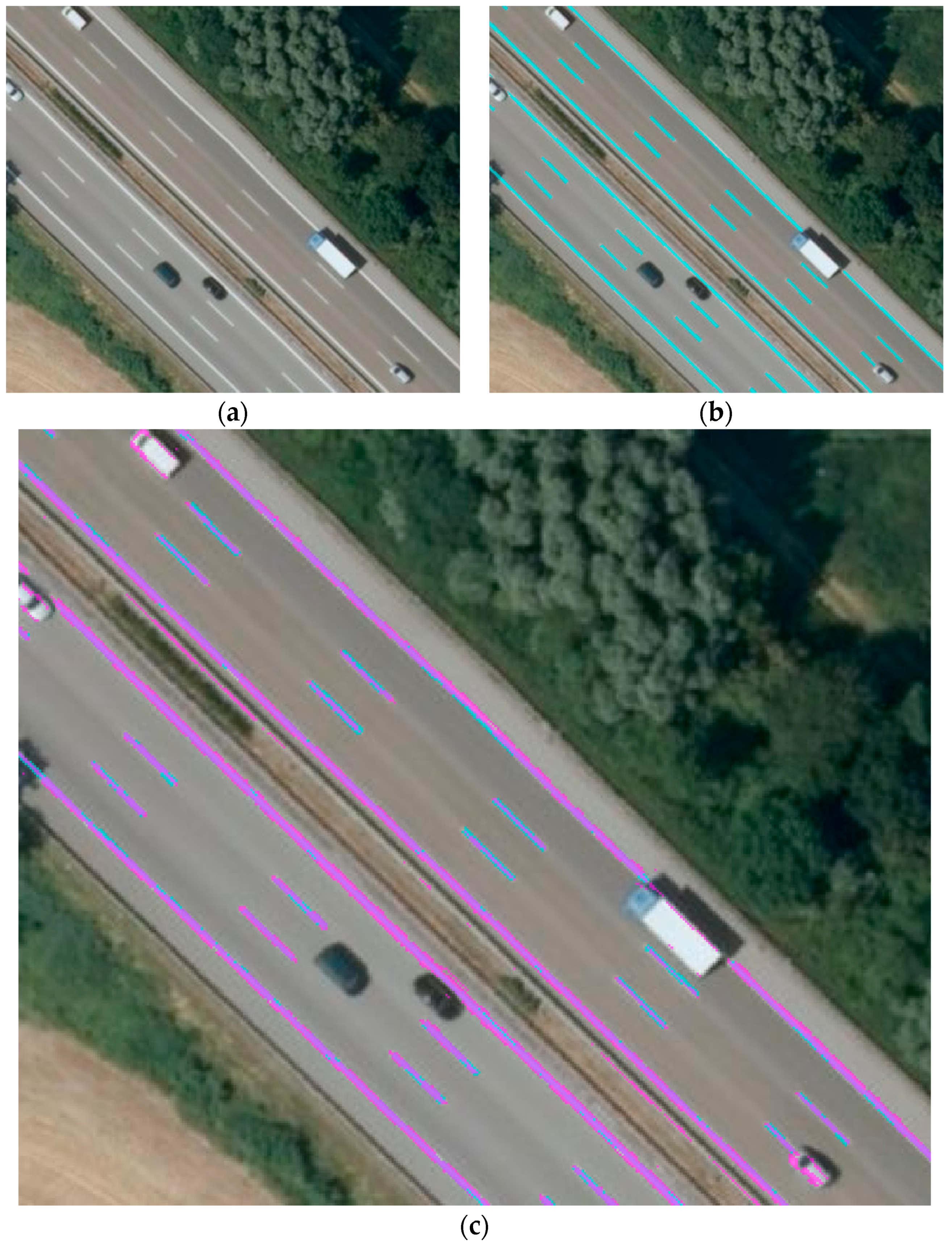

3.1. Raw Image Segmentation

3.2. Image Classification—Lane Marking Determination

4. Results and Discussion

4.1. Results

4.2. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Scene | Accuracy | Sensitivity | IoU |
|---|---|---|---|
| 1 | 0.99 | 0.54 | 0.50 |
| 2 | 0.99 | 0.65 | 0.60 |
| 3 | 0.99 | 0.62 | 0.59 |
| Mean | 0.99 | 0.60 | 0.56 |
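The three metrics in the table can be read as pixel-wise scores for the lane-marking class. The sketch below assumes the standard definitions (accuracy = (TP + TN) / all pixels, sensitivity = TP / (TP + FN), IoU = TP / (TP + FP + FN)). The function names `confusion_counts` and `segmentation_metrics` and the toy masks are hypothetical and do not come from the paper's code. The sketch also checks that averaging the three per-scene values reproduces the Mean row to two decimals. Whether the authors averaged per scene or pooled all pixels is not stated here.

```python
from statistics import mean


def confusion_counts(pred, truth):
    """Count TP, FP, FN, TN for two equally sized binary masks.

    Hypothetical helper: masks are flat sequences of 0/1 values,
    where 1 marks a lane-marking pixel and 0 marks background.
    """
    if len(pred) != len(truth):
        raise ValueError("prediction and ground truth must have the same size")
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    tn = len(pred) - tp - fp - fn
    return tp, fp, fn, tn


def segmentation_metrics(pred, truth):
    """Pixel-wise accuracy, sensitivity and IoU (assumed standard definitions)."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    # Sensitivity (recall) of the lane-marking class; TN does not enter.
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    # Intersection over union (Jaccard index) of the lane-marking class.
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"accuracy": accuracy, "sensitivity": sensitivity, "iou": iou}


# Toy example: 100 pixels, 10 of them lane markings.
# The prediction finds 6 of the 10 and adds 2 false positives.
truth = [1] * 10 + [0] * 90
pred = [1] * 6 + [0] * 4 + [1] * 2 + [0] * 88
print(segmentation_metrics(pred, truth))
# prints {'accuracy': 0.94, 'sensitivity': 0.6, 'iou': 0.5}

# Per-scene values copied from the table; averaging them reproduces the Mean row.
scenes = {
    1: {"accuracy": 0.99, "sensitivity": 0.54, "iou": 0.50},
    2: {"accuracy": 0.99, "sensitivity": 0.65, "iou": 0.60},
    3: {"accuracy": 0.99, "sensitivity": 0.62, "iou": 0.59},
}
mean_row = {
    key: round(mean(s[key] for s in scenes.values()), 2)
    for key in ("accuracy", "sensitivity", "iou")
}
print(mean_row)
# prints {'accuracy': 0.99, 'sensitivity': 0.6, 'iou': 0.56}
```

Lane-marking pixels typically cover only a small share of an aerial image tile, so true negatives dominate the accuracy term. This explains why accuracy stays near 0.99 in every scene while sensitivity and IoU, which ignore true negatives, are much lower. The toy masks show the same effect on a smaller scale: 94% accuracy with an IoU of only 0.5.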

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Fischer, P.; Azimi, S.M.; Roschlaub, R.; Krauß, T. Towards HD Maps from Aerial Imagery: Robust Lane Marking Segmentation Using Country-Scale Imagery. ISPRS Int. J. Geo-Inf. 2018, 7, 458. https://doi.org/10.3390/ijgi7120458
