3D Visualization of Trees Based on a Sphere-Board Model

Abstract

1. Introduction

1.1. Tree Modeling

1.1.1. Rule-Based Methods

1.1.2. Image-Based Methods

1.2. Tree Rendering

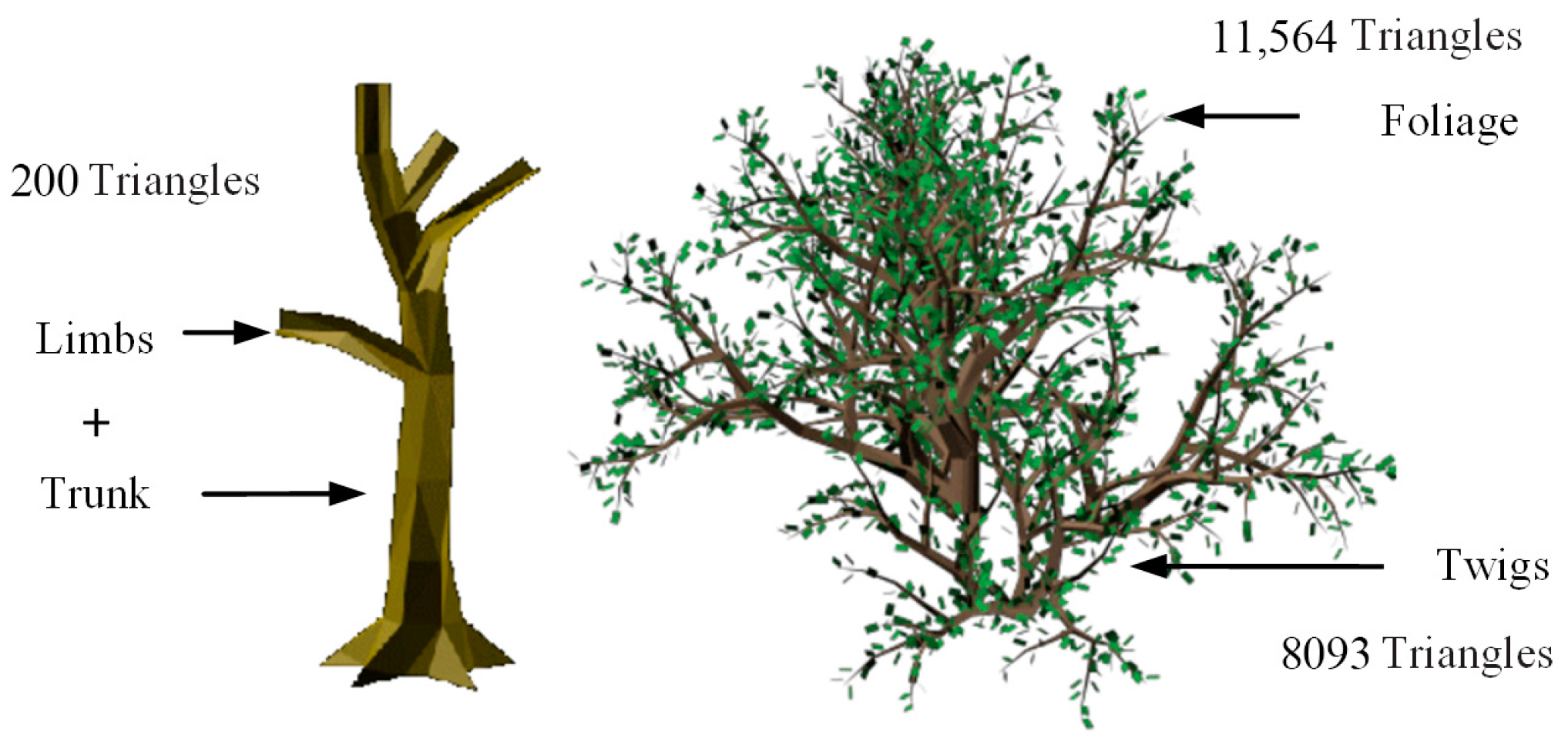

1.2.1. Graphics-Based Methods

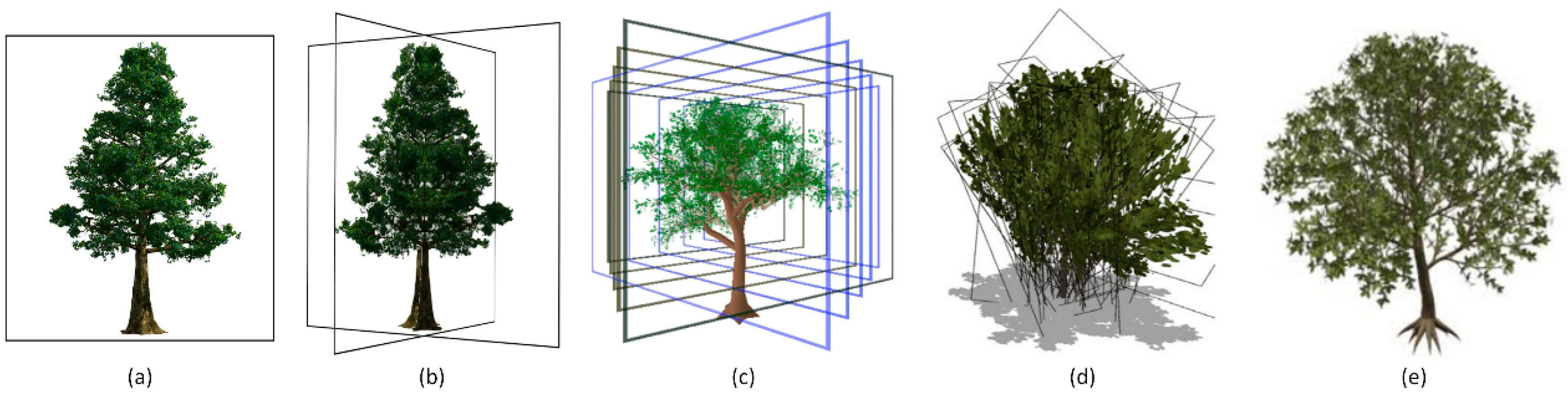

1.2.2. Image-Based Methods

1.3. Texture Synthesis

2. Sphere-Board-Based Tree Modeling

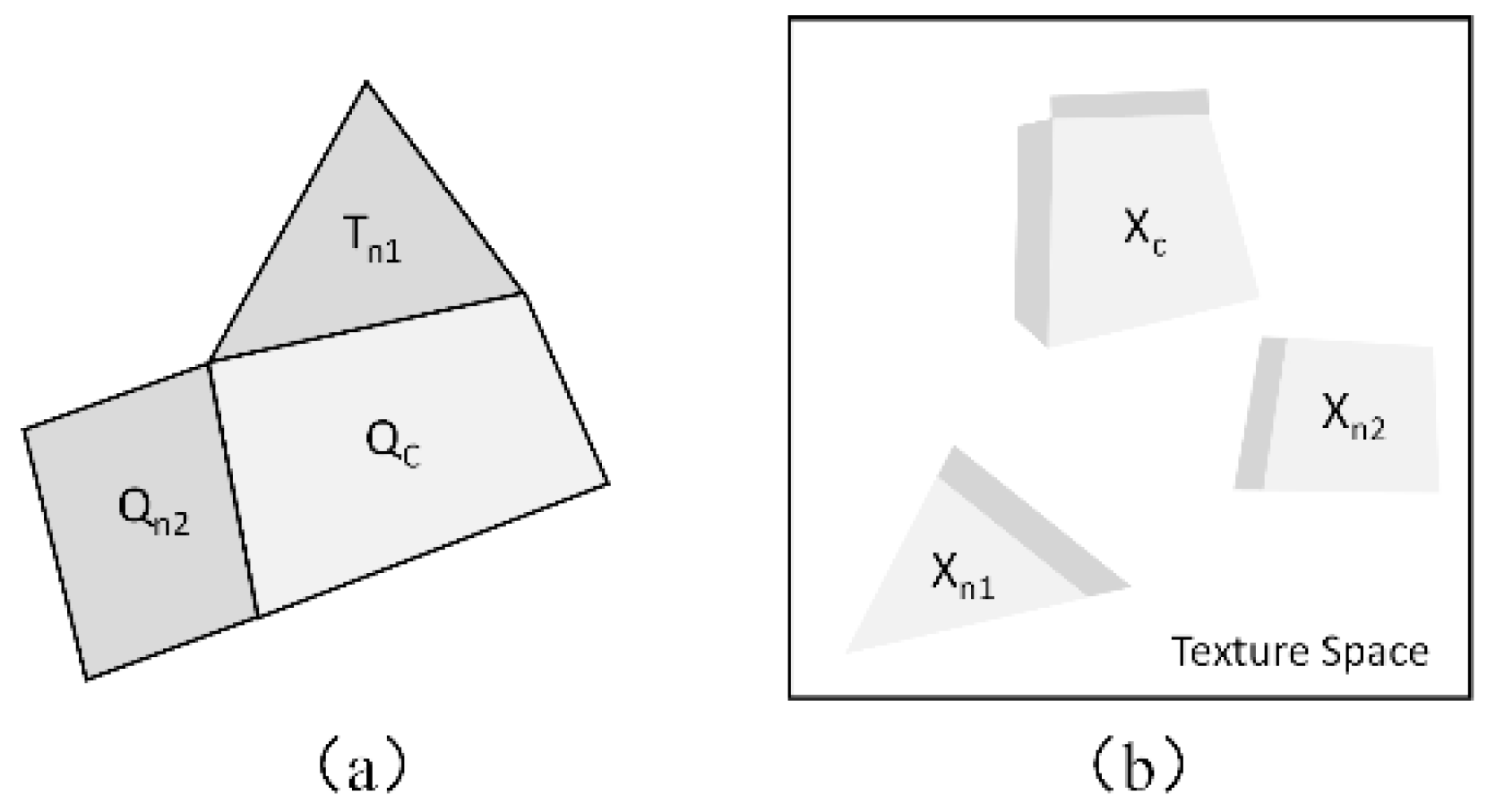

2.1. Billboard to Sphere-Board

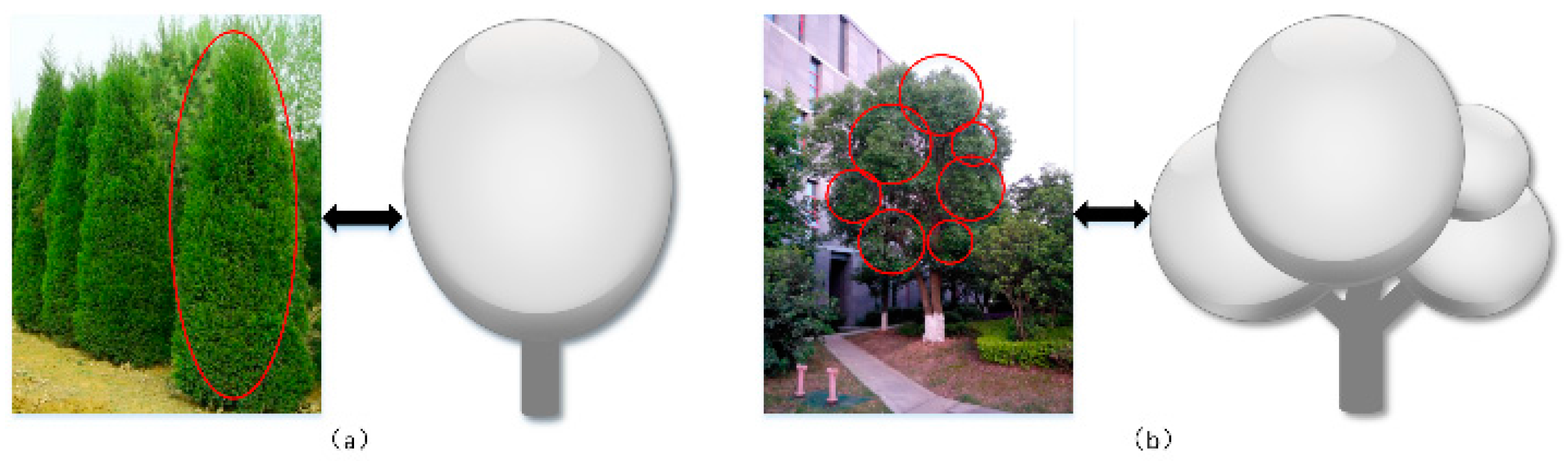

2.2. Applicable Tree Styles

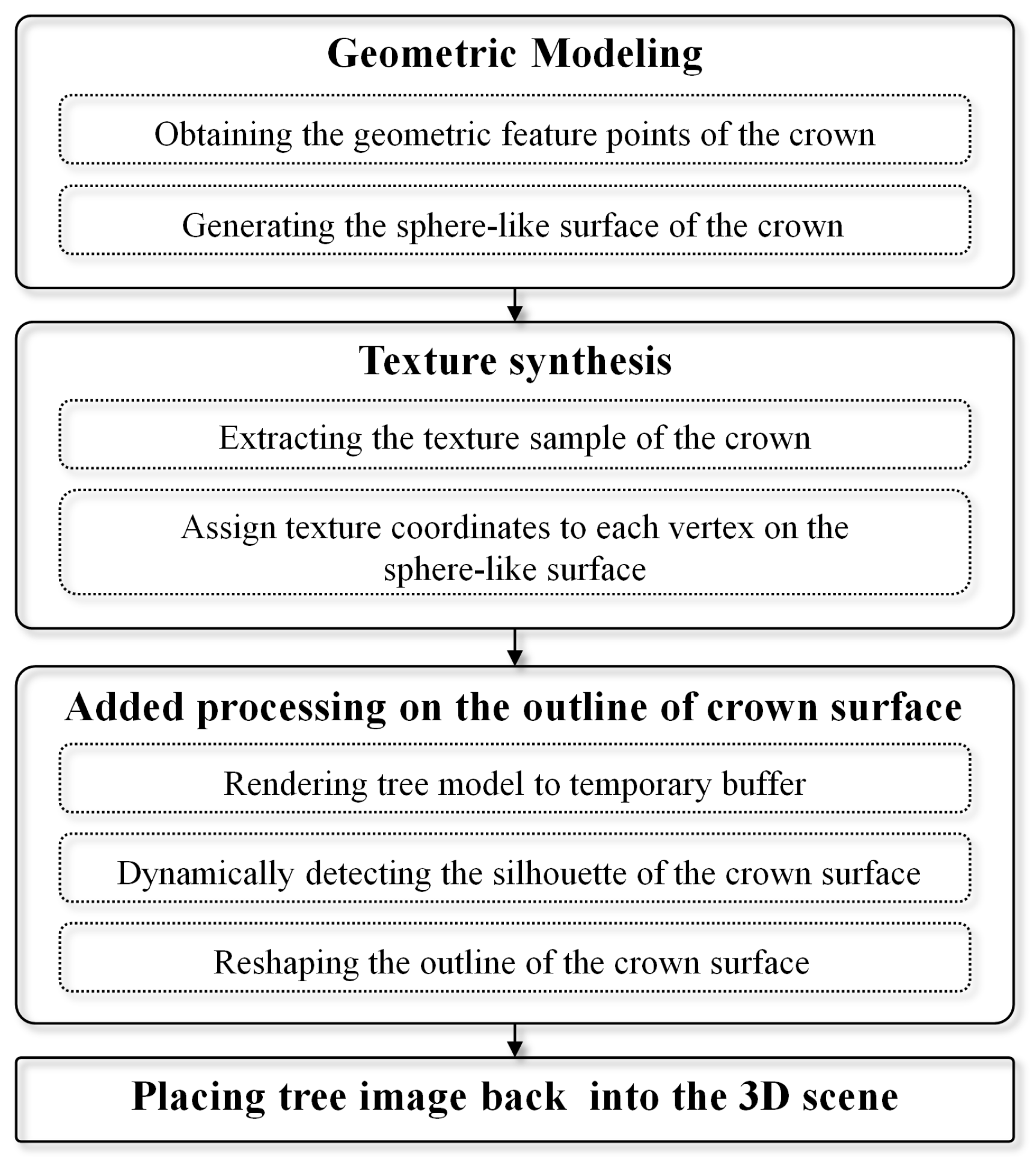

2.3. Process of the Technique

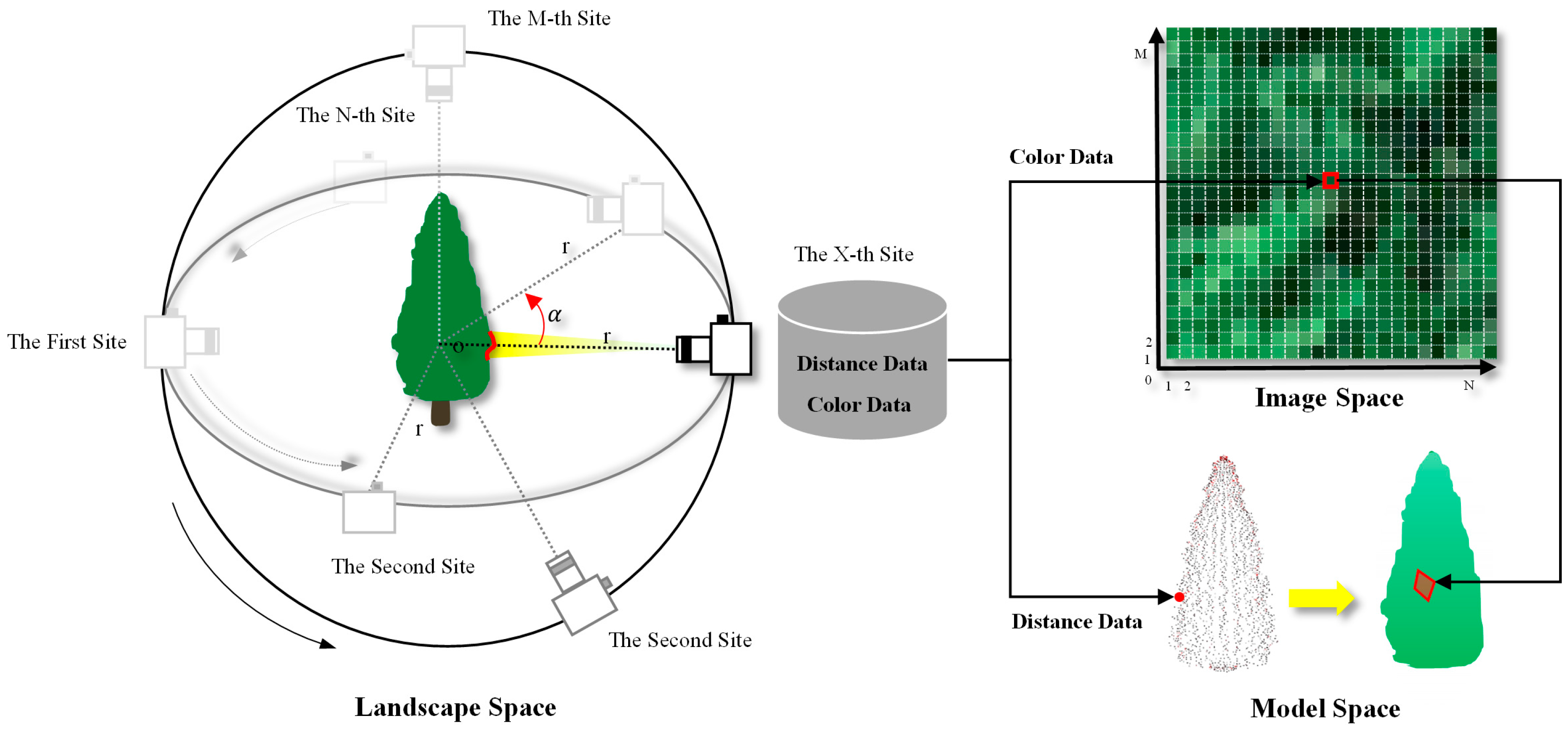

3. Case Study of Sabina Chinensis

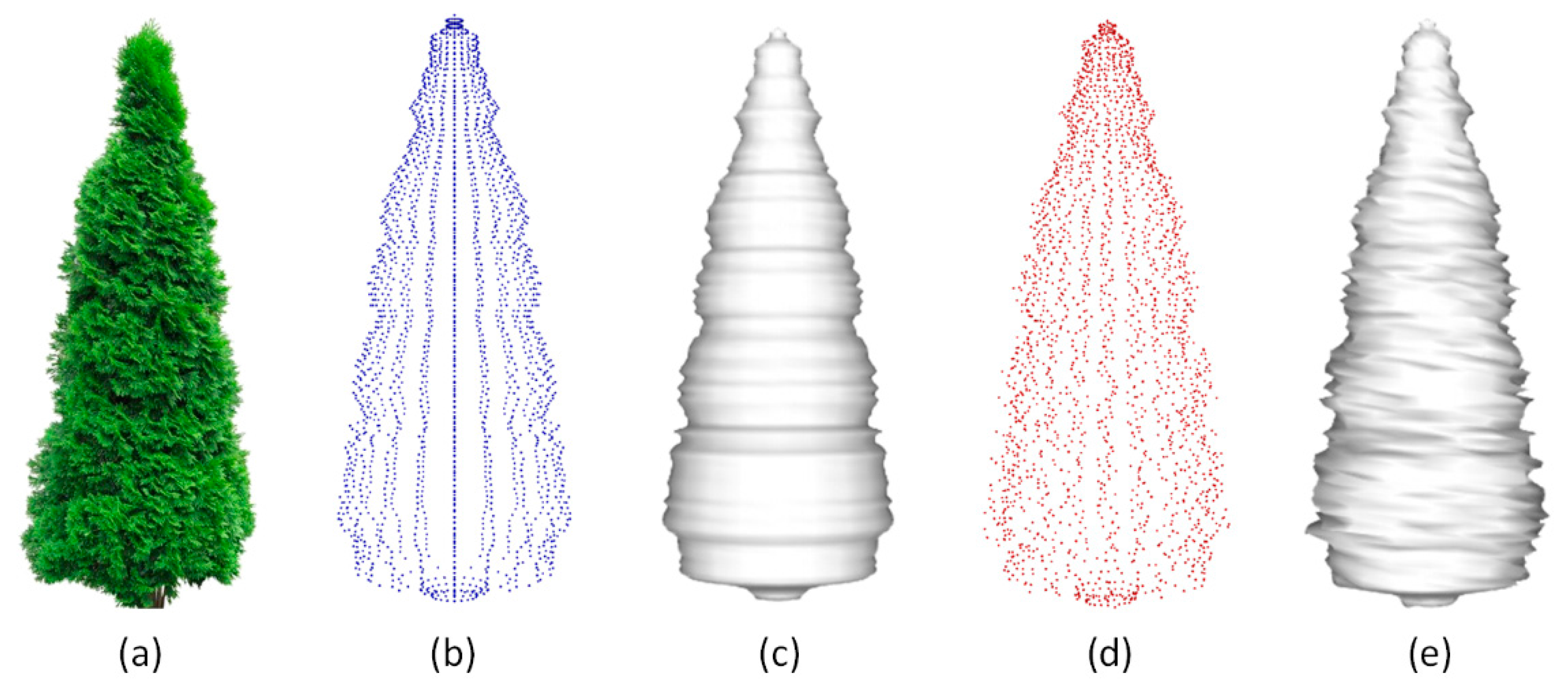

3.1. Geometric Modeling

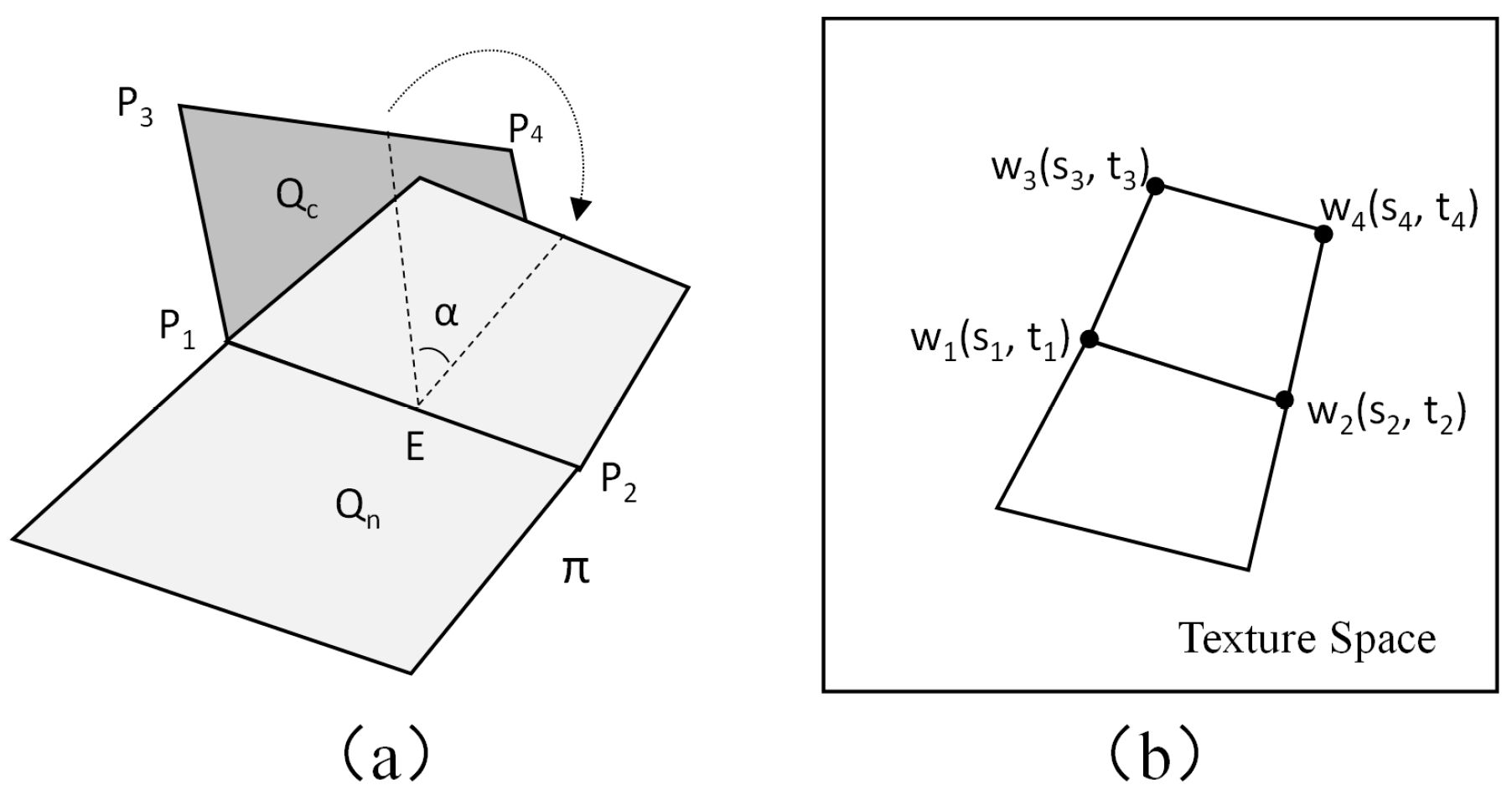

3.2. Texture Synthesis

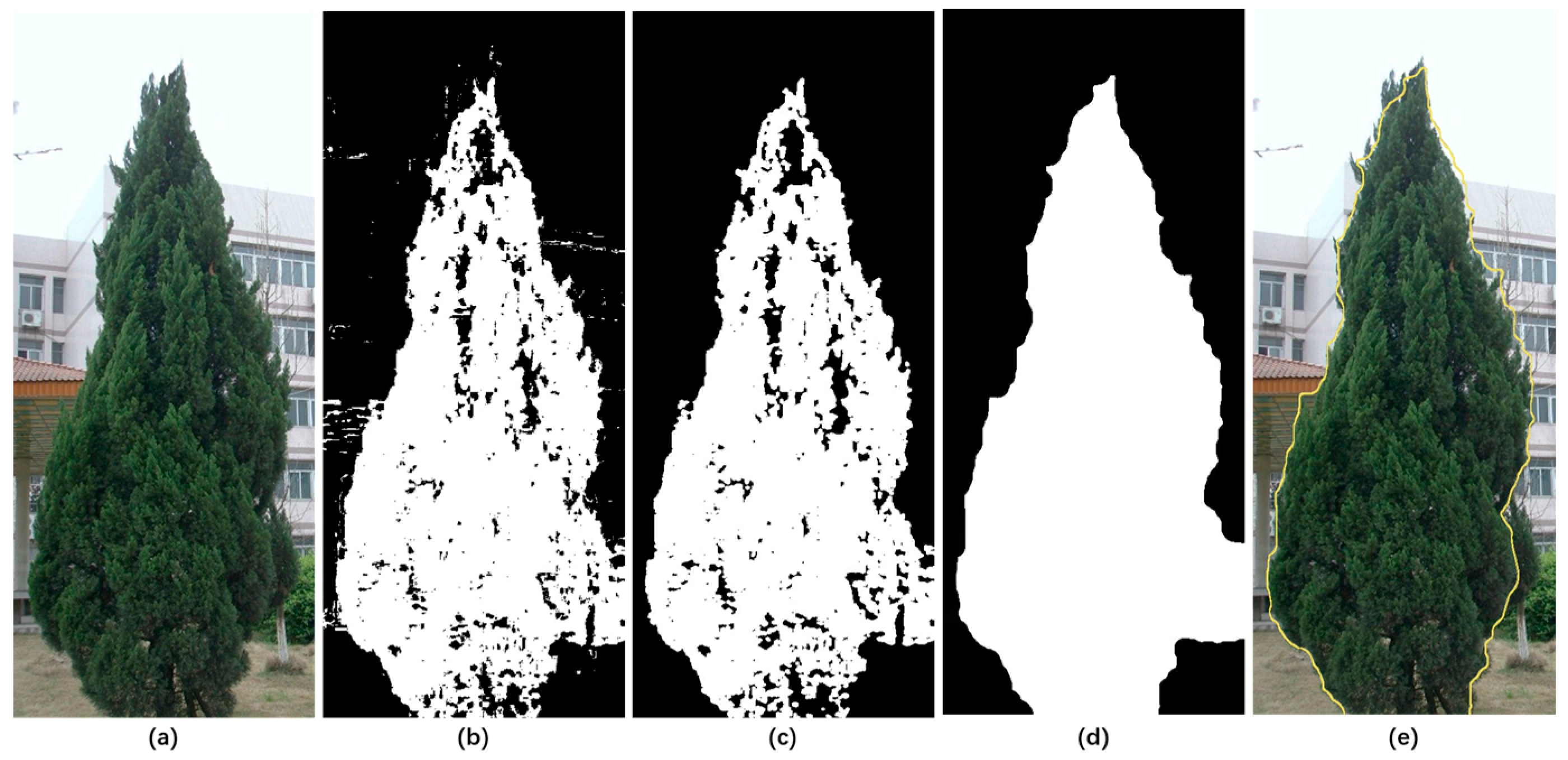

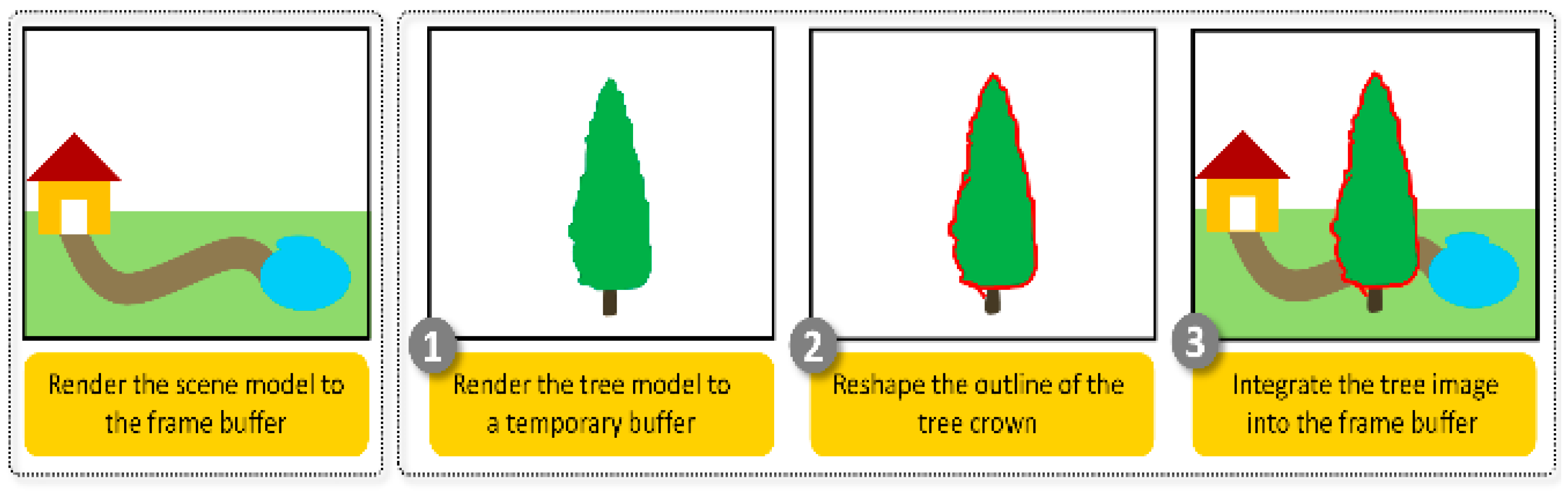

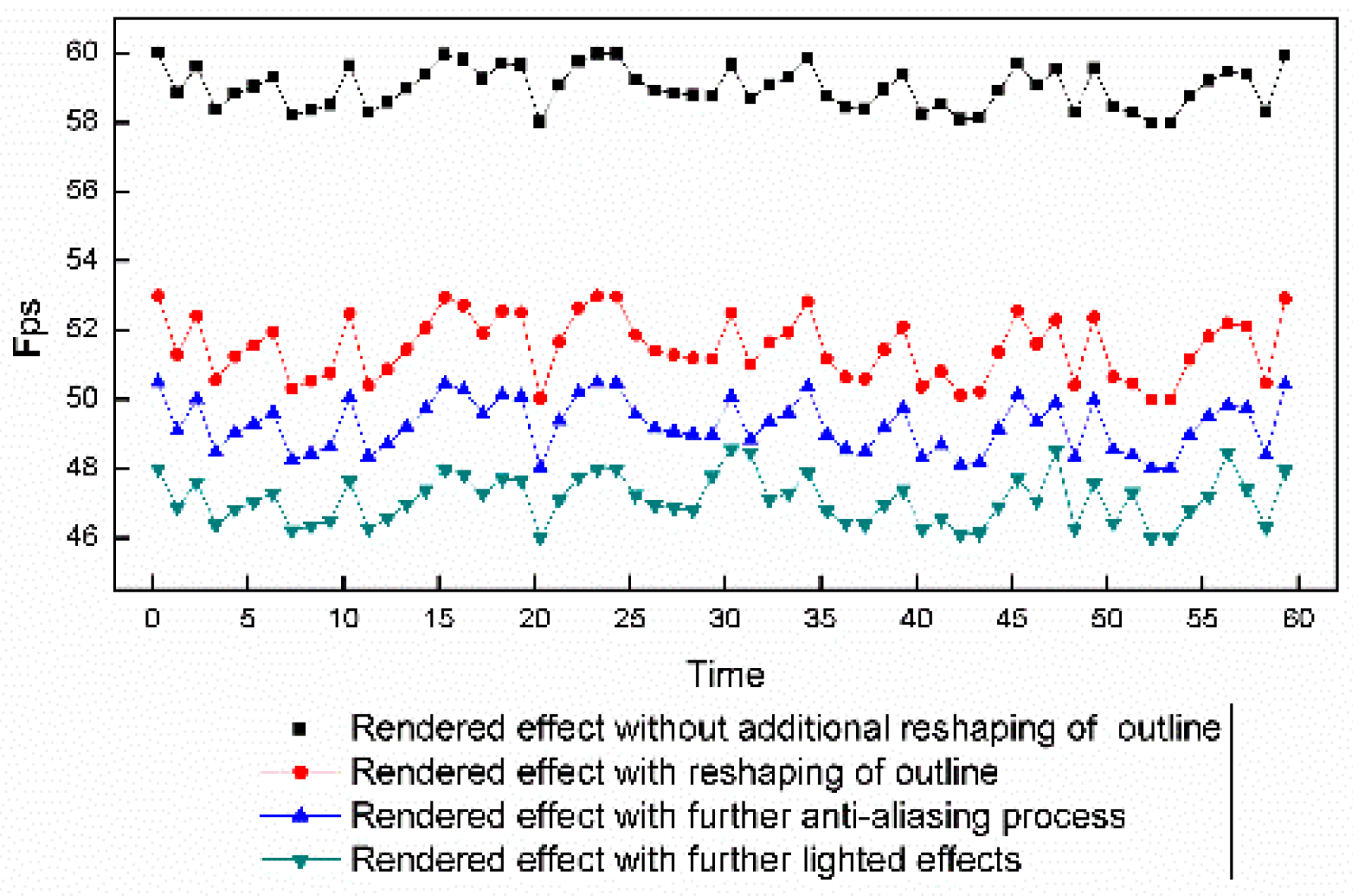

3.3. Reshaping of the Crown Surface Outline

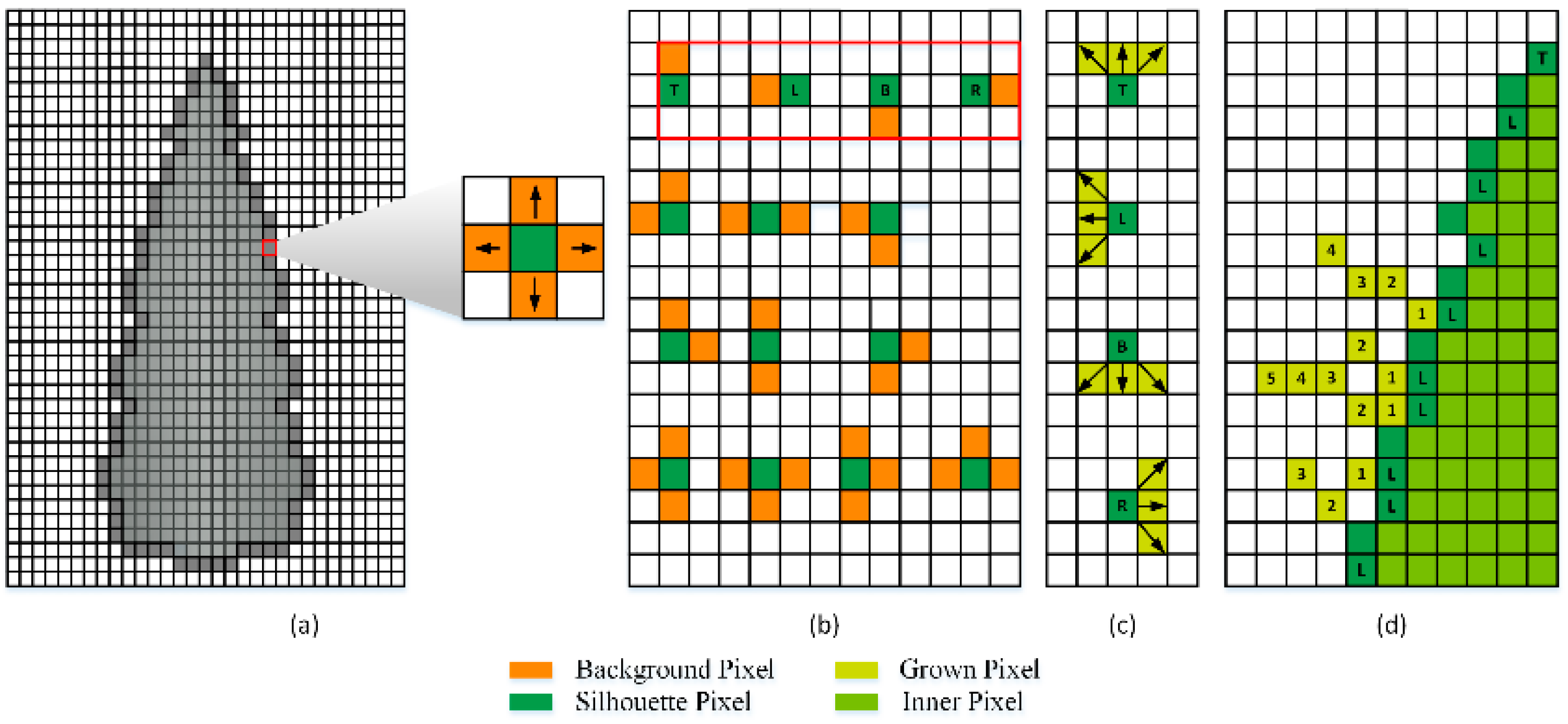

- Silhouette pixels: When a tree is rendered into a temporary buffer, the depth values of the tree area differ distinctly from those of the background area, which makes it possible to identify the tree area. A pixel in the tree area that has one or more neighboring background pixels is considered a silhouette pixel (Figure 13a).

- Root pixels: Silhouette pixels with exactly one neighboring background pixel (marked with a red box in Figure 13b) are selected as root pixels. New pixels are grown randomly from them to simulate the irregular, burred edge of the tree crown.

- Grown pixels: The new pixels grown from the root pixels are called grown pixels. Each is described by a triple (direction, color, depth). The growth direction is chosen at random from three predefined directions (Figure 13c), and each grown pixel inherits the color and depth value of its root pixel.

- Growth length: The total number of pixels grown from a root pixel is termed the growth length (GL), which is limited to a predefined range (Qmin, Qmax) (Figure 13d). This range varies with the depth value of the root pixel, so root pixels at different distances from the viewer may have different growth limits.
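The four definitions above can be strung together into a small pixel-growth pass over the rendered buffers. The Python sketch below is only an illustration under stated assumptions, not the authors' implementation. It assumes a depth buffer cleared to 1.0 for the background (`BACKGROUND_DEPTH`) and a 4-connected neighborhood. It also takes the outward direction plus its two diagonal neighbors as the "three predefined" growth directions and applies a hypothetical linear rule in `growth_range` that shortens growth for more distant roots. All function names and parameter values are hypothetical.

```python
import random

BACKGROUND_DEPTH = 1.0  # assumed clear value of the depth buffer (far plane)
# 4-neighborhood offsets (dy, dx); the neighborhood type is an assumption.
NEIGHBORS = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def is_background(depth, y, x):
    return depth[y][x] >= BACKGROUND_DEPTH


def background_neighbors(depth, y, x):
    """Offsets of the in-bounds 4-neighbors that belong to the background."""
    h, w = len(depth), len(depth[0])
    result = []
    for dy, dx in NEIGHBORS:
        ny, nx = y + dy, x + dx
        if 0 <= ny < h and 0 <= nx < w and is_background(depth, ny, nx):
            result.append((dy, dx))
    return result


def silhouette_pixels(depth):
    """Tree pixels with one or more neighboring background pixels."""
    h, w = len(depth), len(depth[0])
    return [(y, x) for y in range(h) for x in range(w)
            if not is_background(depth, y, x) and background_neighbors(depth, y, x)]


def root_pixels(depth):
    """Silhouette pixels with exactly one background neighbor,
    paired with the outward offset pointing at that neighbor."""
    roots = []
    for y, x in silhouette_pixels(depth):
        bg = background_neighbors(depth, y, x)
        if len(bg) == 1:
            roots.append((y, x, bg[0]))
    return roots


def growth_directions(outward):
    """Hypothetical set of three directions: outward plus its two diagonals."""
    dy, dx = outward
    if dy == 0:  # outward direction is horizontal
        return [(0, dx), (-1, dx), (1, dx)]
    return [(dy, 0), (dy, -1), (dy, 1)]


def growth_range(root_depth, q_min=2, q_max=6):
    """Hypothetical depth-dependent (Qmin, Qmax): farther roots grow less.
    Depth is assumed to be normalized to [0, 1)."""
    scale = 1.0 - root_depth
    return max(1, round(q_min * scale)), max(1, round(q_max * scale))


def reshape_outline(depth, color, rng=random):
    """Grow random pixel chains from every root pixel, modifying the buffers
    in place. Returns the number of background pixels that were painted."""
    h, w = len(depth), len(depth[0])
    grown = 0
    for y, x, outward in root_pixels(depth):  # roots are found once, up front
        lo, hi = growth_range(depth[y][x])
        length = rng.randint(lo, hi)  # growth length GL drawn from [lo, hi]
        root_depth, root_color = depth[y][x], color[y][x]
        cy, cx = y, x
        for _ in range(length):
            dy, dx = rng.choice(growth_directions(outward))  # redrawn each step
            cy, cx = cy + dy, cx + dx
            if not (0 <= cy < h and 0 <= cx < w):
                break  # chain left the buffer
            if is_background(depth, cy, cx):  # never overwrite tree pixels
                depth[cy][cx] = root_depth  # grown pixel shares the root depth
                color[cy][cx] = root_color  # ... and the root color
                grown += 1
    return grown
```

In this sketch the direction is redrawn at every step, which is one reading of the text; it makes each chain wander away from the crown and produces a jagged, burr-like fringe. Because grown pixels inherit the root's depth value, they can be depth-tested against the rest of the scene like ordinary tree pixels.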

3.4. Integration of the Rendering Results

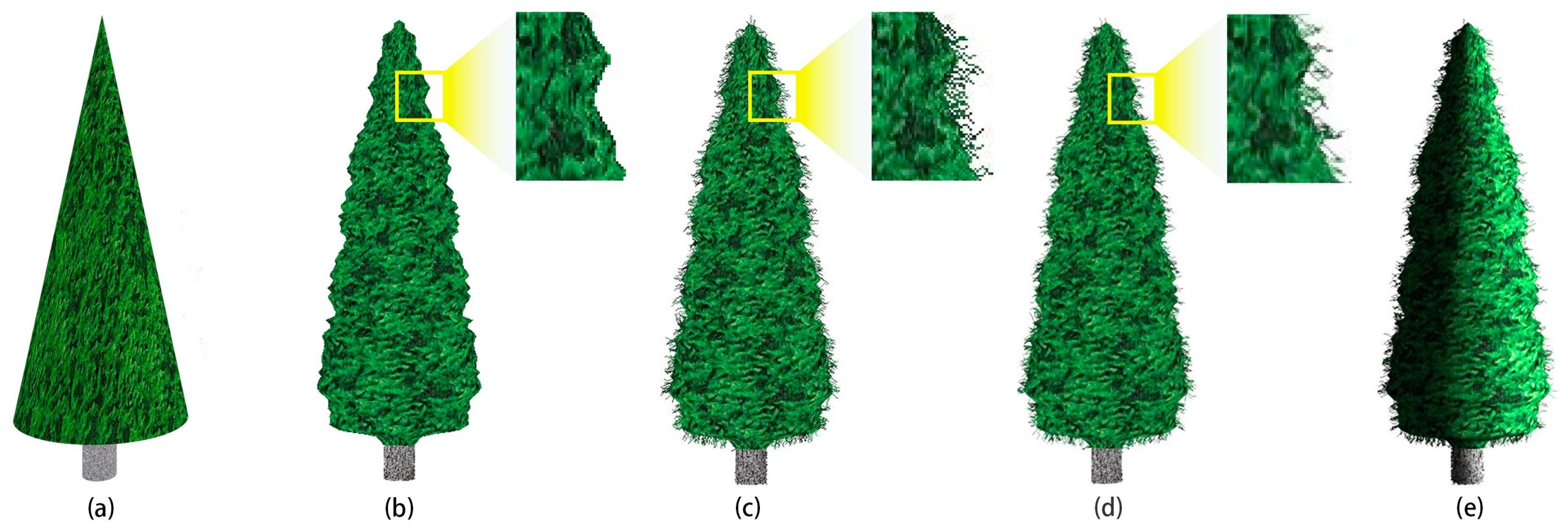

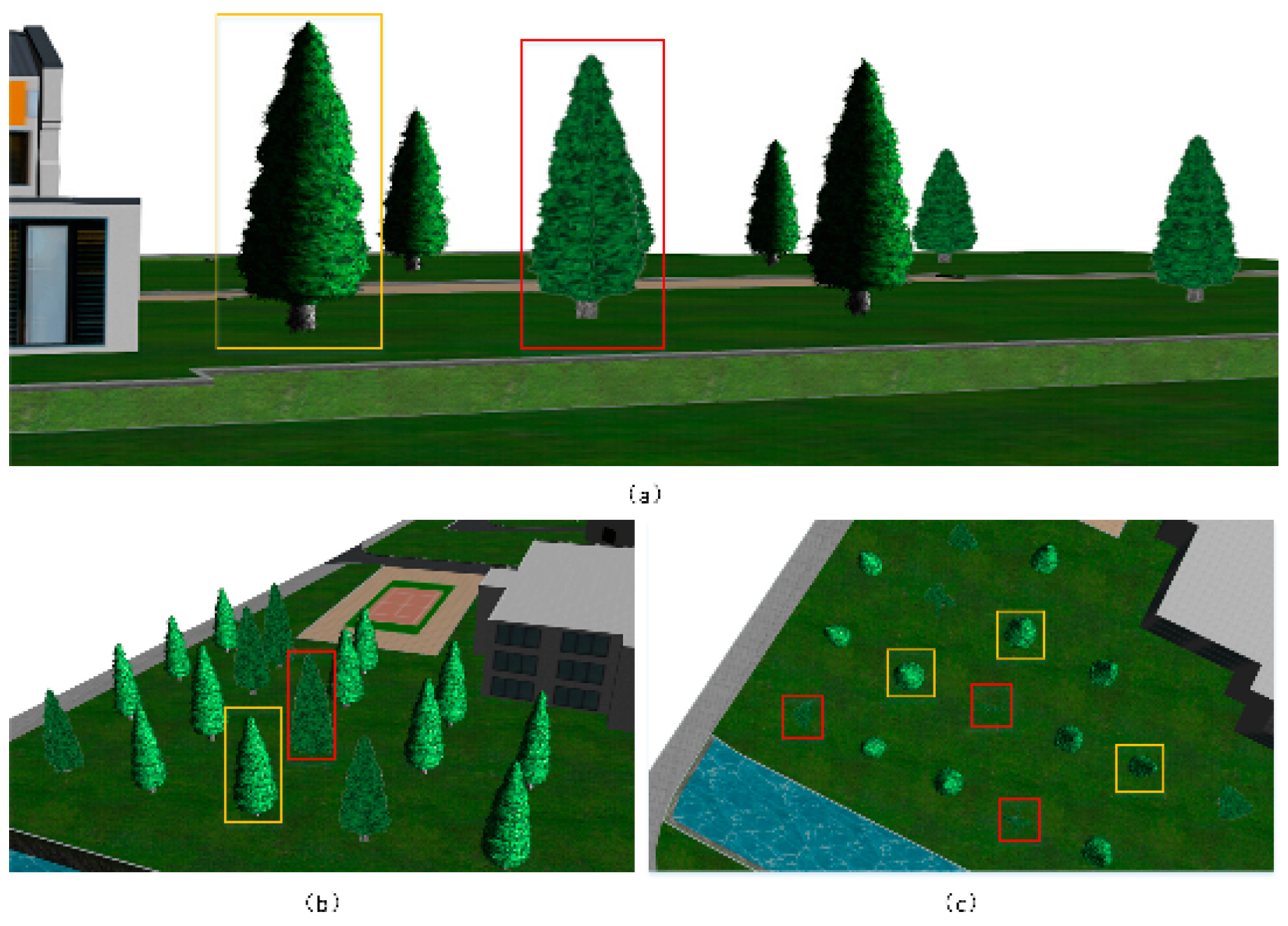

3.5. Final 3D Effects

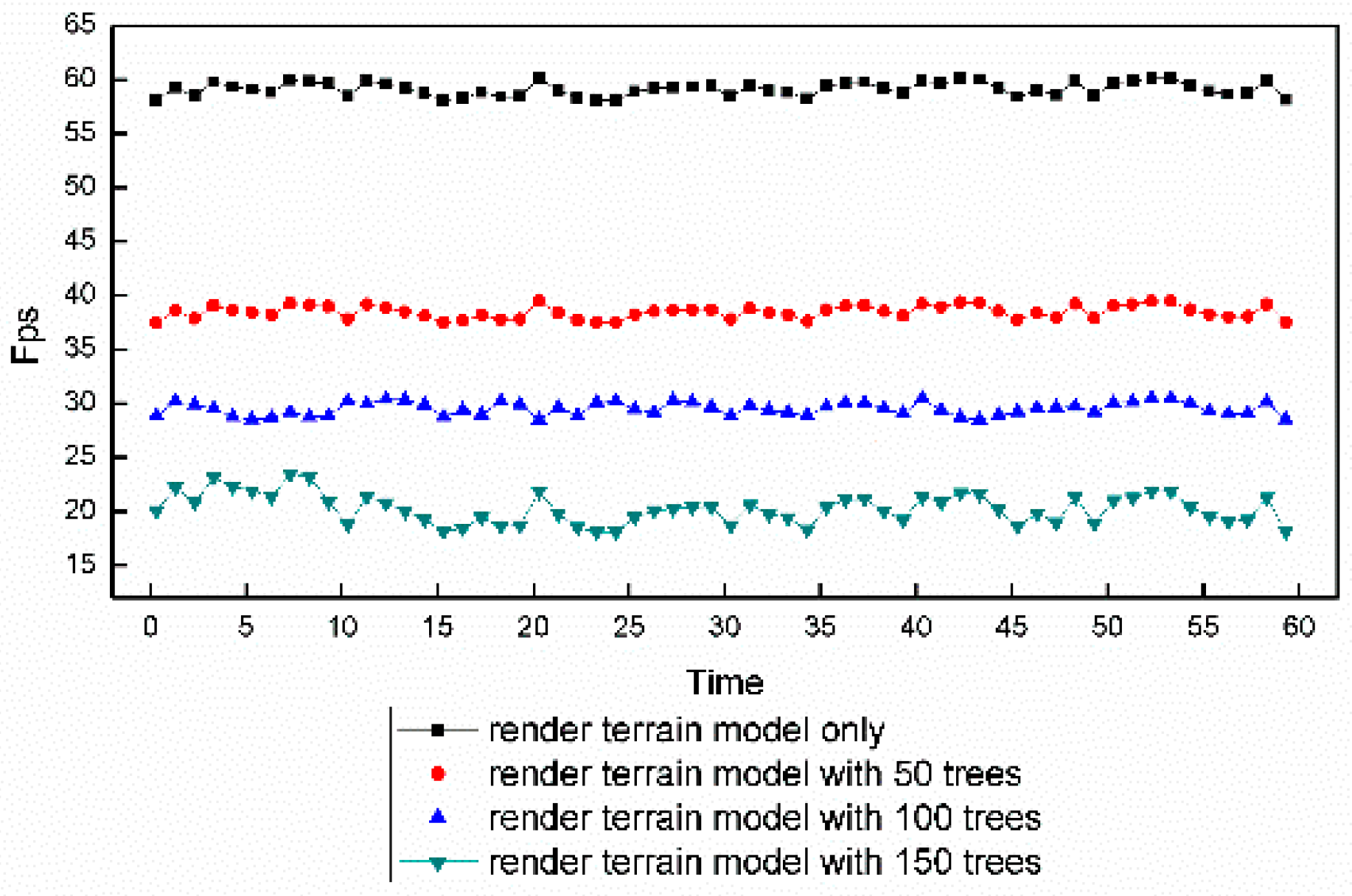

4. Discussion and Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Tree Model | Primitive Type | Number of Primitives: Crown/Sparse Part | Number of Primitives: Trunk/Solid Part |
|---|---|---|---|
| Sphere-board-based tree model | Triangle | 56 | 16 |
| Sphere-board-based tree model | Quadrilateral | 2124 | 24 |
| Graphics-based tree model | Triangle | 19657 | 200 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

She, J.; Guo, X.; Tan, X.; Liu, J. 3D Visualization of Trees Based on a Sphere-Board Model. ISPRS Int. J. Geo-Inf. 2018, 7, 45. https://doi.org/10.3390/ijgi7020045
