Survey on Urban Warfare Augmented Reality

Abstract

1. Introduction

2. Overview of UWAR

2.1. The Conception of UWAR

- (1) Keeping the system stable during intensive urban combat, in which soldiers move fast and often change their head pose quickly.

- (2) Developing a robust registration solution under poor operational conditions. For instance, vision-based registration may fail at night or in smoky environments because of poor image quality, while signal blockage and electromagnetic interference on the urban battlefield reduce the accuracy of GPS positioning.

- (3) Improving the computational efficiency of the UWAR system so that the overall computing hardware remains portable.

- (4) Supporting users, i.e., soldiers on the urban battlefield, whose cognitive capabilities are reduced by their intense mental state.

- (5) Enabling multi-tasking for soldiers during operations.

2.2. The Effect of UWAR

- (1) Enhancing soldiers’ ability to perceive battlefield information.

- (2) Supporting commanders in operational task planning.

- (3) Empowering military training through realistic battle-scene simulation.

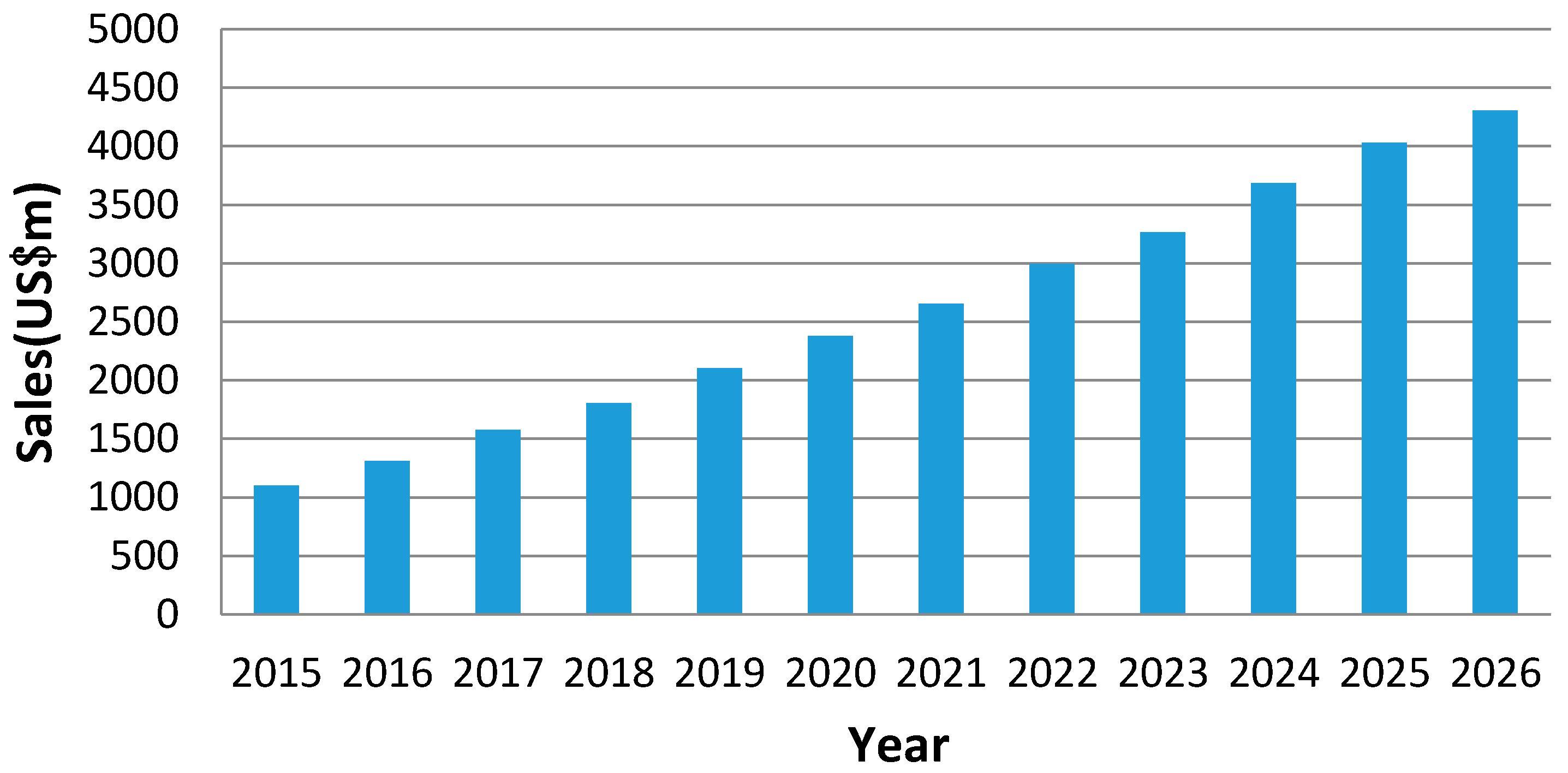

2.3. The Development of UWAR System

3. Key Technologies of UWAR

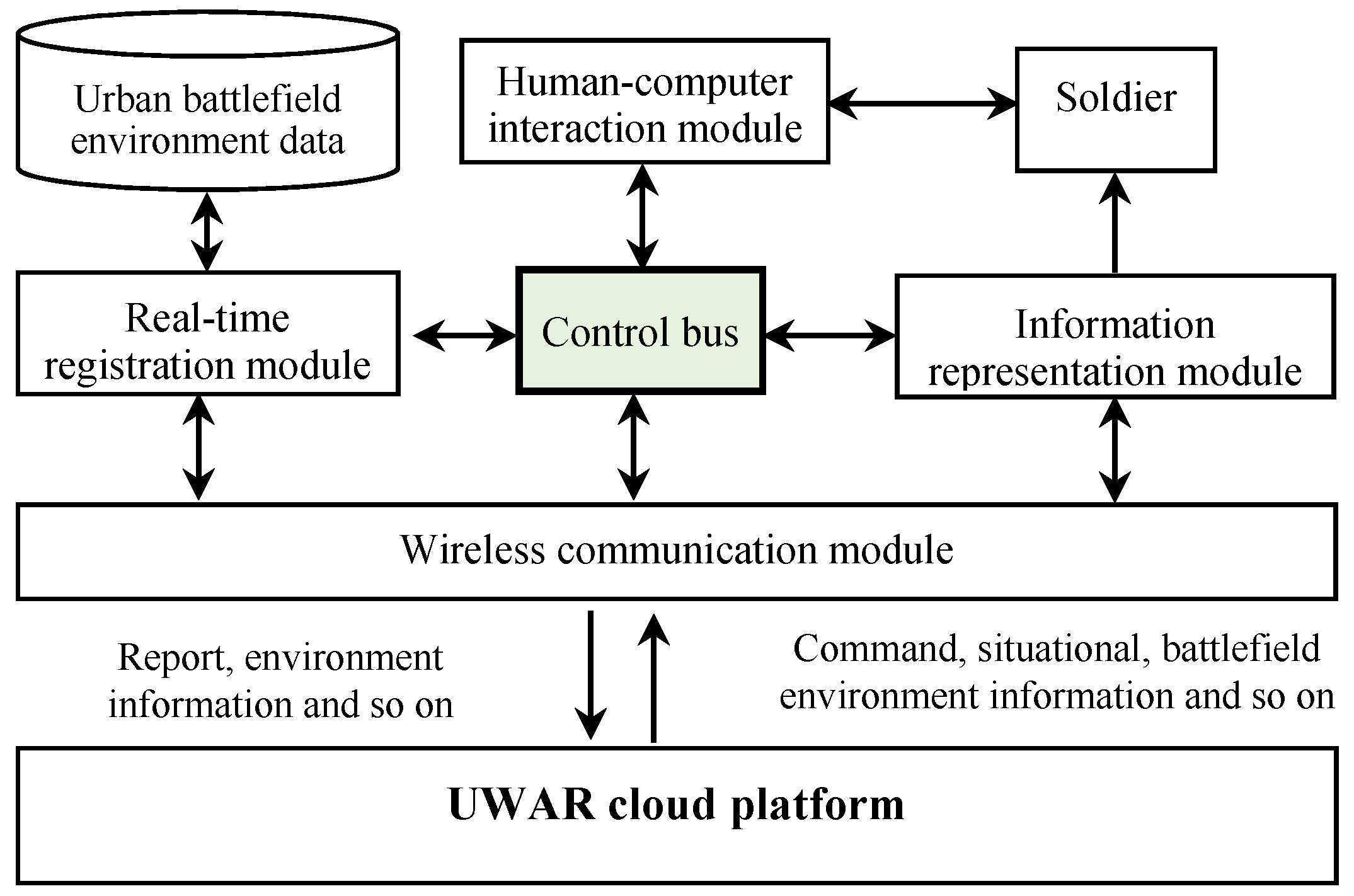

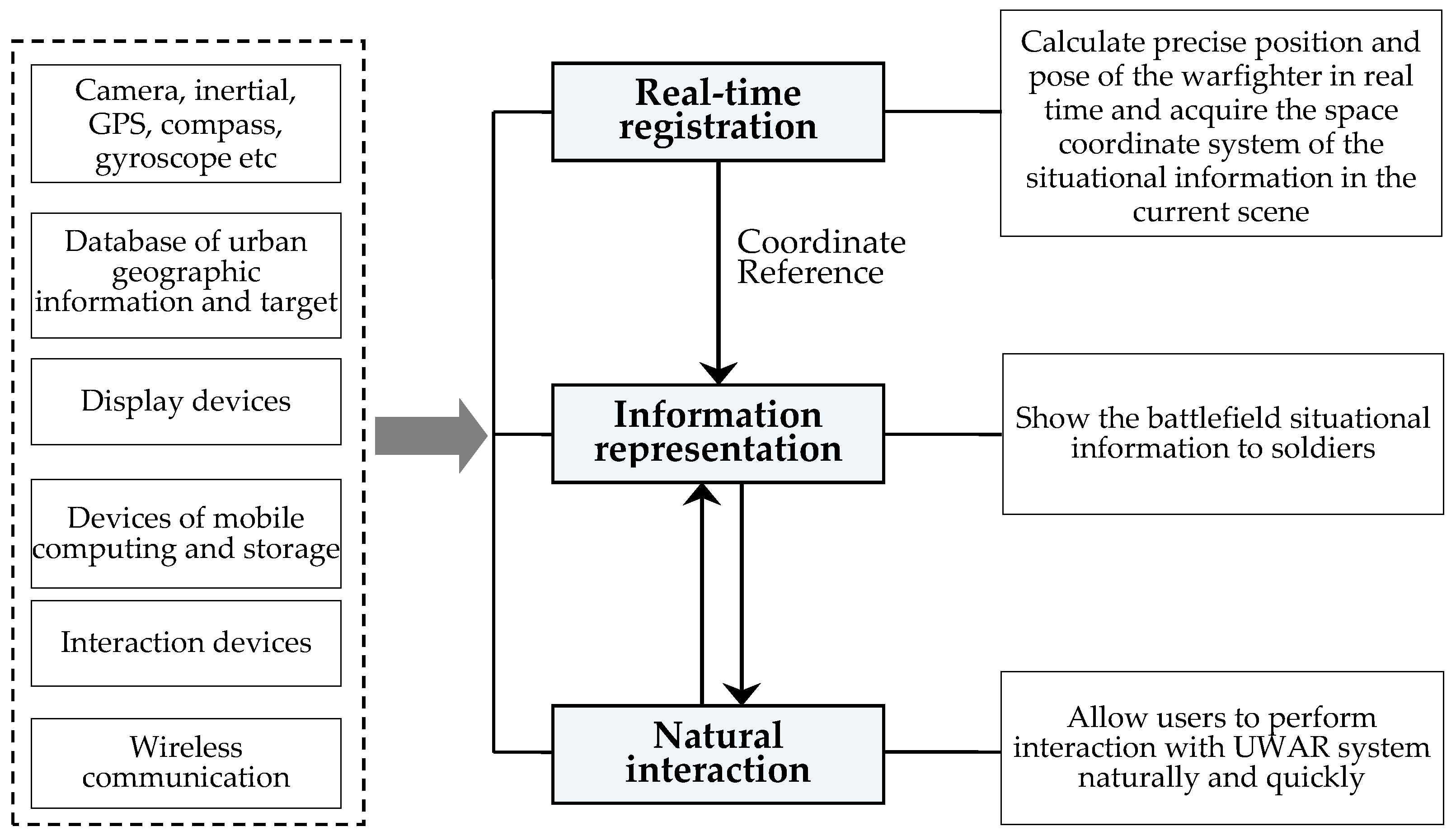

3.1. Architecture of UWAR

3.2. Registration of UWAR

3.2.1. Main Issues of Registration Technology

3.2.2. Sensor-Based Registration

3.2.3. Vision-Based Registration

- (a) Restoring the pose by registering sky silhouettes. Baatz et al. aligned contour lines extracted from a DEM (digital elevation model) with the mountain and sky silhouettes in the input image to recover the current camera pose [33].

- (b) Estimating the camera pose from building outline edges, as in the work of Chu et al. [34], Cham et al. [35], and David and Ho [36].

- (c) Using scene semantic segmentation to improve pose accuracy. Arth et al. [37] combined building edges with semantic segmentation information (such as building surface, roof, vegetation, ground, and sky) and aligned them with a 2.5D building model to recover the current global pose of the camera. Compared with the work of Chu et al. [34], Arth et al. additionally registered the semantic segmentation of the input image with the existing map model, which increased the accuracy of pose estimation. The orientation error was below 5°, and 87.5% of the position errors were below 4 meters. Similar research was also conducted by Baatz et al. [38] and Taneja et al. [39].
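To make idea (a) concrete, the following is a toy sketch of skyline-based heading estimation; it is not the method of Baatz et al. [33]. It assumes the camera position is already roughly known (for example from GPS), that the skyline has already been extracted from both the image and a DEM rendering as one elevation angle per degree of azimuth, and that camera roll and pitch are zero. The function name `best_heading` and the synthetic profile are hypothetical. The search slides the observed profile around the 360° DEM profile and keeps the offset with the lowest mean squared error.

```python
import math


def best_heading(dem_profile, observed):
    """Brute-force heading search for skyline alignment (illustrative sketch).

    dem_profile: elevation angles (degrees) of the DEM-rendered skyline, one per
                 whole degree of azimuth, index 0 = north, clockwise.
    observed:    elevation angles extracted from the camera image, one per
                 degree of azimuth starting at the left edge of the field of view.
    Returns (left_edge_azimuth_deg, mean_squared_error) of the best alignment.
    """
    n = len(dem_profile)
    if not observed or len(observed) > n:
        raise ValueError("observed profile must be non-empty and no longer than the DEM profile")
    best_start, best_mse = None, math.inf
    for start in range(n):
        # Compare against the DEM profile beginning at `start`, wrapping past north.
        sq_err = sum((obs - dem_profile[(start + k) % n]) ** 2
                     for k, obs in enumerate(observed))
        mse = sq_err / len(observed)
        if mse < best_mse:
            best_start, best_mse = start, mse
    return best_start, best_mse


# Synthetic DEM skyline (hypothetical): a peak at azimuth 120 plus gentle undulation.
dem = [2.0 + 8.0 * math.exp(-((a - 120) / 15) ** 2) + 3.0 * math.sin(math.radians(2 * a))
       for a in range(360)]
view = dem[100:160]                            # 60-degree field of view starting at azimuth 100
start, mse = best_heading(dem, view)           # -> (100, 0.0)
heading = (start + (len(view) - 1) / 2) % 360  # approximate optical-axis azimuth
```

An exhaustive 360-step search is cheap at this resolution; a practical system would additionally have to handle roll, pitch, lens distortion, and foreground occluders, none of which this sketch models.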

3.2.4. Hybrid Registration Technology

3.2.5. Application of Registration Technology in UWAR

3.3. Information Representation of UWAR

3.3.1. Main Issues of Information Representation

3.3.2. Information Overload

3.3.3. Occlusion Handling

3.3.4. View Management

3.3.5. Application of Information Representation Technology in UWAR

3.4. UWAR Interaction Technology

4. Future Development

- (1) More powerful hardware. UWAR systems will become more suitable for soldiers to use on the battlefield as enabling technologies develop, such as more powerful portable computing devices, see-through displays with higher specifications (e.g., brightness, resolution, and field of view), longer endurance, and more natural human-computer interaction. With these advances, the envisioned features of UWAR systems can be realized and can fully meet the needs of soldiers in urban warfare.

- (2) More intelligent software. With advances in artificial intelligence, the system will be able to understand soldiers’ intentions and achieve a high degree of human-machine collaboration. Moreover, the system will be able to understand both the geometric and the semantic structure of the battlefield environment, which facilitates information filtering for adaptive display in terms of where (on the display), what (content), and when. The UWAR system will thereby evolve into an indispensable combat assistant for soldiers in urban warfare.

- (3) Better integration with GIS and VGE (virtual geographic environments). GIS and VGE often serve as the spatial data infrastructure of UWAR and play an important role in its real-time registration and information representation. However, little research has been devoted to integrating the real-world models developed in GIS and VGE into UWAR; this topic deserves more attention if UWAR systems are to be put into practical use.
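One concrete step in using GIS data for registration is bringing geodetic coordinates (for example a GPS fix or a geotagged target) into a local metric frame centered on the soldier before overlay. The sketch below shows the standard WGS84 geodetic to ECEF (Earth-centered, Earth-fixed) to local east-north-up (ENU) conversion. Choosing ENU as the rendering frame and the function names are assumptions for illustration, not part of the surveyed systems.

```python
import math

# WGS84 ellipsoid constants.
WGS84_A = 6378137.0                   # semi-major axis (m)
WGS84_F = 1.0 / 298.257223563         # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)  # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, h):
    """Convert WGS84 latitude/longitude (degrees) and ellipsoidal height (m) to ECEF (m)."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)  # prime vertical radius
    x = (n + h) * math.cos(lat) * math.cos(lon)
    y = (n + h) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + h) * math.sin(lat)
    return x, y, z


def geodetic_to_enu(lat_deg, lon_deg, h, ref_lat_deg, ref_lon_deg, ref_h):
    """East/North/Up offset (m) of a point relative to a reference point (e.g. the soldier)."""
    x, y, z = geodetic_to_ecef(lat_deg, lon_deg, h)
    x0, y0, z0 = geodetic_to_ecef(ref_lat_deg, ref_lon_deg, ref_h)
    dx, dy, dz = x - x0, y - y0, z - z0
    lat0, lon0 = math.radians(ref_lat_deg), math.radians(ref_lon_deg)
    sin_lat, cos_lat = math.sin(lat0), math.cos(lat0)
    sin_lon, cos_lon = math.sin(lon0), math.cos(lon0)
    # Rotate the ECEF difference vector into the local tangent plane at the reference.
    east = -sin_lon * dx + cos_lon * dy
    north = -sin_lat * cos_lon * dx - sin_lat * sin_lon * dy + cos_lat * dz
    up = cos_lat * cos_lon * dx + cos_lat * sin_lon * dy + sin_lat * dz
    return east, north, up


# A point 0.001 degrees east of a reference on the equator lies about 111.32 m east.
e, n, u = geodetic_to_enu(0.0, 0.001, 0.0, 0.0, 0.0, 0.0)
```

The local frame keeps numbers small and metric, which is convenient for rendering; for targets many kilometers away, Earth curvature shows up as a small negative `up` component.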

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Defense Advanced Research Projects Agency of USA (U.S. Department of Defense, State of Washington, USA). Urban Leader Tactical Response, Awareness & Visualization (ULTRA-Vis); Defense Advanced Research Projects Agency of USA: Arlington, VA, USA, 2008.

- Roberts, D.; Menozzi, A.; Clipp, B.; Russler, P. Soldier-worn augmented reality system for tactical icon visualization. Head- and Helmet-Mounted Displays XVII; and Display Technologies and Applications for Defense. Proc. SPIE 2012, 8383.

- Livingston, M.A.; Swan, J.E.; Simon, J.J. Evaluating system capabilities and user performance in the battlefield augmented reality system. In Proceedings of the NIST/DARPA Workshop on Performance Metrics for Intelligent Systems, Gaithersburg, MD, USA, 24–26 August 2004.

- Colbert, H.J.; Tack, D.W.; Bossi, L.C.L. Augmented Reality for Battlefield Awareness; DRDC Toronto CR-2005-053; HumanSystems® Incorporated: Guelph, ON, Canada, 2005.

- Le Roux, W. The use of augmented reality in command and control situation awareness. Sci. Mil. S. Afr. J. Mil. Stud. 2010, 38, 115–133.

- Zysk, T.; Luce, J.; Cunningham, J. Augmented Reality for Precision Navigation-Enhancing performance in High-Stress Operations. GPS World 2012, 23, 47.

- Kenny, R.J. Augmented Reality at the Tactical and Operational Levels of War; Naval War College Newport United States: Newport, RI, USA, 2015.

- Julier, S.; Baillot, Y.; Lanzagorta, M. BARS: Battlefield Augmented Reality System. In Proceedings of the NATO Symposium on Information Processing Techniques for Military Systems, Istanbul, Turkey, 9–11 October 2000; pp. 9–11.

- Gans, E.; Roberts, D.; Bennett, M. Augmented reality technology for day/night situational awareness for the dismounted Soldier. Proc. SPIE 2015, 9470, 947004:1–947004:11.

- Heads Up: Augmented Reality Prepares for the Battlefield. Available online: https://arstechnica.com/information-technology/ (accessed on 1 January 2018).

- Heads-Up Display to Give Soldiers Improved Situational Awareness. Available online: https://www.army.mil/ (accessed on 1 January 2018).

- Feiner, S.; Macintyre, B.; Hollerer, T. A touring machine: Prototyping 3D mobile augmented reality systems for exploring the urban environment. Pers. Technol. 1997, 1, 208–217.

- Menozzi, A.; Clipp, B.; Wenger, E. Development of vision-aided navigation for a wearable outdoor augmented reality system. In Proceedings of the 2014 IEEE/ION Position, Location and Navigation Symposium—PLANS 2014, Monterey, CA, USA, 5–8 May 2014; pp. 460–472.

- Maus, S. An ellipsoidal harmonic representation of Earth’s lithospheric magnetic field to degree and order 720. Geochem. Geophys. Geosyst. 2010, 11, 1–12.

- Ventura, J.; Arth, C.; Reitmayr, G. Global localization from monocular SLAM on a mobile phone. IEEE Trans. Visual. Comput. Graph. 2014, 20, 531–539.

- Hays, J.; Efros, A.A. IM2GPS: Estimating geographic information from a single image. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Kalogerakis, E.; Vesselova, O.; Hays, J. Image sequence geolocation with human travel priors. In Proceedings of the IEEE 12th International Conference on Computer Vision, Tokyo, Japan, 29 September–2 October 2009; pp. 253–260.

- Schindler, G.; Brown, M.; Szeliski, R. City-scale location recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–7.

- Zhang, W.; Kosecka, J. Image based localization in urban environments. In Proceedings of the IEEE Third International Symposium on 3D Data Processing, Visualization, and Transmission, Chapel Hill, NC, USA, 14–16 June 2006; pp. 33–40.

- Robertson, D.P.; Cipolla, R. An Image-Based System for Urban Navigation. In Proceedings of the 15th British Machine Vision Conference (BMVC’04), Kingston-upon-Thames, UK, 7–9 September 2004; pp. 819–828.

- Zamir, A.R.; Shah, M. Accurate image localization based on google maps street view. In Proceedings of the 11th European Conference on Computer Vision, Crete, Greece, 5–11 September 2010; pp. 255–268.

- Vaca, C.G.; Zamir, A.R.; Shah, M. City scale geo-spatial trajectory estimation of a moving camera. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1186–1193.

- Irschara, A.; Zach, C.; Frahm, J.M. From structure-from-motion point clouds to fast location recognition. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 2599–2606.

- Klopschitz, M.; Irschara, A.; Reitmayr, G. Robust incremental structure from motion. In Proceedings of the Fifth International Symposium on 3D Data Processing, Visualization and Transmission (3DPVT), Paris, France, 17–20 May 2010; Volume 2, pp. 1–8.

- Haralick, R.M.; Lee, C.N.; Ottenberg, K. Review and analysis of solutions of the three point perspective pose estimation problem. Int. J. Comput. Vis. 1994, 13, 331–356.

- Haralick, R.M.; Lee, C.N.; Ottenberg, K. Analysis and solutions of the three point perspective pose estimation problem. In Proceedings of the 1991 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Lahaina, Maui, HI, USA, 3–6 June 1991; pp. 592–598.

- Li, Y.; Snavely, N.; Huttenlocher, D.P. Location recognition using prioritized feature matching. In Proceedings of the 11th European Conference on Computer Vision, Crete, Greece, 5–11 September 2010; pp. 791–804.

- Li, Y.; Snavely, N.; Huttenlocher, D.P. Worldwide Pose Estimation Using 3D Point Clouds. In Proceedings of the 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 15–29.

- Lim, H.; Sinha, S.N.; Cohen, M.F. Real-time image-based 6-DoF localization in large-scale environments. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1043–1050.

- Lim, H.; Sinha, S.N.; Cohen, M.F. Real-time monocular image-based 6-DoF localization. Int. J. Robot. Res. 2015, 34, 476–492.

- Wagner, D.; Reitmayr, G.; Mulloni, A. Real-time detection and tracking for augmented reality on mobile phones. IEEE Trans. Visual. Comput. Graph. 2010, 16, 355–368.

- Arth, C.; Wagner, D.; Klopschitz, M. Wide area localization on mobile phones. In Proceedings of the 8th IEEE International Symposium on ISMAR 2009, Orlando, FL, USA, 19–22 October 2009; pp. 73–82.

- Baatz, G.; Saurer, O.; Köser, K. Large Scale Visual Geo-Localization of Images in Mountainous Terrain. In Proceedings of the 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 517–530.

- Chu, H.; Gallagher, A.; Chen, T. GPS refinement and camera orientation estimation from a single image and a 2D map. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 171–178.

- Cham, T.J.; Ciptadi, A.; Tan, W.C. Estimating camera pose from a single urban ground-view omnidirectional image and a 2D building outline map. In Proceedings of the Twenty-Third IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 366–373.

- David, P.; Ho, S. Orientation descriptors for localization in urban environments. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 494–501.

- Arth, C.; Pirchheim, C.; Ventura, J. Global 6DOF Pose Estimation from Untextured 2D City Models. Comput. Sci. 2015, 25, 1–8.

- Baatz, G.; Saurer, O.; Köser, K. Leveraging Topographic Maps for Image to Terrain Alignment. In Proceedings of the 2012 Second International Conference on 3D Imaging, Modeling, Processing, Visualization and Transmission (3DIMPVT), Zurich, Switzerland, 13–15 October 2012; pp. 487–492.

- Taneja, A.; Ballan, L.; Pollefeys, M. Registration of spherical panoramic images with cadastral 3D models. In Proceedings of the 2012 Second International Conference on 3D Imaging, Modeling, Processing, Visualization and Transmission (3DIMPVT), Zurich, Switzerland, 13–15 October 2012; pp. 479–486.

- Middelberg, S.; Sattler, T.; Untzelmann, O. Scalable 6-DoF localization on mobile devices. In Proceedings of the 13th European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 268–283.

- Oskiper, T.; Samarasekera, S.; Kumar, R. Multi-sensor navigation algorithm using monocular camera, IMU and GPS for large scale augmented reality. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR2012), Atlanta, GA, USA, 5–8 November 2012.

- Hartmann, G.; Huang, F.; Klette, R. Landmark initialization for unscented Kalman filter sensor fusion in monocular camera localization. Int. J. Fuzzy Logic Intell. Syst. 2013, 13, 1–11.

- Billinghurst, M.; Clark, A.; Lee, G. A survey of augmented reality. Found. Trends Hum.–Comput. Interact. 2015, 8, 73–272.

- Kendall, A.; Grimes, M.; Cipolla, R. PoseNet: A convolutional network for real-time 6-DoF camera relocalization. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

- Rambach, J.; Tewari, A.; Pagani, A.; Stricker, D. Learning to Fuse: A Deep Learning Approach to Visual-Inertial Camera Pose Estimation. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR2016), Merida, Mexico, 19–21 September 2016.

- Livingston, M.A.; Rosenblum, L.J.; Brown, D.G.; Schmidt, G.S.; Julier, S.J. Military applications of augmented reality. In Handbook of Augmented Reality; Springer: New York, NY, USA, 2011; pp. 671–706.

- Argenta, C.; Murphy, A.; Hinton, J.; Cook, J.; Sherrill, T.; Snarski, S. Graphical User Interface Concepts for Tactical Augmented Reality. Proc. SPIE 2010, 7688.

- Tatzgern, M.; Kalkofen, D.; Grasset, R.; Schmalstieg, D. Hedgehog Labeling: View Management Techniques for External Labels in 3D Space. Virtual Real. 2014.

- Grasset, R.; Langlotz, T.; Kalkofen, D. Image-driven view management for augmented reality browsers. In Proceedings of the 11th IEEE International Symposium on ISMAR, Atlanta, GA, USA, 5–8 November 2012; pp. 177–186.

- Karlsson, M. Challenges of Designing Augmented Reality for Military Use; Karlstad University: Karlstad, Sweden, 2015.

- Livingston, M.A.; Swan, J.; Gabbard, J.; Hollerer, T.H.; Hix, D.; Julier, S.J.; Baillot, Y.; Brown, D. Resolving multiple occluded layers in augmented reality. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR2003), Tokyo, Japan, 7–10 October 2003.

- Livingston, M.A.; Ai, Z.M.; Karsch, K.; Gibson, G.O. User interface design for military AR applications. Virtual Real. 2011, 15, 175–184.

- Tatzgern, M.; Kalkofen, D.; Schmalstieg, D. Multi-perspective compact explosion diagrams. Comput. Graph. 2011, 35, 135–147.

- Tatzgern, M.; Kalkofen, D.; Schmalstieg, D. Dynamic compact visualizations for augmented reality. IEEE Virtual Real. 2013, 3–6.

- Tatzgern, M.; Kalkofen, D.; Schmalstieg, D. Compact explosion diagrams. Comput. Graph. 2010, 35, 135–147.

- Tatzgern, M.; Orso, V.; Kalkofen, D. Adaptive information density for augmented reality displays. In Proceedings of the IEEE VR 2016 Conference, Greenville, SC, USA, 19–23 March 2016; pp. 83–92.

- Furmanski, C.; Azuma, R.; Daily, M. Augmented-reality visualizations guided by cognition: Perceptual heuristics for combining visible and obscured information. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR2002), Darmstadt, Germany, 30 September–1 October 2002.

- Ren, Z.; Meng, J.; Yuan, J. Depth camera based hand gesture recognition and its applications in Human-Computer-Interaction. In Proceedings of the 2011 8th International Conference on Information, Communications and Signal Processing, Singapore, 13–16 December 2011; Volume 5.

- Kulshreshth, A.; Zorn, C.; LaViola, J.J. Poster: Real-time markerless Kinect based finger tracking and hand gesture recognition for HCI. In Proceedings of the 2013 IEEE Symposium on 3D User Interfaces (3DUI), Orlando, FL, USA, 16–17 March 2013; pp. 187–188.

- Zocco, A.; Zocco, M.; Greco, A.; Livatino, S. Touchless Interaction for Command and Control in Military Operations. In Proceedings of the International Conference on Augmented and Virtual Reality AVR 2015: Augmented and Virtual Reality, Lecce, Italy, 31 August–3 September 2015; pp. 432–445.

- Visiongain. Military Augmented Reality (MAR) Technologies Market Report 2016–2026; Business Intelligence Company: London, UK, 2016.

| Vision-Based Registration Methods | Algorithm | Basic Principle | Related Research |
|---|---|---|---|
| Image-based method | Image retrieval-based method | The current image is located using the GPS position of the most similar reference image | Schindler 2007; Zamir 2010; Zamir A.R. 2012 |
| Image-based method | Pre-reconstruction based on SFM | A 3D point cloud is pre-reconstructed with SFM, and the pose is then recovered with a PnP algorithm | Irschara 2009; Li 2012; Lim 2012; Lim 2015; Wagner 2010; Arth 2009; Arth 2011; Ventura 2012 |
| Model-based method | Recover the current image viewpoint by registering to an existing 2D/3D model | Recover the current pose by registering sky silhouettes | Baatz 2012; Bansal 2014 |
| Model-based method | Recover the current image viewpoint by registering to an existing 2D/3D model | Estimate the camera pose from building outline edges | Chu 2014; Cham 2010; David 2011 |
| Model-based method | Recover the current image viewpoint by registering to an existing 2D/3D model | Use scene semantic segmentation to improve pose accuracy | Arth 2015; Arth 2015; Baatz 2012; Taneja 2012 |
| Combination of vision-based methods | Image-based localization + SLAM | Combine 3D point cloud data with SLAM | Ventura 2014; Sattler 2014 |
| Combination of vision-based methods | Model-based localization + SLAM | Integrate existing geographic information into SLAM | Arth 2015 |
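The first row of the table above describes retrieval-based localization: the query image inherits the GPS position of the most similar geotagged reference image. A minimal sketch follows, assuming each image has already been reduced to a global descriptor vector and using cosine similarity as the similarity measure. The descriptors, the similarity measure, the coordinates, and all names (`ReferenceImage`, `localize_by_retrieval`) are illustrative assumptions, not taken from the cited works.

```python
import math
from dataclasses import dataclass


@dataclass
class ReferenceImage:
    name: str                # hypothetical image identifier
    lat: float               # geotag of the reference image (degrees)
    lon: float
    descriptor: list[float]  # precomputed global descriptor (assumed)


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def localize_by_retrieval(query_descriptor, database):
    """Return the most similar reference image; its geotag is the position estimate."""
    if not database:
        raise ValueError("reference database is empty")
    return max(database, key=lambda ref: cosine_similarity(query_descriptor, ref.descriptor))


# Synthetic three-image database.
db = [
    ReferenceImage("street_a", 40.7000, -74.0100, [1.0, 0.0, 0.0]),
    ReferenceImage("street_b", 40.7010, -74.0120, [0.0, 1.0, 0.0]),
    ReferenceImage("square_c", 40.7030, -74.0150, [0.0, 0.0, 1.0]),
]
match = localize_by_retrieval([0.1, 0.9, 0.2], db)
print(match.name, match.lat, match.lon)  # street_b 40.701 -74.012
```

The linear scan keeps the idea visible; a city-scale database would need an indexed search structure, and the result is only as precise as the reference geotags, which is why the table pairs retrieval with point-cloud and PnP-based methods for full 6-DoF pose.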

| Information Type | Information Content |
|---|---|
| Battlefield situation information | Enemy situation, friendly-force situation, etc. |
| Battlefield environment information | Geographic, landmark, and electromagnetic information, etc. |
| Navigation information | Navigation route, azimuth, etc. |
| Combat information | Threat areas, commands, etc. |
| Other auxiliary information | Time, coordinates, system information, etc. |
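The information types in the table above compete for limited display space, which is the information-overload problem named in Section 3.3.2. One simple way to bound what is shown is to filter by distance and then rank by information type. The sketch below assumes a hypothetical priority order (combat information first) and hypothetical thresholds (a 500 m radius and three items); the survey does not prescribe these values, and the item labels are invented.

```python
from dataclasses import dataclass
from enum import IntEnum


class InfoType(IntEnum):
    """Information types from the table; lower value = shown first (assumed order)."""
    COMBAT = 0        # threat areas, commands
    SITUATION = 1     # enemy and friendly-force situation
    NAVIGATION = 2    # navigation route, azimuth
    ENVIRONMENT = 3   # geographic, landmark, electromagnetic information
    AUXILIARY = 4     # time, coordinates, system information


@dataclass
class InfoItem:
    label: str
    kind: InfoType
    distance_m: float  # distance from the soldier in meters


def select_for_display(items, max_items=3, radius_m=500.0):
    """Drop items beyond radius_m, rank by type priority then distance, keep max_items."""
    nearby = [item for item in items if item.distance_m <= radius_m]
    nearby.sort(key=lambda item: (item.kind, item.distance_m))
    return nearby[:max_items]


items = [
    InfoItem("clock", InfoType.AUXILIARY, 0.0),
    InfoItem("route", InfoType.NAVIGATION, 10.0),
    InfoItem("sniper zone", InfoType.COMBAT, 200.0),
    InfoItem("friendly squad", InfoType.SITUATION, 80.0),
    InfoItem("far enemy", InfoType.SITUATION, 900.0),
    InfoItem("landmark", InfoType.ENVIRONMENT, 50.0),
]
print([item.label for item in select_for_display(items)])
# ['sniper zone', 'friendly squad', 'route']
```

Sorting on the tuple (type, distance) means a distant item of a higher-priority type always outranks a nearby lower-priority one; a weighted score would be an alternative if that trade-off is undesirable.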

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


You, X.; Zhang, W.; Ma, M.; Deng, C.; Yang, J. Survey on Urban Warfare Augmented Reality. ISPRS Int. J. Geo-Inf. 2018, 7, 46. https://doi.org/10.3390/ijgi7020046
