Phenotype Analysis of Arabidopsis thaliana Based on Optimized Multi-Task Learning

Abstract

1. Introduction

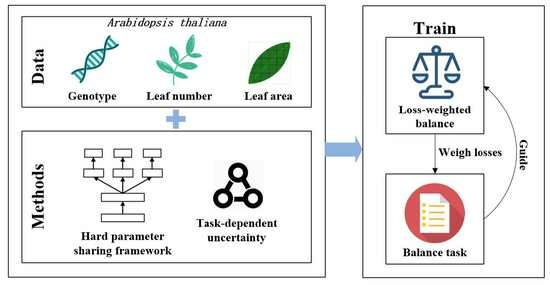

2. Multi-Task Learning Architecture for Arabidopsis thaliana Phenotypic Analysis

2.1. Modified Hard Parameter Sharing in VGG16 Network

2.2. Loss Functions for Different Tasks

2.3. Loss Balance for Each Task

2.3.1. Likelihood Function for the Regression Task

2.3.2. Likelihood Function for Classification Task

2.3.3. Multi-Task Joint Loss

2.3.4. Multi-Tasking Learning Processing

3. Experimental Results

3.1. Experimental Environment

3.2. Dataset

3.3. Evaluation Metrics

3.3.1. Classification Metrics

3.3.2. Regression Metrics

3.4. Experimental Results

3.4.1. Learning Rate Test

3.4.2. Comparison with Different Networks

- (1) Classification task

- (2) Regression tasks

3.4.3. Weighted Loss Function Results

- (1) Classification task

- (2) Regression tasks

3.5. Comparison with Previous Works

- (1) Classification task

- (2) Regression tasks

4. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Symbol | Description |
|---|---|
| | Learning rate |
| | Weighting relationship factors |
| | Weighted precision of the classification task |
| | Weighted recall of the classification task |
| A | Accuracy of the classification task |
| | Weighted F1 score (combined performance of precision and recall) |
| R² | Coefficient of determination |
| MAPE | Mean absolute percentage error in the regression task |
| MAE | Mean absolute error in the regression task |
| MSE | Mean square error in the regression task |

| No. | Genotype Classification | Leaf Number Counting Regression | Leaf Area Regression |
|---|---|---|---|
| 1 | input (224 × 224 RGB image) | | |
| 2 | conv3-64 | | |
| 3 | conv3-64 | | |
| 4 | maxpool | | |
| 5 | conv3-128 | | |
| 6 | conv3-128 | | |
| 7 | maxpool | | |
| 8 | conv3-256 | | |
| 9 | conv3-256 | | |
| 10 | conv3-256 | | |
| 11 | maxpool | | |
| 12 | conv3-512 | | |
| 13 | conv3-512 | | |
| 14 | conv3-512 | | |
| 15 | FC-1536 | | |
| 16 | FC-512 | FC-512 | FC-512 |
| 17 | FC-256 | FC-1 | FC-1 |
| 18 | softmax | - | - |

Rows 1–15 span all three task columns (layers shared by every task); rows 16–18 are the task-specific heads.
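
To make the layer table easier to follow, the sketch below encodes the shared trunk and the three heads as plain Python data and traces the feature-map size through the trunk. It is a minimal sketch under stated assumptions, not the authors' implementation: `conv3-N` is read in the usual VGG sense (3 × 3 kernel, stride 1, padding 1, N output channels), and each `maxpool` is taken to be 2 × 2 with stride 2. The table does not say how the last feature map is reduced before `FC-1536`, so that step is not modeled. The names `TRUNK`, `HEADS` and `trace_trunk` are hypothetical.

```python
# Shared trunk, rows 2-14 of the table: ("conv", out_channels) or ("pool",).
TRUNK = [
    ("conv", 64), ("conv", 64), ("pool",),
    ("conv", 128), ("conv", 128), ("pool",),
    ("conv", 256), ("conv", 256), ("conv", 256), ("pool",),
    ("conv", 512), ("conv", 512), ("conv", 512),
]

# Task-specific heads, rows 16-17: output widths of the fully connected layers.
HEADS = {
    "genotype_classification": [512, 256],  # followed by softmax (row 18)
    "leaf_number_regression": [512, 1],
    "leaf_area_regression": [512, 1],
}


def trace_trunk(height=224, width=224, channels=3):
    """Return the (height, width, channels) shape after every trunk layer."""
    shapes = [(height, width, channels)]
    for layer in TRUNK:
        if layer[0] == "conv":
            # Assumed 3x3 conv, stride 1, padding 1: spatial size is unchanged.
            channels = layer[1]
        else:
            # Assumed 2x2 max pooling with stride 2: spatial size is halved.
            height, width = height // 2, width // 2
        shapes.append((height, width, channels))
    return shapes


if __name__ == "__main__":
    for step, shape in enumerate(trace_trunk()):
        print(step, shape)
    for task, widths in HEADS.items():
        print(task, "-> FC widths", widths)
```

Under these assumptions the three pooling layers reduce the 224 × 224 input to a 28 × 28 map with 512 channels, which all three heads consume through the shared `FC-1536` layer.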

| Genotype | Col-0 | ein2 | pgm | ctr | adh1 |
|---|---|---|---|---|---|
| Original image | | | | | |
| Leaf segmentation image | | | | | |
| Leaf numbers | 7 | 8 | 5 | 6 | 7 |
| Total number of samples | 35 | 39 | 35 | 32 | 24 |
| Percentage of categories | 21.21% | 23.64% | 21.21% | 19.39% | 14.55% |
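
The percentage row follows directly from the sample counts. The short check below recomputes it; only the variable names are introduced here, the counts are copied from the table.

```python
# Sample counts per genotype, copied from the table above.
samples = {"Col-0": 35, "ein2": 39, "pgm": 35, "ctr": 32, "adh1": 24}

total = sum(samples.values())

# Share of each genotype as a percentage, rounded to two decimals.
percentages = {g: round(100 * n / total, 2) for g, n in samples.items()}

if __name__ == "__main__":
    print("total samples:", total)
    for genotype, pct in percentages.items():
        print(f"{genotype}: {samples[genotype]} samples, {pct:.2f}%")
```

The classes are mildly imbalanced (adh1 is the smallest), which is one reason support-weighted classification metrics are a sensible choice for this dataset.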

| True Results | Predicted: Positive Example | Predicted: Negative Example |
|---|---|---|
| Positive example | True positive example (TP) | False negative example (FN) |
| Negative example | False positive example (FP) | True negative example (TN) |
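
As an illustration of how the four confusion-matrix cells turn into the classification metrics, the sketch below computes accuracy, precision, recall and F1 for the binary case and then the support-weighted averages for a multi-class problem (each class treated one-vs-rest). The function names and the 3 × 3 example matrix are hypothetical and are not taken from the paper's data.

```python
def binary_metrics(tp, fn, fp, tn):
    """Accuracy, precision, recall and F1 from the four confusion-matrix cells."""
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return accuracy, precision, recall, f1


def weighted_metrics(confusion):
    """Accuracy plus support-weighted precision, recall and F1.

    confusion[i][j] is the number of samples of true class i predicted as j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    w_precision = w_recall = w_f1 = 0.0
    for c in range(n):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp
        fp = sum(confusion[r][c] for r in range(n)) - tp
        tn = total - tp - fn - fp
        _, p, r, f = binary_metrics(tp, fn, fp, tn)
        weight = (tp + fn) / total  # share of samples whose true class is c
        w_precision += weight * p
        w_recall += weight * r
        w_f1 += weight * f
    accuracy = sum(confusion[c][c] for c in range(n)) / total
    return accuracy, w_precision, w_recall, w_f1


if __name__ == "__main__":
    example = [[5, 1, 0], [0, 4, 0], [1, 0, 5]]  # hypothetical 3-class matrix
    print(weighted_metrics(example))
```

With support weights, the weighted recall is always equal to the overall accuracy, because each class's recall is multiplied by exactly the fraction of samples that belong to it.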

| Accuracy | | | |
|---|---|---|---|
| 0.9688 | 0.9688 | 0.9750 | 0.9674 |

| Task | R² | MSE | MAE | MAPE |
|---|---|---|---|---|
| Leaf Number Regression | 0.7944 | 0.5892 | 0.5846 | 0.0691 |
| Leaf Area Regression | 0.9787 | 0.3029 | 0.4551 | 0.0328 |
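
For readers who want to reproduce this kind of table, the sketch below computes the four regression metrics from paired targets and predictions using their standard definitions. The function name and the example leaf counts are hypothetical; MAPE is returned as a fraction rather than a percentage, which is an assumption about how the values above are scaled.

```python
def regression_metrics(y_true, y_pred):
    """Return R2, MSE, MAE and MAPE for two equal-length sequences."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]

    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    # MAPE divides by the target, so every target must be non-zero.
    mape = sum(abs(e / t) for e, t in zip(errors, y_true)) / n

    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                 # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    r2 = 1 - ss_res / ss_tot

    return {"R2": r2, "MSE": mse, "MAE": mae, "MAPE": mape}


if __name__ == "__main__":
    true_leaf_counts = [5, 6, 7, 8]       # hypothetical targets
    predicted_leaf_counts = [5, 7, 7, 7]  # hypothetical predictions
    print(regression_metrics(true_leaf_counts, predicted_leaf_counts))
```

R² is higher-is-better, while MSE, MAE and MAPE are lower-is-better, which matters when comparing rows of the table.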

| Loss function | Accuracy | | | |
|---|---|---|---|---|
| Unweighted loss | 0.9063 | 0.9063 | 0.9375 | 0.9096 |
| Weighted loss | 0.9688 | 0.9688 | 0.9750 | 0.9674 |
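
The difference between the two rows comes from how the three task losses are combined. The weighting formula is not reproduced in this part of the text, so the following is only a sketch under an assumption: it shows one widely used scheme, homoscedastic-uncertainty weighting in the style of Kendall, Gal and Cipolla (2018), with one learnable log-variance per task. It may differ from the exact formulation used in the paper. The function name, argument layout and example loss values are hypothetical.

```python
import math


def weighted_joint_loss(losses, log_vars, kinds):
    """Combine per-task losses using one log-variance s = log(sigma^2) per task.

    Regression tasks contribute     0.5 * exp(-s) * loss + 0.5 * s
    Classification tasks contribute       exp(-s) * loss + 0.5 * s
    """
    total = 0.0
    for loss, s, kind in zip(losses, log_vars, kinds):
        precision = math.exp(-s)  # equals 1 / sigma^2
        scale = 0.5 if kind == "regression" else 1.0
        total += scale * precision * loss + 0.5 * s  # 0.5 * s equals log(sigma)
    return total


if __name__ == "__main__":
    losses = [0.40, 1.20, 0.90]  # hypothetical per-task loss values
    kinds = ["classification", "regression", "regression"]
    print("unweighted sum:", sum(losses))
    print("weighted, all log-variances 0:", weighted_joint_loss(losses, [0.0, 0.0, 0.0], kinds))
    print("weighted, noisier second task:", weighted_joint_loss(losses, [0.0, 1.0, 0.0], kinds))
```

In a training loop the log-variances would be optimized together with the network weights; a larger log-variance shrinks that task's contribution, and the `0.5 * s` term keeps the variances from growing without bound.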

| Task | R² (unweighted loss) | MSE (unweighted loss) | MAE (unweighted loss) | R² (weighted loss) | MSE (weighted loss) | MAE (weighted loss) |
|---|---|---|---|---|---|---|
| Leaf number regression | 0.7171 | 0.8109 | 0.7238 | 0.7944 | 0.5892 | 0.5846 |
| Leaf area regression | 0.9703 | 0.4209 | 0.5465 | 0.9787 | 0.3029 | 0.4551 |
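
To read the table at a glance, it can help to express each metric's change from the unweighted to the weighted loss as a relative difference. The snippet below does this arithmetic on the values copied from the table; the helper name `relative_change` and the dictionary layout are hypothetical.

```python
# (unweighted, weighted) pairs copied from the table above.
results = {
    "leaf number": {"R2": (0.7171, 0.7944), "MSE": (0.8109, 0.5892), "MAE": (0.7238, 0.5846)},
    "leaf area": {"R2": (0.9703, 0.9787), "MSE": (0.4209, 0.3029), "MAE": (0.5465, 0.4551)},
}


def relative_change(before, after):
    """Signed change of `after` relative to `before` (negative means a decrease)."""
    return (after - before) / before


summary = {
    task: {metric: relative_change(*pair) for metric, pair in metrics.items()}
    for task, metrics in results.items()
}

if __name__ == "__main__":
    for task, metrics in summary.items():
        for metric, change in metrics.items():
            print(f"{task:12s} {metric:4s} {change:+.1%}")
```

For both regression tasks R² rises and the two error metrics fall under the weighted loss, with the larger relative gain in R² on leaf number regression.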


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yuan, P.; Xu, S.; Zhai, Z.; Xu, H. Phenotype Analysis of Arabidopsis thaliana Based on Optimized Multi-Task Learning. Mathematics 2023, 11, 3821. https://doi.org/10.3390/math11183821
