Abstract

Efficient exploration in multi-robot systems is significantly influenced by the initial start positions of the robots. This paper introduces the hybrid cheetah exploration technique with intelligent initial configuration (HCETIIC), a novel strategy explicitly designed to optimize exploration efficiency across varying initial start configurations: uniform distribution, centralized position, random positions, perimeter positions, clustered positions, and strategic positions. To establish the effectiveness of HCETIIC, we engage in a comparative analysis with four other prevalent hybrid methods in the domain. These methods amalgamate the principles of coordinated multi-robot exploration (CME) with different metaheuristic algorithms and have demonstrated compelling results in their respective studies. The performance comparison is based on essential measures such as runtime, the percentage of the explored area, and failure rate. The empirical results reveal that the proposed HCETIIC method consistently outperforms the compared strategies across different start positions, thereby emphasizing its considerable potential for enhancing efficiency in multi-robot exploration tasks across a wide range of real-world scenarios. This research underscores the critical, yet often overlooked, role of the initial robot configuration in multi-robot exploration, establishing a new direction for further improvements in this field.

Keywords:

robotics; multi-agent systems; exploration algorithms; pathfinding; cost function; hybrid techniques; optimization; performance metrics; simulation-based research; environmental mapping; cooperative exploration; dynamic environment mapping; distributed robotics; real-time simulation; advanced algorithmic solutions; metaheuristic approaches; scalable robot configurations

MSC:

68W20

1. Introduction

The last decade has witnessed an exponential rise in interest in multi-robot systems, driven by their potential to outperform single-robot systems in terms of efficiency, robustness, and resilience [1]. These systems are increasingly employed in a wide array of applications. Their capabilities are demonstrated in search and rescue missions, where multiple robots can cover larger areas faster than a single robot [2,3,4,5]. In surveillance tasks [6], multi-robot systems can monitor larger perimeters, ensuring enhanced security. Environmental monitoring and data collection also benefit from these systems as they can gather more diverse and comprehensive data [7,8,9]. Further, multi-robot systems are even used in space [10,11,12,13,14] and deep-sea explorations [15,16,17,18], reaching areas that are too dangerous or distant for human researchers [19,20,21].

A particularly challenging task in these scenarios involves effective exploration and mapping of unknown and potentially hazardous environments [22,23]. While the dynamic nature of exploration and mapping, such as simultaneous localization and mapping (SLAM) [24], plays a pivotal role in mobile robotics, our research specifically delves into the nuanced impact of initial configurations on exploration efficiency. Real-world scenarios, such as time-sensitive rescue missions or intricate surveillance tasks, often underscore the significance of these initial configurations. Recent research has introduced numerous strategies aimed at optimizing the process of multi-robot exploration. These techniques primarily employ hybrid methods, merging principles of coordinated multi-robot exploration (CME) [25,26] with different metaheuristic algorithms [27]. While these strategies employ diverse approaches, they share a common objective: to enhance real-time decision making, coordination, and task distribution among the robots involved in the exploration [28]. However, there remains a largely untapped potential in these exploration strategies—the strategic configuration of the initial start positions [29], a critical aspect that significantly influences the exploration’s efficiency and effectiveness.

Indeed, an often-overlooked aspect of multi-robot exploration is the role of the initial start positions. Conventional methods [30,31,32] typically employ a simplistic start position. While these methods offer the advantage of simplicity, they may not always yield optimal exploration outcomes, especially in complex or constrained environments [33,34]. The interplay between initial configurations and dynamic exploration is intricate. While dynamic exploration techniques adapt to evolving environments, the initial configuration can offer a strategic advantage. Especially in scenarios where the environment’s broad layout is known but specific details evolve, starting with an optimal configuration can expedite the exploration process. In response to this gap, we introduce the hybrid cheetah exploration technique with intelligent initial configuration (HCETIIC). This novel method combines the merits of the cheetah optimizer (CO) [35] with a unique strategy for determining the initial configuration of the robots. We propose that this hybrid approach can significantly enhance exploration efficiency and robustness, thereby pushing the boundaries of what multi-robot systems can achieve.

This paper details a comprehensive comparative analysis of the performance of the proposed hybrid cheetah exploration technique with intelligent initial configuration (HCETIIC) against four other prominent hybrid methods in the multi-robot exploration domain. While the exploration rate can be influenced by a myriad of factors such as robot capabilities, environment complexity, and communication mechanisms, our study delves into the specific impact of initial configurations. The performance is assessed across various initial start positions using three essential metrics: runtime, the percentage of the explored area, and failure rate. Our results highlight the robustness of HCETIIC in multi-robot exploration, demonstrating consistently superior performance across different start positions. These findings not only emphasize the transformative potential of HCETIIC in multi-robot exploration but also underscore the often underestimated significance of the initial robot configuration in exploration efficiency. As such, this study offers crucial insights and presents a new direction for both practitioners and researchers in the field of multi-robot exploration.

This paper is organized as follows: Section 2 discusses related works in the field of multi-robot exploration and start position selection. Section 3 elaborates on the proposed HCETIIC method. Section 4 presents our experimental design, results, and a comprehensive analysis. Section 5 concludes the paper with a discussion on our findings, their implications, and prospective future research directions.

2. Literature Review and Related Work

Multi-robot exploration, being at the nexus of robotics, optimization, and coordinated control, has witnessed significant advancements propelled by a myriad of research efforts. The domain’s intricacies necessitate a keen understanding of algorithms and methodologies, ensuring efficient exploration and mapping. The literature, as it stands, is replete with strategies ranging from metaheuristic algorithms inspired by nature to coordinated strategies designed for multi-robot orchestration. Additionally, recent studies have underscored the emergence of hybrid methods that meld the strengths of deterministic and metaheuristic algorithms, offering robust solutions. Yet, amid these advancements, the pivotal role of robots’ initial start positions remains an area in need of deeper insights. This section delves into the key contributions and overarching themes in multi-robot exploration, laying the foundation for our proposed HCETIIC approach.

2.1. Metaheuristic or Stochastic Algorithms

Metaheuristic algorithms, characterized by their stochastic nature, have established themselves as a compelling alternative to conventional deterministic optimization techniques, especially in the face of large-scale, intricate problems often marked by nonlinearity, multimodality, or the profusion of local optima [36]. These algorithms, typically inspired by natural phenomena or biological processes, adeptly balance exploration and exploitation mechanisms to approach near-optimal solutions [37].

Within the realm of multi-robot exploration, numerous metaheuristic algorithms have been used to considerable effect, contributing significantly to advancements in real-time decision making, exploration efficiency, and task allocation.

Renowned as one of the earliest metaheuristics, the genetic algorithm (GA) draws its inspiration from Darwin’s theory of natural evolution [38]. Owing to its inherent capacity for efficient search and optimization, GA has found extensive applications in multi-robot systems, contributing to progress in areas such as task allocation, path planning, and formation control.

The particle swarm optimization (PSO) algorithm [39], another prominent metaheuristic, mirrors the social behavior exhibited in bird flocking or fish schooling. Its application in multi-robot systems is widely documented [40], with its simplicity, efficiency, and broad applicability receiving particular acclaim. Further, adaptations of PSO such as quantum-behaved PSO and binary PSO have amplified its applicability [41].

Taking a leaf out of the book of ants’ foraging behavior, the ant colony optimization (ACO) [42] has carved out a place for itself in multi-robot path planning and task allocation. Its resilience against local optima and dynamic environmental changes has been particularly lauded.

In recent times, bio-inspired algorithms that mimic the behavior of various animal species have gained widespread attention. For instance, the grey wolf optimizer (GWO) [43], which replicates the leadership hierarchy and hunting behavior of grey wolves, and the salp swarm algorithm (SSA) [44], modeled after the swarming behavior of salps in oceans, have found significant utility. The mountain gazelle optimizer (MGO) [45], simulating the vigilance and evasion behaviors of mountain gazelles, and the African vultures optimization algorithm (AVOA) [46], inspired by the opportunistic foraging and flight patterns of African vultures, have added to this burgeoning collection of bio-inspired algorithms.

Among this wealth of nature-inspired strategies, the cheetah optimizer (CO) [35] stands out due to its unique traits. Inspired by the hunting tactics of cheetahs, the CO seamlessly integrates modes of searching, sitting-and-waiting, and attacking, mirroring the natural behavior of its feline counterpart. Furthermore, its distinct ‘leave the prey and go back home’ strategy enhances population diversification, convergence performance, and algorithmic robustness. Empirical evaluations using multiple benchmark functions and intricate optimization challenges underscore the CO’s superiority over both conventional and improved algorithms. Its inherent capability to address large-scale and challenging optimization problems makes it particularly suitable for optimizing initial configurations in multi-robot exploration. This rationale drives the integration of the CO into our proposed HCETIIC approach, with a specific focus on addressing the significant gap in the literature concerning optimal robot starting positions.

2.2. Challenges of Reactive Control and the Role of Set-Based Approaches in Robotic Exploration and Trajectory Planning

Traditionally, trajectory planning in multi-robot systems often combines individual robot trajectory techniques with reactive algorithms for collision handling. However, this approach poses challenges, especially in robotic systems with limited computational capabilities. Set-based approaches are gaining traction in trajectory planning for networked mobile robots. These methods, leveraging set mathematics, ensure that trajectories adhere to robot dynamics, constraints, and environmental factors [47]. They offer a robust solution against uncertainties, which is particularly vital in scenarios with computational limitations. In contrast, reactive control strategies, while providing immediate environmental responses, can be computationally demanding, leading to decision-making delays in intricate multi-robot environments [48].

To address these challenges, recent algorithms ensure feasible trajectories under specific initial conditions [49]. These are pivotal in multi-robot systems, emphasizing collision prevention and coordination. In the evolving landscape of multi-robot exploration, set-based approaches stand out, underscoring the need for efficient trajectory planning methods in computationally constrained scenarios.

2.3. Coordinated Multi-Robot Explorations

Coordinated multi-robot exploration (CME) pertains to the strategic orchestration of multiple robots to optimize the exploration and mapping of unfamiliar or potentially hazardous environments [50,51]. The field of CME, characterized by its extensive variety of strategies, encompasses an array of approaches, each distinguished by its unique benefits and potential areas of development.

Frontier-based exploration [52,53,54] constitutes a seminal approach within CME research. It serves as a cornerstone for many exploratory strategies, with its core principle revolving around directing robots towards “frontiers”, or the boundaries between explored and unexplored regions. This method ensures systematic coverage of the entire space, and its simplicity and efficacy are widely acknowledged. However, its performance might be compromised in more complex environments or scenarios where aspects like energy efficiency are paramount.
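As a minimal illustration of the frontier concept, the sketch below marks every known-free cell that borders at least one unknown cell. The cell labels (`UNKNOWN`, `FREE`, `OCCUPIED`), the 4-neighborhood, and the function name `find_frontiers` are assumptions made for this example and are not taken from the cited works.

```python
from typing import List, Tuple

# Hypothetical cell labels for a small occupancy grid.
UNKNOWN, FREE, OCCUPIED = -1, 0, 1


def find_frontiers(grid: List[List[int]]) -> List[Tuple[int, int]]:
    """Return (row, col) of every free cell with at least one unknown 4-neighbor."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers


# Right-hand column is mostly unexplored; the center cell is an obstacle.
grid = [
    [FREE, FREE, UNKNOWN],
    [FREE, OCCUPIED, UNKNOWN],
    [FREE, FREE, FREE],
]
frontier_cells = find_frontiers(grid)
```

In this toy map, the two free cells adjacent to the unexplored column are the ones a frontier-based explorer would steer robots towards.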

Drawing inspiration from economics, market-based approaches [55] have gained considerable traction in CME. These techniques model exploration tasks as ‘goods’ and the robots as ‘bidders’, where robots bid for tasks based on their cost evaluations. Market-based methods have exhibited improvements in task distribution and adaptability to dynamic environments. However, they often come with the caveat of requiring substantial computational resources and complex negotiation processes.
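The bidding idea can be sketched as a greedy sequential auction. This is a deliberately simplified illustration: the straight-line distance used as the bid, the one-task-per-robot rule, and the names `auction`, `robots`, and `tasks` are assumptions for this example, not the negotiation protocol of any cited market-based method.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def auction(robots: Dict[str, Point], tasks: Dict[str, Point]) -> Dict[str, str]:
    """Greedy sequential auction: each task goes to the cheapest robot still free."""
    assignment: Dict[str, str] = {}
    free = dict(robots)
    for task, target in tasks.items():
        if not free:
            break
        # Each free robot bids its straight-line travel cost to the task.
        bids = {name: math.dist(pos, target) for name, pos in free.items()}
        winner = min(bids, key=bids.get)
        assignment[task] = winner
        del free[winner]
    return assignment


robots = {"r1": (0.0, 0.0), "r2": (10.0, 0.0)}
tasks = {"frontier_a": (9.0, 1.0), "frontier_b": (1.0, 1.0)}
result = auction(robots, tasks)
```

Even this small version shows where the overhead arises: every task requires a bid from every available robot, which grows quickly with team and map size.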

The advancement of machine learning (ML) and artificial intelligence (AI) technologies has ushered in distributed reinforcement learning techniques in CME [56]. Through these methods, a team of robots can learn optimal exploration strategies via interactions with their environment, potentially enhancing their adaptability and robustness over time. Yet, these techniques often require extensive training periods and may suffer from the ‘curse of dimensionality’ when dealing with larger, more intricate environments.

Despite the impressive array of strategies available within CME, a conspicuous area of improvement is the incorporation of the initial robot configuration into the exploration strategies. This aspect, which is currently under-represented, carries significant potential for optimization. By paying close attention to the starting positions of the robots, we can pave the way for novel and more effective CME techniques, opening the door to more comprehensive exploration strategies, such as our proposed hybrid cheetah exploration technique with intelligent initial configuration (HCETIIC).

2.4. Hybrid Methods

In the field of multi-robot exploration, hybrid methods have been developed that blend deterministic coordinated multi-robot exploration (CME) [51] with a metaheuristic algorithm. This fusion aims to leverage the advantages of deterministic strategies and metaheuristic approaches, producing a more robust and effective exploration strategy.

One noteworthy hybrid method utilizes the coordinated multi-robot exploration and grey wolf optimizer (CME-GWO) algorithms. Albina and Lee [57] proposed this method, arguing that it provides a stochastic optimization technique for multi-robot exploration. Their work suggested that such hybrid models are capable of outperforming conventional deterministic techniques, effectively utilizing the metaheuristic algorithm to improve exploration outcomes. The method deploys a two-step exploration strategy: initially, it uses a deterministic approach to evaluate the environment and then employs a metaheuristic algorithm for robot movement.

Similarly, a study by Gul et al. [58] presented a novel framework that integrated the deterministic CME technique with the metaheuristic frequency-modified whale optimization algorithm (FMH-WOA). Their hybrid method mimicked the predatory behavior of whales in exploration tasks. The efficacy of the FMH-WOA was corroborated by testing the framework in various complex environmental conditions and comparing its performance with other optimization techniques.

Furthermore, reinforcement learning has also been integrated with multi-robot exploration methods. Mete, Mouhoub, and Farid [59] devised a multi-agent reinforcement learning (MARL)-based framework to manage the challenges of multi-UAV exploration in unfamiliar, unstructured, and cluttered environments. Their approach highlights the capability of reinforcement learning techniques to improve temporal planning and inter-agent coordination, contributing to more efficient exploration strategies.

More recently, Romeh and Mirjalili [60] presented a hybrid method that combines the deterministic coordinated multi-robot exploration (CME) with the metaheuristic salp swarm algorithm (SSA), resulting in the CME-SSA method. The SSA is an optimization technique that mimics the swarming behavior of salps, marine animals known for their efficient foraging tactics. In CME-SSA, the deterministic CME initially takes care of the cost and utility values on the grid map. Then, the SSA comes into play to efficiently select the next move of each robot and improve the overall solution. Notably, the CME-SSA method outperformed several other methods in terms of exploration rate, time efficiency, and obstacle avoidance.

Finally, Romeh, Mirjalili, and Gul [61] proposed the hybrid vulture-coordinated multi-robot exploration (HVCME), a novel hybrid method combining the African vultures optimization algorithm (AVOA) with CME. The AVOA, inspired by the intelligent foraging behavior of vultures, uses a deterministic approach to locate potential targets, and then leverages metaheuristic principles to optimize exploration. Experimental results demonstrated that the HVCME approach surpassed other comparable algorithms in terms of exploration coverage, time efficiency, and robustness.

Despite these advances, limitations persist in multi-robot exploration strategies. For instance, achieving complete exploration in complex environments remains a significant challenge. Additionally, the starting position of the robots plays a significant role in efficient exploration: different starting positions can markedly affect the time efficiency and coverage rate of the exploration. The cited work, which emphasizes the importance of robot exploration and its initial configurations, serves as a foundational reference for our research. While it delves into both mapping and path generation, our study narrows down to the initial configurations, elucidating their impact on the exploration efficiency. Recognizing this, our proposed approach, the hybrid cheetah exploration technique with intelligent initial configuration (HCETIIC), builds upon the strengths of these hybrid techniques while strategically incorporating the initial robot configuration, thus paving the way for a more holistic and effective exploration strategy.

2.5. Start Positions

A relatively less explored area in the literature is the role of start positions in multi-robot exploration. A few studies have experimented with different start position assignments [62], such as uniform distribution, centralized position, random positions, perimeter positions, and clustered positions [63,64,65]. While these methods offer the advantage of simplicity, their performance in delivering optimal exploration outcomes, especially in complex environments, is often suboptimal [66,67]. This has underscored the need for a more intelligent strategy for determining the initial configuration of the robots.
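To make the conventional start-position assignments concrete, the following sketch generates five of them on a square map. The function name `start_positions`, the 50 m default side length, the cluster size, and the seeding scheme are assumptions chosen for illustration; the optimized "strategic" configuration is intentionally not covered here.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float]


def start_positions(kind: str, n: int, size: float = 50.0, seed: int = 0) -> List[Point]:
    """Return n start positions on a size x size map for a named configuration."""
    rng = random.Random(seed)
    if kind == "uniform":
        # Robots sit on the centers of a near-square grid of equal cells.
        side = math.ceil(math.sqrt(n))
        step = size / side
        cells = [((c + 0.5) * step, (r + 0.5) * step)
                 for r in range(side) for c in range(side)]
        return cells[:n]
    if kind == "centralized":
        return [(size / 2, size / 2)] * n
    if kind == "random":
        return [(rng.uniform(0, size), rng.uniform(0, size)) for _ in range(n)]
    if kind == "perimeter":
        # Spread robots evenly along the boundary, walked as one closed loop.
        points = []
        for i in range(n):
            edge, offset = divmod(4 * size * i / n, size)
            corners = [(offset, 0.0), (size, offset),
                       (size - offset, size), (0.0, size - offset)]
            points.append(corners[int(edge)])
        return points
    if kind == "clustered":
        # All robots fall inside a small square around one random center.
        half = 0.05 * size
        cx, cy = rng.uniform(half, size - half), rng.uniform(half, size - half)
        return [(cx + rng.uniform(-half, half), cy + rng.uniform(-half, half))
                for _ in range(n)]
    raise ValueError(f"unknown configuration: {kind}")


uniform_four = start_positions("uniform", 4)
perimeter_four = start_positions("perimeter", 4)
```

None of these generators looks at the map itself, which is why such assignments can perform poorly when obstacles or narrow passages sit near the chosen positions.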

Recognizing the shortcomings of traditional start position methods, there emerges a need for a robust and adaptive technique that optimizes initial robot configurations. Our proposed HCETIIC addresses this by leveraging the cheetah optimizer’s capabilities, aiming to provide an intelligent strategy that ensures effective exploration outcomes right from the start.

In summary, this literature review reveals a gap in existing methods for multi-robot exploration: while significant progress has been made in optimizing real-time decision making, coordination, and task distribution, the role of initial start positions remains relatively unexplored. This has motivated the proposed hybrid cheetah exploration technique with intelligent initial configuration (HCETIIC), which aims to fill this gap by combining an advanced optimization algorithm, the cheetah optimizer, with a unique strategy for initial configuration selection.

3. Problem Formulation and Proposed Method

The domain of multi-robot exploration has witnessed several methodologies that, despite their significant contributions, have faced certain limitations. These shortcomings encompass inefficiencies in exploration, failure to achieve complete exploration, the challenge of building an optimal finite map, and the predisposition to be trapped in local optima. To address these challenges, we propose an advanced hybrid approach that marries coordinated multi-robot exploration (CME) and the cheetah optimizer (CO) to optimize the exploration process. This method, designated as the hybrid cheetah exploration technique with intelligent initial configuration (HCETIIC), aspires to augment the accuracy and efficacy of multi-robot exploration across diverse contexts.

3.1. Deterministic CME

The process of multi-robot exploration employs several mobile robots to survey an environment, transitioning from a state of complete ignorance to a comprehensive map creation. Centralized and decentralized explorations are the two primary modes utilized for this purpose.

In the centralized exploration mode, a shared map accessible to all robots is used, allowing real-time monitoring of each robot’s progress. This technique promotes efficient exploration by enhancing inter-robot communication. On the other hand, decentralized exploration is based on individual map construction by each robot, with data exchange facilitated only when robots’ paths intersect. While this reduces coordination complexity, it could result in a less effective exploration due to the limited information exchange among robots.

In the context of this study, we employ the centralized exploration approach due to its potential to foster better coordination and inter-robot communication. HCETIIC capitalizes on this aspect by continuously updating utility values and real-time travel costs for each robot, thereby optimizing the exploration process.

Under CME, the environment’s representation is undertaken using an occupancy grid map. The robot, initially placed in an indoor setting devoid of any knowledge about its surroundings, is equipped with a sensor with a limited coverage range. The sensor identifies frontier cells essential for finite map construction in an unknown space.

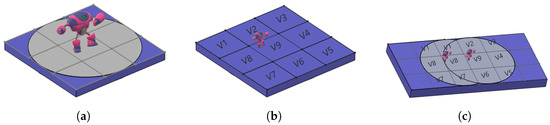

The occupancy grid map houses numerical values indicating the probability of encountering an obstacle in each grid cell, in addition to the utility and travel cost for each cell. Owing to the sensor’s limited coverage, only a few cells surrounding the robot are included in the occupancy grid map. An illustrative representation of the sensor’s view on the occupancy grid map is provided in Figure 1.

Figure 1. The sensor’s limited range within grid cells. (a) Confined sensor range (V1 to V8) encircling the robot. (b) Eight cells surrounding the robot, with cell 9 denoting the robot’s position. (c) Robot moving from right to left; sensor range does not cover cost values of V3, V4, and V5.

3.1.1. Establishment of the Cost Function

Determining the most efficient path from a robot’s present location to all frontier cells is a critical step in our proposed HCETIIC method. This path-finding task relies on a deterministic version of a cost function that integrates various factors, including occupancy grid probability, sensor view range, and the straight-line distance between points.

The initial cost function is formulated as in Equation (1). It accounts for grid occupancy probability, sensor range, and Euclidean distance. If a cell has been previously explored, the cost from the previous step for that cell is added to the cost of the current position. A cell identified as a frontier cell, however, does not bear any backward costs from the earlier ray traces that initially opened it (as seen in Equation (3)).

In the three-dimensional occupancy grid map, a cell is indexed by (i, j, k), denoting the ith cell along the x-axis, the jth cell along the y-axis, and the kth cell along the z-axis. However, since the utility and cost values are stored on the i–j plane of the 3D occupancy grid map, k is set to zero. The grid map provides a representation of the environment that the robot is navigating.

The cost for traversing a cell is inversely related to its occupancy probability value. The algorithm conducts two steps (outlined in Equations (1) and (2)) to ascertain the least-cost path.

Initialization: the grid cell containing the robot is assigned a cost of zero, and every other cell is assigned an infinite cost.

Update: the algorithm then performs an iterative process to update the status of each grid cell (i, j) from the costs of its neighboring cells, where the neighbor offsets along each axis take values in {−1, 0, 1} and only cells whose occupancy probability does not exceed the maximum occupancy probability are considered.

Choosing the next position for the robot is based on identifying the least cost from the neighboring cells. In the context of multi-robot systems, achieving effective exploration necessitates an organized collective effort, contrasting with single-robot systems that primarily need a low-cost search for localization. The CME approach incorporated in our HCETIIC method allows for efficient task distribution among multiple robots.
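The two-step cost computation can be sketched as a shortest-path propagation over the grid. The code below is an illustration under stated assumptions rather than the authors' implementation: the step cost is taken as the Euclidean step length multiplied by a per-cell traversal weight, how that weight is derived from the occupancy probability is left to the caller, and the names `travel_costs`, `weight`, and `occ_max` are hypothetical. With non-negative weights, a priority queue reaches the same fixed point as repeating the neighbor update until no cost changes.

```python
import heapq
import math
from typing import List, Tuple


def travel_costs(weight: List[List[float]], occupancy: List[List[float]],
                 start: Tuple[int, int], occ_max: float = 0.5) -> List[List[float]]:
    """Least travel cost from `start` to every cell of an 8-connected grid."""
    rows, cols = len(weight), len(weight[0])
    # Initialization: zero at the robot's cell, infinity everywhere else.
    cost = [[math.inf] * cols for _ in range(rows)]
    cost[start[0]][start[1]] = 0.0
    heap = [(0.0, start)]
    while heap:
        c, (i, j) = heapq.heappop(heap)
        if c > cost[i][j]:
            continue  # stale queue entry
        for di in (-1, 0, 1):
            for dj in (-1, 0, 1):
                ni, nj = i + di, j + dj
                if (di == 0 and dj == 0) or not (0 <= ni < rows and 0 <= nj < cols):
                    continue
                if occupancy[ni][nj] > occ_max:
                    continue  # too likely to be an obstacle to traverse
                # Assumed step cost: Euclidean step length times the cell weight.
                new_cost = c + math.hypot(di, dj) * weight[ni][nj]
                if new_cost < cost[ni][nj]:
                    cost[ni][nj] = new_cost
                    heapq.heappush(heap, (new_cost, (ni, nj)))
    return cost


weight = [[1.0] * 3 for _ in range(3)]
occupancy = [[0.0] * 3 for _ in range(3)]
occupancy[1][1] = 1.0  # obstacle in the center
costs = travel_costs(weight, occupancy, (0, 0))
```

In the 3 × 3 example, the blocked center cell keeps an infinite cost, and the far corner is reached by going around it, so the robot's next position can be read off as the neighbor with the least cost.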

3.1.2. Valuation of Utility

This section introduces the concept of the utility value, integral to the HCETIIC method. The utility value gauges whether a cell in the grid map has been explored. At the outset, all grid cells are assigned identical utility values, which are later modified as portrayed in Equation (4). As robots navigate towards new positions, the utility values of frontier cells decline. This prompts robots to give precedence to the exploration of new positions by gravitating towards grid cells with heightened utility values.

Each grid cell’s cost is influenced by its relative distance from the robot. Notably, a frontier cell’s utility is shaped both by its immediate surroundings and the number of robots advancing towards it. In pursuit of optimal utility values, robots proactively search for previously uncharted locations. This strategy uncovers new data and broadens environmental understanding, underpinning efficient exploration, as detailed in Equation (4).

In Equation (4), the current utility value of a cell signifies its importance in the exploration and is computed from the utility of the same cell in the preceding exploration phase together with the occupancy probability of the current cell. The summand captures the difference in the perceived environment’s occupancy probability from the robot’s perspective at two distinct time instances. To refine the exploration, the cell with the highest utility at iteration t is pinpointed using Equation (5), which encompasses both the occupancy probability and the cell’s preceding utility value.
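The interplay between utility and travel cost can be illustrated with a simplified target-assignment loop in the spirit of the general CME scheme. It is not a transcription of Equations (4) and (5): the linear utility discount within sensor range, the trade-off factor `beta`, the sequential robot order, and the name `assign_targets` are all assumptions made for this sketch.

```python
import math
from typing import Dict, List, Tuple

Cell = Tuple[int, int]


def assign_targets(costs: Dict[str, Dict[Cell, float]], frontiers: List[Cell],
                   sensor_range: float = 3.0, beta: float = 1.0) -> Dict[str, Cell]:
    """Give each robot the frontier with the best utility-minus-cost trade-off."""
    utility = {f: 1.0 for f in frontiers}  # identical utilities at the outset
    targets: Dict[str, Cell] = {}
    for robot, cost in costs.items():
        best = max(frontiers, key=lambda f: utility[f] - beta * cost[f])
        targets[robot] = best
        # Frontiers likely to be observed from the chosen target lose utility,
        # which pushes the remaining robots towards other regions.
        for f in frontiers:
            d = math.dist(f, best)
            if d < sensor_range:
                utility[f] -= 1.0 - d / sensor_range
    return targets


frontiers = [(0, 0), (0, 1), (10, 10)]
same_costs = {(0, 0): 1.0, (0, 1): 1.2, (10, 10): 5.0}
targets = assign_targets({"r1": dict(same_costs), "r2": dict(same_costs)},
                         frontiers, beta=0.1)
```

Although both robots see identical travel costs here, the second robot is sent to the distant frontier because the first robot's choice has already reduced the utility of the nearby cells.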

To facilitate effective cooperative exploration, it is essential for robots to start in close proximity to each other, allowing their sensor ranges to overlap. This initial positioning strategy allows the robots to radiate out in diverse directions, advancing towards various target locations and leading to a reduction in utility values. The testing maps were constrained to a 50 m × 50 m dimension with limited sensor ray lengths. The explored area is represented in blue, while dark gray regions signify obstacles.

3.2. The Cheetah Optimizer (CO)

The cheetah optimizer (CO) [35] stands out among metaheuristic algorithms for its unique approach inspired directly by the hunting behavior of cheetahs in their natural habitat. The unparalleled speed, stealth, and agility exhibited by cheetahs during a hunt have found an algorithmic counterpart in CO. These animals have a systematic approach to hunting that involves a mix of patience, swift attack, and periods of rest and observance. By dissecting the cheetah’s behavior, researchers have encapsulated its essence into different phases within the CO algorithm, each mirroring a specific aspect of the cheetah’s hunting strategy.

3.2.1. Social Behavior

Cheetahs in the wild have a unique approach to hunting. Their process includes a patient search, a swift attack, and a return to lower speed after capturing their prey. This hunting cycle is directly reflected in the CO algorithm’s structure, which involves a similar iterative process of searching, attacking, and resting.

Initially, cheetahs move slowly and stealthily towards their prey, trying to remain hidden. This behavior is translated into the ‘search’ phase of the CO algorithm, where the algorithm explores the solution space, slowly approaching the optimal solution.

Then, at the right moment, cheetahs launch a rapid attack to capture their prey. This is reflected in the ‘attack’ phase of the CO algorithm, where the algorithm makes a significant leap towards the optimal solution in the solution space.

Post-attack, cheetahs significantly reduce their speed and remain observant of their surroundings. This is translated into the ‘rest’ phase of the CO algorithm, where the algorithm slows down the exploration, allowing for local search around the current best solution.

3.2.2. Mathematical Model

The mathematical modeling of the cheetah optimizer (CO) [35] consists of three central hunting strategies represented by distinct equations. Each equation consists of specific parameters that are updated at each iteration to steer the search agents towards the optimal solution.

The search phase is modeled by the equation

$$X_{i,j}^{t+1} = X_{i,j}^{t} + \hat{r}_{i,j}^{-1} \cdot \alpha_{i,j}^{t}$$

where $\hat{r}_{i,j}^{-1}$ is a random parameter that prevents the premature convergence of the algorithm and adds diversity in the searching strategy, symbolizing the unpredictable movement of a cheetah during the search, and $\alpha_{i,j}^{t}$ represents the step length taken by the ith cheetah in the jth dimension at the tth iteration during the searching phase. It is a random parameter that changes at each iteration to replicate the varying pace of a cheetah’s approach towards its prey.

The rest (sit-and-wait) phase is represented by

$$X_{i,j}^{t+1} = X_{i,j}^{t}$$

where $X_{i,j}^{t}$ is the current position of the ith cheetah in the jth dimension at the tth iteration and $X_{i,j}^{t+1}$ is the updated position of the ith cheetah in the jth dimension for the next iteration. In the sit-and-wait strategy, the updated position remains the same as the current position, reflecting the cheetah’s waiting phase.

The attack phase is represented by

$$X_{i,j}^{t+1} = X_{B,j}^{t} + \check{r}_{i,j} \cdot \beta_{i,j}^{t}$$

where $X_{B,j}^{t}$ is the current position of the prey (the best solution found so far) in the jth dimension, $\check{r}_{i,j}$ is another random parameter that represents the variability in a cheetah’s direction during an attack, and $\beta_{i,j}^{t}$ symbolizes the step length taken by the ith cheetah in the jth dimension at the tth iteration during the attack. It is another random variable that alters at each iteration, mimicking the unpredictable, swift movement of a cheetah during its attack on the prey.

Moreover, there are several other parameters in the algorithm that need to be defined and adjusted as per the problem’s needs:

$r_2$ and $r_3$: These are uniformly distributed random numbers from [0, 1] which determine the choice of hunting strategy. As the iterations progress and the cheetah’s energy level decreases, the searching strategy becomes more likely.

H: This parameter is calculated based on $r_1$, another uniformly distributed random number from [0, 1], and t (the current iteration number). It assists in switching between searching and attacking strategies. Higher values of H increase the chance of the attacking strategy being chosen.

$r_4$: This is a random number between 0 and 3, which influences the selection between the attacking and searching strategies. Higher values lead to a more focused search (exploitation), whereas lower values encourage exploration.

These parameters, through their incorporation in the algorithm’s equations, allow the CO to efficiently balance exploration and exploitation in a way that mirrors the intelligent hunting behavior of cheetahs. They contribute to the algorithm’s strength and adaptability in dealing with complex optimization problems.
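As a rough illustration of how these random numbers interact, the following Python sketch selects a hunting strategy for one cheetah in one dimension. The formula for H is not reproduced in the text above, so the sketch assumes the form H = e^{2(1 − t/T)}(2r_1 − 1), which we believe matches the original CO publication [35]. The function name `choose_strategy`, the parameter `max_iter` (standing for T), and the rule that the cheetah rests whenever `r2 > r3` are likewise assumptions made for illustration, not a definitive implementation.

```python
import math
import random


def choose_strategy(t, max_iter, rng=random):
    """Pick 'search', 'attack', or 'rest' for one cheetah at iteration t.

    Assumed rule: rest when r2 > r3; otherwise attack when H >= r4, else search.
    """
    r1, r2, r3 = rng.random(), rng.random(), rng.random()
    if r2 > r3:
        return "rest"  # sit-and-wait: the position is left unchanged
    # Assumed form of H: its envelope shrinks as t approaches max_iter.
    h = math.exp(2.0 * (1.0 - t / max_iter)) * (2.0 * r1 - 1.0)
    r4 = rng.uniform(0.0, 3.0)
    return "attack" if h >= r4 else "search"
```

Under these assumptions the envelope of H shrinks as t grows, so attacks become rarer and searching becomes more frequent in later iterations.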

3.2.3. Hypotheses

The CO algorithm is predicated on several hypotheses that attempt to mimic the hunting behavior of cheetahs:

- The cheetah population is considered as a group of agents, each representing a solution. Their performance is evaluated through a fitness function, mirroring the hunting success of a cheetah.

- The algorithm acknowledges that cheetah behaviors during hunting can vary. To prevent premature convergence, random parameters are introduced to depict the cheetah’s energy and create diversity in their behaviors.

- The hunting strategies of cheetahs are modeled as random. The algorithm uses random parameters and turning factors to capture the sudden change in direction during hunting.

- The choice between hunting strategies (searching or attacking) depends on a set of random numbers and evolves over time. This approach embodies the dynamic nature of the cheetah’s behavior and conserves the hunting energy of cheetahs in the algorithm.

- The sit-and-wait strategy, a significant component of cheetah hunting, is incorporated into the algorithm, indicating a period of no movement.

- The algorithm is designed to encourage exploration when the leader (the best solution) fails to improve after several iterations. It achieves this by shifting the position of a randomly selected cheetah to the last successful hunting spot.

- The CO algorithm introduces a strategy to avoid local optima. If a group of cheetahs cannot find better solutions within a certain number of iterations, they return to the initial position (home), rest, and start a new hunting process.

- At each iteration, only a part of the cheetah population participates in the evolution process, reflecting the real-world behavior of cheetah groups where not all members are involved in every hunt.

3.2.4. Pseudo-Code

The pseudo-code for the CO algorithm encapsulates the aforementioned behaviors and their respective mathematical models:

- 1. Define problem parameters (dimension, initial population size).
- 2. Generate initial population of cheetahs (solutions) and evaluate their fitness.
- 3. Initialize home, leader, and prey solutions.
- 4. For each iteration until the maximum number of iterations:
  - (a) Select a subset of cheetahs.
  - (b) For each selected cheetah:
    - i. Define the neighbor agent.
    - ii. For each dimension:
      - A. Calculate parameters $\hat{r}$, $\check{r}$, $\alpha$, $\beta$, and $H$.
      - B. Based on random numbers, decide if it is a search, attack, or rest phase.
      - C. Calculate new position of cheetah in the dimension using respective equations.
    - iii. Update the solutions of the cheetah and the leader.
    - iv. If certain conditions are met, implement the ‘leave the prey and go back home’ strategy, changing the leader’s position and substituting the cheetah’s position by the prey’s position.
  - (c) Update the global best (prey) solution.
- 5. Repeat from step 4 until the maximum number of iterations is reached.

This computational model provides an efficient and effective approach to finding optimal solutions in the solution space, making the CO a valuable tool in the field of optimization.
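The steps above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the reference implementation: the function name `cheetah_optimizer`, the step length `0.001 * t / max_iter` scaled by the search range, the turning-factor expression, the form of H, and the use of the neighbor distance as the attack step are all assumed values modeled on our reading of the original CO publication [35]. The ‘leave the prey and go back home’ step (4(b)iv) is omitted for brevity, and the problem is treated as minimization.

```python
import math
import random


def cheetah_optimizer(fitness, dim, lower, upper, pop_size=6, max_iter=200, seed=0):
    """Minimize `fitness` over [lower, upper]^dim with a simplified CO loop."""
    rng = random.Random(seed)
    # Steps 1-2: random initial population and its fitness.
    pop = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(pop_size)]
    fit = [fitness(x) for x in pop]
    # Step 3: the prey is the best solution found so far.
    best = min(range(pop_size), key=fit.__getitem__)
    prey, prey_fit = pop[best][:], fit[best]
    history = [prey_fit]

    for t in range(1, max_iter + 1):  # Step 4
        # 4(a): only part of the population takes part in each hunt.
        members = rng.sample(range(pop_size), max(2, pop_size // 2))
        for i in members:  # 4(b)
            k = rng.choice([m for m in members if m != i])  # 4(b)i: neighbor
            new = pop[i][:]
            for j in range(dim):  # 4(b)ii
                r1, r2, r3 = rng.random(), rng.random(), rng.random()
                if r2 > r3:
                    continue  # rest: keep the current coordinate
                h = math.exp(2 * (1 - t / max_iter)) * (2 * r1 - 1)  # assumed H
                if h >= rng.uniform(0, 3):
                    # Attack: move relative to the prey with a turning factor.
                    r = rng.gauss(0, 1)
                    turn = abs(r) ** math.exp(r / 2) * math.sin(2 * math.pi * r)
                    new[j] = prey[j] + turn * (pop[k][j] - pop[i][j])
                else:
                    # Search: small random step around the current position.
                    r_hat = rng.gauss(0, 1) or 1e-12  # guard against exact zero
                    alpha = 0.001 * t / max_iter * (upper - lower)
                    new[j] = pop[i][j] + alpha / r_hat
                new[j] = min(max(new[j], lower), upper)  # stay inside the bounds
            new_fit = fitness(new)
            if new_fit < fit[i]:  # 4(b)iii: keep the move only if it improves
                pop[i], fit[i] = new, new_fit
            if new_fit < prey_fit:  # 4(c): update the global best (prey)
                prey, prey_fit = new[:], new_fit
        history.append(prey_fit)
    return prey, prey_fit, history
```

The function returns the best position, its fitness, and the best fitness after each iteration, which makes it easy to check that the prey solution never deteriorates.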

3.3. Hybrid Cheetah Exploration Technique with Intelligent Initial Configuration (HCETIIC)

The proposed HCETIIC method incorporates heuristic principles of the cheetah optimization algorithm (CO) into the coordinated multi-robot exploration (CME) strategy. The fundamental idea is to exploit the high-speed and precise hunting technique of cheetahs to optimize the multi-robot system’s exploration process in unknown environments. The method starts with the initialization of grid maps and follows with the determination of the next optimal moves based on the probabilistic method.

The grid map is initially set up, assigning a utility value of 1 to all cells. The exploration is limited by the sensor’s range; thus, eight candidate cells around the robot, covered by the sensor, form the “cheetahs”. These cheetahs (Ch), represented by $Ch_1$ to $Ch_8$, are candidates for the robot’s subsequent move.

Next, we assign the cost to each candidate cell and subtract the utilities from the cost of the surrounding eight grid cells using the formula

$$X_{i,j}^{t+1} = P(occ_{x,y})^{t} + \hat{r}_{i,j}^{-1} \cdot \alpha_{i,j}^{t}$$

where $X_{i,j}^{t+1}$ denotes the next position of the cheetah on the grid at time $t+1$, $P(occ_{x,y})^{t}$ signifies the probability of occupancy at position $(x, y)$ at time t, $\hat{r}_{i,j}^{-1}$ is the randomization parameter for cheetah i in arrangement j, and $\alpha_{i,j}^{t}$ represents the step length for cheetah i in arrangement j at time t.

The step length $\alpha_{i,j}^{t}$ is typically set to a small value, as cheetahs are slow-walking searchers. This value can also be adjusted based on the distance between cheetah i and its neighbor or leader.

The formula for updating the position with the second random number is

Here, $\check{r}_{i,j}$ is the turning factor, and $\beta_{i,j}^{t}$ is the interaction factor associated with cheetah i in arrangement j. The turning factor reflects the sharp turns of the cheetahs in the capturing mode and is a random number.

After calculating the new positions using these equations, the HCETIIC method identifies the three highest utility values as the three best cheetahs. The priority among these is determined based on their updated positions and their alpha and beta values.
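The candidate generation and selection described above can be illustrated with a short Python sketch. It only covers the eight-neighbor candidate set and the choice of the three highest-utility cells; the cost subtraction and the tie-breaking by alpha and beta values are not modeled. The function names `candidate_cells` and `three_best`, and the representation of the utility map as a list of lists, are assumptions made for illustration.

```python
def candidate_cells(pos, rows, cols):
    """Return the in-bounds eight-neighborhood of pos = (row, col)."""
    r, c = pos
    return [
        (r + dr, c + dc)
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0) and 0 <= r + dr < rows and 0 <= c + dc < cols
    ]


def three_best(pos, utility):
    """Return up to three candidate cells with the highest utility values."""
    rows, cols = len(utility), len(utility[0])
    cells = candidate_cells(pos, rows, cols)
    # Sort candidates by utility, highest first, and keep the top three.
    ranked = sorted(cells, key=lambda rc: utility[rc[0]][rc[1]], reverse=True)
    return ranked[:3]
```

A robot in a corner of the map has only three in-bounds candidates, so `three_best` may return fewer distinct options there than in open space.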

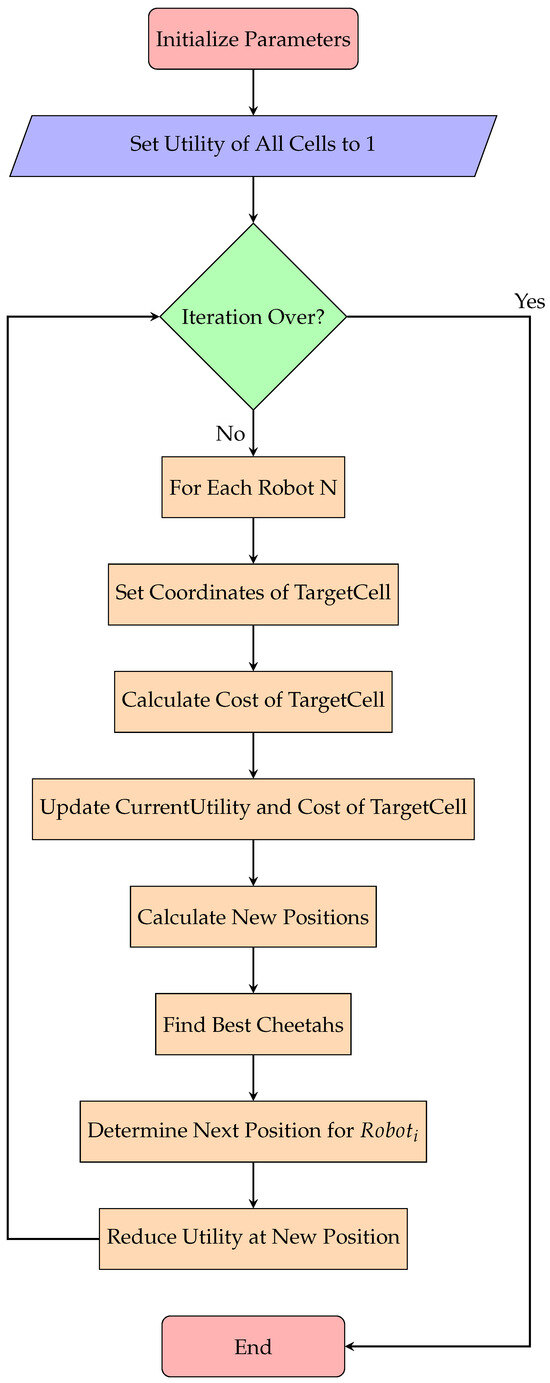

Figure 2 illustrates the workflow of the hybrid cheetah exploration technique with intelligent initial configuration (HCETIIC). The method begins by initializing the grid maps and assigning a utility value of 1 to every cell. The exploration then enters an iterative loop: within each iteration, for every robot, the coordinates of the target cell are determined, the cost associated with each cell is computed, and the utilities are updated. The exploration ends once all required iterations have been completed.

Figure 2.

Schematic representation of the proposed hybrid cheetah exploration technique with intelligent initial configuration (HCETIIC) method. This diagram delineates the sequential steps undertaken by the HCETIIC approach, from initialization to the full exploration process.

The pseudo-code for the proposed HCETIIC method is (Algorithm 1):

| Algorithm 1 HCETIIC Exploration Algorithm. |
| --- |
| Input: Number of robots N, sensor range, iteration t, initial position |
| Output: Updated positions of robots and utility of cells |

Through Algorithm 1, the exploration process can be optimized by rapidly selecting the next best move for the robots, thereby improving the exploration efficiency. The two randomization parameters $\hat{r}$ and $\check{r}$ introduce an element of randomness to the exploration process, helping to avoid local optima and improve overall exploration outcomes.

Intelligent Initial Configuration (IIC)

For single-objective optimization, intelligent initial configuration (IIC) plays a critical role in establishing an initial population of solutions that possess a wide range of diverse and potentially successful characteristics. The objective is to leverage the knowledge from previously successful solutions to formulate an initial population that is more suitable for the problem space at hand.

Let us consider a single-objective optimization problem (SOP) where the aim is to find an optimal solution which maximizes or minimizes a particular objective function $f(x)$. The search space of the SOP is denoted by S and each solution $x_i$ is in S, where $i = 1, 2, \ldots, N$ and N is the total number of solutions. Each $x_i$ represents a potential solution to the SOP.

In the context of IIC, a database is maintained, which stores a set of optimal or near-optimal solutions found in previous iterations of the algorithm. The database is continuously updated as the algorithm discovers new potential optimal solutions. At the tth iteration of the algorithm, the database consists of a set of solutions, each of which is a high-performing solution from a previous iteration.

The IIC component of the HCETIIC algorithm leverages the solutions stored in the database to construct the initial population for the tth iteration of the algorithm. This is achieved by randomly choosing solutions from the database and utilizing these as seeds for generating new solutions through slight perturbations. This diversity injection, facilitated by a mutation operator, allows the exploration of different regions of the search space.

The initial population for the tth iteration is generated using the following steps:

- Randomly select k solutions from the database, where k does not exceed the number of stored solutions. These selected solutions form the seed set.

- For each solution in the seed set, generate a new solution by applying a mutation operator to it.

- The set of all new solutions forms the initial population for the tth iteration of the algorithm.

Through this approach, the IIC draws on the wisdom of previously high-performing solutions to direct the search process during its initial stages. The mutation operator ensures that the initial population is not merely a clone of the previous solutions, thereby maintaining diversity and fostering exploration of the search space. As such, the IIC contributes to enhancing both the speed of convergence and the quality of the solutions generated by the HCETIIC algorithm.
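The seeding procedure can be sketched in a few lines of Python. The text does not specify the mutation operator, so this sketch assumes a Gaussian perturbation with standard deviation `sigma`, clipped to the search bounds; the function name `iic_initial_population` and all parameter names are hypothetical and chosen only for illustration.

```python
import random


def iic_initial_population(database, k, sigma, lower, upper, rng=None):
    """Build an initial population from k randomly chosen stored solutions.

    Assumption: the mutation operator is additive Gaussian noise, clipped
    to [lower, upper] in every dimension.
    """
    rng = rng or random.Random()
    if not 0 < k <= len(database):
        raise ValueError("k must be between 1 and the database size")
    seeds = rng.sample(database, k)  # step 1: pick k seeds without replacement
    population = []
    for seed in seeds:  # step 2: mutate each seed into a new solution
        mutated = [min(max(x + rng.gauss(0.0, sigma), lower), upper) for x in seed]
        population.append(mutated)
    return population  # step 3: the mutated solutions form the population
```

A small `sigma` keeps the new solutions close to the stored ones, while a larger value trades that inherited quality for more diversity.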

4. Results and Discussion

The inherent complexity and stochastic nature of multi-robot exploration pose significant challenges to performance evaluation, particularly for metaheuristic algorithms. Comprehensive testing under various conditions, ranging from simplistic to intricate environments, is needed to accurately gauge the effectiveness of the implemented methods.

In the context of our research, we present an empirical analysis of the hybrid cheetah exploration technique with intelligent initial configuration (HCETIIC), an innovative approach that merges the principles of coordinated multi-robot exploration (CME) with a unique method of determining the initial configuration of robots. Our method transcends the capabilities of traditional approaches by optimizing exploration efficiency across different initial start configurations, including uniform distribution, centralized position, random positions, perimeter positions, clustered positions, and strategic positions.

The performance of HCETIIC is compared with four other hybrid methods, namely coordinated multi-robot exploration with grey wolf optimizer (CME-GWO), coordinated multi-robot exploration with salp swarm algorithm (CME-SSA), hybrid vulture-coordinated multi-robot exploration (HVCME), and coordinated multi-robot exploration with mountain gazelle optimization (CME-MGO). The comparative analysis hinges upon three performance indicators: execution time, the percentage of the explored area, and the number of unsuccessful runs. The exploration rate, as defined in our study, primarily focuses on the role of initial configurations. However, in practical scenarios, it is a result of various factors including robot capabilities, the intricacy of the environment, and the efficiency of inter-robot communication.

To quantify the explorative performance of each method, we employed a measure represented by ‘P’, the exploration rate, defined as follows:

where ‘T’ represents the total utility of the unexplored area, and ‘E’ denotes the utility of the explored area. This measure captures the effectiveness of each algorithm in terms of the extent of exploration achieved.

In our study, the experiments were conducted on a consistent map size of 30 m × 30 m, with identical parameters applied to all simulations. The map was represented in a grid-based format where free space was denoted by light gray cells, obstacles by dark gray cells, and the explored area by blue cells.

In all the mapped environments, each color on the map corresponds to an individual robot. Since three robots were used in this simulation, three distinct colors were employed to distinguish between them.

Our investigations were conducted under four distinct scenarios, each designed to probe a different aspect of the algorithms’ performance.

Scenario 1: This baseline scenario involved all the methods being evaluated under the same starting position (uniform distribution) on a constant map layout, aiming to establish a foundational performance for each method.

Scenario 2: Building on the first scenario, the map layout remained constant, but the starting positions for HCETIIC varied. This scenario examined how adaptable HCETIIC is to different starting positions and how that impacts its performance.

Scenario 3: In this scenario, the starting positions were held constant, but the map layouts were varied to challenge the methods under different environmental contexts.

Scenario 4: This final scenario presented the most significant challenge, as both the starting positions of HCETIIC and the map layouts varied. It pushed the bounds of the exploration task by incorporating variations in both the initial configuration and environment.

To obtain statistically reliable results, we ran each algorithm 30 times, a sample size commonly justified by the central limit theorem [68]. Given the stochastic nature of the algorithms, repeated runs bring the distribution of the sample mean close to normal and give a robust representation of the algorithms’ performance.
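For readers who wish to reproduce this kind of summary, the per-method statistics over repeated runs can be computed as in the following Python sketch. The function name `summarize_runs` and the returned keys are hypothetical; the sketch reports the sample mean, the sample standard deviation, and the standard error of the mean, the last of which is the quantity the central limit theorem speaks to.

```python
import math
import statistics


def summarize_runs(values):
    """Summarize one metric (e.g. explored percentage) over repeated runs."""
    n = len(values)
    if n < 2:
        raise ValueError("at least two runs are needed for a standard deviation")
    mean = statistics.mean(values)
    std = statistics.stdev(values)  # sample standard deviation (n - 1 divisor)
    return {"n": n, "mean": mean, "std": std, "sem": std / math.sqrt(n)}
```

Passing the 30 exploration percentages of one method to `summarize_runs` would yield the mean and standard deviation for that method.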

In the following subsections, we delve into the detailed outcomes and analyses of each scenario, assessing the performance of HCETIIC and the other methods based on the total number of explored grid cells, the time taken for map exploration, and the instances where a method failed to complete a full run. These comprehensive evaluations provide a well-rounded analysis of our proposed method and pave the way for meaningful insights and discussions.

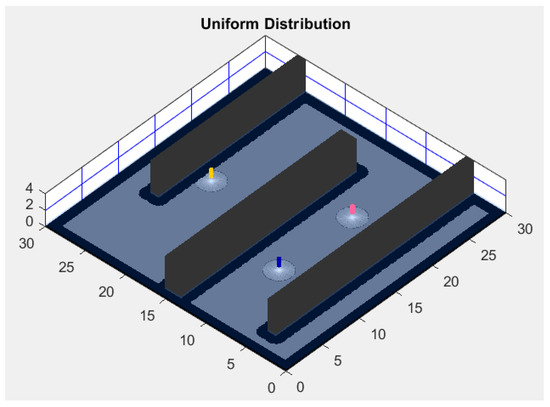

4.1. Scenario 1: Uniform Distribution

The inaugural scenario of this comprehensive analysis establishes a common baseline for the performance evaluation of each method. All the participating methods, namely, hybrid cheetah exploration technique with intelligent initial configuration (HCETIIC), hybrid vulture-coordinated multi-robot exploration (HVCME), coordinated multi-robot exploration with salp swarm algorithm (CME-SSA), coordinated multi-robot exploration with grey wolf optimizer (CME-GWO), and coordinated multi-robot exploration with mountain gazelle optimization (CME-MGO), are examined within the same conditions. These conditions include a uniform distribution starting position and 250 iterations as well as a static map layout of dimensions 30 m × 30 m.

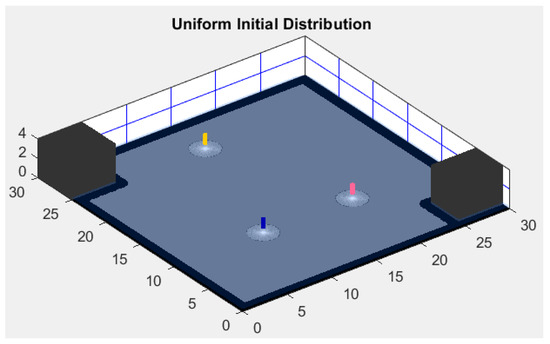

The uniform distribution arrangement sees the deployment of three robots, evenly distributed across the map (Figure 3). This strategic positioning ensures each robot commences its exploration journey from a different section of the map, reducing overlap in their exploration paths and fostering an increased coverage rate.

Figure 3.

Uniform distribution. The figure illustrates robots with different colors: blue, pink, and yellow. Each color represents a robot in a uniform initial configuration.

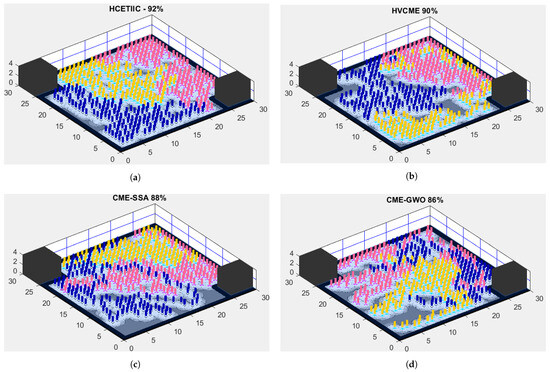

The outcomes of the simulation for this scenario are represented visually in Figure 4, which is composed of five subfigures (a–e). Each subfigure shows the exploration progress made by one particular method.

Figure 4.

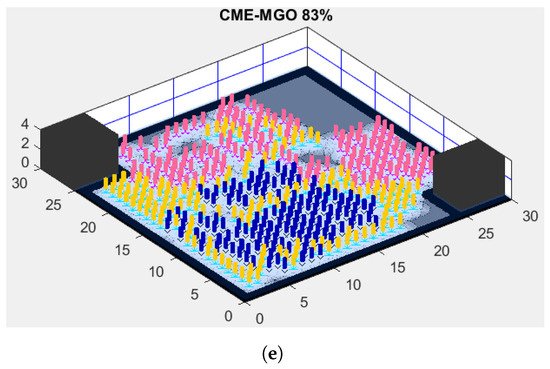

Exploration rates of the different methods under a uniform distribution scenario. (a) HCETIIC; (b) HVCME; (c) CME-SSA; (d) CME-GWO; (e) CME-MGO.

Figure 3 (uniform distribution): This figure depicts the initial placement of the robots following the uniform distribution strategy. It showcases the spatial distribution of the three robots, strategically placed to cover distinct sections of the map, thereby minimizing the overlap of their exploration domains.

Figure 4a—HCETIIC: HCETIIC, leveraging the optimal positioning provided by the uniform distribution, was successful in achieving an exploration rate of 92% of the total map area. This accomplishment sets a high benchmark for exploration efficiency under these specific conditions, displaying the remarkable capabilities of HCETIIC.

Figure 4b—HVCME: Following closely behind HCETIIC, the HVCME method successfully explored 90% of the map. This impressive achievement attests to the robust capabilities of HVCME in terms of exploration efficiency.

Figure 4c—CME-SSA: The CME-SSA method, with an exploration rate of 88%, signals a slight dip in exploration efficiency when compared to HCETIIC and HVCME. However, the performance remains commendable, given the ideal scenario conditions.

Figure 4d—CME-GWO: The CME-GWO method accomplished an exploration rate of 86%. This result reflects a gradual decrease in performance when compared to the other methods within this scenario, yet it maintains a high standard of exploration efficiency.

Figure 4e—CME-MGO: Rounding out the scenario, the CME-MGO method achieved an exploration rate of 83%. Although it scored the lowest among the five methods, it maintains a reasonable level of exploration efficiency within the controlled confines of this uniform distribution scenario.

These findings offer an initial comparative understanding of the exploration efficiencies of the methods under study. They set the stage for the forthcoming scenarios, which will introduce varying starting positions and environmental complexities, thereby providing a comprehensive evaluation of the performance robustness and adaptability of the HCETIIC method.

4.2. Scenario 2: Adaptability to Different Starting Positions

The second scenario adds another layer of complexity to the evaluation process, maintaining the map layout identical to scenario 1 while incorporating variations in the starting positions. This experimental design enables us to examine the robustness and flexibility of the hybrid cheetah exploration technique with intelligent initial configuration (HCETIIC) to adapt to changes in initial configurations and the ensuing effects on the efficiency of exploration.

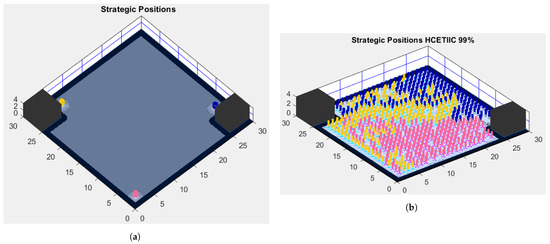

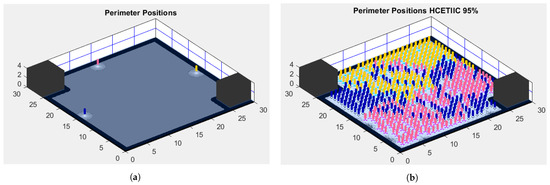

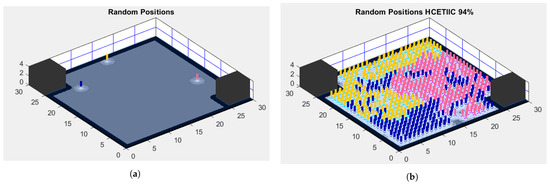

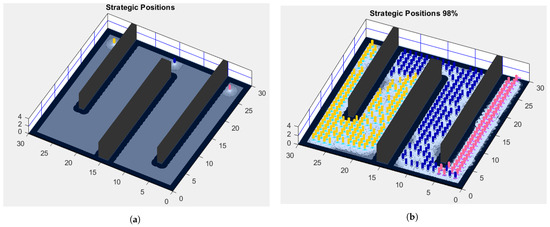

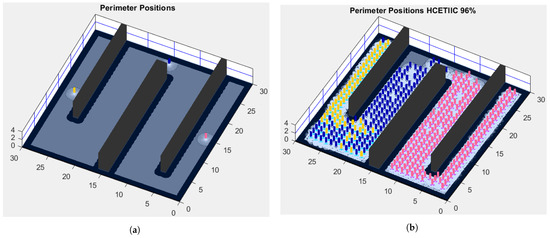

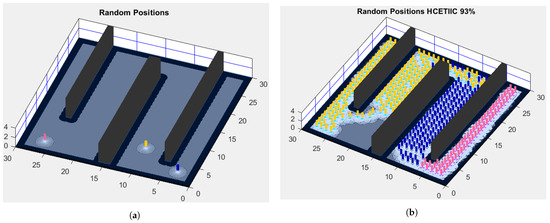

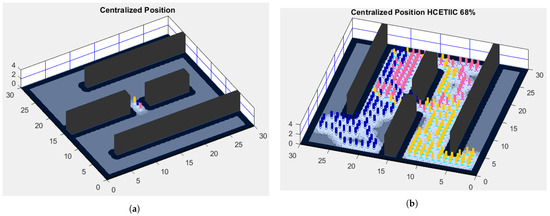

In this scenario, five distinct types of starting positions were investigated: strategic positions, perimeter positions, random positions, clustered positions, and centralized position. We visualized the exploration process associated with each of these configurations in Figures 5–9, which together comprise ten subfigures. Each pair of these subfigures offers a snapshot of the robots’ initial placement and the ensuing exploration performance of HCETIIC under that specific configuration.

Figure 5.

Different starting positions and their corresponding HCETIIC values. (a) Strategic positions; (b) Strategic positions HCETIIC 99%.

Figure 6.

Different starting positions and their corresponding HCETIIC values. (a) Perimeter positions; (b) Perimeter positions HCETIIC 95%.

Figure 7.

Different starting positions and their corresponding HCETIIC values. (a) Random positions; (b) Random positions HCETIIC 94%.

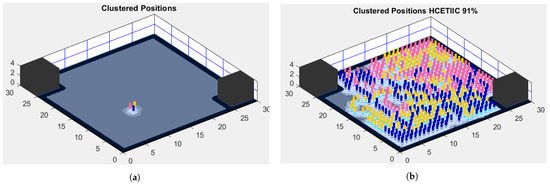

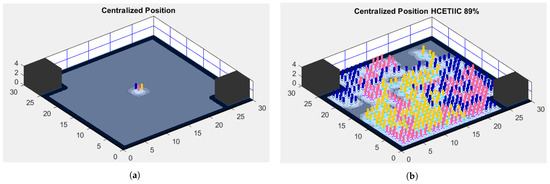

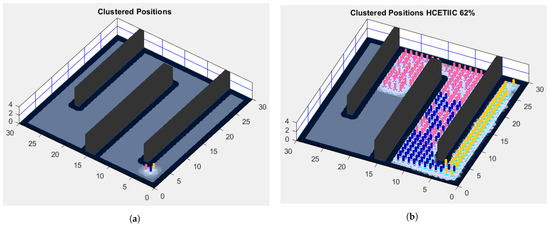

Figure 8.

Different starting positions and their corresponding HCETIIC values. (a) Clustered positions; (b) Clustered positions HCETIIC 91%.

Figure 9.

Different starting positions and their corresponding HCETIIC values. (a) Centralized position; (b) Centralized position HCETIIC 89%.

Strategic Positions (Figure 5a,b): Strategic positions imply a calculated placement of robots, designed to ensure broad coverage and minimal exploration time. Figure 5a shows the strategic arrangement of robots at the beginning of the exploration. As a testament to the efficacy of this approach, Figure 5b illustrates the remarkable exploration efficiency of HCETIIC under this setup, recording an exceptional 99% exploration rate—the highest among all the configurations tested in this scenario.

Perimeter Positions (Figure 6a,b): This arrangement positions the robots along the boundary of the map, as depicted in Figure 6a. Despite the potential increased travel distance to reach the center of the map, HCETIIC demonstrated notable adaptability. Figure 6b shows the exploration performance of HCETIIC under this configuration, achieving a commendable 95% exploration efficiency.

Random Positions (Figure 7a,b): The challenge in this configuration lies in its inherent unpredictability, with the robots placed at random points across the map (Figure 7a). Nonetheless, HCETIIC effectively navigated through this uncertainty, as can be seen from Figure 7b, which showcases an impressive exploration rate of 94%.

Clustered Positions (Figure 8a,b): In this setup, the robots were grouped together in a single cluster (Figure 8a), a placement that could potentially lead to overlapping exploration paths and, consequently, reduced efficiency. However, the HCETIIC algorithm maneuvered through this arrangement quite adeptly, achieving a solid exploration rate of 91%, as depicted in Figure 8b.

Centralized Position (Figure 9a,b): The robots were initially positioned at a central point within the map (Figure 9a), which could similarly result in overlapped exploration paths and reduced efficiency. Yet, HCETIIC managed to maintain a respectable exploration performance, securing an exploration rate of 89% (Figure 9b).

These results from scenario 2 substantiate the robustness and versatility of HCETIIC, demonstrating its ability to adapt to and optimize its performance across diverse initial configurations. Even under potential efficiency-compromising setups like clustered and centralized position, HCETIIC displayed a notable capacity to manage and optimize the exploration process. This scenario’s findings, hence, reinforce the potential of HCETIIC as a formidable solution for multi-robot exploration tasks under varying initial configurations.

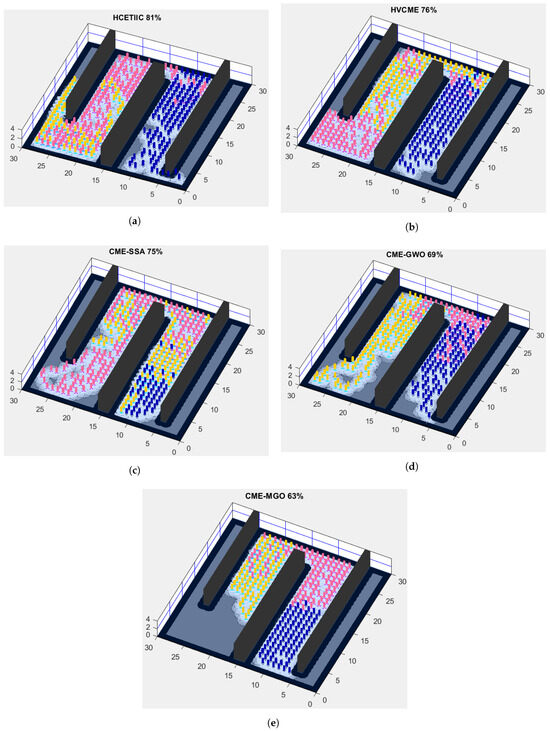

4.3. Scenario 3: Exploration Performance under Complex Map Layouts

This scenario further broadens the testing spectrum of our multi-robot exploration strategies, with an emphasis on their adaptability to intricate map layouts while maintaining uniform initial robot positions. In a bid to imitate more realistic exploration tasks, the chosen map layouts include various complexities, such as convoluted pathways, tight corridors, and strategically positioned obstacles, creating a challenging environment for exploration algorithms.

The initial uniform distribution of the robots, as illustrated in Figure 10, is kept constant, enabling a clear comparison of the methods’ performances in differing map structures. The consistent starting configuration mitigates any performance influence originating from the initial placement of robots and ensures that the measured outcomes are primarily driven by the exploration strategy and the adaptability of the respective algorithms to the environment.

Figure 10.

Uniform distribution.

The consequential impact of these environmental complexities on the exploration techniques is illustrated in Figure 11, delineating the exploration efficiency of each method: HCETIIC (Figure 11a), HVCME (Figure 11b), CME-SSA (Figure 11c), CME-GWO (Figure 11d), and CME-MGO (Figure 11e).

Figure 11.

Exploration rates of the different methods under a complex map layout. (a) HCETIIC; (b) HVCME; (c) CME-SSA; (d) CME-GWO; (e) CME-MGO.

In this challenging setup, HCETIIC exemplifies a remarkable resilience, securing an exploration efficiency of 81% (Figure 11a), a testament to its robustness and adaptability to more intricate environments. This superior performance emphasizes the capability of HCETIIC to effectively navigate through obstacles and coordinate robot actions for efficient exploration.

The other methods demonstrated varying degrees of performance. HVCME, with an exploration efficiency of 76% (Figure 11b), and CME-SSA, reaching 75% (Figure 11c), were able to navigate the complex terrain with relative success. Conversely, CME-GWO and CME-MGO encountered more challenges, achieving exploration efficiencies of 69% (Figure 11d) and 63% (Figure 11e), respectively, demonstrating difficulties in maneuvering through the elaborate map configurations.

These findings underscore the critical role of environmental context in multi-robot exploration and the necessity for methods that can seamlessly adapt to varied and complex scenarios. The strong performance of HCETIIC in this context further strengthens its credibility as an effective and robust method for multi-robot exploration, capable of handling complex environments while maintaining high exploration efficiency. This well-rounded analysis further highlights the ability of HCETIIC to tackle both variations in initial configuration and intricate environmental contexts, which is an essential quality for any exploration method targeting real-world applications.

4.4. Scenario 4: Simultaneous Variation of Starting Positions and Map Layouts

The fourth and final scenario of our study presented a demanding test for the exploration algorithms by simultaneously altering both the starting positions of the robots and the configuration of the environment. This scenario aimed to assess the flexibility and adaptability of the algorithms under variable and unpredictable conditions.

In order to showcase the diversity of starting positions and map layouts, this scenario comprised five different initial configurations on a more complex map. The map layout was characterized by an intricate array of corridors and obstacles, which required enhanced strategic planning and coordination among the robots.

For the strategic positions configuration (Figure 12a), HCETIIC outperformed expectations by achieving an astounding exploration efficiency of 98% (Figure 12b). These results underscored the advantage of using strategically informed positions for maximizing exploration efficiency, even in the face of environmental complexity.

Figure 12.

The strategic positions configuration on a complex map, along with the resultant high exploration efficiency of 98% achieved by HCETIIC. (a) Strategic positions; (b) Strategic positions HCETIIC 98%.

Similarly, HCETIIC demonstrated impressive performance with the perimeter positions configuration (Figure 13a), achieving a commendable 96% exploration efficiency (Figure 13b). This finding signifies the robustness of HCETIIC, its adaptability to different initial configurations, and its ability to effectively navigate and explore the environment despite the constraints.

Figure 13.

The perimeter positions configuration on a complex map and the impressive exploration efficiency of 96% achieved by HCETIIC, demonstrating its robust adaptability. (a) Perimeter positions; (b) Perimeter positions HCETIIC 96%.

When the robots were deployed in random positions (Figure 14a), HCETIIC still demonstrated strong performance, achieving a 93% exploration efficiency (Figure 14b). This result showcased the robustness of HCETIIC and its ability to handle unpredictability in the initial placements, a characteristic that is immensely valuable in real-world applications where the starting positions may not always be optimized.

Figure 14.

The random positions configuration on a complex map, with HCETIIC managing a strong exploration efficiency of 93%, showcasing its robustness in handling unpredictable initial placements. (a) Random positions; (b) Random positions HCETIIC 93%.

In contrast, the centralized position configuration (Figure 15a) saw a drop in exploration efficiency to 68% (Figure 15b), reflecting the challenges associated with a more concentrated starting position.

Figure 15.

The centralized position configuration on a complex map. The figure illustrates the decrease in exploration efficiency to 68% due to the challenges associated with a concentrated starting position. (a) Centralized position; (b) Centralized position HCETIIC 68%.

Similarly, the clustered positions configuration (Figure 16a) saw HCETIIC navigating and exploring only 62% of the map (Figure 16b), suggesting that the method struggled with close-knit initial placements in a complex environment.

Figure 16.

The clustered positions configuration on a complex map. The figure highlights a further decrease in efficiency to 62%, suggesting the method’s struggle with close-knit initial placements in a complex environment. (a) Clustered positions; (b) Clustered positions HCETIIC 62%.

Taken together, these results illuminate the performance dynamics of the HCETIIC algorithm across diverse and challenging conditions. The superior performance observed in strategic, perimeter, and random positions substantiates HCETIIC’s versatility and efficiency, while the lower efficiencies in centralized and clustered positions point towards areas that may require further optimization in the algorithm.
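To make the effect of map complexity on each configuration explicit, the exploration rates reported for HCETIIC in scenario 2 (Figures 5–9) and scenario 4 (Figures 12–16) can be compared directly. The short Python sketch below only restates those reported percentages and computes their differences; the variable names are chosen for illustration.

```python
# Exploration rates (%) reported for HCETIIC in the figure captions.
scenario2 = {"strategic": 99, "perimeter": 95, "random": 94,
             "clustered": 91, "centralized": 89}
scenario4 = {"strategic": 98, "perimeter": 96, "random": 93,
             "clustered": 62, "centralized": 68}

# Change in percentage points when moving to the more complex map.
change = {name: scenario4[name] - scenario2[name] for name in scenario2}

for name, delta in sorted(change.items(), key=lambda item: item[1]):
    print(f"{name:12s} {delta:+d} points")
```

The strategic, perimeter, and random configurations change by at most one percentage point, whereas the clustered and centralized configurations lose 29 and 21 points, respectively.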

In conclusion, scenario 4 provides a rigorous evaluation of the HCETIIC method’s capabilities in a complex, dynamic exploration context. The algorithm’s performance remains high for strategic, perimeter, and random placements (93–98%), whereas centralized and clustered placements reduce it to 68% and 62%, respectively. Future improvements targeting these concentrated configurations could further enhance its performance, making it a more adaptable and efficient solution for complex multi-robot exploration tasks.

4.5. Results, Analysis, and Discussion

In the intricate process of assessing various algorithmic strategies for multi-robot exploration systems, the present study deploys a meticulous and multifaceted analysis. The findings, encapsulated across five distinct tables, probe into the exploration percentages, time efficiency, occurrences of failure, and the statistical significance through p-values. Key metrics, including the mean (average) and standard deviation, are utilized to illuminate the performance attributes and reliability of these algorithms.

The empirical design of this research follows common statistical practice motivated by the central limit theorem. To ensure the integrity and robustness of the findings, each algorithm was executed for 30 runs, with 250 iterations per run, so that the distribution of the sample mean can be treated as approximately normal. Because the cheetah optimizer (CO) is inherently stochastic, results vary from run to run, which is why both the mean and the standard deviation across runs are reported.

An essential aspect of evaluating the efficiency of multi-robot exploration algorithms is understanding their computational complexity. This metric provides insights into the algorithm’s scalability and its feasibility for real-world applications, especially when rapid decision making is crucial.

This design yields a dataset that is large and varied enough to support reliable conclusions about the compared algorithms. Our results showcase the significance of initial configurations on the exploration rate. However, these findings should be interpreted with the caveat that, in real-world scenarios, multiple factors influence this rate concurrently.

4.5.1. Exploration Percentage

- Significance of using average and standard deviation (Table 1): The average exploration percentage encapsulates the typical performance of each algorithm across multiple runs, providing a central tendency. This indicator offers a straightforward comparison of how well each algorithm covers the exploration space. The standard deviation, conversely, conveys the variability or dispersion from this average, presenting an understanding of the stability or inconsistency within the algorithm’s performance.

Table 1. Explored area in the uniform distribution scenario.

- Analysis by scenario

  1. Scenario 1: HCETIIC exhibits supremacy both in average exploration and stability, denoted by its highest mean and lowest standard deviation. CME-MGO lags in exploration and displays higher volatility.

  2. Scenario 3: All algorithms demonstrate a decrease in exploration percentage, reflecting the complexity of the scenario. However, HCETIIC maintains the lead, indicating its adaptability to varying conditions.
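The role of the two summary statistics can be illustrated with a minimal sketch. The per-run values below are hypothetical placeholders rather than data from Table 1, and the function name `summarize_runs` is introduced only for illustration. Two algorithms with similar means can differ sharply in stability, which the standard deviation makes visible.

```python
from statistics import mean, stdev


def summarize_runs(explored_pct):
    """Return (mean, sample standard deviation) of per-run exploration percentages."""
    if len(explored_pct) < 2:
        raise ValueError("need at least two runs to estimate a standard deviation")
    return mean(explored_pct), stdev(explored_pct)


# Hypothetical per-run results (percent of map explored); not data from the paper.
runs_a = [96.0, 97.0, 98.0, 97.0]   # stable algorithm
runs_b = [90.0, 99.0, 85.0, 94.0]   # more volatile algorithm

mean_a, sd_a = summarize_runs(runs_a)
mean_b, sd_b = summarize_runs(runs_b)
print(f"A: mean={mean_a:.2f} sd={sd_a:.2f}")
print(f"B: mean={mean_b:.2f} sd={sd_b:.2f}")
```

In the study itself, each list would hold the 30 per-run values for one algorithm in one scenario.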

4.5.2. Time Efficiency

- Significance of using average and standard deviation (Table 2): The average time taken reflects how quickly each algorithm executes in practice. A lower average time suggests a more computationally efficient algorithm. The standard deviation, paralleling the exploration analysis, indicates the reliability of this efficiency across multiple runs.

Table 2. Explored area per second.

- Analysis by scenario (scenarios 1 and 3, Table 2): HCETIIC consistently demonstrates superior time efficiency, suggesting its potential for applications requiring rapid responses. CME-MGO, conversely, is less time-efficient, particularly in scenario 3, showing sensitivity to the environment’s complexity.
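As a rough sketch of how a time-efficiency figure of this kind can be derived, the snippet below divides the explored percentage by the runtime of each run. It assumes that "explored area per second" means explored percentage over wall-clock runtime, which this section does not state explicitly. The function name and the sample values are hypothetical.

```python
from statistics import mean


def explored_area_per_second(explored_pct, runtime_s):
    """Explored percentage gained per second of runtime for a single run."""
    if runtime_s <= 0:
        raise ValueError("runtime must be positive")
    return explored_pct / runtime_s


# Hypothetical (explored %, runtime in seconds) pairs; not results from the paper.
runs = [(96.0, 48.0), (90.0, 60.0), (99.0, 45.0)]
rates = [explored_area_per_second(p, t) for p, t in runs]
print([round(r, 2) for r in rates], round(mean(rates), 2))
```

Under this assumed definition, a higher rate indicates a more time-efficient run.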

4.5.3. Failure Analysis

- Failure rates offer insight into the algorithms’ robustness, reflecting their ability to handle obstacles and unexpected situations (Table 3). Higher failure rates may suggest a need for further refinement to increase dependability.

Table 3. Failed to continue.

- Analysis by scenario (scenarios 1 and 3, Table 3): HCETIIC exhibits flawless performance, while CME-GWO and CME-MGO display higher failure rates, highlighting the importance of algorithmic resilience.

4.5.4. Percentage of Explored Area

- Analysis by scenario

  1. Scenario 2 (Table 4): The strategic positions configuration leads, with an average explored area of 99.55%. The uniform, perimeter, random, and clustered positions have shown effectiveness, with percentages ranging from 90.22% to 96.07%. The centralized position showed an average explored area of 90.22%, which may be effective in different environments or specific use cases.

Table 4. Percentage of explored area.

  2. Scenario 4 (Table 4): The strategic positions configuration maintains its lead with 97.93%. For the uniform, perimeter, random, and clustered positions, the performance varies but can be seen as adaptive to different environmental needs, with percentages ranging from 69.36% to 97.06%. For the centralized position, 76.07% was explored, indicating potential effectiveness in specialized or contrasting environments.

Table 4 illustrates the effectiveness of different exploration strategies across various scenarios. “Strategic positions” consistently stands out, demonstrating the efficiency of the proposed method.

The other positions, including “centralized”, show diverse performances, which could mean they are adaptable and effective in different or specialized environments. The insights derived from this table may guide future research in tailoring exploration strategies to specific scenarios.

4.5.5. Exploration Repetition Rate

- Significance of using average and standard deviation (Table 5): The exploration repetition rate is a measure of the efficiency of an exploration algorithm. A lower rate indicates that the robots are exploring new areas more often, while a higher rate suggests potential redundancies in the exploration paths. Furthermore, a higher repetition rate might imply increased computational demands, as robots would need to process redundant paths and make more decisions. The average repetition rate provides a central point of comparison, and the standard deviation reveals the consistency of this metric across multiple runs.

Table 5. Exploration repetition rate.

- Analysis by scenario:

  1. Scenario 1 (Table 5): HCETIIC, with its optimized coordination strategies, shows a minimized repetition rate, thus indicating its robustness in preventing overlapping paths. In contrast, CME-MGO has a higher repetition rate, signaling possible inefficiencies in multi-robot coordination.

  2. Scenario 3 (Table 5): With the increased complexity, the repetition rate generally rises for most algorithms. However, HCETIIC still manages to maintain a comparatively lower repetition rate, showing its scalability and efficiency in diverse environments.

  3. Research frontiers and future prospects: The variability in exploration repetition rates across algorithms, especially in intricate scenarios, reveals a gap that warrants further study. The present work is limited to a single-objective multi-agent perspective. Moving to a multi-objective multi-agent paradigm might offer a more complete and nuanced understanding, and it is a promising direction for future work, both for improving exploration outcomes and for broadening multi-agent robotic research.
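A repetition rate of this kind can be sketched as follows. The definition used here, the fraction of all cell visits that land on a cell already visited by any robot, is an assumption made for illustration, since this section does not give the exact formula. The function name `repetition_rate` and the grid paths are hypothetical.

```python
def repetition_rate(paths):
    """Fraction of cell visits that revisit a cell already covered by any robot.

    `paths` is a list of per-robot paths, each a list of hashable grid cells.
    """
    seen = set()
    total = 0
    repeated = 0
    for path in paths:
        for cell in path:
            total += 1
            if cell in seen:
                repeated += 1   # redundant visit: cell was already explored
            else:
                seen.add(cell)  # first visit: new area explored
    if total == 0:
        raise ValueError("no visits recorded")
    return repeated / total


# Hypothetical paths of two robots on a grid; cell (0, 1) is visited twice.
paths = [
    [(0, 0), (0, 1), (0, 2)],
    [(1, 0), (0, 1), (1, 1)],
]
rate = repetition_rate(paths)
print(f"repetition rate = {rate:.3f}")
```

A rate of zero would mean that no robot ever re-entered explored territory, while values near one would indicate that most movement was redundant.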

4.5.6. p-Value Analysis

This study evaluates HCETIIC, a technique designed to function effectively across environments of differing complexity, by combining qualitative observations with quantitative evaluation. The average and standard deviation are used to gauge the extent of the area explored and to compare the stability of the proposed method against similar techniques (refer to Table 6 and Table 7).

Table 6. p-values obtained from the rank-sum test for the results of the exploration data.

Table 7. p-values obtained from the rank-sum test for the results for time.

Moreover, this research applies the Wilcoxon rank-sum test to the individual runs to discern underlying patterns. The analysis is guided by two hypotheses: the null hypothesis H0, which postulates the HCETIIC technique’s potential inferiority in exploration rate and time utilization, and the alternative hypothesis H1, which asserts its superiority.

The outcomes reflected in Table 6 and Table 7 present compelling evidence to challenge the null hypothesis, with p-values manifestly below the threshold of 0.05. These findings are further consolidated by the meticulous pairing and comparison of the best-performing methods across various test functions.
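For readers unfamiliar with the test, the following is a minimal sketch of a two-sided Wilcoxon rank-sum test using the normal approximation, written with the Python standard library only. It is not the authors' implementation. The function name `rank_sum_test` and the sample data are hypothetical, and the sketch applies neither a tie correction to the variance nor a continuity correction.

```python
import math


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation).

    Returns (z, p_value); z > 0 means values in x tend to rank higher than y.
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    # Pool the samples, remembering which group each value came from.
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y], key=lambda t: t[0])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mu = n1 * (n1 + n2 + 1) / 2                       # expected rank sum under H0
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)   # its standard deviation
    z = (w - mu) / sigma
    p_value = math.erfc(abs(z) / math.sqrt(2))        # two-sided tail probability
    return z, p_value


# Hypothetical per-run exploration percentages; not data from Table 6.
proposed = [95.0, 96.0, 97.0, 98.0, 99.0, 100.0]
baseline = [80.0, 82.0, 84.0, 86.0, 88.0, 90.0]
z, p = rank_sum_test(proposed, baseline)
print(f"z = {z:.3f}, p = {p:.4f}, significant at 0.05: {p < 0.05}")
```

With 30 runs per algorithm, as in this study, the normal approximation is generally considered adequate. For very small samples an exact test would be preferable.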

- Statistical significance: p-values of exploration (Table 6)

  1. Importance of p-values: These values quantify the statistical evidence in the exploration data, with lower p-values indicating stronger evidence against the null hypothesis.

  2. Insights: The results across the scenarios validate the statistically significant differences among the algorithms, with extremely low p-values for CME-SSA, CME-GWO, and CME-MGO.

- Statistical significance: p-values for time (Table 7)

  1. Importance: The p-values for time give a statistical comparison of the efficiency of different algorithms.

  2. Statistically significant differences in exploration data and time efficiency can hint at underlying computational complexities. Algorithms that consistently perform better might be more optimized in terms of computational demands.

  3. Insights: Low p-values across the board highlight significant differences in time efficiency among the algorithms.

In conclusion, the consistent methodology applied throughout this paper supports the credibility of these findings, and the combination of qualitative and quantitative analysis strengthens the study’s contribution to the field of multi-robot exploration.

4.5.7. Real-World Deployment of Laser-Guided Navigation Using MATLAB and ROS for Turtlebot Robots

In this research, we introduced an advanced technique utilizing MATLAB’s Robotics System Toolbox and Navigation Toolbox, with all simulations conducted digitally. For tangible real-world deployments, the Turtlebot 3 [69], which typically measures 138 mm in diameter and 98 mm in height, provides a practical platform. This compact size allows for efficient maneuverability in varied environments. Paired with the Hokuyo laser range scanner [70], it ensures precise navigation. Running the Robotics System Toolbox on a sufficiently powerful computing device, such as a tablet or laptop, enables a fluid connection between MATLAB and the Robot Operating System (ROS) [71]. This arrangement allows sensor data, covering a visual span of 240 to 360 degrees, to be sent to MATLAB. The data are then processed by the HCETIIC algorithm to determine the robot’s movements. Importantly, our system operates without external noise filters, meaning it directly uses raw data. Potential noise, though unquantified, has shown minimal impact on the robot’s performance in our tests. Maintaining robust Wi-Fi connectivity is paramount to ensuring consistent communication between the robot and the computing device, especially in expansive indoor areas targeted for exploration. Current frameworks address observational inaccuracies in the robot, ensuring timely convergence while accounting for external disturbances and uncertainties [72].
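To make the data flow more concrete, the sketch below shows one common first step in handling raw laser data: converting a list of range readings into Cartesian points in the robot frame. It is written in Python for illustration only and is not the MATLAB pipeline used in this work. The parameter names `angle_min` and `angle_increment` mirror fields of the ROS `sensor_msgs/LaserScan` message, while the function name and sample readings are hypothetical. Non-finite readings are skipped because they carry no position, which is an assumption of this sketch rather than a noise filter.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment):
    """Convert evenly spaced range readings (meters) to (x, y) points.

    Beam i points at angle_min + i * angle_increment (radians) in the robot frame,
    with x pointing forward and y to the left.
    """
    points = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r):
            continue  # no return for this beam, so there is no point to place
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


# Hypothetical three-beam scan: right (-90 deg), straight ahead, left (+90 deg).
points = scan_to_points([1.0, 2.0, float("inf")], -math.pi / 2, math.pi / 2)
for x, y in points:
    print(f"({x:.2f}, {y:.2f})")
```

For a scanner with a 240 degree field of view, `angle_min` would be about -2.09 rad, with the increment set by the number of beams.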

4.5.8. Limitations of the Study

In our pursuit of an algorithmic exploration of multi-robot systems, certain limitations became evident:

- Simulation-Based Results: While our research utilized MATLAB’s Robotics System Toolbox and Navigation Toolbox, all simulations were conducted digitally. Real-world deployments may present unpredictable challenges not captured in the simulated environments.

- Size and Design of Robots: The use of Turtlebot 3, given its specific size and design specifications, might not be representative of larger or differently structured robots. The behaviors and results observed might vary if a different robot design is employed.

- Influence of Initial Configurations: Our results underline the significance of initial configurations on the exploration rate. In practical, real-world scenarios, there are numerous factors that might influence this rate simultaneously, which our study may not fully encompass.

- Single-Objective Perspective: The current study is bounded within a single-objective multi-agent framework. Transitioning to a multi-objective multi-agent paradigm could pave the way for a more encompassing and nuanced understanding of exploration algorithms.

- Dynamic Environments: Real-world scenarios frequently present dynamic environments with unpredictable changes. The current study does not extensively address dynamic environmental changes, which can impact the exploration rate and efficiency significantly.

- Exploration Repetition Rates: Variances in exploration repetition rates, especially in intricate scenarios, hint at potential gaps in our understanding that could impact the efficiency and performance of exploration algorithms.

These limitations, while inherent in the current scope of our research, also serve as potential avenues for further investigation and enhancement in subsequent studies.

5. Conclusions

This research has examined an often undervalued facet of multi-robot exploration: the influence of initial start positions. By introducing the hybrid cheetah exploration technique with intelligent initial configuration (HCETIIC), we have presented a strategy for optimizing exploration efficiency across varying configurations, including uniform distribution, centralized position, random positions, perimeter positions, clustered positions, and strategic positions.

The empirical findings reveal a clear and consistent pattern: the proposed HCETIIC method not only stands out in efficiency but also showcases adaptability across different start positions. The strategic position, as manifested through our analysis, offers a particular testament to the robustness of this approach.

Performance measures, such as runtime, the percentage of explored area, and failure rate, were meticulously evaluated to engage in a thorough comparative analysis with four other prominent hybrid methods. The results accentuate the significant potential of HCETIIC in enhancing efficiency in multi-robot exploration tasks across various real-world scenarios.

In addition to the exploration efficiencies and strategies discussed, this study underscores the importance of computational complexity in multi-robot exploration. As the field advances, balancing exploration efficiency with computational demands will be paramount.

The implications of this research are far-reaching. It underscores the critical role of initial robot configuration, a component that has often been overlooked in the field. By considering this variable, the study paves the way for new avenues in multi-robot exploration, encompassing both theoretical advances and practical applications.