Multiview-Learning-Based Generic Palmprint Recognition: A Literature Review

Abstract

1. Introduction

- (1) We comprehensively introduce the different types of open-set palmprint databases used in real-world application scenarios.

- (2) To the best of our knowledge, this study is the first to provide a detailed and comprehensive summary and analysis of multiview palmprint recognition methods.

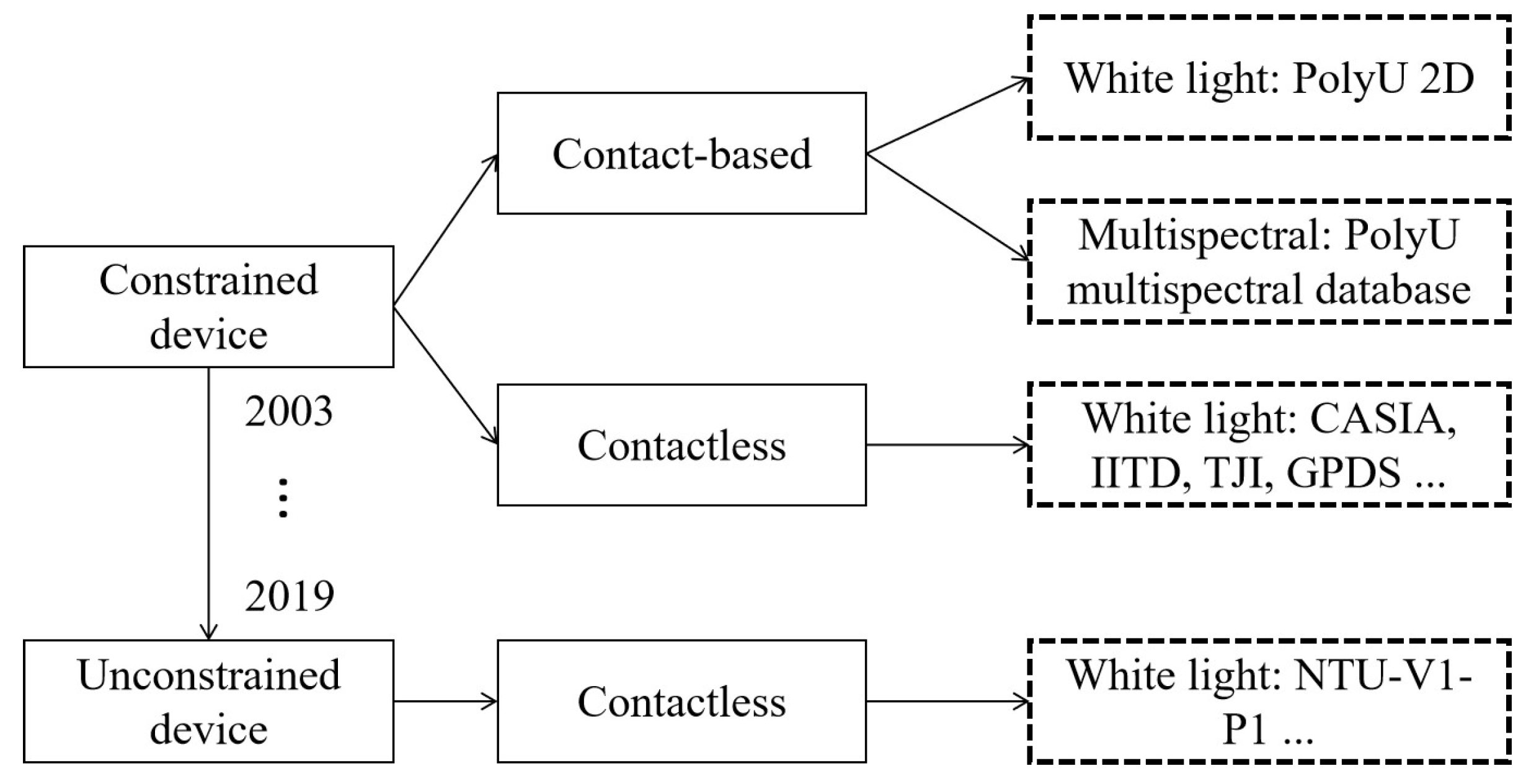

2. Generic Palmprint Databases and Image Preprocessing

2.1. Palmprint Dataset Construction

2.2. Palmprint ROI Segmentation

3. Multiview Palmprint Recognition

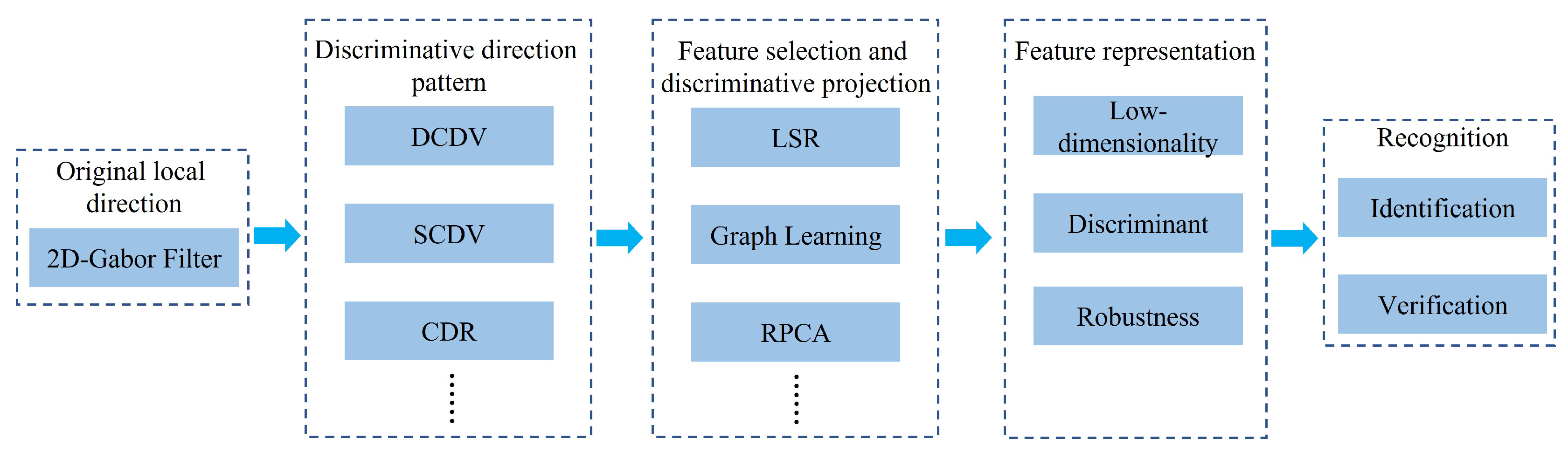

3.1. Multiview Feature Containers

3.2. Multiview Palmprint Representation

3.3. Analysis

- (1) Single-view palmprint representation methods usually perform satisfactorily in a specific scene. However, when the application environment changes, their feature representation ability decreases.

- (2) Since multiview feature learning can exploit complementary features from diverse views, multiview palmprint representation methods can achieve stable recognition results by enhancing the expressiveness of palmprint features.

- (3) Multiview palmprint recognition can adapt to more complex application scenarios, in which single-view palmprint representation has significant limitations.
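Point (2) can be made concrete with the simplest form of multiview learning, feature-level fusion by concatenation. The sketch below is a generic baseline under stated assumptions, not the method of any surveyed work: each view is a plain list of floats, each view is L2-normalized and optionally weighted before concatenation, and identification uses a nearest-neighbor rule with Euclidean distance. All function names and the example data are hypothetical.

```python
import math


def l2_normalize(vec):
    """Scale a feature vector to unit Euclidean length; an all-zero vector is returned unchanged."""
    norm = math.sqrt(sum(v * v for v in vec))
    if norm == 0:
        return list(vec)
    return [v / norm for v in vec]


def fuse_views(views, weights=None):
    """Feature-level fusion: L2-normalize each view, scale it by its weight, then concatenate.

    Normalizing first keeps a view with large raw magnitudes from dominating the fused vector.
    """
    if weights is None:
        weights = [1.0] * len(views)
    if len(weights) != len(views):
        raise ValueError("need exactly one weight per view")
    fused = []
    for vec, w in zip(views, weights):
        fused.extend(w * v for v in l2_normalize(vec))
    return fused


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def identify(probe_views, gallery, weights=None):
    """Return the label of the enrolled template whose fused vector is closest to the probe's.

    gallery: list of (label, views) pairs; views must be in the same order as probe_views.
    """
    probe = fuse_views(probe_views, weights)
    label, _ = min(
        ((lbl, euclidean(probe, fuse_views(views, weights))) for lbl, views in gallery),
        key=lambda pair: pair[1],
    )
    return label


if __name__ == "__main__":
    # Two hypothetical enrolled palms, each with a 3-D "texture" view and a 2-D "direction" view.
    gallery = [
        ("palm_A", [[1.0, 0.0, 0.0], [0.2, 0.8]]),
        ("palm_B", [[0.0, 1.0, 0.0], [0.9, 0.1]]),
    ]
    probe = [[0.9, 0.1, 0.0], [0.3, 0.7]]
    print(identify(probe, gallery))  # palm_A
```

Per-view normalization is the design choice that matters here: without it, whichever view has the largest raw magnitude would effectively decide the match, and the complementary information from the other views would be lost.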

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Fei, L.; Zhang, B.; Xu, Y.; Guo, Z.; Wen, J.; Jia, W. Learning discriminant direction binary palmprint descriptor. IEEE Trans. Image Process. 2019, 28, 3808–3820.

- Zhao, S.; Zhang, B. Learning complete and discriminative direction pattern for robust palmprint recognition. IEEE Trans. Image Process. 2020, 30, 1001–1014.

- Ungureanu, A.S.; Salahuddin, S.; Corcoran, P. Toward unconstrained palmprint recognition on consumer devices: A literature review. IEEE Access 2020, 8, 86130–86148.

- Genovese, A.; Piuri, V.; Plataniotis, K.N.; Scotti, F. PalmNet: Gabor-PCA Convolutional Networks for Touchless Palmprint Recognition. IEEE Trans. Inf. Forensics Secur. 2019, 14, 3160–3174.

- Zhang, L.; Li, L.; Yang, A.; Shen, Y.; Yang, M. Towards contactless palmprint recognition: A novel device, a new benchmark, and a collaborative representation based identification approach. Pattern Recognit. 2017, 69, 199–212.

- Gao, Q.; Xia, W.; Wan, Z.; Xie, D.; Zhang, P. Tensor-SVD based graph learning for multi-view subspace clustering. Proc. AAAI Conf. Artif. Intell. 2020, 34, 3930–3937.

- Chen, Y.; Xiao, X.; Zhou, Y. Multi-view subspace clustering via simultaneously learning the representation tensor and affinity matrix. Pattern Recognit. 2020, 106, 107441.

- Chen, Y.; Xiao, X.; Zhou, Y. Jointly learning kernel representation tensor and affinity matrix for multi-view clustering. IEEE Trans. Multimed. 2020, 22, 1985–1997.

- Zhao, S.; Zhang, B. Deep discriminative representation for generic palmprint recognition. Pattern Recognit. 2020, 98, 107071.

- Zhong, D.; Du, X.; Zhong, K. Decade progress of palmprint recognition: A brief survey. Neurocomputing 2019, 328, 16–28.

- Chowdhury, A.M.M.; Imtiaz, M.H. Contactless Fingerprint Recognition Using Deep Learning—A Systematic Review. J. Cybersecur. Priv. 2022, 2, 714–730.

- Zhao, S.; Zhang, B. Robust adaptive algorithm for hyperspectral palmprint region of interest extraction. IET Biom. 2019, 8, 391–400.

- Kumar, A.; Zhang, D. Personal recognition using hand shape and texture. IEEE Trans. Image Process. 2006, 15, 2454–2461.

- Younesi, A.; Amirani, M.C. Gabor filter and texture based features for palmprint recognition. Procedia Comput. Sci. 2017, 108, 2488–2495.

- Huang, D.-S.; Jia, W.; Zhang, D. Palmprint verification based on principal lines. Pattern Recognit. 2008, 41, 1316–1328.

- Guo, Z.; Zhang, D.; Zhang, L.; Zuo, W. Palmprint verification using binary orientation co-occurrence vector. Pattern Recognit. Lett. 2009, 30, 1219–1227.

- Jia, W.; Zhang, B.; Lu, J.; Zhu, Y.; Zhao, Y.; Zuo, W.; Ling, H. Palmprint recognition based on complete direction representation. IEEE Trans. Image Process. 2017, 26, 4483–4498.

- Fei, L.; Xu, Y.; Zhang, B.; Fang, X.; Wen, J. Low-rank representation integrated with principal line distance for contactless palmprint recognition. Neurocomputing 2016, 218, 264–275.

- Zhao, S.; Zhang, B. Learning salient and discriminative descriptor for palmprint feature extraction and identification. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 5219–5230.

- Li, G.; Kim, J. Palmprint recognition with local micro-structure tetra pattern. Pattern Recognit. 2017, 61, 29–46.

- Jia, W.; Ren, Q.; Zhao, Y.; Li, S.; Min, H.; Chen, Y. EEPNet: An efficient and effective convolutional neural network for palmprint recognition. Pattern Recognit. Lett. 2022, 159, 140–149.

- Fei, L.; Zhang, B.; Teng, S.; Guo, Z.; Li, S.; Jia, W. Joint Multiview Feature Learning for Hand-Print Recognition. IEEE Trans. Instrum. Meas. 2020, 69, 9743–9755.

- NTU Forensic Image Databases. Available online: https://github.com/BFLTeam/NTU_Dataset (accessed on 1 October 2019).

- Wang, X.; Fu, L.; Zhang, Y.; Wang, Y.; Li, Z. MMatch: Semi-supervised Discriminative Representation Learning For Multi-view Classification. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6425–6436.

- Li, J.; Zhang, B.; Lu, G.; Zhang, D. Generative multi-view multi-feature learning for classification. Inf. Fusion 2019, 45, 215–256.

- Li, J.; Li, Z.; Lu, G.; Xu, Y.; Zhang, D. Asymmetric gaussian process multi-view learning for visual classification. Inf. Fusion 2021, 65, 108–118.

- Zhao, S.; Wu, J.; Fei, L.; Zhang, B.; Zhao, P. Double-cohesion learning based multiview and discriminant palmprint recognition. Inf. Fusion 2022, 83, 96–109.

- Zhao, S.; Fei, L.; Wen, J.; Wu, J.; Zhang, B. Intrinsic and Complete Structure Learning Based Incomplete Multiview Clustering. IEEE Trans. Multimed. 2021.

- Yang, M.; Deng, C.; Nie, F. Adaptive-weighting discriminative regression for multi-view classification. Pattern Recognit. 2019, 88, 236–245.

- Tao, H.; Hou, C.; Yi, D.; Zhu, J. Multiview classification with cohesion and diversity. IEEE Trans. Cybern. 2018, 50, 2124–2137.

- Shu, T.; Zhang, B.; Tang, Y.Y. Multi-view classification via a fast and effective multi-view nearest-subspace classifier. IEEE Access 2019, 7, 49669–49679.

- Gupta, A.; Khan, R.U.; Singh, V.K.; Tanveer, M.; Kumar, D.; Chakraborti, A.; Pachori, R.B. A novel approach for classification of mental tasks using multiview ensemble learning (MEL). Neurocomputing 2020, 417, 558–584.

- Lin, C.; Kumar, A. Contactless and partial 3D fingerprint recognition using multi-view deep representation. Pattern Recognit. 2018, 83, 314–327.

- Zhao, S.; Fei, L.; Wen, J.; Zhang, B.; Zhao, P.; Li, S. Structure Suture Learning-Based Robust Multiview Palmprint Recognition. IEEE Trans. Neural Netw. Learn. Syst. 2022.

- Liang, X.; Li, Z.; Fan, D.; Zhang, B.; Lu, G.; Zhang, D. Innovative contactless palmprint recognition system based on dual-camera alignment. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 6464–6476.

- CASIA Palmprint Image Database. Available online: http://biometrics.idealtest.org/ (accessed on 1 January 2018).

- GPDS Palmprint Image Database. Available online: http://www.gpds.ulpgc.es (accessed on 1 May 2016).

- Kumar, A. Incorporating cohort information for reliable palmprint authentication. In Proceedings of the 2008 Sixth Indian Conference on Computer Vision, Graphics & Image Processing, Bhubaneswar, India, 16–19 December 2008; pp. 583–590.

- REgim Sfax Tunisian Hand Database. Available online: http://www.regim.org/publications/databases/REST/ (accessed on 2 September 2020).

- Zhang, D.; Kong, W.-K.; You, J.; Wong, M. Online palmprint identification. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1041–1050.

- Zhang, D.; Guo, Z.; Lu, G.; Zhang, L.; Zuo, W. An online system of multispectral palmprint verification. IEEE Trans. Instrum. Meas. 2009, 59, 480–490.

- Zhao, S.; Zhang, B.; Chen, C.P. Joint deep convolutional feature representation for hyperspectral palmprint recognition. Inf. Sci. 2019, 489, 167–181.

- Zhao, S.; Zhang, B. Joint constrained least-square regression with deep convolutional feature for palmprint recognition. IEEE Trans. Syst. Man Cybern. Syst. 2020, 52, 511–522.

- Harun, N.; Abd Rahman, W.E.Z.W.; Abidin, S.Z.Z.; Othman, P.J. New algorithm of extraction of palmprint region of interest (ROI). J. Phys. Conf. Ser. 2017, 890, 012024.

- Li, Q.; Lai, H.; You, J. A novel method for touchless palmprint ROI extraction via skin color analysis. In Recent Trends in Intelligent Computing, Communication and Devices; Springer: Singapore, 2020; pp. 271–276.

- Chai, T.; Wang, S.; Sun, D. A palmprint ROI extraction method for mobile devices in complex environment. In Proceedings of the 2016 IEEE 13th International Conference on Signal Processing (ICSP), Chengdu, China, 6–10 November 2016; pp. 1342–1346.

- Mokni, R.; Drira, H.; Kherallah, M. Combining shape analysis and texture pattern for palmprint identification. Multimed. Tools Appl. 2017, 76, 23981–24008.

- Gao, F.; Cao, K.; Leng, L.; Yuan, Y. Mobile palmprint segmentation based on improved active shape model. J. Multimed. Inf. Syst. 2018, 5, 221–228.

- Aykut, M.; Ekinci, M. Developing a contactless palmprint authentication system by introducing a novel ROI extraction method. Image Vis. Comput. 2015, 40, 65–74.

- Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1867–1874.

- Matkowski, W.M.; Chai, T.; Kong, A.W.K. Palmprint recognition in uncontrolled and uncooperative environment. IEEE Trans. Inf. Forensics Secur. 2019, 15, 1601–1615.

- Izadpanahkakhk, M.; Razavi, S.; Taghipour-Gorjikolaie, M.; Zahiri, S.; Uncini, A. Deep region of interest and feature extraction models for palmprint verification using convolutional neural networks transfer learning. Appl. Sci. 2018, 8, 1210.

- Liu, Y.; Kumar, A. A deep learning based framework to detect and recognize humans using contactless palmprints in the wild. arXiv 2018, arXiv:1812.11319.

- Leng, L.; Gao, F.; Chen, Q.; Kim, C. Palmprint recognition system on mobile devices with double-line-single-point assistance. Pers. Ubiquitous Comput. 2018, 22, 93–104.

- Afifi, M. 11K hands: Gender recognition and biometric identification using a large dataset of hand images. Multimed. Tools Appl. 2019, 78, 20835–20854.

- Xiao, Q.; Lu, J.; Jia, W.; Liu, X. Extracting palmprint ROI from whole hand image using straight line clusters. IEEE Access 2019, 7, 74327–74339.

- Lin, S.; Xu, T.; Yin, X. Region of interest extraction for palmprint and palm vein recognition. In Proceedings of the 2016 9th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Datong, China, 15–17 October 2016; pp. 538–542.

- Michele, A.; Colin, V.; Santika, D. Mobilenet convolutional neural networks and support vector machines for palmprint recognition. Procedia Comput. Sci. 2019, 157, 110–117.

- Daas, S.; Yahi, A.; Bakir, T.; Sedhane, M.; Boughazi, M.; Bourennane, E. Multimodal biometric recognition systems using deep learning based on the finger vein and finger knuckle print fusion. IET Image Process. 2021, 14, 3859–3868.

- Liu, Y.; Kumar, A. Contactless palmprint identification using deeply learned residual features. IEEE Trans. Biom. Behav. Identity Sci. 2020, 2, 172–181.

- Idrssi, A.E.; Merabet, Y.E.; Ruichek, Y. Palmprint recognition using state-of-the-art local texture descriptors: A comparative study. IET Biom. 2020, 9, 143–153.

- Wu, X.; Zhang, D.; Wang, K.; Huang, B. Palmprint classification using principal lines. Pattern Recognit. 2004, 37, 1987–1998.

- Sun, Z.; Tan, T.; Wang, Y.; Li, S.Z. Ordinal palmprint represention for personal identification. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 2005, 1, 279–284.

- Fei, L.; Zhang, B.; Zhang, W.; Teng, S. Local apparent latent direction extraction for palmprint recognition. Inform. Sci. 2019, 473, 59–72.

- Annadurai, C.; Nelson, I.; Devi, K.N.; Manikandan, R.; Jhanjhi, N.Z.; Masud, M.; Sheikh, A. Biometric Authentication-Based Intrusion Detection Using Artificial Intelligence Internet of Things in Smart City. Energies 2022, 15, 7430.

- Abdullahi, S.B.; Khunpanuk, C.; Bature, Z.A.; Chiroma, H.; Pakkaranang, N.; Abubakar, A.B.; Ibrahim, A.H. Biometric Information Recognition Using Artificial Intelligence Algorithms: A Performance Comparison. IEEE Access 2022, 10, 49167–49183.

- Chen, Y.; Yi, Z. Locality-constrained least squares regression for subspace clustering. Knowl.-Based Syst. 2019, 163, 51–56.

- Wang, M.; Wang, Q.; Hong, D.; Roy, S.K.; Chanussot, J. Learning Tensor Low-Rank Representation for Hyperspectral Anomaly Detection. IEEE Trans. Cybern. 2022, 53, 679–691.

- Wu, D.; Chang, W.; Lu, J.; Nie, F.; Wang, R.; Li, X. Adaptive-order proximity learning for graph-based clustering. Pattern Recognit. 2022, 126, 108550.

- Zha, Z.; Wen, B.; Yuan, X.; Zhou, J.; Zhu, C.; Kot, A.C. Low-rankness guided group sparse representation for image restoration. IEEE Trans. Neural Netw. Learn. Syst. 2022.

- Zhao, S.; Zhang, B.; Li, S. Discriminant sparsity based least squares regression with l1 regularization for feature representation. In Proceedings of the IEEE International Conference on Acoustics, Speech Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 1504–1508.

- Zhao, S.; Wu, J.; Zhang, B.; Fei, L. Low-rank inter-class sparsity based semi-flexible target least squares regression for feature representation. Pattern Recognit. 2022, 123, 108346.

- Liu, G.; Yan, S.C. Latent low-rank representation for subspace segmentation and feature extraction. In Proceedings of the, Barcelona, Spain, 6–13 November 2011; pp. 1615–1622.

- Zhang, Y.; Xiang, M.; Yang, B. Low-rank preserving embedding. Pattern Recognit. 2017, 70, 112–125.

- Wong, W.K.; Lai, Z.; Wen, J.; Fang, X.; Lu, Y. Low-rank embedding for robust image feature extraction. IEEE Trans. Image Process. 2017, 26, 2905–2917.

- Wu, Z.; Liu, S.; Ding, C.; Ren, Z.; Xie, S. Learning graph similarity with large spectral gap. IEEE Trans. Syst. Man, Cybern. Syst. 2021, 51, 1590–1600.

- Li, Z.; Nie, F.; Chang, X.; Yang, Y.; Zhang, C.; Sebe, N. Dynamic affinity graph construction for spectral clustering using multiple features. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 6323–6332.

- Lu, J.; Wang, H.; Zhou, J.; Chen, Y.; Lai, Z.; Hu, Q. Low-rank adaptive graph embedding for unsupervised feature extraction. Pattern Recognit. 2021, 113, 107758.

- Qiao, L.; Chen, S.; Tan, X. Sparsity preserving projections with applications to face recognition. Pattern Recognit. 2010, 43, 331–341.

- Yang, W.; Wang, Z.; Sun, C. A collaborative representation based projections method for feature extraction. Pattern Recognit. 2015, 48, 20–27.

- Elhamifar, E.; Vidal, R. Sparse subspace clustering: Algorithm, theory, and applications. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2765–2781.

- Zheng, S.; Cai, X.; Ding, C.; Nie, F.; Huang, H. A closed form solution to multi-view low-rank regression. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–29 January 2015; pp. 1973–1979.

- Yaxin, Z.; Huanhuan, L.; Xuefei, G.; Lili, L. Palmprint recognition based on multi-feature integration. In Proceedings of the 2016 IEEE Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Xi’an, China, 3–5 October 2016; pp. 992–995.

- Zhao, S.; Nie, W.; Zhang, B. Multi-feature fusion using collaborative residual for hyperspectral palmprint recognition. In Proceedings of the 2018 IEEE 4th International Conference on Computer and Communications (ICCC), Chengdu, China, 7–10 December 2018; pp. 1402–1406.

- Zheng, Y.; Fei, L.; Wen, J.; Teng, S.; Zhang, W.; Rida, I. Joint Multiple-type Features Encoding for Palmprint Recognition. In Proceedings of the 2020 IEEE Symposium Series on Computational Intelligence (SSCI), Canberra, ACT, Australia, 1–4 December 2020; pp. 1710–1717.

- Jaswal, G.; Kaul, A.; Nath, R. Multiple feature fusion for unconstrained palmprint authentication. Comput. Electr. Eng. 2018, 72, 53–78.

- Dai, J.; Zhou, J. Multifeature-based high-resolution palmprint recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 945–957.

- Zhou, Q.; Jia, W.; Yu, Y. Multi-stream Convolutional Neural Networks Fusion for Palmprint Recognition. In Proceedings of the Chinese Conference on Biometric Recognition, Beijing, China, 11–13 November 2022; pp. 72–81.

- Liang, L.; Chen, T.; Fei, L. Orientation space code and multi-feature two-phase sparse representation for palmprint recognition. Int. J. Mach. Learn. Cybern. 2020, 11, 1453–1461.

- Jia, W.; Ling, B.; Chau, K.W.; Heutte, L. Palmprint identification using restricted fusion. Appl. Math. Comput. 2008, 205, 927–934.

- Fei, L.; Zhang, B.; Zhang, L.; Jia, W.; Wen, J.; Wu, J. Learning compact multifeature codes for palmprint recognition from a single training image per palm. IEEE Trans. Multimed. 2020, 23, 2930–2942.

- Gayathri, R.; Ramamoorthy, P. Multifeature palmprint recognition using feature level fusion. Int. J. Eng. Res. Appl. 2012, 2, 1048–1054.

- You, J.; Kong, W.K.; Zhang, D.; Cheung, K.H. On hierarchical palmprint coding with multiple features for personal identification in large databases. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 234–243.

- Badrinath, G.S.; Gupta, P. An efficient multi-algorithmic fusion system based on palmprint for personnel identification. In Proceedings of the 15th International Conference on Advanced Computing and Communications (ADCOM 2007), Guwahati, India, 18–21 December 2007; pp. 759–764.

- Zhou, J.; Sun, D.; Qiu, Z.; Xiong, K.; Liu, D.; Zhang, Y. Palmprint recognition by fusion of multi-color components. In Proceedings of the 2009 International Conference on Cyber-Enabled Distributed Computing and Knowledge Discovery, Zhangjiajie, China, 10–11 October 2009; pp. 273–278.

- Zhang, S.; Wang, H.; Huang, W. Palmprint identification combining hierarchical multi-scale complete LBP and weighted SRC. Soft Comput. 2020, 24, 4041–4053.

- Zhou, L.; Guo, H.; Lin, S.; Hao, S.; Zhao, K. Combining multi-wavelet and CNN for palmprint recognition against noise and misalignment. IET Image Process. 2019, 13, 1470–1478.

- Zhang, L.; Shen, Y.; Li, H.; Lu, J. 3D palmprint identification using block-wise features and collaborative representation. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 1730–1736.

- Wu, L.; Xu, Y.; Cui, Z.; Zuo, Y.; Zhao, S.; Fei, L. Triple-type feature extraction for palmprint recognition. Sensors 2021, 21, 4896.

- Ahmad, M.I.; Woo, W.L.; Dlay, S. Non-stationary feature fusion of face and palmprint multimodal biometrics. Neurocomputing 2016, 177, 49–61.

- Izadpanahkakhk, M.; Razavi, S.M.; Taghipour-Gorjikolaie, M.; Zahiri, S.H.; Uncini, A. Joint feature fusion and optimization via deep discriminative model for mobile palmprint verification. J. Electron. Imaging 2019, 28, 043026.

- Attallah, B.; Brik, Y.; Chahir, Y.; Djerioui, M.; Boudjelal, A. Fusing palmprint, finger-knuckle-print for bi-modal recognition system based on LBP and BSIF. In Proceedings of the 2019 6th International Conference on Image and Signal Processing and their Applications (ISPA), Mostaganem, Algeria, 24–25 November 2019; pp. 1–5.

- Li, Z.; Liang, X.; Fan, D.; Li, J.; Zhang, D. BPFNet: A unified framework for bimodal palmprint alignment and fusion. In Proceedings of the International Conference on Neural Information Processing, Bali, Indonesia, 8–12 December 2021; pp. 28–36.

- Rane, M.E.; Bhadade, U. Face and palmprint Biometric recognition by using weighted score fusion technique. In Proceedings of the 2020 IEEE Pune Section International Conference (PuneCon), Pune, India, 16–18 December 2020; pp. 11–16.

- Fei, L.; Qin, J.; Liu, P.; Wen, J.; Tian, C.; Zhang, B.; Zhao, S. Jointly Learning Multiple Curvature Descriptor for 3D Palmprint Recognition. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 302–308.

| Database | Total Images | Individuals | Contactless or Contact-Based | Pose Variation | Year |
|---|---|---|---|---|---|
| CASIA | 5501 | 310 | Contactless | Small | 2005 |
| GPDS | 1000 | 100 | Contactless | Small | 2011 |
| TJI | 12,000 | 300 | Contactless | Small | 2017 |
| NTU-PI-V1 | 7781 | 1093 | Contactless | Large | 2019 |
| REST | 1945 | 179 | Contactless | Small | 2021 |
| IITD | 2601 | 230 | Contactless | Small | 2006 |
| PolyU | 6000 | 500 | Contact-based | None | 2003 |
| PV_790 | 5180 | 2109 | Contact-based | None | 2018 |
| M_NIR | 6000 | 500 | Contact-based | None | 2009 |
| M_Blue | 6000 | 500 | Contact-based | None | 2009 |

| Method | Authors | Ref. | Year |
|---|---|---|---|
| Active shape model | Gao, F.; Cao, K. | [48] | 2018 |
| Active appearance model | Aykut, M.; Ekinci, M. | [49] | 2015 |
| Ensemble of regression trees | Kazemi, V.; Sullivan, J. | [50] | 2014 |
| Key point detection | Matkowski, W.M.; Chai, T.; Kong, A.W.K. | [51] | 2019 |
| CNN transfer learning | Izadpanahkakhk, M.; Razavi, S. | [52] | 2018 |
| Palmprint detector | Liu, Y.; Kumar, A. | [53] | 2018 |
| On-screen guide | Leng, L.; Gao, F.; Chen, Q.; Kim, C. | [54] | 2018 |
| Whole image | Afifi, M. | [55] | 2019 |
| Robust adaptive hyperspectral ROI extraction | Zhao, S.; Zhang, B. | [12] | 2019 |

| Method | Ref. | Year | Description | Classifier | Databases |
|---|---|---|---|---|---|
| LDDBP | [1] | 2019 | Dominant direction learning | Chi-square distance | CASIA, IITD, TJI, HFUT |
| LCDDP | [2] | 2021 | Complete and discriminative direction | Euclidean distance | PolyU, IITD, CASIA, GPDS, REST, M_NIR, PV_790 |
| Local texture descriptor | [61] | 2020 | Local orientation encoding | distance | TJI, CASIA, GPDS, IITD |
| Principal lines | [62] | 2004 | Principal line detection | Chi-square distance | PolyU |
| Ordinal palmprint feature | [63] | 2005 | Ordinal direction encoding | Hamming distance | PolyU |
| DDR | [9] | 2020 | Deep discriminative representation | Euclidean distance | CASIA, IITD, M_NIR, M_B, M_G, M_R |
| JDCFR | [42] | 2019 | Joint deep representation | Euclidean distance | Hyperspectral palmprint database |
| PalmNet | [4] | 2019 | Deep palmprint representation | Euclidean distance | CASIA, IITD, REST, TJI |
| ALDC | [64] | 2019 | Local apparent latent direction | Chi-square distance | PolyU, IITD, GPDS, CASIA |
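Most single-view methods in the table above match a probe to enrolled templates with a nearest-neighbor rule under a fixed distance. As a hedged sketch in plain Python (the function names are hypothetical and not taken from the cited works), the three distances named in the Classifier column and a generic nearest-neighbor rule can be written as follows. The chi-square form used here, the sum of (a − b)²/(a + b), is one common convention; some works add a factor of 1/2, and the cited methods may normalize differently.

```python
import math


def chi_square_distance(h1, h2, eps=1e-12):
    """Chi-square distance between two histograms: sum((a - b)^2 / (a + b)).

    eps guards against division by zero when both bins are empty.
    """
    return sum((a - b) ** 2 / (a + b + eps) for a, b in zip(h1, h2))


def euclidean_distance(f1, f2):
    """Euclidean distance between two real-valued feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(f1, f2)))


def hamming_distance(c1, c2):
    """Normalized Hamming distance: fraction of positions where two binary codes differ."""
    if len(c1) != len(c2):
        raise ValueError("codes must have the same length")
    return sum(a != b for a, b in zip(c1, c2)) / len(c1)


def nearest_neighbor(probe, gallery, distance):
    """Return the label of the (label, template) pair with the smallest distance to the probe."""
    return min(gallery, key=lambda item: distance(probe, item[1]))[0]


if __name__ == "__main__":
    # Hypothetical 6-bit orientation codes for two enrolled palms.
    gallery = [("palm_A", [1, 0, 1, 1, 0, 0]), ("palm_B", [0, 1, 0, 0, 1, 1])]
    probe = [1, 0, 1, 0, 0, 0]
    print(nearest_neighbor(probe, gallery, hamming_distance))  # palm_A
```

Passing the distance as a parameter mirrors how the table separates the descriptor (Description column) from the matcher (Classifier column): histogram-style descriptors pair naturally with the chi-square distance, binary codes with the Hamming distance, and real-valued deep features with the Euclidean distance.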

| Class | Method | Ref. | Year | Brief Description | Contribution to MFL |
|---|---|---|---|---|---|
| LSR | LC_LSR | [67] | 2019 | Local structure preservation | Provides a consistent subspace for feature enhancement |
|  | DS_LSR | [71] | 2020 | Self-representation for local structure capture |  |
|  | LIS_LSR | [72] | 2022 | Low-rank structure learning for robust representation |  |
| LR-R | LRR | [73] | 2011 | Latent low-rank structure representation | Provides a low-rank representation for robust recognition |
|  | LRP | [74] | 2017 | Low-rank preserving embedding |  |
|  | LR_IMF | [75] | 2017 | Low-rank projection learning |  |
| Graph learning | LRS_LSG | [76] | 2021 | Learning graph similarity | Utilizes the local structures between different samples to enhance representation |
|  | DAG_SC | [77] | 2018 | Dynamic affinity graph construction |  |
|  | LRAG | [78] | 2021 | Low-rank adaptive graph embedding |  |
| SR | SPP | [79] | 2010 | Sparsity preserving projections | Structure reconstruction and robust representation |
|  | CRP | [80] | 2015 | Collaborative-representation-based projections |  |
|  | SSL | [81] | 2013 | Sparse subspace clustering |  |
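To make the LSR class in the table above concrete, a minimal reference point is plain ridge-regularized least squares regression in its label-regression form. The notation is assumed for illustration and is not taken from the cited works: $X \in \mathbb{R}^{n \times d}$ stacks $n$ training feature vectors as rows, $Y \in \{0,1\}^{n \times c}$ holds their one-hot labels over $c$ classes, and $\lambda > 0$ is a regularization weight. The listed LSR variants add locality, sparsity, or low-rank terms to least-squares objectives of this kind, and some regress onto the data itself (self-representation) rather than onto labels.

$$
\begin{aligned}
&\min_{W \in \mathbb{R}^{d \times c}} \ \|XW - Y\|_F^2 + \lambda \|W\|_F^2, \\
&2X^{\top}(XW - Y) + 2\lambda W = 0
\;\Longrightarrow\;
W^{*} = \left(X^{\top}X + \lambda I_d\right)^{-1} X^{\top} Y, \\
&\hat{y}(x) = \arg\max_{j \in \{1,\dots,c\}} \left(x^{\top} W^{*}\right)_j .
\end{aligned}
$$

Because $\lambda > 0$, the matrix $X^{\top}X + \lambda I_d$ is positive definite and therefore invertible, so the closed-form solution always exists even when $n < d$, which is common for palmprint features.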

| Method | Ref. | Year | Description | Databases | Application Categories |
|---|---|---|---|---|---|
| DC_MDPR | [27] | 2022 | Discriminative representation with double-cohesion learning | CASIA, IITD, GPDS, TJI, M_NIR, PV_790 | Contact-based, contactless, palm vein |
| SSL_RMPR | [34] | 2022 | Multiview representation in the same subspace | CASIA, IITD, GPDS, TJI, PV_790, DHV_860 | Contact-based, contactless, palm vein, dorsal hand vein |
| JMvFL | [22] | 2020 | Joint multiview feature learning | PolyU, CASIA, TJI, GPDS, PolyU_FKP | Contact-based, contactless, finger-knuckle-print |
| PR_MFR | [83] | 2016 | Multi-feature integration with local and global features | PolyU | Contact-based |
| MFF_CRPR | [84] | 2018 | Multi-feature fusion using collaborative residual | Hyperspectral palmprint database | Hyperspectral |
| GMF Encoding | [85] | 2020 | Joint multiple-type feature encoding | CASIA, IITD, TJI | Contactless |
| MFF_UPR | [86] | 2018 | Multiple feature fusion at the feature level | CASIA, IITD, PolyU | Contact-based, contactless |
| MHPR | [87] | 2010 | Feature fusion with four types of features | High-resolution palmprint database | High-resolution |
| MSCNN | [88] | 2022 | Multi-stream CNN fusion | PolyU, M_B, HFUT, TJI | Contact-based, contactless |
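Several methods in the table above combine information from multiple features or streams. Besides concatenating features, a common alternative is score-level fusion, in which each view is matched separately and the per-view similarity scores are combined. The sketch below is a generic weighted-sum fusion with min-max normalization, written in plain Python; it is not the fusion rule of any listed method, and the function names, view names, weights, and scores are hypothetical. Min-max normalization is one of several possible choices (z-score normalization is another).

```python
def min_max_normalize(scores):
    """Rescale one view's similarity scores to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0] * len(scores)
    return [(s - lo) / (hi - lo) for s in scores]


def weighted_score_fusion(view_scores, weights):
    """Combine per-view similarity scores (higher = more similar) by a weighted sum.

    view_scores: one list of scores per view, each in the same gallery order.
    weights: one non-negative weight per view; they are rescaled to sum to 1.
    """
    if len(view_scores) != len(weights):
        raise ValueError("need exactly one weight per view")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    fused = [0.0] * len(view_scores[0])
    for scores, w in zip(view_scores, weights):
        for i, s in enumerate(min_max_normalize(scores)):
            fused[i] += (w / total) * s
    return fused


def identify_by_score_fusion(gallery_labels, view_scores, weights):
    """Return the gallery label with the highest fused score, plus all fused scores."""
    fused = weighted_score_fusion(view_scores, weights)
    best = max(range(len(fused)), key=fused.__getitem__)
    return gallery_labels[best], fused


if __name__ == "__main__":
    labels = ["A", "B", "C"]
    texture_scores = [0.62, 0.70, 0.10]    # hypothetical texture-view similarities
    direction_scores = [0.90, 0.40, 0.30]  # hypothetical direction-view similarities
    print(identify_by_score_fusion(labels, [texture_scores], [1.0])[0])  # B
    print(identify_by_score_fusion(labels, [texture_scores, direction_scores], [0.5, 0.5])[0])  # A
```

In this toy example the texture view alone prefers gallery entry B, while the fused decision selects A because the direction view strongly supports it; this is the kind of complementarity between views that multiview methods aim to exploit.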

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, S.; Fei, L.; Wen, J. Multiview-Learning-Based Generic Palmprint Recognition: A Literature Review. Mathematics 2023, 11, 1261. https://doi.org/10.3390/math11051261

Zhao S, Fei L, Wen J. Multiview-Learning-Based Generic Palmprint Recognition: A Literature Review. Mathematics. 2023; 11(5):1261. https://doi.org/10.3390/math11051261

Chicago/Turabian Style: Zhao, Shuping, Lunke Fei, and Jie Wen. 2023. "Multiview-Learning-Based Generic Palmprint Recognition: A Literature Review" Mathematics 11, no. 5: 1261. https://doi.org/10.3390/math11051261