Inverse Problem of Recovering the Initial Condition for a Nonlinear Equation of the Reaction–Diffusion–Advection Type by Data Given on the Position of a Reaction Front with a Time Delay

Abstract

1. Introduction

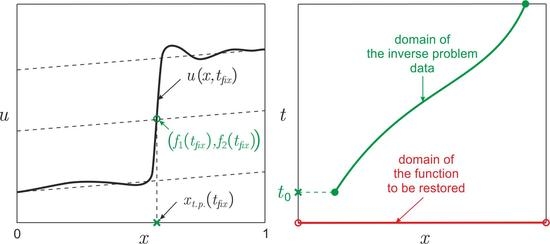

2. Statement of the Inverse Problem and a Gradient Method of Its Solution

- Set the iteration counter to zero and choose an initial guess for the unknown initial condition.

- Find the solution of the direct problem (1) for the current approximation of the initial condition.

- Find the solution of the adjoint problem, whose formulation involves the Dirac delta function and the Heaviside step function.

- Find the gradient of the functional (4).

- Find an approximate solution at the next step of the iteration by stepping from the current approximation against the gradient, with the step length set by the descent parameter.

- Check a condition for stopping the iterative process. If it is satisfied, take the current approximation as a solution of the inverse problem. Otherwise, increase the iteration counter by one and return to the second step (solving the direct problem).

- (a) In the case of experimental data measured with errors, the stopping criterion is stated in terms of the position of the reaction front determined by the direct problem (1) for a given function.

- (b) In the case of exact input data, the iterative process stops when the monitored quantity is less than the error of the finite-difference approximation.
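The iteration described above can be sketched in code. This is a minimal illustration under strong assumptions, not the scheme used in this article: the direct problem is replaced by a hypothetical linear map `A`, so the adjoint step reduces to multiplication by the transpose, and the names `gradient_descent`, `forward`, `gradient`, `beta`, and `delta` are introduced here for illustration only.

```python
import numpy as np


def gradient_descent(forward, gradient, q0, data, beta, delta, max_iter=10_000):
    """Minimise 0.5 * ||forward(q) - data||^2 by steepest descent.

    The loop stops as soon as the residual norm is at most `delta`
    (a discrepancy-type stopping rule) or after `max_iter` iterations.
    Returns the last approximation and the number of descent steps taken.
    """
    q = np.asarray(q0, dtype=float).copy()
    for s in range(max_iter):
        residual = forward(q) - data           # solve the "direct problem"
        if np.linalg.norm(residual) <= delta:  # stopping criterion
            return q, s
        q = q - beta * gradient(q, residual)   # step against the gradient
    return q, max_iter


# Toy stand-in for the direct problem: a linear map A q.
# ASSUMPTION: this is NOT the reaction-diffusion-advection equation.
A = np.array([[2.0, 0.0], [0.0, 1.0]])
q_true = np.array([1.0, -2.0])
data = A @ q_true  # exact (noise-free) synthetic observations

q_rec, n_iter = gradient_descent(
    forward=lambda q: A @ q,
    gradient=lambda q, r: A.T @ r,  # adjoint of a linear map is its transpose
    q0=np.zeros(2),                 # initial guess
    data=data,
    beta=0.2,                       # descent parameter (hypothetical value)
    delta=1e-8,                     # tolerance playing the role of the data error
)
```

For noisy data, `delta` would be set from the measurement error rather than to a tiny tolerance, so that the iteration stops before it starts fitting the noise.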

Examples of Numerical Calculations

3. Deep Machine Learning Method

Examples of Numerical Calculations

4. Discussion

- The theoretical justification of the uniqueness and stability of the solution of the inverse problem under consideration remains an open question and may be the subject of a separate work. In this article, we limited ourselves to testing the effectiveness of the proposed approach in numerical experiments.

- When constructing the objective functional, additional smoothing terms can be included (for example, in the form of Tikhonov’s functional [53]). We restricted ourselves to the least-squares cost functional, since it already gives rather good results.

- In applying deep machine learning, our aim was to demonstrate that problems of the considered type can, in principle, be solved by this method when experimental data are limited. For this purpose, we trained the neural network on a fairly good dataset. The choice of the optimal neural network configuration remains an open question of significant interest and may be the topic of a separate work.

- The methods of asymptotic analysis were used only to determine the function in the formulation of the direct problem. Other equivalent ways of defining this function are possible and will not affect the quality of the recovered solution.
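For illustration, a Tikhonov-type smoothing term of the kind mentioned in the second item can be added to a least-squares functional as shown here. The notation is an assumption made for this sketch and is not taken from the article: $q$ denotes the unknown initial condition, $f(t; q)$ the front position computed from the direct problem, $f_{\mathrm{obs}}(t)$ the observed front position on $[t_0, T]$, and $\alpha > 0$ a regularization parameter.

$$
J_\alpha[q] = \int_{t_0}^{T} \bigl( f(t; q) - f_{\mathrm{obs}}(t) \bigr)^2 \, dt + \alpha \, \| q \|_{L_2}^2 .
$$

Setting $\alpha = 0$ recovers the plain least-squares functional. The $L_2$ penalty is only one common choice of smoothing term.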

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Danilov, V.; Maslov, V.; Volosov, K. Mathematical Modelling of Heat and Mass Transfer Processes; Kluwer: Dordrecht, The Netherlands, 1995.

2. Butuzov, V.; Vasil’eva, A. Singularly perturbed problems with boundary and interior layers: Theory and applications. Adv. Chem. Phys. 1997, 97, 47–179.

3. Liu, Z.; Liu, Q.; Lin, H.C.; Schwartz, C.; Lee, Y.H.; Wang, T. Three-dimensional variational assimilation of MODIS aerosol optical depth: Implementation and application to a dust storm over East Asia. J. Geophys. Res. Atmos. 2010, 116.

4. Egger, H.; Fellner, K.; Pietschmann, J.F.; Tang, B.Q. Analysis and numerical solution of coupled volume-surface reaction-diffusion systems with application to cell biology. Appl. Math. Comput. 2018, 336, 351–367.

5. Yaparova, N. Method for determining particle growth dynamics in a two-component alloy. Steel Transl. 2020, 50, 95–99.

6. Wu, X.; Ni, M. Existence and stability of periodic contrast structure in reaction-advection-diffusion equation with discontinuous reactive and convective terms. Commun. Nonlinear Sci. Numer. Simul. 2020, 91, 105457.

7. Volpert, A.; Volpert, V.; Volpert, V. Traveling Wave Solutions of Parabolic Systems; American Mathematical Society: Providence, RI, USA, 2000.

8. Meinhardt, H. Models of Biological Pattern Formation; Academic Press: London, UK, 1982.

9. FitzHugh, R. Impulses and physiological states in theoretical models of nerve membrane. Biophys. J. 1961, 1, 445–466.

10. Murray, J. Mathematical Biology. I. An Introduction; Springer: New York, NY, USA, 2002.

11. Egger, H.; Pietschmann, J.F.; Schlottbom, M. Identification of nonlinear heat conduction laws. J. Inverse Ill-Posed Probl. 2015, 23, 429–437.

12. Gholami, A.; Mang, A.; Biros, G. An inverse problem formulation for parameter estimation of a reaction-diffusion model of low grade gliomas. J. Math. Biol. 2016, 72, 409–433.

13. Aliev, R.; Panfilov, A.V. A simple two-variable model of cardiac excitation. Chaos Solitons Fractals 1996, 7, 293–301.

14. Generalov, E.; Levashova, N.; Sidorova, A.; Chumankov, P.; Yakovenko, L. An autowave model of the bifurcation behavior of transformed cells in response to polysaccharide. Biophysics 2017, 62, 876–881.

15. Mang, A.; Gholami, A.; Davatzikos, C.; Biros, G. PDE-constrained optimization in medical image analysis. Optim. Eng. 2018, 19, 765–812.

16. Kabanikhin, S.I.; Shishlenin, M.A. Recovering a time-dependent diffusion coefficient from nonlocal data. Numer. Anal. Appl. 2018, 11, 38–44.

17. Mamkin, V.; Kurbatova, J.; Avilov, V.; Mukhartova, Y.; Krupenko, A.; Ivanov, D.; Levashova, N.; Olchev, A. Changes in net ecosystem exchange of CO2, latent and sensible heat fluxes in a recently clear-cut spruce forest in western Russia: Results from an experimental and modeling analysis. Environ. Res. Lett. 2016, 11, 125012.

18. Levashova, N.; Muhartova, J.; Olchev, A. Two approaches to describe the turbulent exchange within the atmospheric surface layer. Math. Model. Comput. Simul. 2017, 9, 697.

19. Levashova, N.; Sidorova, A.; Semina, A.; Ni, M. A spatio-temporal autowave model of Shanghai territory development. Sustainability 2019, 11, 3658.

20. Kadalbajoo, M.; Gupta, V. A brief survey on numerical methods for solving singularly perturbed problems. Appl. Math. Comput. 2010, 217, 3641–3716.

21. Cannon, J.; DuChateau, P. An inverse problem for a nonlinear diffusion equation. SIAM J. Appl. Math. 1980, 39, 272–289.

22. DuChateau, P.; Rundell, W. Unicity in an inverse problem for an unknown reaction term in a reaction-diffusion equation. J. Differ. Equ. 1985, 59, 155–164.

23. Pilant, M.; Rundell, W. An inverse problem for a nonlinear parabolic equation. Commun. Partial. Differ. Equ. 1986, 11, 445–457.

24. Kabanikhin, S. Definitions and examples of inverse and ill-posed problems. J. Inverse Ill-Posed Probl. 2008, 16, 317–357.

25. Kabanikhin, S. Inverse and Ill-Posed Problems: Theory and Applications; de Gruyter: Berlin, Germany, 2011.

26. Jin, B.; Rundell, W. A tutorial on inverse problems for anomalous diffusion processes. Inverse Probl. 2015, 31, 035003.

27. Belonosov, A.; Shishlenin, M. Regularization methods of the continuation problem for the parabolic equation. Lect. Notes Comput. Sci. 2017, 10187, 220–226.

28. Kaltenbacher, B.; Rundell, W. On the identification of a nonlinear term in a reaction-diffusion equation. Inverse Probl. 2019, 35, 115007.

29. Belonosov, A.; Shishlenin, M.; Klyuchinskiy, D. A comparative analysis of numerical methods of solving the continuation problem for 1D parabolic equation with the data given on the part of the boundary. Adv. Comput. Math. 2019, 45, 735–755.

30. Kaltenbacher, B.; Rundell, W. The inverse problem of reconstructing reaction-diffusion systems. Inverse Probl. 2020, 36, 065011.

31. Beck, J.V.; Blackwell, B.; St. Clair, C.R., Jr. Inverse Heat Conduction: Ill-Posed Problems; Wiley: New York, NY, USA, 1985.

32. Alifanov, O.M. Inverse Heat Transfer Problems; International Series in Heat and Mass Transfer; Springer: Berlin/Heidelberg, Germany, 1994.

33. Ismail-Zadeh, A.; Korotkii, A.; Schubert, G.; Tsepelev, I. Numerical techniques for solving the inverse retrospective problem of thermal evolution of the Earth interior. Comput. Struct. 2009, 87, 802–811.

34. Lavrent’ev, M.; Romanov, V.; Shishatskij, S. Ill-Posed Problems of Mathematical Physics and Analysis, Translations of Mathematical Monographs; Schulenberger, J.R., Translator; American Mathematical Society: Providence, RI, USA, 1986; Volume 64.

35. Isakov, V. Inverse Problems for Partial Differential Equations; Springer: New York, NY, USA, 2006.

36. Showalter, R.E. The final value problem for evolution equations. J. Math. Anal. Appl. 1974, 47, 563–572.

37. Ames, K.A.; Clark, G.W.; Epperson, J.F.; Oppenheimer, S.F. A comparison of regularizations for an ill-posed problem. Math. Comput. 1998, 67, 1451–1471.

38. Seidman, T.I. Optimal filtering for the backward heat equation. SIAM J. Numer. Anal. 1996, 33, 162–170.

39. Mera, N.S.; Elliott, L.; Ingham, D.B.; Lesnic, D. An iterative boundary element method for solving the one-dimensional backward heat conduction problem. Int. J. Heat Mass Transf. 2001, 44, 1937–1946.

40. Hao, D. A mollification method for ill-posed problems. Numer. Math. 1994, 68, 469–506.

41. Liu, C.S. Group preserving scheme for backward heat conduction problems. Int. J. Heat Mass Transf. 2004, 47, 2567–2576.

42. Kirkup, S.M.; Wadsworth, M. Solution of inverse diffusion problems by operator-splitting methods. Appl. Math. Model. 2002, 26, 1003–1018.

43. Fu, C.L.; Xiong, X.T.; Qian, Z. Fourier regularization for a backward heat equation. J. Math. Anal. Appl. 2007, 331, 472–480.

44. Dou, F.F.; Fu, C.L.; Yang, F.L. Optimal error bound and Fourier regularization for identifying an unknown source in the heat equation. J. Comput. Appl. Math. 2009, 230, 728–737.

45. Zhao, Z.; Meng, Z. A modified Tikhonov regularization method for a backward heat equation. Inverse Probl. Sci. Eng. 2011, 19, 1175–1182.

46. Parzlivand, F.; Shahrezaee, A. Numerical solution of an inverse reaction-diffusion problem via collocation method based on radial basis functions. Appl. Math. Model. 2015, 39, 3733–3744.

47. Vasil’eva, A.; Butuzov, V.; Nefedov, N. Singularly perturbed problems with boundary and internal layers. Proc. Steklov Inst. Math. 2010, 268, 258–273.

48. Lukyanenko, D.; Shishlenin, M.; Volkov, V. Asymptotic analysis of solving an inverse boundary value problem for a nonlinear singularly perturbed time-periodic reaction–diffusion–advection equation. J. Inverse Ill-Posed Probl. 2019, 27, 745–758.

49. Lukyanenko, D.; Grigorev, V.; Volkov, V.; Shishlenin, M. Solving of the coefficient inverse problem for a nonlinear singularly perturbed two-dimensional reaction-diffusion equation with the location of moving front data. Comput. Math. Appl. 2019, 77, 1245–1254.

50. Lukyanenko, D.; Prigorniy, I.; Shishlenin, M. Some features of solving an inverse backward problem for a generalized Burgers’ equation. J. Inverse Ill-Posed Probl. 2020, 28, 641–649.

51. Hopf, E. The partial differential equation $u_t + u u_x = \mu u_{xx}$. Commun. Pure Appl. Math. 1950, 3, 201–230.

52. Alifanov, O.; Artuhin, E.; Rumyantsev, S. Extreme Methods for the Solution of Ill-Posed Problems; Nauka: Moscow, Russia, 1988.

53. Tikhonov, A.N.; Goncharsky, A.V.; Stepanov, V.V.; Yagola, A.G. Numerical Methods for the Solution of Ill-Posed Problems; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1995.

54. Hanke, M.; Neubauer, A.; Scherzer, O. A convergence analysis of the Landweber iteration for nonlinear ill-posed problems. Numer. Math. 1995, 72, 21–37.

55. Engl, H.W.; Hanke, M.; Neubauer, A. Regularization of Inverse Problems; Kluwer Academic Publ.: Dordrecht, The Netherlands, 1996.

56. Kabanikhin, S.I.; Scherzer, O.; Shishlenin, M.A. Iteration methods for solving a two dimensional inverse problem for a hyperbolic equation. J. Inverse Ill-Posed Probl. 2003, 11, 87–109.

57. Hairer, E.; Wanner, G. Solving Ordinary Differential Equations II. Stiff and Differential-Algebraic Problems; Springer: Berlin/Heidelberg, Germany, 1996.

58. Rosenbrock, H. Some general implicit processes for the numerical solution of differential equations. Comput. J. 1963, 5, 329–330.

59. Alshin, A.; Alshina, E.; Kalitkin, N.; Koryagina, A. Rosenbrock schemes with complex coefficients for stiff and differential algebraic systems. Comput. Math. Math. Phys. 2006, 46, 1320–1340.

60. Wen, X. High order numerical methods to a type of delta function integrals. J. Comput. Phys. 2007, 226, 1952–1967.

61. Raissi, M.; Perdikaris, P.; Karniadakis, G. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019, 378, 686–707.

62. Ruck, D.; Rogers, S.; Kabrisky, M. Feature selection using a multilayer perceptron. J. Neural Netw. Comput. 1990, 2, 40–48.

63. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

64. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

65. Nair, V.; Hinton, G. Rectified Linear Units Improve Restricted Boltzmann Machines; ICML: Lugano, Switzerland, 2010.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lukyanenko, D.; Yeleskina, T.; Prigorniy, I.; Isaev, T.; Borzunov, A.; Shishlenin, M. Inverse Problem of Recovering the Initial Condition for a Nonlinear Equation of the Reaction–Diffusion–Advection Type by Data Given on the Position of a Reaction Front with a Time Delay. Mathematics 2021, 9, 342. https://doi.org/10.3390/math9040342