1. Introduction

The respiratory rate (RR) can be useful for critically ill patients as a significant indicator to evaluate a patient’s prognosis and status, as it not only represents a clinical status change in a patient’s respiratory system function but also participates in the respiratory compensation mechanism for tissue hypoperfusion and systemic circulation dysfunction such as shock [

1,

2]. The importance of the RR can also be seen in the various scoring systems such as the Acute Physiology And Chronic Health Evaluation (APACHE) [

3], which assesses the prognosis of a patient’s disease in the intensive care unit (ICU); the Sequential Organ Failure Assessment (SOFA, qSOFA) [

4], which conventionally assesses the level of organ failure and infection; and the Modified Early Warning Score (MEWS) [

5], which is used for the continuous surveillance of the patient’s condition. However, the general RR measuring method that is currently conducted in clinical practice is a manual counting method measured by nursing staff, which does not fit for continuous all-time surveillance [

6]. Although various RR estimation methods using physiological signals (etCO2, ECG, Impedance Pneumography signal, and Oral–Nasal Pressure) have been developed to compensate for this shortcoming [

7,

8,

9], a requirement of additional equipment such as an impedance meter, ECG leads, the restriction of movement due to such equipment, and the difficulty of always-on measurement come as its limitations. Eventually, this limits the applicability of these methods as standard methods for continuous real-time RR surveillance in critically ill patients.

A photoplethysmogram (PPG) is a physiological signal that implies the information of blood volume changes in the microvascular bed of tissue detected using the pulse oximeter. It is normally acquired from a subject’s peripheral site such as a fingertip, with a light-emitting diode that illuminates a red or a near-infrared wavelength and detects its reflections through the photodetector. At this point, the most affecting factor of the signal is the blood volume, especially in the arteries, as the blood vessel constantly changes in response to cardiac contraction, breathing, and the autonomic nervous system [

10,

11]. Therefore, the morphological characteristics and time-dependent attributes of the PPG signal contain various and significant clinical information that indirectly indicates a subject’s heart rate, blood pressure, oxygen saturation, and respiratory rate [

11,

12].

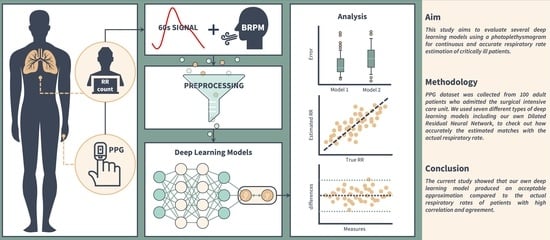

Deep learning (DL), an Artificial Intelligence algorithm that is widely utilized in image detection, time forecasting, natural language processing, etc., according to the architectural design, can be trained to extract various features effectively from the physiological signal (e.g., PPG) using a feature extractor [

13] and to estimate another signal (e.g., RR). Herein, we collected real-time PPG and RR data from patients admitted to the surgical intensive care unit (SICU) of a tertiary referral hospital in Korea, and they were used in several previously studied DL models for RR estimation. Moreover, we implemented a novel DL model and compared its performance with others to verify the significance of a PPG-derived DL model’s RR estimation.

3. Results

From June 2022 to July 2022, a total of 100 patients admitted to the SICU of our institution were subjected to PPG and RR data collection and outcome analysis, including 56 males and 44 females. The mean age was 67.5 years, and the mean SOFA score on the day of admission to the SICU was 2.5 (range 0–6). A respiratory history of COPD or asthma was observed in seven patients (7%), and the most common diagnosis at admission was malignancy (54 patients; 54%), followed by non-cancerous lesions including ulcer perforation, pan-peritonitis, and bowel obstruction (28 patients; 28%). There were no differences in the baseline characteristics and disease profiles between the genders. (

Table 1) As a result of the comparative analysis of the PPG data by gender, it was determined that the signal data of the two groups showed equal variance and consistency in the model training as the data did not show significant differences.

The data used in the study were first split into training data and testing data. The testing data were extracted in proportion to the three breathing groups, which were slow breathing (five subjects), normal breathing (five subjects), and rapid breathing (five subjects) for our own dataset, and one subject, three subjects, and three subjects for BIDMC, respectively. The reason why only one slow breathing subject was selected as the testing data in BIDMC was that there were only two slow breathing subjects in the entire BIDMC data, so we wanted to use the data of at least one subject for training. The training data were then split into a training dataset and validation dataset according to the 5-fold cross-validation method.

Table 3 summarizes the number of samples for each dataset.

Based on the above data, we ran the experiments described in the Experiments subsection and obtained the following results.

Figure 5 shows the RR of the BIDMC validation dataset subject (bidmc_17), which was estimated using the Dilated ResNet model.

3.1. Training Model Using Self-Collected Dataset

All seven models introduced above were trained on our dataset, and the performances of the trained models were evaluated using the validation dataset.

Table 4 summarizes these results. Next, an unseen dataset of each respiratory group was input to each model, and the results are shown in

Table 5.

The results of evaluating the performance of the model trained with the self-collected dataset and the validation dataset are shown in

Table 4. In

Table 5, which shows the evaluation of RespNet’s unseen data, we can see that RespNet has the best performance on the same dataset as the trained data (in bold). We can also see that overall, most of the models have the best estimation performance for the normal breathing group within the dataset (in bold). The difference in the MAE between our dataset and CapnoBase within the same model is a maximum of 10.5005 and a minimum of 2.5712.

3.2. Training Model Using BIDMC Dataset

The performances of the seven models trained on BIDMC were evaluated on the validation dataset and are shown in

Table 4. The models were also trained on the unseen dataset of each breathing group, and the results are shown in

Table 6.

The performance evaluation on the BIDMC validation dataset in

Table 4 shows that Bian’s ResNet, Ravichandran’s RespNet, and our proposed Dilated ResNet model perform well compared to the LSTM-based models. The MAE of these three models is around 1.27, while the MAE of the LSTM-based models is around 1.68. This behavior is also evident in

Table 6, which shows the respiration rate estimation results of the models using the unseen dataset from various datasets. In this table, we again see that the three models perform best in alternating groups of datasets (in bold). We also see that when we drill down into each dataset by group, the best performing group is generally the normal breathing group. This is true across all seven models.

We wanted to check the results of the three most prominent models using the BIDMC validation dataset and the boxplot in

Figure 6. The y-axis of the figure shows the absolute error (rpm), and the lowest error among the three models is obtained by RespNet, which is close to zero. The model with the lowest median is BianResNet, with a value of 0.5843. On the other hand, the model with the highest median is Dilated ResNet, with a value of 0.6503. Among the three models, Dilated ResNet has the fewest outliers for respiration rate estimation, with 454 outliers (13.48%) out of 3368 total data samples. Conversely, the model with the most outliers is BianResNet, with 531 outliers (15.77%). Furthermore, this paper calculated the Pearson correlation coefficient (PCC) between the estimated RR and the actual RR of each of the three models using the BIDMC validation dataset. As a result, BianResNet does not show a correlation between the estimated RR and actual RR at −0.0519 (

p < 0.01). For RespNet, there is a weak negative correlation between the estimated and actual values at −0.3211 (

p < 0.01). On the other hand, for Dilated ResNet, there is a weak positive correlation between the estimated and actual values at 0.5069 (

p < 0.01).

3.3. Testing Model’s Robustness in Different SNRs

To test the robustness of the three models in the above experiments, we input the signals of different SNRs to compare and evaluate the results (

Table 7).

According to the result, when the SNR is 10 db, BianResNet has the lowest error among the three models with an MAE of 2.2513, while our proposed Dilated ResNet model has the highest error with an MAE of 4.1142. However, in all other cases, we can see that our proposed model performs the best as it obtained values of 0.6303, 0.6417, and 0.8123 (in bold). However, the overall RR estimation error consistently increased as the SNR decreased in our experiment.

4. Discussion

In our study, we can see that RespNet and CNNLSTM perform better than the other models in

Table 5, and BianResNet, RespNet, and Dilated ResNet perform better in

Table 6. We believe this is because these models extract the spatial–spectral feature of the signal, which is often used in various PPG analysis studies [

19,

47]. Models that focus on temporal feature extraction, such as BiLSTM, also occasionally perform well using the temporal features of the signal, but more consistently perform well when using convolution and taking the shapes of previous signals and adding them together, such as ResNet.

In

Table 5 and

Table 6, we can confirm that the normal breathing group has the best results for all models in most cases. This is because of the group’s imbalance in the dataset that we used to train the model. This also can be seen in

Table 3, which shows that both datasets have most of the data in the normal breathing group and have the least data in the slow breathing group. In the case of BIDMC, about 85% of the dataset consisted of data from the normal breathing group, which is a larger number than the other groups. The slow breathing group data, on the other hand, is only 3% of the dataset. Understanding this explains why the normal breathing group performs well in most cases. The reason for the better performance of the model trained on the BIDMC dataset compared to our dataset in

Table 4 can be understood by expanding on the following: there are much more normal breathing data to train on, and the validation data are dominated by data from the same breathing class.

Using the BIDMC validation dataset, we compared each model’s estimates of RRs to the actual RRs to see if they were correlated. If the models overestimated the normal RR, i.e., if they had less error in estimating the actual RR, their estimates were positively correlated with the actual RR. However, BianResNet and RespNet produced either a negative correlation or no correlation of −0.0519 (p < 0.01) and −0.3211 (p < 0.01), respectively. This is likely due to the imbalance in the respiratory data, as discussed above. A negative correlation means that the straight-line output based on the actual and estimated values descends downward, and since BIDMC is almost dominated by normal breathing data, a negative slope may occur when the model estimates data belonging to the slow breathing group with higher estimated values than the actual values. On the other hand, in the case of Dilated ResNet, we confirmed a weak positive correlation of 0.5069 (p < 0.01). This means that the RR estimates, using the current signal data, are not highly correlated with the actual values, which points to the limitations of the current preprocessing.

To further consider that motion artifacts from patients in clinical practice can affect PPG-based RR estimation, we also confirmed the robustness of the three best-performing models to input signals of different SNRs, as shown in

Table 7. The results show that Dilated ResNet has the highest estimation error at 10 db and the lowest errors are seen in the rest of the models. However, while the other models illustrate a gradual increase in error as the SNR decreases (i.e., as the shape of the input signal becomes more distorted), our model shows a sharp increase in error. This suggests that our model is more sensitive to noise that affects morphological features, as mentioned above. To improve this in future work, we would like to introduce regularization techniques to prevent our model from overfitting with the existing features. We would also like to use filters that are flexible to changes in the signal, such as adaptive filters [

48], in preprocessing to increase the robustness of the overall model to various noises.

To estimate the RR, we filtered out only the 0.1–0.4 frequency band signals from the PPG that are likely to be associated with it. Also, it was processed to obtain a low-frequency signal from the PPG that contains information about movement due to respiration. At the same time, it was an attempt to exclude information such as the heartbeat. Many studies suggested various frequency bands [

16,

20,

22,

23]. In addition, in this paper, we resampled the input signal to reduce the computation of the model, in the same way as Bian [

25]. Although this preprocessing allowed us to better focus on the data that we wanted to study, it caused a loss of information and the deformation of a signal in the original data. Such limitation has the potential of degrading the model’s performance and weakening correlations. In our future research, we should be aware of these points to improve the accuracy of RR estimation and more thoroughly investigate the appropriate frequency bands to remove unnecessary noise and capture more information for the estimation. Alternatively, it would be interesting to see and discuss the results of inputting such data without any information-losing preprocessing, taking advantage of the DL model’s ability to analyze the signal. This may be one way to improve the accuracy of RR estimation by applying preprocessing layers of noise filtering and signal detrending to PPG to overcome the existing manual de-modulation method, as an advantage of deep learning models is that they can convolve various filters and signals into PPG, which is difficult for humans to process. However, improving the reliability of these results remains a challenge. Although there are various attempts at Explainable AI [

49,

50], this is also an area for future research to improve the reliability of respiration rate estimation using PPG. It is possible to provide some evidence for the reliability of the result if the convolution filters, frequency filters, or detrending functions initialized in the DL process are adjusted to be more specific to RR estimation and placed in layers. The variety of factors that are present in a patient is another limitation that weakens the model’s performance. In our study, such components—diseases (e.g., hypertension, atrial fibrillation, etc.), interventions (e.g., vasopressor, ventilation, etc.), and other external influences—that modulate the PPG signal were not considered. Thus, in future work, we would like to study these characteristics, categorize patients, and confirm the model’s RR estimation performance for each factor.

Checking the box plot in

Figure 6, we confirmed that Dilated ResNet has the highest standard deviation and median in errors among the three models. This suggests that our proposed model may be affected by various hyperparameters, as shown in

Table 2, by applying a convolutional layer. Thus, future research will not only reduce the error of the proposed model but also clarify and study the causes of such deviations. To improve our model, it is necessary to generalize the model by applying regularization techniques such as L2 regularization, Dropout [

51], replacing the RespBlock inner layer of the Dilated ResNet model to reduce RR estimation bias, and collecting additional data to provide the model with numerous signal patterns. A signal quality index (SQI) algorithm should also be added to reduce RR estimation error. The SQI algorithm is a technique that is intended to assess the signal and exclude signals with noise, which affect the training of the DL model in the preprocessing stage. Various algorithms, such as the skewness-based method, F1-score-based method, entropy-based method, machine learning-based method, etc., have been proposed to assess the quality of the PPG [

16,

52,

53,

54,

55]. If we improve the quality of the PPG signal using an appropriate SQI algorithm that fits the PPG-derived DL model that we implemented for RR estimation in the follow-up study, we expect to estimate a more precise RR.

In

Table 5 and

Table 6, the difference in RR estimation resulting from the breathing rate group in the testing dataset is an obvious limitation that needs to be improved. To overcome this, in future research, we will fully utilize public datasets, but at the same time, try to configure our dataset to evenly test patients with different breathing rates and develop it into a public dataset. To carry this out, we are planning to carefully organize the database schema and data collection environment subject to a large data collection group and to collect the data over a long period, including the subject’s demographic information (e.g., age, gender, weight, etc.), medical record (e.g., whether they underwent surgery, infusion drug time, diagnosis, etc.), and various physiological signals (e.g., ECG, etCO

2, etc.). Furthermore, by improving the methodology of this study, which collected data using only fingertips, we will collect PPG data from various body sites such as the earlobe or foot of a patient, analyze the measurements to compare the accuracy of the RR estimation between sites, and analyze the validity and association of PPG-derived RR estimation AI models according to the patient’s underlying disease and functional level. We expect to propose detailed guidance that is capable of sophisticated application according to the patient’s clinical status and measurement environment for the PPG-derived RR estimation AI model.