Discriminative-Region Multi-Label Classification of Ultra-Widefield Fundus Images

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

3.1. Dataset and Disease Description

- Diabetic retinopathy (DR) is a complication of diabetes that damages the blood vessels of the retina. It is marked by microaneurysms, hemorrhages, hard exudates, cotton wool spots, neovascularization, and vitreous hemorrhage. These lesions typically appear in the periphery of the retina.

- Retinal break (RB) is a retinal disorder in which the retina detaches or separates from the underlying tissue. It is characterized by a retinal tear or hole. These lesions can occur anywhere on the ultra-widefield fundus image (UFI).

- Retinal vein occlusion (RVO) is a blockage of the retinal veins that causes blood and fluid to accumulate in the retina. It is marked by retinal hemorrhages, cotton wool spots, macular edema, and neovascularization. These lesions generally appear in the central and mid-peripheral retina on UFI.

- Epiretinal membrane (ERM) is a condition in which a thin layer of tissue grows on the surface of the retina, causing visual distortion. It is characterized by a wrinkled or folded retina, cystic spaces, and distortion of the macula. These lesions typically appear in the macular region on UFI.

- Age-related macular degeneration (AMD) is a condition in which the macula, which is responsible for central vision, deteriorates over time. It is marked by drusen, pigmentary changes, geographic atrophy, and neovascularization. These lesions generally appear in the macular region on UFI.

- Glaucoma suspect (GS) refers to eyes that are at risk of developing glaucoma, a group of eye conditions that damage the optic nerve and can lead to blindness. UFI findings that may indicate GS include optic disc changes and retinal nerve fiber layer defects. These findings occur in the area surrounding the optic disc.
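
The disease-to-region relationships listed above can be sketched as a small lookup plus a multi-hot label encoder. This is only an illustrative sketch: the region names (`entire_ufi`, `macula`, `optic_disc`), the label order, and the helper names are assumptions introduced here, and assigning DR, RB, and RVO to the whole image is an interpretation of the descriptions rather than a detail stated in this section.

```python
from typing import Dict, List

# Fixed label order used for the multi-hot vector (an assumed convention).
DISEASES: List[str] = ["DR", "RB", "RVO", "ERM", "AMD", "GS"]

# Region in which each disease's findings are described as appearing.
# Region names are hypothetical labels, not identifiers from the paper.
DISEASE_REGION: Dict[str, str] = {
    "DR": "entire_ufi",    # periphery of the retina
    "RB": "entire_ufi",    # anywhere on the UFI
    "RVO": "entire_ufi",   # central and mid-peripheral retina
    "ERM": "macula",       # macular region
    "AMD": "macula",       # macular region
    "GS": "optic_disc",    # area surrounding the optic disc
}


def encode_labels(diagnoses: List[str]) -> List[int]:
    """Turn a list of disease abbreviations into a multi-hot vector."""
    unknown = set(diagnoses) - set(DISEASES)
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    return [1 if disease in diagnoses else 0 for disease in DISEASES]


def group_by_region() -> Dict[str, List[str]]:
    """Group diseases by the region that carries their findings."""
    groups: Dict[str, List[str]] = {}
    for disease in DISEASES:
        groups.setdefault(DISEASE_REGION[disease], []).append(disease)
    return groups


if __name__ == "__main__":
    print(encode_labels(["DR", "AMD"]))
    print(group_by_region())
```

An image with no disease label maps to the all-zero vector, which is one common way to represent a normal case in a multi-label setting.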

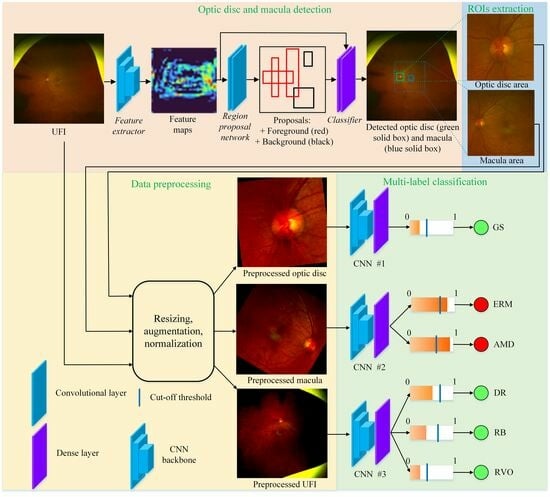

3.2. Proposed Method

3.2.1. Region of Interest Extraction

3.2.2. Multi-Label Classification

4. Performance Evaluation

4.1. Implementation Details and Evaluation Metrics

4.2. Experiment Results

4.2.1. Comparison with Existing Works

4.2.2. Ablation Study

4.2.3. Discussion

5. Conclusions and Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bhambra, N.; Antaki, F.; Malt, F.E.; Xu, A.; Duval, R. Deep learning for ultra-widefield imaging: A scoping review. Graefe’s Arch. Clin. Exp. Ophthalmol. 2022, 260, 3737–3778.

- Nagiel, A.; Lalane, R.A.; Sadda, S.R.; Schwartz, S.D. Ultra-widefield fundus imaging: A review of clinical applications and future trends. Retina 2016, 36, 660–678.

- Kumar, V.; Surve, A.; Kumawat, D.; Takkar, B.; Azad, S.; Chawla, R.; Shroff, D.; Arora, A.; Singh, R.; Venkatesh, P. Ultra-wide field retinal imaging: A wider clinical perspective. Indian J. Ophthalmol. 2021, 69, 824.

- Nagasawa, T.; Tabuchi, H.; Masumoto, H.; Morita, S.; Niki, M.; Ohara, Z.; Yoshizumi, Y.; Mitamura, Y. Accuracy of diabetic retinopathy staging with a deep convolutional neural network using ultra-wide-field fundus ophthalmoscopy and optical coherence tomography angiography. J. Ophthalmol. 2021, 2021, 6651175. Available online: https://www.hindawi.com/journals/joph/2021/6651175/ (accessed on 1 May 2023).

- Hirano, T.; Imai, A.; Kasamatsu, H.; Kakihara, S.; Toriyama, Y.; Murata, T. Assessment of diabetic retinopathy using two ultra-wide-field fundus imaging systems, the Clarus® and Optos™ systems. BMC Ophthalmol. 2018, 18, 1–7.

- Khan, M.B.; Ahmad, M.; Yaakob, S.B.; Shahrior, R.; Rashid, M.A.; Higa, H. Automated Diagnosis of Diabetic Retinopathy Using Deep Learning: On the Search of Segmented Retinal Blood Vessel Images for Better Performance. Bioengineering 2023, 10, 413.

- Domalpally, A.; Barrett, N.; Reimers, J.; Blodi, B. Comparison of Ultra-Widefield Imaging and Standard Imaging in Assessment of Early Treatment Diabetic Retinopathy Severity Scale. Ophthalmol. Sci. 2021, 1, 100029.

- Li, Z.; Guo, C.; Lin, D.; Nie, D.; Zhu, Y.; Chen, C.; Zhao, L.; Wang, J.; Zhang, X.; Dongye, M.; et al. Deep learning for automated glaucomatous optic neuropathy detection from ultra-widefield fundus images. Br. J. Ophthalmol. 2021, 105, 1548–1554.

- Zhou, W.D.; Dong, L.; Zhang, K.; Wang, Q.; Shao, L.; Yang, Q.; Liu, Y.M.; Fang, L.J.; Shi, X.H.; Zhang, C.; et al. Deep Learning for Automatic Detection of Recurrent Retinal Detachment after Surgery Using Ultra-Widefield Fundus Images: A Single-Center Study. Adv. Intell. Syst. 2022, 4, 2200067.

- Feng, B.; Su, W.; Chen, Q.; Gan, R.; Wang, M.; Wang, J.; Zhang, J.; Yan, X. Quantitative Analysis of Retinal Vasculature in Rhegmatogenous Retinal Detachment Based on Ultra-Widefield Fundus Imaging. Front. Med. 2022, 8, 2913.

- Lake, S.R.; Bottema, M.J.; Lange, T.; Williams, K.A.; Reynolds, K.J. Swept-Source OCT Mid-Peripheral Retinal Irregularity in Retinal Detachment and Posterior Vitreous Detachment Eyes. Bioengineering 2023, 10, 377.

- Zhang, W.; Zhao, X.; Chen, Y.; Zhong, J.; Yi, Z. Deepuwf: An automated ultra-wide-field fundus screening system via deep learning. IEEE J. Biomed. Health Inform. 2020, 25, 2988–2996.

- Antaki, F.; Coussa, R.G.; Kahwati, G.; Hammamji, K.; Sebag, M.; Duval, R. Accuracy of automated machine learning in classifying retinal pathologies from ultra-widefield pseudocolour fundus images. Br. J. Ophthalmol. 2023, 107, 90–95.

- Engelmann, J.; McTrusty, A.D.; MacCormick, I.J.; Pead, E.; Storkey, A.; Bernabeu, M.O. Detecting multiple retinal diseases in ultra-widefield fundus imaging and data-driven identification of informative regions with deep learning. Nat. Mach. Intell. 2022, 4, 1143–1154.

- Sun, G.; Wang, X.; Xu, L.; Li, C.; Wang, W.; Yi, Z.; Luo, H.; Su, Y.; Zheng, J.; Li, Z.; et al. Deep Learning for the Detection of Multiple Fundus Diseases Using Ultra-widefield Images. Ophthalmol. Ther. 2023, 12, 895–907.

- Liu, Y.; Shen, J.; Yang, L.; Bian, G.; Yu, H. ResDO-UNet: A deep residual network for accurate retinal vessel segmentation from fundus images. Biomed. Signal Process. Control 2023, 79, 104087.

- Sevgi, D.D.; Srivastava, S.K.; Wykoff, C.; Scott, A.W.; Hach, J.; O’Connell, M.; Whitney, J.; Vasanji, A.; Reese, J.L.; Ehlers, J.P. Deep learning-enabled ultra-widefield retinal vessel segmentation with an automated quality-optimized angiographic phase selection tool. Eye 2022, 36, 1783–1788.

- Dinç, B.; Kaya, Y. A novel hybrid optic disc detection and fovea localization method integrating region-based convnet and mathematical approach. Wirel. Pers. Commun. 2023, 129, 2727–2748.

- Yang, Z.; Li, X.; He, X.; Ding, D.; Wang, Y.; Dai, F.; Jin, X. Joint localization of optic disc and fovea in ultra-widefield fundus images. In Proceedings of the Machine Learning in Medical Imaging: 10th International Workshop, MLMI 2019, Held in Conjunction with MICCAI 2019, Shenzhen, China, 13 October 2019; Proceedings 10. Springer: Cham, Switzerland, 2019; pp. 453–460.

- Benvenuto, G.A.; Colnago, M.; Dias, M.A.; Negri, R.G.; Silva, E.A.; Casaca, W. A Fully Unsupervised Deep Learning Framework for Non-Rigid Fundus Image Registration. Bioengineering 2022, 9, 369.

- Saha, S.K.; Xiao, D.; Bhuiyan, A.; Wong, T.Y.; Kanagasingam, Y. Color fundus image registration techniques and applications for automated analysis of diabetic retinopathy progression: A review. Biomed. Signal Process. Control 2019, 47, 288–302.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer: Cham, Switzerland, 2015; pp. 234–241.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

- Punn, N.S.; Agarwal, S. Modality specific U-Net variants for biomedical image segmentation: A survey. Artif. Intell. Rev. 2022, 55, 5845–5889.

- De Vos, B.D.; Berendsen, F.F.; Viergever, M.A.; Sokooti, H.; Staring, M.; Išgum, I. A deep learning framework for unsupervised affine and deformable image registration. Med. Image Anal. 2019, 52, 128–143.

- Kim, S.J.; Cho, K.J.; Oh, S. Development of machine learning models for diagnosis of glaucoma. PLoS ONE 2017, 12, e0177726.

- Long, S.; Chen, J.; Hu, A.; Liu, H.; Chen, Z.; Zheng, D. Microaneurysms detection in color fundus images using machine learning based on directional local contrast. Biomed. Eng. Online 2020, 19, 1–23.

- García-Floriano, A.; Ferreira-Santiago, Á.; Camacho-Nieto, O.; Yáñez-Márquez, C. A machine learning approach to medical image classification: Detecting age-related macular degeneration in fundus images. Comput. Electr. Eng. 2019, 75, 218–229.

- Abitbol, E.; Miere, A.; Excoffier, J.B.; Mehanna, C.J.; Amoroso, F.; Kerr, S.; Ortala, M.; Souied, E.H. Deep learning-based classification of retinal vascular diseases using ultra-widefield colour fundus photographs. BMJ Open Ophthalmol. 2022, 7, e000924.

- Lee, J.; Lee, J.; Cho, S.; Song, J.; Lee, M.; Kim, S.H.; Lee, J.Y.; Shin, D.H.; Kim, J.M.; Bae, J.H.; et al. Development of decision support software for deep learning-based automated retinal disease screening using relatively limited fundus photograph data. Electronics 2021, 10, 163.

- Wang, J.; Yang, L.; Huo, Z.; He, W.; Luo, J. Multi-label classification of fundus images with efficientnet. IEEE Access 2020, 8, 212499–212508.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- PyTorch. Available online: https://pytorch.org/ (accessed on 25 May 2023).

| Method | DR | RB | RVO | ERM | AMD | GS |
|---|---|---|---|---|---|---|
| Lee et al. [30] | 92.52 ± 0.80 | 97.63 ± 0.59 | 97.12 ± 0.61 | 90.58 ± 0.83 | 93.30 ± 0.54 | 88.93 ± 0.38 |
| Wang et al. [31] | 95.21 ± 0.23 | 98.55 ± 0.15 | 98.97 ± 0.15 | 91.16 ± 0.81 | 93.23 ± 0.20 | 89.14 ± 0.80 |
| Zhang et al. [12] | 94.66 ± 0.51 | 95.94 ± 0.96 | 98.46 ± 0.68 | 89.38 ± 0.38 | 91.14 ± 0.75 | 89.78 ± 1.12 |
| Proposed | 97.34 ± 0.25 | 99.14 ± 0.12 | 99.08 ± 0.13 | 96.21 ± 0.28 | 95.36 ± 0.10 | 95.07 ± 0.52 |

| Comparison | DR | RB | RVO | ERM | AMD | GS |
|---|---|---|---|---|---|---|
| vs. Lee et al. [30] | <0.05 | <0.05 | <0.05 | <0.05 | <0.05 | <0.05 |
| vs. Wang et al. [31] | <0.05 | <0.05 | 0.31 | <0.05 | <0.05 | <0.05 |
| vs. Zhang et al. [12] | <0.05 | <0.05 | 0.15 | <0.05 | <0.05 | <0.05 |

| Metric | Method | DR | RB | RVO | ERM | AMD | GS | Normal |
|---|---|---|---|---|---|---|---|---|
| Accuracy | Lee et al. [30] | 89.15 ± 1.63 | 93.26 ± 1.64 | 92.79 ± 1.97 | 87.31 ± 3.47 | 86.48 ± 3.64 | 87.38 ± 2.71 | 84.64 ± 0.51 |
| Accuracy | Wang et al. [31] | 91.29 ± 1.12 | 95.76 ± 0.35 | 97.26 ± 1.73 | 90.55 ± 1.17 | 86.74 ± 2.58 | 77.50 ± 2.98 | 85.57 ± 1.82 |
| Accuracy | Zhang et al. [12] | 89.82 ± 1.68 | 92.32 ± 2.58 | 95.63 ± 0.87 | 81.20 ± 5.21 | 85.08 ± 3.44 | 85.64 ± 1.11 | 84.47 ± 1.47 |
| Accuracy | Proposed | 93.02 ± 1.58 | 95.79 ± 2.17 | 95.76 ± 2.36 | 92.09 ± 1.97 | 87.58 ± 1.40 | 88.11 ± 1.90 | 87.15 ± 1.04 |
| Sensitivity | Lee et al. [30] | 79.85 ± 3.50 | 94.42 ± 3.09 | 95.24 ± 0.00 | 81.70 ± 4.08 | 87.77 ± 4.10 | 81.88 ± 1.23 | 75.52 ± 1.48 |
| Sensitivity | Wang et al. [31] | 86.67 ± 1.55 | 95.35 ± 0.00 | 95.24 ± 2.95 | 79.15 ± 3.59 | 87.20 ± 3.41 | 91.88 ± 3.68 | 73.06 ± 4.52 |
| Sensitivity | Zhang et al. [12] | 86.67 ± 2.97 | 90.70 ± 0.00 | 95.24 ± 2.95 | 82.98 ± 6.98 | 82.72 ± 4.23 | 86.25 ± 1.50 | 72.99 ± 3.47 |
| Sensitivity | Proposed | 91.63 ± 2.06 | 97.21 ± 2.66 | 96.19 ± 3.49 | 91.49 ± 3.73 | 90.87 ± 1.66 | 92.50 ± 3.13 | 76.27 ± 2.30 |
| Specificity | Lee et al. [30] | 91.70 ± 2.52 | 93.16 ± 1.99 | 92.70 ± 2.04 | 87.79 ± 4.09 | 86.21 ± 5.23 | 87.69 ± 2.92 | 92.03 ± 0.98 |
| Specificity | Wang et al. [31] | 92.55 ± 1.81 | 95.79 ± 0.38 | 97.34 ± 1.89 | 91.52 ± 1.54 | 86.77 ± 3.83 | 76.69 ± 3.34 | 95.71 ± 1.07 |
| Specificity | Zhang et al. [12] | 90.68 ± 2.92 | 92.45 ± 2.78 | 95.64 ± 0.95 | 81.05 ± 6.25 | 85.56 ± 4.87 | 85.61 ± 1.24 | 93.78 ± 0.76 |
| Specificity | Proposed | 93.40 ± 2.37 | 95.68 ± 2.47 | 95.74 ± 2.56 | 92.14 ± 2.33 | 86.90 ± 2.03 | 87.87 ± 2.13 | 95.95 ± 0.83 |

| Method | Micro-Average Accuracy | Micro-Average Sensitivity | Micro-Average Specificity |
|---|---|---|---|
| Lee et al. [30] | 88.83 ± 0.87 | 80.90 ± 0.68 | 90.27 ± 0.95 |
| Wang et al. [31] | 89.24 ± 0.85 | 81.55 ± 1.30 | 90.63 ± 0.86 |
| Zhang et al. [12] | 87.85 ± 1.05 | 79.41 ± 1.96 | 89.37 ± 1.26 |
| Proposed | 91.24 ± 0.74 | 85.66 ± 0.83 | 92.15 ± 0.61 |
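
For readers unfamiliar with micro-averaging, the sketch below shows one common way such figures are obtained in a multi-label setting: true/false positives and negatives are pooled over all classes and samples before the ratios are taken. The function name and the toy data are assumptions made for illustration, and this section does not confirm that the table values were computed in exactly this way.

```python
from typing import Sequence, Tuple


def micro_average(
    y_true: Sequence[Sequence[int]],
    y_pred: Sequence[Sequence[int]],
) -> Tuple[float, float, float]:
    """Return micro-averaged (accuracy, sensitivity, specificity).

    Each row is one image; each column is one binary class label.
    Counts are pooled over every (image, class) pair.
    """
    tp = fp = tn = fn = 0
    for true_row, pred_row in zip(y_true, y_pred):
        for t, p in zip(true_row, pred_row):
            if t == 1 and p == 1:
                tp += 1
            elif t == 0 and p == 1:
                fp += 1
            elif t == 0 and p == 0:
                tn += 1
            else:
                fn += 1
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else float("nan")
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return accuracy, sensitivity, specificity


if __name__ == "__main__":
    # Toy example with 4 images and 3 classes (made-up data).
    truth = [[1, 0, 0], [0, 1, 0], [1, 1, 0], [0, 0, 1]]
    preds = [[1, 0, 0], [0, 0, 0], [1, 1, 1], [0, 0, 1]]
    acc, sens, spec = micro_average(truth, preds)
    print(f"accuracy={acc:.3f} sensitivity={sens:.3f} specificity={spec:.3f}")
```

Because counts are pooled, classes with more positive samples weigh more heavily in micro-averaged sensitivity than rare classes do, which is the main difference from a macro average over per-class values.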

| CNN Backbone | DR | RB | RVO | Average (DR, RB, RVO) | ERM | AMD | Average (ERM, AMD) | GS |
|---|---|---|---|---|---|---|---|---|
| MobileNetV3 | 95.90 ± 0.36 | 98.54 ± 0.20 | 99.43 ± 0.18 | 97.96 | 95.26 ± 0.41 | 94.14 ± 0.57 | 94.70 | 90.58 ± 0.63 |
| EfficientNetB3 | 97.34 ± 0.25 | 99.14 ± 0.12 | 99.08 ± 0.13 | 98.52 | 96.79 ± 0.44 | 95.21 ± 0.29 | 96.00 | 93.03 ± 0.64 |
| Xception | 96.77 ± 0.21 | 98.86 ± 0.50 | 99.42 ± 0.29 | 98.35 | 96.10 ± 0.49 | 95.52 ± 0.06 | 95.81 | 95.07 ± 0.52 |
| Resnet50 | 94.68 ± 0.95 | 98.38 ± 0.58 | 98.80 ± 0.49 | 97.29 | 95.75 ± 0.50 | 95.65 ± 0.57 | 95.70 | 90.03 ± 0.26 |
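
The two "Average" columns in the backbone table are the arithmetic means of the DR/RB/RVO scores and of the ERM/AMD scores, respectively. The short sketch below recomputes them from the mean values in the table and picks the highest-scoring backbone per disease group. The group names (`whole_image`, `macula`, `optic_disc`) and helper names are hypothetical labels introduced for this illustration only.

```python
from statistics import mean
from typing import Dict, List

# Mean scores copied from the backbone table (standard deviations omitted).
SCORES: Dict[str, Dict[str, float]] = {
    "MobileNetV3":    {"DR": 95.90, "RB": 98.54, "RVO": 99.43, "ERM": 95.26, "AMD": 94.14, "GS": 90.58},
    "EfficientNetB3": {"DR": 97.34, "RB": 99.14, "RVO": 99.08, "ERM": 96.79, "AMD": 95.21, "GS": 93.03},
    "Xception":       {"DR": 96.77, "RB": 98.86, "RVO": 99.42, "ERM": 96.10, "AMD": 95.52, "GS": 95.07},
    "Resnet50":       {"DR": 94.68, "RB": 98.38, "RVO": 98.80, "ERM": 95.75, "AMD": 95.65, "GS": 90.03},
}

# Disease groups matching the table's column grouping (names are assumed).
GROUPS: Dict[str, List[str]] = {
    "whole_image": ["DR", "RB", "RVO"],
    "macula": ["ERM", "AMD"],
    "optic_disc": ["GS"],
}


def group_average(backbone: str, group: str) -> float:
    """Arithmetic mean of one backbone's scores over one disease group."""
    return mean(SCORES[backbone][disease] for disease in GROUPS[group])


def best_backbone(group: str) -> str:
    """Backbone with the highest group average."""
    return max(SCORES, key=lambda name: group_average(name, group))


if __name__ == "__main__":
    for group in GROUPS:
        name = best_backbone(group)
        print(group, name, round(group_average(name, group), 2))
```

On these numbers, EfficientNetB3 has the highest average for both the DR/RB/RVO and ERM/AMD groups, while Xception scores highest for GS.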

(a) Macula area

| Edge Length of Macula Area | ERM | AMD | Average |
|---|---|---|---|
| 2 d | 96.40 ± 0.31 | 94.52 ± 0.26 | 95.46 |
| 3 d | 96.09 ± 0.50 | 95.15 ± 0.27 | 95.62 |
| 4 d | 96.09 ± 0.44 | 95.40 ± 0.36 | 95.75 |
| 5 d | 96.17 ± 0.30 | 95.28 ± 0.35 | 95.73 |
| 6 d | 96.79 ± 0.44 | 95.21 ± 0.29 | 96.00 |
| Entire UFI | 93.96 ± 0.68 | 94.54 ± 0.30 | 94.25 |

(b) Optic disc area

| Edge Length of Optic Disc Area | GS |
|---|---|
| 2.00 d | 92.55 ± 0.59 |
| 2.50 d | 94.32 ± 0.54 |
| 3.00 d | 95.07 ± 0.52 |
| 3.50 d | 94.89 ± 0.61 |
| 4.00 d | 94.81 ± 0.78 |
| Entire UFI | 88.86 ± 0.49 |
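
The edge lengths in the tables above are expressed as multiples of a unit length d. As a minimal sketch of how a square region with edge length k·d could be cut around a landmark, the function below computes a clipped crop box. This is an assumption-laden illustration: the section does not define d (it is treated here as a reference length in pixels), and the function name, argument names, and example coordinates are hypothetical.

```python
from typing import Tuple


def square_roi(
    center_xy: Tuple[float, float],
    d: float,
    k: float,
    image_size: Tuple[int, int],
) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of a square crop box.

    The square has edge length k * d, is centered on center_xy, and is
    clipped so that it never extends beyond the image borders.
    image_size is (width, height) in pixels.
    """
    if d <= 0 or k <= 0:
        raise ValueError("d and k must be positive")
    cx, cy = center_xy
    width, height = image_size
    half = k * d / 2.0
    left = max(0, int(round(cx - half)))
    top = max(0, int(round(cy - half)))
    right = min(width, int(round(cx + half)))
    bottom = min(height, int(round(cy + half)))
    return left, top, right, bottom


if __name__ == "__main__":
    # Hypothetical landmark position and unit length, for illustration only.
    print(square_roi((1000, 800), d=100, k=3.0, image_size=(3900, 3072)))
    # A box near the corner is clipped to the image bounds.
    print(square_roi((100, 100), d=100, k=6.0, image_size=(3900, 3072)))
```

The returned tuple follows the (left, top, right, bottom) convention used by many imaging libraries, so it can be passed to a crop routine directly. Clipping means boxes near the border may be smaller than k·d, and a caller could pad instead if a fixed input size is required.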

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pham, V.-N.; Le, D.-T.; Bum, J.; Kim, S.H.; Song, S.J.; Choo, H. Discriminative-Region Multi-Label Classification of Ultra-Widefield Fundus Images. Bioengineering 2023, 10, 1048. https://doi.org/10.3390/bioengineering10091048
