Eyes in the Sky: Drones Applications in the Built Environment under Climate Change Challenges

Abstract

1. Introduction

1.1. Motivation and Purpose

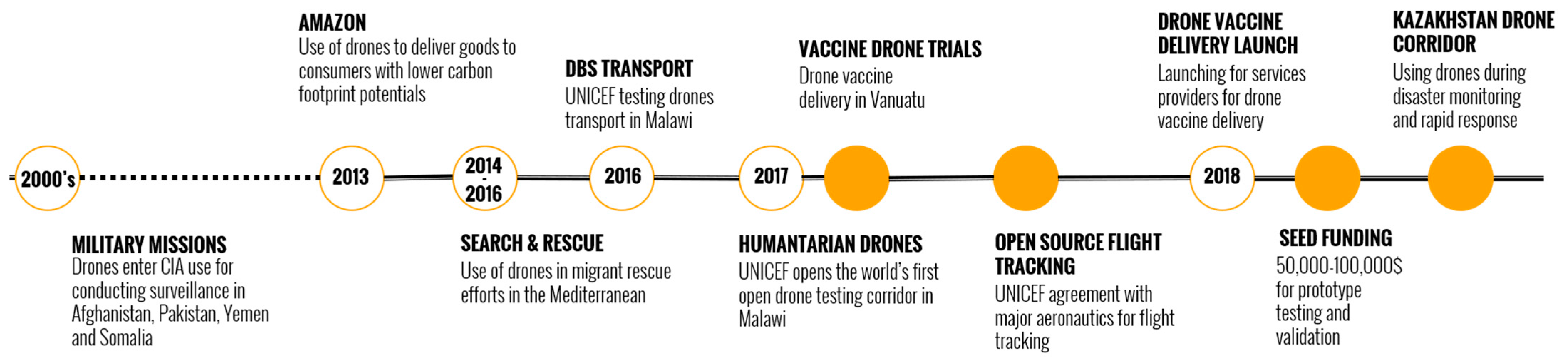

1.2. UAVs: Roots and Advancements

1.2.1. Military Influence and Technological Advancements in UAVs

1.2.2. UAVs and Civilian Applications

1.2.3. Advanced Technologies and UAV Advancements

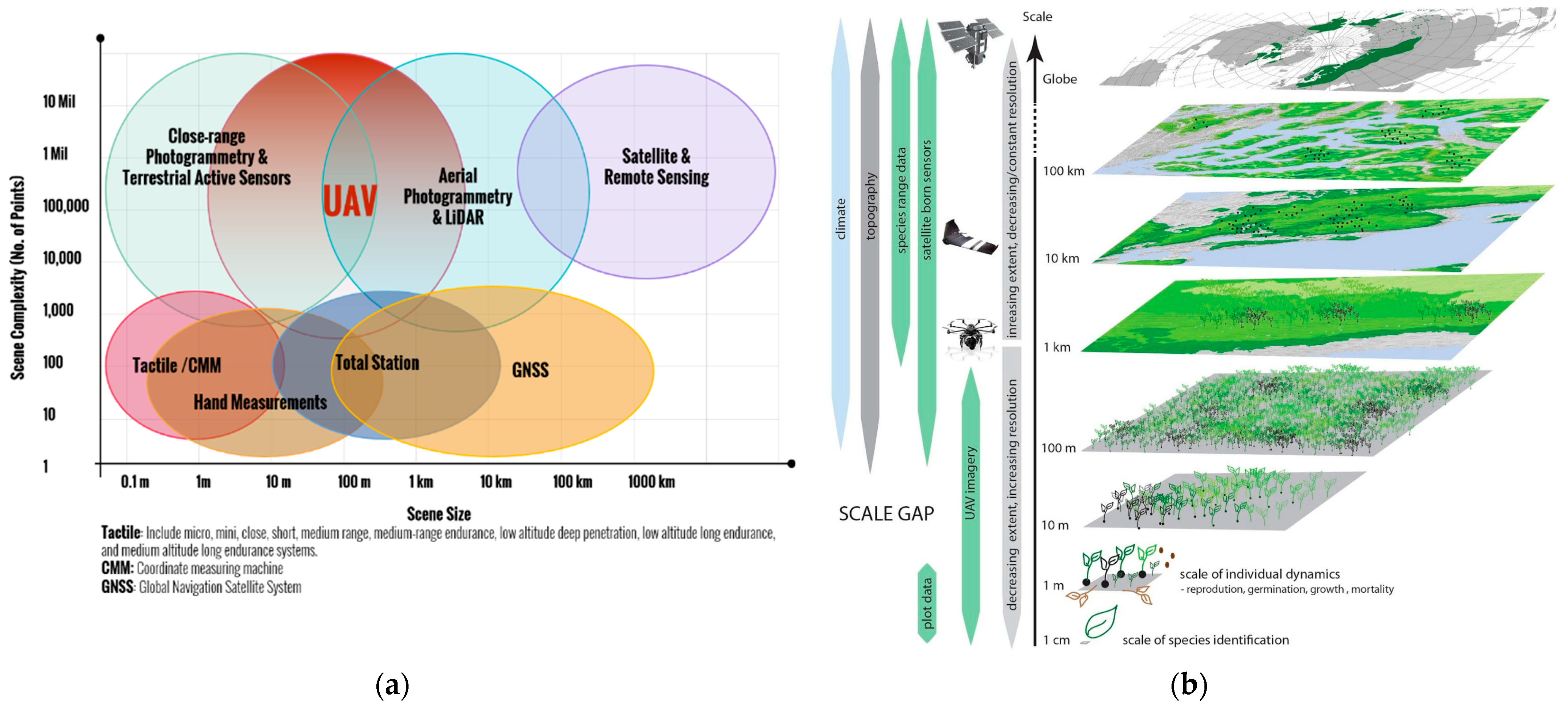

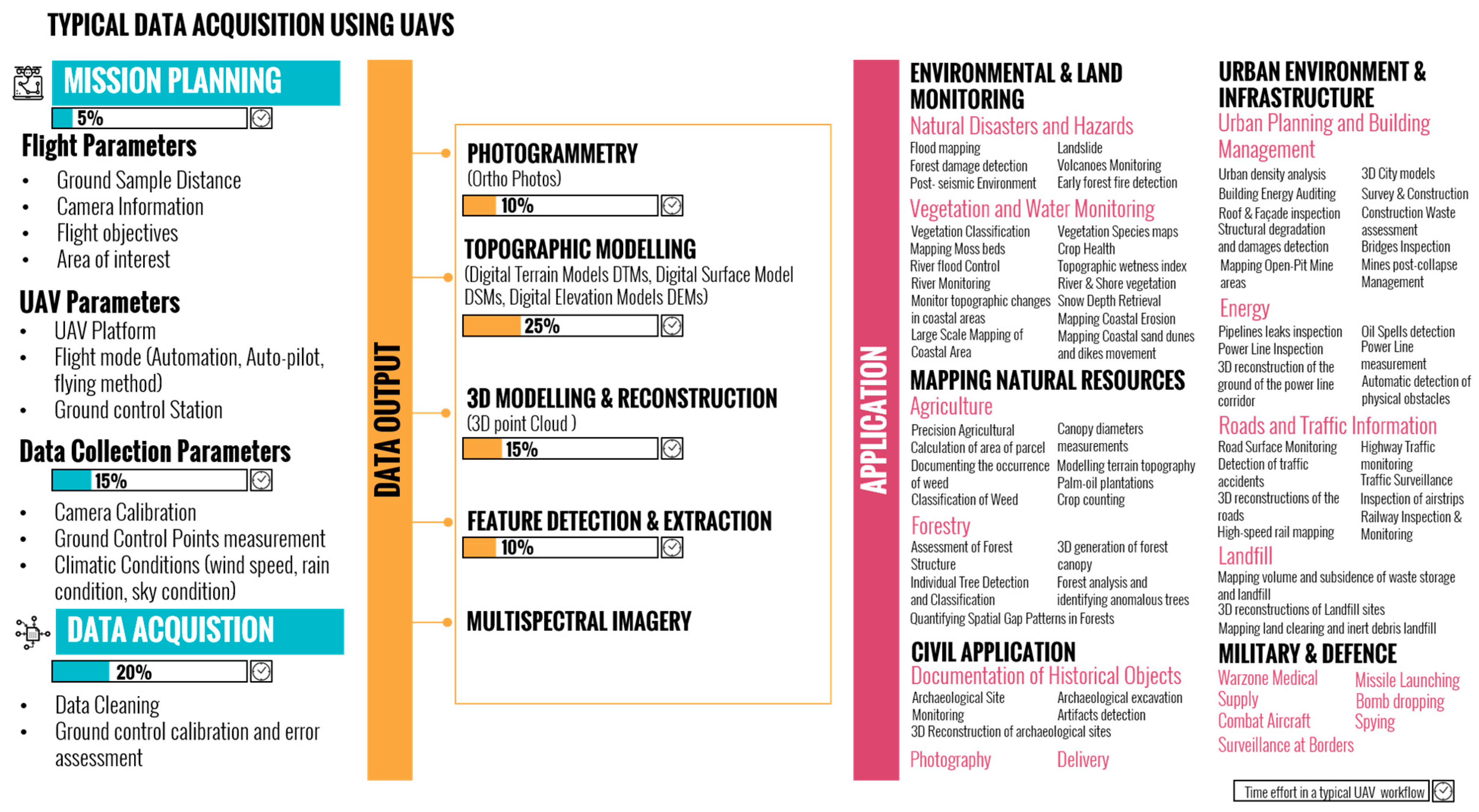

1.3. UAVs and Data Capabilities

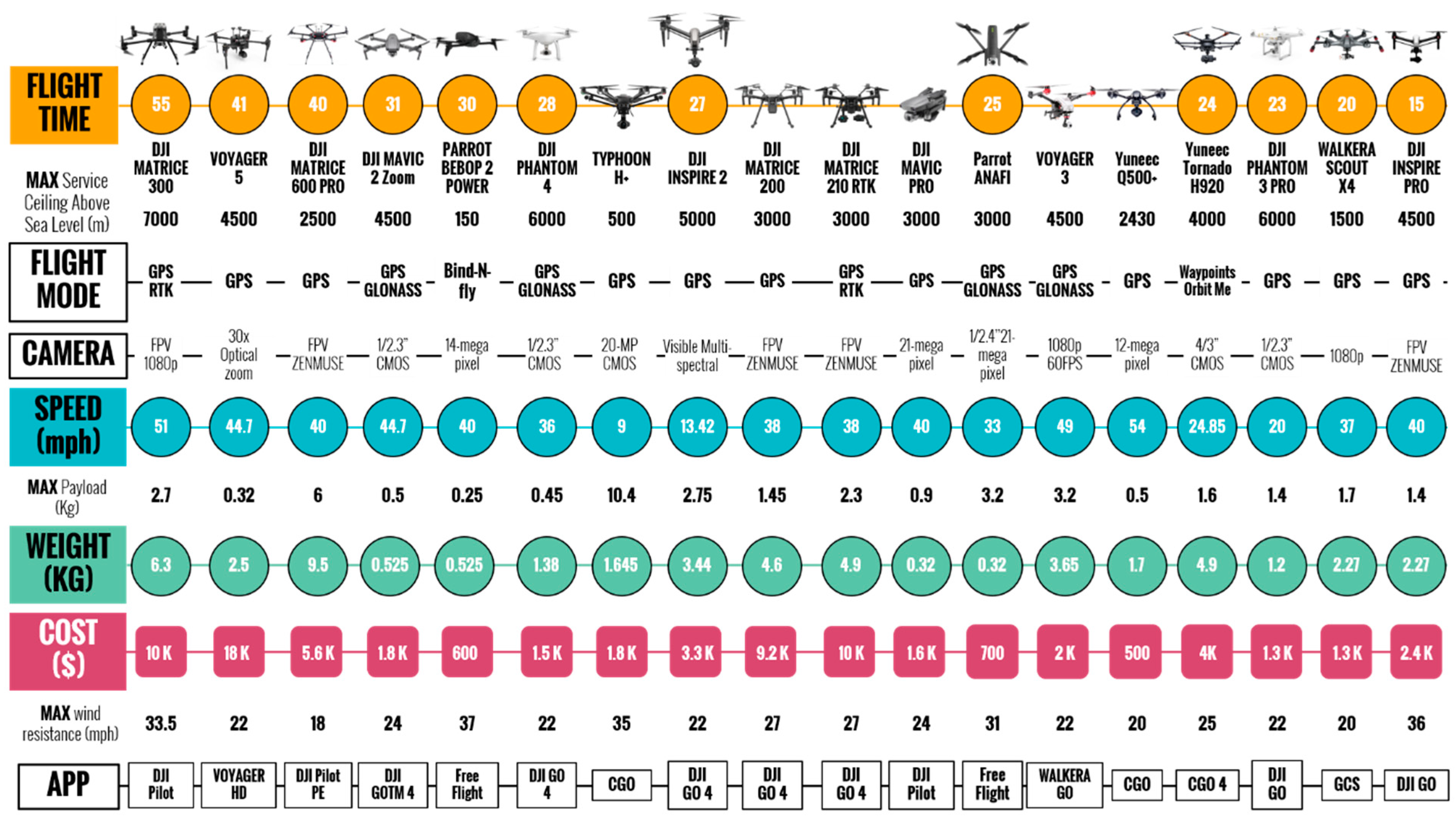

2. UAV Platform Evolution

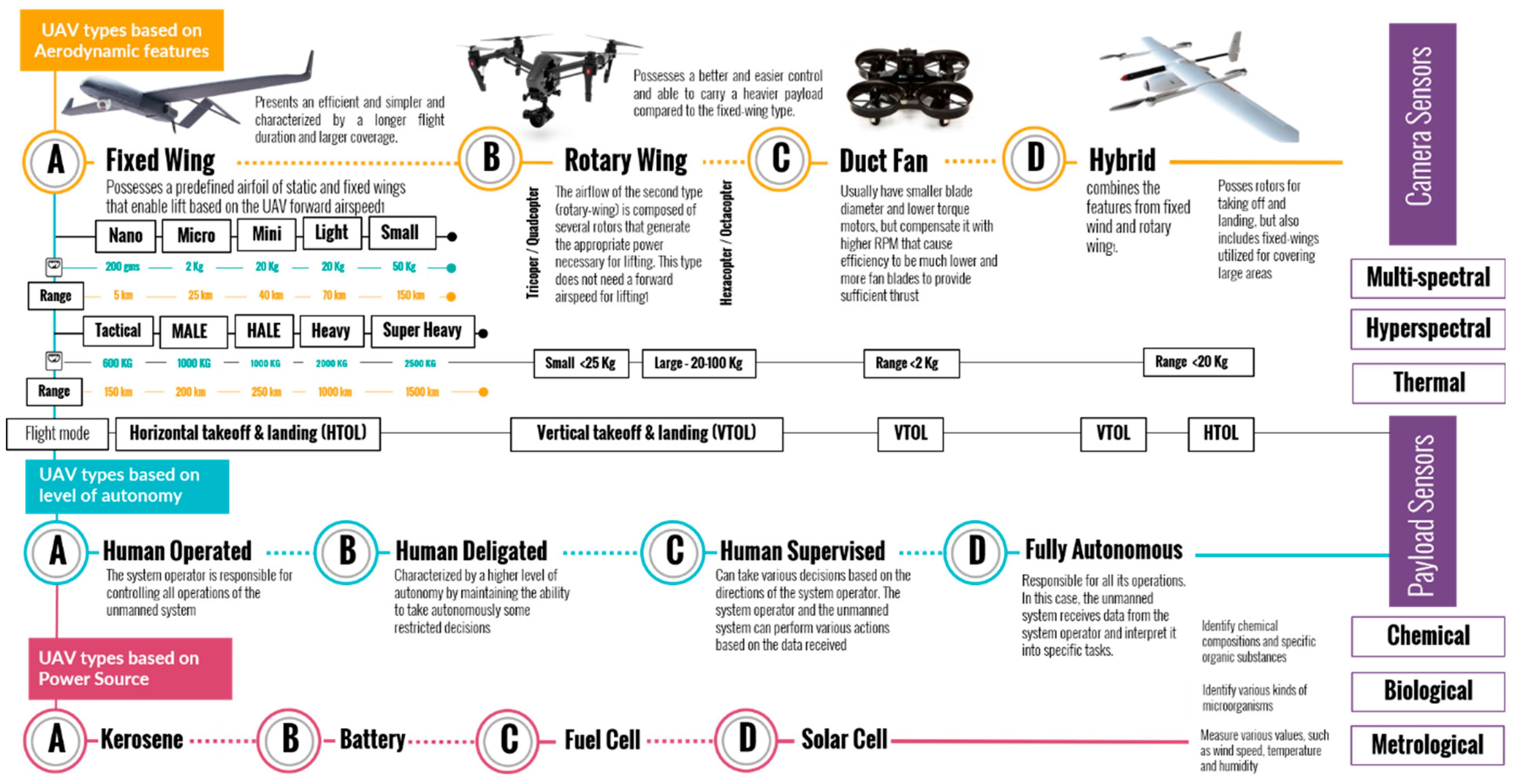

2.1. UAV Platforms Based on Aerodynamic Features

| Platform | Flight Speed | Flight Range | Applications in the Built Environment | Limitations |
|---|---|---|---|---|
| Quadcopters | 0–35 mph | 1–3 km | Urban inspection; urban microclimate | Limited payload capacity; short flight times |
| Ducted Fan | 0–60 mph | 2–7 km | Utility inspection; vertical infrastructure mapping | Limited payload; complex maintenance |
| Fixed Wings | 50–90 mph | 10–40 km | Urban thermal mapping; air pollution monitoring | Require assisted launch/landing; minimal maneuverability |
| Hybrid VTOL | 0–80 mph | 5–25 km | Large-scale mapping; environmental monitoring | Complex transition mechanism; heavier than fixed wings |
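The platform table mixes imperial speeds (mph) with metric ranges (km). The following is a minimal sketch, not part of the original study, that transcribes the table's bounds, converts the upper speed bounds to km/h, and filters the platforms whose upper range bound reaches a required mission distance. It assumes that "Flight Range" means the maximum operational distance of the platform, which the table does not define; the names `Platform`, `PLATFORMS`, and `candidates` are hypothetical.

```python
from dataclasses import dataclass

MPH_TO_KMH = 1.609344  # exact conversion: 1 mile = 1.609344 km


@dataclass(frozen=True)
class Platform:
    name: str
    max_speed_mph: float  # upper bound of the table's Flight Speed column
    min_range_km: float   # lower bound of the table's Flight Range column
    max_range_km: float   # upper bound of the table's Flight Range column

    @property
    def max_speed_kmh(self) -> float:
        """Upper speed bound expressed in km/h, matching the metric range column."""
        return self.max_speed_mph * MPH_TO_KMH


# Bounds transcribed from the platform table; "range" interpretation is an assumption.
PLATFORMS = [
    Platform("Quadcopters", 35, 1, 3),
    Platform("Ducted Fan", 60, 2, 7),
    Platform("Fixed Wings", 90, 10, 40),
    Platform("Hybrid VTOL", 80, 5, 25),
]


def candidates(required_range_km: float) -> list[str]:
    """Return platforms whose upper range bound covers the required distance."""
    return [p.name for p in PLATFORMS if p.max_range_km >= required_range_km]


if __name__ == "__main__":
    for p in PLATFORMS:
        print(f"{p.name}: up to {p.max_speed_kmh:.1f} km/h, "
              f"{p.min_range_km}-{p.max_range_km} km")
    print(candidates(8))
```

For example, `candidates(8)` returns `['Fixed Wings', 'Hybrid VTOL']`, and the quadcopter's 35 mph upper bound converts to about 56.3 km/h. A practical platform choice would also weigh payload, flight time, and maneuverability, which this range-only filter ignores.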

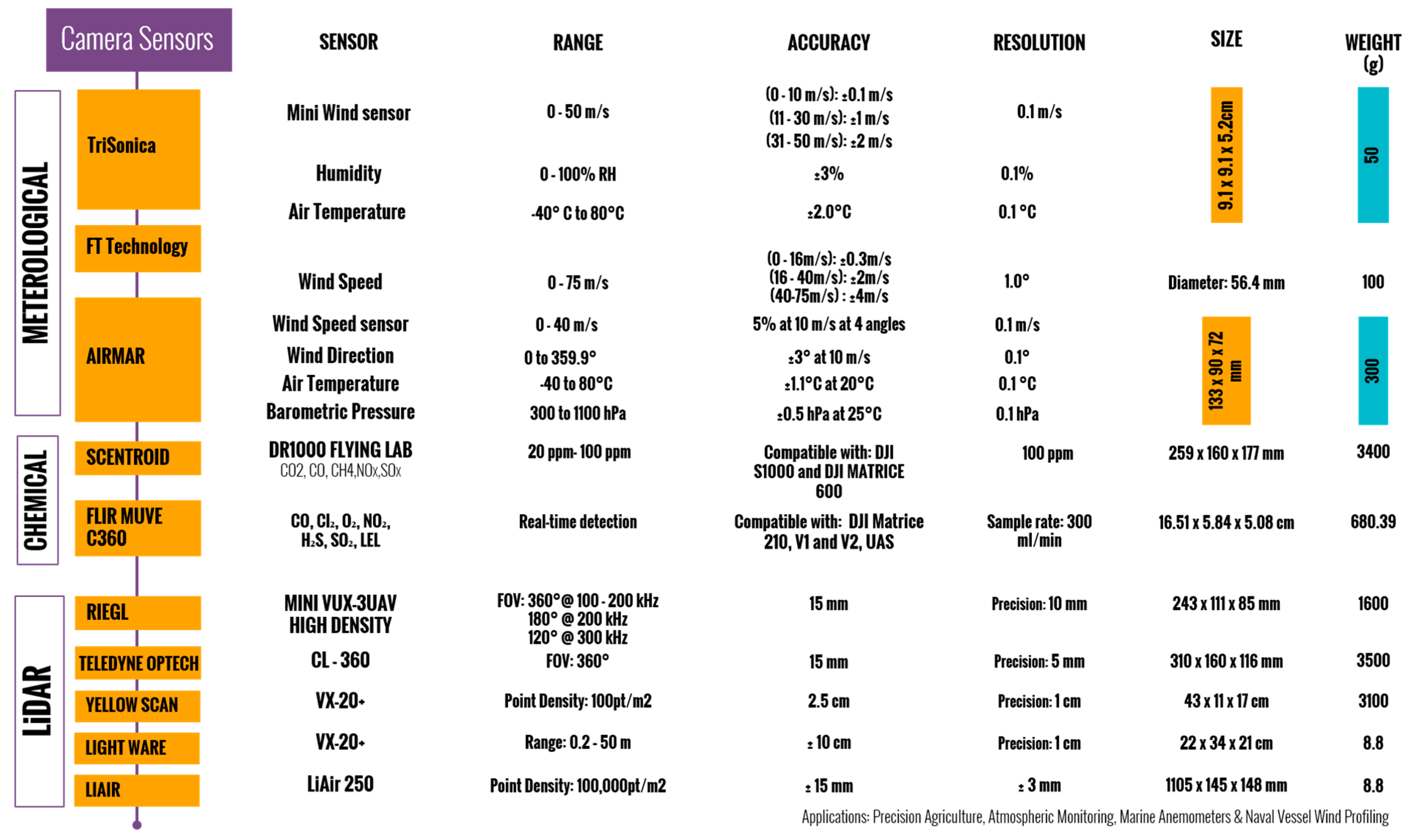

2.2. UAV Sensors

A. High-Resolution Visible-Spectrum Cameras

B. Multispectral Sensors

C. Hyperspectral Sensors

D. Meteorological, Chemical, and LiDAR Sensors

E. Infrared Sensors

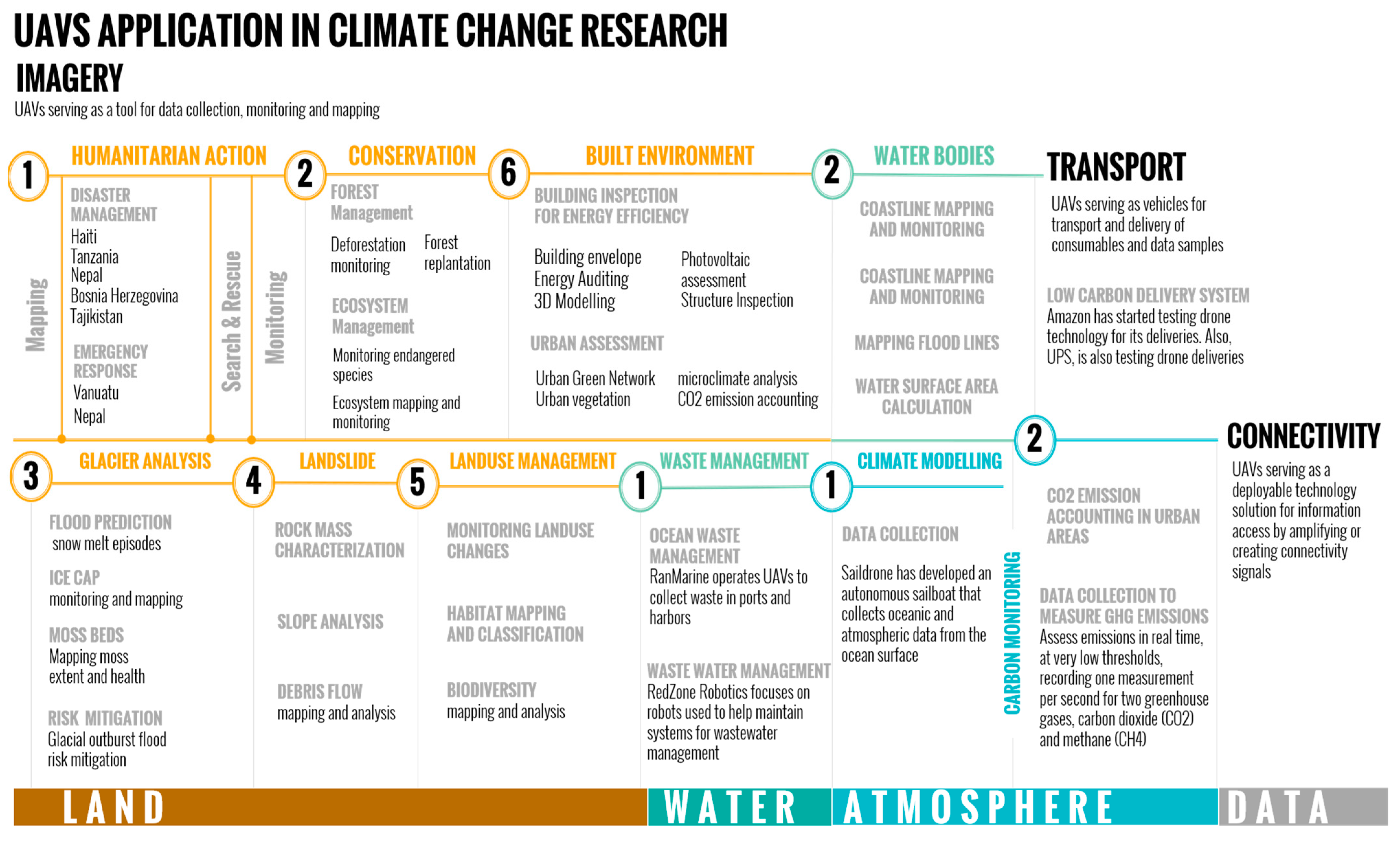

3. UAV Applications in Climate Change Research

3.1. UAVs in Climate Change Research

3.2. Urban Challenges and UAV Opportunities

A. Urban Microclimate Assessment

B. Building Envelope Performance

C. Inspection and Monitoring of Urban Infrastructures

D. Assessment of Climate Hazard Impacts and Emergency Response Coordination

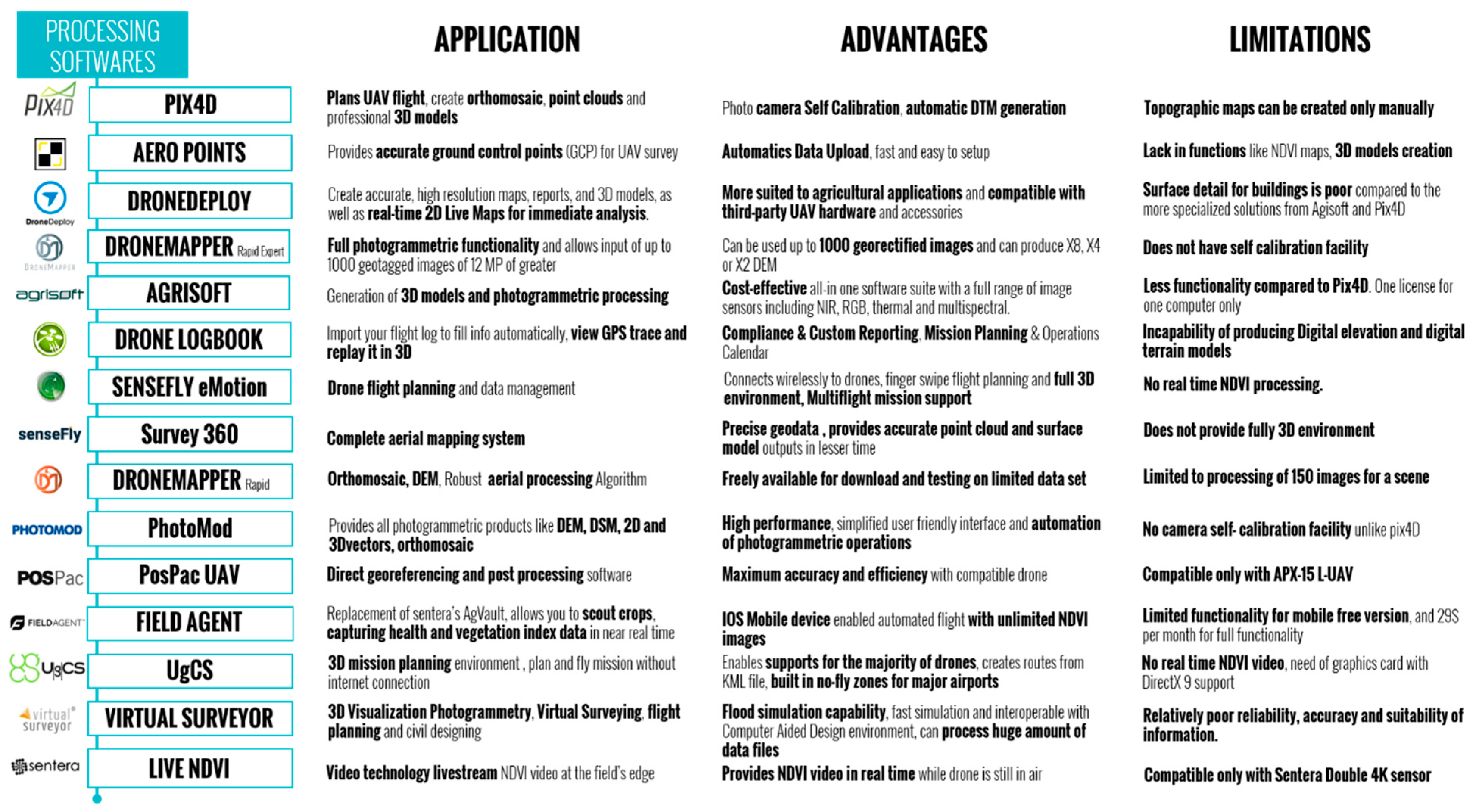

3.3. Data Collection and Processing Platforms

4. UAVs and Artificial Intelligence

4.1. AI and UAVs: Breakthroughs and Crossovers

4.2. AI-Empowered UAVs: Supporting Adaptation and Mitigation Strategies

5. Challenges and Future Directions

5.1. Technical Challenges

A. Energy Use and Payload

B. Automation and Data

C. Weather, Communication, and Path Planning

5.2. Regulations and Security

5.3. The Future of UAVs

A. Power Supply and Flight Duration

B. Data and Sensor Capabilities

C. Safety and Privacy

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Anderson, K.; Gaston, K.J. Lightweight unmanned aerial vehicles will revolutionize spatial ecology. Front. Ecol. Environ. 2013, 11, 138–146. [Google Scholar] [CrossRef] [PubMed]

- Nex, F.; Remondino, F. UAV for 3D mapping applications: A review. Appl. Geomat. 2014, 6, 1–15. [Google Scholar] [CrossRef]

- Pajares, G. Overview and Current Status of Remote Sensing Applications Based on Unmanned Aerial Vehicles (UAVs). Photogramm. Eng. Remote Sens. 2015, 81, 281–330. [Google Scholar] [CrossRef]

- Tmušić, G.; Manfreda, S.; Aasen, H.; James, M.R.; Gonçalves, G.; Ben-Dor, E.; Brook, A.; Polinova, M.; Arranz, J.J.; Mészáros, J.; et al. Current Practices in UAS-based Environmental Monitoring. Remote Sens. 2020, 12, 1001. [Google Scholar] [CrossRef]

- Roldán, J.J.; Joossen, G.; Sanz, D.; Del Cerro, J.; Barrientos, A. Mini-UAV Based Sensory System for Measuring Environmental Variables in Greenhouses. Sensors 2015, 15, 3334–3350. [Google Scholar] [CrossRef] [PubMed]

- Zhang, J.; Jung, J.; Sohn, G.; Cohen, M. Thermal Infrared Inspection Of Roof Insulation Using Unmanned Aerial Vehicles. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-1/W4, 381–386. [Google Scholar] [CrossRef]

- Rakha, T.; Amanda Liberty; Gorodetsky, A.; Kakillioglu, B.; Velipasalar, S. Heat Mapping Drones: An Autonomous Computer-Vision-Based Procedure for Building Envelope Inspection Using Unmanned Aerial Systems (UAS). Technol. Des. 2018, 2, 30–44. [Google Scholar] [CrossRef]

- Villa, T.F.; Gonzalez, F.; Miljievic, B.; Ristovski, Z.D.; Morawska, L. An Overview of Small Unmanned Aerial Vehicles for Air Quality Measurements: Present Applications and Future Prospectives. Sensors 2016, 16, 1072. [Google Scholar] [CrossRef]

- Isibue, E.W.; Pingel, T.J. Unmanned aerial vehicle based measurement of urban forests. Urban For. Urban Green. 2019, 48, 126574. [Google Scholar] [CrossRef]

- Yigitcanlar, T.; Desouza, K.C.; Butler, L.; Roozkhosh, F. Contributions and Risks of Artificial Intelligence (AI) in Building Smarter Cities: Insights from a Systematic Review of the Literature. Energies 2020, 13, 1473. [Google Scholar] [CrossRef]

- Wagner, B.; Egerer, M. Application of UAV remote sensing and machine learning to model and map land use in urban gardens. J. Urban Ecol. 2022, 8, juac008. [Google Scholar] [CrossRef]

- Olson, D.; Anderson, J. Review on unmanned aerial vehicles, remote sensors, imagery processing, and their applications in agriculture. Agron. J. 2021, 113, 971–992. [Google Scholar] [CrossRef]

- Bouvry, P.; Chaumette, S.; Danoy, G.; Guerrini, G.; Jurquet, G.; Kuwertz, A.; Muller, W.; Rosalie, M.; Sander, J. Using heterogeneous multilevel swarms of UAVs and high-level data fusion to support situation management in surveillance scenarios. In Proceedings of the 2016 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Baden-Baden, Germany, 19–21 September 2016; pp. 424–429. [Google Scholar] [CrossRef]

- Khosiawan, Y.; Park, Y.; Moon, I.; Nilakantan, J.M.; Nielsen, I. Task scheduling system for UAV operations in indoor environment. Neural Comput. Appl. 2018, 31, 5431–5459. [Google Scholar] [CrossRef]

- Alanezi, M.A.; Shahriar, M.S.; Hasan, B.; Ahmed, S.; Sha’Aban, Y.A.; Bouchekara, H.R.E.H. Livestock Management With Unmanned Aerial Vehicles: A Review. IEEE Access 2022, 10, 45001–45028. [Google Scholar] [CrossRef]

- Lan, Y.; Chen, S. Current status and trends of plant protection UAV and its spraying technology in China. Int. J. Precis. Agric. Aviat. 2018, 1, 1–9. [Google Scholar] [CrossRef]

- Cai, H.; Geng, Q. Research on the Development Process and Trend of Unmanned Aerial Vehicle. In Proceedings of the 2015 International Industrial Informatics and Computer Engineering Conference; Xi'an, Shaanxi, China Advances in Computer Science Research; Atlantis Press: Paris, France, 2015. [Google Scholar] [CrossRef]

- Qi, N.; Wang, W.; Ye, D.; Wang, M.; Tsiftsis, T.A.; Yao, R. Energy-efficient full-duplex UAV relaying networks: Trajectory design for channel-model-free scenarios. ETRI J. 2021, 43, 436–446. [Google Scholar] [CrossRef]

- Szalanczi-Orban, V.; Vaczi, D. Use of Drones in Logistics: Options in Inventory Control Systems. Interdiscip. Descr. Complex Syst. 2022, 20, 295–303. [Google Scholar] [CrossRef]

- Ventura, D.; Grosso, L.; Pensa, D.; Casoli, E.; Mancini, G.; Valente, T.; Scardi, M.; Rakaj, A. Coastal benthic habitat mapping and monitoring by integrating aerial and water surface low-cost drones. Front. Mar. Sci. 2023, 9, 1096594. [Google Scholar] [CrossRef]

- Hodgson, J.C.; Baylis, S.M.; Mott, R.; Herrod, A.; Clarke, R.H. Precision wildlife monitoring using unmanned aerial vehicles. Sci. Rep. 2016, 6, 22574. [Google Scholar] [CrossRef]

- Mangewa, L.J.; Ndakidemi, P.A.; Munishi, L.K. Integrating UAV Technology in an Ecological Monitoring System for Community Wildlife Management Areas in Tanzania. Sustainability 2019, 11, 6116. [Google Scholar] [CrossRef]

- Jiang, W.; Liu, L.; Xiao, H.; Zhu, S.; Li, W.; Liu, Y. Composition and distribution of vegetation in the water level fluctuating zone of the Lantsang cascade reservoir system using UAV multispectral imagery. PLoS ONE 2021, 16, e0247682. [Google Scholar] [CrossRef]

- Serafinelli, E. Imagining the social future of drones. Converg. Int. J. Res. N. Media Technol. 2022, 28, 1376–1391. [Google Scholar] [CrossRef]

- Hobbs, A.; Lyall, B. Human factors guidelines for unmanned aircraft systems. Ergon. Des. 2016, 24, 23–28. [Google Scholar] [CrossRef]

- Abdelkader, M.; Koubaa, A. Unmanned Aerial Vehicles Applications: Challenges and Trends; Springer: Berlin/Heidelberg, Germany, 2023. [Google Scholar]

- Minkina, W. Theoretical basics of radiant heat transfer—Practical examples of calculation for the infrared (IR) used in infrared thermography measurements. Quant. Infrared Thermogr. J. 2020, 18, 269–282. [Google Scholar] [CrossRef]

- Deane, S.; Avdelidis, N.P.; Ibarra-Castanedo, C.; Williamson, A.A.; Withers, S.; Zolotas, A.; Maldague, X.P.V.; Ahmadi, M.; Pant, S.; Genest, M.; et al. Development of a thermal excitation source used in an active thermographic UAV platform. Quant. Infrared Thermogr. J. 2022, 1–32. [Google Scholar] [CrossRef]

- Radmanesh, M.; Kumar, M.; Nemati, A.; Sarim, M. Dynamic optimal UAV trajectory planning in the National Airspace System via mixed integer linear programming. Proc. Inst. Mech. Eng. Part G J. Aerosp. Eng. 2015, 230, 1668–1682. [Google Scholar] [CrossRef]

- Sarim, M.; Radmanesh, M.; Dechering, M.; Kumar, M.; Pragada, R.; Cohen, K. Distributed Detect-and-Avoid for Multiple Unmanned Aerial Vehicles in National Air Space. J. Dyn. Syst. Meas. Control 2019, 141, 071014. [Google Scholar] [CrossRef]

- Manfreda, S.; McCabe, M.F.; Miller, P.E.; Lucas, R.; Madrigal, V.P.; Mallinis, G.; Ben Dor, E.; Helman, D.; Estes, L.; Ciraolo, G.; et al. On the Use of Unmanned Aerial Systems for Environmental Monitoring. Remote Sens. 2018, 10, 641. [Google Scholar] [CrossRef]

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A Survey on Civil Applications and Key Research Challenges. IEEE Access 2019, 7, 48572–48634. [Google Scholar] [CrossRef]

- Mader, D.; Blaskow, R.; Westfeld, P.; Maas, H.-G. Uav-based acquisition of 3d point cloud—A comparison of a low-cost laser scanner and sfm-tools. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-3/W3, 335–341. [Google Scholar] [CrossRef]

- Wang, Q.; Mao, B.; Stoliarov, S.I.; Sun, J. A review of lithium ion battery failure mechanisms and fire prevention strategies. Prog. Energy Combust. Sci. 2019, 73, 95–131. [Google Scholar] [CrossRef]

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A compilation of UAV applications for precision agriculture. Comput. Netw. 2020, 172, 107148. [Google Scholar] [CrossRef]

- Ahmed, F.; Mohanta, J.C.; Keshari, A.; Yadav, P.S. Recent Advances in Unmanned Aerial Vehicles: A Review. Arab. J. Sci. Eng. 2022, 47, 7963–7984. [Google Scholar] [CrossRef] [PubMed]

- Ranyal, E.; Jain, K. Unmanned Aerial Vehicle’s Vulnerability to GPS Spoofing a Review. J. Indian Soc. Remote Sens. 2020, 49, 585–591. [Google Scholar] [CrossRef]

- Koubaa, A.; Ammar, A.; Abdelkader, M.; Alhabashi, Y.; Ghouti, L. AERO: AI-Enabled Remote Sensing Observation with Onboard Edge Computing in UAVs. Remote Sens. 2023, 15, 1873. [Google Scholar] [CrossRef]

- Mohsan, S.A.H.; Othman, N.Q.H.; Li, Y.; Alsharif, M.H.; Khan, M.A. Unmanned aerial vehicles (UAVs): Practical aspects, applications, open challenges, security issues, and future trends. Intell. Serv. Robot. 2023, 16, 1–29. [Google Scholar] [CrossRef]

- Piekkoontod, T.; Pachana, B.; Hrimpeng, K.; Charoenjit, K. Assessments of Nipa Forest Using Landsat Imagery Enhanced with Unmanned Aerial Vehicle Photography. Appl. Environ. Res. 2020, 42, 49–59. [Google Scholar] [CrossRef]

- Suran, N.A.; Shafri, H.Z.M.; Shaharum, N.S.N.; Radzali, N.A.W.M.; Kumar, V. Uav-based hyperspectral data analysis for urban area mapping. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-4/W16, 621–626. [Google Scholar] [CrossRef]

- Dario, J.; Millian, R. Towards the Application of UAS for Road Maintenance at the Norvik Port. Master’s Thesis, KTH Royal Institute of Technology School of Architecture and the Built Environment, Stockholm, Sweden, 2019. [Google Scholar]

- Zohdi, T.I. Multiple UAVs for Mapping: A Review of Basic Modeling, Simulation, and Applications. Annu. Rev. Environ. Resour. 2018, 43, 523–543. [Google Scholar] [CrossRef]

- Chisholm, R.A.; Cui, J.; Lum, S.K.Y.; Chen, B.M. UAV LiDAR for below-canopy forest surveys. J. Unmanned Veh. Syst. 2013, 1, 61–68. [Google Scholar] [CrossRef]

- Ader, M.; Axelsson, D. Drones in Arctic Environments. Master’s Thesis, KTH School of Industrial Engineering and Management (ITM), Stockholm, Sweden, 2017. [Google Scholar]

- Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97. [Google Scholar] [CrossRef]

- Merkert, R.; Bushell, J. Managing the drone revolution: A systematic literature review into the current use of airborne drones and future strategic directions for their effective control. J. Air Transp. Manag. 2020, 89, 101929. [Google Scholar] [CrossRef]

- Bansod, B.; Singh, R.; Thakur, R.; Singhal, G. A comparision between satellite based and drone based remote sensing technology to achieve sustainable development: A review. J. Agric. Environ. Int. Dev. 2017, 111, 383–407. [Google Scholar] [CrossRef]

- Myburgh, A.; Botha, H.; Downs, C.T.; Woodborne, S.M. The Application and Limitations of a Low-Cost UAV Platform and Open-Source Software Combination for Ecological Mapping and Monitoring. Afr. J. Wildl. Res. 2021, 51, 166–177. [Google Scholar] [CrossRef]

- Cook, K.L. An evaluation of the effectiveness of low-cost UAVs and structure from motion for geomorphic change detection. Geomorphology 2017, 278, 195–208. [Google Scholar] [CrossRef]

- Awais, M.; Li, W.; Hussain, S.; Cheema, M.J.M.; Li, W.; Song, R.; Liu, C. Comparative Evaluation of Land Surface Temperature Images from Unmanned Aerial Vehicle and Satellite Observation for Agricultural Areas Using In Situ Data. Agriculture 2022, 12, 184. [Google Scholar] [CrossRef]

- Al-Farabi, M.; Chowdhury, M.; Readuzzaman, M.; Hossain, M.R.; Sabuj, S.R.; Hossain, M.A. Smart Environment Monitoring System using Unmanned Aerial Vehicle in Bangladesh. EAI Endorsed Trans. Smart Cities 2018, 5, e1. [Google Scholar] [CrossRef]

- Gordan, M.; Ismail, Z.; Ghaedi, K.; Ibrahim, Z.; Hashim, H.; Ghayeb, H.H.; Talebkhah, M. A Brief Overview and Future Perspective of Unmanned Aerial Systems for In-Service Structural Health Monitoring. Eng. Adv. 2021, 1, 9–15. [Google Scholar] [CrossRef]

- Eiris, R.; Albeaino, G.; Gheisari, M.; Benda, W.; Faris, R. InDrone: A 2D-based drone flight behavior visualization platform for indoor building inspection. Smart Sustain. Built Environ. 2021, 10, 438–456. [Google Scholar] [CrossRef]

- Sabour, M.; Jafary, P.; Nematiyan, S. Applications and classifications of unmanned aerial vehicles: A literature review with focus on multi-rotors. Aeronaut. J. 2022, 127, 466–490. [Google Scholar] [CrossRef]

- Idrissi, M.; Salami, M.; Annaz, F. A Review of Quadrotor Unmanned Aerial Vehicles: Applications, Architectural Design and Control Algorithms. J. Intell. Robot. Syst. 2022, 104, 1–33. [Google Scholar] [CrossRef]

- Wang, J.; Ma, C.; Chen, P.; Yao, W.; Yan, Y.; Zeng, T.; Chen, S.; Lan, Y. Evaluation of aerial spraying application of multi-rotor unmanned aerial vehicle for Areca catechu protection. Front. Plant Sci. 2023, 14, 1093912. [Google Scholar] [CrossRef]

- Johnson, E.N.; Mooney, J.G. A Comparison of Automatic Nap-of-the-earth Guidance Strategies for Helicopters. J. Field Robot. 2014, 31, 637–653. [Google Scholar] [CrossRef]

- Amorim, M.; Lousada, A. Tethered Drone for Precision Agriculture. Master’s Thesis, University of Porto, Porto, Portugal, 2021. [Google Scholar]

- Winnefeld, J.A., Jr.; Kendall, F. Unmanned Systems Integrated Roadmap FY2011–2036; Technical Report 14-S-0553; United States Department of Defence: Washington, DC, USA, 2017. [Google Scholar]

- Tang, H.; Zhang, D.; Gan, Z. Control System for Vertical Take-off and Landing Vehicle’s Adaptive Landing Based on Multi-Sensor Data Fusion. Sensors 2020, 20, 4411. [Google Scholar] [CrossRef]

- Misra, A.; Jayachandran, S.; Kenche, S.; Katoch, A.; Suresh, A.; Gundabattini, E.; Selvaraj, S.K.; Legesse, A.A. A Review on Vertical Take-Off and Landing (VTOL) Tilt-Rotor and Tilt Wing Unmanned Aerial Vehicles (UAVs). J. Eng. 2022, 2022, 1–27. [Google Scholar] [CrossRef]

- Vergouw, B.; Nagel, H.; Bondt, G.; Custers, B. Drone technology: Types, payloads, applications, frequency spectrum issues and future developments. In The Future of Drone Use; TMC Asser Press: Hague, The Netherlands, 2016; pp. 21–45. [Google Scholar] [CrossRef]

- Hayat, S.; Yanmaz, E.; Muzaffar, R. Survey on Unmanned Aerial Vehicle Networks for Civil Applications: A Communications Viewpoint. IEEE Commun. Surv. Tutor. 2016, 18, 2624–2661. [Google Scholar] [CrossRef]

- Gupta, L.; Jain, R.; Vaszkun, G. Survey of Important Issues in UAV Communication Networks. IEEE Commun. Surv. Tutor. 2016, 18, 1123–1152. [Google Scholar] [CrossRef]

- Villa, T.F.; Salimi, F.; Morton, K.; Morawska, L.; Gonzalez, F. Development and Validation of a UAV Based System for Air Pollution Measurements. Sensors 2016, 16, 2202. [Google Scholar] [CrossRef]

- Wallace, L.O.; Lucieer, A.; Watson, C.S. Assessing the feasibility of uav-based lidar for high resolution forest change detection. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, XXXIX-B7, 499–504. [Google Scholar] [CrossRef]

- Naughton, J.; McDonald, W. Evaluating the Variability of Urban Land Surface Temperatures Using Drone Observations. Remote Sens. 2019, 11, 1722. [Google Scholar] [CrossRef]

- Eagle, A. S.O.D.A—eBee Series. 2023. Available online: https://ageagle.com/solutions/ebee-series/ (accessed on 15 May 2023).

- DJI. DJI Drones. 2023. Available online: https://www.dji.com/ (accessed on 8 July 2023).

- Parrot. Parrot Drones—Anafi. 2023. Available online: https://www.parrot.com/us/drones (accessed on 22 July 2023).

- Yuneec. Yuneec Drones. 2023. Available online: https://yuneec.online/ (accessed on 23 June 2023).

- Walkera Tech. Voyager 3. 2023. Available online: http://www.walkeratech.com/25.html (accessed on 10 July 2023).

- Rajan, J.; Shriwastav, S.; Kashyap, A.; Ratnoo, A.; Ghose, D. Disaster Management Using Unmanned Aerial Vehicles; Elsevier: Amsterdam, The Netherlands, 2021; pp. 129–155. [Google Scholar] [CrossRef]

- Honkavaara, E.; Saari, H.; Kaivosoja, J.; Pölönen, I.; Hakala, T.; Litkey, P.; Mäkynen, J.; Pesonen, L. Processing and Assessment of Spectrometric, Stereoscopic Imagery Collected Using a Lightweight UAV Spectral Camera for Precision Agriculture. Remote Sens. 2013, 5, 5006–5039. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.J.; Guillén-Climent, M.L.; Hernández-Clemente, R.; Catalina, A.; González, M.R.; Martín, P. Estimating leaf carotenoid content in vineyards using high resolution hyperspectral imagery acquired from an unmanned aerial vehicle (UAV). Agric. Forest Meteorol. 2013, 171–172, 281–294. [Google Scholar] [CrossRef]

- Cillero Castro, C.; Domínguez Gómez, J.A.; Delgado Martín, J.; Hinojo Sánchez, B.A.; Cereijo Arango, J.L.; Cheda Tuya, F.A.; Díaz-Varela, R. An UAV and Satellite Multispectral Data Approach to Monitor Water Quality in Small Reservoirs. Remote Sens 2020, 12, 1–33. [Google Scholar] [CrossRef]

- Wei, G.; Li, Y.; Zhang, Z.; Chen, Y.; Chen, J.; Yao, Z.; Lao, C.; Chen, H. Estimation of soil salt content by combining UAV-borne multispectral sensor and machine learning algorithms. PeerJ 2020, 8, e9087. [Google Scholar] [CrossRef]

- Meier, F.; Scherer, D.; Richters, J.; Christen, A. Atmospheric correction of thermal-infrared imagery of the 3-D urban environment acquired in oblique viewing geometry. Atmos. Meas. Tech. 2011, 4, 909–922. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.J.; González-Dugo, V.; Berni, J.A.J. Fluorescence, temperature and narrow-band indices acquired from a UAV platform for water stress detection using a micro-hyperspectral imager and a thermal camera. Remote Sens. Environ. 2012, 117, 322–337. [Google Scholar] [CrossRef]

- Feng, Q.; Liu, J.; Gong, J. UAV Remote Sensing for Urban Vegetation Mapping Using Random Forest and Texture Analysis. Remote Sens. 2015, 7, 1074–1094. [Google Scholar] [CrossRef]

- Ren, S.; Malof, J.; Fetter, R.; Beach, R.; Rineer, J.; Bradbury, K. Utilizing Geospatial Data for Assessing Energy Security: Mapping Small Solar Home Systems Using Unmanned Aerial Vehicles and Deep Learning. ISPRS Int. J. GeoInf. 2022, 11, 222. [Google Scholar] [CrossRef]

- Jia, J.; Cui, W.; Liu, J. Urban Catchment-Scale Blue-Green-Gray Infrastructure Classification with Unmanned Aerial Vehicle Images and Machine Learning Algorithms. Front. Environ. Sci. 2022, 9, 734. [Google Scholar] [CrossRef]

- Ahmad, J.; Eisma, J.A. Capturing Small-Scale Surface Temperature Variation across Diverse Urban Land Uses with a Small Unmanned Aerial Vehicle. Remote Sens. 2023, 15, 2042. [Google Scholar] [CrossRef]

- Sentera. High-Precision Single Sensor. 2023. Available online: https://sentera.com/products/fieldcapture/sensors/single/ (accessed on 10 August 2023).

- Mapir. Survey3 Cameras. 2023. Available online: https://www.mapir.camera/collections/survey3 (accessed on 5 July 2023).

- GeoSpatial PhaseOne. Phaseone iXM-100|iXM-50. 2023, p. 100. Available online: https://geospatial.phaseone.com/cameras/ixm-100/ (accessed on 5 August 2023).

- Imaging, R. RICOH GR III/GR IIIx. 2023. Available online: https://www.ricoh-imaging.co.jp/ (accessed on 15 May 2023).

- Sentek Systems. Gems Sensor. 2023. Available online: http://precisionaguavs.com/ (accessed on 18 July 2023).

- Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J.J. Hyperspectral imaging: A review on UAV-based sensors, data processing and applications for agriculture and forestry. Remote Sens. 2017, 9, 1110. [Google Scholar] [CrossRef]

- Green, D.R.; Hagon, J.J.; Gómez, C.; Gregory, B.J. Using Low-Cost UAVs for Environmental Monitoring, Mapping, and Modelling: Examples From the Coastal Zone. In Coastal Management; Elsevier: Amsterdam, The Netherlands, 2019; pp. 465–501. [Google Scholar] [CrossRef]

- Popescu, D.; Ichim, L.; Stoican, F. Unmanned Aerial Vehicle Systems for Remote Estimation of Flooded Areas Based on Complex Image Processing. Sensors 2017, 17, 446. [Google Scholar] [CrossRef] [PubMed]

- Kuras, A.; Brell, M.; Rizzi, J.; Burud, I. Hyperspectral and Lidar Data Applied to the Urban Land Cover Machine Learning and Neural-Network-Based Classification: A Review. Remote Sens. 2021, 13, 3393. [Google Scholar] [CrossRef]

- Headwall. Hyperspectral Sensors. 2023. Available online: https://www.headwallphotonics.com/products/hyperspectral-sensors (accessed on 22 May 2023).

- Corning Optics. Nova Sol. 2023. Available online: https://www.corning.com/asean/en/products/advanced-optics/product-materials/aerospace-defense/spectral-sensing.html (accessed on 19 April 2023).

- Cubert. Hyperspectral Sensors. 2023. Available online: https://www.cubert-hyperspectral.com (accessed on 25 April 2023).

- Resonon. Hyperspectral Imaging Cameras|Hyperspectral Imaging Solutions. 2023. Available online: https://resonon.com/objective-lenses (accessed on 13 June 2023).

- Carotenuto, F.; Brilli, L.; Gioli, B.; Gualtieri, G.; Vagnoli, C.; Mazzola, M.; Viola, A.P.; Vitale, V.; Severi, M.; Traversi, R.; et al. Long-Term Performance Assessment of Low-Cost Atmospheric Sensors in the Arctic Environment. Sensors 2020, 20, 1919. [Google Scholar] [CrossRef] [PubMed]

- Wildmann, N.; Ravi, S.; Bange, J. Towards higher accuracy and better frequency response with standard multi-hole probes in turbulence measurement with remotely piloted aircraft (RPA). Atmos. Meas. Tech. 2014, 7, 1027–1041. [Google Scholar] [CrossRef]

- Brosy, C.; Krampf, K.; Zeeman, M.; Wolf, B.; Junkermann, W.; Schäfer, K.; Emeis, S.; Kunstmann, H. Simultaneous multicopter-based air sampling and sensing of meteorological variables. Atmos. Meas. Tech. 2017, 10, 2773–2784. [Google Scholar] [CrossRef]

- Fumian, F.; Chierici, A.; Bianchelli, M.; Martellucci, L.; Rossi, R.; Malizia, A.; Gaudio, P.; D’errico, F.; Di Giovanni, D. Development and performance testing of a miniaturized multi-sensor system combining MOX and PID for potential UAV application in TIC, VOC and CWA dispersion scenarios. Eur. Phys. J. Plus 2021, 136, 1–19. [Google Scholar] [CrossRef]

- Scheller, J.H.; Mastepanov, M.; Christensen, T.R. Toward UAV-based methane emission mapping of Arctic terrestrial ecosystems. Sci. Total. Environ. 2022, 819, 153161. [Google Scholar] [CrossRef]

- Luo, Z.; Che, J.; Wang, K. Detection of UAV target based on Continuous Radon transform and Matched filtering process for Passive Bistatic Radar. Authorea Preprints; 7 April 2022. [CrossRef]

- Tian, B.; Liu, W.; Mo, H.; Li, W.; Wang, Y.; Adhikari, B.R. Detecting the Unseen: Understanding the Mechanisms and Working Principles of Earthquake Sensors. Sensors 2023, 23, 5335. [Google Scholar] [CrossRef]

- Li-Cor. TriSonica Weather Sensors. 2023. Available online: https://anemoment.com/shop/sensors/trisonica-mini-wind-and-weather-sensor/ (accessed on 18 August 2023).

- AirMar. AIRMAR Sensors 2023. Available online: https://www.airmar.com/ (accessed on 23 August 2023).

- FLIR. MUVE C360 2023. Available online: https://www.flir.com/products/muve-c360/ (accessed on 5 August 2023).

- Optech, T. Teledyne LiDAR 2023. Available online: https://www.teledyneoptech.com/en/HOME/ (accessed on 10 June 2023).

- Esin, A.I.; Akgul, M.; Akay, A.O.; Yurtseven, H. Comparison of LiDAR-based morphometric analysis of a drainage basin with results obtained from UAV, TOPO, ASTER and SRTM-based DEMs. Arab. J. Geosci. 2021, 14, 1–15. [Google Scholar] [CrossRef]

- Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447. [Google Scholar] [CrossRef]

- Brede, B.; Lau, A.; Bartholomeus, H.M.; Kooistra, L. Comparing RIEGL RiCOPTER UAV LiDAR Derived Canopy Height and DBH with Terrestrial LiDAR. Sensors 2017, 17, 2371. [Google Scholar] [CrossRef] [PubMed]

- Gao, M.; Yang, F.; Wei, H.; Liu, X. Individual Maize Location and Height Estimation in Field from UAV-Borne LiDAR and RGB Images. Remote Sens. 2022, 14, 2292. [Google Scholar] [CrossRef]

- Thiel, C.; Schmullius, C. Comparison of UAV photograph-based and airborne lidar-based point clouds over forest from a forestry application perspective. Int. J. Remote Sens. 2016, 38, 2411–2426. [Google Scholar] [CrossRef]

- Ressl, C.; Brockmann, H.; Mandlburger, G.; Pfeifer, N. Dense Image Matching vs. Airborne Laser Scanning—Comparison of two methods for deriving terrain models. Photogramm. Fernerkund. Geoinf. 2016, 2016, 57–73. [Google Scholar] [CrossRef]

- Štroner, M.; Urban, R.; Línková, L. A New Method for UAV Lidar Precision Testing Used for the Evaluation of an Affordable DJI ZENMUSE L1 Scanner. Remote Sens. 2021, 13, 4811. [Google Scholar] [CrossRef]

- Yan, K.; Di Baldassarre, G.; Solomatine, D.P.; Schumann, G.J. A review of low-cost space-borne data for flood modelling: Topography, flood extent and water level. Hydrol. Process. 2015, 29, 3368–3387. [Google Scholar] [CrossRef]

- Casas-Mulet, R.; Pander, J.; Ryu, D.; Stewardson, M.J.; Geist, J. Unmanned Aerial Vehicle (UAV)-Based Thermal Infra-Red (TIR) and Optical Imagery Reveals Multi-Spatial Scale Controls of Cold-Water Areas Over a Groundwater-Dominated Riverscape. Front. Environ. Sci. 2020, 8, 1–16. [Google Scholar] [CrossRef]

- Rakha, T.; Gorodetsky, A. Review of Unmanned Aerial System (UAS) applications in the built environment: Towards automated building inspection procedures using drones. Autom. Constr. 2018, 93, 252–264. [Google Scholar] [CrossRef]

- Eschmann, C.; Kuo, C.M.; Kuo, C.H.; Boller, C. Unmanned aircraft systems for remote building inspection and monitoring. In Proceedings of the 6th European Workshop—Structural Health Monitoring—Th.2.B.1, Dresden, Germany, 3–6 July 2012; pp. 1179–1186. [Google Scholar]

- Feng, L.; Liu, Y.; Zhou, Y.; Yang, S. A UAV-derived thermal infrared remote sensing three-temperature model and estimation of various vegetation evapotranspiration in urban micro-environments. Urban For. Urban Green. 2022, 69, 127495. [Google Scholar] [CrossRef]

- Rakha, T.; El Masri, Y.; Chen, K.; De Wilde, P. 3D drone-based time-lapse thermography: A case study of roof vulnerability characterization using photogrammetry and performance simulation implications. In Proceedings of the 17th IBPSA Conference, Bruges, Belgium, 1–3 September 2021; pp. 2023–2030. [Google Scholar] [CrossRef]

- FLIR. FLIR IR Sensors n.d. Available online: https://www.flir.com/ (accessed on 17 May 2023).

- Workswell. Thermal Imaging Cameras for UAV Systems. 2023. Available online: https://workswell-thermal-camera.com/ (accessed on 12 August 2023).

- Cox, T.H.; Somers, I.; Fratello, S. Earth Observations and the Role of UAVs: A Capabilities Assessment, Version 1.1; Technical Report; Civil UAV Team, NASA: Washington, DC, USA, 2006; p. 346. [Google Scholar]

- Lessard-Fontaine, A.; Alschner, F.; Soesilo, D. Using High-resolution Imagery to Support the Post-earthquake Census in Port-au-Prince, Haiti. Drones Humanit Action 2013:0–4. European Civil Protection and Humanitarian Aid Operations, Brussels, Belgium. Available online: https://reliefweb.int/report/haiti/drones-humanitarian-action-case-study-no7-using-high-resolution-imagery-support-post (accessed on 7 July 2023).

- UNICEF Innovation. Low-Cost Drones Deliver Medicines in Malawi; UNICEF: New York, NY, USA, 2017. [Google Scholar]

- UNICEF. Drone Testing Corridors Established in Kazakhstan; UNICEF: New York, NY, USA, 2018. [Google Scholar]

- Lim, J.S.; Gleason, S.; Williams, M.; Matás, G.J.L.; Marsden, D.; Jones, W. UAV-Based Remote Sensing for Managing Alaskan Native Heritage Landscapes in the Yukon-Kuskokwim Delta. Remote Sens. 2022, 14, 728. [Google Scholar] [CrossRef]

- Djimantoro, M.; Suhardjanto, G. The Advantage by Using Low-Altitude UAV for Sustainable Urban Development Control. IOP Conf. Ser. Earth Environ. Sci. 2017, 109, 012014. [Google Scholar] [CrossRef]

- Dash, J.P.; Pearse, G.D.; Watt, M.S. UAV Multispectral Imagery Can Complement Satellite Data for Monitoring Forest Health. Remote Sens. 2018, 10, 1216. [Google Scholar] [CrossRef]

- Gaffey, C.; Bhardwaj, A. Applications of Unmanned Aerial Vehicles in Cryosphere: Latest Advances and Prospects. Remote Sens. 2020, 12, 948. [Google Scholar] [CrossRef]

- Musso, R.F.G.; Oddi, F.J.; Goldenberg, M.G.; Garibaldi, L.A. Applying unmanned aerial vehicles (UAVs) to map shrubland structural attributes in northern Patagonia, Argentina. Can. J. For. Res. 2020, 50, 615–623. [Google Scholar] [CrossRef]

- Addo, K.A.; Jayson-Quashigah, P.-N.; Codjoe, S.N.A.; Martey, F. Drone as a tool for coastal flood monitoring in the Volta Delta, Ghana. Geoenviron. Disasters 2018, 5, 1–13. [Google Scholar] [CrossRef]

- Shaw, A.; Hashemi, M.R.; Spaulding, M.; Oakley, B.; Baxter, C. Effect of Coastal Erosion on Storm Surge: A Case Study in the Southern Coast of Rhode Island. J. Mar. Sci. Eng. 2016, 4, 85. [Google Scholar] [CrossRef]

- Gålfalk, M.; Påledal, S.N.; Bastviken, D. Sensitive Drone Mapping of Methane Emissions without the Need for Supplementary Ground-Based Measurements. ACS Earth Space Chem. 2021, 5, 2668–2676. [Google Scholar] [CrossRef]

- Yao, H.; Qin, R.; Chen, X. Unmanned Aerial Vehicle for Remote Sensing Applications—A Review. Remote Sens. 2019, 11, 1443. [Google Scholar] [CrossRef]

- Shaw, J.T.; Shah, A.; Yong, H.; Allen, G. Methods for quantifying methane emissions using unmanned aerial vehicles: A review. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2021, 379, 20200450. [Google Scholar] [CrossRef]

- Gullett, B.; Aurell, J.; Mitchell, W.; Richardson, J. Use of an unmanned aircraft system to quantify NOx emissions from a natural gas boiler. Atmos. Meas. Tech. 2021, 14, 975–981. [Google Scholar] [CrossRef] [PubMed]

- Raval, S. Smart Sensing for Mineral Exploration through to Mine Closure. Int. J. Georesour. Environ. 2018, 4, 115–119.

- Namburu, A.; Selvaraj, P.; Mohan, S.; Ragavanantham, S.; Eldin, E.T. Forest Fire Identification in UAV Imagery Using X-MobileNet. Electronics 2023, 12, 733.

- Carvajal-Ramírez, F.; da Silva, J.R.M.; Agüera-Vega, F.; Martínez-Carricondo, P.; Serrano, J.; Moral, F.J. Evaluation of Fire Severity Indices Based on Pre- and Post-Fire Multispectral Imagery Sensed from UAV. Remote Sens. 2019, 11, 993.

- Yavuz, M.; Koutalakis, P.; Diaconu, D.C.; Gkiatas, G.; Zaimes, G.N.; Tufekcioglu, M.; Marinescu, M. Identification of Streamside Landslides with the Use of Unmanned Aerial Vehicles (UAVs) in Greece, Romania, and Turkey. Remote Sens. 2023, 15, 1006.

- Brook, M.S.; Merkle, J. Monitoring active landslides in the Auckland region utilising UAV/structure-from-motion photogrammetry. Jpn. Geotech. Soc. Spec. Publ. 2019, 6, 1–6.

- Ilinca, V.; Șandric, I.; Chițu, Z.; Irimia, R.; Gheuca, I. UAV applications to assess short-term dynamics of slow-moving landslides under dense forest cover. Landslides 2022, 19, 1717–1734.

- Mora, O.E.; Lenzano, M.G.; Toth, C.K.; Grejner-Brzezinska, D.A.; Fayne, J.V. Landslide Change Detection Based on Multi-Temporal Airborne LiDAR-Derived DEMs. Geosciences 2018, 8, 23.

- Migliazza, M.; Carriero, M.T.; Lingua, A.; Pontoglio, E.; Scavia, C. Rock Mass Characterization by UAV and Close-Range Photogrammetry: A Multiscale Approach Applied along the Vallone dell’Elva Road (Italy). Geosciences 2021, 11, 436.

- Mineo, S.; Caliò, D.; Pappalardo, G. UAV-Based Photogrammetry and Infrared Thermography Applied to Rock Mass Survey for Geomechanical Purposes. Remote Sens. 2022, 14, 473.

- Loiotine, L.; Andriani, G.F.; Derron, M.-H.; Parise, M.; Jaboyedoff, M. Evaluation of InfraRed Thermography Supported by UAV and Field Surveys for Rock Mass Characterization in Complex Settings. Geosciences 2022, 12, 116.

- Fu, X.; Ding, H.; Sheng, Q.; Chen, J.; Chen, H.; Li, G.; Fang, L.; Du, W. Reproduction Method of Rockfall Geologic Hazards Based on Oblique Photography and Three-Dimensional Discontinuous Deformation Analysis. Front. Earth Sci. 2021, 9, 755876.

- Dimitrov, S.; Popov, A.; Iliev, M. Mapping and assessment of urban heat island effects in the city of Sofia, Bulgaria through integrated application of remote sensing, unmanned aerial systems (UAS) and GIS. In Proceedings of the Eighth International Conference on Remote Sensing and Geoinformation of the Environment (RSCy2020), Paphos, Cyprus, 16–18 March 2020.

- Erenoglu, R.C.; Erenoglu, O.; Arslan, N. Accuracy Assessment of Low Cost UAV Based City Modelling for Urban Planning. Teh. Vjesn. Tech. Gaz. 2018, 25, 1708–1714.

- Trepekli, K.; Balstrøm, T.; Friborg, T.; Allotey, A.N. UAV-Borne, LiDAR-Based Elevation Modelling: An Effective Tool for Improved Local Scale Urban Flood Risk Assessment. Nat. Hazards 2021, 113, 423–451.

- Pratomo, J.; Widiastomo, T. Implementation of the Markov random field for urban land cover classification of UAV VHIR data. Geoplanning J. Geomat. Plan. 2016, 3, 127–136.

- Yang, Y.; Song, F.; Ma, J.; Wei, Z.; Song, L.; Cao, W. Spatial and temporal variation of heat islands in the main urban area of Zhengzhou under the two-way influence of urbanization and urban forestry. PLoS ONE 2022, 17, e0272626.

- Bayomi, N.; Nagpal, S.; Rakha, T.; Fernandez, J.E. Building envelope modeling calibration using aerial thermography. Energy Build. 2020, 233, 110648.

- Rathinam, S.; Kim, Z.; Soghikian, A.; Sengupta, R. Vision Based Following of Locally Linear Structures using an Unmanned Aerial Vehicle. In Proceedings of the 44th IEEE Conference on Decision and Control, Seville, Spain, 15 December 2005; pp. 6085–6090.

- Falorca, J.F.; Lanzinha, J.C.G. Facade inspections with drones–theoretical analysis and exploratory tests. Int. J. Build. Pathol. Adapt. 2020, 39, 235–258.

- Ding, W.; Yang, H.; Yu, K.; Shu, J. Crack detection and quantification for concrete structures using UAV and transformer. Autom. Constr. 2023, 152, 104929.

- Alzarrad, A.; Awolusi, I.; Hatamleh, M.T.; Terreno, S. Automatic assessment of roofs conditions using artificial intelligence (AI) and unmanned aerial vehicles (UAVs). Front. Built Environ. 2022, 8, 1026225.

- Shao, H.; Song, P.; Mu, B.; Tian, G.; Chen, Q.; He, R.; Kim, G. Assessing city-scale green roof development potential using Unmanned Aerial Vehicle (UAV) imagery. Urban For. Urban Green. 2020, 57, 126954.

- Vance, S.J.; Richards, M.E.; Walters, M.C. Evaluation of Roof Leak Detection Utilizing Unmanned Aircraft Systems Equipped with Thermographic Sensors; Construction Engineering Research Laboratory (U.S.): Champaign, IL, USA; The U.S. Army Engineer Research and Development Center (ERDC): Vicksburg, MS, USA, 2018.

- Seo, J.; Duque, L.; Wacker, J. Drone-enabled bridge inspection methodology and application. Autom. Constr. 2018, 94, 112–126.

- Ellenberg, A.; Kontsos, A.; Moon, F.; Bartoli, I. Bridge related damage quantification using unmanned aerial vehicle imagery. Struct. Control. Health Monit. 2016, 23, 1168–1179.

- Duque, L.; Seo, J.; Wacker, J. Synthesis of Unmanned Aerial Vehicle Applications for Infrastructures. J. Perform. Constr. Facil. 2018, 32, 04018046.

- AgEagle Aerial Systems Inc. Drones vs. Traditional Instruments: Corridor Mapping in Turkey. UAVs vs. Classical Surveying. 2015; pp. 1–4. Available online: https://geo-matching.com/articles/corridor-mapping-in-turkey-using-drones-versus-traditional-instruments (accessed on 25 June 2023).

- Nikhil, N.; Shreyas, S.M.; Vyshnavi, G.; Yadav, S. Unmanned Aerial Vehicles (UAV) in Disaster Management Applications. In Proceedings of the 2020 Third International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 20–22 August 2020.

- Nugroho, G.; Taha, Z.; Nugraha, T.S.; Hadsanggeni, H. Development of a Fixed Wing Unmanned Aerial Vehicle (UAV) for Disaster Area Monitoring and Mapping. J. Mechatron. Electr. Power Veh. Technol. 2015, 6, 83–88.

- Gao, Y.; Lyu, Z.; Assilzadeh, H.; Jiang, Y. Small and low-cost navigation system for UAV-based emergency disaster response applications. In Proceedings of the 4th Joint International Symposium on Deformation Monitoring (JISDM), Athens, Greece, 15–17 May 2019; pp. 15–17.

- Suzuki, T.; Miyoshi, D.; Meguro, J.-I.; Amano, Y.; Hashizume, T.; Sato, K.; Takiguchi, J.-I. Real-time hazard map generation using small unmanned aerial vehicle. In Proceedings of the 2008 SICE Annual Conference, Chofu, Japan, 20–22 August 2008.

- Erdelj, M.; Natalizio, E.; Chowdhury, K.R.; Akyildiz, I.F. Help from the Sky: Leveraging UAVs for Disaster Management. IEEE Pervasive Comput. 2017, 16, 24–32.

- Półka, M.; Ptak, S.; Kuziora, Ł. The Use of UAV's for Search and Rescue Operations. Procedia Eng. 2017, 192, 748–752.

- Sheng, T.; Jin, R.; Yang, C.; Qiu, K.; Wang, M.; Shi, J.; Zhang, J.; Gao, Y.; Wu, Q.; Zhou, X.; et al. Unmanned Aerial Vehicle Mediated Drug Delivery for First Aid. Adv. Mater. 2023, 35, e2208648.

- Casado, M.R.; Irvine, T.; Johnson, S.; Palma, M.; Leinster, P. The Use of Unmanned Aerial Vehicles to Estimate Direct Tangible Losses to Residential Properties from Flood Events: A Case Study of Cockermouth Following the Desmond Storm. Remote Sens. 2018, 10, 1548.

- Giordan, D.; Manconi, A.; Remondino, F.; Nex, F. Use of unmanned aerial vehicles in monitoring application and management of natural hazards. Geomat. Nat. Hazards Risk 2016, 8, 1–4.

- Khan, M.A.; Ectors, W.; Bellemans, T.; Janssens, D.; Wets, G. UAV-Based Traffic Analysis: A Universal Guiding Framework Based on Literature Survey. Transp. Res. Procedia 2017, 22, 541–550.

- Cermakova, I.; Komarkova, J. Modelling a process of UAV data collection and processing. In Proceedings of the 2016 International Conference on Information Society (i-Society), Dublin, Ireland, 10–13 October 2016.

- Mohanty, S.N.; Ravindra, J.V.; Narayana, G.S.; Pattnaik, C.R.; Sirajudeen, Y.M. Drone Technology: Future Trends and Practical Applications; Wiley: New Jersey, NJ, USA, 2023.

- Pix4D. Surveying and Mapping. 2023. Available online: https://www.pix4d.com/ (accessed on 10 May 2023).

- DroneDeploy Platform. DroneDeploy. 2023. Available online: https://dronedeploy.com (accessed on 17 April 2023).

- Hartmann, J.; Jueptner, E.; Matalonga, S.; Riordan, J.; White, S. Artificial Intelligence, Autonomous Drones and Legal Uncertainties. Eur. J. Risk Regul. 2022, 14, 31–48.

- Rezwan, S.; Choi, W. Artificial Intelligence Approaches for UAV Navigation: Recent Advances and Future Challenges. IEEE Access 2022, 10, 26320–26339.

- Zhang, S.; Zhuo, L.; Zhang, H.; Li, J. Object Tracking in Unmanned Aerial Vehicle Videos via Multifeature Discrimination and Instance-Aware Attention Network. Remote Sens. 2020, 12, 2646.

- McEnroe, P.; Wang, S.; Liyanage, M. A Survey on the Convergence of Edge Computing and AI for UAVs: Opportunities and Challenges. IEEE Internet Things J. 2022, 9, 15435–15459.

- Hu, L.; Tian, Y.; Yang, J.; Taleb, T.; Xiang, L.; Hao, Y. Ready Player One: UAV-Clustering-Based Multi-Task Offloading for Vehicular VR/AR Gaming. IEEE Netw. 2019, 33, 42–48.

- Flammini, F.; Naddei, R.; Pragliola, C.; Smarra, G. Towards automated drone surveillance in railways: State-of-the-art and future directions. In Advanced Concepts for Intelligent Vision Systems: 17th International Conference, ACIVS 2016, Lecce, Italy, 24–27 October 2016; Springer: Berlin/Heidelberg, Germany, 2016.

- AlMahamid, F.; Grolinger, K. Autonomous Unmanned Aerial Vehicle navigation using Reinforcement Learning: A systematic review. Eng. Appl. Artif. Intell. 2022, 115, 105321.

- Giusti, A.; Guzzi, J.; Ciresan, D.C.; He, F.-L.; Rodriguez, J.P.; Fontana, F.; Faessler, M.; Forster, C.; Schmidhuber, J.; Di Caro, G.; et al. A Machine Learning Approach to Visual Perception of Forest Trails for Mobile Robots. IEEE Robot. Autom. Lett. 2015, 1, 661–667.

- Hummel, K.A.; Pollak, M.; Krahofer, J. A Distributed Architecture for Human-Drone Teaming: Timing Challenges and Interaction Opportunities. Sensors 2019, 19, 1379.

- Kumari, S.; Tripathy, K.K.; Kumbhar, V. Data Science and Analytics; Emerald Publishing Limited: Bingley, UK, 2020.

- Yue, L.; Yang, R.; Zhang, Y.; Yu, L.; Wang, Z. Deep Reinforcement Learning for UAV Intelligent Mission Planning. Complexity 2022, 2022, 3551508.

- Yang, Q.; Zhang, J.; Shi, G.; Hu, J.; Wu, Y. Maneuver Decision of UAV in Short-Range Air Combat Based on Deep Reinforcement Learning. IEEE Access 2020, 8, 363–378.

- Kinaneva, D.; Hristov, G.; Raychev, J.; Zahariev, P. Early Forest Fire Detection Using Drones and Artificial Intelligence. In Proceedings of the 42nd International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 20–24 May 2019.

- Rovira-Sugranes, A.; Razi, A.; Afghah, F.; Chakareski, J. A review of AI-enabled routing protocols for UAV networks: Trends, challenges, and future outlook. Ad Hoc Netw. 2022, 130, 102790.

- Ross, S.; Melik-Barkhudarov, N.; Shankar, K.S.; Wendel, A.; Dey, D.; Bagnell, J.A.; Hebert, M. Learning monocular reactive UAV control in cluttered natural environments. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013.

- Chakravarty, P.; Kelchtermans, K.; Roussel, T.; Wellens, S.; Tuytelaars, T.; Van Eycken, L. CNN-based single image obstacle avoidance on a quadrotor. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017.

- Ditria, E.M.; Buelow, C.A.; Gonzalez-Rivero, M.; Connolly, R.M. Artificial intelligence and automated monitoring for assisting conservation of marine ecosystems: A perspective. Front. Mar. Sci. 2022, 9, 918104.

- Verendel, V. Tracking AI in climate inventions with patent data. Nat. Clim. Change 2021, 13, 40–47.

- Gupta, R.; Goodman, B.; Patel, N.; Hosfelt, R.; Sajeev, S.; Heim, E.; Doshi, J.; Lucas, K.; Choset, H.; Gaston, M. Creating xBD: A dataset for assessing building damage from satellite imagery. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- MacDonald, N.; Howell, G. Killing Me Softly: Competition in Artificial Intelligence and Unmanned Aerial Vehicles. Prism 2020, 8, 102–126.

- Reichstein, M.; Camps-Valls, G.; Stevens, B.; Jung, M.; Denzler, J.; Carvalhais, N.; Prabhat, F. Deep learning and process understanding for data-driven Earth system science. Nature 2019, 566, 195–204.

- Molina, M.J.; O’Brien, T.A.; Anderson, G.; Ashfaq, M.; Bennett, K.E.; Collins, W.D.; Dagon, K.; Restrepo, J.M.; Ullrich, P.A.; Henry, A.J.; et al. A Review of Recent and Emerging Machine Learning Applications for Climate Variability and Weather Phenomena. Artif. Intell. Earth Syst. 2023, 1, 1–46.

- Kontokosta, C.E.; Tull, C. A data-driven predictive model of city-scale energy use in buildings. Appl. Energy 2017, 197, 303–317.

- Puhm, M.; Deutscher, J.; Hirschmugl, M.; Wimmer, A.; Schmitt, U.; Schardt, M. A Near Real-Time Method for Forest Change Detection Based on a Structural Time Series Model and the Kalman Filter. Remote Sens. 2020, 12, 3135.

- Abeywickrama, H.V.; Jayawickrama, B.A.; He, Y.; Dutkiewicz, E. Comprehensive Energy Consumption Model for Unmanned Aerial Vehicles, Based on Empirical Studies of Battery Performance. IEEE Access 2018, 6, 58383–58394.

- Morbidi, F.; Cano, R.; Lara, D. Minimum-energy path generation for a quadrotor UAV. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016.

- Abdilla, A.; Richards, A.; Burrow, S. Power and endurance modelling of battery-powered rotorcraft. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015.

- Rojas-Perez, L.O.; Martinez-Carranza, J. On-board processing for autonomous drone racing: An overview. Integration 2021, 80, 46–59.

- Hassanalian, M.; Abdelkefi, A. Classifications, applications, and design challenges of drones: A review. Prog. Aerosp. Sci. 2017, 91, 99–131.

- Derrouaoui, S.H.; Bouzid, Y.; Guiatni, M.; Dib, I. Comprehensive Review on Reconfigurable Drones: Classification, Characteristics, Design and Control. Unmanned Syst. 2022, 10, 3–29.

- Roseman, C.A.; Argrow, B.M. Weather Hazard Risk Quantification for sUAS Safety Risk Management. J. Atmos. Ocean. Technol. 2020, 37, 1251–1268.

- Gianfelice, M.; Aboshosha, H.; Ghazal, T. Real-time Wind Predictions for Safe Drone Flights in Toronto. Results Eng. 2022, 15, 100534.

- Hu, S.; Mayer, G. Three-dimensional Euler solutions for drone delivery trajectory prediction under extreme environments. Soc. Photo Opt. Instrum. Eng. 2022, 12259, 1185–1190.

- Lin, H.-Y.; Peng, X.-Z. Autonomous Quadrotor Navigation With Vision Based Obstacle Avoidance and Path Planning. IEEE Access 2021, 9, 102450–102459.

- Cheng, C.; Sha, Q.; He, B.; Li, G. Path planning and obstacle avoidance for AUV: A review. Ocean Eng. 2021, 235, 109355.

- Singh, J.; Dhuheir, M.; Refaey, A.; Erbad, A.; Mohamed, A.; Guizani, M. Navigation and Obstacle Avoidance System in Unknown Environment. In Proceedings of the 2020 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), London, ON, Canada, 30 August–2 September 2020.

- Lin, Y.; Saripalli, S. Path planning using 3D Dubins curve for unmanned aerial vehicles. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 296–304.

- Zhao, Y.; Zheng, Z.; Zhang, X.; Liu, Y. Q learning algorithm based UAV path learning and obstacle avoidence approach. In Proceedings of the 2017 36th Chinese Control Conference (CCC), Dalian, China, 26–28 July 2017; pp. 3397–3402.

- Hou, X.; Liu, F.; Wang, R.; Yu, Y. A UAV dynamic path planning algorithm. In Proceedings of the 2020 35th Youth Academic Annual Conference of Chinese Association of Automation (YAC), Zhanjiang, China, 16–18 October 2020; pp. 127–131.

- Labib, N.S.; Brust, M.R.; Danoy, G.; Bouvry, P. The Rise of Drones in Internet of Things: A Survey on the Evolution, Prospects and Challenges of Unmanned Aerial Vehicles. IEEE Access 2021, 9, 115466–115487.

- FAA. Small Unmanned Aircraft Systems (UAS) Regulations (Part 107). 2023. Available online: https://www.faa.gov/newsroom/small-unmanned-aircraft-systems-uas-regulations-part-107 (accessed on 22 August 2023).

- Krichen, M.; Adoni, W.Y.H.; Mihoub, A.; Alzahrani, M.Y.; Nahhal, T. Security Challenges for Drone Communications: Possible Threats, Attacks and Countermeasures. In Proceedings of the 2022 2nd International Conference of Smart Systems and Emerging Technologies (SMARTTECH), Riyadh, Saudi Arabia, 9–11 May 2022; pp. 184–189.

- Vattapparamban, E.; Güvenç, I.; Yurekli, A.I.; Akkaya, K.; Uluaǧaç, S. Drones for smart cities: Issues in cybersecurity, privacy, and public safety. In Proceedings of the 2016 International Wireless Communications and Mobile Computing Conference (IWCMC), Paphos, Cyprus, 5–9 September 2016; pp. 216–221.

- Lv, Z.; Li, Y.; Feng, H.; Lv, H. Deep Learning for Security in Digital Twins of Cooperative Intelligent Transportation Systems. IEEE Trans. Intell. Transp. Syst. 2021, 23, 16666–16675.

- Zhang, L.; Gao, T.; Cai, G.; Hai, K.L. Research on electric vehicle charging safety warning model based on back propagation neural network optimized by improved gray wolf algorithm. J. Energy Storage 2022, 49, 104092.

- Cao, B.; Fan, S.; Zhao, J.; Tian, S.; Zheng, Z.; Yan, Y.; Yang, P. Large-Scale Many-Objective Deployment Optimization of Edge Servers. IEEE Trans. Intell. Transp. Syst. 2021, 22, 3841–3849.

- Mohsan, S.A.H.; Khan, M.A.; Alsharif, M.H.; Uthansakul, P.; Solyman, A.A.A. Intelligent Reflecting Surfaces Assisted UAV Communications for Massive Networks: Current Trends, Challenges, and Research Directions. Sensors 2022, 22, 5278.

- Wan, S.; Lu, J.; Fan, P.; Letaief, K.B. To Smart City: Public Safety Network Design for Emergency. IEEE Access 2017, 6, 1451–1460.

- Ko, Y.; Kim, J.; Duguma, D.G.; Astillo, P.V.; You, I.; Pau, G. Drone Secure Communication Protocol for Future Sensitive Applications in Military Zone. Sensors 2021, 21, 2057.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bayomi, N.; Fernandez, J.E. Eyes in the Sky: Drones Applications in the Built Environment under Climate Change Challenges. Drones 2023, 7, 637. https://doi.org/10.3390/drones7100637