1. Introduction

Undoubtedly, the ever-growing global energy demand, in conjunction with the immaturity of carbon-free energy sources, has promoted natural gas as the transition fuel [1,2] until the net-zero target [3] is reached. As a result, gas hydrocarbon reservoirs that have traditionally been ignored because of the limited quality of their organic content are now considered potential candidates. A typical example is reservoirs containing CO2- and H2S-rich fluids, which lead to the production of sour surface gas streams. Prior to its transportation and market release, the sour gas must undergo selective removal of the acid components, i.e., H2S and CO2, because of the high toxicity of the former [4] and the corrosive nature and contribution to the greenhouse effect [5,6] of the latter. This separation process, known as gas sweetening, takes place in the amine unit (AU) and results in an acid-free sweet gas stream and a second, waste acid gas stream, which is extremely flammable and explosive and contains significant amounts of H2S and CO2. To protect the environment from toxic and greenhouse gases, this waste stream must be handled properly.

Typically, the waste acid gas stream is driven into a Claus Sulfur Recovery (CSR) unit, where elemental sulfur from gaseous H

2S is recovered [

7,

8,

9,

10]. However, due to declining global demand for elemental sulfur [

11] as well as increasingly stringent emission standards [

12], alternative ways of handling the acid gas are sought after, with the most straightforward application being the stream injection into the reservoir [

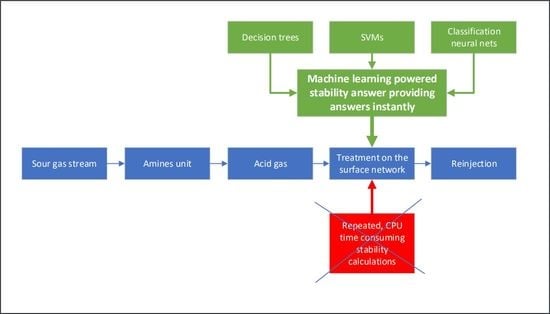

13]. This process is illustrated in

Figure 1 and can be described by the following steps. Firstly, the fluids collected from all wellheads contributing to production are flowed to a separator where complete liquid–gas separation occurs at predetermined pressure and temperature conditions [

14]. The resulting sour gas stream is then led to a drying unit where most of the water content is removed [

15]. Subsequently, this dried sour gas is directed towards the AU plant where, in contact with an amine solution, an absorption process enables the sweet components to pass through, while maintaining the acid components in the water phase [

16,

17]. Ultimately, conforming to pipeline and market specifications for low acid gas content [

18], the sweet gas is driven to the sales point. The remaining acid gas-saturated amine solvent is regenerated by heating the aqueous solution and the water-saturated acid gas stream exits the regenerator unit at 35 to 70 kPa to be subsequently cooled and compressed at suitable pressure stages [

19]. Finally, the acid gas stream is driven through pipelines to the wellheads [

13] where it is injected selectively back to the reservoir.

The study and development of an acid gas injection scheme requires a thorough estimation of the fluid’s complex phase equilibria [13,20]. However, the literature provides limited experimental data on the phase behavior and physical properties of acid gas mixtures [21,22,23,24]. Therefore, the phase behavior and physical properties must be evaluated using computational models over a very wide range of conditions, from near-atmospheric ones, to those prevailing at the surface processing plant and transportation network, and eventually to those encountered in the wellbore and the reservoir. Additionally, the phase state varies along the reinjection process: gas at the AU outlet, liquid in the transportation network, and supercritical in the wellbore. In the wellbore, the acid gas must be injected in a supercritical state to ensure high density and the absence of gas bubbles, which could lead to severe erosion, and to prevent adverse permeability effects [20,23]. On top of these concerns, the compositional variability of the injected gas stream, as influenced by the production planning of the field, must also be considered. This variability arises from the commingling of fluids originating from different parts of the reservoir, which differ in the concentration of their acid components [25].

To study the acid gas stream flow from the AU outlet to the reservoir, the differential equations accounting for the conservation of mass, momentum and energy need to be solved. Commonly, pipelines and wellbores are discretized into 1D elements within which the conservation equations are converted to sets of algebraic equations and solved iteratively. The number and properties of the flowing phases need to be determined at each discretization block, for all iterations until convergence, and for every time step [26]. In compositional simulation, the standard way to answer the phase state question is to run a phase stability test, which determines the number of phases present in the flowing fluid at the prevailing pressure and temperature conditions [27,28,29], i.e., whether the fluid lies inside or outside the phase envelope, as shown in Figure 2. Although the algorithm is straightforward, a highly non-linear optimization problem needs to be solved to obtain the number of phases, and this accounts for a large share of the total simulation CPU time. The cost stems from the iterative nature of the optimization problem as well as from the implemented EoS model, which may range from a conventional cubic EoS to much more complex and expensive models such as the Cubic Plus Association (CPA) [30,31]. Since speed is a critical concern in current compositional flow simulators, accelerating stability calculations without too much compromise in accuracy and reliability is an active research topic in both academia and industry [32,33,34].
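
For orientation, the optimization problem behind a stability test is usually written as a tangent plane distance (TPD) criterion. The block below is a sketch of that standard formulation, not necessarily the exact form used later in this paper; the symbols are assumptions introduced here: z is the feed composition, w a trial-phase composition, N the number of components, and φ_i the fugacity coefficient of component i supplied by the chosen EoS at the prevailing p and T.

```latex
% Reduced tangent plane distance (divided by RT) for a trial composition w
\mathrm{TPD}(\mathbf{w}) \;=\; \sum_{i=1}^{N} w_i \left[ \ln w_i + \ln \varphi_i(\mathbf{w}) - \ln z_i - \ln \varphi_i(\mathbf{z}) \right]

% The feed z is stable (single phase) at the given p and T if and only if
\mathrm{TPD}(\mathbf{w}) \;\ge\; 0 \quad \text{for all } \mathbf{w} \text{ with } w_i \ge 0,\ \sum_{i=1}^{N} w_i = 1
```

Every evaluation of the fugacity coefficients calls the EoS, and the minimization over w is iterative, which is why the cost grows with the complexity of the EoS model.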

In the Machine Learning (ML) context, classification is a process that assigns a given object (pattern) to a class (target, label, or category) [35]. In its simplest case, the classification problem is binary with the assigned class being on/off, high/low etc., although multiclass problems can be handled as well. During its training against a dataset, the classifier learns the decision boundary between the classes using ML algorithms that aim at minimizing the misclassification error [36]. This dataset is referred to as the training dataset and includes several samples together with the desired class for each sample, in what is known as a supervised learning scheme. The decision boundary is often a parametric expression of the input features, and the optimal values of the parameters are obtained through the training process. The classifier’s ability to correctly map input data to a specific category is evaluated on previously “unseen” test data, which have not been used during training. Special attention should be paid to the classifier’s complexity, which must be adjusted to optimize the model’s generalization capability and to avoid overtrained complex models that exhibit flawless classification results on the training set but not on new data (overfitting) [37]. Therefore, the tradeoff between a highly complicated structure that is prone to overfitting and a simplistic structure that produces poor classification results on novel observation samples (underfitting) must be optimized.
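
The training/test distinction and the overfitting risk can be illustrated with a minimal sketch. It uses a k-nearest-neighbour classifier on a synthetic, deliberately noisy two-class dataset; both the classifier and the data are assumptions chosen for brevity and are not among the models or data studied in this paper. With k = 1 the model reproduces the training labels exactly, including the noise, whereas a larger k gives a smoother decision boundary.

```python
import random

def make_noisy_data(n, flip_prob, rng):
    """Points in the unit square; class 1 lies above the diagonal.
    A fraction flip_prob of the labels is flipped to emulate noise."""
    data = []
    for _ in range(n):
        x, y = rng.random(), rng.random()
        label = 1 if y > x else 0
        if rng.random() < flip_prob:
            label = 1 - label
        data.append(((x, y), label))
    return data

def knn_predict(train, point, k):
    """Majority vote among the k nearest training samples."""
    def sq_dist(sample):
        (sx, sy), _ = sample
        return (sx - point[0]) ** 2 + (sy - point[1]) ** 2
    nearest = sorted(train, key=sq_dist)[:k]
    votes = sum(label for _, label in nearest)
    return 1 if 2 * votes > k else 0

def accuracy(train, samples, k):
    """Fraction of samples whose predicted class matches the stored label."""
    hits = sum(knn_predict(train, p, k) == label for p, label in samples)
    return hits / len(samples)

rng = random.Random(0)
train_set = make_noisy_data(200, 0.15, rng)   # used to fit the classifier
test_set = make_noisy_data(200, 0.15, rng)    # never seen during training

for k in (1, 15):
    print(f"k={k:2d}  train acc={accuracy(train_set, train_set, k):.3f}  "
          f"test acc={accuracy(train_set, test_set, k):.3f}")
```

The k = 1 model scores perfectly on the training set because each training point is its own nearest neighbour, so only the test-set score says anything about generalization.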

Clearly, the phase stability problem can be mapped to a two-class classification problem, with the two classes corresponding to stable/unstable or, equivalently, to single-phase/two-phase flow. Therefore, ML classification techniques can be used to generate accurate and rapid predictions of the number of phases present in various acid gas instances. The input features are the acid gas composition, pressure, p, and temperature, T [38]. The exact phase boundaries in the p-T phase diagrams represent the decision boundaries that need to be learned and accurately reproduced by the classifier (Figure 2). Once such a machine has been trained, it can be directly incorporated into the flow simulator, fully replacing the conventional, iterative, time-consuming stability algorithm and thus significantly accelerating the flow simulation.
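
The workflow described above can be sketched as follows. Everything here is illustrative: `toy_stability_test` is a made-up closed-form envelope standing in for the iterative EoS-based test (it is not a physical model), the feature ranges are hypothetical, and the classifier is a small hand-written decision tree grown until its leaves are pure. The point is only the pattern: label sampled (composition, p, T) points with the reference test once, train a classifier, then call the classifier instead of the reference test.

```python
import random

def toy_stability_test(z_co2, p, T):
    """Toy stand-in for the iterative stability test (NOT a physical model).
    Returns 1 for two-phase (unstable), 0 for single-phase (stable)."""
    t_crit = 300.0 + 60.0 * (1.0 - z_co2)   # hypothetical critical temperature, K
    if T >= t_crit:
        return 0
    p_dew = 10.0 + 0.4 * (T - 220.0)        # hypothetical dew-point pressure, bar
    p_bub = p_dew + 0.5 * (t_crit - T)      # hypothetical bubble-point pressure, bar
    return 1 if p_dew < p < p_bub else 0

def gini(samples):
    """Gini impurity of a set of binary-labelled samples."""
    frac = sum(label for _, label in samples) / len(samples)
    return 2.0 * frac * (1.0 - frac)

def best_split(samples):
    """Axis-aligned split (feature index, threshold) with the lowest weighted impurity."""
    best = None
    for j in range(3):
        values = sorted({x[j] for x, _ in samples})
        for lo, hi in zip(values, values[1:]):
            thr = 0.5 * (lo + hi)
            left = [s for s in samples if s[0][j] <= thr]
            right = [s for s in samples if s[0][j] > thr]
            if not left or not right:
                continue
            score = len(left) * gini(left) + len(right) * gini(right)
            if best is None or score < best[0]:
                best = (score, j, thr)
    return best

def build_tree(samples):
    """Grow the tree until every leaf holds a single class."""
    labels = {label for _, label in samples}
    if len(labels) == 1:
        return labels.pop()
    split = best_split(samples)
    if split is None:  # identical points with mixed labels: majority vote
        ones = sum(label for _, label in samples)
        return 1 if 2 * ones >= len(samples) else 0
    _, j, thr = split
    left = [s for s in samples if s[0][j] <= thr]
    right = [s for s in samples if s[0][j] > thr]
    return (j, thr, build_tree(left), build_tree(right))

def predict(tree, x):
    """Walk from the root to a leaf; a leaf is a plain 0/1 label."""
    while isinstance(tree, tuple):
        j, thr, left, right = tree
        tree = left if x[j] <= thr else right
    return tree

rng = random.Random(1)

def sample(n):
    """Draw n random (z_co2, p, T) points and label them with the reference test."""
    out = []
    for _ in range(n):
        x = (rng.random(), rng.uniform(1.0, 150.0), rng.uniform(220.0, 400.0))
        out.append((x, toy_stability_test(*x)))
    return out

train, test = sample(300), sample(300)
tree = build_tree(train)
test_acc = sum(predict(tree, x) == y for x, y in test) / len(test)
print(f"accuracy on unseen toy points: {test_acc:.3f}")
```

Inside a flow simulator, `predict(tree, (z_co2, p, T))` would take the place of the call to the reference stability routine in each discretization block; any misclassified points are expected to cluster near the toy envelope, where the label changes.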

Various applications of similar proxy modeling have appeared in the literature. Such models have been developed by means of soft computing tools, varying from classic statistical methods to high-end ML approaches. Water-Alternating-Gas procedures and the Box–Behnken design have been optimized by means of such methods to establish Enhanced Recovery [39,40,41]. Moreover, full-scale reservoir simulation of hydrocarbon recovery or CO2 injection for storage purposes has also been drastically accelerated by means of proxy modeling using neural networks [42,43]. Similarly, proxy modeling by means of ML has been applied to accelerate turbulent multiphase flow simulations [44], whereas vast acceleration of condensate gas reservoir simulation has been reported [45]. Fault detection in complex NGL fractionation processes has also been treated [46].

In this paper, we investigate the applicability of ML techniques to phase behavior classification, with the purpose of dealing more efficiently with the complexity and computational cost of phase equilibrium calculations in acid gas flow simulation. Various classification models from the ML field have been tested to identify the best architecture, one that combines a low error rate with fast predictions on new data.

The paper is laid out as follows: Section 2 discusses the materials and methods used in this work, including the conventional stability algorithm and its CPU time needs when complex Equation of State (EoS) models are used, how phase stability can be mapped to a classification problem, and the classification techniques investigated, namely Decision Trees (DTs), Support Vector Machines (SVMs), and Neural Network (NN)-based classifiers. Section 3 describes how the training data were generated, explains all data treatment techniques employed, and discusses the results obtained for each classification model, as well as the special techniques used to further accelerate computations. Conclusions are presented in Section 4.

4. Conclusions

New solutions are required in humanity’s endeavor to handle the current climate change crisis while ensuring sufficient global energy supply. Although natural gas is part of the solution given the limited maturity of renewable energy sources, acid gas often appears as a byproduct that needs to be treated because of its environmental and health impact. For that purpose, operation of acid gas reservoirs involves gas reinjection, a flow procedure that is commonly simulated by means of extremely time-consuming phase equilibria calculations, among them binary stability calculations. In this work, classification models from the ML field such as DTs, SVMs, and classification NNs were introduced in an attempt to drastically reduce calculation time at minor or even no loss of accuracy.

It was shown that the generated dataset covering the pressure, temperature, and composition ranges encountered in an acid gas reservoir and surface facilities is noiseless and well defined, thus allowing for good training results. Among the classification models examined, NNs exhibited by far the best performance, with the lowest total misclassification rate on the testing data and with misclassifications located practically on the phase boundary, thus having an insubstantial effect on the flow simulation. The CPU time cost to get a stability prediction using the developed classifiers was shown to be orders of magnitude less than that of a conventional, iterative stability calculation. Finally, it was shown that calculations can be further accelerated by limiting the operating space to the p-T boundaries that optimally enclose the instability area, and by further splitting the operating range into subdomains with a separate, small model built for each region.
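
The two acceleration ideas in the last sentence can be sketched as a simple dispatcher. This is a hypothetical illustration, not the implementation used in this work: the class name, the bounding-box limits, the temperature breakpoints, and the stub models are all assumptions. Points outside the p-T box that encloses the instability area are declared single-phase without evaluating any model, and points inside are routed to the small model responsible for their temperature subdomain.

```python
from bisect import bisect_right

class SubdomainClassifier:
    """Routes a stability query to one small model per temperature subdomain."""

    def __init__(self, p_bounds, t_bounds, t_edges, models):
        # t_edges holds the interior temperature breakpoints, in ascending order,
        # so there must be exactly one more model than breakpoints.
        if len(models) != len(t_edges) + 1:
            raise ValueError("need len(t_edges) + 1 models")
        self.p_bounds = p_bounds
        self.t_bounds = t_bounds
        self.t_edges = list(t_edges)
        self.models = list(models)

    def predict(self, z, p, T):
        """Return 1 for two-phase (unstable), 0 for single-phase (stable)."""
        p_min, p_max = self.p_bounds
        t_min, t_max = self.t_bounds
        # Outside the box enclosing the instability area: no model call needed.
        if not (p_min <= p <= p_max and t_min <= T <= t_max):
            return 0
        idx = bisect_right(self.t_edges, T)   # index of the subdomain containing T
        return self.models[idx](z, p, T)

# Stub models that record which subdomain was queried (placeholders for trained classifiers).
calls = []

def make_stub(name, answer):
    def model(z, p, T):
        calls.append(name)
        return answer
    return model

clf = SubdomainClassifier(
    p_bounds=(10.0, 90.0),        # hypothetical pressure limits, bar
    t_bounds=(220.0, 340.0),      # hypothetical temperature limits, K
    t_edges=[260.0, 300.0],       # hypothetical subdomain breakpoints, K
    models=[make_stub("cold", 1), make_stub("mid", 1), make_stub("warm", 0)],
)

print(clf.predict([0.7, 0.3], 120.0, 280.0))  # outside the box -> 0, no model evaluated
print(clf.predict([0.7, 0.3], 50.0, 280.0))   # inside the box -> handled by the "mid" stub
print(calls)                                  # -> ['mid']
```

The bounding-box check costs a few comparisons, so queries far from the envelope are answered almost for free, while each subdomain model only has to represent a short segment of the phase boundary.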

In conclusion, ML was shown to be a highly effective way of generating proxy models that accelerate stability calculations in acid gas flow simulations. Process engineers will thus be able to run more complex scenarios and offer a more detailed, more versatile, and less expensive system design.