Machine Learning in Computer Engineering Applications

A topical collection in Applied Sciences (ISSN 2076-3417). This collection belongs to the section "Computing and Artificial Intelligence".

Editor

Interests: computer engineering; electrical engineering; machine learning; modelling and monitoring of industrial objects and processes; time series prediction; signal, image and video processing; text processing and analysis; applied computational intelligence


Topical Collection Information

Dear Colleagues,

Machine learning is a dynamically developing branch of artificial intelligence with a wide range of applications. In particular, it has become an indispensable part of computer systems that deal with complex problems that are difficult or infeasible to solve with conventional algorithms.

In computational intelligence, machine learning algorithms are used to build models of systems or processes that are based on experimental data and offer good generalization properties. This means that such data-driven models can be applied to make reliable predictions or decisions for new data that were not available during the learning process. At present, machine learning is an important element of intelligent computer systems with numerous applications in engineering, medicine, economics, education, and other fields.

The aim of this Topical Collection is to provide a comprehensive appraisal of innovative applications of machine learning algorithms in computer engineering that employ novel approaches and methods, including deep learning, hybrid models, multimodal data fusion, etc.

This Topical Collection will focus on the applications of machine learning in different fields of computer engineering. Topics of interest include but are not limited to the following:

- Machine learning and decision making in engineering and economics;

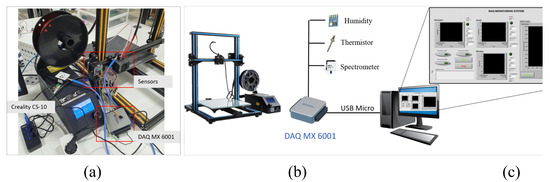

- Intelligent sensors and systems in machine vision and control;

- Machine learning methods for process monitoring and prediction;

- Pattern recognition in medical diagnosis;

- Big data analysis;

- Natural language processing;

- AI-based efficient energy management;

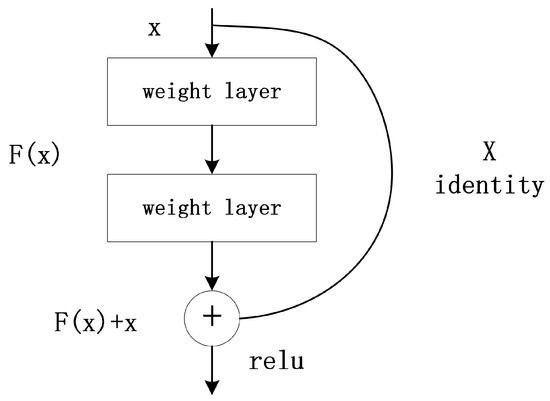

- Deep learning architectures;

- Data-driven models;

- Machine learning in different applications.

Prof. Dr. Lidia Jackowska-Strumillo

Collection Editor

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once registered, authors can proceed to the submission form. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as accepted) and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 250 words) can be sent to the Editorial Office for assessment.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for manuscript submission is available on the Instructions for Authors page. Applied Sciences is an international peer-reviewed open access semimonthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript. The Article Processing Charge (APC) for publication in this open access journal is 2400 CHF (Swiss Francs). Submitted papers should be well formatted and written in good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Keywords

- Machine learning

- Deep learning

- Data-driven models

- Artificial neural networks

- Computer engineering

- Intelligent systems

- Computational intelligence