Abstract

Many interesting rare events in molecular systems, such as ligand association, protein folding, or conformational changes, occur on timescales that are often not accessible by direct numerical simulation. Therefore, rare event approximation approaches such as interface sampling, Markov state model building, or advanced reaction coordinate-based free energy estimation have recently attracted considerable attention. In this article we analyze the reliability of such approaches: how precise is an estimate of long relaxation timescales of molecular systems resulting from various forms of rare event approximation methods? Our results give a theoretical answer to this question by relating it to the transfer operator approach to molecular dynamics. In doing so, we also reveal deep connections between the different approaches.

1. Introduction

The problem of accurately estimating long relaxation timescales associated with rare events in molecular dynamics, such as ligand association, protein folding, or conformational changes, has recently attracted a lot of attention. Often, these timescales are not accessible by direct numerical simulation. Therefore, different discrete coarse graining approaches for their approximation, such as Markov state model (MSM) building [1,2] or time-lagged independent component analysis (TiCA) [3,4], have been introduced and successfully applied to various molecular systems [5,6]. These approaches are based on finite-dimensional Galerkin discretization [1] or variational approximation [7,8] of the transfer operator of the molecular dynamics process [9]. Several theoretical studies have analyzed the approximation error of these numerical techniques with respect to the longest relaxation timescales, resulting in error estimates in terms of the dominant eigenvalues of the transfer operator [3,9]. In this article we first show how to obtain similar error estimates when the transfer operator is replaced by its associated infinitesimal generator [10]. Furthermore, the analysis reveals that the different approaches are deeply connected: in the end, they lead to an identical numerical problem.

In addition to these discrete coarse graining approaches, the literature contains various alternative reaction coordinate sampling approaches aimed at approximating very long relaxation processes. In these sampling approaches, one assumes that the effective dynamical behavior of the system on long timescales can be described by a relatively low-dimensional object given by some reaction coordinates.
Examples include advanced methods such as umbrella sampling [11,12], metadynamics [13,14], blue moon sampling [15], the adaptive biasing force method [16], or temperature-accelerated molecular dynamics (TAMD) [17], as well as trajectory-based techniques like milestoning [18], transition interface sampling [19], or forward flux sampling [20]. These methods yield free energy barriers, transition rates, or mean first passage times for the rare events of interest; they are complemented by several approaches to the effective dynamics in reaction coordinate space [21,22,23] that allow for significantly faster simulation of these rare events [24,25,26], including details of the underlying molecular mechanisms. Surprisingly, our analytic tools, originally developed for discrete coarse graining approaches, can also be used to evaluate the approximation quality of reaction coordinate sampling approaches to the effective dynamics. We derive an explicit error estimate for the longest timescale resulting from the choice of specific reaction coordinates.

Estimating the approximation quality is, however, not the only use of the analytical insights presented in this article. We also demonstrate how the new techniques for simulating the effective dynamics can be used for efficient MSM building or TiCA applications.

Mathematically, the article is based on the analysis of the dominant timescales of reversible and ergodic diffusion processes in energy landscapes. The leading eigenvalues of the transfer operator (or, equivalently, of the infinitesimal generator) and the corresponding eigenfunctions characterize the dynamical behavior of the process on long timescales [9,27]. Firstly, the approximation error with respect to these leading eigenvalues under discretization of the transfer operator has been discussed in several articles, cf. [3,7,8,28,29,30]. Following this work, we characterize the approximation quality for the (low-lying) eigenvalues of the infinitesimal generator. This allows us to study the connection between the effective dynamics considered in [23] and Galerkin discretization schemes for the transfer operator. Secondly, following [7,8], we study the variational approach for the infinitesimal generator; we will see that this approach leads to the same generalized matrix eigenproblem as Galerkin discretization. Thirdly, we discuss numerical issues related to estimating the coefficient matrices by means of the effective dynamics.

The paper is organized as follows. In Section 2, we introduce the various operators associated with reversible diffusion processes and discuss the relation between eigenvalues and relaxation timescales. Next, in Section 3, we study the Galerkin discretization of generators/transfer operators for solving the eigenproblem and show that previous results can be extended to reaction coordinate subspaces. In Section 4, the variational approach to the approximation of the eigenproblem is considered, and its relation to the Galerkin approach is worked out in detail. Then, in Section 5, we discuss numerical issues related to estimating the discretization matrices by simulating the effective dynamics for given reaction coordinates; the performance of this approach is studied numerically in Section 6. Finally, conclusions and some further remarks are given in Section 7. After reading Section 2, readers who are more interested in numerical and algorithmic aspects than in detailed mathematical analysis can skip Sections 3 and 4 on first reading and proceed directly to Sections 5 and 6.

2. Diffusion Process and the Associated Operators

We consider a diffusion process given by the stochastic differential equation (SDE)

$$dx(s) = -\nabla V\big(x(s)\big)\,ds + \sqrt{2\beta^{-1}}\,dw(s),\qquad x(0) = x, \tag{1}$$

where $x(s)\in\mathbb{R}^n$ is the state at time $s\ge0$, the parameter $\beta>0$ is related to the inverse of the system's temperature, and $w(s)$ is an $n$-dimensional Brownian motion. $V:\mathbb{R}^n\to\mathbb{R}$ is a potential function, which is assumed to be smooth and bounded from below. The results presented below can be extended to more general reversible diffusion processes with a state-dependent noise intensity matrix, cf. [23]. For simplicity of presentation, however, we restrict our considerations to the specific case (1) typically studied in molecular dynamics.
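In practice, (1) is simulated with a time-stepping scheme. As a minimal sketch, assuming a one-dimensional double-well potential $V(x) = (x^2-1)^2$ and the Euler–Maruyama scheme (both illustrative choices, not taken from this article), an ensemble of trajectories can be propagated as follows. After a long time, the ensemble statistics should approach those of the Gibbs density $e^{-\beta V}/Z$.

```python
import numpy as np


def grad_V(x):
    """Gradient of the illustrative double-well potential V(x) = (x^2 - 1)^2."""
    return 4.0 * x * (x**2 - 1.0)


def euler_maruyama(x0, beta, dt, n_steps, rng):
    """Propagate an ensemble of walkers with dx = -V'(x) ds + sqrt(2/beta) dw.

    Returns the ensemble after n_steps Euler-Maruyama steps of size dt.
    """
    x = np.array(x0, dtype=float)
    noise_scale = np.sqrt(2.0 * dt / beta)
    for _ in range(n_steps):
        x = x - grad_V(x) * dt + noise_scale * rng.standard_normal(x.shape)
    return x


rng = np.random.default_rng(0)
beta = 1.0
# 2000 walkers, all started in the left well at x = -1, simulated up to time 10.
x_final = euler_maruyama(np.full(2000, -1.0), beta, dt=1e-3, n_steps=10_000, rng=rng)

# Reference value of E_pi[x^2] for the density exp(-beta V) / Z, computed by quadrature.
grid = np.linspace(-3.0, 3.0, 6001)
weights = np.exp(-beta * (grid**2 - 1.0) ** 2)
ref_x2 = np.sum(grid**2 * weights) / np.sum(weights)

print(f"ensemble <x^2> = {np.mean(x_final**2):.3f}, Gibbs <x^2> = {ref_x2:.3f}")
print(f"fraction in right well = {np.mean(x_final > 0):.3f}")
```

The time step is chosen small relative to the curvature of $V$ in the region visited by the walkers; for stiffer potentials a smaller step would be needed.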

The infinitesimal generator of the dynamics (1) is given by

$$\mathcal{L}f = -\nabla V\cdot\nabla f + \beta^{-1}\Delta f. \tag{2}$$

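It is known that, under mild conditions on $V$, the solution process of (1) is ergodic [31], and its unique invariant measure is $d\pi(x) = \pi(x)\,dx$, where

$$\pi(x) = \frac{1}{Z}e^{-\beta V(x)},\qquad Z = \int_{\mathbb{R}^n}e^{-\beta V(x)}\,dx. \tag{3}$$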

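We introduce the Hilbert space $H = L^2_\pi(\mathbb{R}^n)$, which is endowed with the inner product

$$\langle f,g\rangle_\pi = \int_{\mathbb{R}^n}f(x)\,g(x)\,d\pi(x),\qquad f,g\in H, \tag{4}$$

and the norm $\|f\|_\pi = \langle f,f\rangle_\pi^{1/2}$, $f\in H$. The domain of the operator $\mathcal{L}$ will be denoted by $D(\mathcal{L})$.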

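It is also known that the process is reversible and that $\mathcal{L}$ is self-adjoint with respect to the inner product (4). Whenever the potential $V$ grows sufficiently fast at infinity, the spectrum of $\mathcal{L}$ is discrete [9]. Let $\lambda_i$ and $\varphi_i$, $i = 0,1,2,\ldots$, be the eigenvalues and the corresponding (normalized) eigenfunctions of $-\mathcal{L}$, that is, the solutions of the eigenproblem

$$-\mathcal{L}\varphi_i = \lambda_i\varphi_i \tag{5}$$

in $H$, or, in weak form,

$$\beta^{-1}\langle\nabla\varphi_i,\nabla\psi\rangle_\pi = \lambda_i\langle\varphi_i,\psi\rangle_\pi,\qquad\forall\,\psi\in D(\mathcal{L}). \tag{6}$$

Due to the self-adjointness of $\mathcal{L}$ and the fact that

$$\langle -\mathcal{L}f,f\rangle_\pi = \beta^{-1}\int_{\mathbb{R}^n}|\nabla f|^2\,d\pi\ \ge 0,\qquad f\in D(\mathcal{L}), \tag{7}$$

we can assume that the eigenvalues are real and ordered as

$$0 = \lambda_0 < \lambda_1\le\lambda_2\le\cdots, \tag{8}$$

with $\varphi_0\equiv1$.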

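Given $s\ge0$, we define the operator $T_s$ by

$$T_sf(x) = \mathbf{E}_x\big[f(x(s))\big],\qquad f\in H, \tag{9}$$

where $\mathbf{E}_x$ denotes the expectation taken with respect to the paths of (1) under the initial condition $x(0) = x$. It is well known that $u(x,s) = T_sf(x)$ is the solution of the Kolmogorov backward equation

$$\partial_s u = \mathcal{L}u,\qquad u(x,0) = f(x), \tag{10}$$

that is, the operators $T_s$, $s\ge0$, form a one-parameter semigroup whose infinitesimal generator is $\mathcal{L}$; therefore, they are self-adjoint in $H$ as well. Because of Equation (10), the formal expression $T_s = e^{s\mathcal{L}}$ is often used in the literature. Similarly to (8), the eigenvalues of $T_s$, $s>0$, are given by

$$1 = e^{-\lambda_0 s} > e^{-\lambda_1 s}\ge e^{-\lambda_2 s}\ge\cdots, \tag{11}$$

with the same eigenfunctions $\varphi_i$, $i\ge0$.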

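In the following we introduce another operator, the transfer operator, which has been considered extensively in the literature to investigate the metastability of molecular systems and to build Markov state models (MSMs) [1,6,9]. Fix a lag time $\tau>0$, and let $p^\tau(x,y)$ be the transition density function of the process (1), i.e., $p^\tau(x,y)$ is the probability density of arriving at state $y$ after time $\tau$ when starting from state $x$ at time $0$. For a bounded and continuous function $u$, the transfer operator $\mathcal{T}_\tau$ is defined by [1,27,32]

$$\mathcal{T}_\tau u(y) = \frac{1}{\pi(y)}\int_{\mathbb{R}^n}p^\tau(x,y)\,u(x)\,\pi(x)\,dx. \tag{12}$$

Since the process is reversible, the detailed balance condition $\pi(x)p^\tau(x,y) = \pi(y)p^\tau(y,x)$ holds, and it follows immediately from (12) that

$$\mathcal{T}_\tau u(y) = \int_{\mathbb{R}^n}p^\tau(y,x)\,u(x)\,dx = \mathbf{E}_y\big[u(x(\tau))\big], \tag{13}$$

which implies $\mathcal{T}_\tau = T_\tau$, i.e., the transfer operator coincides with the operator $T_\tau$, a member of the semigroup $\{T_s\}_{s\ge0}$. Denote the eigenvalues of $\mathcal{T}_\tau$ by $\nu_i$, $i\ge0$, such that

$$1 = \nu_0 > \nu_1\ge\nu_2\ge\cdots.$$

Then, from the discussion above and the eigenvalues of $T_s$ in (11), we conclude that $\nu_i = e^{-\lambda_i\tau}$ and that the corresponding eigenfunctions are the eigenfunctions $\varphi_i$ of the infinitesimal generator $\mathcal{L}$. These eigenvalues and eigenfunctions encode crucial timescale information about the dynamical system. Specifically, the relaxation timescales of the dynamics (1) are given by [10]

$$t_i = \frac{1}{\lambda_i} = -\frac{\tau}{\ln\nu_i},\qquad i\ge1.$$

This means that the dominant relaxation timescales of the dynamics (1) can be obtained by computing the dominant eigenvalues of $-\mathcal{L}$ (or, equivalently, of $T_s$ or $\mathcal{T}_\tau$), cf. [10,27].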
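To make the relation between eigenvalues and timescales concrete, the following sketch converts hypothetical generator eigenvalues into relaxation timescales, both directly via $t_i = 1/\lambda_i$ and via the transfer operator eigenvalues $\nu_i = e^{-\lambda_i\tau}$. The numbers are made up for illustration.

```python
import numpy as np


def timescales_from_generator(lams):
    """Relaxation timescales t_i = 1 / lambda_i for nonzero generator eigenvalues."""
    return 1.0 / np.asarray(lams, dtype=float)


def timescales_from_transfer(nus, tau):
    """Implied timescales t_i = -tau / ln(nu_i) for transfer operator eigenvalues in (0, 1)."""
    return -tau / np.log(np.asarray(nus, dtype=float))


lams = np.array([0.05, 0.4, 2.0])  # hypothetical eigenvalues lambda_1, lambda_2, lambda_3
tau = 0.5                          # hypothetical lag time
nus = np.exp(-lams * tau)          # nu_i = exp(-lambda_i * tau)

t_gen = timescales_from_generator(lams)
t_tr = timescales_from_transfer(nus, tau)
print(t_gen, t_tr)
```

Both routes give the same timescales; in practice the transfer-operator route is used when only $\nu_i$ has been estimated at a fixed lag time.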

3. Galerkin Approximation of the Eigenvalues of the Generator

In this section, we study the Galerkin method for computing the eigenvalues of the infinitesimal generator $\mathcal{L}$. While Galerkin discretization of the transfer operator has been studied to some extent [9], results on the associated infinitesimal generator are rather sparse.

3.1. Some General Results

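To introduce the Galerkin method, let $\tilde H$ be a Hilbert subspace of $H$ containing the constant function, and let $P$ denote the orthogonal projection operator from $H$ onto $\tilde H$, which satisfies $P^2 = P$ and

$$\langle Pf,g\rangle_\pi = \langle f,Pg\rangle_\pi,\qquad\forall\,f,g\in H. \tag{14}$$

The Galerkin method aims at approximating the solution of (6) in the subspace $\tilde H$. Specifically, we want to find $\tilde\varphi\in\tilde H$ such that

$$\beta^{-1}\langle\nabla\tilde\varphi,\nabla\psi\rangle_\pi = \tilde\lambda\langle\tilde\varphi,\psi\rangle_\pi,\qquad\forall\,\psi\in\tilde H, \tag{15}$$

for some constant $\tilde\lambda$. Using the property (14), we know that problem (15) is equivalent to the eigenproblem for the operator $P\mathcal{L}P$ on the subspace $\tilde H$, i.e.,

$$-P\mathcal{L}P\tilde\varphi = \tilde\lambda\tilde\varphi. \tag{16}$$

It is straightforward to verify that $P\mathcal{L}P$ is a self-adjoint operator on $\tilde H$. Similarly to (8), let $\tilde\varphi_i$ be the orthonormal eigenfunctions of the operator $-P\mathcal{L}P$ corresponding to the eigenvalues $\tilde\lambda_i$, where

$$0 = \tilde\lambda_0 < \tilde\lambda_1\le\tilde\lambda_2\le\cdots, \tag{17}$$

and $\tilde\varphi_0\equiv1$. When $\tilde H$ is an infinite-dimensional subspace, we assume $\tilde\lambda_i\to+\infty$ as $i\to+\infty$.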

In the following, we study conditions under which the eigenvalues of the projected generator $P\mathcal{L}P$ are reliable approximations of the eigenvalues of the full generator $\mathcal{L}$. The following approximation result was obtained in [23]; we include its proof for completeness.

Theorem 1.

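For $i\ge0$ (with $i<\dim\tilde H$ if $\tilde H$ is finite-dimensional), let $\varphi_i$ and $\tilde\varphi_i$ be the orthonormal eigenfunctions of the operators $-\mathcal{L}$ and $-P\mathcal{L}P$ corresponding to the eigenvalues $\lambda_i$ and $\tilde\lambda_i$, respectively. We have

$$\lambda_i\le\tilde\lambda_i\le\lambda_i+\beta^{-1}\big\|\nabla(\varphi_i-\tilde\varphi_i)\big\|_\pi^2.$$

Proof.

From (15), we have $\beta^{-1}\langle\nabla\tilde\varphi_i,\nabla\tilde\varphi_j\rangle_\pi = \tilde\lambda_i\delta_{ij}$. Define the subspace $H^{(i)} = \mathrm{span}\{\tilde\varphi_0,\tilde\varphi_1,\ldots,\tilde\varphi_i\}$ for $i\ge0$. It follows from the orthogonality of the functions $\tilde\varphi_j$ that $H^{(i)}$ is an $(i+1)$-dimensional subspace of $H$. Using (17), it is direct to verify that

$$\beta^{-1}\|\nabla f\|_\pi^2\le\tilde\lambda_i\|f\|_\pi^2,\qquad\forall\,f\in H^{(i)}.$$

Applying the min–max theorem to the eigenvalues of the operator $-\mathcal{L}$, we conclude

$$\lambda_i = \min_{H_{i+1}}\ \max_{f\in H_{i+1},\,f\ne0}\frac{\beta^{-1}\|\nabla f\|_\pi^2}{\|f\|_\pi^2}\le\max_{f\in H^{(i)},\,f\ne0}\frac{\beta^{-1}\|\nabla f\|_\pi^2}{\|f\|_\pi^2}\le\tilde\lambda_i,$$

where $H_{i+1}$ goes over all $(i+1)$-dimensional subspaces of $H$. For the upper bound, using (6) with $\psi = \tilde\varphi_i$, we can compute that

$$\beta^{-1}\big\|\nabla(\varphi_i-\tilde\varphi_i)\big\|_\pi^2 = \lambda_i+\tilde\lambda_i-2\lambda_i\langle\varphi_i,\tilde\varphi_i\rangle_\pi = \tilde\lambda_i-\lambda_i+\lambda_i\|\varphi_i-\tilde\varphi_i\|_\pi^2,$$

where we have used the fact that $\|\varphi_i\|_\pi = \|\tilde\varphi_i\|_\pi = 1$. The conclusion follows from (7), which implies $\lambda_i\ge0$. ☐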

Previous studies on the Galerkin approximation of the dominant eigenvalues of the transfer operator have shown that the approximation error of the eigenvalues can be reliably bounded in terms of the projection errors of the corresponding eigenfunctions [28,29,30]. Next, we derive a similar result for the generator $\mathcal{L}$. To this end, we introduce the orthogonal projection $P^\perp = I-P$ from $H$ onto the orthogonal complement of $\tilde H$. We have the following result.

Theorem 2.

Let φ be a normalized eigenfunction of the operator corresponding to the eigenvalue λ. Define constants,

and suppose that . Then there is an eigenvalue of the operator , such that,

Proof.

Since , we have . Let , where , and the summation consists of finite terms when is a finite dimensional subspace. For all , we can compute,

which implies , and,

Therefore we have,

☐

Remark 1.

Notice that our error bound above relies on both constants and , while the error bound in [30] for the transfer operator only depends on one constant, the projection error . This difference is due to the fact that the generator is an unbounded operator while the transfer operator is bounded.

3.2. Finite Dimensional Subspaces

In applications, it is often assumed that $\tilde H$ is spanned by finitely many basis functions. In particular, this is the situation when constructing MSMs based on indicator functions of partition sets [30] or based on core sets [10].

Let $\tilde H$ be the finite-dimensional space $\tilde H = \mathrm{span}\{f_1,f_2,\ldots,f_N\}$, where $f_j\in H$, $1\le j\le N$, are the basis functions, and consider the eigenproblem (15). As a direct application of Theorems 1 and 2, we have the following result.

Corollary 1.

For Galerkin approximation of the eigenproblem (15) using the finite-dimensional ansatz space , the following three statements are valid:

- 1.

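- Write $\tilde\varphi = \sum_{j=1}^Nc_jf_j$ and let $c = (c_1,c_2,\ldots,c_N)^T$. Then problem (15) is equivalent to the generalized matrix eigenproblem

$$Cc = \tilde\lambda Sc, \tag{24}$$

where $S,C$ are $N\times N$ matrices whose entries are given by

$$S_{ij} = \langle f_i,f_j\rangle_\pi,\qquad C_{ij} = \beta^{-1}\langle\nabla f_i,\nabla f_j\rangle_\pi,\qquad 1\le i,j\le N. \tag{25}$$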

- 2.

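- Let $\tilde\lambda_0\le\tilde\lambda_1\le\cdots\le\tilde\lambda_{N-1}$ be the eigenvalues of problem (24), and let $c^{(i)} = (c^{(i)}_1,c^{(i)}_2,\ldots,c^{(i)}_N)^T$ be the eigenvector corresponding to $\tilde\lambda_i$ such that $(c^{(i)})^TSc^{(i)} = 1$. Define $\tilde\varphi_i = \sum_{j=1}^Nc^{(i)}_jf_j$; then we have

$$\lambda_i\le\tilde\lambda_i\le\lambda_i+\beta^{-1}\big\|\nabla(\varphi_i-\tilde\varphi_i)\big\|_\pi^2,\qquad 0\le i\le N-1,$$

where $\lambda_i$, $\varphi_i$ are the eigenvalues and the eigenfunctions of the operator $-\mathcal{L}$, respectively.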

- 3.

- Let be the orthogonal projection operator from H to , and φ be an eigenfunction of the operator corresponding to the eigenvalue λ. Define constants,and suppose that . Then there is an eigenvalue of problem (24) such that,
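The following sketch illustrates Corollary 1 and the lower bound of Theorem 1 in a discretized one-dimensional setting. The assumptions are illustrative and not taken from this article: a double-well potential, grid-based quadrature for the inner products in (25), a reference solution computed on the full grid, and an ansatz space spanned by the constant function and three Gaussians. Because the Galerkin problem is a Rayleigh–Ritz restriction of the full discrete problem, each Galerkin eigenvalue should lie above the corresponding reference eigenvalue.

```python
import numpy as np
from scipy.linalg import eigh

# Illustrative setting (not from the article): V(x) = (x^2 - 1)^2, beta = 2, grid on [-1.8, 1.8].
beta = 2.0
x = np.linspace(-1.8, 1.8, 361)
h = x[1] - x[0]
n = len(x)
pi = np.exp(-beta * (x**2 - 1.0) ** 2)
pi /= pi.sum() * h                       # discrete Gibbs density

# Forward differences (D f)_k = (f_{k+1} - f_k) / h, shape (n - 1, n).
D = (np.eye(n, k=1) - np.eye(n))[:-1] / h
pi_mid = 0.5 * (pi[1:] + pi[:-1])        # density at cell midpoints

# Discrete counterparts of <f, g>_pi (mass M) and beta^{-1} <f', g'>_pi (stiffness A).
M = np.diag(pi * h)
A = D.T @ np.diag(pi_mid * h / beta) @ D

# Reference eigenvalues of the discretized generator on the full grid.
lam_ref = eigh(A, M, eigvals_only=True)

# Galerkin ansatz space: constant function plus Gaussians centred at -1, 0, 1.
basis = np.column_stack(
    [np.ones(n)] + [np.exp(-((x - c) ** 2) / (2 * 0.3**2)) for c in (-1.0, 0.0, 1.0)]
)
S = basis.T @ M @ basis                  # S_ij = <f_i, f_j>_pi, cf. (25)
C = basis.T @ A @ basis                  # C_ij = beta^{-1} <f_i', f_j'>_pi, cf. (25)
lam_gal = eigh(C, S, eigvals_only=True)  # generalized eigenproblem C c = lambda S c

print("reference:", lam_ref[:4])
print("Galerkin: ", lam_gal)
```

Including the constant function in the basis guarantees $\tilde\lambda_0 = 0$; the gap between `lam_gal` and `lam_ref` reflects how well the Gaussians capture the corresponding eigenfunctions.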

3.3. Infinite Dimensional Subspace: Effective Dynamics

In this subsection, we discuss Galerkin approximations based on infinite-dimensional ansatz spaces; these cases appear when studying the effective dynamics given by a so-called reaction coordinate, cf. [23]. In order to explain the relation between Galerkin approximation and effective dynamics, let us first recall some definitions and results regarding the effective dynamics. For more details, readers are referred to [21,23,33] for related work.

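Let $\xi:\mathbb{R}^n\to\mathbb{R}^m$ be a reaction coordinate function, $1\le m<n$. For any function $f\in H$ and $z\in\mathbb{R}^m$, we define

$$\tilde f(z) = \frac{1}{Q(z)}\int_{\mathbb{R}^n}f(x)\,\pi(x)\,\delta\big(\xi(x)-z\big)\,dx, \tag{28}$$

where $\delta(\cdot)$ denotes the Dirac delta function and $Q(z) = \int_{\mathbb{R}^n}\pi(x)\,\delta(\xi(x)-z)\,dx$ is a normalization factor satisfying $\int_{\mathbb{R}^m}Q(z)\,dz = 1$. Define the probability measure $\nu$ on $\mathbb{R}^m$ by $d\nu(z) = Q(z)\,dz$, and consider the Hilbert space $H_\nu = L^2_\nu(\mathbb{R}^m)$. $H_\nu$ induces an (infinite-dimensional) linear subspace of $H$, namely

$$\tilde H = \big\{\tilde f\circ\xi\ \big|\ \tilde f\in H_\nu\big\}, \tag{29}$$

and (28) clearly implies that $\|\tilde f\circ\xi\|_\pi = \|\tilde f\|_\nu$ for $\tilde f\in H_\nu$, so that $\tilde H\subseteq H$.

For $f\in H$, using (28), we can verify that $\tilde f\in H_\nu$, so that $\tilde f\circ\xi\in\tilde H$, and

$$\big\langle f-\tilde f\circ\xi,\ \tilde g\circ\xi\big\rangle_\pi = 0,\qquad\forall\,\tilde g\in H_\nu. \tag{30}$$

Therefore, the mapping $f\mapsto\tilde f\circ\xi$ is the orthogonal projection operator $P$ from $H$ onto the subspace $\tilde H$. For $f\in\tilde H$, in the following we will also write $\tilde f$ for the function in $H_\nu$ such that $f = \tilde f\circ\xi$. The effective dynamics of the dynamics (1) for the reaction coordinate $\xi$ is defined on $\mathbb{R}^m$ and satisfies the SDE

$$dz(s) = \tilde b\big(z(s)\big)\,ds + \sqrt{2\beta^{-1}}\,\tilde\sigma\big(z(s)\big)\,d\tilde w(s), \tag{31}$$

where $\tilde\sigma\tilde\sigma^T = \tilde a$, $\tilde w(s)$ is an $m$-dimensional Brownian motion, and the coefficients $\tilde b$, $\tilde a$ are given by

$$\tilde b_l(z) = \frac{1}{Q(z)}\int_{\mathbb{R}^n}(\mathcal{L}\xi_l)(x)\,\pi(x)\,\delta\big(\xi(x)-z\big)\,dx,\qquad\tilde a_{ll'}(z) = \frac{1}{Q(z)}\int_{\mathbb{R}^n}\big(\nabla\xi_l\cdot\nabla\xi_{l'}\big)(x)\,\pi(x)\,\delta\big(\xi(x)-z\big)\,dx, \tag{32}$$

for $z\in\mathbb{R}^m$, $1\le l,l'\le m$. The infinitesimal generator of the process governed by (31) is given by

$$\tilde{\mathcal{L}} = \sum_{l=1}^m\tilde b_l(z)\,\frac{\partial}{\partial z_l}+\beta^{-1}\sum_{l,l'=1}^m\tilde a_{ll'}(z)\,\frac{\partial^2}{\partial z_l\,\partial z_{l'}}, \tag{33}$$

which is a self-adjoint operator on the space $H_\nu$ with discrete spectrum under appropriate conditions on $\xi$. We consider the eigenproblem

$$-\tilde{\mathcal{L}}\tilde\varphi = \tilde\lambda\tilde\varphi, \tag{34}$$

and let $\tilde\varphi_i$ be the orthonormal eigenfunctions of the operator $-\tilde{\mathcal{L}}$ corresponding to the eigenvalues $\tilde\lambda_i$, where

$$0 = \tilde\lambda_0 < \tilde\lambda_1\le\tilde\lambda_2\le\cdots. \tag{35}$$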

Applying Theorems 1 and 2, we have the following result.

Corollary 2.

For the eigenproblem (34) associated with the effective dynamics, the following three statements are valid:

- 1.

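- For $f\in\tilde H$ with $f = \tilde f\circ\xi$, where $\tilde f\in H_\nu$ is sufficiently smooth, we have

$$P\mathcal{L}f = \big(\tilde{\mathcal{L}}\tilde f\big)\circ\xi. \tag{36}$$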

- 2.

- Let and be the normalized eigenfunctions of the operators and corresponding to eigenvalues and , respectively. We have,

- 3.

- Let φ be the normalized eigenfunction of the operator corresponding to the eigenvalue λ. Define constants,and suppose . Then there is an eigenvalue of the problem (34), such that,

Proof.

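The proof of the first assertion can be found in [23]. Using (30) and (36), we can derive

$$-P\mathcal{L}P\big(\tilde\varphi_i\circ\xi\big) = -\big(\tilde{\mathcal{L}}\tilde\varphi_i\big)\circ\xi = \tilde\lambda_i\,\tilde\varphi_i\circ\xi,$$

i.e., $\tilde\lambda_i$ and $\tilde\varphi_i\circ\xi$ are the eigenvalues and eigenfunctions of the projected operator $-P\mathcal{L}P$ on the subspace $\tilde H$, respectively. Furthermore, $\|\tilde\varphi_i\circ\xi\|_\pi = \|\tilde\varphi_i\|_\nu = 1$, i.e., $\tilde\varphi_i\circ\xi$ is normalized. Therefore, the second assertion is implied by Theorem 1. The third assertion follows from Theorem 2 in the same way. ☐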

Remark 2.

As an interesting consequence of the first assertion, on the infinite-dimensional subspace $\tilde H$ defined in (29), the projected operator $P\mathcal{L}P$ is essentially described by another differential operator, $\tilde{\mathcal{L}}$, which is defined on the Hilbert space $H_\nu$ and coincides with the infinitesimal generator of the effective dynamics on $\mathbb{R}^m$.
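The conditional expectation (28) and the orthogonality relation (30) can be illustrated with samples. The sketch below assumes a two-dimensional Gaussian invariant measure (i.e., $V(x) = |x|^2/2$ and $\beta = 1$), the reaction coordinate $\xi(x) = x_1$, and a simple bin-averaging estimator of $\tilde f$; all of these are illustrative choices. For $f(x) = x_1 + x_2^2$ one has exactly $\tilde f(z) = z+1$, and the residual $f - Pf$ is (empirically) orthogonal to functions of $\xi$ that are constant on the bins.

```python
import numpy as np


def conditional_expectation(xi_vals, f_vals, n_bins):
    """Bin-average estimate of f~(z) = E_pi[f | xi = z] from samples of pi.

    Returns the bin averages, the bin index of every sample, and the bin counts.
    """
    edges = np.linspace(xi_vals.min(), xi_vals.max(), n_bins + 1)
    idx = np.clip(np.digitize(xi_vals, edges) - 1, 0, n_bins - 1)
    counts = np.bincount(idx, minlength=n_bins)
    sums = np.bincount(idx, weights=f_vals, minlength=n_bins)
    return sums / np.maximum(counts, 1), idx, counts


rng = np.random.default_rng(1)
x = rng.standard_normal((200_000, 2))  # samples of pi for V(x) = |x|^2 / 2, beta = 1
xi = x[:, 0]                            # reaction coordinate xi(x) = x_1
f = x[:, 0] + x[:, 1] ** 2              # test function; exact f~(z) = z + 1

f_tilde, idx, counts = conditional_expectation(xi, f, n_bins=40)
residual = f - f_tilde[idx]             # f - P f evaluated at the samples

# Bin averages of xi itself, used to compare f~ with z + 1 inside each bin.
xi_bar = np.bincount(idx, weights=xi, minlength=40) / np.maximum(counts, 1)

# Empirical analogue of (30): the residual is orthogonal to any function of xi
# that is constant on the bins, here g = (bin index - 20)^2.
g = (idx - 20.0) ** 2
print(np.mean(residual * g))
```

Binning replaces the delta function in (28) by a narrow window; finer bins reduce this bias but need more samples per bin.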

4. Variational Approach to Generator Eigenproblem

In this section, we study the variational approach to approximating the eigenvalues and eigenfunctions of the operator $-\mathcal{L}$. This approach has been considered in [4,7,8] to study the related eigenproblem of the transfer operator. Its main idea is to approximate the dominant eigenvalues of a self-adjoint transfer operator via an appropriate form of the Rayleigh variational principle instead of via Galerkin discretization [7]. Herein, we present a similar approach for the low-lying eigenvalues of the generator.

4.1. Variational Principle

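The main object of the variational approach is the following functional $\mathcal{F}$, which acts on $k+1$ functions from $D(\mathcal{L})$.

Given an integer $k\ge0$ and arbitrary constants $\omega_i\in\mathbb{R}$, $0\le i\le k$, we define the functional

$$\mathcal{F}(f_0,f_1,\ldots,f_k) = \sum_{i=0}^k\omega_i\langle -\mathcal{L}f_i,f_i\rangle_\pi,\qquad f_i\in D(\mathcal{L}).$$

Clearly, for the (normalized) leading eigenfunctions $\varphi_0,\ldots,\varphi_k$ of $-\mathcal{L}$, we have

$$\mathcal{F}(\varphi_0,\varphi_1,\ldots,\varphi_k) = \sum_{i=0}^k\omega_i\lambda_i,$$

where $\lambda_i$ are the corresponding eigenvalues. The main workhorse of the variational principle is the following pair of lower and upper bounds: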

Theorem 3 (Variational principle).

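Let $k\ge0$ and let $\omega_i$, $0\le i\le k$, be a decreasing sequence of positive real numbers, i.e., $\omega_0>\omega_1>\cdots>\omega_k>0$. For any orthonormal family of functions $f_i\in D(\mathcal{L})$, $0\le i\le k$, we have

$$\sum_{i=0}^k\omega_i\lambda_i\le\mathcal{F}(f_0,f_1,\ldots,f_k)\le\sum_{i=0}^k\omega_i\Big(\lambda_i+\beta^{-1}\big\|\nabla(f_i-\varphi_i)\big\|_\pi^2\Big),$$

or, more explicitly,

$$\sum_{i=0}^k\omega_i\lambda_i\le\sum_{i=0}^k\omega_i\,\beta^{-1}\|\nabla f_i\|_\pi^2\le\sum_{i=0}^k\omega_i\Big(\lambda_i+\beta^{-1}\big\|\nabla(f_i-\varphi_i)\big\|_\pi^2\Big).$$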

In order to prove this variational principle we need the following simple lemma:

Lemma 1.

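Suppose $k\ge1$, and let $\omega_0,\ldots,\omega_k$ and $\lambda_0,\ldots,\lambda_k$ be two ordered sequences of real numbers such that

$$\omega_0\ge\omega_1\ge\cdots\ge\omega_k,\qquad\lambda_0\le\lambda_1\le\cdots\le\lambda_k.$$

Then, for any permutation $(\sigma(0),\sigma(1),\ldots,\sigma(k))$ of the sequence $(0,1,\ldots,k)$, we have

$$\sum_{i=0}^k\omega_i\lambda_i\le\sum_{i=0}^k\omega_i\lambda_{\sigma(i)}.$$

Proof of Theorem 3.

The proof is given in two steps: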

- For the lower bound, we consider the optimization problem, Next, we introduce the Lagrange multipliers for , and consider the auxiliary functional, Applying the calculus of variations, we conclude that the minimizer of (44) satisfies, Multiplying for some in the first equation of (46) and integrating, we obtain . In the same way, we can also obtain . Using the fact that $\mathcal{L}$ is self-adjoint and for , we conclude that, and (46) reduces to an eigenproblem, Therefore, the minimizer of (44) is given by orthonormal eigenfunctions of $-\mathcal{L}$. Applying Lemma 1, we can further conclude that the lower bound is attained when $f_i = \varphi_i$, $0\le i\le k$, with value $\sum_{i=0}^k\omega_i\lambda_i$.

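- For the upper bound, similarly to the proof of Theorem 1, direct computation gives

$$\langle -\mathcal{L}f_i,f_i\rangle_\pi = \lambda_i+\beta^{-1}\big\|\nabla(f_i-\varphi_i)\big\|_\pi^2-\lambda_i\|f_i-\varphi_i\|_\pi^2\le\lambda_i+\beta^{-1}\big\|\nabla(f_i-\varphi_i)\big\|_\pi^2,\qquad 0\le i\le k,$$

where we have used the facts that $\|f_i\|_\pi = 1$ and $\|\varphi_i\|_\pi = 1$, since both are normalized functions, as well as $\lambda_i\ge0$. Multiplying by $\omega_i>0$ and summing over $i$ gives the upper bound.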

☐
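Lemma 1 is the rearrangement inequality, and the lower bound of Theorem 3 has a finite-dimensional analogue that is easy to check numerically. In the sketch below, a random symmetric positive semidefinite matrix $A$ stands in for $-\mathcal{L}$ (an illustrative assumption); the weights and dimensions are arbitrary.

```python
import itertools

import numpy as np


def rearrangement_holds(omega, lam, tol=1e-12):
    """Lemma 1: sum_i omega_i lam_i <= sum_i omega_i lam_sigma(i) for every permutation sigma."""
    base = float(np.dot(omega, lam))
    return all(base <= float(np.dot(omega, perm)) + tol for perm in itertools.permutations(lam))


rng = np.random.default_rng(2)
n, k = 8, 3
B = rng.standard_normal((n, n))
A = B @ B.T                          # symmetric positive semidefinite stand-in for -L
lam, phi = np.linalg.eigh(A)         # ascending eigenvalues, orthonormal eigenvectors
omega = np.array([3.0, 2.0, 1.0])    # decreasing positive weights


def F(vectors):
    """Finite-dimensional analogue of the weighted functional: sum_i omega_i <A f_i, f_i>."""
    return sum(omega[i] * vectors[:, i] @ A @ vectors[:, i] for i in range(k))


lower = float(np.dot(omega, lam[:k]))             # sum_i omega_i lambda_i
Q, _ = np.linalg.qr(rng.standard_normal((n, k)))  # a random orthonormal family
print(lower, F(phi[:, :k]), F(Q))
```

The eigenvectors attain the lower bound, while any other orthonormal family gives a larger weighted sum; strictly decreasing weights are what pin the optimum to the ordered eigenvectors.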

4.2. Optimization Problem

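The variational principle of Theorem 3 allows for approximation of the low-lying eigenvalues of the generator. In order to turn it into an algorithm, we again introduce $N$ basis functions $f_1,\cdots,f_N$. We want to approximate the first $k+1$ eigenvalues $\lambda_i$, $0\le i\le k$, as well as the eigenfunctions $\varphi_i$, by approximating the eigenfunctions with linear combinations of the basis functions. That is, we consider the functions

$$\tilde\varphi_i = \sum_{j=1}^Nc^{(i)}_jf_j,\qquad 0\le i\le k,$$

where $c^{(i)}_j$ are real-valued coefficients to be determined, $0\le i\le k$, $1\le j\le N$. Inspired by Theorem 3, we wish to determine the coefficients by solving the optimization problem

$$\min_{c^{(0)},\ldots,c^{(k)}}\mathcal{F}(\tilde\varphi_0,\tilde\varphi_1,\ldots,\tilde\varphi_k)\quad\text{subject to}\quad\langle\tilde\varphi_i,\tilde\varphi_{i'}\rangle_\pi = \delta_{ii'},\ 0\le i,i'\le k. \tag{51}$$

Recalling the matrices defined in (25) and defining the vectors $c^{(i)} = (c^{(i)}_1,\ldots,c^{(i)}_N)^T$, $0\le i\le k$, the optimization problem (51) can be reformulated as

$$\min_{c^{(0)},\ldots,c^{(k)}}\sum_{i=0}^k\omega_i\,(c^{(i)})^TCc^{(i)}\quad\text{subject to}\quad(c^{(i)})^TSc^{(i')} = \delta_{ii'},\ 0\le i,i'\le k,$$

or, equivalently, in matrix form,

$$\min_{\mathbf{c}\in\mathbb{R}^{N\times(k+1)}}\mathrm{tr}\big(\mathbf{c}^TC\,\mathbf{c}\,\Omega\big)\quad\text{subject to}\quad\mathbf{c}^TS\,\mathbf{c} = I_{k+1},$$

where $\mathbf{c} = (c^{(0)},\ldots,c^{(k)})$ and $\Omega = \mathrm{diag}(\omega_0,\ldots,\omega_k)$.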

Using a similar argument as in the proof of Theorem 3, we can obtain,

Theorem 4.

Remark 3.

Combining the above result with Subsection 3.2, we see that both the Galerkin method and the variational approach lead to the same generalized matrix eigenproblem with an identical estimate for the eigenvalue error.
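Remark 3 can also be checked at the matrix level. Assuming hypothetical random matrices $S$ (symmetric positive definite) and $C$ (symmetric positive semidefinite), the sketch below solves the generalized eigenproblem $Cc = \tilde\lambda Sc$ with `scipy.linalg.eigh` and compares the weighted objective of the optimization problem in Subsection 4.2 at the $S$-orthonormal eigenvectors with its value at a random $S$-orthonormal family.

```python
import numpy as np
from scipy.linalg import cholesky, eigh

rng = np.random.default_rng(3)
N, k = 6, 3
G = rng.standard_normal((N, N))
S = G @ G.T + N * np.eye(N)        # hypothetical symmetric positive definite matrix S
H = rng.standard_normal((N, N))
C = H @ H.T                        # hypothetical symmetric positive semidefinite matrix C
omega = np.array([3.0, 2.0, 1.0])  # decreasing positive weights

# Generalized eigenproblem C c = lambda S c; scipy normalizes c so that c.T @ S @ c = I.
lam_tilde, c = eigh(C, S)


def objective(coeffs):
    """Weighted objective sum_i omega_i c_i^T C c_i for column-wise coefficient vectors."""
    return sum(omega[i] * coeffs[:, i] @ C @ coeffs[:, i] for i in range(k))


# A random S-orthonormal family: c = L^{-T} q with S = L L^T and q orthonormal.
L = cholesky(S, lower=True)
q, _ = np.linalg.qr(rng.standard_normal((N, k)))
c_rand = np.linalg.solve(L.T, q)

print(objective(c[:, :k]), np.dot(omega, lam_tilde[:k]), objective(c_rand))
```

The substitution $y = L^Tc$ turns the constrained problem into a standard symmetric one, which is why the generalized eigenvectors are optimal.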

5. Numerical Algorithms

In this section, we consider how the matrices defined in (25), that is,

$$S_{ij} = \langle f_i,f_j\rangle_\pi,\qquad C_{ij} = \beta^{-1}\langle\nabla f_i,\nabla f_j\rangle_\pi = \langle -\mathcal{L}f_i,f_j\rangle_\pi,\qquad 1\le i,j\le N,$$

can be approximated from trajectories of the diffusion process. For the transfer operator, this problem has been studied in [4,7,8] using trajectories of the original diffusion process (1). In contrast, we consider trajectories of the effective dynamics (31) instead of the original diffusion process.

5.1. Computing Coefficient Matrices Using Effective Dynamics

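Similarly to the setup in Subsection 3.3, we assume that a reaction coordinate function $\xi:\mathbb{R}^n\to\mathbb{R}^m$, as well as $N$ basis functions $f_i$, $1\le i\le N$, are given. Furthermore, we suppose that the basis functions can be written as $f_i = \tilde f_i\circ\xi$ for some functions $\tilde f_i:\mathbb{R}^m\to\mathbb{R}$, i.e., $f_i\in\tilde H$. In this case, it follows from the first assertion of Corollary 2 and the relation (30) that

$$S_{ij} = \int_{\mathbb{R}^m}\tilde f_i(z)\,\tilde f_j(z)\,d\nu(z), \tag{56}$$

$$C_{ij} = -\int_{\mathbb{R}^m}\big(\tilde{\mathcal{L}}\tilde f_i\big)(z)\,\tilde f_j(z)\,d\nu(z). \tag{57}$$

These equalities, though simple, are quite interesting, because they relate the entries of the coefficient matrices to the infinitesimal generator $\tilde{\mathcal{L}}$ of the effective dynamics in (33). Since $\nu$ is the unique invariant measure of the effective dynamics [23], we can apply the ergodic theorem and obtain

$$S_{ij}\approx\frac{1}{M}\sum_{r=1}^M\tilde f_i\big(z_{T_0+r\Delta t}\big)\,\tilde f_j\big(z_{T_0+r\Delta t}\big), \tag{58}$$

where $z_s$ denotes a realization of the effective dynamics (31), $\Delta t$ is the step size, $M$ is a large integer, and only the parts of trajectories after time $T_0$ are used for estimation.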

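For the matrix $C$, using (57), the definition of the infinitesimal generator $\tilde{\mathcal{L}}$, as well as the ergodic theorem, we can derive

$$C_{ij} = -\lim_{\tau\to0+}\frac{1}{\tau}\,\mathbf{E}_\nu\Big[\big(\tilde f_i(z_\tau)-\tilde f_i(z_0)\big)\,\tilde f_j(z_0)\Big] = \frac{1}{2}\lim_{\tau\to0+}\frac{1}{\tau}\,\mathbf{E}_\nu\Big[\big(\tilde f_i(z_\tau)-\tilde f_i(z_0)\big)\big(\tilde f_j(z_\tau)-\tilde f_j(z_0)\big)\Big]. \tag{59}$$

In the above, $\mathbf{E}_\nu$ denotes the mathematical expectation with respect to the effective dynamics $z_s$ with initial distribution $z_0\sim\nu$, and the last equality follows from the symmetry of the matrix $C$.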

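To compute $C$ numerically, we further introduce a parameter $\tau>0$, an integer multiple of $\Delta t$, and approximate (59) by

$$C_{ij}\approx\frac{1}{2\tau}\,\mathbf{E}_\nu\Big[\big(\tilde f_i(z_\tau)-\tilde f_i(z_0)\big)\big(\tilde f_j(z_\tau)-\tilde f_j(z_0)\big)\Big]\approx\frac{1}{2\tau M}\sum_{r=1}^M\big(\tilde f_i(z_{t_r+\tau})-\tilde f_i(z_{t_r})\big)\big(\tilde f_j(z_{t_r+\tau})-\tilde f_j(z_{t_r})\big), \tag{60}$$

where $t_r = T_0+r\Delta t$. Formulas (58) and (60) can be used to estimate the coefficient matrices $S$ and $C$, provided that we can obtain a long trajectory of the effective dynamics (31).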
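Formulas (58) and (60) translate into a few lines of array code. As a hedged sketch, the estimators below take basis-function values along a trajectory. To have exact reference values, the trajectory is an Ornstein–Uhlenbeck process $dz = -z\,ds + \sqrt{2}\,dw$ (i.e., $\beta = 1$ and $\xi$ the identity, so that the effective dynamics coincides with the original one), sampled exactly, together with the Hermite basis $1, z, z^2-1$, whose exact generator eigenvalues are $0, 1, 2$. All of these choices are illustrative, and all sampled points are used, i.e., $T_0 = 0$.

```python
import numpy as np
from scipy.linalg import eigh


def estimate_S(F):
    """Time average of f_i f_j along a trajectory; F has shape (n_samples, N), cf. (58)."""
    return F.T @ F / F.shape[0]


def estimate_C(F, lag, dt):
    """Finite-lag estimate of C_ij from increments over tau = lag * dt, cf. (60)."""
    tau = lag * dt
    dF = F[lag:] - F[:-lag]
    return dF.T @ dF / (2.0 * tau * dF.shape[0])


# Stand-in trajectory: Ornstein-Uhlenbeck process dz = -z ds + sqrt(2) dw (beta = 1),
# sampled exactly at spacing dt; its invariant measure is the standard normal distribution.
rng = np.random.default_rng(4)
dt, n_steps = 0.01, 300_000
rho = np.exp(-dt)
scale = np.sqrt(1.0 - rho**2)
noise = rng.standard_normal(n_steps)
z = np.empty(n_steps)
z[0] = noise[0]                       # start in equilibrium
for r in range(1, n_steps):
    z[r] = rho * z[r - 1] + scale * noise[r]

# Basis 1, z, z^2 - 1 (Hermite polynomials): exact generator eigenvalues are 0, 1, 2.
F = np.column_stack([np.ones_like(z), z, z**2 - 1.0])
S = estimate_S(F)
C = estimate_C(F, lag=1, dt=dt)
lam_est = eigh(C, S, eigvals_only=True)
print(lam_est)
```

A larger lag reduces the variance of the increments relative to their mean but introduces a bias of order $\tau\lambda$, which is why $\tau$ should stay small compared with the timescales of interest.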

Remark 4.

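From the discussion in Section 2, we know that the eigenvalues $\nu_i$ of the transfer operator $\mathcal{T}_\tau$ and the eigenvalues $\lambda_i$ of the operator $-\mathcal{L}$ satisfy the relation $\nu_i = e^{-\lambda_i\tau}$, $i\ge0$. When the lag time $\tau$ is small, the approximation $\nu_i\approx1-\lambda_i\tau$ holds for the leading eigenvalues, since $\lambda_i\tau$ is small. In fact, estimating the matrix $C$ using the last expression in (60), we have $C\approx(S-\bar C)/\tau$, where the matrix $\bar C$ is given by

$$\bar C_{ij} = \frac{1}{2M}\sum_{r=1}^M\Big[\tilde f_i(z_{t_r+\tau})\,\tilde f_j(z_{t_r})+\tilde f_i(z_{t_r})\,\tilde f_j(z_{t_r+\tau})\Big]. \tag{61}$$

It is easy to see that the eigenvalue estimates $\tilde\lambda_i$ resulting from problem (54) are related to the eigenvalues $\tilde\nu_i$ of the problem $\bar Cc = \tilde\nu Sc$ by $\tilde\nu_i = 1-\tau\tilde\lambda_i$. Note that (61) is very similar to the estimator derived in [3], except that here we use trajectories of the effective dynamics instead of the original dynamics. To summarize, when the lag time $\tau$ is small, the above discussion implies that, after solving problem (54), we can approximate the leading eigenvalues of the transfer operator by $1-\tau\tilde\lambda_i$.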
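The algebra behind Remark 4 is exact at the matrix level: if $C = (S-\bar C)/\tau$, then $Cc = \tilde\lambda Sc$ holds if and only if $\bar Cc = (1-\tau\tilde\lambda)Sc$. The sketch below checks this with hypothetical random matrices and also prints $e^{-\tau\tilde\lambda_i}$ for comparison with the linearization $1-\tau\tilde\lambda_i$.

```python
import numpy as np
from scipy.linalg import eigh

rng = np.random.default_rng(5)
N, tau = 5, 0.1
G = rng.standard_normal((N, N))
S = G @ G.T + N * np.eye(N)           # hypothetical symmetric positive definite S
H = rng.standard_normal((N, N))
C_bar = S - 0.1 * (H @ H.T)           # hypothetical symmetric time-lagged matrix C_bar
C = (S - C_bar) / tau                 # generator-type matrix built as in Remark 4

lam_tilde = eigh(C, S, eigvals_only=True)           # ascending
nu_tilde = eigh(C_bar, S, eigvals_only=True)[::-1]  # descending

print(nu_tilde)
print(1.0 - tau * lam_tilde)          # agrees with nu_tilde up to rounding
print(np.exp(-tau * lam_tilde))       # close to the line above only when tau * lambda is small
```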

5.2. Algorithms for Simulating the Effective Dynamics

In order to utilize the above results we have to be able to efficiently compute (long) realizations of the effective dynamics (31). In this subsection, we discuss two numerical algorithms for realizing this.

5.2.1. Algorithm 1

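The first algorithm is based on the following formula for the coefficients given in (32):

$$\tilde b_l(z) = \lim_{s\to0+}\frac{\mathbf{E}_{\mu_z}\big[\xi_l(x(s))\big]-z_l}{s},\qquad\tilde a_{ll'}(z) = \lim_{s\to0+}\frac{\beta}{2s}\,\mathbf{E}_{\mu_z}\Big[\big(\xi_l(x(s))-z_l\big)\big(\xi_{l'}(x(s))-z_{l'}\big)\Big],$$

where $x(s)$ is a realization of the original diffusive dynamics (1) with initial state $x(0)$ distributed according to $\mu_z$, and $\mu_z$ is the restriction of the invariant measure $\pi$ to the submanifold $\Sigma_z = \{x\in\mathbb{R}^n\,|\,\xi(x) = z\}$. We refer readers to [23] for more details.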

In order to utilize this for simulation, we fix two parameters and proceed as follows:

- At step , starting from , generate N trajectories of length of the (unconstrained) full dynamics by discretizing (1). Compute the coefficients by,where .

- Compute from by matrix decomposition. Update by,where are independent standard Gaussian variables, .

In the above, $\xi_l$ denotes the $l$th component of $\xi$. The initial states are sampled from the probability measure $\mu_z$; this can be achieved by using the numerical schemes proposed in [15,34,35], which simulate the original dynamics (1) and then project the state onto the submanifold $\Sigma_z$.
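Algorithm 1 can be sketched as follows. This is a hedged illustration only: the function names are hypothetical, the coefficients are estimated from finite-$s$ versions of the small-time limits, $\tilde b_l(z)\approx(\mathbf{E}_{\mu_z}[\xi_l(x(s))]-z_l)/s$ and $\tilde a(z)\approx\frac{\beta}{2s}\mathrm{Cov}_{\mu_z}\big(\xi(x(s))\big)$ (the covariance removes an $O(s)$ drift bias), $\tilde\sigma$ is obtained by a Cholesky decomposition, and sampling the initial states from $\mu_z$ is left to the caller. The toy check uses a one-dimensional Ornstein–Uhlenbeck process with $\xi(x) = x$, for which $\mu_z$ is a point mass and the exact coefficients are $\tilde b(z) = -z$ and $\tilde a = 1$.

```python
import numpy as np


def estimate_coefficients(x0, xi, full_step, beta, dt, n_steps, rng):
    """Estimate b~(z) and a~(z) from short unconstrained runs of the full dynamics (1).

    x0:        (M, n) array of initial states, assumed to be samples of mu_z.
    xi:        maps an (M, n) array of states to the (M, m) array of reaction coordinates.
    full_step: performs one time step of (1) for all samples (hypothetical user function).
    """
    z0 = xi(x0)
    x = x0.copy()
    for _ in range(n_steps):
        x = full_step(x, beta, dt, rng)
    s = n_steps * dt
    dz = xi(x) - z0                                 # reaction-coordinate increments over time s
    b = dz.mean(axis=0) / s                         # drift estimate
    dzc = dz - dz.mean(axis=0)
    a = beta * (dzc.T @ dzc) / (2.0 * s * len(dz))  # diffusion estimate from the sample covariance
    return b, a


def effective_step(z, b, a, beta, dt, rng):
    """One Euler-Maruyama step of (31) with sigma taken as the Cholesky factor of a."""
    sigma = np.linalg.cholesky(a)
    return z + b * dt + np.sqrt(2.0 * dt / beta) * sigma @ rng.standard_normal(len(z))


def ou_step(x, beta, dt, rng):
    """Toy full dynamics: Euler-Maruyama step for V(x) = |x|^2 / 2."""
    return x - x * dt + np.sqrt(2.0 * dt / beta) * rng.standard_normal(x.shape)


rng = np.random.default_rng(6)
beta, z = 1.0, 0.5
x0 = np.full((100_000, 1), z)                      # mu_z is a point mass when xi(x) = x
b, a = estimate_coefficients(x0, lambda states: states[:, :1], ou_step, beta, 1e-3, 10, rng)
z_next = effective_step(np.array([z]), b, a, beta, 1e-3, rng)
print(b, a, z_next)
```

In a real application the coefficients would be re-estimated at every new value of $z$, which is why the number and length of the short runs control the cost of the method.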

5.2.2. Algorithm 2

The second algorithm is inspired by the TAMD method proposed in [17]. In the following we provide a slightly different argument which motivates the method. The main idea is to consider the extended dynamics,

where κ is a large constant, , are independent Brownian motions on , and denotes the ith component of the state (similar notations for ). Note that the invariant measure of the dynamics (65) has a probability density,

with respect to the Lebesgue measure on the extended space . If we choose as the reaction coordinate function of (65) and derive the effective dynamics following [21,23], we can obtain,

where is a Brownian motion on , and,

for , . Note that in (68), is the generator given in (2) and integration by parts has been used to derive the second expression for . It is not difficult to show that and , when . Therefore (67) is an approximation of the effective dynamics (31) when . For numerical simulations, we can express (68) as time averages,

where satisfies the SDE (65) with fixed , i.e.,

The main steps of the algorithm can be summarized as follows:

- Compute from by matrix decomposition. Update the state according to,where are independent standard Gaussian variables, .

6. Illustrative Example

In order to illustrate the analysis and the performance of the numerical methods presented in the previous sections, we study the following simple two-dimensional dynamics:

where , and are two independent one-dimensional Brownian motions.

The potential V in dynamics (73) is defined as,

where ,

and is the polar coordinate of the state satisfying,

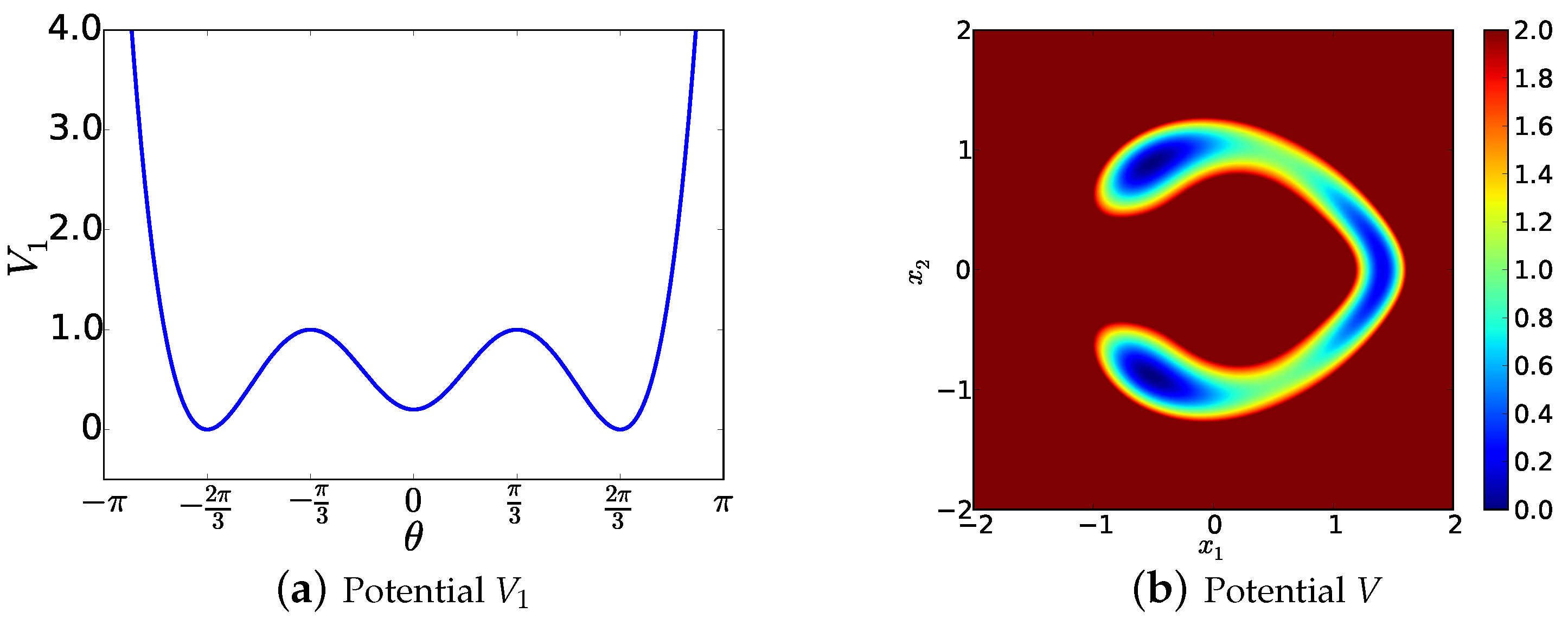

In polar coordinates, it is easy to see that the potential V has three local minima at , where the radius is determined by the relation . Furthermore, when the parameter ϵ is small, one can expect that the dynamics (73) will mainly be confined to the neighbourhood of the curve defined by the relation , where the potential is relatively flat. Profiles of the potentials and V are displayed in Figure 1.

Figure 1.

(a) Function as a function of angle θ; (b) Potential V defined in (74) with parameter .

The main purpose of this numerical experiment is to demonstrate that the leading eigenvalues of the operator corresponding to dynamics (73) can be approximated with the help of its effective dynamics, provided that the reaction coordinate function as well as the basis functions are chosen appropriately.

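We choose parameters and in the following numerical experiment. In fact, for this two-dimensional problem, it is possible to solve the eigenproblem (5) directly by discretizing the operator $\mathcal{L}$. First of all, we note that the generator can be written as $\mathcal{L}f = \beta^{-1}e^{\beta V}\nabla\cdot\big(e^{-\beta V}\nabla f\big)$. Defining the operator $\hat{\mathcal{L}}$ by $\hat{\mathcal{L}}f = e^{-\beta V/2}\mathcal{L}\big(e^{\beta V/2}f\big)$ for a function $f$, it is straightforward to see that $\hat{\mathcal{L}}$ has the same eigenvalues as $\mathcal{L}$ and that the corresponding eigenfunctions are given by $e^{-\beta V/2}\varphi_i$, where $\varphi_i$ are the eigenfunctions of $\mathcal{L}$. Furthermore, $\hat{\mathcal{L}}$ is self-adjoint with respect to the standard $L^2$ inner product. Instead of $\mathcal{L}$, we therefore work with $\hat{\mathcal{L}}$ and solve the eigenproblem $-\hat{\mathcal{L}}\hat\varphi = \lambda\hat\varphi$, because the discretized matrix will be symmetric and the corresponding eigenfunctions decay rapidly.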

Taking into account the profile of the potential V in Figure 1b, we truncate the whole space to a finite domain , which is then discretized using a uniform mesh, leading to the cell resolution . For , let denote the values of the functions f, V evaluated at state , respectively. Other notations such as are defined in a similar way. Approximating by the centered finite difference scheme, we obtain,

for . For boundary cells, the Neumann condition is applied when the neighboring cells lie outside the truncated domain. From (76), it can be observed that the resulting discretization matrix is both symmetric and sparse. Computing the eigenvalues of this matrix (of order 250,000) with the Krylov–Schur method implemented in the numerical package SLEPc [36], we obtain the first four eigenvalues,

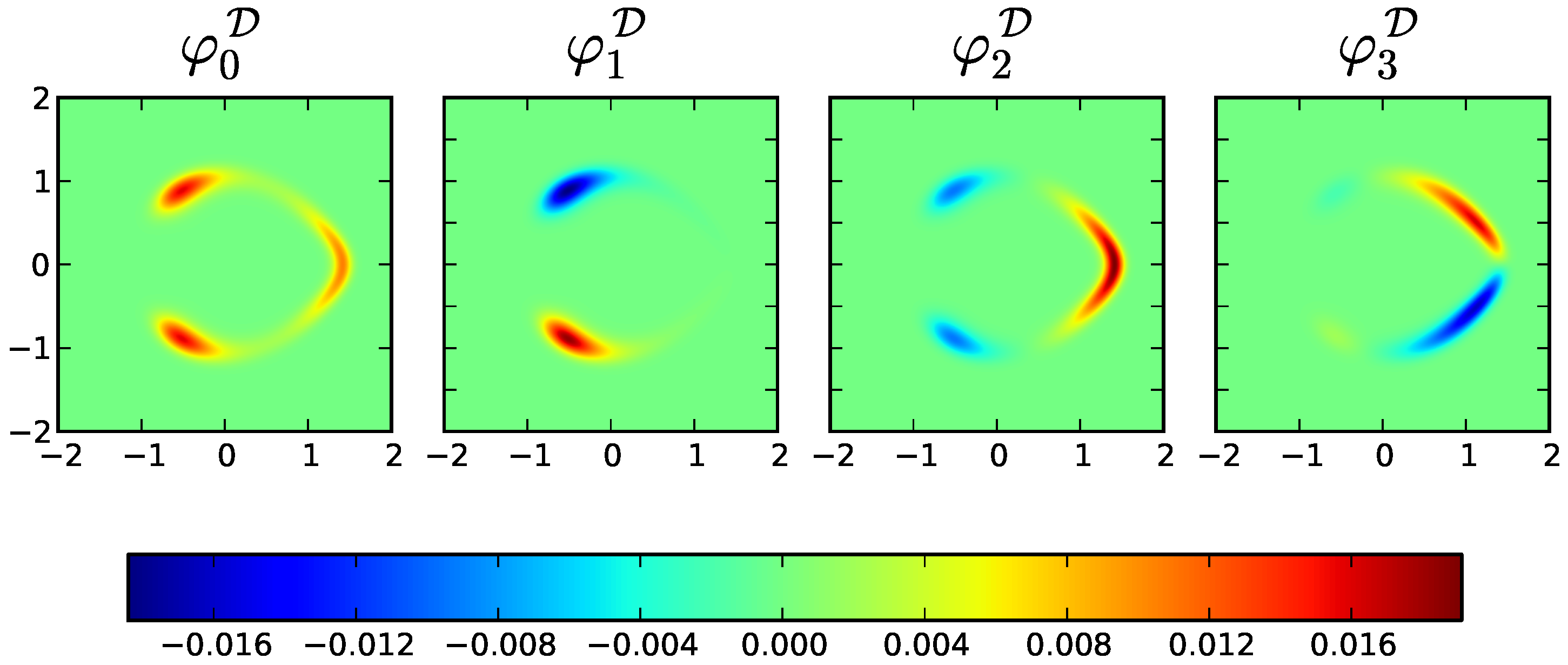

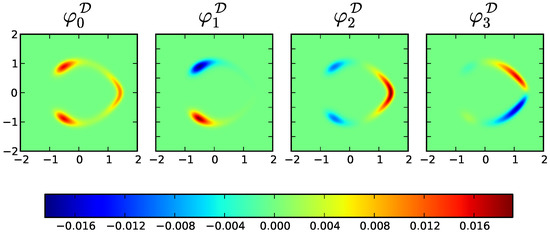

with relative residual errors smaller than . The corresponding eigenvectors are shown in Figure 2.

Figure 2.

Eigenfunctions of operator corresponding to the first four eigenvalues in (77).

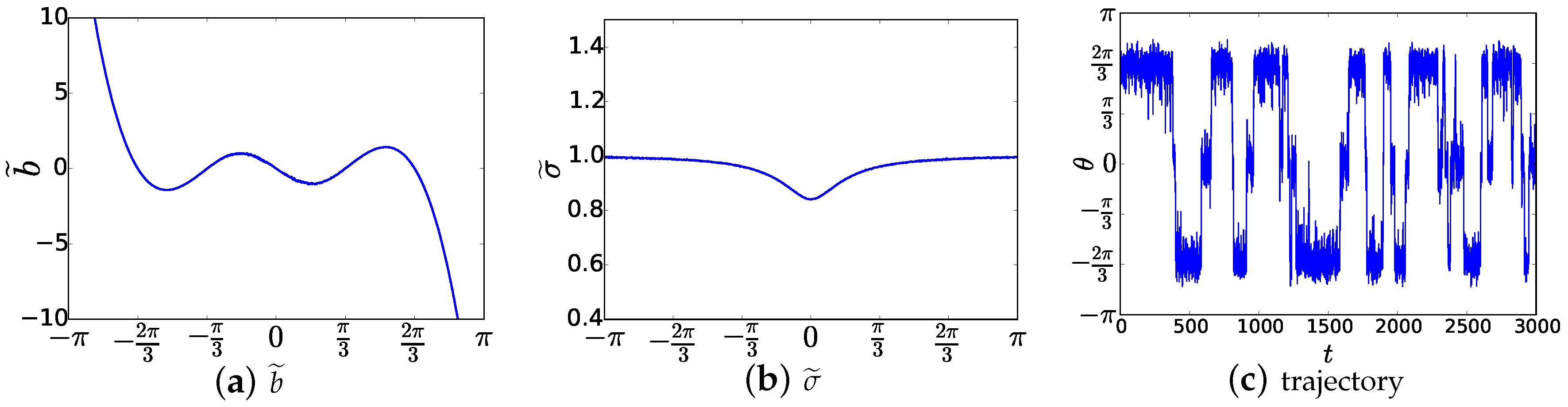
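The symmetrization $f\mapsto e^{-\beta V/2}\mathcal{L}(e^{\beta V/2}f)$ used above can be sketched in one dimension. Assuming the flux form of $\mathcal{L}$, midpoint values of $e^{-\beta V}$ built from $(V_k+V_{k+1})/2$, and reflecting boundaries (our choices for illustration; the two-dimensional scheme (76) may differ in details), the transformed matrix has unit off-diagonal entries up to the factor $1/(\beta h^2)$, is exactly symmetric, and annihilates the discrete ground state $e^{-\beta V/2}$. The double-well potential is hypothetical.

```python
import numpy as np


def symmetric_generator_matrix(V, h, beta):
    """Symmetric finite-difference matrix for f -> e^{-beta V/2} L(e^{beta V/2} f) in 1D.

    Discretizes L f = beta^{-1} e^{beta V} (e^{-beta V} f')' in flux form with midpoint values
    V_{k+1/2} = (V_k + V_{k+1}) / 2 and reflecting boundaries; V is sampled on a uniform grid.
    """
    n = len(V)
    right = np.exp(beta * (V[:-1] - V[1:]) / 2.0)  # e^{beta (V_k - V_{k+1}) / 2}, k = 0..n-2
    left = np.exp(beta * (V[1:] - V[:-1]) / 2.0)   # e^{beta (V_k - V_{k-1}) / 2}, k = 1..n-1
    diag = np.zeros(n)
    diag[:-1] -= right
    diag[1:] -= left
    off = np.ones(n - 1)
    return (np.diag(diag) + np.diag(off, 1) + np.diag(off, -1)) / (beta * h**2)


beta = 4.0
x = np.linspace(-2.0, 2.0, 801)
h = x[1] - x[0]
V = (x**2 - 1.0) ** 2                     # hypothetical double-well potential
L_hat = symmetric_generator_matrix(V, h, beta)
lam = np.linalg.eigvalsh(-L_hat)          # eigenvalues of -L_hat in ascending order
print(lam[:4])
```

For two-dimensional grids of the size used above, the same stencil would be assembled into a sparse matrix and passed to an iterative eigensolver rather than a dense routine.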

With the above reference result at hand, we continue to study the approximation quality of the effective dynamics with respect to the leading eigenvalues. For this purpose, we choose the reaction coordinate function as $\xi(x) = \theta$, i.e., our reaction coordinate is the angle of the polar coordinate representation. Direct calculation shows that the coefficients in (32) reduce to,

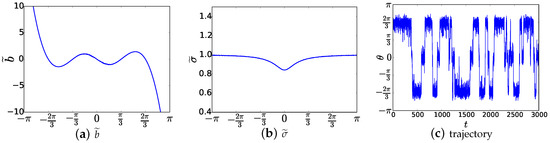

Discretizing the interval into 1000 subintervals and applying the projection scheme proposed in [34] for each fixed , , we can compute the coefficients of the effective dynamics; the resulting profiles are shown in Figure 3a,b. After these preparations, we can generate trajectories of the effective dynamics by simulating the SDE (31) using standard time-stepping schemes. As shown in Figure 3c, the effective dynamics spends long times around the values and , which is in accordance with the behavior of dynamics (73) as well as with the profile of the potential V in Figure 1b. Since the effective dynamics is one-dimensional, we can also discretize its infinitesimal generator $\tilde{\mathcal{L}}$ in (33) and compute its eigenvalues, which gives,

Figure 3.

(a,b) Coefficients and as given in (78). For each , , the coefficients , are estimated by generating a trajectory of the constrained version of dynamics (73) using the projection scheme proposed in [34] with the time step size , and steps are simulated; (c) A typical sample trajectory of the effective dynamics for dynamics (73) with reaction coordinate function .

Comparing with (77), we conclude that the eigenvalues of the original dynamics (73) are quite well approximated by those of the effective dynamics.
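The eigenvalues of a one-dimensional effective generator can be computed in the same spirit. The sketch below assumes that $\tilde{\mathcal{L}}$ can be written in the reversible divergence form $\tilde{\mathcal{L}}f = \beta^{-1}\nu^{-1}(\nu\tilde af')'$ (this holds if $\tilde b = \beta^{-1}\nu^{-1}(\nu\tilde a)'$, consistent with $\tilde{\mathcal{L}}$ being self-adjoint on $L^2_\nu$), a uniform periodic grid in the angle, and hypothetical profiles for $\nu$ and $\tilde a$. For a flat $\nu$ with $\tilde a\equiv1$ and $\beta = 1$, the exact eigenvalues are $0,1,1,4,4,\dots$, which serves as a sanity check.

```python
import numpy as np


def effective_generator_eigenvalues(nu, a, h, beta, k):
    """Lowest k eigenvalues of -L~ on a uniform periodic grid, assuming L~ f = (nu a f')' / (beta nu).

    nu: density of the measure nu on the grid; a: diffusion coefficient a~ on the grid.
    """
    n = len(nu)
    w = 0.5 * (nu * a + np.roll(nu * a, -1))             # nu a at the midpoints z_{j+1/2}
    D = (np.roll(np.eye(n), 1, axis=1) - np.eye(n)) / h  # periodic forward difference
    A = D.T @ np.diag(w * h / beta) @ D                  # stiffness: beta^{-1} <a f', g'>_nu
    m = nu * h                                           # lumped mass: <f, g>_nu
    A_sym = A / np.sqrt(np.outer(m, m))                  # M^{-1/2} A M^{-1/2}, symmetric
    return np.linalg.eigvalsh(A_sym)[:k]


n = 500
z = np.linspace(-np.pi, np.pi, n, endpoint=False)
h = z[1] - z[0]

# Sanity check: flat nu and a~ = 1 give the eigenvalues j^2 of -d^2/dz^2 on the circle.
flat = effective_generator_eigenvalues(np.full(n, 1.0 / (2 * np.pi)), np.ones(n), h, 1.0, 5)

# Hypothetical three-well profile of nu and a hypothetical smooth a~.
nu = np.exp(-3.0 * np.cos(3.0 * z))
nu /= nu.sum() * h
a = 1.0 + 0.2 * np.sin(z) ** 2
print(flat)
print(effective_generator_eigenvalues(nu, a, h, 1.0, 4))
```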

As the final step of our experiment, we test the trajectory-based method proposed in Subsection 5.1. First of all, we define basis functions and , , where,

That is, we have located two Gaussian-like basis functions with different radii ( and ) at each of the three local minima . The matrices S and C are then estimated according to (58) and (60) by generating four long trajectories of the effective dynamics with time step size , and parameters , , are used for each trajectory. Solving the generalized matrix eigenproblem , we obtain the leading eigenvalues,

As before, we conclude that the eigenvalues , , of the original dynamics are relatively well approximated.

7. Conclusions

In this work we have studied the approximation of eigenvalues and eigenfunctions of the infinitesimal generator associated with the longest relaxation processes of diffusive processes in energy landscapes. Following previous studies on transfer operators, we considered the Galerkin discretization method, the variational approach, and the effective dynamics given by a low-dimensional reaction coordinate for solving the eigenvalue problem of the generator. It turns out that (1) the approximation error estimates for the three methods are rather similar, and (2) the first two methods lead to the same generalized matrix eigenproblem, while the third can be used for efficient estimation of the associated coefficient matrices.

Before we conclude, it is worth mentioning several issues that go beyond the scope of our current work. Firstly, while we have assumed that the dynamics are driven by the gradient of a potential function, we emphasize that the analysis in the current work can be directly applied to more general reversible processes (see [23] for details). Secondly, for non-reversible dynamics such as Langevin dynamics, it is not immediately clear how the results of the current work can be applied. However, the approach in [9] (Section 5.3) shows that the extended reversibility of Langevin dynamics may well allow for a generalization of our results. Thirdly, for the numerical algorithms briefly outlined in Section 5, both the numerical analysis and their application to more complicated systems need further investigation. Lastly, both the analysis and the algorithms in our current work depend on the choice of the reaction coordinate function. Different choices lead to different approximation qualities for the eigenvalues/eigenfunctions of the system [21,23,37]. Algorithmic identification of reaction coordinate functions for high-dimensional systems is a challenging problem and has attracted considerable attention; most approaches use machine learning techniques [38], while the relation between identification and effective dynamics has only recently been explored [39]. All of these issues are topics of ongoing research.

Acknowledgments

This research has been funded by Deutsche Forschungsgemeinschaft (DFG) through grant CRC 1114.

Author Contributions

Christof Schütte conceived and designed the research; Wei Zhang and Christof Schütte developed the basic theory; Wei Zhang performed the numerical experiments; Wei Zhang and Christof Schütte wrote the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Schütte, C.; Fischer, A.; Huisinga, W.; Deuflhard, P. A direct approach to conformational dynamics based on hybrid Monte Carlo. J. Comput. Phys. 1999, 151, 146–168.

- Pande, V.S.; Beauchamp, K.; Bowman, G.R. Everything you wanted to know about Markov state models but were afraid to ask. Methods 2010, 52, 99–105.

- Pérez-Hernández, G.; Paul, F.; Giorgino, T.; De Fabritiis, G.; Noé, F. Identification of slow molecular order parameters for Markov model construction. J. Chem. Phys. 2013, 139, 015102.

- Nüske, F.; Schneider, R.; Vitalini, F.; Noé, F. Variational tensor approach for approximating the rare-event kinetics of macromolecular systems. J. Chem. Phys. 2016, 144, 054105.

- Noé, F.; Schütte, C.; Vanden-Eijnden, E.; Reich, L.; Weikl, T.R. Constructing the full ensemble of folding pathways from short off-equilibrium simulations. Proc. Natl. Acad. Sci. USA 2009, 106, 19011–19016.

- Bowman, G.R.; Pande, V.S.; Noé, F. (Eds.) An Introduction to Markov State Models and Their Application to Long Timescale Molecular Simulation; Advances in Experimental Medicine and Biology, Volume 797; Springer: Dordrecht, The Netherlands, 2014.

- Noé, F.; Nüske, F. A variational approach to modeling slow processes in stochastic dynamical systems. Multiscale Model. Simul. 2013, 11, 635–655.

- Nüske, F.; Keller, B.G.; Pérez-Hernández, G.; Mey, A.; Noé, F. Variational approach to molecular kinetics. J. Chem. Theory Comput. 2014, 10, 1739–1752.

- Schütte, C.; Sarich, M. Metastability and Markov State Models in Molecular Dynamics: Modeling, Analysis, Algorithmic Approaches; Courant Lecture Notes; American Mathematical Society/Courant Institute of Mathematical Science: New York, NY, USA, 2014.

- Schütte, C.; Noé, F.; Lu, J.; Sarich, M.; Vanden-Eijnden, E. Markov state models based on milestoning. J. Chem. Phys. 2011, 134, 204105.

- Torrie, G.M.; Valleau, J.P. Nonphysical sampling distributions in Monte Carlo free-energy estimation: Umbrella sampling. J. Comput. Phys. 1977, 23, 187–199.

- Kumar, S.; Rosenberg, J.M.; Bouzida, D.; Swendsen, R.H.; Kollman, P.A. The weighted histogram analysis method for free-energy calculations on biomolecules. I. The method. J. Comput. Chem. 1992, 13, 1011–1021.

- Laio, A.; Parrinello, M. Escaping free-energy minima. Proc. Natl. Acad. Sci. USA 2002, 99, 12562–12566.

- Laio, A.; Gervasio, F.L. Metadynamics: A method to simulate rare events and reconstruct the free energy in biophysics, chemistry and material science. Rep. Prog. Phys. 2008, 71, 126601.

- Ciccotti, G.; Kapral, R.; Vanden-Eijnden, E. Blue moon sampling, vectorial reaction coordinates, and unbiased constrained dynamics. ChemPhysChem 2005, 6, 1809–1814.

- Darve, E.; Rodríguez-Gómez, D.; Pohorille, A. Adaptive biasing force method for scalar and vector free energy calculations. J. Chem. Phys. 2008, 128, 144120.

- Maragliano, L.; Vanden-Eijnden, E. A temperature accelerated method for sampling free energy and determining reaction pathways in rare events simulations. Chem. Phys. Lett. 2006, 426, 168–175.

- Faradjian, A.K.; Elber, R. Computing time scales from reaction coordinates by milestoning. J. Chem. Phys. 2004, 120, 10880–10889.

- Moroni, D.; van Erp, T.; Bolhuis, P. Investigating rare events by transition interface sampling. Physica A 2004, 340, 395–401.

- Becker, N.B.; Allen, R.J.; ten Wolde, P.R. Non-stationary forward flux sampling. J. Chem. Phys. 2012, 136, 174118.

- Legoll, F.; Lelièvre, T. Effective dynamics using conditional expectations. Nonlinearity 2010, 23, 2131–2163.

- Froyland, G.; Gottwald, G.A.; Hammerlindl, A. A computational method to extract macroscopic variables and their dynamics in multiscale systems. SIAM J. Appl. Dyn. Syst. 2014, 13, 1816–1846.

- Zhang, W.; Hartmann, C.; Schütte, C. Effective dynamics along given reaction coordinates, and reaction rate theory. Faraday Discuss. 2016, 195, 365–394.

- Kevrekidis, I.G.; Gear, C.W.; Hummer, G. Equation-free: The computer-aided analysis of complex multiscale systems. AIChE J. 2004, 50, 1346–1355.

- Kevrekidis, I.G.; Samaey, G. Equation-free multiscale computation: Algorithms and applications. Annu. Rev. Phys. Chem. 2009, 60, 321–344.

- Kevrekidis, I.G.; Gear, C.W.; Hyman, J.M.; Kevrekidis, P.G.; Runborg, O.; Theodoropoulos, C. Equation-free, coarse-grained multiscale computation: Enabling microscopic simulators to perform system-level analysis. Commun. Math. Sci. 2003, 1, 715–762.

- Prinz, J.H.; Wu, H.; Sarich, M.; Keller, B.; Senne, M.; Held, M.; Chodera, J.D.; Schütte, C.; Noé, F. Markov models of molecular kinetics: Generation and validation. J. Chem. Phys. 2011, 134, 174105.

- Djurdjevac, N.; Sarich, M.; Schütte, C. Estimating the eigenvalue error of Markov state models. Multiscale Model. Simul. 2012, 10, 61–81.

- Sarich, M.; Noé, F.; Schütte, C. On the approximation quality of Markov state models. Multiscale Model. Simul. 2010, 8, 1154–1177.

- Sarich, M.; Schütte, C. Approximating selected non-dominant timescales by Markov state models. Commun. Math. Sci. 2012, 10, 1001–1013.

- Mattingly, J.C.; Stuart, A.M.; Higham, D.J. Ergodicity for SDEs and approximations: locally Lipschitz vector fields and degenerate noise. Stoch. Proc. Appl. 2002, 101, 185–232.

- Schütte, C.; Huisinga, W.; Deuflhard, P. Transfer operator approach to conformational dynamics in biomolecular systems. In Ergodic Theory, Analysis, and Efficient Simulation of Dynamical Systems; Fiedler, B., Ed.; Springer: Berlin/Heidelberg, Germany, 2001; pp. 191–223.

- Gyöngy, I. Mimicking the one-dimensional marginal distributions of processes having an Ito differential. Probab. Theory Relat. Fields 1986, 71, 501–516.

- Ciccotti, G.; Lelièvre, T.; Vanden-Eijnden, E. Projection of diffusions on submanifolds: Application to mean force computation. Commun. Pure Appl. Math. 2008, 61, 371–408.

- Lelièvre, T.; Rousset, M.; Stoltz, G. Langevin dynamics with constraints and computation of free energy differences. Math. Comput. 2012, 81, 2071–2125.

- Hernandez, V.; Roman, J.E.; Vidal, V. SLEPc: A scalable and flexible toolkit for the solution of eigenvalue problems. ACM Trans. Math. Softw. 2005, 31, 351–362.

- Hartmann, C.; Schütte, C.; Zhang, W. Model reduction algorithms for optimal control and importance sampling of diffusions. Nonlinearity 2016, 29, 2298–2326.

- Rohrdanz, M.A.; Zheng, W.; Maggioni, M.; Clementi, C. Determination of reaction coordinates via locally scaled diffusion map. J. Chem. Phys. 2011, 134, 124116.

- Bittracher, A.; Koltai, P.; Klus, S.; Banisch, R.; Dellnitz, M.; Schütte, C. Transition manifolds of complex metastable systems: Theory and data-driven computation of effective dynamics. J. Nonlinear Sci. 2017, submitted.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).