Complex Correntropy with Variable Center: Definition, Properties, and Application to Adaptive Filtering

Abstract

1. Introduction

2. Complex Correntropy with Variable Center
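A minimal sketch of a sample estimator follows. It assumes the definition extends the complex correntropy of Guimarães et al. by shifting the difference of the two complex variables by a complex center $c$: $V_{\sigma,c}(X,Y)=E[G_\sigma(X-Y-c)]$, with the complex Gaussian kernel $G_\sigma(z)=\frac{1}{2\pi\sigma^2}\exp\left(-\frac{|z|^2}{2\sigma^2}\right)$. The function names and sample values are hypothetical illustrations, not taken from the paper.

```python
import math
from typing import Sequence


def complex_gaussian_kernel(z: complex, sigma: float) -> float:
    """Complex Gaussian kernel G_sigma(z) = exp(-|z|^2 / (2 sigma^2)) / (2 pi sigma^2)."""
    return math.exp(-abs(z) ** 2 / (2.0 * sigma ** 2)) / (2.0 * math.pi * sigma ** 2)


def complex_correntropy_vc(x: Sequence[complex], y: Sequence[complex],
                           center: complex, sigma: float) -> float:
    """Sample estimate of E[G_sigma(X - Y - center)] (assumed form of the definition)."""
    if len(x) != len(y) or not x:
        raise ValueError("x and y must be non-empty and of equal length")
    # Average the kernel over the shifted differences x_i - y_i - center.
    total = sum(complex_gaussian_kernel(xi - yi - center, sigma)
                for xi, yi in zip(x, y))
    return total / len(x)


if __name__ == "__main__":
    x = [1 + 1j, 2 - 0.5j]
    y = [0.5 + 1j, 1.5 - 0.5j]  # x - y = 0.5 for every sample
    # Center placed where x - y concentrates: the kernel's maximum, 1 / (2 pi).
    print(complex_correntropy_vc(x, y, center=0.5, sigma=1.0))
    # Center at zero (ordinary complex correntropy): a smaller value.
    print(complex_correntropy_vc(x, y, center=0.0, sigma=1.0))
```

Because the kernel peaks at zero, the estimate is largest when the center sits where $X-Y$ concentrates; with $c=0$ it reduces to ordinary complex correntropy under the assumed definition.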

3. MCCC-VC Algorithm

3.1. Cost Function

3.2. Gradient Descent Algorithm Based on MCCC-VC
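A minimal sketch of a stochastic-gradient update for MCCC-VC follows. It assumes the error convention $e(n)=d(n)-\mathbf{w}^H(n)\mathbf{x}(n)$ and the instantaneous cost $\exp\left(-\frac{|e(n)-c|^2}{2\sigma^2}\right)$. Taking the Wirtinger derivative with respect to $\mathbf{w}^*$ (which gives $\partial e/\partial\mathbf{w}^*=-\mathbf{x}$) and absorbing the constant $1/(2\sigma^2)$ into the step size $\mu$ yields $\mathbf{w}(n+1)=\mathbf{w}(n)+\mu\exp\left(-\frac{|e(n)-c|^2}{2\sigma^2}\right)(e(n)-c)^*\mathbf{x}(n)$. This recursion is derived here under those assumptions and is not necessarily the paper's exact equation; the functions `mccc_vc_step` and `identify` and the impulsive-noise setup are hypothetical.

```python
import math
import random
from typing import List


def mccc_vc_step(w: List[complex], x: List[complex], d: complex,
                 mu: float, sigma: float, center: complex) -> List[complex]:
    """One stochastic-gradient-ascent step on exp(-|e - c|^2 / (2 sigma^2)).

    Assumes e = d - w^H x, so the Wirtinger gradient with respect to conj(w)
    is proportional to G(e - c) * conj(e - c) * x.
    """
    y = sum(wi.conjugate() * xi for wi, xi in zip(w, x))      # filter output w^H x
    shifted = (d - y) - center                                # error minus center
    weight = math.exp(-abs(shifted) ** 2 / (2.0 * sigma ** 2))  # kernel factor in (0, 1]
    return [wi + mu * weight * shifted.conjugate() * xi for wi, xi in zip(w, x)]


def identify(w_true: List[complex], n_iter: int = 5000, mu: float = 0.02,
             sigma: float = 1.0, center: complex = 0j, seed: int = 0) -> List[complex]:
    """Toy system identification with impulsive noise (hypothetical setup)."""
    rng = random.Random(seed)
    m = len(w_true)
    w = [0j] * m
    for _ in range(n_iter):
        # Circular complex Gaussian input with unit variance per tap.
        x = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) / math.sqrt(2) for _ in range(m)]
        noise = complex(rng.gauss(0, 0.05), rng.gauss(0, 0.05))
        if rng.random() < 0.05:  # 5% large impulsive outliers
            noise += complex(rng.gauss(0, 20), rng.gauss(0, 20))
        d = sum(wt.conjugate() * xi for wt, xi in zip(w_true, x)) + noise
        w = mccc_vc_step(w, x, d, mu, sigma, center)
    return w


if __name__ == "__main__":
    w_true = [1 + 0.5j, -0.3 + 0.2j, 0.1j, 0.4 + 0j]
    w_hat = identify(w_true)
    # Largest coefficient deviation after adaptation; expected to be small.
    print(max(abs(a - b) for a, b in zip(w_hat, w_true)))
```

The kernel factor shrinks the update toward zero for errors far from the center, which is what suppresses the impulsive outliers; with `center=0` the step reduces to an MCCC-style update.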

3.3. Optimization of the Parameters in MCCC-VC

3.3.1. Optimization Problem in MCCC-VC

3.3.2. Stochastic Gradient Descent Approach

3.4. Performance Analysis

3.4.1. Convergence Analysis

3.4.2. Steady-State Mean Square

- (1) is zero-mean distributed and independent of , and is circular.

- (2) is zero-mean and independent of .

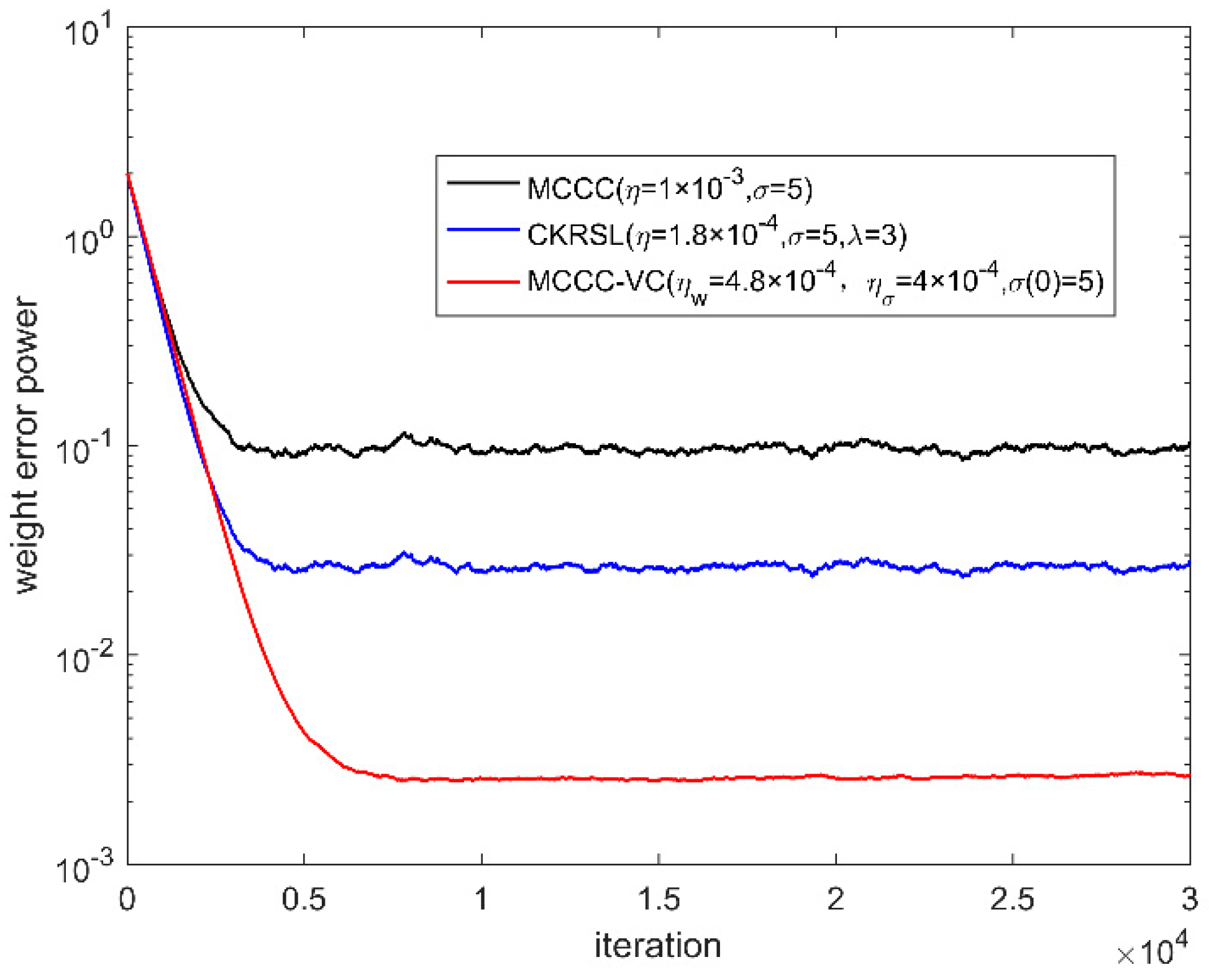

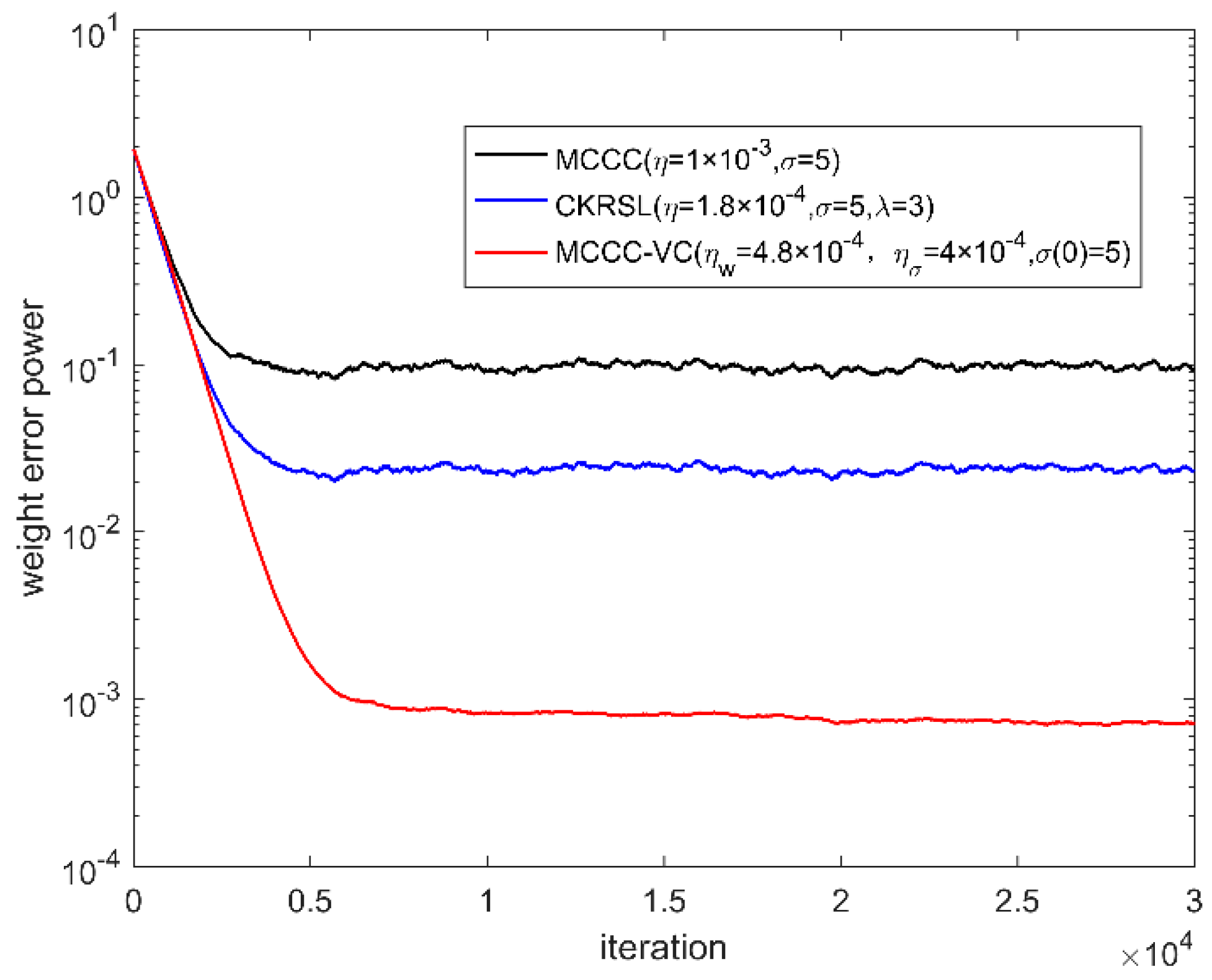

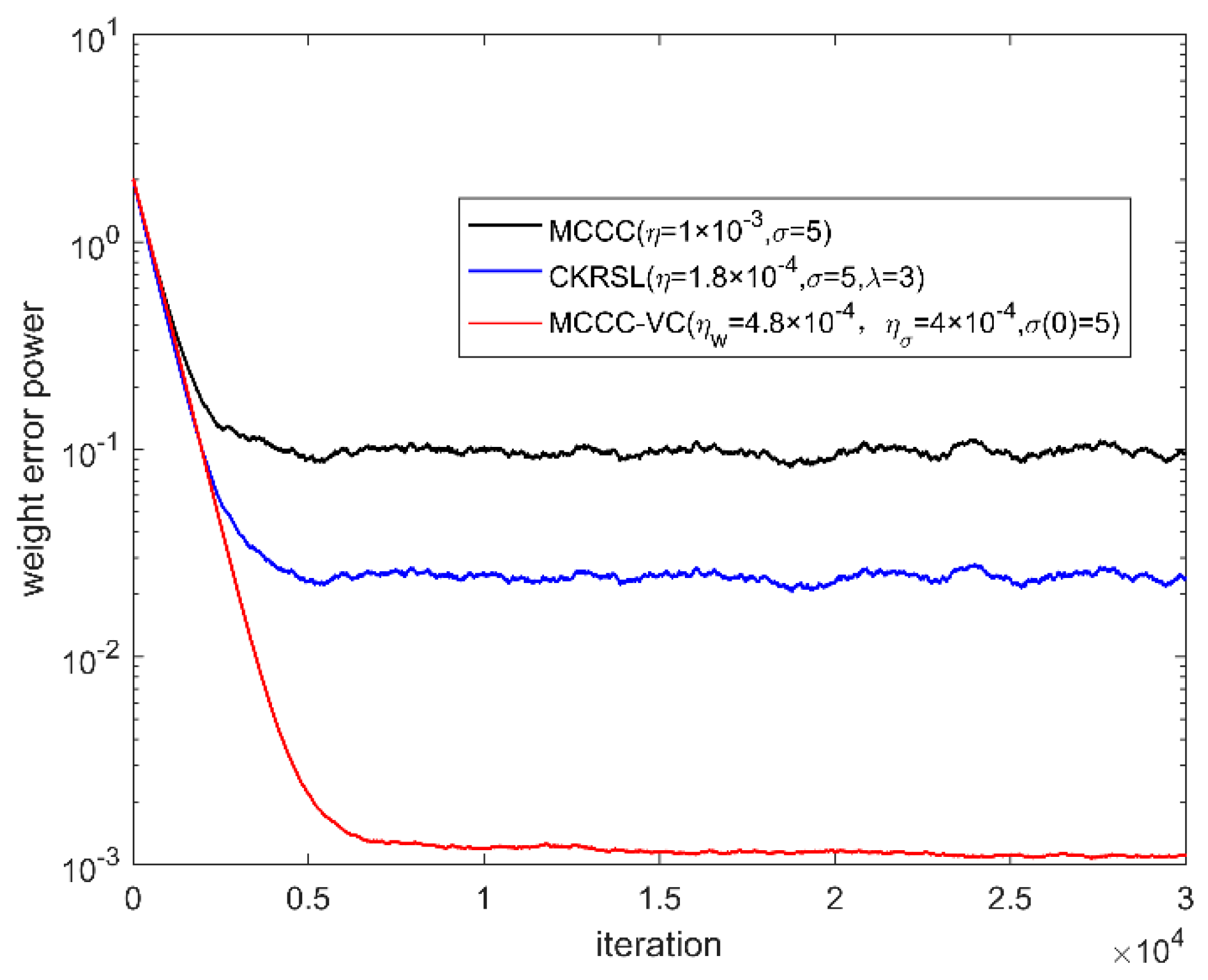

4. Simulation

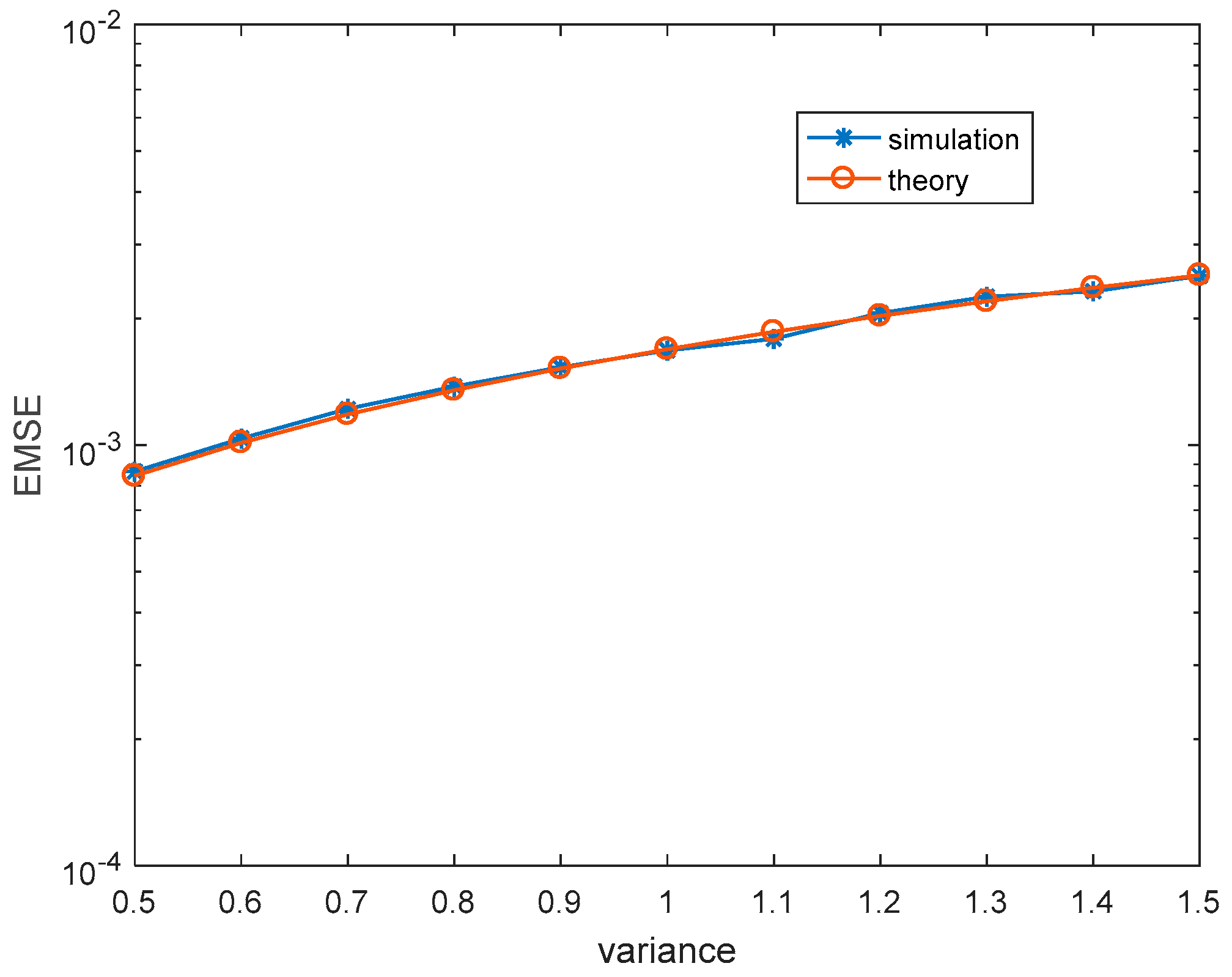

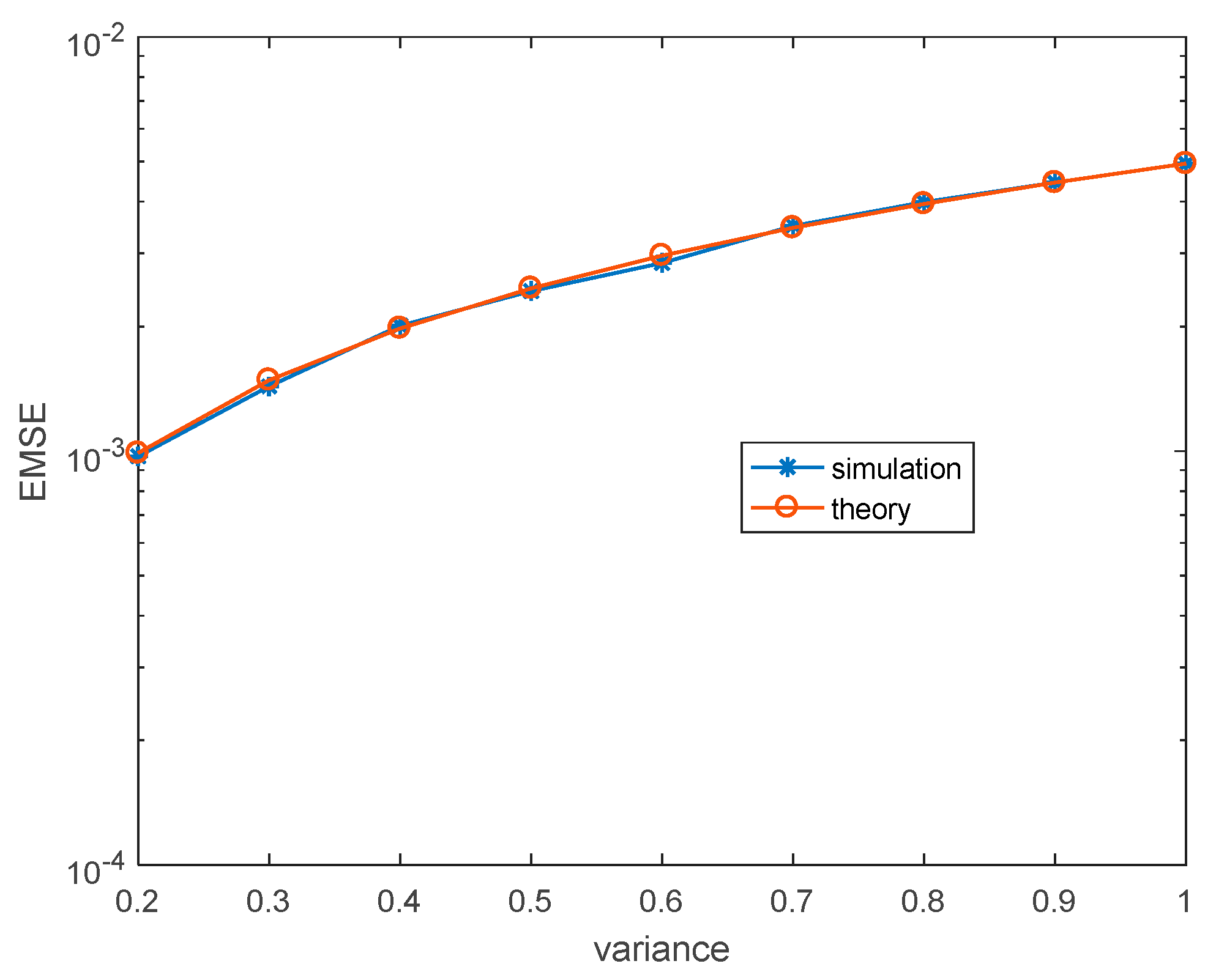

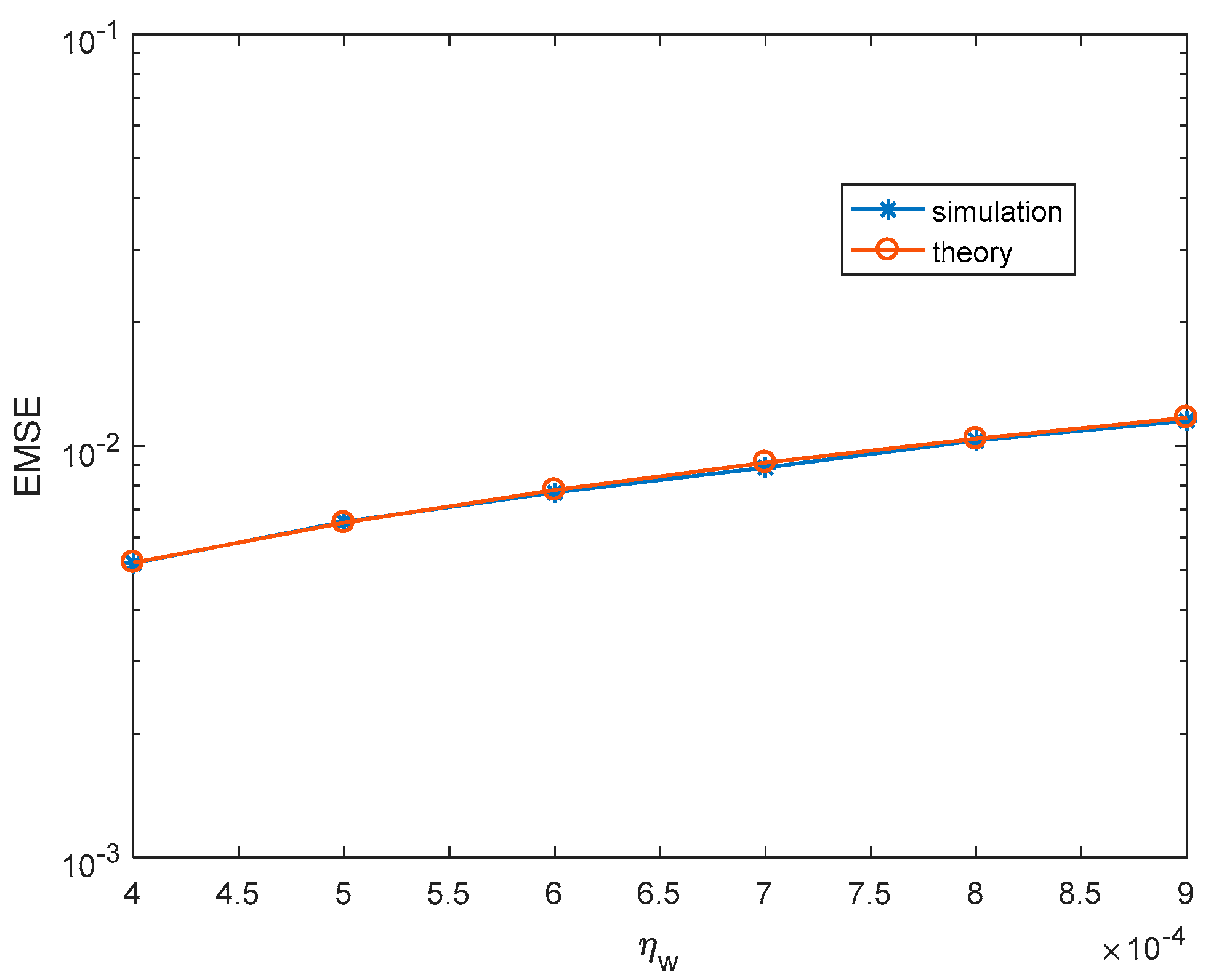

4.1. Steady-State Performance

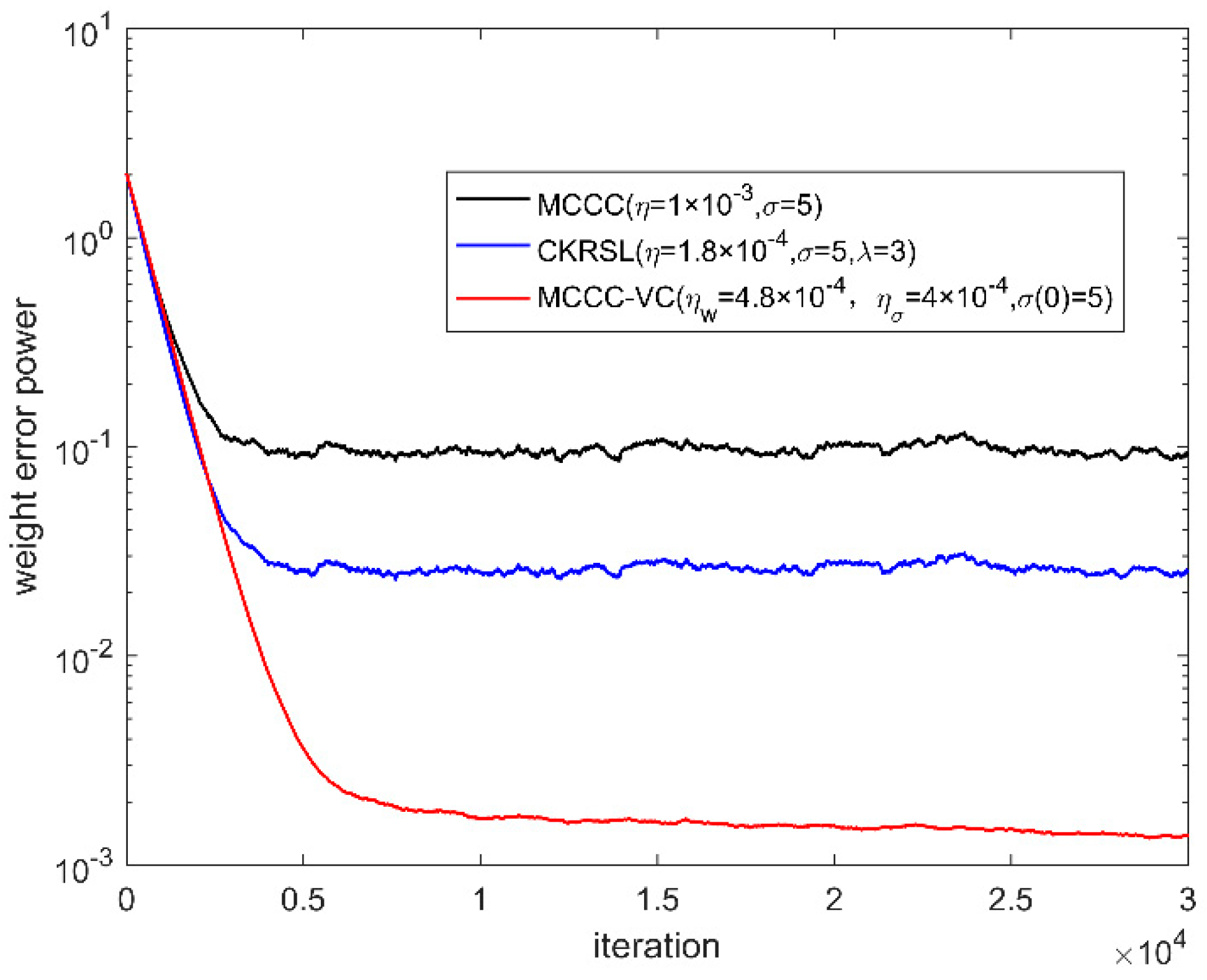

4.2. Performance Comparison

- (1) ;

- (2) ;

- (3) , with denoting the uniform distribution over ;

- (4) , , where .

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Algorithm | MCCC | MCKRSL | MCCC-VC |
|---|---|---|---|
| Parameters | , . | , , . | , , . |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Dong, F.; Qian, G.; Wang, S. Complex Correntropy with Variable Center: Definition, Properties, and Application to Adaptive Filtering. Entropy 2020, 22, 70. https://doi.org/10.3390/e22010070
