Discrete Information Dynamics with Confidence via the Computational Mechanics Bootstrap: Confidence Sets and Significance Tests for Information-Dynamic Measures

Abstract

1. Introduction

2. Methods

2.1. Notation and Conventions

2.2. Information Dynamics and Computational Mechanics

2.3. Model Inference and Model Selection

2.4. Information- and Computation-Theoretic Estimators from the Inferred ϵ-Machine

2.5. Confidence Distributions and Their Use for Inference

2.6. Bootstrapping Confidence Distributions from ϵ-Machines

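- Construct the candidate ϵ-machines from the observed time series using CSSR.

- Select the ϵ-machine that minimizes the BIC (7).

- Compute the estimate of the measure of interest from the selected ϵ-machine.

- For each bootstrap replicate:

  - (a) Generate a bootstrap time series from the selected ϵ-machine.

  - (b) Construct the bootstrap ϵ-machine from the bootstrap time series using CSSR.

  - (c) Compute the bootstrap estimate from the bootstrap ϵ-machine.

- Construct the confidence distribution from the bootstrap estimates.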
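The bootstrap loop listed above can be illustrated with a minimal Python sketch. It is not the implementation used in this article. Several things are assumptions made only to keep the example self-contained: a fixed-order Markov chain stands in for the CSSR-inferred ϵ-machine, the entropy rate is used as the measure of interest, each bootstrap series is refit at the selected order rather than re-running model selection, and the confidence distribution is taken to be the empirical distribution function of the bootstrap estimates. All function names (`fit_markov`, `bic`, `entropy_rate`, `simulate`, `bootstrap_cd`) are hypothetical.

```python
import numpy as np

def fit_markov(x, order, start, k=2):
    """Context-by-symbol counts for an order-`order` Markov chain (stand-in for CSSR)."""
    counts = np.zeros((k ** order, k))
    for t in range(start, len(x)):
        ctx = 0
        for s in x[t - order:t]:          # encode the preceding `order` symbols
            ctx = ctx * k + int(s)
        counts[ctx, int(x[t])] += 1
    return counts

def _weighted_logs(counts, log):
    """Sum of counts * log(conditional probability), treating 0 * log(0) as 0."""
    row = counts.sum(axis=1, keepdims=True)
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(counts > 0, counts * log(counts / row), 0.0).sum()

def bic(counts):
    """BIC = -2 log-likelihood + (free parameters) * log(sample size)."""
    n_params = counts.shape[0] * (counts.shape[1] - 1)
    return -2.0 * _weighted_logs(counts, np.log) + n_params * np.log(counts.sum())

def entropy_rate(counts):
    """Plug-in entropy rate in bits: H[next symbol | context]."""
    return float(-_weighted_logs(counts, np.log2) / counts.sum())

def simulate(counts, order, n, rng):
    """Generate a length-n series from the fitted chain."""
    k = counts.shape[1]
    row = counts.sum(axis=1, keepdims=True)
    # Unseen contexts fall back to a uniform symbol distribution.
    probs = np.where(row > 0, counts / np.maximum(row, 1), 1.0 / k)
    cum = np.cumsum(probs, axis=1).tolist()
    u = rng.random(n).tolist()
    x = [int(s) for s in rng.integers(0, k, size=order)]
    ctx = 0
    for s in x:
        ctx = ctx * k + s
    for t in range(order, n):
        sym = 0
        while sym < k - 1 and u[t] >= cum[ctx][sym]:   # inverse-CDF draw
            sym += 1
        x.append(sym)
        ctx = (ctx * k + sym) % (k ** order)
    return np.array(x)

def bootstrap_cd(x, max_order=3, B=200, seed=0):
    rng = np.random.default_rng(seed)
    x = np.asarray(x)
    # Steps 1-2: fit candidate models on the same data points, select by BIC.
    fits = {L: fit_markov(x, L, max_order) for L in range(1, max_order + 1)}
    L_hat = min(fits, key=lambda L: bic(fits[L]))
    # Step 3: point estimate from the selected model.
    theta_hat = entropy_rate(fits[L_hat])
    # Step 4: simulate, refit, and re-estimate B times.
    boot = np.sort([
        entropy_rate(fit_markov(simulate(fits[L_hat], L_hat, len(x), rng),
                                L_hat, max_order))
        for _ in range(B)
    ])
    # Step 5: empirical distribution function of the bootstrap estimates.
    def cd(theta):
        return float(np.searchsorted(boot, theta, side="right")) / B
    return L_hat, theta_hat, boot, cd

# Usage on synthetic data from a first-order binary chain (illustrative only).
rng = np.random.default_rng(1)
x = simulate(np.array([[0.5, 0.5], [1.0, 0.0]]), 1, 1000, rng)
L_hat, theta_hat, boot, cd = bootstrap_cd(x)
lo, hi = np.quantile(boot, [0.025, 0.975])   # percentile-style 95% interval
```

Under these assumptions, `cd(theta)` returns the fraction of bootstrap estimates at or below `theta`, and intervals are read off as quantiles of `boot`. A bias-corrected construction would replace only the last step.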

3. Results

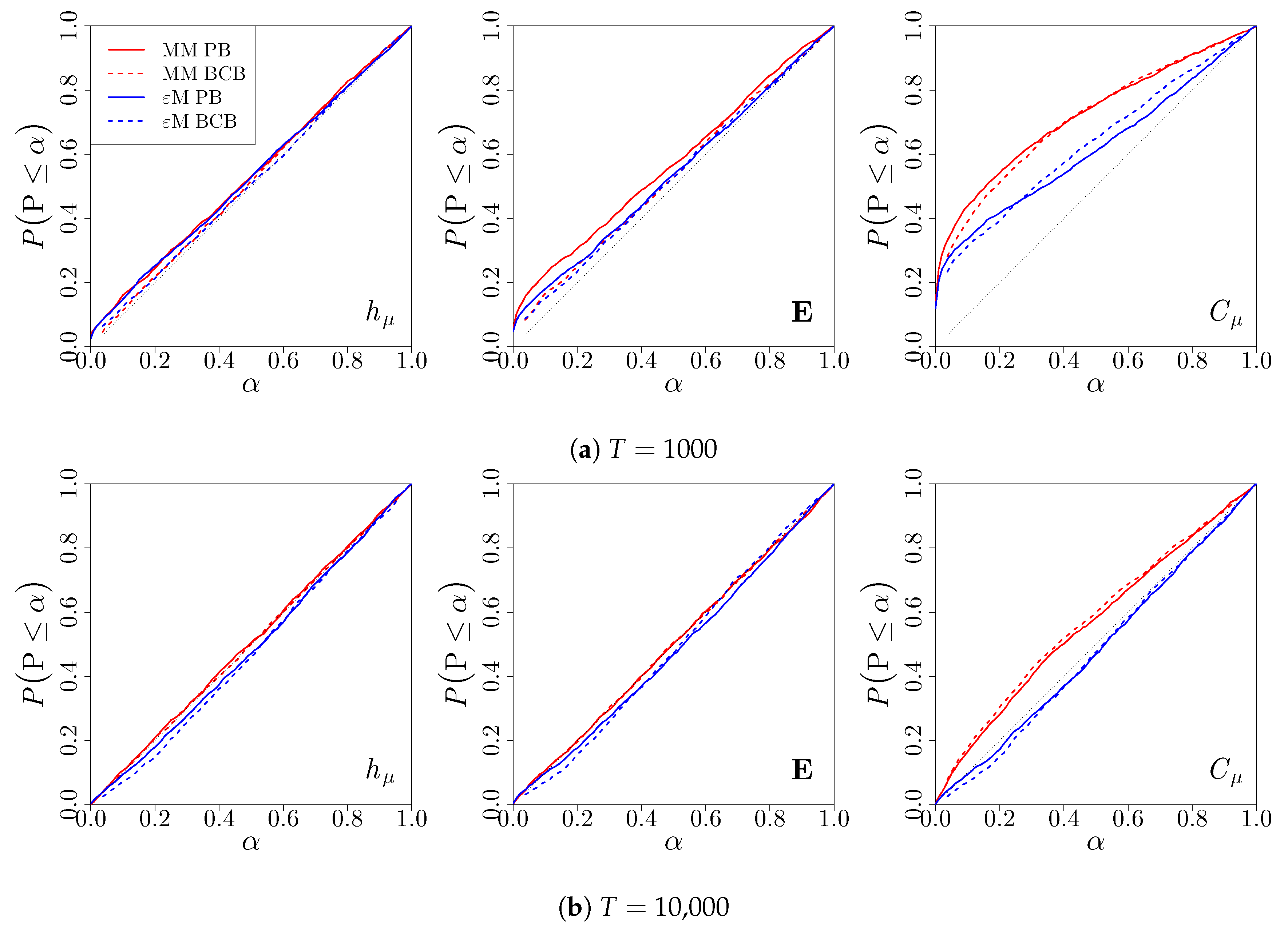

3.1. Simulation Study

3.1.1. Renewal Process

3.1.2. Alternating Renewal Process

3.1.3. Even Process

3.1.4. Simple Nonunifilar Source

3.2. Twitter Data

4. Discussion

5. Conclusions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CSSR | Causal State Splitting Reconstruction |

| Process | $h_\mu$ (Bits) | E (Bits) | $C_\mu$ (Bits) |
|---|---|---|---|
| 3-State Renewal | 0.08560820 | 0.06376713 | 0.21520538 |
| 4-State Alternating Renewal | 0.39456572 | 0.19801359 | 0.98714503 |
| Even | 2/3 | 0.91829583 | 0.91829583 |
| Simple Nonunifilar Source | 0.67786718 | 0.14723194 | 2.71146872 |
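The Even process row of the table above can be checked directly. The short Python sketch below assumes the standard two-state unifilar presentation of the Even process with a fair coin in the first state (this parametrization is an assumption, chosen because it reproduces the tabulated values), and reads the first and last numeric columns as the entropy rate and the statistical complexity. The names `T`, `emit`, and `entropy_bits` are hypothetical.

```python
import numpy as np

# State-to-state transition matrix: state 0 stays (emitting 0) or moves to
# state 1 (emitting 1) with probability 1/2 each; state 1 always returns to
# state 0 (emitting 1).
T = np.array([[0.5, 0.5],
              [1.0, 0.0]])

# Symbol distribution emitted from each state (columns: symbol 0, symbol 1).
emit = np.array([[0.5, 0.5],
                 [0.0, 1.0]])

def entropy_bits(p):
    """Shannon entropy in bits, ignoring zero-probability outcomes."""
    p = np.asarray(p, dtype=float)
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())

# Stationary state distribution: left eigenvector of T for eigenvalue 1.
evals, evecs = np.linalg.eig(T.T)
pi = np.real(evecs[:, np.argmin(np.abs(evals - 1.0))])
pi = pi / pi.sum()                      # (2/3, 1/3)

# For a unifilar presentation, the entropy rate is the state-averaged
# entropy of the emitted symbol.
h_mu = sum(pi[s] * entropy_bits(emit[s]) for s in range(len(pi)))

# Statistical complexity: entropy of the stationary state distribution.
C_mu = entropy_bits(pi)

print(h_mu, C_mu)
```

With these inputs the entropy rate works out to 2/3 bits and the state entropy to about 0.91829583 bits, in agreement with the Even row.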

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Darmon, D. Discrete Information Dynamics with Confidence via the Computational Mechanics Bootstrap: Confidence Sets and Significance Tests for Information-Dynamic Measures. Entropy 2020, 22, 782. https://doi.org/10.3390/e22070782
