The Natural Philosophy of Economic Information: Autonomous Agents and Physiosemiosis

Abstract

1. Introduction

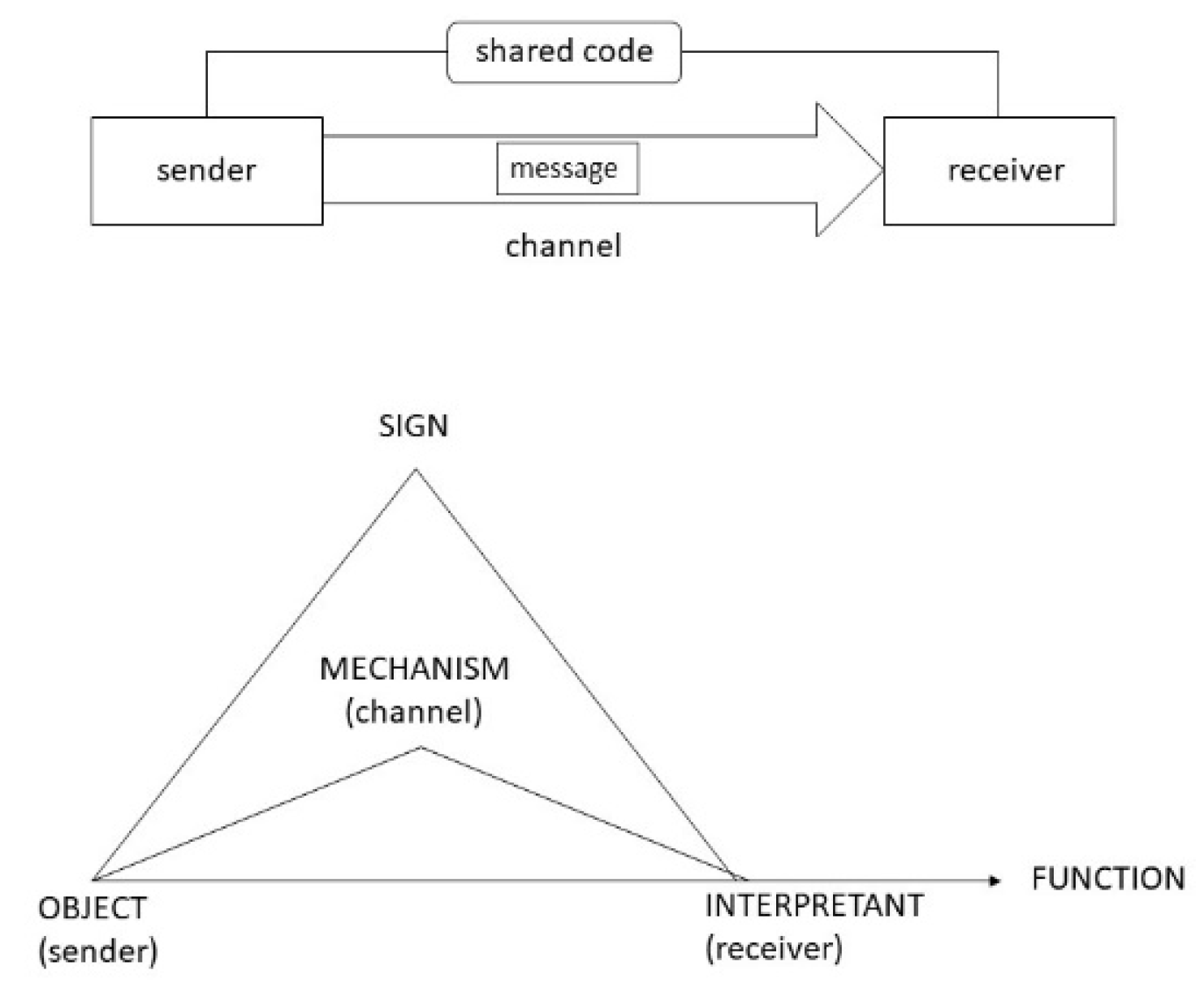

2. Basic Principles of Physiosemiotics

2.1. Shannon Information versus Semiotics

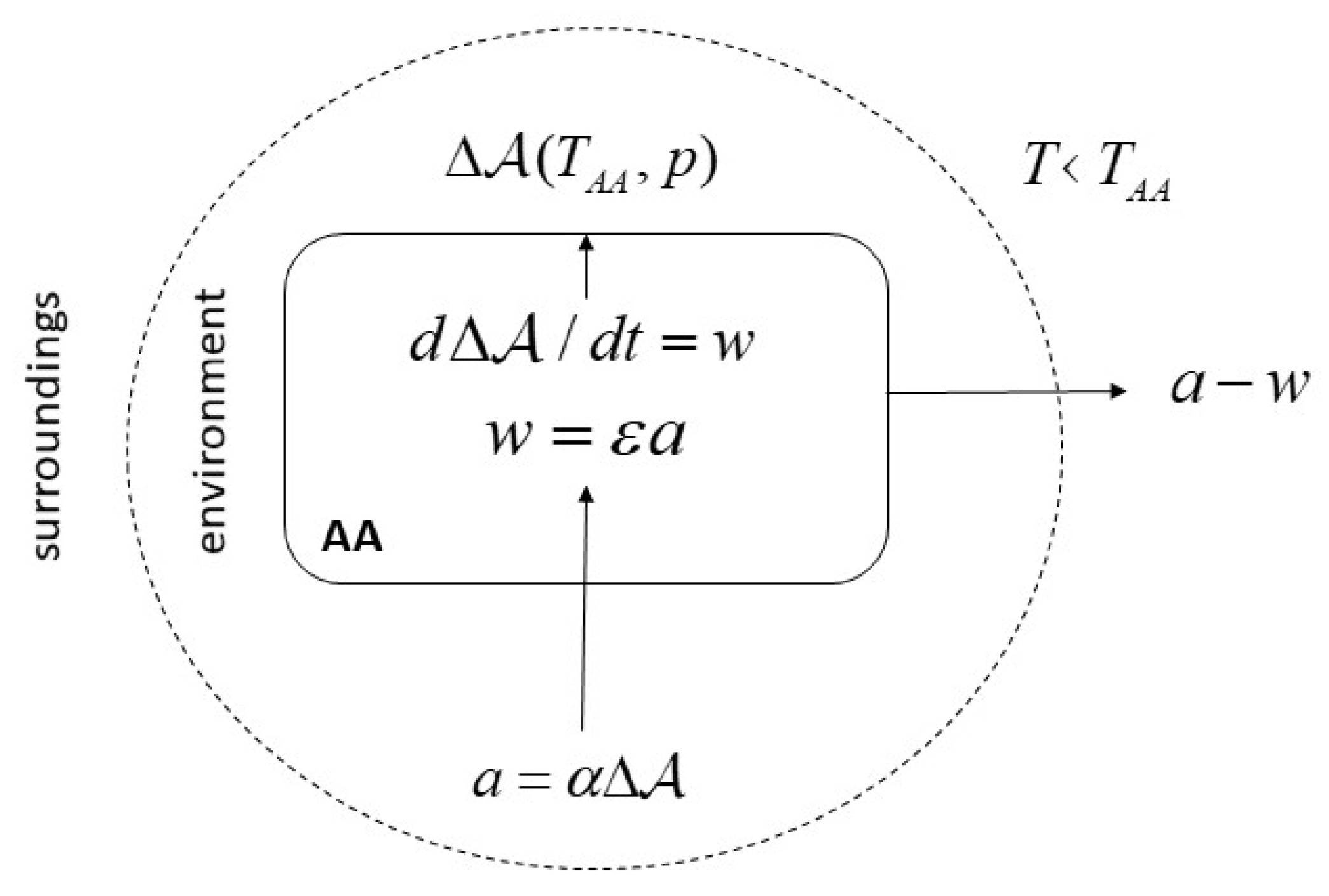

2.2. Autonomous Agents and the Physical Economy of Information

- An autonomous agent (AA) is an individual demarcated by physical boundaries between the environment and its internal systemic states. Environmental states are accessible only via “observations” (or “measurements”).

- The AA actively reproduces these boundaries and pursues the goal of sustaining its existence through time, which involves a distinction between “self” and “non-self” (e.g., the immune system).

- To achieve this goal, the AA operates a metabolism, i.e., it manages flows of material and energetic throughputs to generate work. Observation is a kind of work.

- These operations require the physical processing of the information generated by observations; this information is necessary for obtaining and processing the resources that the metabolism needs and, more generally, for the proper functioning of the AA.
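The four properties listed above can be illustrated with a toy simulation. The following Python sketch is not the author's formalism; it is a minimal illustration under stated assumptions. All names (`Environment`, `AutonomousAgent`, `run`) and all numerical values (energy stock, observation cost, upkeep cost, noise level, harvest threshold) are hypothetical. The sketch shows only the bookkeeping: the agent reaches the environment solely through a noisy measurement, the measurement itself consumes energy because observation is work, and the agent persists only while its energy stock stays positive.

```python
import random
from dataclasses import dataclass


@dataclass
class Environment:
    """Hypothetical environment with a resource stock the agent cannot read directly."""
    resource: float = 10.0

    def measure(self, noise: float, rng: random.Random) -> float:
        # An observation is a noisy measurement of the hidden environmental state.
        return self.resource + rng.gauss(0.0, noise)


@dataclass
class AutonomousAgent:
    """Toy AA: internal energy stock behind a boundary (all values are assumptions)."""
    energy: float = 5.0            # internal systemic state
    observation_cost: float = 0.2  # observing is work, so it consumes energy
    upkeep_cost: float = 0.5       # work spent per step on reproducing the boundary

    def alive(self) -> bool:
        return self.energy > 0.0

    def step(self, env: Environment, rng: random.Random) -> None:
        # 1. Observe: pay the cost first, then obtain a noisy estimate.
        self.energy -= self.observation_cost
        estimate = env.measure(noise=1.0, rng=rng)
        # 2. Use the information: try to harvest only if resources seem available.
        if estimate > 1.0:
            intake = min(1.0, env.resource)  # cannot take more than exists
            env.resource -= intake
            self.energy += intake            # metabolism turns intake into usable energy
        # 3. Maintain the boundary between self and non-self.
        self.energy -= self.upkeep_cost


def run(agent: AutonomousAgent, env: Environment, max_steps: int, seed: int = 0) -> int:
    """Step the agent until it dies or max_steps is reached; return steps taken."""
    rng = random.Random(seed)
    steps = 0
    while agent.alive() and steps < max_steps:
        agent.step(env, rng)
        steps += 1
    return steps


if __name__ == "__main__":
    starving = AutonomousAgent()
    print("steps in an empty environment:", run(starving, Environment(resource=0.0), 100))
    thriving = AutonomousAgent()
    print("steps in a rich environment:", run(thriving, Environment(resource=100.0), 10))
```

In an empty environment the agent still pays for observing and for upkeep, so its energy stock runs down; in a rich environment the intake exceeds both costs. The design choice to charge the observation cost before the measurement is meant to mirror the statement that observation is itself a kind of work.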

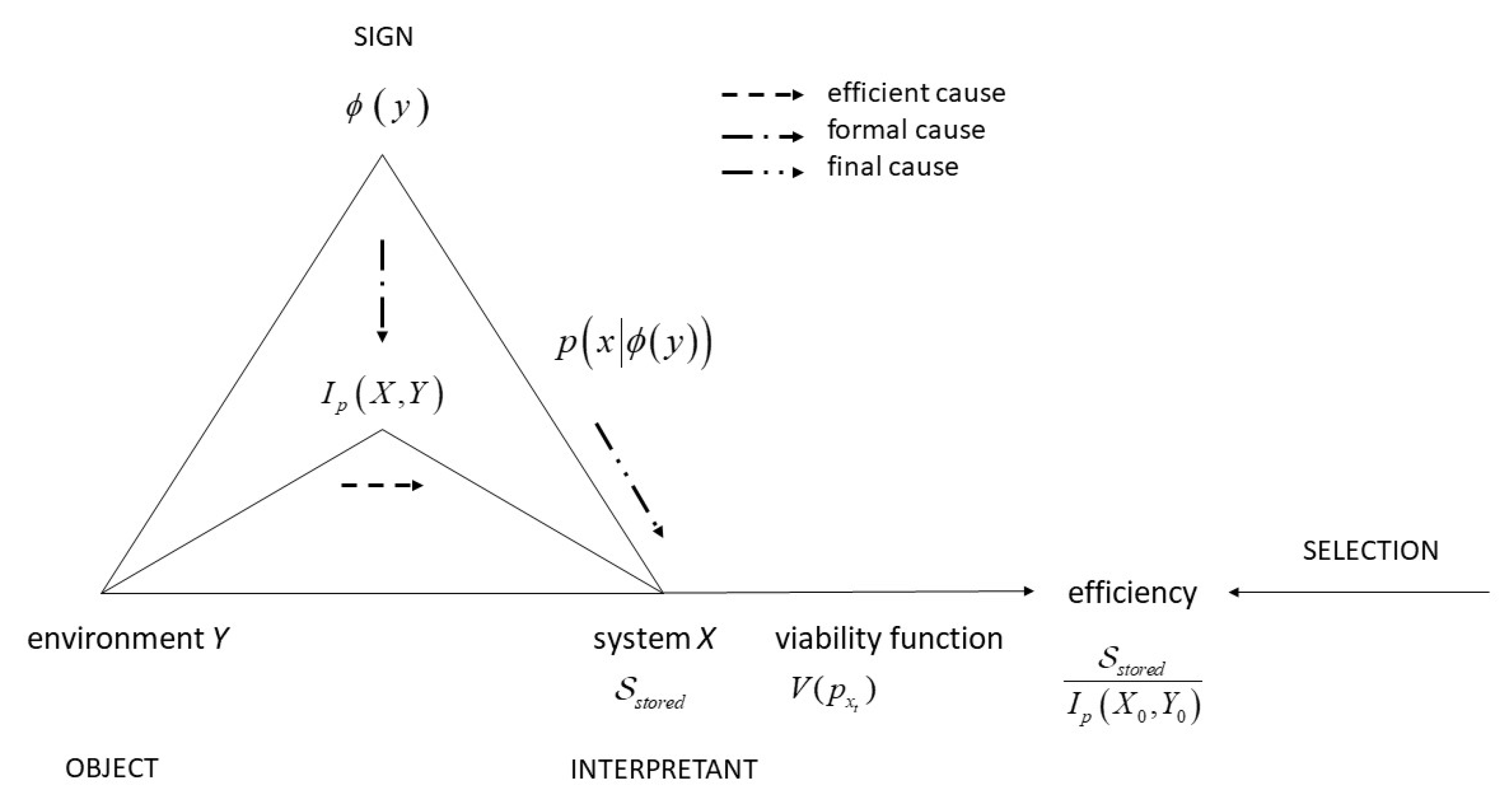

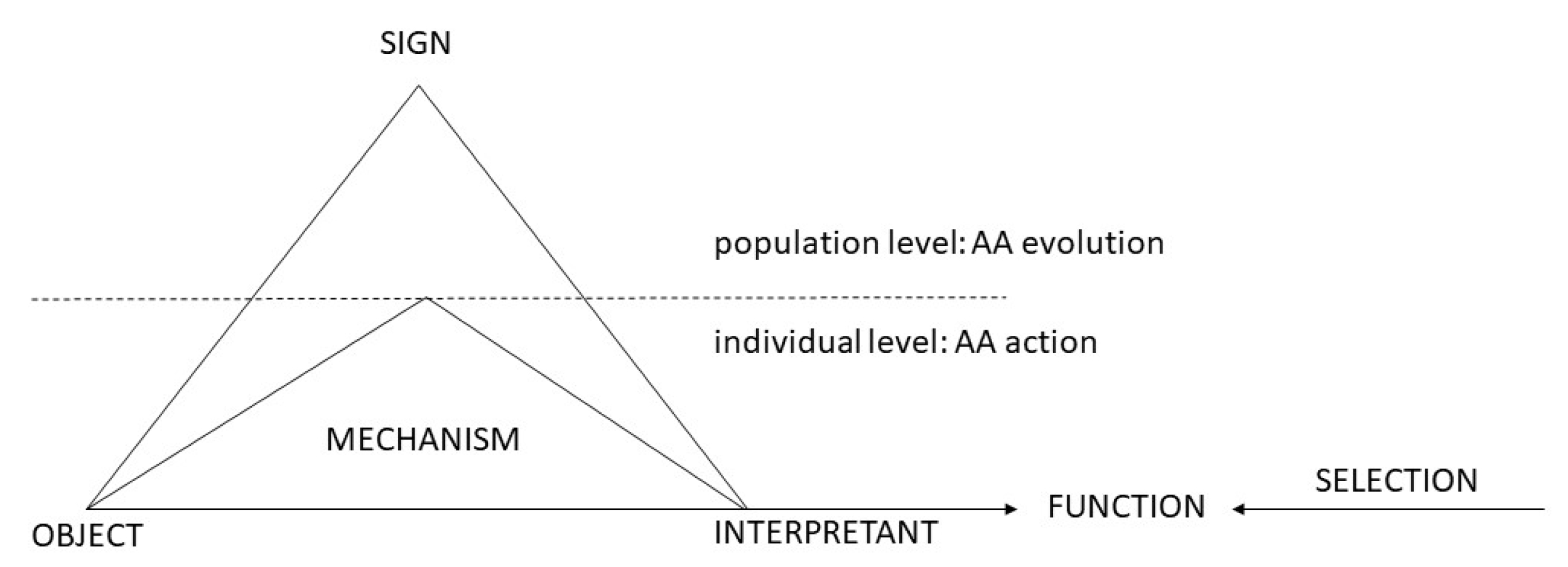

3. The Physiosemiotic Approach to Semantic Information

3.1. Semantic Information and Thermodynamics

3.2. The Physiosemiotic Triad

4. Consequences for Theorizing the Economic Agent: The Case of Neuroeconomics

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Herrmann-Pillath, C. The Natural Philosophy of Economic Information: Autonomous Agents and Physiosemiosis. Entropy 2021, 23, 277. https://doi.org/10.3390/e23030277
