Abstract

The asymmetric skew divergence smooths one of the distributions by mixing it, to a degree determined by the parameter , with the other distribution. Such divergence is an approximation of the KL divergence that does not require the target distribution to be absolutely continuous with respect to the source distribution. In this paper, an information geometric generalization of the skew divergence called the -geodesical skew divergence is proposed, and its properties are studied.

1. Introduction

Let be a measure space where denotes the sample space, the -algebra of measurable events, and a positive measure. The set of the strictly positive probability measure is defined as

and the set of nonnegative probability measure is defined as

Then a number of divergences that appear in statistics and information theory [1,2] are introduced.

Definition 1

(Kullback–Leibler divergence [3]). The Kullback–Leibler divergence or KL-divergence is defined between two Radon–Nikodym densities p and q of μ-absolutely continuous probability measures by

KL-divergence is a measure of the difference between two probability distributions in statistics and information theory [4,5,6,7]. This is also called the relative entropy and is known not to satisfy the axiom of distance. Because the KL-divergence is asymmetric, several symmetrizations have been proposed in the literature [8,9,10].

Definition 2

(Jensen–Shannon divergence [8]). The Jensen–Shannon divergence or JS-divergence is defined between two Radon–Nikodym densities p and q of μ-absolutely continuous probability measures by

The JS-divergence is a symmetrized and smoothed version of the KL-divergence, and it is bounded as

This property contrasts with the fact that KL-divergence is unbounded.

Definition 3

(Jeffreys divergence [11]). The Jeffreys divergence is defined between two Radon–Nikodym densities p and q of μ-absolutely continuous probability measures by

Such symmetrized KL-divergences have appeared in various pieces of literature [12,13,14,15,16,17,18].

For continuous distributions, the KL-divergence is known to have computational difficulty. To be more specific, if q takes a small value relative to p, the value of may diverge to infinity. The simplest idea to avoid this is to use very small and modify as follows:

However, such an extension is unnatural in the sense that no longer satisfies the condition for a probability measure: . As a more natural way to stabilize KL-divergence, the following skew divergences have been proposed:

Definition 4

(Skew divergence [8,19]). The skew divergence is defined between two Radon–Nikodym densities p and q of μ-absolutely continuous probability measures by

where .

Skew divergences have been experimentally shown to perform better in applications such as natural language processing [20,21], image recognition [22,23] and graph analysis [24,25]. In addition, there is research on quantum generalization of skew divergence [26].

The main contributions of this paper are summarized as follows:

- Several symmetrized divergences or skew divergences are generalized from an information geometry perspective.

- It is proved that the natural skew divergence for the exponential family is equivalent to the scaled KL-divergence.

- Several properties of geometrically generalized skew divergence are proved. Specifically, the functional space associated with the proposed divergence is shown to be a Banach space.

Implementation of the proposed divergence is available on GitHub (https://github.com/nocotan/geodesical_skew_divergence (accessed on 3 April 2021)).

2. α-Geodesical Skew Divergence

The skew divergence is generalized based on the following function.

Definition 5

(f-interpolation). For any , and , f-interpolation is defined as

where

is the function that defines the f-mean [27].

The f-mean function satisfies

It is easy to see that this family includes various known weighted means including the e-mixture and m-mixture for in the literature of information geometry [28]:

The inverse function is convex when , and concave when . It is worth noting that the f-interpolation is a special case of the Kolmogorov–Nagumo average [29,30,31] when is restricted in the interval .

In order to consider the geometric meaning of this function, the notion of the statistical manifold is introduced.

2.1. Statistical Manifold

Let

be a family of probability distribution on , where each element is parameterized by n real-valued variables . The set is called a statistical model and is a subset of . We also denote as a statistical model equipped with the Riemannian metric . In particular, let be the Fisher–Rao metric, which is the Riemannian metric induced from the Fisher information matrix [32].

In the rest of this paper, the abbreviations

are used.

Definition 6

(Christoffel symbols). Let be a Riemannian metric, particularly the Fisher information matrix, then the Christoffel symbols are given by

Definition 7

(Levi-Civita connection). Let g be a Fisher–Riemannian metric on which is a 2-covariant tensor defined locally by

where and are vector fields in the 0-representation on . Then, its associated Levi-Civita connection is defined by

The fact that is a metrical connection can be written locally as

It is worth noting that the superscript of corresponds to a parameter of the connection. Based on the above definitions, several connections parameterized by the parameter are introduced. The case corresponds to the Levi-Civita connection induced by the Fisher metric.

Definition 8

(-connection). Let g be the Fisher-Riemannian metric on , which is a 2-covariant tensor. Then, the -connection is defined by

It can also be expressed equivalently by explicitly writing as the Christoffel coefficients

Definition 9

(-connection). Let g be the Fisher–Riemannian metric on , which is a 2-covariant tensor. Then, the -connection is defined by

In the following, the ∇-flatness is considered with respect to the corresponding coordinates system. More details can be found in [28].

Proposition 1.

The exponential family is -flat.

Proposition 2.

The exponential family is -flat if and only if it is -flat.

Proposition 3.

The mixture family is -flat.

Proposition 4.

The mixture family is -flat if and only if it is -flat.

Proposition 5.

The relation between the foregoing three connections is given by

Proof.

It suffices to show

From the definitions of and ,

which proves the proposition. □

The connections and are two special connections on with respect to the mixture family and the exponential family, respectively. Moreover, they are related by the duality condition, and the following 1-parameter family of connections is defined.

Definition 10

(-connection). For , the -connection on the statistical model is defined as

Proposition 6.

The components can be written as

The -coordinate system associated with the -connection is endowed with the -geodesic, which is a straight line on the corresponding coordinates system. Then, we introduce some relevant notions.

Definition 11

(α-divergence [33]). Let α be a real parameter. The α-divergence between two probability vectors and is defined as

The KL-divergence, which is a special case with , induces the linear connection as follows.

Proposition 7.

The diagonal part of the third mixed derivatives of the KL-divergence is the negative of the Christoffel symbol:

Proof.

The second derivative in the argument is given by

and differentiating it with respect to yields

Then, considering the diagonal part, one yields

□

More generally, the -divergence with induces the -connection.

Definition 12

(α-representation [34]). For some positive measure , the coordinate system derived from the α-divergence is

and is called the α-representation of a positive measure .

Definition 13

(α-geodesic [28]). The α-geodesic connecting two probability vectors and is defined as

where is determined as

It is known that the appropriate reparameterizations for the parameter t are necessary for a rigorous discussion in the space of probability measures [35,36]. However, as mentioned in the literature [35], an explicit expression for the reparametrizations and is unknown. A similar discussion has been made in the derivation of the -path [37], where it is mentioned that the normalizing factor is unknown in general. Furthermore, the f-mean is not convex depending on the . For these reasons, it is generally difficult to discuss -geodesics in probability measures by normalization or reparameterization, and to avoid unnecessary complexity, the parameter t is assumed to be appropriately reparameterized.

Let . Then, the dual coordinate system is given by as

Hence, it is the ()-representation of .

2.2. Generalization of Skew Divergences

From Definition 13, the f-interpoloation is considered as an unnormalized version of the -geodesic. Using the notion of geodesics, skew divergence is generalized in terms of information geometry as follows.

Definition 14

(α-Geodesical Skew Divergence). The α-geodesical skew divergence is defined between two Radon–Nikodym densities p and q of μ-absolutely continuous probability measures by:

where and .

Some special cases of -geodesical skew divergence are listed below:

Furthermore, -geodesical skew divergence is a special form of the generalized skew K-divergence [10,38], which is a family of abstract means-based divergences. In this paper, the skew K-divergence touched upon in [10] is characterized in terms of -geodesic on positive measures, and its geometric and functional analytic properties are investigated. When the Kolmogorov–Nagumo average (i.e., when the function in Equation (8) is a strictly monotone convex function) the geodesic has been shown to be well-defined [37].

2.3. Symmetrization of -Geodesical Skew Divergence

It is easy to symmetrize the -geodesical skew divergence as follows.

Definition 15

(Symmetrized α-Geodesical Skew Divergence). The symmetrized α-geodesical skew divergence is defined between two Radon–Nikodym densities p and q of μ-absolutely continuous probability measures by:

where and .

It is seen that includes several symmetrized divergences.

The last one is the -JS-divergence [39], which is a generalization of the JS-divergence.

3. Properties of α-Geodesical Skew Divergence

In this section, the properties of the -geodesical skew divergence are studied.

Proposition 8

(Non-negativity of the α-geodesical skew divergence). For and , the α-geodesical skew divergence satisfies the following inequality:

Proof.

When λ is fixed, the f-interpolation has the following inverse monotonicity with respect to α:

From Gibbs’ inequality [40] and Equation (29), one obtains

□

Proposition 9

(Asymmetry of the α-geodesical skew divergence). α-Geodesical skew divergence is not symmetric in general:

Proof.

For example, if , then , it holds that

and the asymmetry of the KL-divergence results in an asymmetry of the geodesic skew divergence. □

When a function of satisfies , it is referred to as centrosymmetric.

Proposition 10

(Non-centrosymmetricity of the α-geodesical skew divergence with respect to λ). α-Geodesical skew divergence is not centrosymmetric in general with respect to the parameter :

Proof.

For example, if , then , we have

□

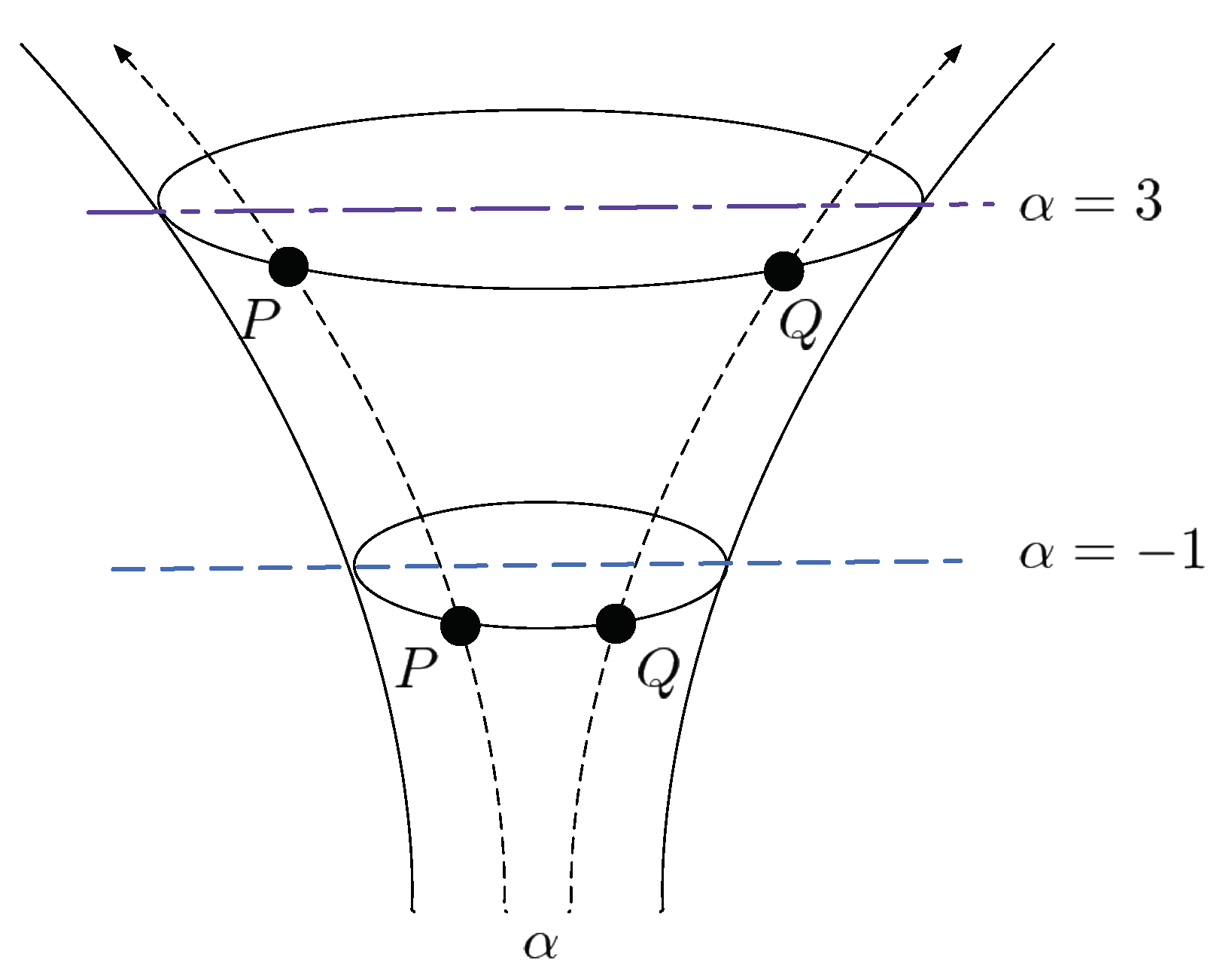

Proposition 11

(Monotonicity of the α-geodesical skew divergence with respect to α). α-Geodesical skew divergence satisfies the following inequality for all .

Proof.

Obvious from the inverse monotonicity of the f-interpolation (29) and the monotonicity of the logarithmic function. □

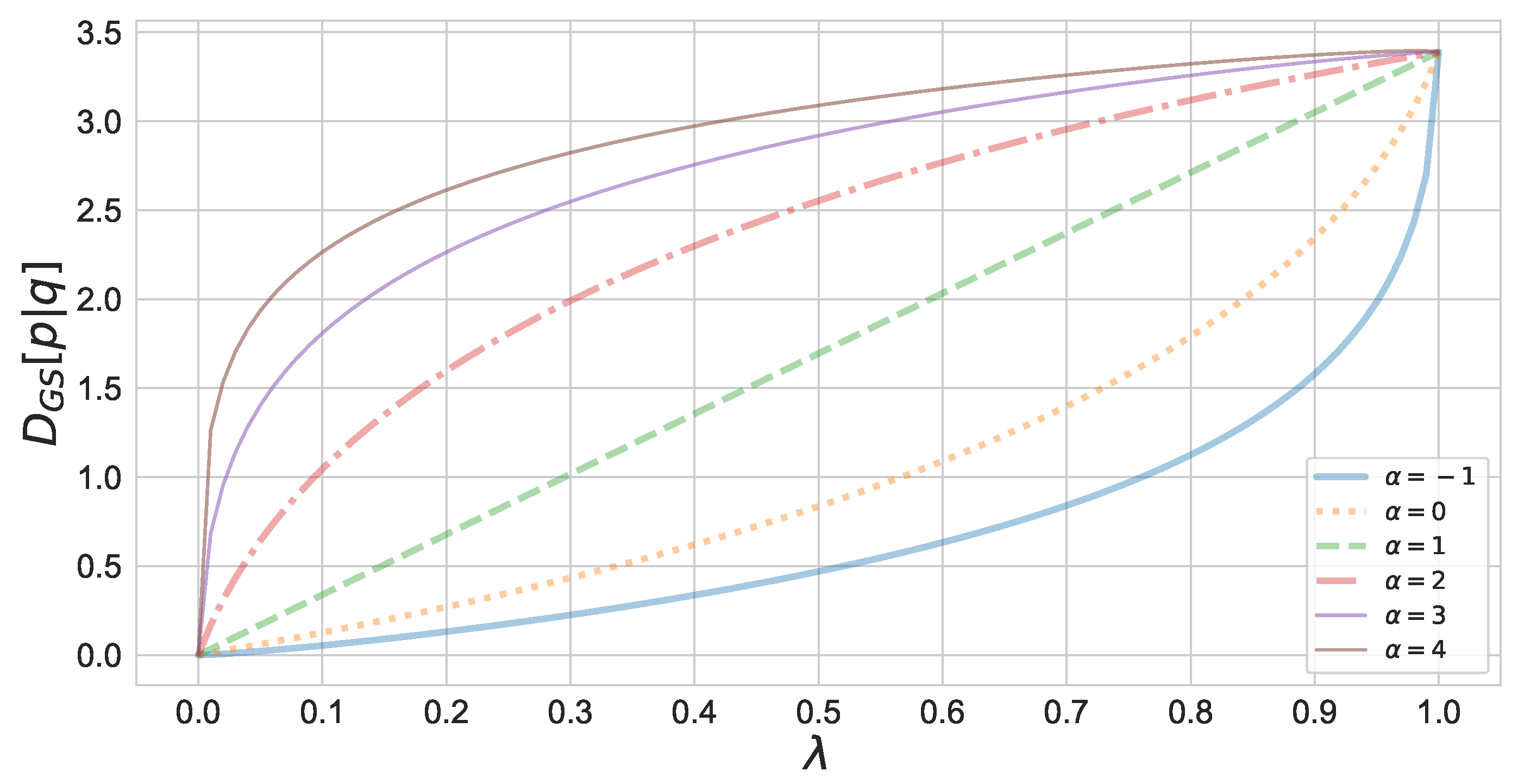

Figure 1 shows the monotonicity of the geodesic skew divergence with respect to . In this figure, divergence is calculated between two binomial distributions.

Figure 1.

Monotonicity of the -geodesical skew divergence with respect to . The -geodesical skew divergence between the binomial distributions and has been calculated.

Proposition 12

(Subadditivity of the α-geodesical skew divergence with respect to α). α-Geodesical skew divergence satisfies the following inequality for all

Proof.

For some and , takes the form of the Kolmogorov mean [29], which is obvious from its continuity, monotonicity and self-distributivity. □

Proposition 13

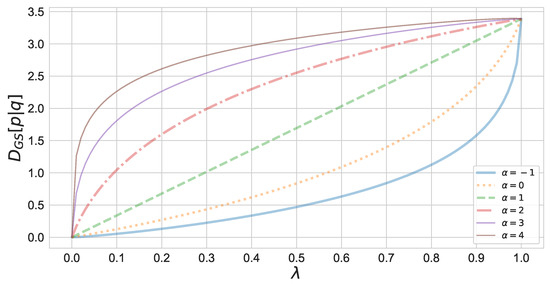

(Continuity of the α-geodesical skew divergence with respect to α and λ). α-Geodesical skew divergence has the continuity property.

Proof.

We can prove from the continuity of the KL-divergence and the Kolmogorov mean. □

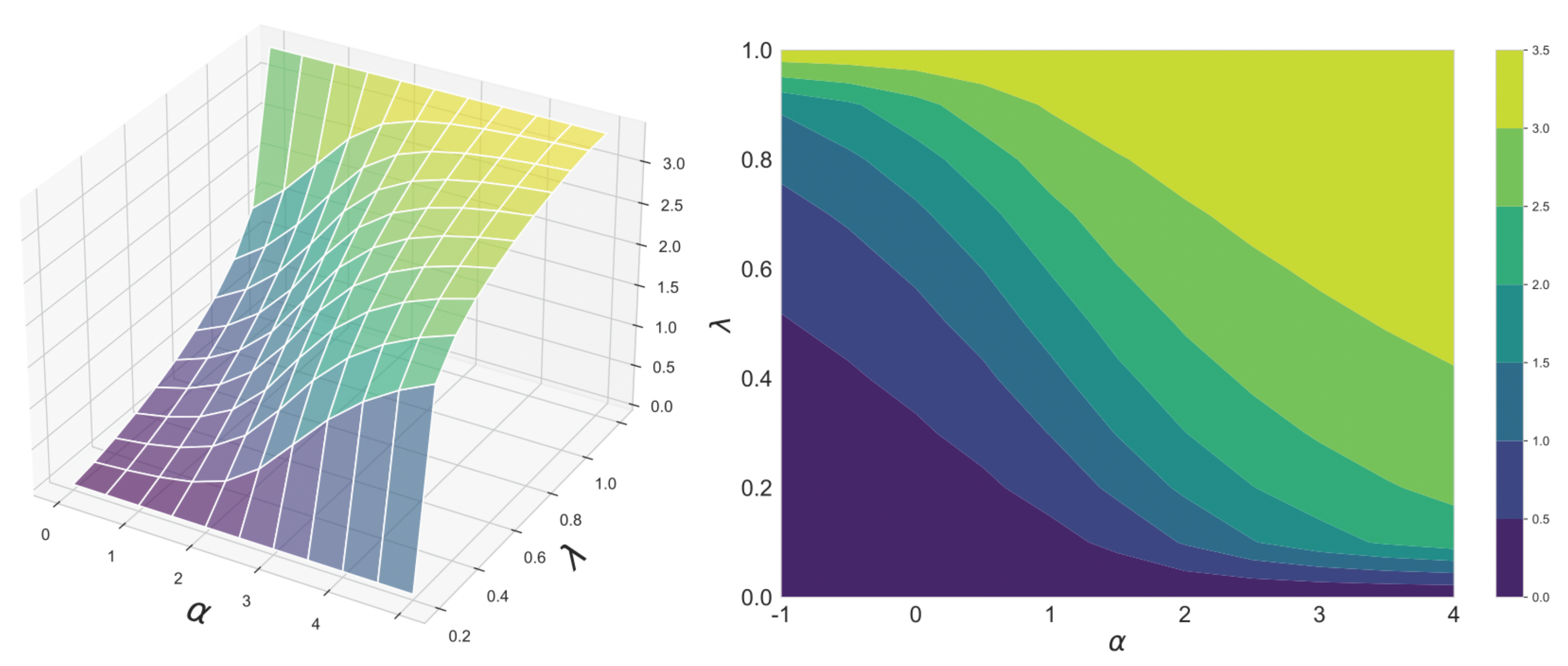

Figure 2 shows the continuity of the geodesic skew divergence with respect to and . Both source and target distributions are binomial distributions. From this figure, it can be seen that the divergence changes smoothly as the parameters change.

Figure 2.

Continuity of the -geodesmcal skew divergence with respect to and . The -geodesical skew divergence between the binomial distributions and has been calculated.

Lemma 1.

Suppose . Then,

holds for all .

Proof.

Let . Then . Assuming , it holds that

Then, the following equality

holds. □

Lemma 2.

Suppose . Then,

holds for all .

Proof.

Let . Then . Assuming , it holds that

Then, the following equality

holds. □

Proposition 14

(Lower bound of the α-geodesical skew divergence). α-Geodesical skew divergence satisfies the following inequality for all .

Proof.

It follows from the definition of the inverse monotonicity of f-interpolation (29) and Lemma 2. □

Proposition 15

(Upper bound of the α-geodesical skew divergence). α-Geodesical skew divergence satisfies the following inequality for all .

Proof.

It follows from the definition of the f-interpolation (29) and Lemma 1. □

Theorem 1

(Strong convexity of the α-geodesical skew divergence). α-Geodesical skew divergence is strongly convex in p with respect to the total variation norm.

Proof.

Let and , so that . From Taylor’s theorem, for and , it holds that

Let

where

Then,

where

Now, it is suffice to prove that . For all , it is seen that is absolutely continuous with respect to . Let . One obtains

and hence, for ,

□

4. Natural α-Geodesical Skew Divergence for Exponential Family

In this section, the exponential family is considered in which the probability density function is given by

where is a random variable. In the above equation, is an n-dimensional vector parameter to specify distribution, is a function of and corresponds to the normalization factor.

In skew divergence, the probability distribution of the target is a weighted average of the two distributions. This implicitly assumes that interpolation of the two probability distributions is properly given by linear interpolation. Here, in the exponential family, the interpolation between natural parameters rather than interpolation between probability distributions themselves is considered. Namely, the geodesic connecting two distributions and on the -coordinate system is considered:

where is the parameter. The probability distributions on the geodesic are

Hence, a geodesic itself is a one-dimensional exponential family, where is the natural parameter. A geodesic consists of a linear interpolation of the two distributions in the logarithmic scale because

This corresponds to the case on the f-interpolation with normalization factor ,

This induces the natural geodesic skew divergence with as

and this is equal to the scaled KL divergence.

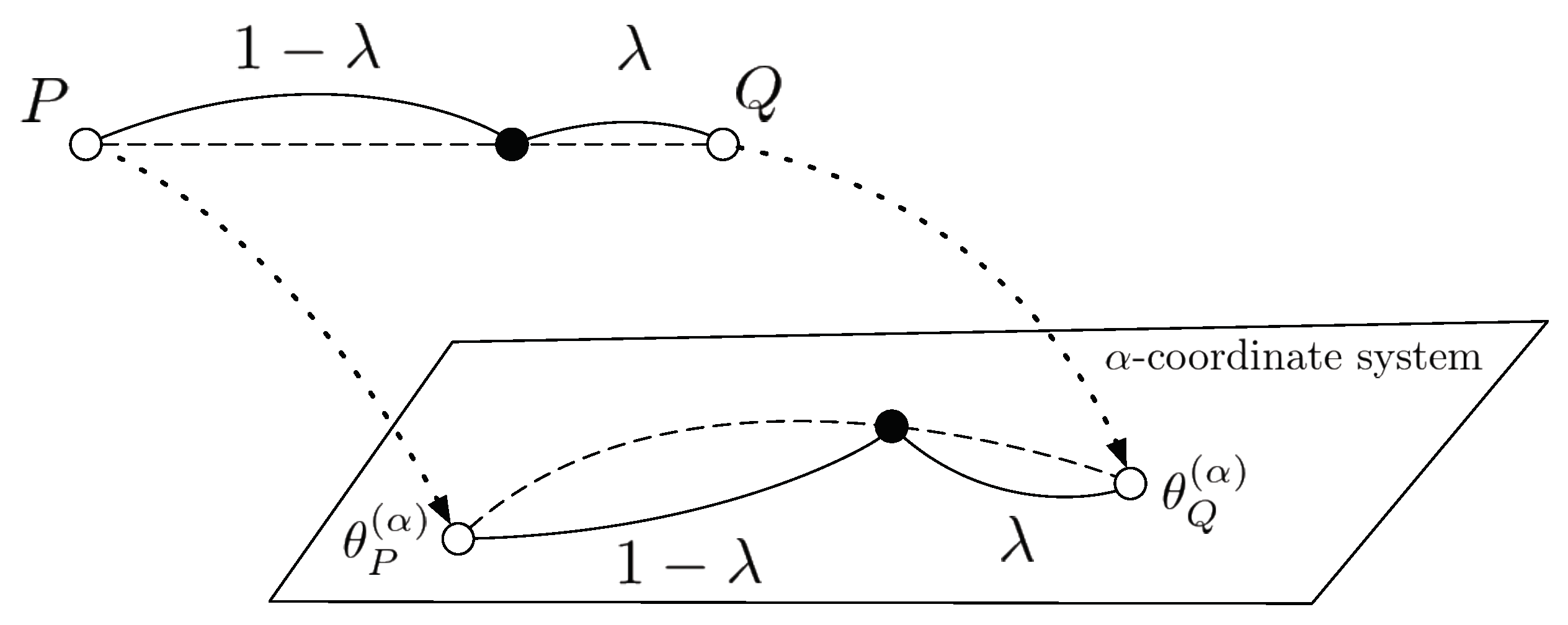

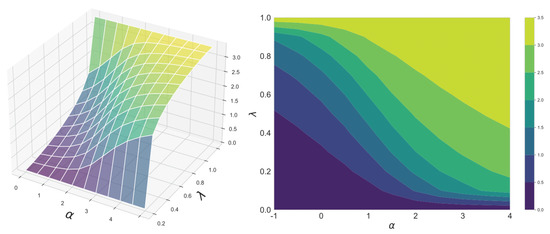

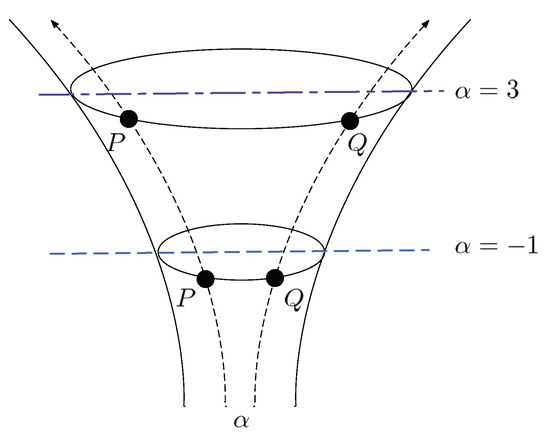

More generally, let and be the parameter representations on the -coordinate system of probability distributions P and Q. Then, the geodesics between them are represented as in Figure 3, and it induces the -geodesical skew divergence.

Figure 3.

The geodesic between two probability distributions on the -coordinate system.

5. Function Space Associated with the α-Geodesical Skew Divergence

To discuss the functional nature of the -geodesical skew divergence in more depth, the function space it constitutes is considered. For an -geodesical skew divergence with one side of the distribution fixed, let the entire set be

For , its semi-norm is defined by

By defining addition and scalar multiplication for , as follows, becomes a semi-norm vector space:

Theorem 2.

Let be the kernel of as follows:

Then the quotient space is a Banach space.

Proof.

It is sufficient to prove that is integrable to the power of p and that is complete. From Proposition 15, the -geodesical skew divergence is bounded from above for all and . Since is continuous, we know that it is p-power integrable.

Let be a Cauchy sequence of :

As can be taken to be monotonically increasing and

with respect to , let

If , it is non-negatively monotonically increasing at each point, and from the subadditivity of the norm, . From the monotonic convergence theorem, we have

That is, exists almost everywhere, and . From , we have

converges absolutely almost everywhere to That is, . Then

and from the superior convergence theorem, we can obtain

We have now confirmed the completeness of . □

Corollary 1.

Let

Then the space is a Banach space.

Proof.

If we restrict , if and only if . Then, has the unique identity element, and then is a complete norm space. □

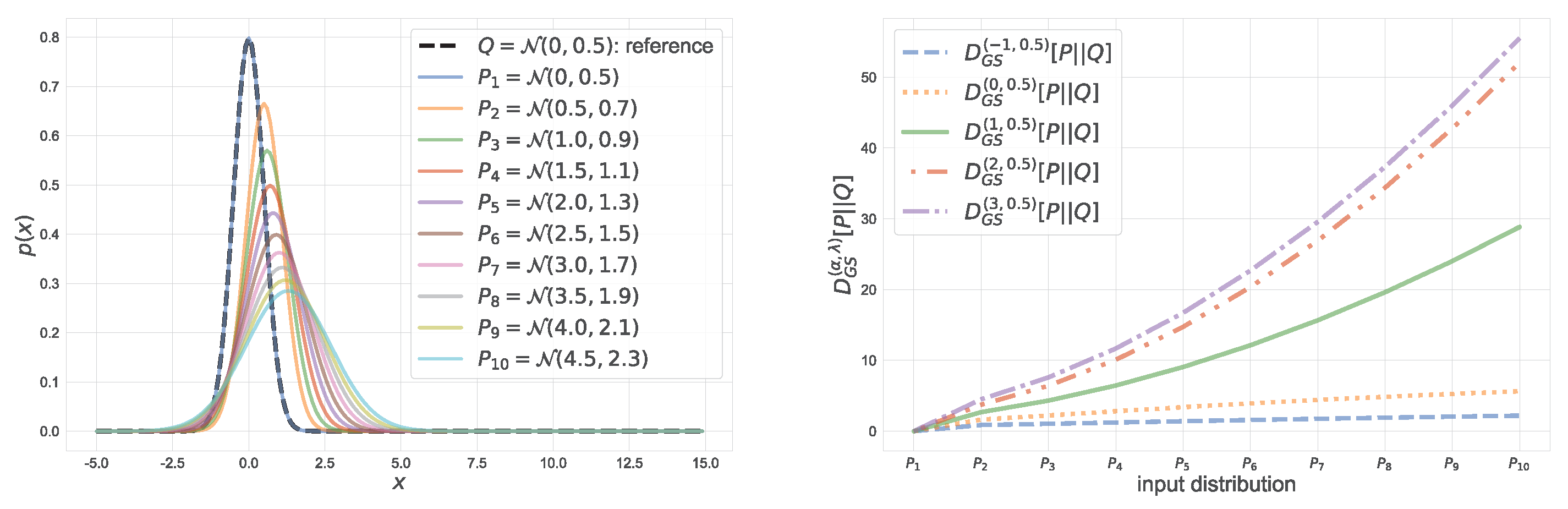

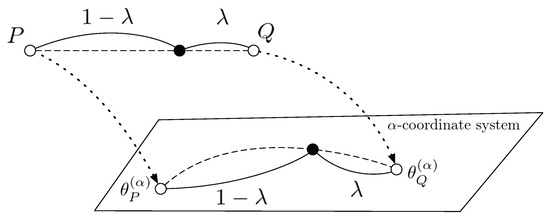

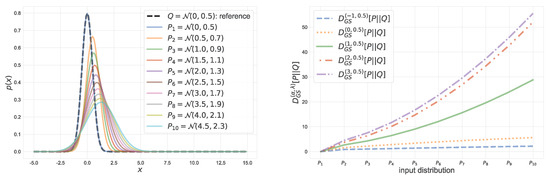

Consider the second argument Q of is fixed, which is referred to as the reference distribution. Figure 4 shows values of the -geodesical skew divergence for a fixed reference Q, where both P and Q are restricted to be Gaussian. In this figure, the reference distribution is and the parameters of input distributions are varied in and . From this figure, one can see that a larger value of emphasizes the discrepancy between distributions P and Q. Figure 5 illustrates a coordinate system associated with the -geodesical skew divergence for different . As seen from the figure, for the same pair of distributions P and Q, the value of divergence with is larger than that with .

Figure 4.

-geodesical skew divergence between two normal distributions. The reference distribution is . For , let their mean and variance be and , respectively, where and .

Figure 5.

Coordinate system of or . Such a coordinate system is not Euclidean.

6. Conclusions and Discussion

In this paper, a new family of divergence is proposed to address the computational difficulty of KL-divergence. The proposed -geodesical skew divergence is a natural derivation from the concept of -geodesics in information geometry and generalizes many existing divergences.

Furthermore, -geodesical skew divergence leads to several applications. For example, the new divergence can be applied to the annealed importance sampling by the same analogy as in previous studies using q-paths [41]. It could also be applied to linguistics, a field in which skew divergence was originally used [19].

Author Contributions

Formal analysis, M.K. and H.H.; Investigation, M.K.; Methodology, M.K. and H.H.; Software, M.K.; Supervision, H.H.; Validation, H.H.; Visualization, M.K.; Writing—original draft, M.K.; Writing—review & editing, H.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by JPSJ (KAKENHI) grant number JP17H01793, JST CREST Grant No. JPMJCR2015 and NEDO JPNP18002.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors express special thanks to the editor and reviewers, whose comments led to valuable improvements to the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Deza, M.M.; Deza, E. Encyclopedia of distances. In Encyclopedia of Distances; Springer: Berlin/Heidelberg, Germany, 2009; pp. 1–583. [Google Scholar]

- Basseville, M. Divergence measures for statistical data processing—An annotated bibliography. Signal Process. 2013, 93, 621–633. [Google Scholar] [CrossRef]

- Kullback, S.; Leibler, R.A. On information and sufficiency. Ann. Math. Stat. 1951, 22, 79–86. [Google Scholar] [CrossRef]

- Sakamoto, Y.; Ishiguro, M.; Kitagawa, G. Akaike Information Criterion Statistics; D. Reidel: Dordrecht, The Netherlands, 1986; Volume 81, p. 26853. [Google Scholar]

- Goldberger, J.; Gordon, S.; Greenspan, H. An Efficient Image Similarity Measure Based on Approximations of KL-Divergence Between Two Gaussian Mixtures. ICCV 2003, 3, 487–493. [Google Scholar]

- Yu, D.; Yao, K.; Su, H.; Li, G.; Seide, F. KL-divergence regularized deep neural network adaptation for improved large vocabulary speech recognition. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 16–31 May 2013. [Google Scholar]

- Solanki, K.; Sullivan, K.; Madhow, U.; Manjunath, B.; Chandrasekaran, S. Provably secure steganography: Achieving zero KL divergence using statistical restoration. In Proceedings of the 2006 International Conference on Image Processing, Atlanta, GA, USA, 8–11 October 2006. [Google Scholar]

- Lin, J. Divergence measures based on the Shannon entropy. IEEE Trans. Inf. Theory 1991, 37, 145–151. [Google Scholar] [CrossRef]

- Menéndez, M.; Pardo, J.; Pardo, L.; Pardo, M. The jensen-shannon divergence. J. Frankl. Inst. 1997, 334, 307–318. [Google Scholar] [CrossRef]

- Nielsen, F. On the Jensen–Shannon symmetrization of distances relying on abstract means. Entropy 2019, 21, 485. [Google Scholar] [CrossRef]

- Jeffreys, H. An Invariant Form for the Prior Probability in Estimation Problems. Available online: https://royalsocietypublishing.org/doi/10.1098/rspa.1946.0056 (accessed on 24 April 2021).

- Chatzisavvas, K.C.; Moustakidis, C.C.; Panos, C. Information entropy, information distances, and complexity in atoms. J. Chem. Phys. 2005, 123, 174111. [Google Scholar] [CrossRef]

- Bigi, B. Using Kullback-Leibler distance for text categorization. In European Conference on Information Retrieval; Springer: Berlin/Heidelberg, Germany, 2003; pp. 305–319. [Google Scholar]

- Wang, F.; Vemuri, B.C.; Rangarajan, A. Groupwise point pattern registration using a novel CDF-based Jensen-Shannon divergence. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006. [Google Scholar]

- Nishii, R.; Eguchi, S. Image classification based on Markov random field models with Jeffreys divergence. J. Multivar. Anal. 2006, 97, 1997–2008. [Google Scholar] [CrossRef]

- Bayarri, M.; García-Donato, G. Generalization of Jeffreys divergence-based priors for Bayesian hypothesis testing. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2008, 70, 981–1003. [Google Scholar] [CrossRef]

- Nielsen, F. Jeffreys centroids: A closed-form expression for positive histograms and a guaranteed tight approximation for frequency histograms. IEEE Signal Process. Lett. 2013, 20, 657–660. [Google Scholar] [CrossRef]

- Nielsen, F. On a generalization of the Jensen–Shannon divergence and the Jensen–Shannon centroid. Entropy 2020, 22, 221. [Google Scholar] [CrossRef]

- Lee, L. Measures of distributional similarity. In Proceedings of the 37th Annual Meeting of the Association for Computational Linguistics on Computational Linguistics, College Park, MD, USA, 20–26 June 1999. [Google Scholar]

- Lee, L. On the Effectiveness of the Skew Divergence for Statistical Language Analysis. In Proceedings of the Eighth International Workshop on Artificial Intelligence and Statistics, Key West, FL, USA, 4–7 January 2001; pp. 176–783. [Google Scholar]

- Xiao, F.; Wu, Y.; Zhao, H.; Wang, R.; Jiang, S. Dual skew divergence loss for neural machine translation. arXiv 2019, arXiv:1908.08399. [Google Scholar]

- Carvalho, B.M.; Garduño, E.; Santos, I.O. Skew divergence-based fuzzy segmentation of rock samples. J. Phys. Conf. Ser. 2014, 490, 012010. [Google Scholar] [CrossRef]

- Revathi, P.; Hemalatha, M. Cotton leaf spot diseases detection utilizing feature selection with skew divergence method. Int. J. Sci. Eng. Technol. 2014, 3, 22–30. [Google Scholar]

- Ahmed, N.; Neville, J.; Kompella, R.R. Network Sampling via Edge-Based Node Selection with Graph Induction. Available online: https://docs.lib.purdue.edu/cgi/viewcontent.cgi?article=2743&context=cstech (accessed on 24 April 2021).

- Hughes, T.; Ramage, D. Lexical semantic relatedness with random graph walks. In Proceedings of the 2007 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning (EMNLP-CoNLL), Prague, Czech Republic, 28–30 June 2007. [Google Scholar]

- Audenaert, K.M. Quantum skew divergence. J. Math. Phys. 2014, 55, 112202. [Google Scholar] [CrossRef]

- Hardy, G.H.; Littlewood, J.E.; Pólya, G. Inequalities; Cambridge University Press: Cambridge, UK, 1952. [Google Scholar]

- Amari, S.I. Information Geometry and Its Applications; Springer: Berlin/Heidelberg, Germany, 2016. [Google Scholar]

- Kolmogorov, A.N.; Castelnuovo, G. Sur la Notion de la Moyenne; Atti Accad. Naz: Lincei, French, 1930. [Google Scholar]

- Nagumo, M. Über eine klasse der mittelwerte. Jpn. J. Math. 1930, 7, 71–79. [Google Scholar] [CrossRef]

- Nielsen, F. Generalized Bhattacharyya and Chernoff upper bounds on Bayes error using quasi-arithmetic means. Pattern Recognit. Lett. 2014, 42, 25–34. [Google Scholar] [CrossRef][Green Version]

- Amari, S.I. Differential-Geometrical Methods in Statistics; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012; Volume 28. [Google Scholar]

- Amari, S. Differential-geometrical methods in statistics. Lect. Notes Stat. 1985, 28, 1. [Google Scholar]

- Amari, S. α-Divergence Is Unique, Belonging to Both f-Divergence and Bregman Divergence Classes. IEEE Trans. Inf. Theory 2009, 55, 4925–4931. [Google Scholar] [CrossRef]

- Ay, N.; Jost, J.; Lê, H.V.; Schwachhöfer, L. Information Geometry; Springer International Publishing: Berlin/Heidelberg, Germany, 2017. [Google Scholar] [CrossRef]

- Morozova, E.A.; Chentsov, N.N. Markov invariant geometry on manifolds of states. J. Sov. Math. 1991, 56, 2648–2669. [Google Scholar] [CrossRef]

- Eguchi, S.; Komori, O. Path Connectedness on a Space of Probability Density Functions. In Lecture Notes in Computer Science; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 615–624. [Google Scholar] [CrossRef]

- Nielsen, F. On a Variational Definition for the Jensen-Shannon Symmetrization of Distances Based on the Information Radius. Entropy 2021, 23, 464. [Google Scholar] [CrossRef]

- Nielsen, F. A family of statistical symmetric divergences based on Jensen’s inequality. arXiv 2010, arXiv:1009.4004. [Google Scholar]

- Cover, T.M. Elements of Information Theory; John Wiley & Sons: Hoboken, NJ, USA, 1999. [Google Scholar]

- Brekelmans, R.; Masrani, V.; Bui, T.D.; Wood, F.D.; Galstyan, A.; Steeg, G.V.; Nielsen, F. Annealed Importance Sampling with q-Paths. arXiv 2020, arXiv:2012.07823. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).