CSI Amplitude Fingerprinting for Indoor Localization with Dictionary Learning

Abstract

1. Introduction

1.1. Fingerprinting Localization

1.2. Dictionary Learning

- The first category selects atoms from a large initial dictionary to form a more compact dictionary.

- The second category incorporates a discriminative term into the objective function to enhance the discriminability of the sparse codes. Discriminative KSVD (D-KSVD) [20], label consistent KSVD (LC-KSVD) [21] and Fisher discrimination DL (FDDL) [22] are three representative algorithms of this category. D-KSVD adds a classification error term to the objective function, which improves classification ability; LC-KSVD further incorporates a label consistency constraint into the KSVD objective so that the sparse codes not only represent the data but are also highly discriminative [12,23]; FDDL learns a structured dictionary and forces the sparse codes to have small within-class scatter and large between-class scatter.

- The third category learns class-specific sub-dictionaries, encouraging each sub-dictionary to correspond to a single class. For example, the method in [24] introduces an incoherence-promoting term to keep the learned sub-dictionaries, each serving a specific class, independent of one another. Zhou et al. [25] proposed a DL algorithm that learns multiple dictionaries associated with the target categories.
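
To make the within-class versus between-class scatter idea behind FDDL [22] concrete, the NumPy sketch below evaluates the Fisher discrimination penalty on a matrix of sparse codes, f(X) = tr(S_W(X)) − tr(S_B(X)) + η‖X‖_F². It computes only this penalty, not FDDL's full dictionary and coding updates, and it is not the method proposed in this paper; the function name `fisher_term`, the value η = 1 and the toy codes are illustrative assumptions.

```python
import numpy as np

def fisher_term(X, labels, eta=1.0):
    """FDDL-style discriminative penalty on sparse codes.

    X: (K, N) array, one sparse code per column; labels: length-N class labels.
    Returns tr(S_W(X)) - tr(S_B(X)) + eta * ||X||_F^2.
    """
    labels = np.asarray(labels)
    m = X.mean(axis=1, keepdims=True)             # mean code over all samples
    tr_sw, tr_sb = 0.0, 0.0
    for c in np.unique(labels):
        Xc = X[:, labels == c]
        mc = Xc.mean(axis=1, keepdims=True)       # mean code of class c
        tr_sw += np.sum((Xc - mc) ** 2)           # trace of the within-class scatter
        tr_sb += Xc.shape[1] * np.sum((mc - m) ** 2)  # trace of the between-class scatter
    return tr_sw - tr_sb + eta * np.sum(X ** 2)

# Two classes, two samples each: well-separated codes vs. codes that mix the classes.
labels = np.array([0, 0, 1, 1])
X_sep = np.array([[1.0, 1.0, 0.0, 0.0],
                  [0.0, 0.0, 1.0, 1.0]])
X_mix = np.array([[1.0, 0.0, 1.0, 0.0],
                  [0.0, 1.0, 0.0, 1.0]])
print(fisher_term(X_sep, labels), fisher_term(X_mix, labels))  # 2.0 6.0
```

Both toy code matrices have the same Frobenius norm, so the lower penalty of `X_sep` comes entirely from its zero within-class scatter and larger between-class scatter, which is the property FDDL's objective rewards.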

1.3. Limitations

1.4. Contributions

2. Preliminaries

2.1. CSI

2.2. Sparse Coding

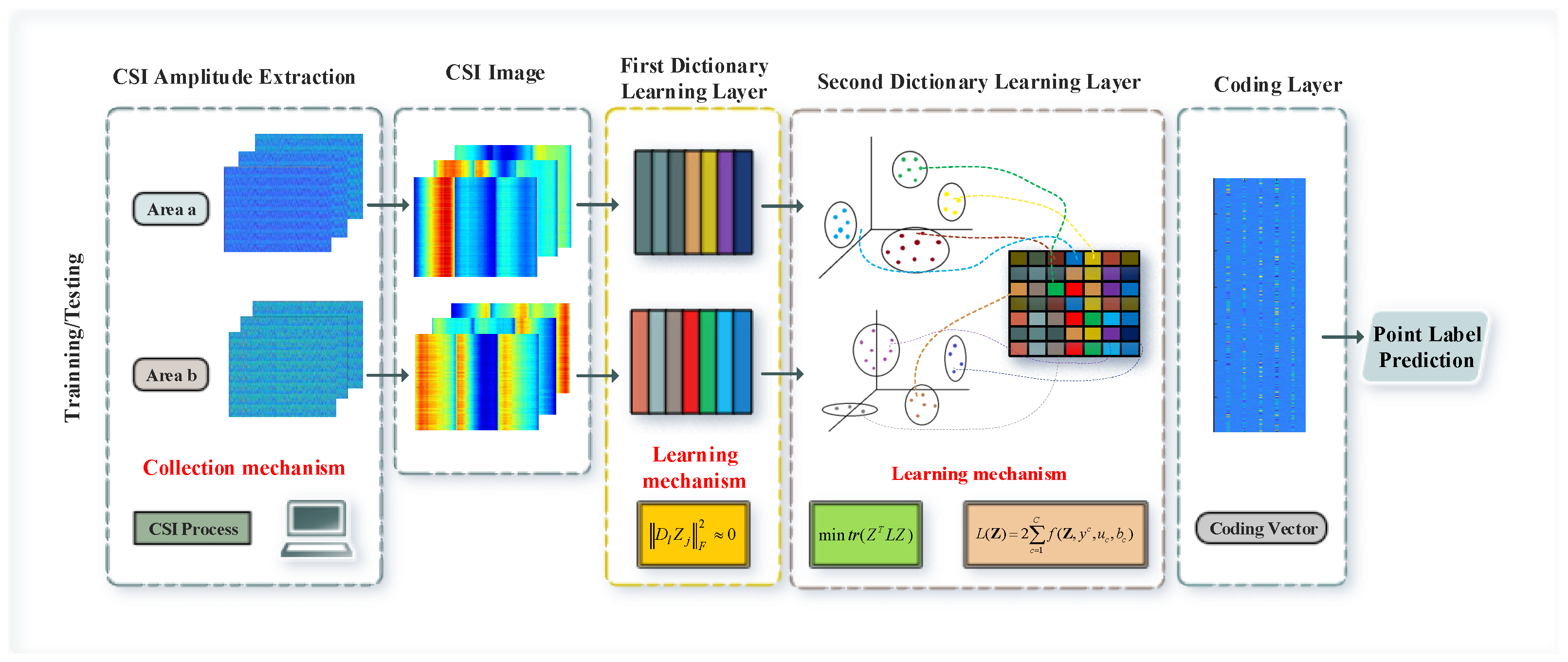

3. Materials and Methods

3.1. CSI Images

3.2. First Dictionary Learning Layer

3.3. Second Dictionary Learning Layer

3.4. Optimization

| Algorithm 1 Optimization Procedure. |
|---|
| Require: Training data Y, dictionary size K, parameters |
| while First DL not converged do |
| for l = 1 to L do |
| Update the sparse codes by (19) |
| end for |
| Update the dictionary by (23) |
| Initialize |
| while Second DL not converged do |
| for to L do |
| Construct the graph Laplacian matrix |
| Update by (33) |
| for to n do |
| Update by using (29) |
| for to C do |
| Update and by solving (34) |
| end for |
| end for |
| end for |
| end while |
| end while |
| return Dictionary D,, Parameters: |
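
Algorithm 1 alternates between sparse coding and dictionary updates, and builds a graph Laplacian inside the second loop. Because update rules (19), (23), (29), (33) and (34) are not reproduced in this text, the NumPy sketch below substitutes standard techniques: ISTA for an l1-regularized sparse coding step, the method of optimal directions (MOD) for the dictionary update, and an unnormalized kNN heat-kernel graph Laplacian. It only illustrates the alternating structure of a first DL layer (processed as one batch rather than per l) and a generic Laplacian construction; it is not the authors' update. All function names, λ = 0.1, k = 5, σ = 5 and the toy data are hypothetical choices.

```python
import numpy as np

def soft_threshold(Z, t):
    """Elementwise soft-thresholding, the proximal operator of t*||.||_1."""
    return np.sign(Z) * np.maximum(np.abs(Z) - t, 0.0)

def sparse_code_ista(Y, D, lam, n_iter=200):
    """Approximately solve min_X 0.5*||Y - D X||_F^2 + lam*||X||_1 with ISTA."""
    step = 1.0 / np.linalg.norm(D, 2) ** 2  # 1 / Lipschitz constant of the gradient
    X = np.zeros((D.shape[1], Y.shape[1]))
    for _ in range(n_iter):
        grad = D.T @ (D @ X - Y)
        X = soft_threshold(X - step * grad, step * lam)
    return X

def update_dictionary_mod(Y, X, eps=1e-8):
    """MOD step D = Y X^T (X X^T + eps*I)^-1, followed by unit-norm atoms."""
    A = X @ X.T + eps * np.eye(X.shape[0])
    D = np.linalg.solve(A, X @ Y.T).T  # A is symmetric, so this solves D A = Y X^T
    return D / np.maximum(np.linalg.norm(D, axis=0), eps)

def knn_graph_laplacian(Z, k=5, sigma=1.0):
    """Unnormalized Laplacian Deg - W of a symmetric kNN graph with heat-kernel weights.

    Z holds one sample per column.
    """
    sq = np.sum(Z ** 2, axis=0)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * Z.T @ Z, 0.0)
    np.fill_diagonal(dist2, np.inf)  # exclude self-loops from the neighbour search
    N = Z.shape[1]
    W = np.zeros((N, N))
    for i in range(N):
        nn = np.argsort(dist2[i])[:k]
        W[i, nn] = np.exp(-dist2[i, nn] / (2.0 * sigma ** 2))
    W = np.maximum(W, W.T)  # symmetrize: keep an edge if either endpoint selected it
    return np.diag(W.sum(axis=1)) - W

# Toy data: 100 signals that are sparse combinations of 8 hidden atoms in R^20.
rng = np.random.default_rng(0)
D_true = rng.standard_normal((20, 8))
X_true = rng.standard_normal((8, 100)) * (rng.random((8, 100)) < 0.3)
Y = D_true @ X_true

D = rng.standard_normal((20, 8))
D /= np.linalg.norm(D, axis=0)
errors = []
for _ in range(15):  # outer loop (fixed count instead of a convergence test)
    X = sparse_code_ista(Y, D, lam=0.1)       # sparse coding step
    errors.append(np.linalg.norm(Y - D @ X))  # reconstruction error with the current dictionary
    D = update_dictionary_mod(Y, X)           # dictionary update step

Lap = knn_graph_laplacian(Y, k=5, sigma=5.0)
print(f"reconstruction error: {errors[0]:.3f} -> {errors[-1]:.3f}")
```

MOD is used here instead of the atom-by-atom KSVD update [15] only for brevity; either keeps the same outer loop. The Laplacian returned is symmetric with zero row sums, the properties a graph regularizer of the form tr(X L Xᵀ) relies on.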

4. Experiments

4.1. Experimental Scene Setting

4.1.1. Single Laboratory

4.1.2. Comprehensive Environment

4.2. Convergence Analysis

4.3. Parameter Setting

4.4. Positioning Accuracy Assessment

4.5. Comparison of DL Methods

4.5.1. Matching Results in the Laboratory

4.5.2. Matching Results in the Comprehensive Environment

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Basri, C.; El Khadimi, A. Survey on indoor localization system and recent advances of WIFI fingerprinting technique. In Proceedings of the 5th International Conference on Multimedia Computing and Systems (ICMCS), Marrakech, Morocco, 29 September–1 October 2016.

2. Zafari, F.; Gkelias, A.; Leung, K.K. A survey of indoor localization systems and technologies. IEEE Commun. Surv. Tutor. 2019, 21, 2568–2599.

3. Xiao, J.; Zhou, Z.; Yi, Y.; Ni, L.M. A survey on wireless indoor localization from the device perspective. ACM Comput. Surv. (CSUR) 2016, 49, 1–31.

4. Wang, X.; Gao, L.; Mao, S. PhaseFi: Phase fingerprinting for indoor localization with a deep learning approach. In Proceedings of the IEEE Global Communications Conference (GLOBECOM), San Diego, CA, USA, 6–10 December 2015; pp. 1–6.

5. Wang, X.; Wang, X.; Mao, S. ResLoc: Deep residual sharing learning for indoor localization with CSI tensors. In Proceedings of the IEEE 28th Annual International Symposium on Personal, Indoor, and Mobile Radio Communications (PIMRC), Montreal, QC, Canada, 8–13 October 2017; pp. 1–6.

6. Zhang, Y.; Li, D.; Wang, Y. An indoor passive positioning method using CSI fingerprint based on AdaBoost. IEEE Sens. J. 2019, 19, 5792–5800.

7. Youssef, M.; Agrawala, A. The Horus WLAN location determination system. In Proceedings of the 3rd International Conference on Mobile Systems, Applications, and Services, Seattle, WA, USA, 6–8 June 2005.

8. Xiao, J.; Wu, K.; Yi, Y.; Ni, L.M. FIFS: Fine-grained indoor fingerprinting system. In Proceedings of the 21st International Conference on Computer Communications and Networks (ICCCN), Munich, Germany, 30 July–2 August 2012.

9. Wang, X.; Gao, L.; Mao, S.; Pandey, S. DeepFi: Deep learning for indoor fingerprinting using channel state information. In Proceedings of the IEEE Wireless Communications and Networking Conference (WCNC), New Orleans, LA, USA, 9–12 March 2015.

10. Zhang, Z.; Xu, Y.; Yang, J.; Li, X.; Zhang, D. A survey of sparse representation: Algorithms and applications. IEEE Access 2015, 3, 490–530.

11. Fardel, R.; Nagel, M.; Nüesch, F.; Lippert, T.; Wokaun, A. Fabrication of organic light emitting diode pixels by laser-assisted forward transfer. Appl. Phys. Lett. 2007, 91, 061103.

12. Maggu, J.; Aggarwal, H.K.; Majumdar, A. Label-Consistent Transform Learning for Hyperspectral Image Classification. IEEE Geosci. Remote. Sens. Lett. 2019, 16, 1502–1506.

13. Wang, J.; Guo, Y.; Guo, J.; Luo, X.; Kong, X. Class-aware analysis dictionary learning for pattern classification. IEEE Signal Process. Lett. 2017, 24, 1822–1826.

14. Gu, S.; Zhang, L.; Zuo, W.; Feng, X. Projective dictionary pair learning for pattern classification. In Proceedings of the Advances in Neural Information Processing Systems 27 (NIPS 2014), Montreal, QC, Canada, 8–13 December 2014; pp. 793–801.

15. Aharon, M.; Elad, M.; Bruckstein, A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006, 54, 4311–4322.

16. Chen, B.; Li, J.; Ma, B.; Wei, G. Discriminative dictionary pair learning based on differentiable support vector function for visual recognition. Neurocomputing 2018, 272, 306–313.

17. Zhang, Z.; Li, F.; Chow, T.W.S.; Zhang, L.; Yan, S. Sparse codes auto-extractor for classification: A joint embedding and dictionary learning framework for representation. IEEE Trans. Signal Process. 2016, 64, 3790–3805.

18. Wang, X.; Gao, L.; Mao, S. BiLoc: Bi-modal deep learning for indoor localization with commodity 5 GHz WiFi. IEEE Access 2017, 5, 4209–4220.

19. Yang, M.; Chang, H.; Luo, W. Discriminative analysis-synthesis dictionary learning for image classification. Neurocomputing 2017, 219, 404–411.

20. Zhang, Q.; Li, B. Discriminative K-SVD for dictionary learning in face recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010.

21. Jiang, Z.; Lin, Z.; Davis, L.S. Label consistent K-SVD: Learning a discriminative dictionary for recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2651–2664.

22. Yang, M.; Zhang, L.; Feng, X.; Zhang, D. Fisher discrimination dictionary learning for sparse representation. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011.

23. Shao, S.; Wang, Y.-J.; Liu, B.-D.; Liu, W.; Xu, R. Label embedded dictionary learning for image classification. arXiv 2019, arXiv:1903.03087.

24. Yin, H.-F.; Wu, X.-J.; Chen, S.-G. Locality constraint dictionary learning with support vector for pattern classification. IEEE Access 2019, 7, 175071–175082.

25. Tang, H.; Liu, H.; Xiao, W.; Sebe, N. When dictionary learning meets deep learning: Deep dictionary learning and coding network for image recognition with limited data. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 2129–2141.

26. Liu, W.; Cheng, Q.; Deng, Z.; Fu, X.; Zheng, X. C-Map: Hyper-Resolution Adaptive Preprocessing System for CSI Amplitude-Based Fingerprint Localization. IEEE Access 2019, 7, 135063–135075.

27. Wang, Z.; Jiang, K.; Hou, Y.; Dou, W.; Zhang, C.; Huang, Z.; Guo, Y. A Survey on Human Behavior Recognition Using Channel State Information. IEEE Access 2019, 7, 155986–156024.

28. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 5–8 May 2015.

29. Wang, X.; Wang, X.; Mao, S. Deep Convolutional Neural Networks for Indoor Localization with CSI Images. IEEE Trans. Netw. Sci. Eng. 2018, 7, 316–327.

30. Mahdizadehaghdam, S.; Panahi, A.; Krim, H.; Dai, L. Deep dictionary learning: A parametric network approach. IEEE Trans. Image Process. 2019, 28, 4790–4802.

31. Shin, H.-C.; Roth, H.R.; Gao, M.; Lu, L.; Xu, Z.; Nogues, I.; Yao, J.; Mollura, D.; Summers, R.M. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans. Med. Imaging 2016, 35, 1285–1298.

32. Wang, Y.; Zhang, J.; Cao, Y.; Wang, Z. A deep CNN method for underwater image enhancement. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 1382–1386.

33. Xu, Y.; Zhang, D.; Yang, J.; Yang, J.-Y. A two-phase test sample sparse representation method for use with face recognition. IEEE Trans. Circuits Syst. Video Technol. 2011, 21, 1255–1262.

34. Gao, Y.; Ma, J.; Yuille, A.L. Semi-supervised sparse representation based classification for face recognition with insufficient labeled samples. IEEE Trans. Image Process. 2017, 26, 2545–2560.

35. Chen, H.; Zhang, Y.; Li, W.; Tao, X.; Zhang, P. ConFi: Convolutional neural networks based indoor Wi-Fi localization using channel state information. IEEE Access 2017, 5, 18066–18074.

| Method | Laboratory Mean Error (m) | Laboratory Error Std (m) | Comprehensive Mean Error (m) | Comprehensive Error Std (m) |
|---|---|---|---|---|
| DeepFi | 0.78 | 0.91 | 1.18 | 1.75 |
| BiLoc | 0.82 | 1.03 | 1.29 | 1.98 |
| ConFi | 0.71 | 0.84 | 1.07 | 1.24 |
| DDLC | 0.56 | 0.71 | 0.68 | 1.54 |
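
As a worked reading of the table above, the short script below computes how much lower DDLC's mean localization error is than each baseline, as a relative reduction in percent. The values are copied from the table; the column interpretation (mean and standard deviation of the localization error in metres for the laboratory and comprehensive scenes) and the helper name `reduction` are assumptions for illustration.

```python
# (lab mean, lab std, comprehensive mean, comprehensive std), in metres, from the table
results = {
    "DeepFi": (0.78, 0.91, 1.18, 1.75),
    "BiLoc": (0.82, 1.03, 1.29, 1.98),
    "ConFi": (0.71, 0.84, 1.07, 1.24),
    "DDLC": (0.56, 0.71, 0.68, 1.54),
}

def reduction(baseline, ours):
    """Relative reduction of `ours` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - ours) / baseline

lab_ours, com_ours = results["DDLC"][0], results["DDLC"][2]
for name in ("DeepFi", "BiLoc", "ConFi"):
    lab_base, com_base = results[name][0], results[name][2]
    print(f"{name}: lab mean error {reduction(lab_base, lab_ours):.1f}% lower, "
          f"comprehensive mean error {reduction(com_base, com_ours):.1f}% lower")
```

With these values the mean error of DDLC is 28.2% and 42.4% lower than DeepFi, 31.7% and 47.3% lower than BiLoc, and 21.1% and 36.4% lower than ConFi (laboratory and comprehensive, respectively). The table also shows that in the comprehensive environment DDLC's standard deviation (1.54 m) is larger than ConFi's (1.24 m), so the lower mean comes with a wider spread there.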

| Number of Training Samples | 10 | 15 | 20 | 25 | 30 |
|---|---|---|---|---|---|
| SRC | 70.7 | 79.6 | 82.2 | 85.4 | 90.1 |
| KSVD | 61.1 | 70.7 | 77.2 | 82.7 | 85.1 |
| D-KSVD | 63.9 | 75.8 | 82.7 | 87.1 | 92.9 |
| LC-KSVD1 | 64.7 | 77.9 | 82.8 | 86.7 | 90.4 |
| LC-KSVD2 | 64.1 | 78.3 | 82.7 | 87.6 | 90.7 |
| Our DDLC | 74.4 | 82.6 | 87.8 | 93.3 | 94.6 |

| Number of Training Samples | 10 | 15 | 20 | 25 | 30 |
|---|---|---|---|---|---|
| SRC | 67.2 | 73.4 | 78.5 | 84.1 | 86.0 |
| KSVD | 58.0 | 67.4 | 76.6 | 79.3 | 82.2 |
| D-KSVD | 59.8 | 70.0 | 78.3 | 84.3 | 86.7 |
| LC-KSVD1 | 60.7 | 69.9 | 77.3 | 82.7 | 88.1 |
| LC-KSVD2 | 61.6 | 71.3 | 77.8 | 82.2 | 87.6 |
| Our DDLC | 81.4 | 87.5 | 89.8 | 91.1 | 92.2 |
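
The two tables above compare DL methods as the number of training samples grows; they carry no captions, but presumably correspond to the laboratory and comprehensive scenes of Sections 4.5.1 and 4.5.2, in that order, and the entries appear to be matching accuracies in percent (units are not stated). The sketch below computes, for each training-set size, the margin between DDLC and the best competing method in the same column. The names `first_table`, `second_table` and `margin_over_best_baseline` are illustrative.

```python
# Values copied from the two tables above (units not stated; presumably percent).
sizes = [10, 15, 20, 25, 30]
first_table = {
    "SRC": [70.7, 79.6, 82.2, 85.4, 90.1],
    "KSVD": [61.1, 70.7, 77.2, 82.7, 85.1],
    "D-KSVD": [63.9, 75.8, 82.7, 87.1, 92.9],
    "LC-KSVD1": [64.7, 77.9, 82.8, 86.7, 90.4],
    "LC-KSVD2": [64.1, 78.3, 82.7, 87.6, 90.7],
    "Our DDLC": [74.4, 82.6, 87.8, 93.3, 94.6],
}
second_table = {
    "SRC": [67.2, 73.4, 78.5, 84.1, 86.0],
    "KSVD": [58.0, 67.4, 76.6, 79.3, 82.2],
    "D-KSVD": [59.8, 70.0, 78.3, 84.3, 86.7],
    "LC-KSVD1": [60.7, 69.9, 77.3, 82.7, 88.1],
    "LC-KSVD2": [61.6, 71.3, 77.8, 82.2, 87.6],
    "Our DDLC": [81.4, 87.5, 89.8, 91.1, 92.2],
}

def margin_over_best_baseline(table, ours="Our DDLC"):
    """For each training-set size: our score minus the best competing score."""
    baselines = [scores for name, scores in table.items() if name != ours]
    return [round(table[ours][j] - max(b[j] for b in baselines), 1)
            for j in range(len(table[ours]))]

for label, table in (("first table", first_table), ("second table", second_table)):
    print(label, dict(zip(sizes, margin_over_best_baseline(table))))
```

The margins come out as 3.7, 3.0, 5.0, 5.7 and 1.7 points for the first table and 14.2, 14.1, 11.3, 6.8 and 4.1 points for the second. In the second table the advantage shrinks steadily as more training samples become available, while in the first it stays between 1.7 and 5.7 points.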

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, W.; Wang, X.; Deng, Z. CSI Amplitude Fingerprinting for Indoor Localization with Dictionary Learning. Entropy 2021, 23, 1164. https://doi.org/10.3390/e23091164

Liu W, Wang X, Deng Z. CSI Amplitude Fingerprinting for Indoor Localization with Dictionary Learning. Entropy. 2021; 23(9):1164. https://doi.org/10.3390/e23091164

Chicago/Turabian Style: Liu, Wen, Xu Wang, and Zhongliang Deng. 2021. "CSI Amplitude Fingerprinting for Indoor Localization with Dictionary Learning" Entropy 23, no. 9: 1164. https://doi.org/10.3390/e23091164