An Edge Server Placement Method Based on Reinforcement Learning

Abstract

1. Introduction

- A new solution model for the edge server placement problem (ESPP) is proposed, based on a Markov decision process (MDP) and reinforcement learning. In this model, the location sequences of edge servers are modeled as states, decisions on the movement direction of edge servers are modeled as actions, and the negative of the access delay and of the standard deviation of workloads is modeled as the reward. The ESPP is then solved with a reinforcement learning algorithm by maximizing the cumulative long-term reward.

- An edge server placement algorithm based on a deep Q-network (DQN), named DQN-ESPA, is proposed. It solves the ESPP by combining a deep neural network with the Q-learning algorithm.
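As a rough illustration of the MDP framing in the first contribution, the sketch below encodes a state as a tuple of position indices and an action as a (server, direction) pair. The index-based state encoding, the one-step move rule, the function names `step` and `reward`, and the equal weighting of the two objectives are all assumptions made for illustration; none of them is taken from the paper.

```python
from typing import Tuple

# Assumed encoding: state[i] is the position index of edge server i.
State = Tuple[int, ...]


def step(state: State, action: int, n_positions: int) -> State:
    """Apply one move and return the next state.

    Assumed action encoding: action = 2 * server + direction,
    where direction 0 moves to index - 1 and direction 1 to index + 1.
    A move that leaves the valid range or lands on an occupied
    position is ignored, so each position holds at most one server.
    """
    server, direction = divmod(action, 2)
    target = state[server] + (1 if direction else -1)
    if target < 0 or target >= n_positions or target in state:
        return state
    return state[:server] + (target,) + state[server + 1:]


def reward(avg_delay: float, workload_std: float) -> float:
    """Negative of the two objectives; the equal weighting is an assumption."""
    return -(avg_delay + workload_std)
```

With two servers at positions 0 and 3 out of 5, `step((0, 3), 1, 5)` moves server 0 forward by one position, while a move off the left edge leaves the state unchanged.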

2. Related Work

3. System Model

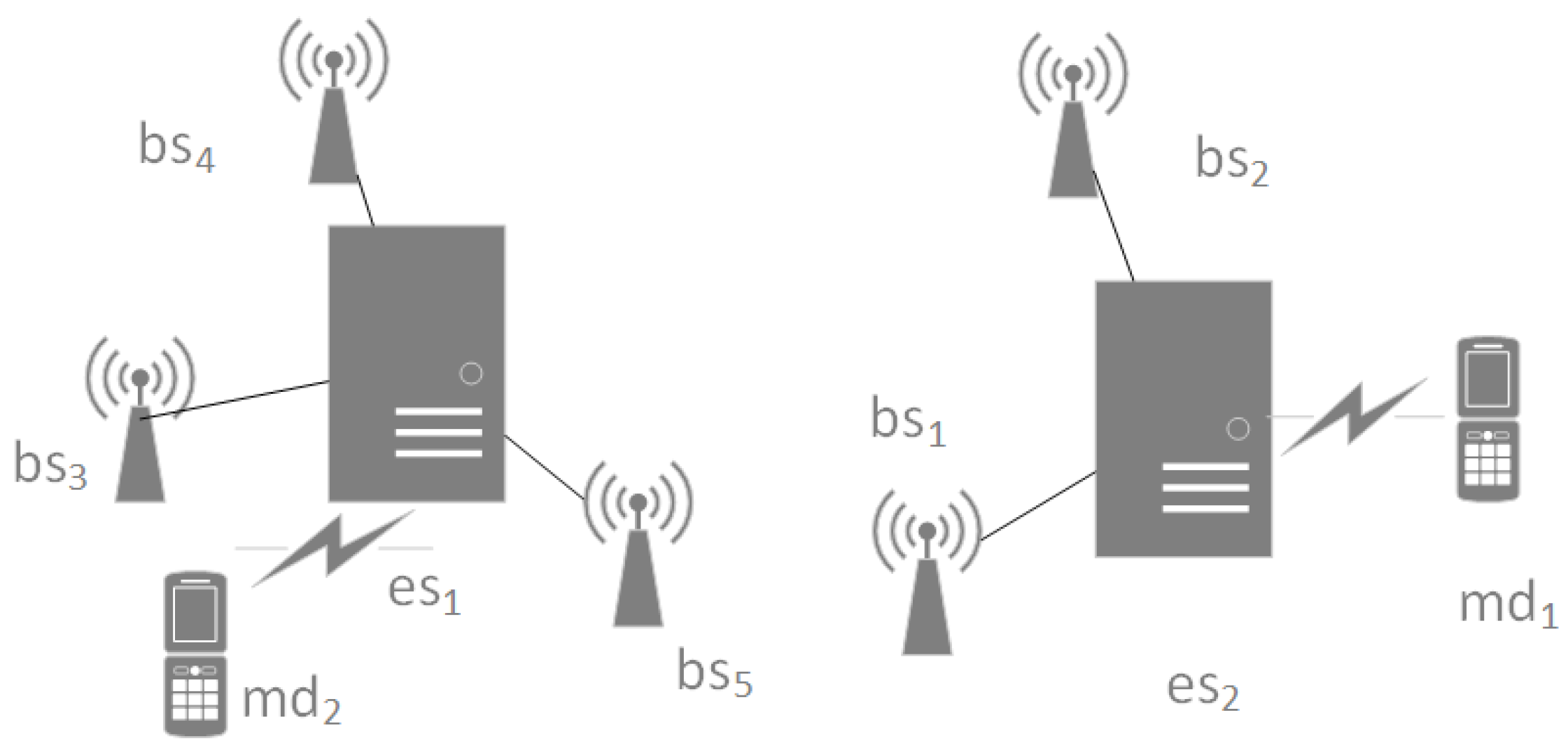

3.1. MEC Model

3.2. Problem Description

- All edge servers are homogeneous, with the same processing and storage capabilities.

- The sets of base stations covered by different edge servers are pairwise disjoint, and the union of these sets is the set of all base stations.

- Each edge server is placed next to a base station, so n base stations provide n candidate positions.

- Only one edge server can be placed in each location.

- Each edge server is responsible only for processing requests uploaded by base stations within its signal coverage, and each base station within the signal coverage of an edge server can forward requests only to that edge server.

- Workload balance. Each edge server processes the network service requests forwarded by all base stations in the set it covers. The workload of an edge server is the sum of the workloads of all base stations in that set, as shown in Formula (1). The standard deviation of the workloads of all edge servers is then given by Formula (2), computed around the average workload of the m edge servers.

- Access delay. Each base station requests network services directly from its edge server over the link that connects them. Given the positions of the base station and the edge server, the length of the connecting edge is obtained from longitude and latitude information, as shown in Formula (3). In this paper, the length of this edge is used to measure the data transmission delay between the base station and the edge server. The average access delay is then given by Formula (4).
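Formulas (1) to (4) are not reproduced in this text, so the following is only a sketch of the quantities they describe. It assumes a population standard deviation over the m servers, an average delay taken over all base stations, and a haversine great-circle distance as the edge length; the function names and the `assignment` list (base station index to server index) are hypothetical.

```python
import math
from statistics import pstdev

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, used by the haversine formula


def haversine_km(p, q):
    """Great-circle distance between two (latitude, longitude) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (p[0], p[1], q[0], q[1]))
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def server_workloads(assignment, workloads, m):
    """Workload of each server: sum of the workloads of its base stations."""
    totals = [0.0] * m
    for station, server in enumerate(assignment):
        totals[server] += workloads[station]
    return totals


def workload_std(totals):
    """Standard deviation of server workloads (population form, assumed)."""
    return pstdev(totals)


def average_access_delay(assignment, station_pos, server_pos):
    """Mean edge length between each base station and its assigned server."""
    dists = [haversine_km(station_pos[i], server_pos[s])
             for i, s in enumerate(assignment)]
    return sum(dists) / len(dists)
```

For example, four base stations with workloads 1, 2, 3, 4 split evenly between two servers give server workloads of 3 and 7, and a standard deviation of 2.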

4. Algorithm Design

- $S$ is a finite state space.

- $A$ is a finite action space.

- $P$ is the state transition model. Specifically, $P(s' \mid s, a)$ is the probability that the agent transitions to state $s'$ after executing action $a$ in state $s$.

- $R$ is the reward function. For example, $R(s, a)$ is the reward given by the environment after the agent executes action $a$ in state $s$.

- $\gamma$ is a discount factor used to balance the importance of immediate and long-term rewards.
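To make the role of the discount factor concrete, the standard textbook definitions of the discounted return and the optimal action-value function can be written as follows. The symbols $P$, $R$, $\gamma$, $G_t$, $r_t$ and $Q^{*}$ follow common reinforcement learning notation and are assumed here, since the paper's own symbols are not preserved in this text.

$$
G_t = \sum_{k=0}^{\infty} \gamma^{k}\, r_{t+k+1}, \qquad 0 \le \gamma \le 1
$$

$$
Q^{*}(s, a) = R(s, a) + \gamma \sum_{s' \in S} P(s' \mid s, a)\, \max_{a' \in A} Q^{*}(s', a')
$$

A value of $\gamma$ close to 0 makes the agent favor immediate rewards, while a value close to 1 gives long-term rewards nearly the same weight as immediate ones.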

4.1. MDP Model

4.1.1. State

4.1.2. Action

4.1.3. Reward

4.2. DQN-ESPA

Algorithm 1. DQN-ESPA
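The body of Algorithm 1 is not reproduced in this text. The sketch below therefore shows only generic building blocks of a DQN-style training loop (experience replay, epsilon-greedy action selection, and temporal-difference targets computed from a target network), as commonly described for DQN. It is not the authors' DQN-ESPA; all names and the data layout are hypothetical.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of transitions; the oldest entries are dropped first."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def push(self, transition):
        self.buffer.append(transition)

    def sample(self, batch_size, rng=random):
        # Uniform sampling without replacement from the stored transitions.
        return rng.sample(list(self.buffer), batch_size)


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)


def td_targets(rewards, next_q_target, dones, gamma):
    """Compute y = r + gamma * max_a' Q_target(s', a') per transition.

    next_q_target[i] holds the target network's Q-values for the next
    state of transition i; terminal transitions use y = r.
    """
    return [r if done else r + gamma * max(q_next)
            for r, q_next, done in zip(rewards, next_q_target, dones)]
```

In a full training loop, the online network would be fitted to these targets on each sampled batch, and the target network would be refreshed from the online network at a fixed interval.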

5. Performance Evaluation

5.1. Configuration of the Experiments

5.2. Dataset Description

5.3. Experimental Results

6. Conclusions



| No. | DQN-ESPA | SAPA | KMPA | TKPA | RPA |
|---|---|---|---|---|---|
| 100 | 0.8380 | 0.8529 | 0.8896 | 0.9589 | 0.9677 |
| 300 | 0.8144 | 0.8343 | 0.8593 | 0.9389 | 0.9643 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luo, F.; Zheng, S.; Ding, W.; Fuentes, J.; Li, Y. An Edge Server Placement Method Based on Reinforcement Learning. Entropy 2022, 24, 317. https://doi.org/10.3390/e24030317
