DIA-TTS: Deep-Inherited Attention-Based Text-to-Speech Synthesizer

Abstract

1. Introduction

2. Model Architecture

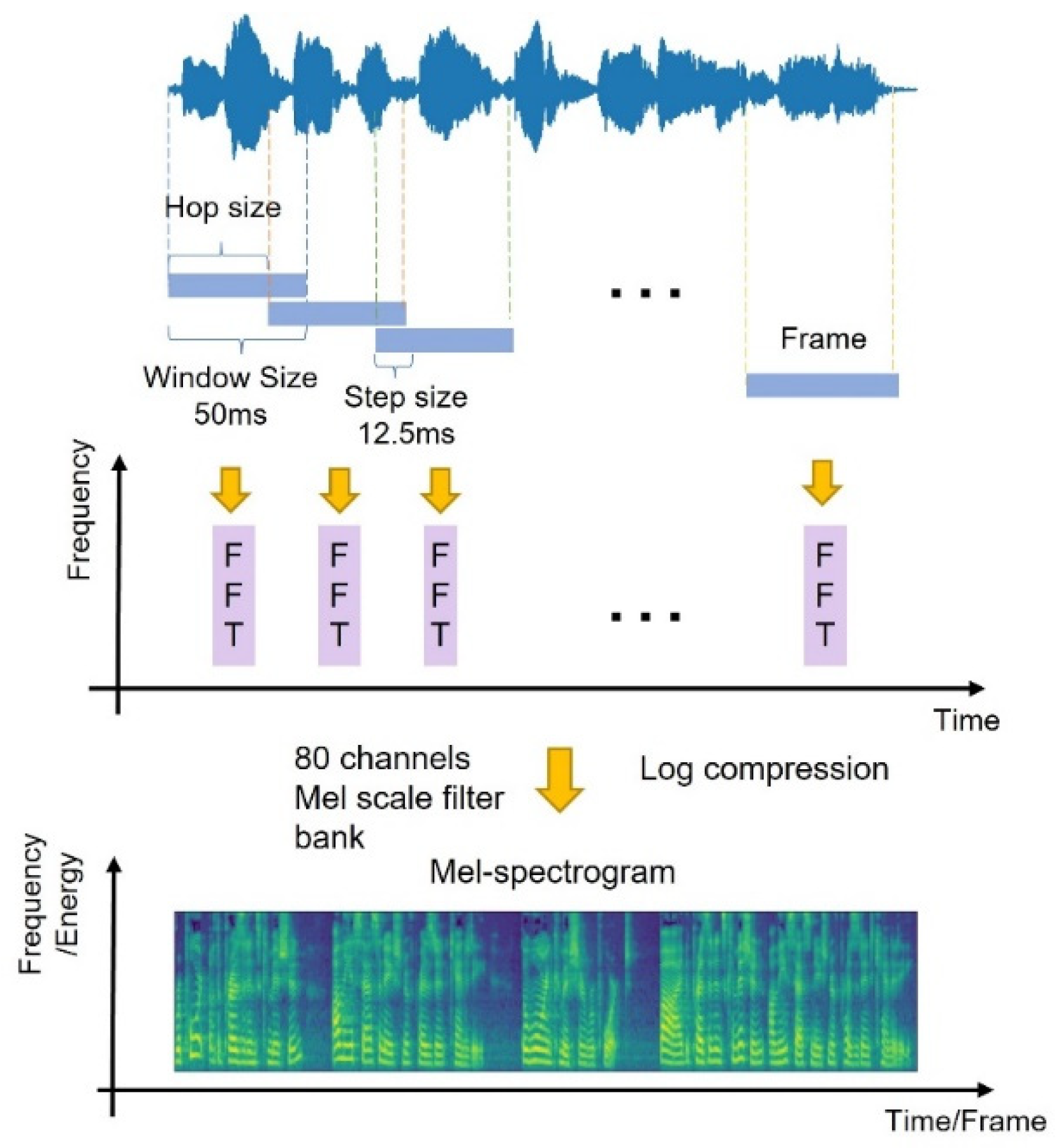

2.1. Encoder

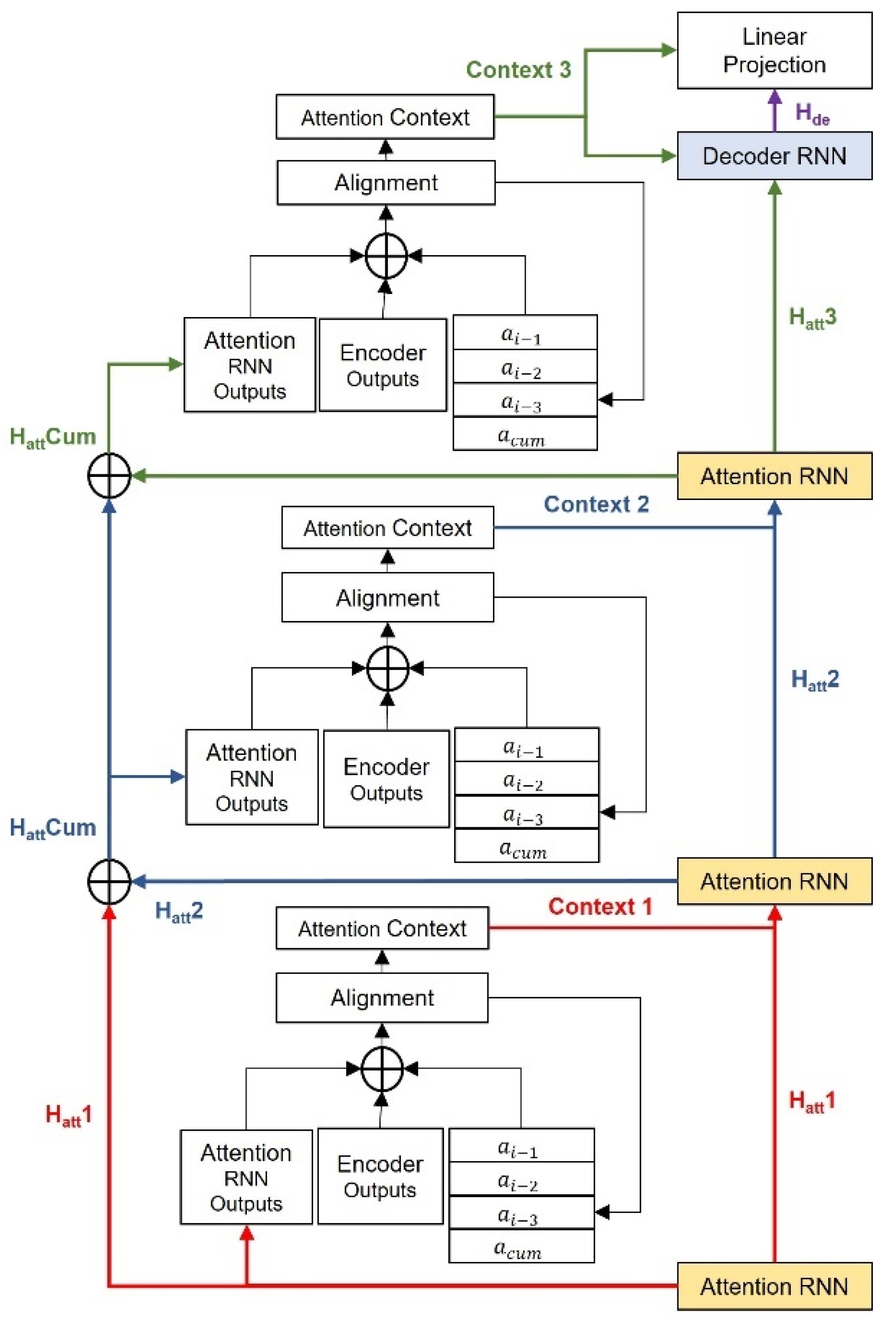

2.2. DIA-Based Decoder

2.2.1. Tribal-Inherited Local-Sensitive Attention

2.2.2. Four-Layer RNN Block

2.3. WaveGlow Vocoder Model

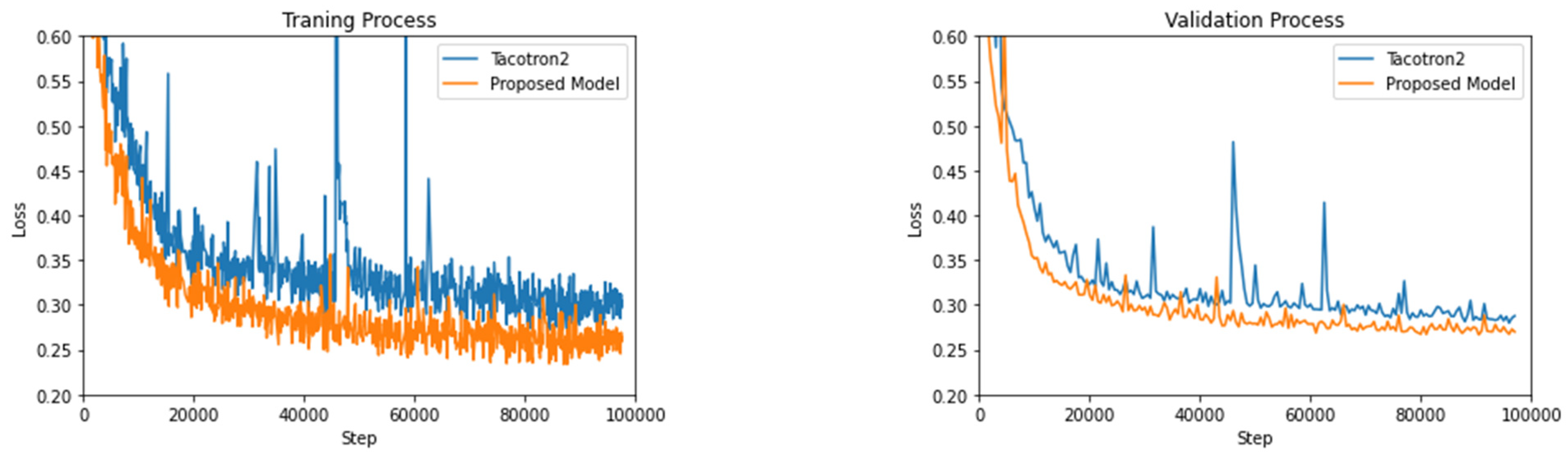

3. Results

3.1. WaveGlow Vocoder Model

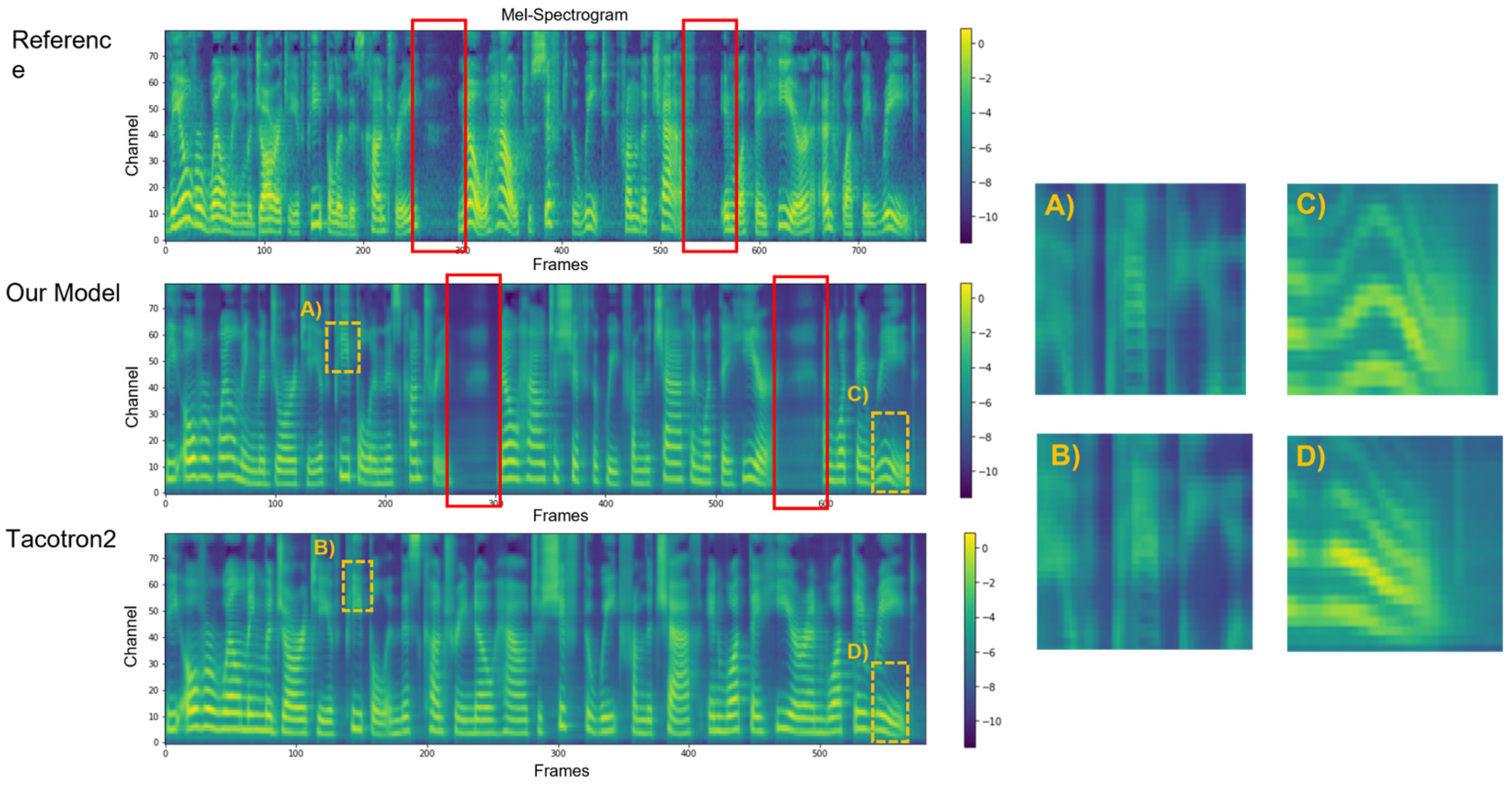

3.2. Mel-Spectrogram Comparison Experiment

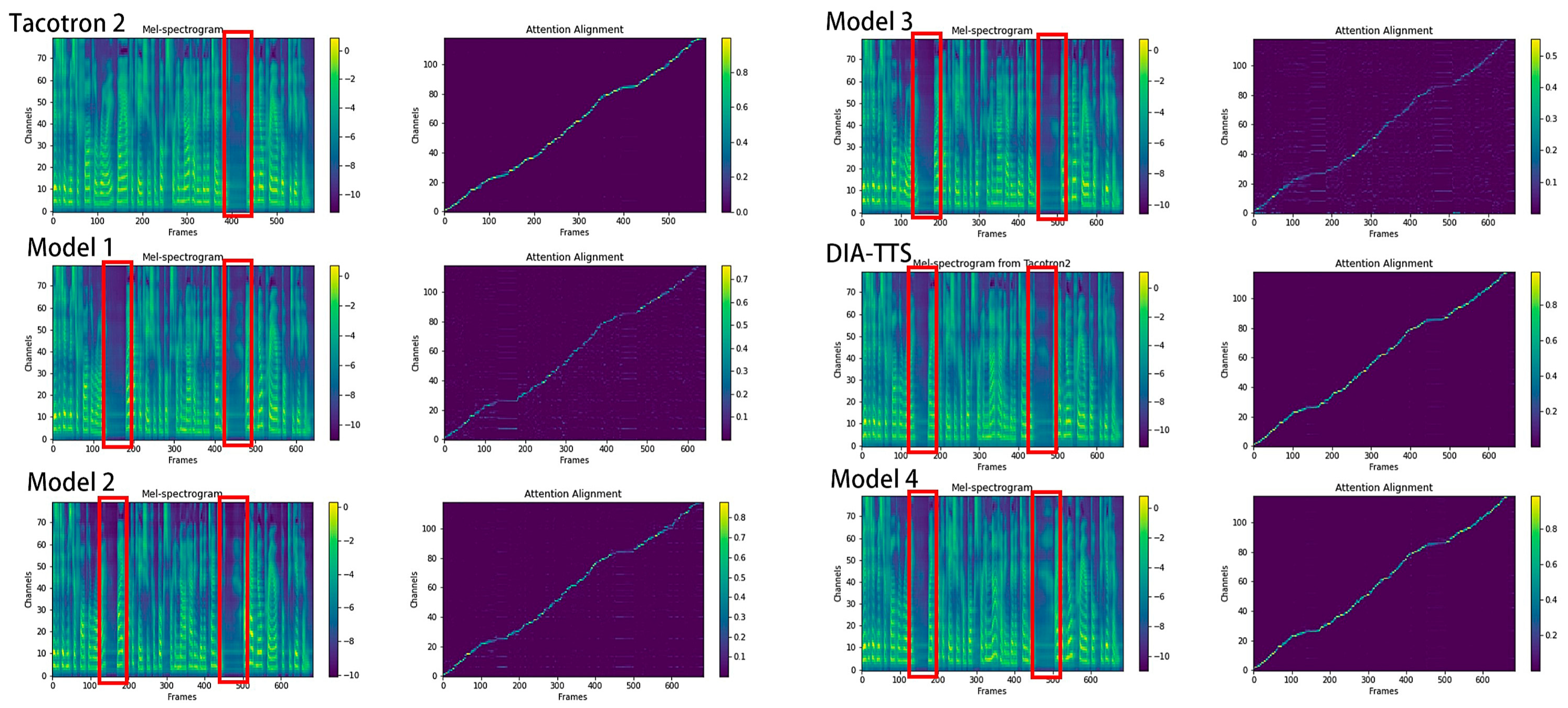

3.3. Human Subjective Evaluation Experiments

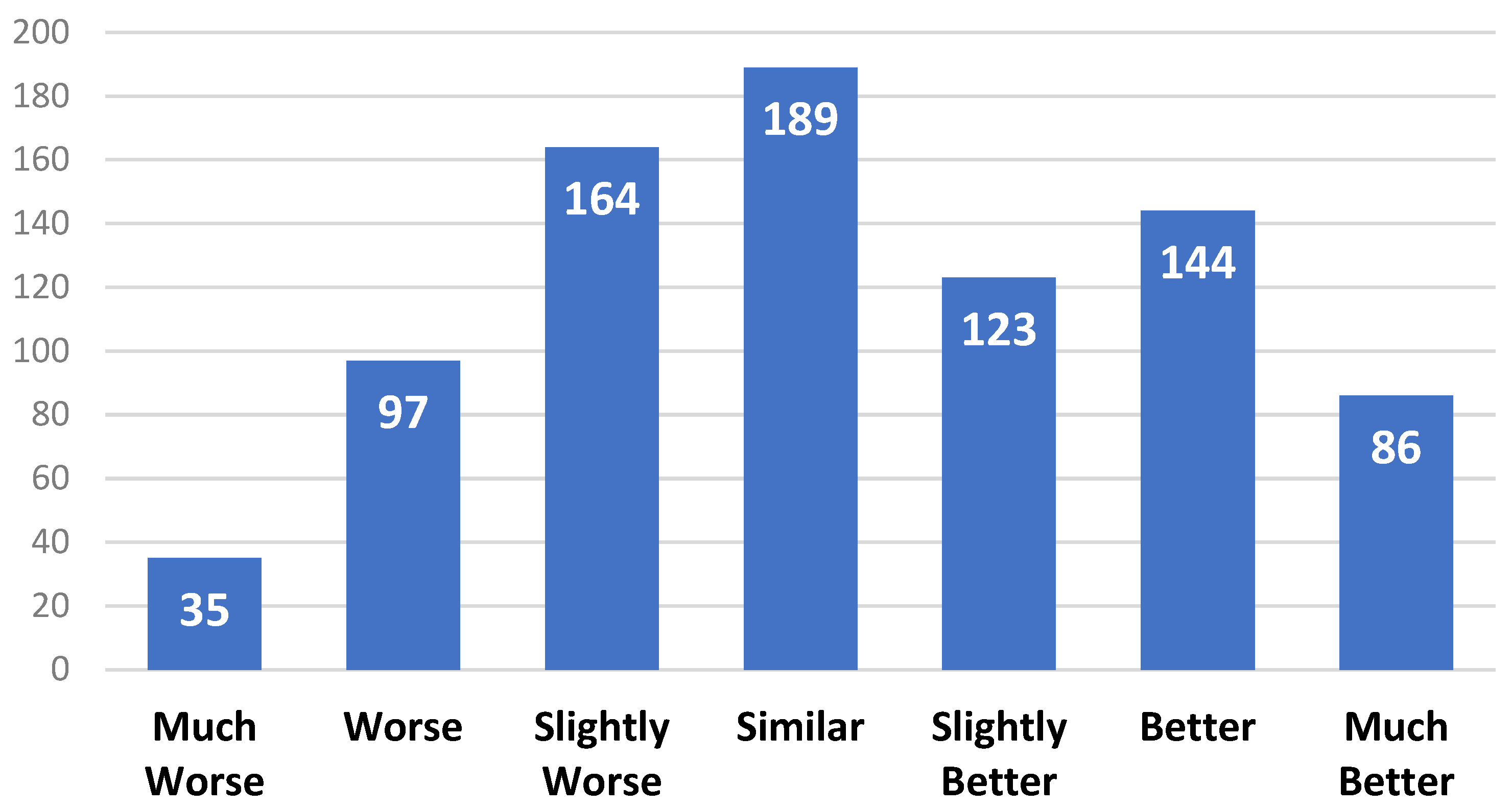

3.4. Ablation Study

4. Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| System | MOS | CMOS |
|---|---|---|
| Tacotron2 + WaveGlow | 4.38 ± 0.052 | Reference |
| DIA-TTS + WaveGlow | 4.48 ± 0.045 | +0.257 |
| DIA-TTS + WaveNet | 4.41 ± 0.047 | N/A |
| Ground Truth | 4.51 ± 0.039 | N/A |
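The MOS table above can be read in two ways: how far each system sits from the ground-truth recordings, and whether the reported ± ranges of two systems intersect. The sketch below works only from the table values. It assumes that each ± figure is a symmetric half-width around the mean; the source does not state whether it is a confidence interval or a standard error. The helper names (`interval`, `overlaps`, `gap_to_ground_truth`) are hypothetical.

```python
# MOS means and +/- half-widths copied from the MOS table above.
# Assumption: each +/- value is treated as a symmetric half-width; the
# source does not say which statistic it denotes.
MOS = {
    "Tacotron2 + WaveGlow": (4.38, 0.052),
    "DIA-TTS + WaveGlow": (4.48, 0.045),
    "DIA-TTS + WaveNet": (4.41, 0.047),
    "Ground Truth": (4.51, 0.039),
}


def interval(name):
    """Return (low, high) bounds of a system's MOS range."""
    mean, half = MOS[name]
    return mean - half, mean + half


def overlaps(a, b):
    """True if the MOS ranges of systems a and b intersect."""
    lo_a, hi_a = interval(a)
    lo_b, hi_b = interval(b)
    return lo_a <= hi_b and lo_b <= hi_a


def gap_to_ground_truth(name):
    """MOS points separating a system's mean from the ground-truth mean."""
    return round(MOS["Ground Truth"][0] - MOS[name][0], 3)


if __name__ == "__main__":
    for system in MOS:
        if system != "Ground Truth":
            print(f"{system}: gap {gap_to_ground_truth(system):.2f}, "
                  f"overlaps ground truth: {overlaps(system, 'Ground Truth')}")
    print("DIA-TTS vs Tacotron2 (both WaveGlow) overlap:",
          overlaps("DIA-TTS + WaveGlow", "Tacotron2 + WaveGlow"))
```

Under this reading, only the DIA-TTS + WaveGlow range reaches the ground-truth range, and it does not intersect the Tacotron2 + WaveGlow range. Interval overlap is only a rough screen, not a substitute for a proper significance test.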

| Model | Inheritance Depth | LSF | MOS | Inference Time (s) |
|---|---|---|---|---|
| Tacotron2 | 1 | 2 | 4.38 ± 0.052 | 0.724 |
| Model 1 | 2 | 2 | 4.38 ± 0.027 | 0.966 |
| Model 2 | 2 | 3 | 4.40 ± 0.052 | 1.032 |
| Model 3 | 3 | 3 | 4.43 ± 0.044 | 1.270 |
| DIA-TTS | 3 | 4 | 4.48 ± 0.045 | 1.310 |
| Model 4 | 4 | 5 | 4.49 ± 0.043 | 1.625 |
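The ablation table above can also be read as a quality/latency trade-off. The sketch below recomputes, from the table values only, each configuration's MOS gain and slowdown relative to Tacotron2, plus the extra inference time paid per 0.01 MOS at each successive step. The helper names are hypothetical. The ± half-widths are ignored, so every difference is a point estimate. It also assumes the reported inference times were measured under comparable conditions.

```python
# Rows copied from the ablation table above:
# (model, inheritance depth, LSF, mean MOS, inference time in seconds).
ABLATION = [
    ("Tacotron2", 1, 2, 4.38, 0.724),
    ("Model 1", 2, 2, 4.38, 0.966),
    ("Model 2", 2, 3, 4.40, 1.032),
    ("Model 3", 3, 3, 4.43, 1.270),
    ("DIA-TTS", 3, 4, 4.48, 1.310),
    ("Model 4", 4, 5, 4.49, 1.625),
]


def relative_to_baseline(rows):
    """Return (model, MOS gain, slowdown factor) versus the first row."""
    _, _, _, base_mos, base_time = rows[0]
    return [
        (name, round(mos - base_mos, 2), round(t / base_time, 2))
        for name, _, _, mos, t in rows
    ]


def marginal_cost(rows):
    """Extra inference seconds per 0.01 MOS gained between consecutive rows.

    A step with no MOS gain yields None, since the cost is unbounded there.
    """
    out = []
    for prev, cur in zip(rows, rows[1:]):
        d_mos = round(cur[3] - prev[3], 2)   # MOS difference (point estimate)
        d_time = cur[4] - prev[4]            # added inference time in seconds
        cost = round(d_time / (d_mos * 100), 3) if d_mos > 0 else None
        out.append((prev[0], cur[0], cost))
    return out


if __name__ == "__main__":
    for name, gain, slowdown in relative_to_baseline(ABLATION):
        print(f"{name}: MOS +{gain:.2f}, {slowdown:.2f}x baseline time")
    for a, b, cost in marginal_cost(ABLATION):
        print(f"{a} -> {b}: {cost} s per 0.01 MOS")
```

On these numbers the step from Model 3 to DIA-TTS is the cheapest per unit of MOS (about 0.008 s per 0.01 MOS). The further step to Model 4 costs about 0.315 s for its 0.01 MOS, roughly 40 times as much.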

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, J.; Xu, Z.; He, X.; Wang, J.; Liu, B.; Feng, R.; Zhu, S.; Wang, W.; Li, J. DIA-TTS: Deep-Inherited Attention-Based Text-to-Speech Synthesizer. Entropy 2023, 25, 41. https://doi.org/10.3390/e25010041

Yu J, Xu Z, He X, Wang J, Liu B, Feng R, Zhu S, Wang W, Li J. DIA-TTS: Deep-Inherited Attention-Based Text-to-Speech Synthesizer. Entropy. 2023; 25(1):41. https://doi.org/10.3390/e25010041

Chicago/Turabian Style: Yu, Junxiao, Zhengyuan Xu, Xu He, Jian Wang, Bin Liu, Rui Feng, Songsheng Zhu, Wei Wang, and Jianqing Li. 2023. "DIA-TTS: Deep-Inherited Attention-Based Text-to-Speech Synthesizer" Entropy 25, no. 1: 41. https://doi.org/10.3390/e25010041

APA Style: Yu, J., Xu, Z., He, X., Wang, J., Liu, B., Feng, R., Zhu, S., Wang, W., & Li, J. (2023). DIA-TTS: Deep-Inherited Attention-Based Text-to-Speech Synthesizer. Entropy, 25(1), 41. https://doi.org/10.3390/e25010041