Abstract

Large corporations, government entities and institutions such as hospitals and census bureaus routinely collect our personal and sensitive information for providing services. A key technological challenge is designing algorithms for these services that provide useful results, while simultaneously maintaining the privacy of the individuals whose data are being shared. Differential privacy (DP) is a cryptographically motivated and mathematically rigorous approach for addressing this challenge. Under DP, a randomized algorithm provides privacy guarantees by approximating the desired functionality, leading to a privacy–utility trade-off. Strong (pure DP) privacy guarantees are often costly in terms of utility. Motivated by the need for a more efficient mechanism with better privacy–utility trade-off, we propose Gaussian FM, an improvement to the functional mechanism (FM) that offers higher utility at the expense of a weakened (approximate) DP guarantee. We analytically show that the proposed Gaussian FM algorithm can offer orders of magnitude smaller noise compared to the existing FM algorithms. We further extend our Gaussian FM algorithm to decentralized-data settings by incorporating the protocol and propose . Our method can offer the same level of utility as its centralized counterparts for a range of parameter choices. We empirically show that our proposed algorithms outperform existing state-of-the-art approaches on synthetic and real datasets.

1. Introduction

Differential privacy (DP) [1] has emerged as a de facto standard for privacy-preserving technologies in research and practice due to the quantifiable privacy guarantee it provides. DP involves randomizing the outputs of an algorithm in such a way that the presence or absence of a single individual’s information within a database does not significantly affect the outcome of the algorithm. DP typically introduces randomness in the form of additive noise, ensuring that an adversary cannot infer any information about a particular record with high confidence. The key challenge is to keep the performance or utility of the noisy algorithm close enough to the unperturbed one to be useful in practice [2].

In its pure form, DP measures privacy risk by a parameter , which can be interpreted as the privacy budget, that bounds the log-likelihood ratio of the output of a private algorithm under two datasets differing in a single individual’s data. The smaller used, the greater the privacy ensured, but at the cost of worse performance. In privacy-preserving machine learning models, higher values of are generally chosen to achieve acceptable utility. However, setting to arbitrarily large values severely undermines privacy, although there are no hard threshold values for above which formal guarantees provided by DP become meaningless in practice [3]. In order to improve utility for a given privacy budget, a relaxed definition of differential privacy, referred to as -DP, was proposed [4]. Under this privacy notion, a randomized algorithm is considered privacy-preserving if the privacy loss of the output is smaller than with a high probability (i.e., with probability at least ) [5].

Our current work is motivated by the necessity of a decentralized differentially private algorithm to efficiently solve practical signal estimation and learning problems that (i) offers better privacy–utility trade-off compared to existing approaches, and (ii) offers similar utility as the pooled-data (or centralized) scenario. Some noteworthy real-world examples of systems that may need such differentially private decentralized solutions include [6]: (i) medical research consortium of healthcare centers and labs, (ii) decentralized speech processing systems for learning model parameters for speaker recognition, (iii) multi-party cyber-physical systems. To this end, we first focus on improving the privacy–utility trade-off of a well known DP mechanism, called the functional mechanism (FM) [7]. The FM approach is more general and requires fewer assumptions on the objective function than other objective perturbation approaches [8,9].

The functional mechanism was originally proposed for “pure” -DP. However, it involves an additive noise with very large variance for datasets with even moderate ambient dimension, leading to a severe degradation in utility. We propose a natural “approximate” -DP variant using Gaussian noise and show that the proposed Gaussian FM scheme significantly reduces the additive noise variance. A recent work by Ding et al. [10] proposed relaxed FM using the Extended Gaussian mechanism [11], which also guarantees approximate -DP instead of pure DP. However, we will show analytically and empirically that, just like the original FM, the relaxed FM also suffers from prohibitively large noise variance even for moderate ambient dimensions. Our tighter sensitivity analysis for the Gaussian FM, which is different from the technique used in [10], allows us to achieve much better utility for the same privacy guarantee. We further extend the proposed Gaussian FM framework to the decentralized or “federated” learning setting using the protocol [6]. Our algorithm can offer the same level of utility as the centralized case over a range of parameters. Our empirical evaluation of the proposed algorithms on synthetic and real datasets demonstrates the superiority of the proposed schemes over the existing methods. We now review the relevant existing research works in this area before summarizing our contributions.

Related Works. There is a vast literature on the perturbation techniques to ensure DP in machine learning algorithms. The simplest method for ensuring that an algorithm satisfies DP is input perturbation, where noise is introduced to the input of the algorithm [2]. Another common approach is output perturbation, which obtains DP by adding noise to the output of the problem. In many machine learning algorithms, the underlying objective function is minimized with gradient descent. As the gradient is dependent on the privacy-sensitive data, randomization is introduced at each step of the gradient descent [9,12]. The amount of noise we need to add at each step depends on the sensitivity of the function to changes in its input [4]. Objective perturbation [8,9,13] is another state-of-the-art method to obtain DP, where noise is added to the underlying objective function of the machine learning algorithm, rather than its solutions. A newly proposed take on output perturbation [14] injects noise after model convergence, which imposes some additional constraints. In addition to optimization problems, Smith [15] proposed a general approach for computing summary statistics using the sample-and-aggregate framework and both the Laplace and Exponential mechanisms [16].

Zhang et al. originally proposed functional mechanism (FM) [7] as an extension to the Laplace mechanism. FM has been used in numerous studies to ensure DP in practical settings. Jorgensen et al. applied FM in personalized differential privacy (PDP) [17], where the privacy requirements are specified at the user-level, rather than by a single, global privacy parameter. FM has also been combined with homomorphic encryption [18] to obtain both data secrecy and output privacy, as well as with fairness-aware learning [10,19] in classification models. The work of Fredrikson et al. [20], which demonstrated privacy in pharmacogenetics using FM and other DP mechanisms, is of particular interest to us. Pharmacogenetic models [21,22,23,24] contain sensitive clinical and genomic data that need to be protected. However, poor utility of differentially private pharmacogenetic models can expose patients to increased risk of disease. Fredrikson et al. [20] tested the efficacy of such models against attribute inference by using a model inversion technique. Their study shows that, although not explicitly designed to protect attribute privacy, DP can prevent attackers from accurately predicting genetic markers if is sufficiently small (≤1). However, the small value of results in poor utility of the models due to excessive noise addition, leading them to conclude that when utility cannot be compromised much, the existing methods do not give an ϵ for which state-of-the-art DP mechanisms can be reasonably employed. As mentioned before, Ding et al. [10] recently proposed relaxed FM in an attempt to improve upon the original FM using the Extended Gaussian mechanism [11], which offered approximate DP guarantee.

DP algorithms provide different guarantees than Secure Multi-party Computation (SMC)-based methods. Several studies [25,26,27] applied a combination of SMC and DP for distributed learning. Gade and Vaidya [25] demonstrated one such method in which each site adds and subtracts arbitrary functions to confuse the adversary. Heikkilä et al. [26] also studied the relationship of additive noise and sample size in a distributed setting. In their model, S data holders communicate their data to M computation nodes to compute a function. Tajeddine et al. [27] used DP-SMC on vertically partitioned data, i.e., where data of the same participants are distributed across multiple parties or data holders. Bonawitz et al. [28] proposed a communication-efficient method for federated learning over a large number of mobile devices. More recently, Heikkilä et al. [29] considered DP in a cross-silo federated learning setting by combining it with additive homomorphic secure summation protocols. Xu et al. [30] investigated DP for multiparty learning in vertically partitioned data setting. Their proposed framework dissects the objective function into single-party and cross-party sub-functions, and applies functional mechanisms and secure aggregation to achieve the same utility as the centralized DP model. Inspired by the seminal work of Dwork et al. [31] that proposed distributed noise generation for preserving privacy, Imtiaz et al. [6] proposed the Correlation Private Estimation () protocol. employs a similar principle as Anandan and Clifton [32] to reduce the noise added for DP in decentralized-data settings.

Our Contributions. As mentioned before, we are motivated by the necessity of a decentralized differentially private algorithm that injects a smaller amount of noise (compared to existing approaches) to efficiently solve practical signal estimation and learning problems. To that end, we first propose an improvement to the existing functional mechanism. We achieve this by performing a tighter characterization of the sensitivity analysis, which significantly reduces the additive noise variance. As we utilize the Gaussian mechanism [33] to ensure -DP, we call our improved functional mechanism Gaussian FM. Using our novel sensitivity analysis, we show that the proposed Gaussian FM injects a much smaller amount of additive noise compared to the original FM [7] and the relaxed FM [10] algorithms. We empirically show the superiority of Gaussian FM in terms of privacy guarantee and utility by comparing it with the corresponding non-private algorithm, the original FM [7], the relaxed FM [10], the objective perturbation [8], and the noisy gradient descent [12] methods. Note that the original FM [7] and the objective perturbation [8] methods guarantee pure DP, whereas the other methods guarantee approximate DP. We compare our -DP Gaussian FM with the pure DP algorithms as a means for investigating how much performance/utility gain one can achieve by trading off pure the DP guarantee with an approximate DP guarantee. Additionally, the noisy gradient descent method is a multi-round algorithm. Due to the composition theorem of differential privacy [33], the privacy budgets in multi-round algorithms accumulate across the number of iterations during training. In order to perform better accounting for the total privacy loss in the noisy gradient descent algorithm, we use Rényi differential privacy [34].

Considering the fact that machine learning algorithms are often used in decentralized/federated data settings, we adapt our proposed Gaussian FM algorithm to decentralized/federated data settings following the () [6] protocol, and propose . In many signal processing and machine learning applications, where privacy regulations prevent sites from sharing the local raw data, joint learning across datasets can yield discoveries that are impossible to obtain from a single site. Motivated by scientific collaborations that are common in human health research, improves upon the conventional decentralized DP schemes and achieves the same level of utility as the pooled-data scenario in certain regimes. It has been shown [6] that can benefit computations with sensitivies satisfying some conditions. Many functions of interest in machine learning and deep neural networks have sensitivites that satisfy these conditions. Our proposed algorithm utilizes the Stone–Weierstrass theorem [35] to approximate a cost function in the decentralized-data setting and employs the protocol.

To summarize, the goal of our work is to improve the privacy–utility trade-off and reduce the amount of noise in the functional mechanism at the expense of approximate DP guarantee for applications of machine learning in decentralized/federated data settings, similar to those found in research consortia. Our main contributions are:

- We propose Gaussian FM as an improvement over the existing functional mechanism by performing a tighter sensitivity analysis. Our novel analysis has two major features: (i) the sensitivity parameters of the data-dependent (hence, privacy-sensitive) polynomial coefficients of the Stone–Weierstrass decomposition of the objective function are free of the dataset dimensionality; and (ii) the additive noise for privacy is tailored for the order of the polynomial coefficient of the Stone–Weierstrass decomposition of the objective function, rather than being the same for all coefficients. These features give our proposed Gaussian FM a significant advantage by offering much less noisy function computation compared to both the original FM [7] and the relaxed FM [10], as shown for linear and logistic regression problems. We also empirically validate this on real and synthetic data.

- We extend our Gaussian FM to decentralized/federated data settings to propose , a novel extension of the functional mechanism for decentralized-data. To this end, we note another significant advantage of our proposed Gaussian FM over the original FM: the Gaussian FM can be readily extended to decentralized/federated data settings by exploiting the fact that the sum of a number of Gaussian random variables is another Gaussian random variable, which is not true for Laplace random variables. We show that the proposed can achieve the same utility as the pooled-data scenario for some parameter choices. To the best of our knowledge, our work is the first functional mechanism for decentralized-data settings.

- We demonstrate the effectiveness of our algorithms with varying privacy and dataset parameters. Our privacy analysis and empirical results on real and synthetic datasets show that the proposed algorithms can achieve much better utility than the existing state-of-the-art algorithms.

2. Definitions and Preliminaries

Notation. We denote vectors, matrices, and scalars with bold lower case letters , bold upper case letters , and unbolded letters , respectively. We denote indices with lower case letters and they typically run from 1 to their upper case versions (). The n-th column of a matrix X is denoted as . We denote the Euclidean (or ) norm of a vector and the spectral norm of a matrix with . Finally, we denote the inner product of two matrices A and B as .

2.1. Definitions

Definition 1

(()-Differential Privacy [4]). Let us consider a domain of datasets consisting of N records, and where D and differ in a single record (neighboring datasets). Then, for all measurable and all neighboring data sets , an algorithm provides -differential privacy (-DP) if

- This definition is also known as bounded differential privacy (as opposed to unbounded differential privacy [1]). One way to interpret this is that an algorithm satisfies -DP if the probability distribution of the output of does not change significantly if the input database is changed by one sample. That is to say, whether or not a particular individual takes part in a differentially private study, the outcome of the study is not changed by much. An adversary attempting to identify an individual will not be able to verify the individual’s presence or absence in the study with high confidence. The privacy of the individual is thus preserved by plausible deniability. In the definition of DP, are privacy parameters, where lower ensure more privacy. The parameter can be interpreted as the probability that the algorithm fails to provide privacy risk . Note that -DP is known as approximate differential privacy whereas -differential privacy (-DP) is known as pure differential privacy. In general, we denote approximate (bounded) differentially private algorithms with DP. An important feature of DP is that post-processing of the output does not change the privacy guarantee, as long as that post-processing does not use the original data [33]. Among the most commonly used mechanisms for formulating a DP algorithm are additive noise mechanisms such as the Gaussian [4] or Laplace [33] mechanisms, and random sampling using the exponential mechanism [16]. For additive noise mechanisms, the standard deviation of the additive noise is scaled to the sensitivity of the computation.

Definition 2

(-Sensitivity [4]). Given neighboring datasets D and , the -sensitivity of a vector-valued function is

We focus on and 2 in this paper.

Definition 3

(Gaussian Mechanism [33]). Let be an arbitrary function with -sensitivity Δ. The Gaussian mechanism with parameter τ adds noise scaled to to each of the D entries of the output and satisfies -differential privacy for if

- Note that, for any given pair, we can calculate a noise variance such that addition of a noise term drawn from guarantees ()-differential privacy. There are infinitely many pairs that yield the same . Therefore, we parameterize our methods using [36] in this paper. We refer the reader to [37,38,39] for a broader discussion of privacy parameter .

Definition 4

(Rényi Differential Privacy (RDP) [34]). A randomized mechanism is -Rényi differentially private if, for any adjacent , the following holds:

Here, , and and are probability density functions defined on .

Analyzing the total privacy loss of a multi-round algorithm, each stage of which is DP, is a challenging task. It has been shown [34,40] that the advanced composition theorem [33] for -differential privacy can be loose. Hence, we use RDP, which offers a much simpler composition rule that is shown to be tight. Here, we review the properties of RDP [34] that we utilize in our analysis in Section 3.

Proposition 1

(From RDP to Differential Privacy [34]). If is an -RDP mechanism, then it also satisfies -differential privacy for any .

Proposition 2

(Composition of RDP [34]). Let be -RDP and be -RDP. Then the mechanism defined as , where and , satisfies -RDP.

Proposition 3

(RDP and Gaussian Mechanism [34]). If has -sensitivity 1, then the Gaussian mechanism , where satisfies -RDP. Additionally, a composition of T Gaussian mechanisms satisfies -RDP.

Correlation Assisted Private Estimation () [6]. As mentioned before, we utilize the protocol for developing . In Section 5.2 we describe the trust/collusion model in detail, and discuss how the correlated noise in a decentralized-data setting is used to reduce the excess noise introduced in conventional decentralized DP algorithms. We use the terms “distributed” and “decentralized” interchangeably in this paper. Note that the scheme, and consequently the proposed algorithm can be readily extended (see Section III.C of Imtiaz et al. [6]) for federated learning [29] settings.

The protocol considers a decentralized data setting with S sites and a central aggregator node in an “honest but curious” threat model [6]. For simplicity, we consider the symmetric setting: each site holds a dataset of disjoint data samples, where the total number of samples across all sites is N. overcomes the utility degradation in conventional decentralized DP schemes and achieves the same noise variance as that of the pooled-data scenario in certain parameter regimes. The privacy of is given by Theorem 1 and the claim that the noise variance of the estimator is exactly the same as if all data were present at the aggregator is formalized in Lemma 1. Here, we review the relevant properties of the scheme for extending our proposed Gaussian FM to the decentralized-data setting. We refer the reader to Imtiaz et al. [6] for the proofs of these properties.

Theorem 1

(Privacy of scheme [6]). In a decentralized data setting with and for all sites , if at most collude after execution, then guarantees -differential privacy for each site, where satisfy the relation , and are given by

Lemma 1

([6]). Consider the symmetric setting: and for all sites . Let the variances of the noise terms and be and , respectively. If we denote the variance of the additive noise (for preserving privacy) in the pooled-data scenario by and the variance of the estimator by then protocol achieves the same noise variance as the pooled-data scenario (i.e., ).

Proposition 4

(Performance improvement using [6]). If the local noise variances are for then the scheme provides a reduction in noise variance over the conventional decentralized DP scheme in the symmetric setting ( and ∀), where and are the noise variances of the final estimate at the aggregator in the conventional scheme and the scheme, respectively.

Proposition 5

(Scope of [6]). Consider a decentralized setting with sites in which site has a dataset of samples and . Suppose the sites are employing the scheme to compute a function with -sensitivity . Denote and observe the ratio . Then the protocol achieves , if (i) for convex ; and (ii) for general .

2.2. Functional Mechanism [7]

In this section, we first review the existing functional mechanism through a regression model following [7] before describing our proposed improvement. Let be a dataset that contains N samples of the form , where is the feature vector and is the response for . Without loss of generality, we assume for each sample that . The objective is to construct a regression model that enables one to predict any based on . Depending on the regression model, the mapping function can be of various types. Without loss of generality, it can be parameterized with a D-dimensional vector of real numbers. To evaluate whether leads to an accurate model, a cost function f is defined to measure the deviation between the original and predicted values of , given as the model parameters. The optimal model parameter is defined as

where the empirical average cost function is

Note that depends on the data samples. In cases where the data are privacy-sensitive, the empirical average cost function (or any function computed from it, such as its gradient or the optimizer ) may reveal private information about the members of the dataset. To make the model differentially private, one approach is to add noise to the gradients of the cost function at every iteration [12]. We refer to this approach as noisy gradient descent in this paper. Another approach is the to perturb the objective function [7,8,9,10]. In particular, the original FM [7] and the relaxed FM [10] use a randomized approximation of the objective function.

Now, recall that contains the model parameters . We define for some . Let denote the set of all with degree , i.e.,

For example, , , and . By the Stone–Weierstrass Theorem [35], any continuous and differentiable can be always written as a (potentially infinite) sum of monomials of , i.e., for some , we have

where denotes the coefficient of in the polynomial. Note that is a function of the n-th data sample. Consequently, the as expressed above depends on the model parameters through and on the data samples through . The expression for average cost in (1) can now be written as

For regression analysis on two neighboring datasets and differing in a single sample, the -sensitivity of the data-dependent term in (2) is computed as [7]:

In FM, Zhang et al. [7] proposed to perturb by injecting Laplace noise with variance into each coefficient of the polynomial. FM achieves -DP by obtaining the optimal model parameters that minimize the noise-perturbed function .

As mentioned before, decomposition such as (2) can be performed for any continuous and differentiable cost function . However, depending on the complexity of , the decomposition may be non-trivial. In Section 4, we show how such decomposition can be performed on linear regression and logistic regression problems, as illustrative examples.

3. Functional Mechanism with Approximate Differential Privacy: Gaussian FM

Zhang et al. [7] computed the -sensitivity of the data-dependent terms for linear regression and logistic regression problems. The is shown to be for linear regression, and for logistic regression. We note that grows quadratically with the ambient dimension of the data samples, resulting in a excessively large amount of noise to be injected into the objective function. Additionally, Ding et al. [10] proposed relaxed FM, a “utility-enhancement scheme”, by replacing the Laplace mechanism with the Extended Gaussian mechanism [11], and thus achieving slightly better utility than the original FM at the expense of an approximate DP guarantee instead of a pure DP guarantee. However, Ding et al. [10] showed that the -sensitivity of the data-dependent terms for the logistic regression problem is . Additionally, using the technique outlined in [10], it can be shown that the -sensitivity of the data-dependent terms is for the linear regression problem (please see Appendix A for details). For both cases, we observe that grows linearly with the ambient dimension of the data samples. Therefore, the privacy-preserving additive noise variances in both the original FM and relaxed FM schemes are data-dimensionality dependent, and therefore, can be prohibitively large even for moderate D. Moreover, both FM and relaxed FM schemes add the same amount of noise to each polynomial coefficient irrespective of the order j. With a tighter characterization, we show in Section 4 that the sensitivities of these coefficients are different for different order j. We reduce the amount of added noise by addressing these issues and performing a novel sensitivity analysis. The key points are as follows:

- Instead of computing the -DP approximation of the objective function using the Laplace mechanism, we use the Gaussian mechanism to compute the ()-DP approximation of . This gives a weaker privacy guarantee than the pure differential privacy, but provides much better utility.

- Recall that the original FM achieves -DP by adding Laplace noise scaled to the -sensitivity of the data-dependent terms of the objective function in (2). As we use the Gaussian mechanism, we require -sensitivity analysis. To compute the -sensitivity of the data-dependent terms of the objective function in (2), we first define an array that contains as its entries for all . The term “array” is used because the dimension of depends on the cardinality of . For example, for , is a scalar because ; for , can be expressed as a D-dimensional vector because ; for , can be expressed as a matrix because .

- We rewrite the objective function aswhere is the array containing all as its entries. Note that and have the same dimensions and number of elements. We define the -sensitivity of aswhere and are computed on neighboring datasets and , respectively. Following the Gaussian mechanism [33], we can calculate the differentially private estimate of , denoted aswhere the noise array has the same dimension as , and contains entries drawn i.i.d. from with . Finally, we have

- As the function depends on the data only through , this computation satisfies ()-differential privacy. Our proposed Gaussian FM is shown in detail in Algorithm 1.

Theorem 2

(Privacy of the Gaussian FM (Algorithm 1)). Consider Algorithm 1 with privacy parameters , and the empirical average cost function represented as in (3). Then Algorithm 1 computes an differentially private approximation to . Consequently, the minimizer satisfies -differential privacy.

| Algorithm 1 Gaussian FM |

Proof.

The proof of Theorem 2 follows from the fact that the function depends on the data samples only through . The computation of is -differentially private by the Gaussian mechanism [4,33]. Therefore, the release of satisfies -differential privacy. One way to rationalize this is to consider that the probability of the event of selecting a particular set of is the same as the event of formulating a function with that set of . Therefore, it suffices to consider the joint density of the and find an upper bound on the ratio of the joint densities of the under two neighboring datasets and . As we employ the Gaussian mechanism to compute , the ratio is upper bounded by with probability at least . Therefore, the release of satisfies -differential privacy. Furthermore, differential privacy is post-processing invariant. Therefore, the computation of the minimizer also satisfies -differential privacy. □

Privacy Analysis of Noisy Gradient Descent [12] using Rényi Differential Privacy. One of the most crucial qualitative properties of DP is that it allows us to evaluate the cumulative privacy loss over multiple computations [33]. Cumulative, or total, privacy loss is different from ()-DP in multi-round machine learning algorithms. In order to demonstrate the superior privacy guarantee of the proposed Gaussian FM, we compare it to the existing functional mechanism [7], the relaxed functional mechanism [10], the objective perturbation [8], and the noisy gradient descent [12] method. Note that, similar to objective perturbation, FM and relaxed FM, the proposed Gaussian FM injects randomness in a single round, and therefore does not require privacy accounting. However, the noisy gradient descent method involves addition of noise in each step the gradient is computed. That is, noise is added to the computed gradients of the parameters of the objective function during optimization. Since it is a multi-round algorithm, the overall used during optimization is different from the for every iteration. We follow the analysis procedure outlined in [6] for the privacy accounting of the noisy gradient descent algorithm. Note that Proposition 3 described in Section 2.1 is defined for functions with unit -sensitivity. Therefore, if a noise from is added to a function with sensitivity , then the resulting mechanism satisfies -RDP. Now, according to Proposition 3, the T-fold composition of Gaussian mechanisms satisfies -RDP. Finally, according to Proposition 1, it also satisfies -differential privacy for any , where . For a given value of , we can express the value of the optimal overall as a function of :

where is given by

We compute the overall following this procedure for the noisy gradient descent algorithm [12] in our experiments in Section 6.

4. Application of Gaussian FM in Regression Analysis

In this section, we demonstrate how our proposed Gaussian FM can be applied to linear and logistic regression problems to achieve ()-DP. For both cases, we first decompose the objective function (i.e., the empirical average cost function) into a finite series of polynomials, inject noise into the coefficients (i.e., the only data-dependent components in the decomposition) using Gaussian mechanism, and finally minimize the ()-differentially private objective function. As before, we assume that we have a dataset with N samples of the form , where for each sample , the D-dimensional feature vector is (normalized to ensure ) and the corresponding output is .

4.1. Linear Regression

For our linear regression problem, we assume . Let be the parameter vector. The goal of linear regression is to find the optimal so that . The empirical average cost function is defined as

Using simple algebra, this equation can be decomposed into a series of polynomials as

As we intend to compute the differentially private minimizer , we observe that the representation of is of the form with . The expressions for are

Here, is a scalar, is a D-dimensional vector, and is a symmetric matrix, since is an matrix containing as its columns. The expressions for are

The next step is finding the sensitivities of using (4). Let and be two neighboring datasets differing in only one sample, e.g., the last samples and . Now, the -sensitivity of is

since and hence . Next, the -sensitivity of is

where the second line follows from the triangle inequality, and the last line follows from the assumptions that and . Finally, the -sensitivity of is

The proof of the inequality in the last line is as follows:

Proof.

The term is a symmetric matrix, whose norm can be expressed [41] as . It follows that

□

After computing the -sensitivity of for , and 2, we can now compute the noise array , where , and then compute following (5). Using these, we can compute the differentially private according to (6), and consequently, the minimizer . Note that, unlike the existing FM and relaxed FM, the additive noise variances of our proposed Gaussian FM do not depend on the sample dimension D. More specifically, for the linear regression problem, the -sensitivity of the coefficients in FM [7] is and the -sensitivity of the coefficients in relaxed FM [10] is (see Appendix A for the proof). Both of these sensitivities are orders of magnitude larger than that we achieved for , and for practical values of D and N. Thus, the proposed Gaussian FM can offer the ()-differentially private approximation with much less noise, which results in a ()-differentially private model that is much closer to the true model . We show empirical validation on synthetic and real datasets in Section 6.

4.2. Logistic Regression

For the logistic regression problem, we assume to be the class labels. The class label is approximated using the sigmoid function defined as . Let be the parameter vector. The goal of logistic regression is to find the optimal so that . The empirical average cost function for logistic regression is defined as

Unlike linear regression, the simplified form of in the second line cannot be represented with a finite series of polynomials. Zhang et al. [7] proposed an approximate polynomial form of using Taylor series expansion, written as

Using simple algebra and the values of for , and 2, i.e., , , and , we obtain

As before, we intend to compute the differentially private minimizer , and we observe that the representation of is of the form with . The expressions for are

Again, is a scalar, a D-dimensional vector, and a matrix for , and 2, respectively. We can express for , and 2 the same way as we did for linear regression in Section 4.1. To compute the sensitivities of using (4), let and be two neighboring datasets differing in only the last samples, which are and , respectively. Now, the -sensitivity of is . The -sensitivity of is

where , since , and . Finally, the -sensitivity of is

where the inequality follows from the expression for the norm of a symmetric matrix, as shown in Section 4.1. After computing the -sensitivity of for , and 2, we can now compute the noise array , where , and then compute following (5). Using these, we can compute the differentially-private according to (6), and consequently, the minimizer . Again we note that the -sensitivity of the coefficients in FM [7] is and the -sensitivity of the coefficients in relaxed FM [10] is for logistic regression. As in the case of linear regression, both of these sensitivities are orders of magnitude larger than that we achieved for , and for practical values of D and N. Since additive noise variances of our proposed Gaussian FM do not depend on the sample dimension D, we obtain , the ()-differentially private approximation to , with much less noise. As mentioned before, we validate our analysis empirically using synthetic and real datasets in Section 6.

4.3. Avoiding Unbounded Noisy Objective Functions

Our proposed Gaussian FM achieves (,)-DP by injecting noise drawn from a Gaussian distribution into the coefficients of the Stone–Weierstrass decomposition of the empirical average objective function. However, the injection of noise may render the objective function unbounded, which means there may not exist any optimal solution for the noisy objective function. As shown in Section 4.1 and Section 4.2, the Stone–Weierstrass decomposition would transform the objective functions of linear and logistic regression problems into quadratic polynomials in our Gaussian FM. Let be the matrix representation of the quadratic polynomial, where is a symmetric and positive semi-definite matrix, is a D-dimensional vector and is a scalar. After injection of noise, the noisy objective function becomes . In order to ensure that is bounded after introducing noise, it suffices to make sure is also symmetric and positive semi-definite [42].

We follow the seminal work of Dwork et al. [43] in our implementation—the symmetry of is ensured by constructing the noise matrix in such a way that noise is first drawn from the Gaussian distribution to form an upper triangular matrix, and the elements of the upper triangle part of the matrix (excluding the diagonal elements) are then copied to its lower triangle part. Adding the symmetric noise matrix to results in a symmetric . However, may still be unbounded if is not positive semi-definite. To resolve this, we perform eigen-decomposition of to obtain the eigenvalues and corresponding eigenvectors. We then project the eigenvalues onto the non-negative orthant. Let be the eigen-decomposition of , where is a matrix containing an eigenvector of in each row, and is a diagonal matrix where the i-th diagonal element is the eigenvalue of corresponding to the eigenvector in the i-th row of . We can write

If the i-th diagonal element of is negative, we turn that entry to zero. After this projection onto the non-negative orthant, let the resulting matrix be , where any i-th diagonal element is bigger than or equal to zero. The noisy objective function then becomes

where is symmetric positive semi-definite. Thus, is bounded. Since all of these are performed after the differentially-private noise addition, we can invoke the post-processing invariability of differential privacy and guarantee that is (,)-differentially private. Consequently, the minimizer also satisfies (,) differential privacy. Note that it is possible for all the eigenvalues of the differentially private estimate of the matrix to be negative. We leave the solution to such cases for future work.

5. Extension of Gaussian FM to Decentralized-Data Setting:

In many signal processing and machine learning applications, the privacy-sensitive user data being collected/used are of decentralized nature. Training machine learning and neural-network-based models on such a huge amount of data is certainly lucrative from an algorithmic perspective, but privacy constraints often make it challenging to share such datasets with a central aggregator. However, training locally at one node/site is infeasible due to the number of samples in each node/site could be too small for meaningful model training. Decentralized DP can benefit such research work by allowing data owners to share information while maintaining local privacy. The conventional decentralized DP scheme, however, always results in a degradation in performance compared to that of the pooled-data scenario. In this section, we first describe the problem with conventional decentralized DP. Then we review the scheme [6] in brief, as we employ the scheme into our Gaussian FM to propose .

The Decentralized-data Setting. In line with our discussions in Section 2.2, let us consider a decentralized data setting with S sites and a central aggregator node. We assume an “honest but curious” threat model [6]: all parties follow the protocol honestly, but a subset are “curious” and can collude (maybe with an external adversary) to learn other sites’ data/function outputs. For simplicity, we consider the symmetric setting: each site holds a dataset of disjoint data samples , where the total number of samples across all sites is N, and . The cost incurred by the model parameters due to one data sample is . We need to minimize the average cost to find the optimal . The empirical average cost for a particular over all the samples is expressed as

According to (3), the above expression can be written as

where contains as its entries for all at site s, , and is the array containing all as its entries. Finally, we can compute the minimizer:

5.1. Problems with Conventional Decentralized DP Computations

In this section, we discuss the problems with conventional decentralized DP schemes [6]. Consider estimating the mean of N scalars , where each . The -sensitivity of the function is . Therefore, for computing the -DP estimate of the average , we can follow the Gaussian mechanism [4] to release , where and .

Suppose now that the N samples are equally distributed among S sites. An aggregator wishes to estimate and publish the mean of all the samples. For preserving privacy, the conventional DP approach is for each site s to release (or send to the aggregator node) an -DP estimate of the function as: , where and . The aggregator can then compute the -DP approximate average as

The variance of the estimator is . We observe the ratio

That is, the decentralized DP averaging scheme will always result in a poorer performance than the pooled-data case. Imtiaz et al. [6] proposed the protocol that improves the performance of such systems by assuming the availability of some reasonable resources.

5.2. Correlation Assisted Private Estimation ()

Trust/Collusion Model. In order to incorporate the scheme to our proposed Gaussian FM in a decentralized data setting, we assume a similar trust model as in [6]. As mentioned before, we assume all of the S sites and the central aggregator node to be honest-but-curious. That is, the sites and central node can collude with an adversary to learn about the data or function output of some other site. We assume that up to sites, as well as the central node can collude with an adversary. In addition to having access to the outputs from each site and the aggregator, the adversary can know everything about the colluding sites, including their private data. Denoting the non-colluding sites with , we have .

Correlated Noise and the Protocol. Imtiaz et al. [6] proposed a novel framework that ensures -DP guarantee of the output from each site, while achieving the same noise level of the pooled-data scenario in the final output from the aggregator. In the scheme, each site first generates two noise terms: locally, and jointly with all other sites such that . The correlated noise term is generated by employing the secure aggregation protocol () by Bonawitz et al. [28], which utilizes Shamir’s t-out-of-n secret sharing [44] and is communication-efficient. The procedure is outlined in Algorithm 2.

| Algorithm 2 Generate Zero-Sum Noise |

|

Note that neither of the terms and has large enough variance to provide an acceptable -DP guarantee. However, the variances of and are chosen in such a way that the noise is sufficient to ensure a stringent DP guarantee to at site s. We observe that the variance of is given by and the variance of is set to [6]. Considering the decentralized mean computation problem of Section 5.1, under the scheme, each site sends to the aggregator. We can then compute the following at the aggregator

where we used . The variance of the estimator is , which is exactly the same as if all the data were present at the aggregator. This claim is formalized in Lemma 1 [6] in Section 2.1. That is, the protocol achieves the same noise variance as the pooled-data scenario in the symmetric decentralized-data setting.

5.3. Proposed Gaussian FM for Decentralized Data

For employing the scheme to extend our proposed Gaussian FM for decentralized-data setting, we need to generate the zero-sum noise. We can readily extend Algorithm 2 to generate array-valued zero-sum noise terms for each of the terms of the decomposition (3). That is, according to the scheme, the sites generate the noise using Algorithm 2, such that holds for all . The sites also generate noise with entries i.i.d. . The sites then compute the perturbed coefficient arrays locally as for all and send to the central aggregator. Note that and are arrays of the same dimension as . Now, the aggregator simply computes the average of each coefficient term for all as

because . The aggregator then uses these to compute and release . The privacy of follows directly from Theorem 1 and Theorem 2. It follows from Lemma 1 [6] that in the symmetric setting (i.e., and for all sites and all ), the noise variance achieved at the aggregator is the same as that of the pooled-data scenario. Additionally, the performance gain of over any conventional decentralized functional mechanism is given by Proposition 4. We refer to our proposed decentralized functional mechanism as , shown in Algorithm 3.

| Algorithm 3 Proposed Decentralized Gaussian FM () |

|

5.4. Computation and Communication Overhead of

We analyze the computation and communication costs associated with the proposed algorithm according to [6,28] for the decentralized linear regression and logistic regression problems. At each iteration round, we need to generate the zero-sum noise terms , which entails communication complexity of the sites and communication complexity of the aggregator [28]. Each site computes the noisy coefficient arrays and sends those to the aggregator, incurring an communication cost for the sites. Therefore, the total communication cost is for the sites and for the aggregator node. On the other hand, the zero-sum noise generation entails computation cost at the sites and computation cost at the aggregator [28]. This is expected since the largest coefficient arrays we are computing/sending are matrices in the decentralized setting. Note that we are not incorporating the computation cost of .

6. Experimental Results

In this section, we empirically compare the performance of our proposed Gaussian FM algorithm (gauss-fm) with those of some state-of-the-art differentially private linear and logistic regression algorithms, namely noisy gradient descent (noisy-gd) [12], objective perturbation (obj-pert) [8], original functional mechanism (fm) [7], and relaxed functional mechanism (rlx-fm) [10]. We also compare the performance of these algorithms with non-private linear and logistic regression (non-priv). As mentioned before, we compute the overall using RDP for the multi-round noisy-gd algorithm. Additionally, we show how our proposed decentralized functional mechanism (cape-fm) can improve a decentralized computation if the target function has sensitivity satisfying the conditions of Proposition 5 in Section 2.1. We show the variation in performance with privacy parameters and number of training samples. For the decentralized setting, we further show the empirical performance comparison by varying the number of sites.

Performance Indices. For the linear regression task, we use the mean squared error (MSE) as the performance index. Let the test dataset be . Then the MSE can be defined as: , where is the prediction from the algorithm. For the classification task, we use accuracy as the performance index. The accuracy can be defined as: , where is the indicator function, and is the prediction from the algorithm. Note that, in addition to a small MSE or large accuracy, we want to attain a strict privacy guarantee, i.e., small overall values. Recall from Section 3 that the overall for multi-shot algorithms is a function of the number of iterations, the target , the additive noise variance and the sensitivity . To demonstrate the overall guarantee for a fixed target , we plotted the overall (with dotted red lines on the right y-axis) along with MSE/accuracy (with solid blue lines on the left y-axis) as a means for visualizing how the privacy–utility trade-off varies with different parameters. For a given privacy budget (or performance requirement), the user can use the overall plot on the right y-axis, shown with dotted lines, (or MSE/accuracy plot on the left y-axis, shown with solid lines) to find the required noise standard deviation on the x-axis and, thereby, find the corresponding performance (or overall ). We compute the overall for the noisy-gd algorithm using the RDP technique shown in Section 3.

6.1. Linear Regression

For the linear regression problem, we perform experiments on three real datasets (and a synthetic dataset, as shown in Appendix B). The pharmacogenetic dataset was collected by the International Warfarin Pharmacogenetics Consortium (IWPC) [23] for the purpose of estimating personalized warfarin dose based on clinical and genotype information of a patient. The data used for this study have ambient dimension , and features are collected from patients. Out of the wide variety of numerical modeling methods used in [23], linear regression provided the most accurate dose estimates. Fredrikson et al. [20] later implemented an attack model assuming an adversary who employed an inference algorithm to discover the genotype of a target individual, and showed that an existing functional mechanism (fm) failed to provide a meaningful privacy guarantee to prevent such attacks. We perform privacy-preserving linear regression on the IWPC dataset (Figure 1a–c) to show the effectiveness of our proposed gauss-fm over fm, rlx-fm, and other existing approaches. Additionally, we use the Communities and Crime dataset (crime) [45], which has a larger dimensionality (Figure 1d–f), and the Buzz in Social Media dataset (twitter) [46] with and a large sample size (Figure 1g–i). We refer the reader to [47] for a detailed description of these real datasets. For all the experiments, we pre-process the data so that the samples satisfy the assumptions and ∀. We divide each dataset into train and test partitions with a ratio of 90:10. We show the average performance over 10 independent runs.

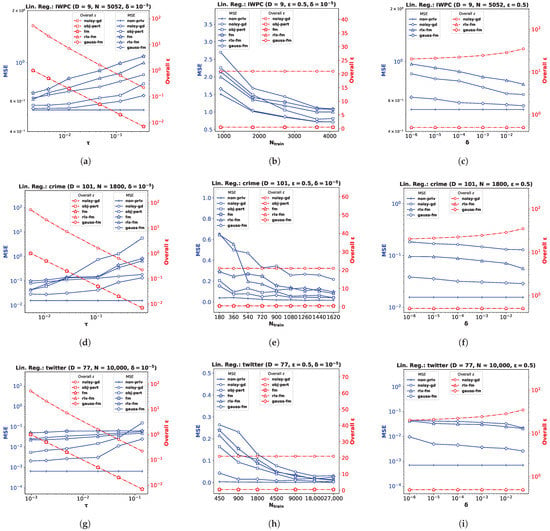

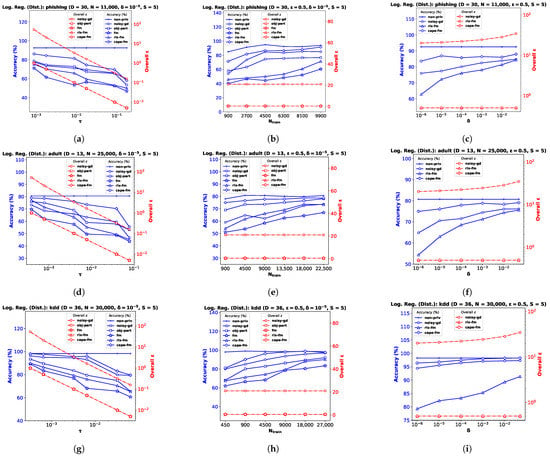

Figure 1.

Linear regression performance comparison in terms of MSE and overall for IWPC , crime , and twitter datasets with varying noise standard deviation in (a,d,g) the number of training samples in (b,e,h), and privacy parameter in (c,f,i).

Performance Comparison with Varying . We first investigate the variation of MSE with the DP additive noise standard deviation . We plot MSE against in Figure 1a,d,g. Recall from Definition 3 that, in the Gaussian mechanism, the noise is drawn from a Gaussian distribution with standard deviation . We keep fixed at . Note that one can vary to vary . Since noise standard deviation is inversely proportional to , increasing means decreasing , i.e., smaller noise variance. We observe from the plots that smaller leads to smaller MSE for all DP algorithms, indicating better utility at the expense of higher privacy loss. It is evident from these MSE vs. plots that our proposed method gauss-fm has much smaller MSE compared to all the other methods for the same values for all datasets. The obj-pert and fm algorithms offer pure DP by trading off utility, whereas gauss-fm and rlx-fm algorithms offer approximate DP. Although rlx-fm improves upon fm, the excess noise due to linear dependence on data dimension D leads to higher MSE than gauss-fm. Our proposed gauss-fm outperforms all of these methods by reducing the additive noise with the novel sensitivity analysis as shown in Section 4. We recall that the overall privacy loss for noisy-gd is calculated using the RDP approach, since noise is injected into the gradients in every iteration during optimization, with target . On the other hand, gauss-fm, rlx-fm, and fm add noise to the polynomial coefficients of the cost function before optimization, and obj-pert injects noise into the regularized cost function [8]. We plot the total privacy loss for all of the algorithms against . We observe from the y-axis on the right that the total privacy loss of the multi-round noisy-gd is considerably higher than the single-shot algorithms.

Performance Comparison with Varying . Next, we investigate the variation of MSE with the number of training samples . For this task, we shuffle and divide the total number of samples N into smaller partitions and perform the same pre-processing steps, while keeping the test partition untouched. We kept the values of the privacy parameters fixed: and . We plot MSE against in Figure 1b,e,h. We observe that performance generally improves with the increase in , which indicates that it is easier to ensure the same level of privacy when the training dataset cardinality is higher. We also observe from the MSE vs. plots that our proposed method gauss-fm offers MSE very close to that of non-priv even for moderate sample sizes, outperforming fm, rlx-fm, noisy-gd, and obj-pert. Again, we compute the overall spent using RDP for noisy-gd, and show that the multi-round algorithm suffers from larger privacy loss. Recall from (7) in Section 3 that the overall depends on sensitivity , and the number of iterations T. In the computation of , the number of training samples is cancelled out. Thus, the overall depends only on T for noisy-gd. We keep T fixed at 1000 iterations for noisy-gd and observe that the overall privacy risk exceeds 20. Note that we set the value of the target in (7) to be equal to in our computations.

Performance Comparison with Varying . Recall that we can interpret the privacy parameter as the probability that an algorithm fails to provide privacy risk . The obj-pert and fm algorithms offer pure -DP, where the additional privacy parameter is zero. Hence, we compare our proposed gauss-fm method with the rlx-fm and noisy-gd methods, which also guarantee (,)-DP. In the Gaussian mechanism, is in the denominator of the logarithmic term within the square root in the expression of . Therefore, the noise variance is not significantly changed by varying . We keep privacy parameter fixed at and observe from the MSE vs. plots in Figure 1c,f,i show that the performance of our algorithm does not degrade much for smaller . For the IWPC dataset in Figure 1c, for a value of as small as (indicating probability of the algorithm failing to provide -differential privacy), the MSE of gauss-fm is almost the same as that of the non-priv case. For the other datasets, our proposed method also gives better performance and overall , and thus a better privacy–utility trade-off than rlx-fm and noisy-gd.

6.2. Logistic Regression

For the logistic regression problem, we again perform experiments on three real datasets (and a synthetic dataset, as shown in Appendix B): the Phishing Websites dataset (phishing) [47] with dimensionality (Figure 2a–c), the Census Income dataset (adult) [47] with (Figure 2d–f), and the KDD Cup ’99 dataset (kdd) [47] with (Figure 2g–i). As before, we pre-process the data so that the feature vectors satisfy , and ∀. Note for obj-pert that the cost function is regularized and the labels are assumed to be in [8]. We divide each dataset into train and test partitions with a ratio of 90:10. We use percent accuracy on the test dataset as the performance index for logistic regression, and show the average performance over 10 independent runs.

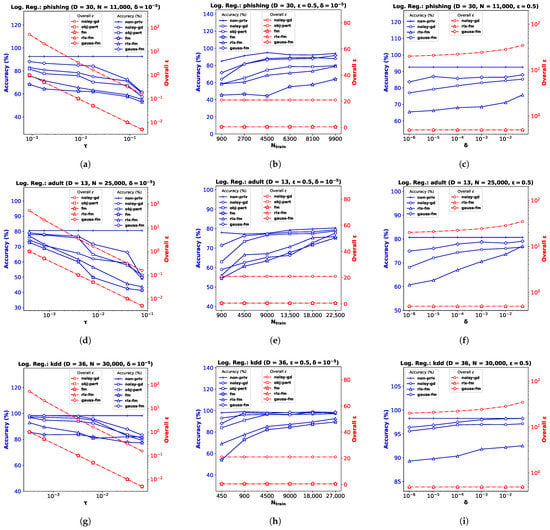

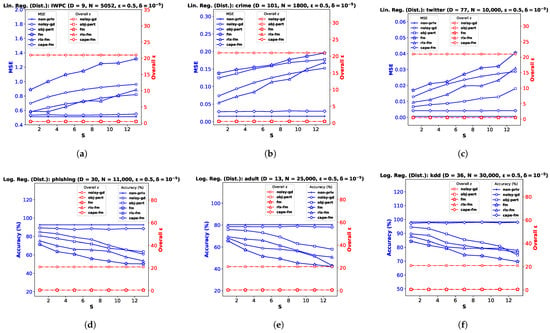

Figure 2.

Logistic regression performance comparison in terms of accuracy and overall for phishing , adult , and kdd datasets with varying noise standard deviation in (a,d,g), the number of training samples in (b,e,h), and privacy parameter in (c,f,i).

Performance Comparison with Varying . We plot accuracy against the DP additive noise standard deviation in Figure 2a,d,g. We observe that accuracy degrades when the additive DP noise standard deviation increases, indicating a greater privacy guarantee at the cost of performance. When noise is too high, privacy-preserving logistic regression may not learn a meaningful at all, and provide random results. Depending on the class distribution, this may not be obvious and the accuracy score may be misleading. We observe this for the kdd dataset in Figure 2g, where the classes are highly imbalanced, with ∼80% positive labels. Although the existing fm performs poorly on this dataset, our proposed gauss-fm provides significantly higher accuracy for all datasets, outperforming fm, as well as rlx-fm, obj-pert, and noisy-gd. As before, we observe the total privacy loss, i.e., overall spent, from the y-axis on the right.

Performance Comparison with Varying . We perform the same steps described in Section 6.1 and observe the variation in performance with the number of training samples, while keeping the privacy parameters fixed in Figure 2b,e,h. Accuracy generally improves with increasing . We observe that the same DP algorithm does not perform equally well for different datasets. For example, obj-pert performs better than noisy-gd on the adult dataset (Figure 2e), whereas noisy-gd performs better than obj-pert on the phishing dataset (Figure 2b). In general, fm and rlx-fm suffer from too much noise due to the quadratic and linear dependence on D of their sensitivities, respectively. However, our proposed gauss-fm overcomes this issue and consistently achieves accuracy close to the non-priv case even for moderate sample sizes. We also show the overall privacy guarantee, as before.

Performance Comparison with Varying . Similar to the linear regression experiments shown in Section 6.1, we keep and fixed for this task and vary the other privacy parameter . Figure 2c,f,i show that percent accuracy improves with increased . For sufficiently large (indicating 1–5% probability of the algorithm failing to provide privacy risk), gauss-fm accuracy can reach that of the non-priv algorithm in some datasets (e.g., Figure 2i). Although the accuracy of noisy-gd also improves, it comes at the cost of additional privacy risk, as shown in the overall vs. plots along the y-axes on the right. Due to the higher noise variance, rlx-fm achieves much inferior accuracy compared to both gauss-fm and noisy-gd.

6.3. Decentralized Functional Mechanism ()

In this section, we empirically show the effectiveness of , our proposed decentralized Gaussian FM which utilizes the [6] protocol. We implement differentially private linear and logistic regression for the decentralized-data setting using the same datasets described in Section 6.1 and Section 6.2, respectively. Note that the IWPC [23] data were collected from 21 sites across 9 countries. After obtaining informed consent to use de-identified data from patients prior to the study, the Pharmacogenetics Knowledge Base has since made the dataset publicly available for research purpose. As mentioned before, the type of data contained in the IWPC dataset is similar to many other medical datasets containing private information [20].

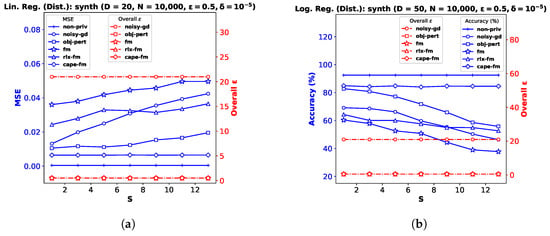

We implement our proposed cape-fm according to Algorithm 3, along with fm, rlx-fm, obj-pert, and noisy-gd according to the conventional decentralized DP approach. We compare the performance of these methods in Figure 3 and Figure 4. Similar to the pooled-data scenario, we also compare performance of these algorithms with non-private linear and logistic regression (non-priv). For these experiments, we assume and . Recall that the scheme achieves the same noise variance as the pooled-data scenario in the symmetric setting (see Lemma 1 [6] in Section 2.1). As our proposed algorithm follows the scheme, we attain the same advantages. When varying privacy parameters and , we keep the number of sites S fixed. Additionally, we show the variation in performance due to change in the number of sites in Figure 5. We pre-process each dataset as before, and use MSE and percent accuracy on test dataset as performance indices of the decentralized linear and logistic regression problems, respectively.

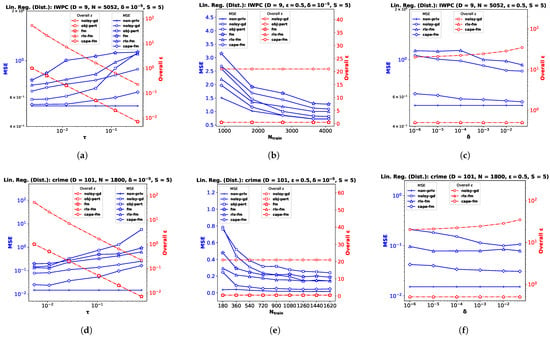

Figure 3.

Decentralized linear regression performance comparison in terms of MSE and overall for IWPC , crime , and twitter datasets with varying noise standard deviation in (a,d,g), the number of training samples in (b,e,h), and privacy parameter in (c,f,i).

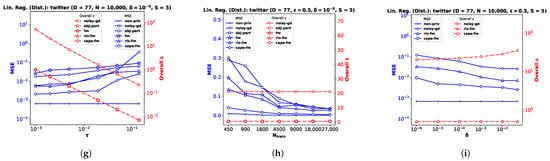

Figure 4.

Decentralized logistic regression performance comparison in terms of accuracy and overall for phishing , adult , and kdd datasets with varying noise standard deviation in (a,d,g), the number of training samples in (b,e,h), and privacy parameter in (c,f,i).

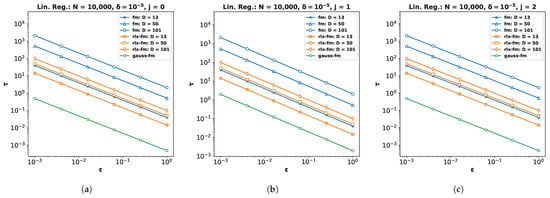

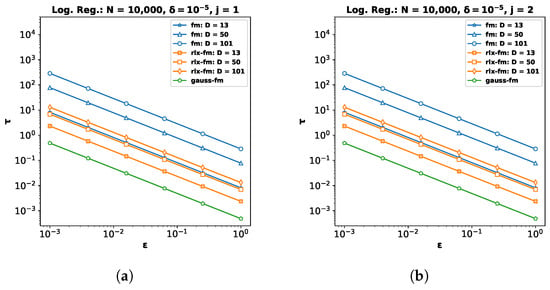

Figure 5.

Decentralized linear and logistic regression performance comparison and overall with varying number of sites S for the datasets (a) IWPC , (b) crime , (c) twitter , (d) phishing , (e) adult , and (f) kdd .

Performance Comparison by Varying . For this experiment, we keep the total number of samples N, privacy parameter , and the number of sites S fixed. We observe from the plots (a), (d), and (g) in both Figure 3 and Figure 4 that as increases, the performance degrades. The proposed cape-fm outperforms conventional decentralized noisy-gd, obj-pert, fm, and rlx-fm by a larger margin than the pooled-data case. The reason for this is that we can achieve a much smaller noise variance at the aggregator due to the correlated noise scheme detailed in Section 5.3. The utility of cape-fm thus stays the same as the centralized case in the decentralized-data setting, whereas the conventional scheme’s utility always degrades by a factor of S (see Section 5.1). The overall usage vs. plots on the right y-axes for each site show that noisy-gd suffers from much higher privacy loss.

Performance Comparison by Varying . We keep , , and S fixed while investigating variation in performance with respect to . As the sensitivities we computed in Section 4.1 and Section 4.2 are inversely proportional to the sample size, it is straightforward to infer that guaranteeing smaller privacy risk and higher utility is much easier when the sample size is large. Similar to the pooled-data cases in Section 6.1 and Section 6.2, we again observe from the plots (b), (e), and (h) in both Figure 3 and Figure 4 that, for sufficiently large , utility of cape-fm can reach that of the non-priv case. Note that the non-priv algorithms are the same as the pooled-data scenario, because if privacy is not a concern, all sites can send the data to aggregator for learning.

Performance Comparison by Varying . For this task, we keep , , and S fixed. Note according to the scheme that the proposed cape-fm algorithm guarantees -DP where satisfy the relation . Recall that is the probability that the algorithm fails to provide privacy risk , and that we assumed a fixed number of colluding sites . From the plots (c), (f), and (i) in both Figure 3 and Figure 4, we observe that even for moderate values of , cape-fm easily outperforms rlx-fm and noisy-gd. Moreover, as seen from the overall plots, noisy-gd provides a much weaker privacy guarantee. Thus, our proposed cape-fm algorithm offers superior performance and privacy–utility trade-off in the decentralized setting.

Performance Comparison by Varying S. Finally, we investigate performance variation with the number of sites S, keeping the privacy and dataset parameters fixed. This automatically varies the number of samples at each site , as we consider the symmetric setting. Figure 5a–c shows the results for decentralized linear regression, and Figure 5d–f shows the results for decentralized logistic regression. We observe that the variation in S does not affect the utility of cape-fm, as long as the number of colluding sites meets the condition . However, increasing S leads to significant degradation in performance for conventional decentralized DP mechanisms, since the additive noise variance increases as decreases. We show additional experimental results on synthetic datasets in Appendix B.

7. Conclusions and Future Work

In this paper, we proposed Gaussian FM that offers a significant improvement over the existing FM to compute functions that are commonly used in signal processing and machine learning applications, satisfying differential privacy. Our improvement stems from a novel sensitivity analysis that resulted in an orders-of-magnitude reduction in the amount of noise added to the coefficients of the Stone–Weierstrass decomposition of the functions. We showed two common regression problems—linear and logistic regression—as examples to demonstrate our analyses. Additionally, we experimentally showed the superior privacy guarantee and utility of our proposed method over existing methods by varying privacy parameters and relevant dataset parameters for both synthetic and real datasets. We extended our Gaussian FM algorithm to decentralized data settings by taking advantage of a correlated noise protocol, , and proposed , which ensures the same utility as the pooled-data scenario in certain regimes. We empirically compared the performance of the proposed with that of existing and conventional algorithms for decentralized linear and logistic regression problems. In addition to varying privacy and dataset parameters, we showed performance comparison by varying the number of sites, which further proves the superior privacy guarantee and improved utility of our proposed method. For future work, we plan to extend our research to more complex algorithms and neural networks to ensure differential privacy on other challenging signal processing and machine learning problems.

Author Contributions

Conceptualization, methodology, formal analysis, N.T., J.M., A.D.S. and H.I.; software, data curation, N.T. and H.I.; supervision, H.I.; writing—original draft preparation, N.T.; writing—review and editing, H.I., J.M. and A.D.S.; funding acquisition, A.D.S. All authors have read and agreed to the published version of the manuscript.

Funding

The work of A.D. Sarwate was funded in part by the US National Science Foundation under awards CNS-2148104 and CIF-1453432 and by the US National Institutes of Health under award 2R01DA040487-01A1.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The experimental data used to evaluate the performance of the algorithms proposed in this paper are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Comparison of Sensitivity and Noise Standard Deviation

To provide further details and rationale behind the superior performance of our proposed Gaussian FM (gauss-fm) over the original FM [7] (fm) and the relaxed FM [10] (rlx-fm) algorithms, we compare the additive noise standard deviation for each mechanism by varying the privacy parameter for different values of data dimension D. Recall that is scaled to the sensitivity of the data-dependent terms in the Stone–Weierstrass [35] decomposition of the objective function. The computed sensitivities for each of the three mechanisms are shown in Table A1.

Table A1.

Comparison of sensitivities for various DP mechanisms.

Table A1.

Comparison of sensitivities for various DP mechanisms.

| Linear Regression | ||||

| Logistic Regression | ||||

As mentioned before, the sensitivity terms for our proposed gauss-fm are tailored to the order j, and do not depend on the ambient dimension D. On the other hand, the sensitivity terms for both fm and rlx-fm depend on D. This results in injecting prohibitively large amounts of noise into the function computation. The proofs of the -sensitivity terms for fm are provided in [7], and the proof of the -sensitivity for rlx-fm for the logistic regression is shown in [10]. We can follow the similar procedure outlined by Ding et al. [10] to obtain the -sensitivity for the linear regression problem as . The proof is as follows:

Proof.

Let the n-th sample of a dataset be denoted by a tuple , where is the feature vector and is the response for . Let us assume that two neighboring datasets and differ in the last tuple and . For linear regression we have

where ; ; and . We denote and as the set of polynomial coefficients of and . We also denote

where represents the c-th element in the feature vector . Now, the -sensitivity of linear regression for the relaxed FM algorithm can be expressed as

where t is an arbitrary tuple. □

We now empirically compare the additive noise standard deviation for gauss-fm, fm, and rlx-fm. In Figure A1, we show of the additive noise for the coefficient terms for different j and different data dimensionality D. We set the number of samples N = 10,000 and privacy parameter . From the figure, we observe that the noise standard deviation for gauss-fm is significantly lower than the noise standard deviation for both fm and rlx-fm algorithms. We achieve this by our novel sensitivity analysis, which is tailored to different coefficient terms (i.e., the order j) as shown in Section 4 and Algorithm 1.

Figure A1.

Standard deviation of the additive noise for (a) , (b) , and (c) for different values of dimensionality D for differentially private linear regression using fm, rlx-fm, and gauss-fm.

Figure A2.

Standard deviation of the additive noise for (a) and (b) for different values of dimensionality D for differentially private logistic regression using fm, rlx-fm, and gauss-fm.

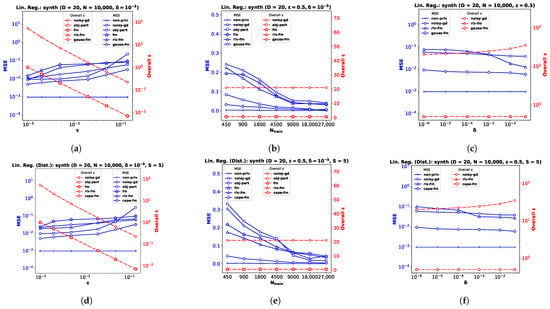

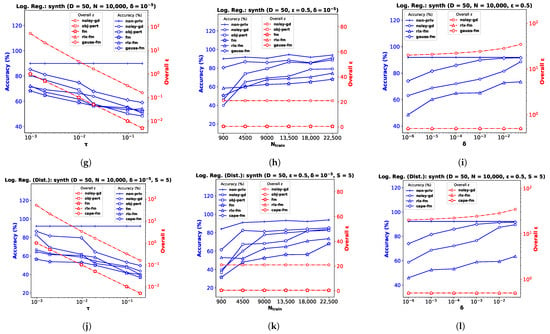

Appendix B. Additional Experimental Results on Synthetic Data

In addition to the real datasets, we perform experiments on synthetic datasets while keeping the setup identical to the one described in Section 6. We generate random samples and outputs with dimensionality for the linear regression problems in pooled-data (Figure A3a–c) and distributed-data settings ((Figure A3d–f). For logistic regression in pooled-data (Figure A3g–i) and distributed-data settings (Figure A3j–l), we generate another synthetic dataset with dimensionality where outputs are class labels.

Similar to the results observed in Section 6, performance generally improves with lower noise variance and a weaker privacy guarantee. Our proposed gauss-fm and cape-fm algorithms consistently outperform existing fm, rlx-fm, noisy-gd, and obj-pert methods. We also show variation in performance with number of sites S in Figure A4. The empirical results verify that the utility of cape-fm does not degrade with increased S, and thus provides a better privacy guarantee over conventional decentralized DP schemes.

Figure A3.

Performance comparison and overall for synthetic datasets with varying noise standard deviation in (a,d,g,j), number of training samples in (b,e,h,k), and privacy parameter in (c,f,i,l).

Figure A4.

Decentralized linear and logistic regression performance comparison and overall with varying number of sites S for the datasets (a) synth (D = 20) and (b) synth (D = 50).

References

- Dwork, C. Differential Privacy. In Automata, Languages and Programming. ICALP 2006; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2006; Volume 4052, pp. 1–12. [Google Scholar]

- Sarwate, A.D.; Chaudhuri, K. Signal processing and machine learning with differential privacy: Algorithms and challenges for continuous data. IEEE Signal Process. Mag. 2013, 30, 86–94. [Google Scholar] [CrossRef] [PubMed]

- Jayaraman, B.; Evans, D. Evaluating differentially private machine learning in practice. In Proceedings of the 28th USENIX Security Symposium (USENIX Security 19), Santa Clara, CA, USA, 14–16 August 2019; pp. 1895–1912. [Google Scholar]

- Dwork, C.; McSherry, F.; Nissim, K.; Smith, A. Calibrating noise to sensitivity in private data analysis. In Theory of Cryptography Conference; Springer: Berlin/Heidelberg, Germany, 2006; pp. 265–284. [Google Scholar]

- Desfontaines, D.; Pejó, B. Sok: Differential privacies. Proc. Priv. Enhancing Technol. 2020, 2020, 288–313. [Google Scholar] [CrossRef]

- Imtiaz, H.; Mohammadi, J.; Silva, R.; Baker, B.; Plis, S.M.; Sarwate, A.D.; Calhoun, V.D. A Correlated Noise-Assisted Decentralized Differentially Private Estimation Protocol, and its Application to fMRI Source Separation. IEEE Trans. Signal Process. 2021, 69, 6355–6370. [Google Scholar] [CrossRef] [PubMed]

- Zhang, J.; Zhang, Z.; Xiao, X.; Yang, Y.; Winslett, M. Functional mechanism: Regression analysis under differential privacy. arXiv 2012, arXiv:1208.0219. [Google Scholar] [CrossRef]

- Chaudhuri, K.; Monteleoni, C.; Sarwate, A.D. Differentially private empirical risk minimization. J. Mach. Learn. Res. 2011, 12, 1069–1109. [Google Scholar]

- Bassily, R.; Smith, A.; Thakurta, A. Private empirical risk minimization: Efficient algorithms and tight error bounds. In Proceedings of the 2014 IEEE 55th Annual Symposium on Foundations of Computer Science, Philadelphia, PA, USA, 18–21 October 2014; pp. 464–473. [Google Scholar]

- Ding, J.; Zhang, X.; Li, X.; Wang, J.; Yu, R.; Pan, M. Differentially private and fair classification via calibrated functional mechanism. Proc. AAAI Conf. Artif. Intell. 2020, 34, 622–629. [Google Scholar] [CrossRef]

- Phan, N.; Vu, M.; Liu, Y.; Jin, R.; Dou, D.; Wu, X.; Thai, M.T. Heterogeneous Gaussian mechanism: Preserving differential privacy in deep learning with provable robustness. arXiv 2019, arXiv:1906.01444. [Google Scholar]

- Song, S.; Chaudhuri, K.; Sarwate, A.D. Stochastic gradient descent with differentially private updates. In Proceedings of the 2013 IEEE Global Conference on Signal and Information Processing, Austin, TX, USA, 3–5 December 2013; pp. 245–248. [Google Scholar]

- Nozari, E.; Tallapragada, P.; Cortés, J. Differentially private distributed convex optimization via objective perturbation. In Proceedings of the 2016 American Control Conference (ACC), Boston, MA, USA, 6–8 July 2016; pp. 2061–2066. [Google Scholar]

- Wu, X.; Li, F.; Kumar, A.; Chaudhuri, K.; Jha, S.; Naughton, J. Bolt-on differential privacy for scalable stochastic gradient descent-based analytics. In Proceedings of the 2017 ACM International Conference on Management of Data, Chicago, IL, USA, 14–19 May 2017; pp. 1307–1322. [Google Scholar]

- Smith, A. Privacy-preserving statistical estimation with optimal convergence rates. In Proceedings of the Forty-Third Annual ACM Symposium on Theory of Computing, San Jose, CA, USA, 6–8 June 2011; pp. 813–822. [Google Scholar]

- McSherry, F.; Talwar, K. Mechanism design via differential privacy. In Proceedings of the 48th Annual IEEE Symposium on Foundations of Computer Science (FOCS’07), Providence, RI, USA, 21–23 October 2007; pp. 94–103. [Google Scholar]

- Jorgensen, Z.; Yu, T.; Cormode, G. Conservative or liberal? Personalized differential privacy. In Proceedings of the 2015 IEEE 31st International Conference on Data Engineering, Seoul, Republic of Korea, 13–17 April 2015; pp. 1023–1034. [Google Scholar]

- Aono, Y.; Hayashi, T.; Trieu Phong, L.; Wang, L. Scalable and secure logistic regression via homomorphic encryption. In Proceedings of the Sixth ACM Conference on Data and Application Security and Privacy, New Orleans, LA, USA, 9–11 March 2016; pp. 142–144. [Google Scholar]

- Xu, D.; Yuan, S.; Wu, X. Achieving differential privacy and fairness in logistic regression. In Proceedings of the Companion Proceedings of the 2019 World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 594–599. [Google Scholar]

- Fredrikson, M.; Lantz, E.; Jha, S.; Lin, S.; Page, D.; Ristenpart, T. Privacy in pharmacogenetics: An End-to-End case study of personalized Warfarin dosing. In Proceedings of the 23rd USENIX Security Symposium (USENIX Security 14), San Diego, CA, USA, 20–22 August 2014; pp. 17–32. [Google Scholar]

- Anderson, J.L.; Horne, B.D.; Stevens, S.M.; Grove, A.S.; Barton, S.; Nicholas, Z.P.; Kahn, S.F.; May, H.T.; Samuelson, K.M.; Muhlestein, J.B.; et al. Randomized trial of genotype-guided versus standard Warfarin dosing in patients initiating oral anticoagulation. Circulation 2007, 116, 2563–2570. [Google Scholar] [CrossRef]

- Fusaro, V.A.; Patil, P.; Chi, C.L.; Contant, C.F.; Tonellato, P.J. A systems approach to designing effective clinical trials using simulations. Circulation 2013, 127, 517–526. [Google Scholar] [CrossRef]

- Consortium, I.W.P. Estimation of the Warfarin dose with clinical and pharmacogenetic data. N. Engl. J. Med. 2009, 360, 753–764. [Google Scholar]

- Sconce, E.A.; Khan, T.I.; Wynne, H.A.; Avery, P.; Monkhouse, L.; King, B.P.; Wood, P.; Kesteven, P.; Daly, A.K.; Kamali, F. The impact of CYP2C9 and VKORC1 genetic polymorphism and patient characteristics upon Warfarin dose requirements: Proposal for a new dosing regimen. Blood 2005, 106, 2329–2333. [Google Scholar] [CrossRef] [PubMed]

- Gade, S.; Vaidya, N.H. Private learning on networks. arXiv 2016, arXiv:1612.05236. [Google Scholar]

- Heikkilä, M.; Lagerspetz, E.; Kaski, S.; Shimizu, K.; Tarkoma, S.; Honkela, A. Differentially private Bayesian learning on distributed data. Adv. Neural Inf. Process. Syst. 2017, 30, 3229–3238. [Google Scholar]

- Tajeddine, R.; Jälkö, J.; Kaski, S.; Honkela, A. Privacy-preserving data sharing on vertically partitioned data. arXiv 2020, arXiv:2010.09293. [Google Scholar]

- Bonawitz, K.; Ivanov, V.; Kreuter, B.; Marcedone, A.; McMahan, H.B.; Patel, S.; Ramage, D.; Segal, A.; Seth, K. Practical secure aggregation for privacy-preserving machine learning. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 1175–1191. [Google Scholar]

- Heikkilä, M.A.; Koskela, A.; Shimizu, K.; Kaski, S.; Honkela, A. Differentially private cross-silo federated learning. arXiv 2020, arXiv:2007.05553. [Google Scholar]

- Xu, D.; Yuan, S.; Wu, X. Achieving differential privacy in vertically partitioned multiparty learning. In Proceedings of the 2021 IEEE International Conference on Big Data (Big Data), Orlando, FL, USA, 15–18 December 2021; pp. 5474–5483. [Google Scholar]

- Dwork, C.; Kenthapadi, K.; McSherry, F.; Mironov, I.; Naor, M. Our data, ourselves: Privacy via distributed noise generation. In Annual International Conference on the Theory and Applications of Cryptographic Techniques; Springer: Berlin/Heidelberg, Germany, 2006; pp. 486–503. [Google Scholar]

- Anandan, B.; Clifton, C. Laplace noise generation for two-party computational differential privacy. In Proceedings of the 2015 13th Annual Conference on Privacy, Security and Trust (PST), Izmir, Turkey, 21–23 July 2015; pp. 54–61. [Google Scholar]