A Selective Review on Information Criteria in Multiple Change Point Detection

Abstract

1. Introduction

2. Problem Formulation

2.1. Single CP Model Formulation

2.2. MCP Model Formulation

3. Information Criteria for MCP

3.1. AIC and Its Variant

3.2. BIC and Its Variants

3.3. Minimum Description Length

- The penalty of a real-valued parameter estimated by $n$ data points is $\frac{1}{2}\log_2 n$;

- The penalty of an unbounded integer parameter $K$ is $\log_2 K$;

- The penalty of an integer bounded by a known integer $N$ is $\log_2 N$.
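The three penalty rules above can be sketched in code. This is a minimal illustration, assuming the standard MDL code lengths in bits: $\frac{1}{2}\log_2 n$ for a real-valued parameter estimated from $n$ points, $\log_2 K$ for an unbounded integer $K$, and $\log_2 N$ for an integer bounded by $N$. The function names, the `params_per_segment` argument, and the choice to encode the number of segments ($m+1$) rather than the number of change points are hypothetical choices made for illustration, not the formulation of any particular paper.

```python
import math
from typing import Sequence


def real_param_penalty(n: int) -> float:
    """Bits for one real-valued parameter estimated from n data points."""
    return 0.5 * math.log2(n)


def unbounded_int_penalty(k: int) -> float:
    """Bits for an unbounded positive integer k (assumed rule: log2 k)."""
    return math.log2(k)


def bounded_int_penalty(bound: int) -> float:
    """Bits for an integer known to be at most `bound`."""
    return math.log2(bound)


def mdl_penalty(n: int, change_points: Sequence[int],
                params_per_segment: int = 1) -> float:
    """Illustrative MDL penalty for a piecewise model on n observations.

    change_points are sorted interior indices 0 < tau_1 < ... < tau_m < n;
    segment j covers observations tau_{j-1}, ..., tau_j - 1.
    """
    m = len(change_points)
    bounds = [0, *change_points, n]
    seg_lengths = [b - a for a, b in zip(bounds, bounds[1:])]
    if any(length <= 0 for length in seg_lengths):
        raise ValueError("change points must be sorted, distinct, and inside (0, n)")

    # Assumption: encode the number of segments (m + 1) so that m = 0 costs 0 bits.
    penalty = unbounded_int_penalty(m + 1)
    # Each change point location is an integer bounded by n.
    penalty += m * bounded_int_penalty(n)
    # Each segment carries real-valued parameters estimated from its own points.
    penalty += sum(params_per_segment * real_param_penalty(length)
                   for length in seg_lengths)
    return penalty


if __name__ == "__main__":
    # One change point in the middle of 100 observations, one mean per segment.
    print(round(mdl_penalty(100, [50]), 3))
```

In a full MDL criterion this penalty would be added to the negative log-likelihood of the fitted segments (expressed in the same units), and the segmentation with the smallest total would be selected.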

4. Review on Applications

4.1. Application of Hypothesis-Testing Based Methods

4.2. Application of Information Criteria Based Methods

4.3. Application of Hybrid Methods

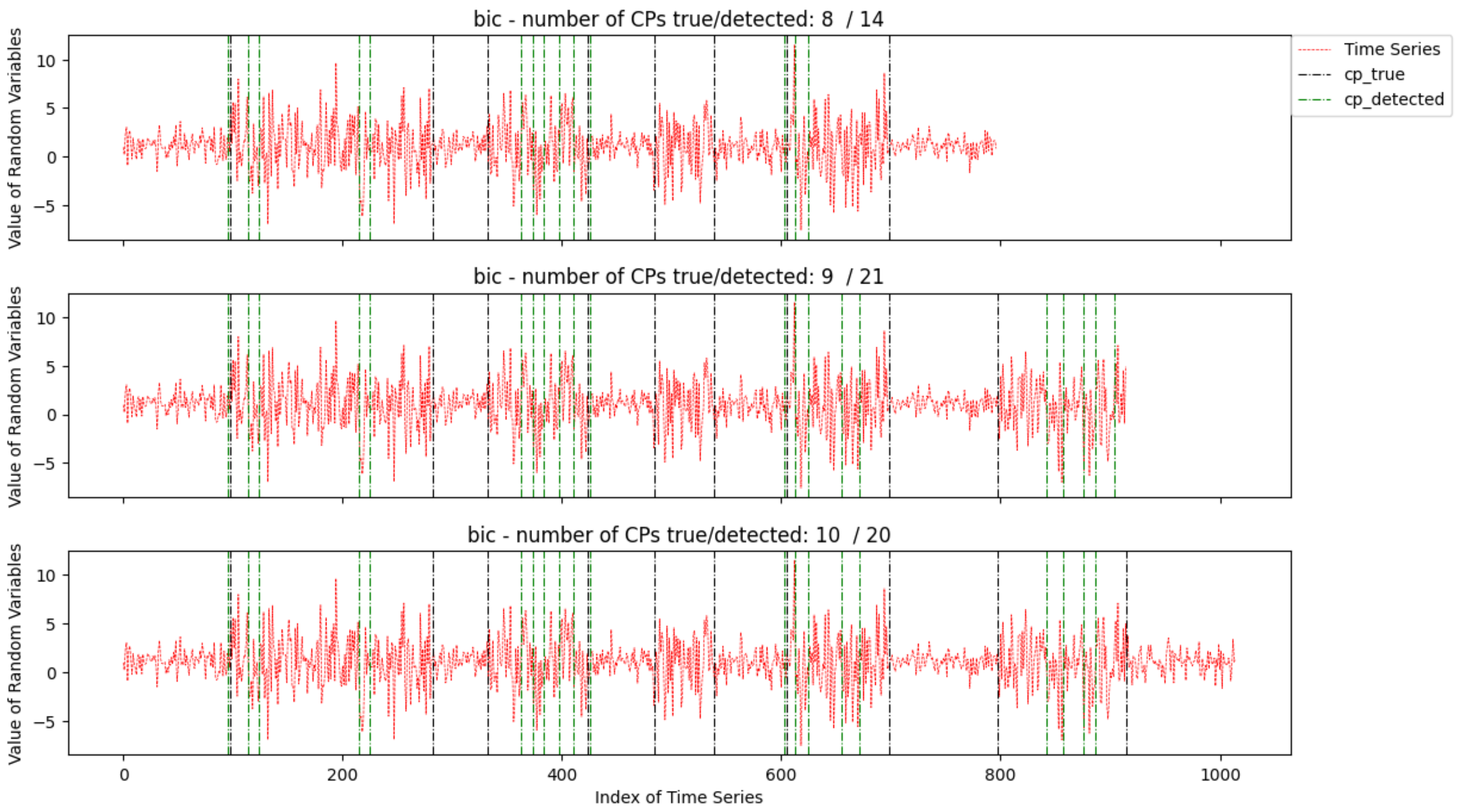

5. Simulation Study

5.1. Simulation on Different Magnitude of Mean Shifts

5.2. Simulation on Different Number of Change Points

6. Case Study

7. Discussion, Summary and Future Perspective

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Simulation and Discussion for Variance Change Case

Appendix B. Simulation and Discussion for Mean and Variance Change Case

References

- Gao, Z.; Shang, Z.; Du, P.; Robertson, J.L. Variance change point detection under a smoothly-changing mean trend with application to liver procurement. J. Am. Stat. Assoc. 2018, 114, 773–781.

- Liang, W.; Xu, L. Gradual variance change point detection with a smoothly changing mean trend. Stat 2021, 10, e327.

- Page, E.S. Continuous inspection schemes. Biometrika 1954, 41, 100–115.

- Page, E.S. A test for a change in a parameter occurring at an unknown point. Biometrika 1955, 42, 523–527.

- Page, E.S. On problems in which a change in a parameter occurs at an unknown point. Biometrika 1957, 44, 248–252.

- Hinkley, D.V. Inference about the change-point in a sequence of random variables. Biometrika 1970, 57, 1–17.

- Hinkley, D.V. Inference about the intersection in two-phase regression. Biometrika 1969, 56, 495–504.

- Hudson, D.J. Fitting segmented curves whose join points have to be estimated. J. Am. Stat. Assoc. 1966, 61, 1097–1129.

- Chen, J.; Gupta, A.K. Parametric Statistical Change Point Analysis: With Applications to Genetics, Medicine, and Finance; Springer: Berlin/Heidelberg, Germany, 2012.

- Zhang, J.; Yang, Y.; Ding, J. Information criteria for model selection. WIREs Comput. Stat. 2023, 15, e1607.

- Brodsky, E.; Darkhovsky, B. Nonparametric Methods in Change Point Problems; Springer Science & Business Media: Berlin, Germany, 2013; Volume 243.

- Padilla, O.H.M.; Yu, Y.; Wang, D.; Rinaldo, A. Optimal nonparametric multivariate change point detection and localization. IEEE Trans. Inf. Theory 2021, 68, 1922–1944.

- Arlot, S.; Celisse, A.; Harchaoui, Z. A kernel multiple change-point algorithm via model selection. J. Mach. Learn. Res. 2019, 20, 1–56.

- Haynes, K.; Fearnhead, P.; Eckley, I.A. A computationally efficient nonparametric approach for changepoint detection. Stat. Comput. 2017, 27, 1293–1305.

- Zou, C.; Yin, G.; Feng, L.; Wang, Z. Nonparametric maximum likelihood approach to multiple change-point problems. Ann. Stat. 2014, 42, 970–1002.

- Niu, Y.S.; Hao, N.; Zhang, H. Multiple change-point detection: A selective overview. Stat. Sci. 2016, 31, 611–623.

- Siegmund, D. Confidence sets in change-point problems. Int. Stat. Rev. 1988, 56, 31–48.

- Worsley, K.J. Confidence regions and tests for a change-point in a sequence of exponential family random variables. Biometrika 1986, 73, 91–104.

- Kim, H.-J.; Fay, M.P.; Feuer, E.J.; Midthune, D.N. Permutation tests for joinpoint regression with applications to cancer rates. Stat. Med. 2000, 19, 335–351.

- Kim, H.-J.; Yu, B.; Feuer, E.J. Selecting the number of change-points in segmented line regression. Stat. Sin. 2009, 19, 597.

- Truong, C.; Oudre, L.; Vayatis, N. Selective review of offline change point detection methods. Signal Process. 2020, 167, 107299.

- Killick, R.; Fearnhead, P.; Eckley, I.A. Optimal detection of changepoints with a linear computational cost. J. Am. Stat. Assoc. 2012, 107, 1590–1598.

- Xiao, X.; Chen, P.; Ye, Z.-S.; Tsui, K.-L. On computing multiple change points for the gamma distribution. J. Qual. Technol. 2021, 53, 267–288.

- Akaike, H. Information theory and an extension of the maximum likelihood principle. In Proceedings of the 2nd International Symposium on Information Theory; Akademiai Kiado: Budapest, Hungary, 1973; pp. 267–281.

- Schwarz, G. Estimating the dimension of a model. Ann. Stat. 1978, 6, 461–464.

- Rissanen, J. Modeling by shortest data description. Automatica 1978, 14, 465–471.

- Akaike, H. A new look at the statistical model identification. IEEE Trans. Autom. Control 1974, 19, 716–723.

- Jones, R.H.; Dey, I. Determining one or more change points. Chem. Phys. Lipids 1995, 76, 1–6.

- Katz, R.W. On some criteria for estimating the order of a Markov chain. Technometrics 1981, 23, 243–249.

- Shibata, R. Selection of the order of an autoregressive model by Akaike’s information criterion. Biometrika 1976, 63, 117–126.

- Kass, R.E.; Raftery, A.E. Bayes factors. J. Am. Stat. Assoc. 1995, 90, 773–795.

- Ninomiya, Y. Change-point model selection via AIC. Ann. Inst. Stat. Math. 2015, 67, 943–961.

- Yao, Y.-C. Estimating the number of change-points via Schwarz’ criterion. Stat. Probab. Lett. 1988, 6, 181–189.

- Zhang, N.R.; Siegmund, D.O. A modified Bayes information criterion with applications to the analysis of comparative genomic hybridization data. Biometrics 2007, 63, 22–32.

- Lavielle, M. Using penalized contrasts for the change-point problem. Signal Process. 2005, 85, 1501–1510.

- Chen, J.; Gupta, A.; Pan, J. Information criterion and change point problem for regular models. Sankhyā Indian J. Stat. 2006, 68, 252–282.

- Pan, J.; Chen, J. Application of modified information criterion to multiple change point problems. J. Multivar. Anal. 2006, 97, 2221–2241.

- Zhang, N.R. Change-Point Detection and Sequence Alignment: Statistical Problems of Genomics. Ph.D. Thesis, Stanford University, Stanford, CA, USA, 2005.

- Wang, H.; Li, B.; Leng, C. Shrinkage tuning parameter selection with a diverging number of parameters. J. R. Stat. Soc. Ser. B Stat. Methodol. 2009, 71, 671–683.

- Muggeo, V.M.; Adelfio, G. Efficient change point detection for genomic sequences of continuous measurements. Bioinformatics 2011, 27, 161–166.

- Fryzlewicz, P. Wild binary segmentation for multiple change-point detection. Ann. Stat. 2014, 42, 2243–2281.

- Kolmogorov, A.N. Three approaches to the quantitative definition of information. Probl. Inf. Transm. 1965, 1, 1–7.

- Rissanen, J. A universal prior for integers and estimation by minimum description length. Ann. Stat. 1983, 11, 416–431.

- Lu, Q.; Lund, R.; Lee, T.C. An MDL approach to the climate segmentation problem. Ann. Appl. Stat. 2010, 4, 299–319.

- Ma, L.; Sofronov, G. Change-point detection in autoregressive processes via the Cross-Entropy method. Algorithms 2020, 13, 128.

- Davis, R.A.; Lee, T.C.M.; Rodriguez-Yam, G.A. Structural break estimation for nonstationary time series models. J. Am. Stat. Assoc. 2006, 101, 223–239.

- Alin, A.; Beyaztas, U.; Martin, M.A. Robust change point detection for linear regression models. Stat. Its Interface 2019, 12, 203–213.

- Ganocy, S.J.; Sun, J.-Y. Heteroscedastic change point analysis and application to footprint data. J. Data Sci. 2015, 13, 157–185.

- Theodosiadou, O.; Pantelidou, K.; Bastas, N.; Chatzakou, D.; Tsikrika, T.; Vrochidis, S.; Kompatsiaris, I. Change point detection in terrorism-related online content using deep learning derived indicators. Information 2021, 12, 274.

- Li, Q.; Yao, K.; Zhang, X. A change-point detection and clustering method in the recurrent-event context. J. Stat. Comput. Simul. 2020, 90, 1131–1149.

- Anastasiou, A.; Fryzlewicz, P. Detecting multiple generalized change-points by isolating single ones. Metrika 2022, 85, 141–174.

- Niu, Y.S.; Zhang, H. The screening and ranking algorithm to detect DNA copy number variations. Ann. Appl. Stat. 2012, 6, 1306.

- Wang, Y.; Wang, Z.; Zi, X. Rank-based multiple change-point detection. Commun. Stat. Theory Methods 2020, 49, 3438–3454.

- Cabrieto, J.; Adolf, J.; Tuerlinckx, F.; Kuppens, P.; Ceulemans, E. Detecting long-lived autodependency changes in a multivariate system via change point detection and regime switching models. Sci. Rep. 2018, 8, 15637.

- Wang, Q.; Tang, J.; Zeng, J.; Leng, S.; Shui, W. Regional detection of multiple change points and workable application for precipitation by maximum likelihood approach. Arab. J. Geosci. 2019, 12, 1–16.

- Cho, H.; Fryzlewicz, P. Multiple change point detection under serial dependence: Wild contrast maximisation and gappy Schwarz algorithm. arXiv 2020, arXiv:2011.13884.

- Li, S.; Lund, R. Multiple changepoint detection via genetic algorithms. J. Clim. 2012, 25, 674–686.

- Cucina, D.; Rizzo, M.; Ursu, E. Multiple changepoint detection for periodic autoregressive models with an application to river flow analysis. Stoch. Environ. Res. Risk Assess. 2019, 33, 1137–1157.

- Ding, Y.; Zeng, L.; Zhou, S. Phase I analysis for monitoring nonlinear profiles in manufacturing processes. J. Qual. Technol. 2006, 38, 199–216.

- Zeng, L.; Neogi, S.; Zhou, Q. Robust Phase I monitoring of profile data with application in low-E glass manufacturing processes. J. Manuf. Syst. 2014, 33, 508–521.

- Wu, Z.; Li, Y.; Hu, L. A synchronous multiple change-point detecting method for manufacturing process. Comput. Ind. Eng. 2022, 169, 108114.

- Bai, J. Common breaks in means and variances for panel data. J. Econom. 2010, 157, 78–92.

- Chen, J.; Gupta, A.K. Testing and locating variance changepoints with application to stock prices. J. Am. Stat. Assoc. 1997, 92, 739–747.

- Costa, M.; Gonçalves, A.M.; Teixeira, L. Change-point detection in environmental time series based on the informational approach. Electron. J. Appl. Stat. Anal. 2016, 9, 267–296.

- Zhang, Z.; Doganaksoy, N. Change point detection and issue localization based on fleet-wide fault data. J. Qual. Technol. 2022, 54, 453–465.

- Ratnasingam, S.; Ning, W. Modified information criterion for regular change point models based on confidence distribution. Environ. Ecol. Stat. 2021, 28, 303–322.

- Basalamah, D.; Said, K.K.; Ning, W.; Tian, Y. Modified information criterion for linear regression change-point model with its applications. Commun. Stat.-Simul. Comput. 2021, 50, 180–197.

- Said, K.K.; Ning, W.; Tian, Y. Modified information criterion for testing changes in skew normal model. Braz. J. Probab. Stat. 2019, 33, 280–300.

- Ariyarathne, S.; Gangammanavar, H.; Sundararajan, R.R. Change point detection-based simulation of nonstationary sub-hourly wind time series. Appl. Energy 2022, 310, 118501.

- Noh, H.; Rajagopal, R.; Kiremidjian, A. Sequential structural damage diagnosis algorithm using a change point detection method. J. Sound Vib. 2013, 332, 6419–6433.

- Letzgus, S. Change-point detection in wind turbine SCADA data for robust condition monitoring with normal behaviour models. Wind Energy Sci. 2020, 5, 1375–1397.

- Takeuchi, K. Distribution of information statistics and validity criteria of models. Math. Sci. 1976, 153, 12–18.

- Murata, N.; Yoshizawa, S.; Amari, S.-I. Network information criterion-determining the number of hidden units for an artificial neural network model. IEEE Trans. Neural Netw. 1994, 5, 865–872.

- Spiegelhalter, D.J.; Best, N.G.; Carlin, B.P.; Van Der Linde, A. Bayesian measures of model complexity and fit. J. R. Stat. Soc. Ser. B Stat. Methodol. 2002, 64, 583–639.

- Biernacki, C.; Celeux, G.; Govaert, G. Assessing a mixture model for clustering with the integrated completed likelihood. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 719–725.

| Publication | Number of CPs | MCP Formulation | Model Selection Criteria |
|---|---|---|---|
| [47] | one or two | Piecewise linear regression with Gaussian noise | hypothesis testing |
| [48] | two | Piecewise linear or quadratic regression | hypothesis testing |
| [49] | multiple | Empirical divergence measure | hypothesis testing |
| [50] | multiple | Recurrent K-means cluster model | hypothesis testing |
| [51] | multiple | Mean shift model with Gaussian noise | sBIC2 |
| [52] | multiple | Mean shift model with Gaussian noise | BIC, mBIC2 |
| [53] | multiple | Mean shift model with Gaussian noise | BIC |
| [40] | multiple | Mean shift model with Gaussian noise | sBIC1 |
| [54] | multiple | Mean shift model with Gaussian noise | AIC, BIC |
| [55] | multiple | AR(1) with multivariate Gaussian innovation | BIC |
| [56] | multiple | Mean shift model with noise | BIC |
| [44] | multiple | Periodic linear regression with noise | MDL |
| [57] | multiple | Lognormal distribution | MDL |
| [58] | multiple | Periodic autoregressive model | AIC, BIC, MDL |
| [59] | multiple | Multivariate normal distribution | MDL |
| [60] | multiple | Regular/Mixed-effect polynomial model | AIC, MDL |
| [61] | multiple | Piecewise linear regression with noise | BIC |
| [62] | multiple | Mean shift model with noise | AIC, BIC |
| [63] | multiple | Normal distribution | Hybrid (BIC) |
| [64] | multiple | Normal distribution | Hybrid (BIC) |
| [65] | multiple | Poisson distribution | Hybrid (BIC) |
| [66] | multiple | Weibull distribution | Hybrid (mBICS) |
| [67] | multiple | Piecewise linear regression with Gaussian noise | Hybrid (mBIC2) |
| [68] | multiple | Skew normal distribution | Hybrid (mBIC2) |
| [69] | multiple | Vector auto-regressive model with Gaussian innovation | Hybrid (AIC) |
| [70] | multiple | Single-variate auto-regressive model | Hybrid (AIC) |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gao, Z.; Xiao, X.; Fang, Y.-P.; Rao, J.; Mo, H. A Selective Review on Information Criteria in Multiple Change Point Detection. Entropy 2024, 26, 50. https://doi.org/10.3390/e26010050