Abstract

This paper addresses the challenge of identifying causes for functional dynamic targets, which are functions of various variables over time. We develop screening and local learning methods to learn the direct causes of the target, as well as all indirect causes up to a given distance. We first discuss the modeling of the functional dynamic target. Then, we propose a screening method to select the variables that are significantly correlated with the target. On this basis, we introduce an algorithm that combines screening and structural learning techniques to uncover the causal structure among the target and its causes. To tackle the distance effect, where long causal paths weaken correlation, we propose a local method to discover the direct causes of the target among these significant variables and then sequentially find all indirect causes up to a given distance. We show theoretically that our proposed methods can learn the causes correctly under some regularity assumptions. Experiments based on synthetic data also show that the proposed methods perform well in learning the causes of the target.

1. Introduction

Identifying the causes of a target variable is a primary objective in numerous research studies. Sometimes, these target variables are dynamic, observed at distinct time points, and typically characterized by functions or curves that depend on other variables and time. We call them functional dynamic targets. For example, in nature, the growth of animals and plants is usually multistage and nonlinear with respect to time [1,2,3]. Popular growth curve functions, including the Logistic, Gompertz, Richards, Hossfeld IV, and Double-Logistic functions, are S-shaped [3] and have been widely used to model growth patterns. In psychology and cognitive science, researchers usually fit individual learning and forgetting curves with power functions, and individuals may have different curve parameters [4,5].

The causal graphical model is widely used for the automated derivation of causal influences among variables [6,7,8,9] and performs well in representing complex causal relationships between multiple variables and in expressing causal hypotheses [7,10,11]. In this paper, we aim to identify the underlying causes of these functional dynamic targets using the graphical model. There are three main challenges for this purpose. Firstly, identifying causes is generally more challenging than exploring associations, even though the latter has received substantial attention, as evidenced by the extensive use of Genome-Wide Association Studies (GWAS) in bioinformatics. Secondly, when the number of variables is very large, it is difficult to use a causal graphical model to represent the generating mechanism of dynamic targets and to find the causes of the targets from observational data. For example, one may need to find the genes that affect the growth curves of individuals among many thousands of Single-Nucleotide Polymorphisms (SNPs). Finally, the variables considered are of mixed types, which increases the complexity of representing and learning the causal model. We discuss these three challenges in detail below.

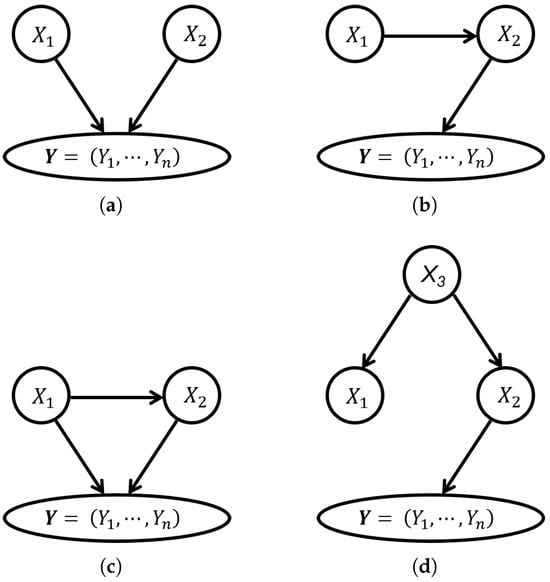

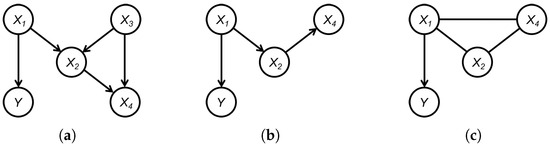

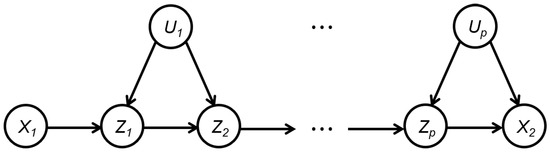

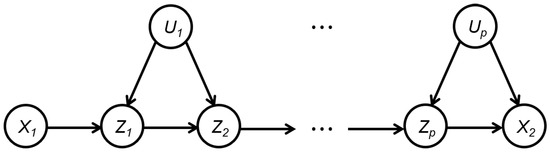

First of all, traditional statistical methods can only discover correlations between variables rather than causal relationships, which may lead to false positive or false negative results when searching for the real causes of the target. In fact, the association between the target and a variable may originate from different causal mechanisms. For example, Figure 1 displays several different causal mechanisms that can result in a statistically significant association between the target and variables. In Figure 1, are three random variables, is a vector representing a functional dynamic target, in which are the states of the target at n time points, and directed edges represent direct causal relations among them. Using statistical methods, we are very likely to find that is significantly associated with in all four cases. However, it is hard to determine whether is a real cause of without further causal learning. As shown in Figure 1, might be a direct cause of in Figure 1a,c, a cause but not a direct cause in Figure 1b, and not a cause in Figure 1d.

Figure 1.

The causal graphs when the variable is significantly associated with : (a) V-structures. (b) Chains. (c) Triangles. (d) Forks.

In addition, when the number of candidate variables is very large, both learning causal structures and discovering the causes of the target become very difficult. In fact, learning the complete causal graph is redundant and wasteful for the task of finding causes, as the focus should be on the local structure of the target variable. The PCD-by-PCD algorithm [12] is adept at identifying such local structures and efficiently distinguishing parents, children, and some descendants. The MB-by-MB method [13], in contrast, simplifies this process by learning Markov Blanket (MB) sets to identify direct causes/effects, since MB sets can be learned more simply and quickly than PCD sets, using methods such as PCMB, STMB, and EEMB [14,15,16]. The CMB algorithm further streamlines this process using a topology-based MB discovery approach [17]. However, Ling [18] pointed out that Expand-Backtracking-type algorithms, such as the PCD-by-PCD and CMB algorithms, may overlook some v-structures, leading to numerous incorrect edge orientations. To tackle these issues, the APSL algorithm was introduced to learn the subgraph within a specific distance centered around the target variable. Nonetheless, its dependence on the FCBF method for Markov Blanket learning tends to produce approximate sets rather than precise ones [19]. Furthermore, Ling [18] focused on learning the local graph within a certain distance from the target rather than on the causes of the target.

Finally, the variables in question are heterogeneous: the targets consist of dynamic time series or complex curves, while the other variables may be either discrete or continuous. Consequently, measuring the connections between the target and other variables is challenging. For instance, traditional statistical methods for assessing independence or conditional independence between variables and complex targets may be both inefficient and ineffective, especially when the sample size is insufficient to accurately measure high-order conditional independence.

In this paper, we introduce a causal graphical model tailored for analyzing dynamic targets and propose two methods to identify the causes of such a functional dynamic target assuming no hidden variables or selection biases. Initially, after establishing our dynamic target causal graphical model, we conduct an association analysis to filter out most variables unrelated to the target. With data from the remaining significantly associated variables, we then combine the screening method with structural learning algorithms and introduce the SSL algorithm to identify the causes of the target. Finally, to mitigate the distance effects that can mask the association between a cause and the target in data sets where the causal chain from cause to target is excessively long, we propose a local method. This method initially identifies the direct causes of the target and then proceeds to learn the causes sequentially in reverse order along the causal path.

The main contributions of this paper include the following:

- We introduce a causal graphical model that combines Bayesian networks and functional dynamic targets to represent the causal mechanism of variables and the target.

- We present a screening method that significantly reduces the number of potential factors, combine it with structural learning algorithms to learn the causes of a given target, and prove that all identifiable causes can be learned correctly.

- We propose a screening-based local method to learn the causes of the functional dynamic target up to any given distance among all factors. This method is helpful when the association vanishes because of the long distance between indirect causes and the target.

- We study the proposed methods experimentally on simulated data to demonstrate their validity.

2. Preliminary

Before introducing the main results of this paper, we clarify some definitions and notations related to graphs. Unless otherwise specified, we use capital letters such as $V$ to denote variables or vertices, boldface letters such as $\mathbf{V}$ to denote variable sets or vectors, and lowercase letters such as $v$ and $\mathbf{v}$ to denote the realization of a variable or vector, respectively.

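A graph $\mathcal{G}$ is a pair $(\mathbf{V}, \mathbf{E})$, in which $\mathbf{V}$ is the vertex set and $\mathbf{E} \subseteq \mathbf{V} \times \mathbf{V}$ is the edge set. To simplify notation, we use $V \in \mathbf{V}$ to represent both a random variable and the corresponding node in the graph. For any two nodes $X, Y \in \mathbf{V}$, an undirected edge between $X$ and $Y$, denoted by $X - Y$, is an edge satisfying $(X, Y) \in \mathbf{E}$ and $(Y, X) \in \mathbf{E}$, while a directed edge from $X$ to $Y$, denoted by $X \to Y$, is an edge satisfying $(X, Y) \in \mathbf{E}$ and $(Y, X) \notin \mathbf{E}$. If all edges in a graph are undirected (directed), the graph is called an undirected (directed) graph. If a graph has both undirected and directed edges, it is called a partially directed graph. For a given graph $\mathcal{G}$, we use $\mathbf{V}(\mathcal{G})$ and $\mathbf{E}(\mathcal{G})$ to denote its vertex set and edge set, respectively, where $\mathcal{G}$ can be an undirected, directed, or partially directed graph. For any $\mathbf{V}' \subseteq \mathbf{V}$, the induced subgraph of $\mathcal{G}$ over $\mathbf{V}'$, denoted by $\mathcal{G}_{\mathbf{V}'}$ or $\mathcal{G}[\mathbf{V}']$, is the graph with vertex set $\mathbf{V}'$ and an edge set containing all and only the edges between vertices in $\mathbf{V}'$, that is, $\mathcal{G}_{\mathbf{V}'} = (\mathbf{V}', \mathbf{E}')$, where $\mathbf{E}' = \mathbf{E} \cap (\mathbf{V}' \times \mathbf{V}')$.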

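In a graph $\mathcal{G}$, $X$ is a parent of $Y$ and $Y$ is a child of $X$ if the directed edge $X \to Y$ is in $\mathcal{G}$. $X$ and $Y$ are neighbors of each other if the undirected edge $X - Y$ is in $\mathcal{G}$. $X$ and $Y$ are called adjacent if they are connected by an edge, regardless of whether the edge is directed or undirected. We use $pa_{\mathcal{G}}(X)$, $ch_{\mathcal{G}}(X)$, $ne_{\mathcal{G}}(X)$, and $adj_{\mathcal{G}}(X)$ to denote the sets of parents, children, neighbors, and adjacent vertices of $X$ in $\mathcal{G}$, respectively. For any vertex set $\mathbf{W} \subseteq \mathbf{V}$, the parent set of $\mathbf{W}$ in $\mathcal{G}$ is defined as $pa_{\mathcal{G}}(\mathbf{W}) = \bigcup_{W \in \mathbf{W}} pa_{\mathcal{G}}(W)$. The sets of children, neighbors, and adjacent vertices of $\mathbf{W}$ in $\mathcal{G}$ are defined similarly. A root vertex is a vertex without parents. For any vertex $X$, the degree of $X$ in $\mathcal{G}$, denoted by $deg_{\mathcal{G}}(X)$, is the number of vertices adjacent to $X$, that is, $deg_{\mathcal{G}}(X) = |adj_{\mathcal{G}}(X)|$. The skeleton of $\mathcal{G}$, denoted by $skel(\mathcal{G})$, is the undirected graph obtained by transforming all directed edges in $\mathcal{G}$ into undirected edges, that is, $skel(\mathcal{G}) = (\mathbf{V}, \mathbf{E}_u)$, where $\mathbf{E}_u = \{(X, Y) : (X, Y) \in \mathbf{E} \text{ or } (Y, X) \in \mathbf{E}\}$.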

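A sequence in graph $\mathcal{G}$ is an ordered collection of distinct vertices $\langle V_0, V_1, \ldots, V_m \rangle$. A sequence becomes a path, denoted by $\pi = \langle V_0, \ldots, V_m \rangle$, if every pair of consecutive vertices in the sequence is adjacent in $\mathcal{G}$. The vertices $V_0$ and $V_m$ are the endpoints, and the rest are intermediate vertices. For a path $\pi$ in $\mathcal{G}$ and any $0 \le i \le j \le m$, the subpath from $V_i$ to $V_j$ is $\pi(V_i, V_j) = \langle V_i, \ldots, V_j \rangle$, so that $\pi$ can be represented as a combination of its subpaths, for example, $\pi = \pi(V_0, V_i) \oplus \pi(V_i, V_m)$. A path $\pi$ is partially directed if there is no directed edge $V_{i+1} \to V_i$ in $\mathcal{G}$ for any $0 \le i < m$. A partially directed path is directed (or undirected) if all its edges are directed (or undirected). A vertex $X$ is an ancestor of $Y$ and $Y$ is a descendant of $X$ if there exists a directed path from $X$ to $Y$ or $X = Y$. The sets of ancestors and descendants of $X$ in the graph $\mathcal{G}$ are denoted by $an_{\mathcal{G}}(X)$ and $de_{\mathcal{G}}(X)$, respectively. Furthermore, a vertex $X$ is a possible ancestor of $Y$ and $Y$ is a possible descendant of $X$ if there is a partially directed path from $X$ to $Y$. The sets of possible ancestors and possible descendants of $X$ in graph $\mathcal{G}$ are denoted by $pan_{\mathcal{G}}(X)$ and $pde_{\mathcal{G}}(X)$, respectively. For any vertex set $\mathbf{W}$, the ancestor set of $\mathbf{W}$ in graph $\mathcal{G}$ is $an_{\mathcal{G}}(\mathbf{W}) = \bigcup_{W \in \mathbf{W}} an_{\mathcal{G}}(W)$. The sets of possible ancestors and (possible) descendants of $\mathbf{W}$ in graph $\mathcal{G}$ are defined similarly.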

A (directed, partially directed, or undirected) cycle is a (directed, partially directed, or undirected) path from a node to itself. The length of a path (cycle) is the number of edges on the path (cycle). The distance between two variables $X$ and $Y$ is the length of the shortest directed path from $X$ to $Y$. A directed acyclic graph (DAG) is a directed graph without directed cycles, and a partially directed acyclic graph (PDAG) is a partially directed graph without directed cycles. A chain graph is a partially directed graph in which all partially directed cycles are undirected. Hence, both DAGs and undirected graphs are special cases of chain graphs.

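In a graph $\mathcal{G}$, a v-structure is a tuple $(X, Z, Y)$ satisfying $X \to Z \leftarrow Y$ with $X$ and $Y$ nonadjacent, in which $Z$ is called a collider. A path $\pi$ is d-separated (blocked) by a set of vertices $\mathbf{Z}$ if (1) $\pi$ contains a chain $U \to W \to V$ (or $U \leftarrow W \leftarrow V$) or a fork $U \leftarrow W \to V$ with $W \in \mathbf{Z}$, or (2) $\pi$ contains a collider $U \to W \leftarrow V$ with $de_{\mathcal{G}}(W) \cap \mathbf{Z} = \emptyset$; otherwise, $\pi$ is d-connected given $\mathbf{Z}$ [20]. Sets of vertices $\mathbf{X}$ and $\mathbf{Y}$ are d-separated by $\mathbf{Z}$, denoted by $\mathbf{X} \perp_{\mathcal{G}} \mathbf{Y} \mid \mathbf{Z}$, if and only if $\mathbf{Z}$ blocks all paths from any vertex $X \in \mathbf{X}$ to any vertex $Y \in \mathbf{Y}$. Furthermore, for any distribution $P$, $\mathbf{X} \perp_P \mathbf{Y} \mid \mathbf{Z}$ denotes that $\mathbf{X}$ and $\mathbf{Y}$ are conditionally independent given $\mathbf{Z}$. Given a DAG $\mathcal{G}$ and a distribution $P$, the Markov condition holds if $\mathbf{X} \perp_{\mathcal{G}} \mathbf{Y} \mid \mathbf{Z} \Rightarrow \mathbf{X} \perp_P \mathbf{Y} \mid \mathbf{Z}$, while faithfulness holds if $\mathbf{X} \perp_P \mathbf{Y} \mid \mathbf{Z} \Rightarrow \mathbf{X} \perp_{\mathcal{G}} \mathbf{Y} \mid \mathbf{Z}$. For any distribution, there exists at least one DAG for which the Markov condition holds, but some distributions are not faithful to any DAG. Therefore, unlike the Markov condition, faithfulness is often regarded as an assumption. In this paper, unless otherwise stated, we assume that faithfulness holds, so that, together with the Markov condition, $\mathbf{X} \perp_P \mathbf{Y} \mid \mathbf{Z} \Leftrightarrow \mathbf{X} \perp_{\mathcal{G}} \mathbf{Y} \mid \mathbf{Z}$. For simplicity, we use the symbol $\perp$ to denote both (conditional) independence and d-separation.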
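The d-separation criterion can also be checked mechanically. The following Python sketch is illustrative only: it assumes a DAG stored as a dict mapping each node to its parents (a representation chosen here, not taken from this paper), and instead of enumerating paths it uses the well-known equivalent criterion that two vertex sets are d-separated by $\mathbf{Z}$ if and only if they are disconnected in the moral graph of the ancestral set of all three sets after $\mathbf{Z}$ is deleted. The function name `d_separated` is hypothetical.

```python
def d_separated(parents, xs, ys, zs):
    """Return True if vertex sets xs and ys are d-separated by zs in a DAG.

    parents: dict mapping each node to an iterable of its parents (root nodes
    may be omitted). xs, ys and zs are assumed to be pairwise disjoint sets.
    Uses the moralized ancestral graph criterion, equivalent to d-separation.
    """
    # 1. Ancestral set of xs | ys | zs (every node counts as its own ancestor).
    anc, stack = set(), list(set(xs) | set(ys) | set(zs))
    while stack:
        v = stack.pop()
        if v not in anc:
            anc.add(v)
            stack.extend(parents.get(v, ()))
    # 2. Moralize: link each node to its parents, "marry" co-parents,
    #    and drop all edge directions.
    nbrs = {v: set() for v in anc}
    for v in anc:
        ps = list(parents.get(v, ()))
        for i, p in enumerate(ps):
            nbrs[v].add(p)
            nbrs[p].add(v)
            for q in ps[i + 1:]:
                nbrs[p].add(q)
                nbrs[q].add(p)
    # 3. Delete zs and search for an undirected path from xs to ys.
    frontier = [x for x in xs if x not in zs]
    seen = set(frontier)
    while frontier:
        v = frontier.pop()
        if v in ys:
            return False
        for w in nbrs[v]:
            if w not in seen and w not in zs:
                seen.add(w)
                frontier.append(w)
    return True
```

For a collider A → C ← B, `d_separated` returns True for the empty conditioning set and False when C (or a descendant of C) is conditioned on, matching condition (2) above; for a chain A → B → C, conditioning on B separates A and C.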

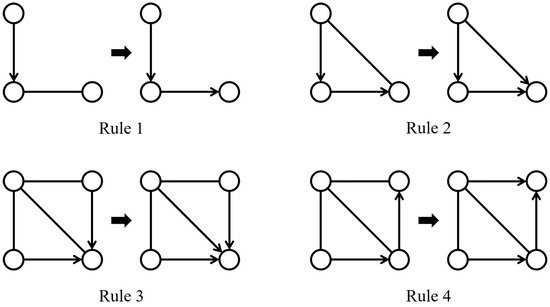

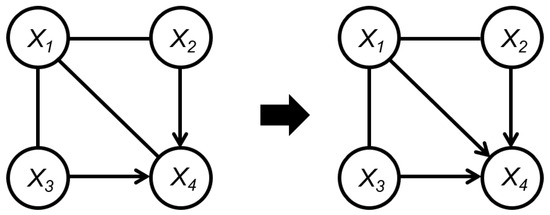

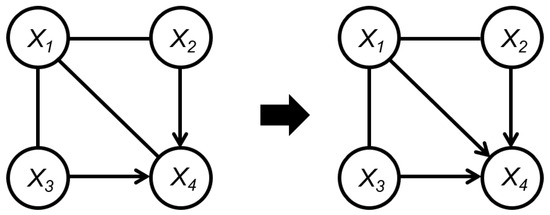

From the concepts above, a DAG characterizes the (conditional) independence relationships among a set of variables. However, multiple different DAGs may characterize the same conditional independence relationships. Under the Markov condition and the faithfulness assumption, two DAGs that encode exactly the same d-separation relationships are said to be Markov equivalent. Furthermore, two DAGs are Markov equivalent if and only if they share the same skeleton and v-structures [21]. All Markov equivalent DAGs constitute a Markov equivalence class, which can be represented by a completed partially directed acyclic graph (CPDAG) $\mathcal{C}$. Two vertices are adjacent in the CPDAG if and only if they are adjacent in all DAGs in the equivalence class. A directed edge $X \to Y$ in the CPDAG indicates that this directed edge appears in all DAGs within the equivalence class, whereas an undirected edge $X - Y$ signifies that $X \to Y$ is present in some DAGs and $Y \to X$ in others within the equivalence class [22]. A CPDAG is a chain graph [23] and can be learned from observational data together with Meek’s rules [24] (Figure 2).

Figure 2.

Meek’s rules comprise four orientation rules. If the graph on the left-hand side of a rule is an induced subgraph of a PDAG, the rule can be applied to replace an undirected edge in that induced subgraph with a directed edge, so that the induced subgraph becomes the graph on the right-hand side of the rule.
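The characterization of Markov equivalence and the orientation step can be sketched in a few lines. The code below is an illustrative sketch, not the paper's implementation: it assumes DAGs are given as child-to-parents dicts over the same node set and PDAGs as a set of directed pairs plus a set of undirected `frozenset` edges (representations chosen here), checks Markov equivalence through skeletons and v-structures [21], and applies only Meek's rules R1–R3; rule R4 is omitted for brevity. All function names are hypothetical.

```python
from itertools import combinations


def skeleton(parents):
    """Undirected edges {a, b} of a DAG given as child -> parents."""
    return {frozenset((p, c)) for c, ps in parents.items() for p in ps}


def v_structures(parents):
    """Triples (a, c, b) with a -> c <- b and a, b nonadjacent."""
    skel = skeleton(parents)
    return {(a, c, b) for c, ps in parents.items()
            for a, b in combinations(sorted(ps), 2)
            if frozenset((a, b)) not in skel}


def markov_equivalent(p1, p2):
    """Same skeleton and same v-structures (DAGs over the same node set)."""
    return skeleton(p1) == skeleton(p2) and v_structures(p1) == v_structures(p2)


def meek_r1_r3(directed, undirected):
    """Repeatedly apply Meek rules R1-R3 (R4 omitted) until nothing changes.

    directed: set of (a, b) pairs meaning a -> b.
    undirected: set of frozenset({a, b}) meaning a - b.
    """
    directed, undirected = set(directed), set(undirected)
    nodes = {v for e in directed for v in e} | {v for e in undirected for v in e}

    def adjacent(a, b):
        return (a, b) in directed or (b, a) in directed or frozenset((a, b)) in undirected

    def orientable(x, y):
        # R1: w -> x - y with w and y nonadjacent.
        if any((w, x) in directed and not adjacent(w, y) for w in nodes if w != y):
            return True
        # R2: x -> w -> y.
        if any((x, w) in directed and (w, y) in directed for w in nodes):
            return True
        # R3: x - w1 -> y and x - w2 -> y with w1, w2 nonadjacent.
        mids = [w for w in nodes
                if frozenset((x, w)) in undirected and (w, y) in directed]
        return any(not adjacent(w1, w2) for w1, w2 in combinations(mids, 2))

    changed = True
    while changed:
        changed = False
        for edge in list(undirected):
            a, b = tuple(edge)
            for x, y in ((a, b), (b, a)):
                if orientable(x, y):
                    undirected.discard(edge)
                    directed.add((x, y))
                    changed = True
                    break
    return directed, undirected
```

For example, the chain A → B → C and the fork A ← B → C are reported as Markov equivalent, whereas the collider A → B ← C is not; and a → b − c with a, c nonadjacent is completed to a → b → c by R1.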

3. The Causal Graphical Model of Potential Factors and Functional Dynamic Target

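Let $\mathbf{X} = \{X_1, \ldots, X_p\}$ be a set of random variables representing potential factors and $\mathbf{Y} = (Y_1, \ldots, Y_q)^\top$ be a functional dynamic target, where $Y_j$, for $j = 1, \ldots, q$, represents the state of the target at the $j$th of $q$ different time points $t_1 < \cdots < t_q$. Let $\mathcal{G}$ be a DAG defined over $\mathbf{X} \cup \{\mathbf{Y}\}$, and let $\mathcal{G}_{\mathbf{X}}$ be the subgraph of $\mathcal{G}$ induced over the set of potential factors $\mathbf{X}$. Suppose that the causal network of $\mathbf{X}$ can be represented by $\mathcal{G}_{\mathbf{X}}$; combining it with the joint distribution over $\mathbf{X}$, denoted by $P$, we obtain a causal graphical model $(\mathcal{G}_{\mathbf{X}}, P)$. Consequently, the data generation mechanisms of $\mathbf{X}$ and $\mathbf{Y}$ follow a causal Bayesian network model of $\mathbf{X}$ and a model determined by the direct causes of $\mathbf{Y}$, respectively. Formally, we define a causal graphical model of the functional dynamic target as follows.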

Definition 1.

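Let $\mathcal{G}$ be a DAG over $\mathbf{X} \cup \{\mathbf{Y}\}$ in which $\mathbf{Y}$ has no children, let $pa_{\mathcal{G}}(\mathbf{Y})$ denote the direct causes of $\mathbf{Y}$ in $\mathcal{G}$, let $P$ be a joint distribution over $\mathbf{X}$, and let $\boldsymbol{\theta}$ be the parameters determining the expectation of the functional dynamic target $\mathbf{Y}$, which is influenced by $pa_{\mathcal{G}}(\mathbf{Y})$. Then, the triple $(\mathcal{G}, P, \boldsymbol{\theta})$ constitutes a causal graphical model for $\mathbf{Y}$ if the following two conditions hold:

- The pair $(\mathcal{G}_{\mathbf{X}}, P)$ constitutes a Bayesian network model for $\mathbf{X}$.

- The functional dynamic target $\mathbf{Y}$ follows the model
  $$\mathbf{Y} = \mathbf{f}(\mathbf{t}; \boldsymbol{\theta}) + \boldsymbol{\varepsilon}, \qquad (1)$$
  where $\boldsymbol{\theta} = \boldsymbol{\theta}(pa_{\mathcal{G}}(\mathbf{Y}))$ depends on the values of the direct causes of $\mathbf{Y}$, $\mathbf{f}(\mathbf{t}; \boldsymbol{\theta}) = (f(t_1; \boldsymbol{\theta}), \ldots, f(t_q; \boldsymbol{\theta}))^\top$ is the vector of the mean function at times $\mathbf{t} = (t_1, \ldots, t_q)^\top$, and $\boldsymbol{\varepsilon} = (\varepsilon_1, \ldots, \varepsilon_q)^\top$ is the vector of error terms with mean zero, that is, $E(\boldsymbol{\varepsilon}) = \mathbf{0}$.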

Different functional dynamic targets use different mean functions. For example, the best-fitting mean function for growth curves varies across species, with candidates ranging from the Gompertz, Richards, Hossfeld, and Logistic functions to the Double-Logistic function [25,26,27].

A causal graphical model of the functional dynamic target can be interpreted as a data generation mechanism of the variables in $\mathbf{X}$ and $\mathbf{Y}$ as follows. First, the root variables in $\mathbf{X}$ are generated according to their marginal probabilities. Then, following the topological ordering of the DAG $\mathcal{G}_{\mathbf{X}}$, for any non-root variable $X$, once its parent nodes have been generated, $X$ is drawn from $P(X \mid pa_{\mathcal{G}}(X))$, the conditional probability of $X$ given its parent set $pa_{\mathcal{G}}(X)$. Finally, the target $\mathbf{Y}$ is generated by Equation (1). According to Definition 1, the Markov condition holds for the causal graphical model of a dynamic target, that is, for any pair of variables $V_i$ and $V_j$ in $\mathbf{X} \cup \{\mathbf{Y}\}$, the d-separation of $V_i$ and $V_j$ given a set $\mathbf{W}$ in $\mathcal{G}$ implies that $V_i$ and $V_j$ are conditionally independent given $\mathbf{W}$.
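The generation order described above (roots first, then non-root variables in topological order, then the target via Equation (1)) can be simulated directly. The sketch below is a toy illustration under assumptions that do not come from the paper: a hypothetical DAG X1 → X2 with X1 and X2 as the direct causes of the target and X3 unrelated to it, binary factors with made-up probabilities, a logistic mean function $a/(1 + b e^{-ct})$, and Gaussian noise.

```python
import math
import random


def logistic_mean(t, a, b, c):
    """Logistic growth curve f(t) = a / (1 + b * exp(-c * t)) (one common parameterization)."""
    return a / (1.0 + b * math.exp(-c * t))


def simulate(n, times, seed=0, noise_sd=0.5):
    """Draw n individuals from a toy causal graphical model for a functional dynamic target.

    Hypothetical DAG: X1 -> X2, {X1, X2} -> Y, and X3 isolated (not a cause of Y).
    """
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        # Root variables are drawn from their marginal distributions.
        x1 = int(rng.random() < 0.5)
        x3 = int(rng.random() < 0.5)
        # Non-root variables are drawn given their parents (topological order).
        x2 = int(rng.random() < (0.8 if x1 else 0.2))
        # The mean-curve parameters depend only on the direct causes X1 and X2.
        a = 10.0 + 5.0 * x1          # asymptote
        c = 0.5 + 0.5 * x2           # growth rate
        y = [logistic_mean(t, a, 20.0, c) + rng.gauss(0.0, noise_sd) for t in times]
        data.append({"X1": x1, "X2": x2, "X3": x3, "Y": y})
    return data
```

For instance, `simulate(200, [0, 2, 4, 6, 8, 10])` returns 200 records; only X1 and X2 shift the mean curve, while X3 is generated but never used for the target.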

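Given a mean function $f$, we can estimate the parameters as follows:

$$\hat{\boldsymbol{\theta}} = \arg\min_{\boldsymbol{\theta}} \sum_{i=1}^{n} \sum_{j=1}^{q} \left\{ y_{ij} - f(t_j; \boldsymbol{\theta}) \right\}^2,$$

where $n$ and $q$ represent the number of individuals and the length of the functional dynamic target, respectively, and $y_{ij}$ is the observed state of the $i$th individual at time $t_j$. The residual sum of squares (RSS) is minimized at $\hat{\boldsymbol{\theta}}$. The Akaike information criterion (AIC) can be used to select the appropriate mean function to fit the functional dynamic targets. Assuming Gaussian errors, we have, up to an additive constant,

$$\mathrm{AIC} = nq \log\left(\frac{\mathrm{RSS}}{nq}\right) + 2k,$$

where $k$ is the number of parameters of the mean function; the mean function with the smallest AIC is selected.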
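As a sketch of the fitting-and-selection step, the code below computes the RSS of a candidate mean function and compares candidates by AIC. It assumes i.i.d. Gaussian errors, under which AIC reduces, up to an additive constant, to $nq \log(\mathrm{RSS}/(nq)) + 2k$ with $k$ the number of mean-function parameters. Minimizing the RSS itself is a nonlinear least-squares problem left to any standard optimizer and is not shown; all function names are hypothetical.

```python
import math


def rss(curves, times, mean_fn, theta):
    """Residual sum of squares of n observed curves (each of length q) around f(t; theta)."""
    return sum((y - mean_fn(t, *theta)) ** 2
               for curve in curves for t, y in zip(times, curve))


def aic(rss_value, n, q, k):
    """Gaussian-error AIC up to an additive constant: nq * log(RSS / nq) + 2k."""
    total = n * q
    return total * math.log(rss_value / total) + 2 * k


def select_by_aic(candidates, n, q):
    """candidates maps a mean-function name to (minimized RSS, number of parameters k)."""
    return min(candidates,
               key=lambda name: aic(candidates[name][0], n, q, candidates[name][1]))
```

The `2k` penalty means that a slightly smaller RSS does not by itself justify a mean function with more parameters: with $n = 10$ and $q = 5$, a three-parameter curve with RSS 12.0 is preferred over a four-parameter curve with RSS 11.9.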

4. Variable Screening for Causal Discovery

For the set of potential factors $\mathbf{X}$ and the functional dynamic target $\mathbf{Y}$, our task is to find the direct causes of $\mathbf{Y}$ and all causes of $\mathbf{Y}$ up to a given distance. An intuitive method is to learn the causal graph $\mathcal{G}$ and then identify all variables that have directed paths to $\mathbf{Y}$. However, as discussed in Section 1, this intuitive approach faces three main challenges. To address them, we propose a variable screening method to reduce the number of potential factors and a hypothesis testing method to test for (conditional) independence between potential factors and $\mathbf{Y}$. By integrating these methods with structural learning approaches, we develop an algorithm capable of learning and identifying all causes of functional dynamic targets.

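Let $X$ be a variable with $K$ levels $x_1, \ldots, x_K$. The variable $X$ is dependent on $\mathbf{Y}$ if there exist at least two values of $X$, say $x_k$ and $x_{k'}$, such that the conditional distributions of $\mathbf{Y}$ given $X = x_k$ and $X = x_{k'}$ are different. Conversely, if the conditional distribution of $\mathbf{Y}$ given $X = x$ remains unchanged for all $x$, then $X$ and $\mathbf{Y}$ are independent. Let $\boldsymbol{\theta}_x$ be the parameter of the mean function of the functional dynamic target given $X = x$, with $x \in \{x_1, \ldots, x_K\}$. To ascertain whether $X$ is dependent on $\mathbf{Y}$, we implement the following test:

$$H_0: \boldsymbol{\theta}_{x_1} = \boldsymbol{\theta}_{x_2} = \cdots = \boldsymbol{\theta}_{x_K} \quad \text{versus} \quad H_1: \boldsymbol{\theta}_{x_k} \neq \boldsymbol{\theta}_{x_{k'}} \text{ for some } k \neq k'. \qquad (2)$$

Let $\mathbf{y}_i = (y_{i1}, \ldots, y_{iq})^\top$ be the $i$th sample of the functional dynamic target, with $i = 1, \ldots, n$, and let $x^{(i)}$ be the corresponding value of $X$. Under the null hypothesis, $\mathbf{y}_i$ is modeled as $\mathbf{f}(\mathbf{t}; \boldsymbol{\theta}) + \boldsymbol{\varepsilon}_i$, whereas under the alternative hypothesis, it is modeled as $\mathbf{f}(\mathbf{t}; \boldsymbol{\theta}_{x^{(i)}}) + \boldsymbol{\varepsilon}_i$. Let $\ell_1$ denote the unrestricted log-likelihood of $\mathbf{Y}$ under $H_1$, and let $\ell_0$ denote the restricted log-likelihood of $\mathbf{Y}$ under $H_0$. The likelihood ratio statistic is calculated as follows:

$$\Lambda = -2(\ell_0 - \ell_1). \qquad (3)$$

Under certain regularity conditions, the statistic $\Lambda$ approximately follows a $\chi^2$ distribution whose degrees of freedom equal the difference in the numbers of parameters between $H_1$ and $H_0$ in Equation (2), that is, $(K - 1)\dim(\boldsymbol{\theta})$.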
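To make Equations (2) and (3) concrete, the sketch below runs the likelihood ratio test under two simplifying assumptions that are not part of the paper: the mean function is linear in time, $f(t; \boldsymbol{\theta}) = \theta_0 + \theta_1 t$, so that least squares has a closed form, and the errors are i.i.d. Gaussian with a common variance, so that $\Lambda = nq \log(\mathrm{RSS}_0/\mathrm{RSS}_1)$. The $\chi^2$ tail probability uses the closed form for integer degrees of freedom. Groups are passed as hashable keys, which is a design choice made here so that the same hypothetical `lr_test` function also covers conditional tests.

```python
import math


def line_rss(curves, times):
    """Minimized RSS when f(t; theta) = theta0 + theta1 * t is fitted to all given curves."""
    ts = [t for _ in curves for t in times]
    ys = [y for curve in curves for y in curve]
    m = len(ts)
    t_bar, y_bar = sum(ts) / m, sum(ys) / m
    slope = (sum((t - t_bar) * (y - y_bar) for t, y in zip(ts, ys))
             / sum((t - t_bar) ** 2 for t in ts))
    intercept = y_bar - slope * t_bar
    return sum((y - intercept - slope * t) ** 2 for t, y in zip(ts, ys))


def chi2_sf(x, df):
    """Upper-tail probability P(chi2_df > x) for an integer df >= 1 (closed form)."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        term = total = 1.0
        for r in range(1, df // 2):
            term *= (x / 2) / r
            total += term
        return math.exp(-x / 2) * total
    chi = math.sqrt(x)
    term, acc = chi, 0.0
    for r in range(1, (df - 1) // 2 + 1):
        acc += term                      # chi^(2r-1) / (1 * 3 * ... * (2r-1))
        term *= x / (2 * r + 1)
    return math.erfc(chi / math.sqrt(2)) + math.sqrt(2 / math.pi) * math.exp(-x / 2) * acc


def lr_test(curves, times, null_keys, alt_keys, n_params=2):
    """Likelihood ratio test: theta indexed by null_keys (H0) versus by alt_keys (H1)."""
    def grouped_rss(keys):
        groups = {}
        for key, curve in zip(keys, curves):
            groups.setdefault(key, []).append(curve)
        return sum(line_rss(g, times) for g in groups.values()), len(groups)

    rss0, g0 = grouped_rss(null_keys)
    rss1, g1 = grouped_rss(alt_keys)
    stat = len(curves) * len(times) * math.log(rss0 / rss1)  # -2(l0 - l1), Gaussian errors
    df = (g1 - g0) * n_params                                 # extra parameters under H1
    return stat, df, chi2_sf(stat, df)
```

For the marginal test, pass a single constant key under $H_0$ and the value of $X$ under $H_1$. For the conditional test of $X \perp \mathbf{Y} \mid \mathbf{W}$ discussed later in this section, pass the values of $\mathbf{W}$ as `null_keys` and the values of $(\mathbf{W}, X)$ as `alt_keys`; the degrees of freedom then count only the configurations observed in the data.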

Therefore, by applying the hypothesis test described in Equations (2) and (3) to each potential factor, we can identify all variables significantly associated with the dynamic target. We denote these significant variables by $\mathbf{S}$, defined as $\mathbf{S} = \{X \in \mathbf{X} : H_0 \text{ in Equation (2) is rejected for } X\}$. Since the mean function of the dynamic target depends on its direct causes, which in turn depend on indirect causes, the dynamic target ultimately depends on all its causes. Hence, when $X$ is a cause of $\mathbf{Y}$, the null hypothesis in Equation (2) is rejected, implying that $\mathbf{S}$ includes all causes of the dynamic target, assuming no statistical errors. In summary, given a dynamic target $\mathbf{Y}$, we test $H_0$ against $H_1$ as defined in Equations (2) and (3) for each potential factor in turn and obtain the set $\mathbf{S}$ together with the corresponding p-values $\mathbf{p}_{\mathbf{S}} = \{p_X : X \in \mathbf{S}\}$, in which $p_X$ is the p-value of the variable $X$.

A causal graphical model, as described in Definition 1, requires the Markov condition to hold for the variables $\mathbf{X}$ and the functional dynamic target $\mathbf{Y}$. Given the Markov condition and the faithfulness assumption, a natural approach to identifying the causes of the functional dynamic target is to learn the causal structure among the variables and then determine the relationship between each variable and $\mathbf{Y}$. For the significant variables $\mathbf{S}$, we present the following theorem, whose proof is available in Appendix A.2:

Theorem 1.

Suppose that $(\mathcal{G}, P, \boldsymbol{\theta})$ constitutes a causal graphical model for the functional dynamic target $\mathbf{Y}$ as defined in Definition 1, and that the faithfulness assumption is satisfied. Let $\mathbf{S}$ denote the set of all variables in $\mathbf{X}$ that are dependent on $\mathbf{Y}$. Then, the following assertions hold:

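1. $\mathbf{S}$ consists of all causes of $\mathbf{Y}$ and the descendants of these causes, that is, $\mathbf{S} = de_{\mathcal{G}}(an_{\mathcal{G}}(\mathbf{Y})) \setminus \{\mathbf{Y}\}$.

2. For any two variables $X, Z \in \mathbf{S}$, if either $X$ or $Z$ is a cause of $\mathbf{Y}$, then $X$ and $Z$ are not adjacent in $\mathcal{G}$ if and only if there exists a set $\mathbf{W} \subseteq \mathbf{S} \setminus \{X, Z\}$ such that $X \perp Z \mid \mathbf{W}$.

3. For any two variables $X, Z \in \mathbf{S}$, if there exists a set $\mathbf{W} \subseteq \mathbf{S} \setminus \{X, Z\}$ such that $X \perp Z \mid \mathbf{W}$, then $X$ and $Z$ are not adjacent in $\mathcal{G}$.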

The first result of Theorem 1 justifies the method for finding $\mathbf{S}$ described above. The second result indicates that when at least one endpoint of an edge is a cause of $\mathbf{Y}$, this edge can be accurately identified (in terms of its skeleton, not its direction) using well-known structural learning methods, such as the PC algorithm [28] and the GES algorithm [29]. In contrast to the second result, the third states that for any pair of variables $X, Z \in \mathbf{S}$, if a separation set of $X$ and $Z$ exists within $\mathbf{S}$, then these variables are not adjacent in the true graph $\mathcal{G}$. However, the converse does not necessarily hold: a confounder or common cause $W \notin \mathbf{S}$ may be needed to separate $X$ and $Z$, which can lead to an extraneous edge between $X$ and $Z$ in the causal graph learned solely from data on $\mathbf{S}$. To account for this, we denote the CPDAG learned from $\mathbf{S}$ by $\hat{\mathcal{C}}$, and the induced subgraph over $\mathbf{S}$ of the CPDAG corresponding to the true graph $\mathcal{G}$ by $\mathcal{C}_{\mathbf{S}}$. An illustrative example follows.
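Under faithfulness, the first assertion of Theorem 1 can be checked on a known DAG: the screened set consists of the descendants of the target's ancestors, minus the target itself. The oracle sketch below assumes the same hypothetical child-to-parents dict representation and illustrative function names; on real data, $\mathbf{S}$ is of course obtained from the tests in Equations (2) and (3), not from the graph.

```python
def ancestors(parents, node):
    """Ancestors of `node` in a DAG given as child -> parents, including `node` itself."""
    seen, stack = set(), [node]
    while stack:
        v = stack.pop()
        if v not in seen:
            seen.add(v)
            stack.extend(parents.get(v, ()))
    return seen


def descendants(parents, nodes):
    """Descendants of every vertex in `nodes`, including the vertices themselves."""
    children = {}
    for child, ps in parents.items():
        for p in ps:
            children.setdefault(p, set()).add(child)
    seen, stack = set(), list(nodes)
    while stack:
        v = stack.pop()
        if v not in seen:
            seen.add(v)
            stack.extend(children.get(v, ()))
    return seen


def screened_set(parents, target):
    """Oracle version of S: descendants of the target's ancestors, minus the target."""
    return descendants(parents, ancestors(parents, target)) - {target}
```

In the toy DAG A → B → Y ← C with extra edges A → D, C → H, and E → F, the causes of Y are A, B, and C, but `screened_set` also returns the noncauses D and H, while E and F are screened out. This is why screening alone cannot identify the causes and structural learning over $\mathbf{S}$ is still needed.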

Example 1.

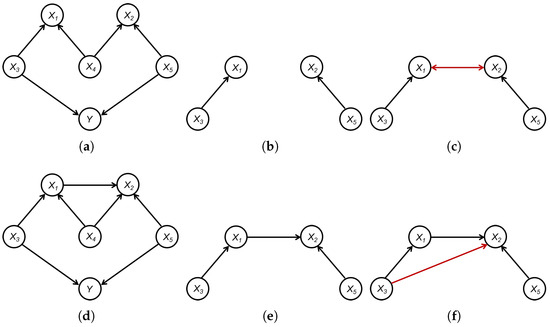

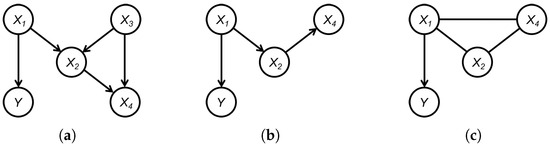

Figure 3a presents a true graph defined over and . Here, the set of significant variables is , and is independent of . Figure 3b illustrates the induced subgraph of the CPDAG over the set , while Figure 3c displays the graph learned by a structural learning method, such as the PC algorithm, applied to . Note that, in , is a separation set of and , that is, . However, since and structural learning only utilizes data concerning , no separation set exists for and in . Consequently, and appear adjacent in the learned graph . Furthermore, given and , the structural learning method identifies a v-structure . A similar process yields . Therefore, a bidirected edge appears in the learned graph but not in , as highlighted by the red edge in Figure 3c.

Figure 3.

An example to illustrate the difference between and : (a) True graph . (b) . (c) . (d) True graph . (e) . (f) .

Similarly, Figure 3d presents a true graph defined over and . In this scenario, the set of significant variables is , with being independent of . Figure 3e depicts the induced subgraph of the CPDAG over , while Figure 3f illustrates the graph learned by a structural learning method, such as the PC algorithm, applied to . In , the set acts as a separation set between and , indicating . However, with and structural learning relying solely on data concerning , a separation set for and in no longer exists. As a result, and appear adjacent in the learned graph . Furthermore, given and , the structural learning method can identify a v-structure . Therefore, a directed edge is present in the learned graph but not in , as highlighted by the red edge in Figure 3f.

Example 1 illustrates two scenarios in which the graph may include false positive edges that do not exist in . Importantly, these additional false edges do not appear between the causes of . Instead, they may occur between causes and noncauses of , or exclusively among noncauses of , as delineated in Theorem 1. The complete result is given by Proposition A1 in Appendix A.2. In fact, a stronger conclusion holds: the extra edges compromise neither the skeleton nor the orientation of the structure among the causes of .

Theorem 2.

The edges in , if any, do not affect the skeleton or orientation of edges among the ancestors of in . Furthermore, we have and , where and are the graphs obtained by adding a node and directed edges from ’s direct causes to in graphs and , respectively.

According to Theorem 2, it is evident that although the graph obtained through structural learning does not exactly match the induced subgraph of CPDAG over corresponding to the true graph, the causes of the functional dynamic target in these two graphs are identical, including the structure among these causes. Thus, in terms of identifying the causes, the two graphs can be considered equivalent. Furthermore, Theorem 2 indicates that all possible ancestors of in are also possible ancestors in , though the converse may not hold. The detailed proof is available in Appendix A.2.

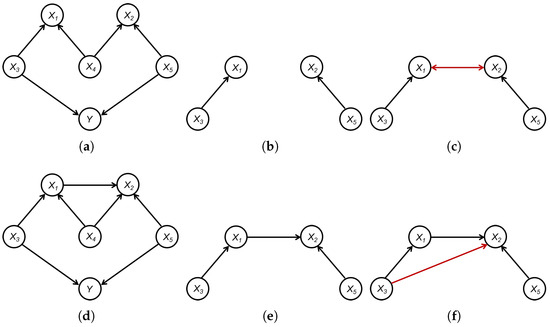

Example 2.

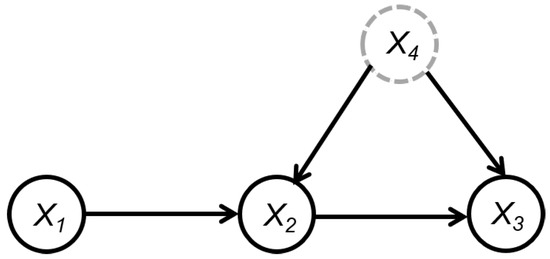

The true graph is given by Figure 4a, and the corresponding CPDAG is itself, that is, . In this case, the set of significant variables is . Figure 4b is the induced graph of () over , and Figure 4c is the CPDAG obtained by using the structural learning method on . Then, we have , while .

Figure 4.

An example to illustrate the results in Theorem 2: (a) True graph . (b) . (c) .

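According to the causal graphical model in Definition 1 and the faithfulness assumption, $\mathbf{Y}$ is the sum of the mean function and independent noise, and the mean function is a deterministic function of the direct causes of $\mathbf{Y}$. Therefore, for any nondescendant $X \notin pa_{\mathcal{G}}(\mathbf{Y})$ of $\mathbf{Y}$, $X$ is independent of $\mathbf{Y}$ given the direct causes $pa_{\mathcal{G}}(\mathbf{Y})$. Moreover, for any $X \in \mathbf{S}$, $X$ is a direct cause of $\mathbf{Y}$ if and only if there is no subset $\mathbf{W} \subseteq \mathbf{S} \setminus \{X\}$ such that $X \perp \mathbf{Y} \mid \mathbf{W}$.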

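Let $\mathbf{W}$ be a subset of $\mathbf{S}$. For any $X \in \mathbf{S} \setminus \mathbf{W}$ with $K$ levels, to test the conditional independence $X \perp \mathbf{Y} \mid \mathbf{W}$, consider the following test:

$$H_0: \boldsymbol{\theta}_{\mathbf{w}, x} = \boldsymbol{\theta}_{\mathbf{w}} \text{ for all } (\mathbf{w}, x) \quad \text{versus} \quad H_1: \boldsymbol{\theta}_{\mathbf{w}, x} \neq \boldsymbol{\theta}_{\mathbf{w}, x'} \text{ for some } \mathbf{w} \text{ and } x \neq x'.$$

Under the null hypothesis, the parameter only depends on the value $\mathbf{w}$ of the set $\mathbf{W}$, which can be denoted as $\boldsymbol{\theta}_{\mathbf{w}}$, while under the alternative hypothesis, the parameter is determined by the values of both $\mathbf{W}$ and $X$, which can be denoted as $\boldsymbol{\theta}_{\mathbf{w}, x}$. Let $\ell_0$ be the log-likelihood of $\mathbf{Y}$ under $H_0$, and $\ell_1$ be the log-likelihood of $\mathbf{Y}$ under $H_1$. The likelihood ratio statistic is

$$\Lambda = -2(\ell_0 - \ell_1).$$

Under certain regularity conditions, the statistic $\Lambda$ approximately follows a $\chi^2$ distribution, with degrees of freedom equal to $K_{\mathbf{W}}(K - 1)\dim(\boldsymbol{\theta})$, where $K_{\mathbf{W}}$ is the number of value configurations of $\mathbf{W}$.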

Based on the above results, we propose a screening and structural learning-based algorithm to identify the causes of the functional dynamic target , as detailed in Algorithm 1.

In Algorithm 1, the initial step learns the structure over $\mathbf{S}$ from the data on $\mathbf{S}$ through a structural learning method, as detailed in Lines 1–6. The notation in Lines 3–4 signifies that the connection between X and Y could be either or . We first learn the skeleton over $\mathbf{S}$ following the same procedure as the PC algorithm (Line 1), with the details given in Appendix A.1. However, because bidirected edges may occur, the identification of v-structures is adjusted (Lines 2–5), and all bidirected edges are eliminated at the end. According to Theorem 1, these bidirected edges, which are removed directly (Line 5), appear only between causes and noncauses of the functional dynamic target or among noncauses. Since these variable pairs are in fact (conditionally) independent, removing such edges does not compromise the (conditional) independence relationships among the remaining variables, as shown in Theorem 2 and Example 1. Subsequently, we initialize the candidate set of direct causes as $\mathbf{S}$ and sort these variables in ascending order of their associations with $\mathbf{Y}$ (Lines 7–8), because variables with weaker associations are less likely to be direct causes of $\mathbf{Y}$. Placing these variables at the beginning of the sequence allows the subsequent conditional independence tests to exclude non-direct causes quickly, which improves the algorithm’s efficiency and reduces the number of required conditional independence tests. Next, we add directed edges from all candidate variables to $\mathbf{Y}$ (Line 9) to construct the augmented graph. For each directed edge, say $X \to \mathbf{Y}$, we check the conditional independence of $X$ and $\mathbf{Y}$ given a subset of the remaining candidates (Lines 12–14). In searching for a separation set, we start with single-element sets, then proceed to sets of two elements, and so forth. Once a separation set is found, $X$ is removed from the candidate set and the directed edge $X \to \mathbf{Y}$ is removed from the graph (Lines 15–17).
Lastly, if the size k of the separation set exceeds the number of remaining candidates other than $X$, meaning that no subset of these candidates renders $X$ and $\mathbf{Y}$ conditionally independent, the directed edge $X \to \mathbf{Y}$ remains in the graph.
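The structure-learning step of Algorithm 1 (skeleton search, v-structure identification, and removal of bidirected edges) might be sketched as follows. This is a sketch under stated assumptions, not the authors' implementation: `indep(x, y, cond)` is a hypothetical conditional independence oracle or test, the skeleton search is the standard PC procedure, and Meek-rule propagation after the v-structures are oriented is omitted. When two v-structures orient the same edge in opposite directions, the edge is treated as bidirected and deleted, mirroring the behavior described above; the function names are illustrative.

```python
from itertools import combinations


def pc_skeleton(nodes, indep):
    """PC-style skeleton search; returns the adjacency dict and the separation sets found."""
    adj = {v: set(nodes) - {v} for v in nodes}
    sepset = {}
    k = 0
    while any(len(adj[v]) > k for v in nodes):
        for x in nodes:
            for y in list(adj[x]):
                # Condition on k-subsets of x's other current neighbors.
                for cond in combinations(sorted(adj[x] - {y}), k):
                    if indep(x, y, set(cond)):
                        adj[x].discard(y)
                        adj[y].discard(x)
                        sepset[frozenset((x, y))] = set(cond)
                        break
        k += 1
    return adj, sepset


def orient_and_drop_bidirected(adj, sepset):
    """Orient unshielded colliders; edges oriented both ways (bidirected) are removed."""
    arrows = set()
    for z in adj:
        for x, y in combinations(sorted(adj[z]), 2):
            if y not in adj[x] and z not in sepset.get(frozenset((x, y)), set()):
                arrows.update({(x, z), (y, z)})
    bidirected = {frozenset(a) for a in arrows if (a[1], a[0]) in arrows}
    for edge in bidirected:
        u, v = tuple(edge)
        adj[u].discard(v)
        adj[v].discard(u)
    directed = {a for a in arrows if frozenset(a) not in bidirected}
    return directed, bidirected
```

With a toy oracle in which only A ⊥ C, B ⊥ D, and A ⊥ D hold (all marginally), the learned skeleton is A − B − C − D; the triples A − B − C and B − C − D both yield v-structures, so B − C is oriented both ways and removed, leaving A → B and D → C.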

Algorithm 1 SSL: Screening and structural learning-based algorithm

Require: $\mathbf{S}$ and their corresponding p-values $\mathbf{p}_{\mathbf{S}}$, data sets about $\mathbf{S}$ and $\mathbf{Y}$.
Ensure: Causes of $\mathbf{Y}$.

According to Theorem 1 and the discussion after Example 2, is the set of all direct causes of if all assumptions in Theorem 1 hold and all statistical tests are correct. Further, according to Theorem 2, all ancestors of can be obtained from the graph . Therefore, Algorithm 1 can learn all the causes of correctly.

Note that in Algorithm 1, we first traverse the sizes of the separation set (Line 11) and then, for each given size, traverse all candidate variables and all possible separation sets of that size (Lines 12 and 13) to test the conditional independence of each variable and $\mathbf{Y}$. That is, we first fix the size of the separation set to 1 and traverse all variables; after all variables have been traversed once, we increase the size of the separation set to 2 and traverse all variables again, and so on. The advantage of this arrangement is that it quickly removes the nondirect causes of $\mathbf{Y}$ and shrinks the candidate set, thereby reducing the number of conditional independence tests and improving their accuracy. Furthermore, we directly add directed edges from the candidate variables to $\mathbf{Y}$ (Line 9) because we assume that $\mathbf{Y}$ has no descendants, as stated in Definition 1; in this case, the adjacent set of $\mathbf{Y}$ is exactly the set of direct causes we are looking for. Without this assumption, it would be necessary to examine the variables in the adjacent set of $\mathbf{Y}$ and distinguish the parents from the children.

5. A Screening-Based and Local Algorithm

Based on the previous results and discussions, Algorithm 1 can correctly identify the causes of a functional dynamic target. However, Algorithm 1 requires recovering the complete causal structure of $\mathbf{S}$ and $\mathbf{Y}$. As analyzed in Section 1, learning the complete structure is unnecessary for identifying the causes of the target. Furthermore, Algorithm 1 may suffer from the distance effect, whereby the association between a cause and the target becomes too weak to detect from the data when the path from the cause to the target is too long. Consequently, identifying such a cause from observational data becomes challenging, potentially leading to missed causes. Therefore, we propose a screening-based local approach to address these challenges.

In this section, we introduce a three-stage approach to learn the causes of functional dynamic targets. First, based on the causal graphical model, we apply a hypothesis testing method to screen variables, identifying factors significantly associated with the target. Second, we employ a constraint-based method to find the direct causes of the target among these significant variables. Lastly, we present a local learning method to discover the causes of these direct causes within any specified distance. We begin with a screening-based algorithm that learns the direct causes of $\mathbf{Y}$, as shown in Algorithm 2.

In Algorithm 2, we first initialize the set of candidate direct causes and arrange these variables in ascending order of their correlations with the target (Lines 1–2), as in Algorithm 1. We also introduce, for each variable X, a set containing the variables determined not to belong to X's separation set, which starts empty (Line 3). We then check the conditional independence of each candidate variable and the target. During the search for a separation set, the collection of candidate conditioning sets consists of all subsets of k variables drawn from the current candidates, arranged roughly in descending order of their associations with the target (Lines 7–8). This is because variables with a stronger correlation with the target are more likely to be direct causes and also more likely to belong to the separation sets of other variables. Placing these variables first allows the separation sets of nondirect causes to be found quickly, so that these variables are removed from the candidate set early, which reduces the number of conditional independence tests and accelerates the algorithm. Once we find a separation set for X and the target, we remove X from the candidate set and add X to the exclusion set of each variable in that separation set (Lines 11–13). This is because, when a set separates X from the target, its variables appear on the paths from X to the target; consequently, X should not be in the separation set of any of these variables with respect to the target. Compared with Algorithm 1, introducing these exclusion sets makes Algorithm 2 more efficient: while Algorithm 1 requires examining every subset of the candidate set (Line 8 in Algorithm 1), Algorithm 2 only needs to evaluate subsets of the candidate set with the excluded variables removed (Line 7 in Algorithm 2). The correctness of Algorithm 2 is established below.

Algorithm 2 Screening-based algorithm for learning direct causes of the target

Require: the screened variables and their corresponding p-values; data sets about these variables and the target. Ensure: direct causes of the target.
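The search described for Algorithm 2 can be sketched in Python as follows. This is our reading of the prose, not the paper's pseudocode: `ci_test` is a hypothetical conditional independence oracle for a variable and the target, screening p-values serve as the association measure (smaller means stronger), and the size-first loop follows the arrangement of Algorithm 1.

```python
from itertools import combinations
from typing import Callable, Dict, FrozenSet, List, Set

# Hypothetical oracle: True if `x` is judged independent of the target
# given `cond`.
CITest = Callable[[str, FrozenSet[str]], bool]


def screening_direct_causes(
    pvalues: Dict[str, float], ci_test: CITest
) -> List[str]:
    """Sketch of a direct-cause search with per-variable exclusion sets.

    `pvalues` maps each screened variable to its screening p-value; a
    smaller p-value is read as a stronger association with the target.
    """
    # Strongest association first; conditioning subsets inherit this order.
    candidates = sorted(pvalues, key=lambda v: pvalues[v])
    # excluded[v]: variables known not to belong to v's separation set.
    excluded: Dict[str, Set[str]] = {v: set() for v in candidates}
    k = 1
    while True:
        progressed = False
        # Test the weakest-associated variables first.
        for x in reversed(list(candidates)):
            pool = [v for v in candidates if v != x and v not in excluded[x]]
            if len(pool) < k:
                continue
            progressed = True
            for subset in combinations(pool, k):
                cond = frozenset(subset)
                if ci_test(x, cond):
                    candidates.remove(x)
                    # Following the text: x is not used when searching
                    # separation sets for the members of cond.
                    for v in cond:
                        excluded[v].add(x)
                    break
        if not progressed:
            return candidates
        k += 1
```

The exclusion sets only ever shrink the pools from which conditioning sets are drawn, which is where the saving over the exhaustive subset search comes from.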

Theorem 3.

If all assumptions in Theorem 1 hold and there are no errors in the independence tests, then Algorithm 2 correctly identifies all direct causes of the target.

Next, we aim to identify all causes of the target within a specified distance. One natural method is to apply Algorithm 2 recursively, starting with the target's direct causes and then moving on to their direct causes, until all causes within the specified distance are found. However, this method works for the target only because the target has no descendants, so that its adjacent set coincides with its parent set. This does not hold for other variables, so we must further analyze the adjacent sets of other variables and distinguish parents from children. To this end, we introduce the LPC algorithm in Algorithm 3.

Algorithm 3 LPC algorithm

Require: a target node T, a data set over the variables, and a non-PC set. Ensure: the PCD set of T and the set containing all separation relations.

Algorithm 3 aims to learn the local structure of a given target variable T, but the final output set in fact contains T's Parents, Children, and possibly some Descendants (PCD). This is because, before verifying conditional independence (Line 4), we remove some variables nonadjacent to T in advance (Line 1), so that no separation set can be found for some descendant variables.
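A minimal Python sketch of an LPC-style local search, written from the description above, shows where the non-child descendants come from. The oracle `ci` is hypothetical, and the size-first loop is an assumption about the structure of Algorithm 3 rather than a transcription of it.

```python
from itertools import combinations
from typing import Callable, Dict, FrozenSet, Iterable, Set, Tuple

# Hypothetical oracle: True if a is judged independent of b given cond.
CI = Callable[[str, str, FrozenSet[str]], bool]


def lpc(
    target: str, variables: Iterable[str], non_pc: Set[str], ci: CI
) -> Tuple[Set[str], Dict[str, FrozenSet[str]]]:
    """Local PC-style search returning a PCD set of `target`.

    Variables in `non_pc` are removed before any test, mirroring Line 1
    of Algorithm 3; this is why some non-child descendants can survive.
    """
    pcd = [v for v in variables if v != target and v not in non_pc]
    sepsets: Dict[str, FrozenSet[str]] = {}
    k = 0
    while len(pcd) - 1 >= k:
        for x in list(pcd):
            others = [v for v in pcd if v != x]
            # Conditioning sets are drawn only from the current PCD set.
            for subset in combinations(others, k):
                cond = frozenset(subset)
                if ci(x, target, cond):
                    pcd.remove(x)
                    sepsets[x] = cond
                    break
        k += 1
    return set(pcd), sepsets
```

For instance, in a toy graph T → C → D with an extra variable U → C and U → D, every separation set of T and D must contain U; if U is placed in the non-PC set of T, D survives in the returned set even though it is not a child of T.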

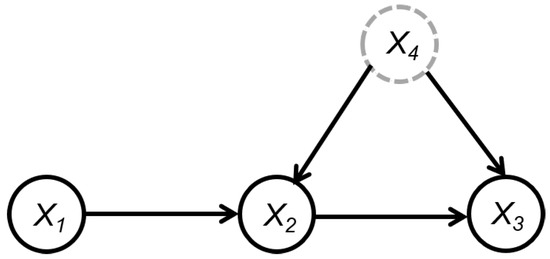

Example 3.

In Figure 5, let . Since , we initially have (Line 1 in Algorithm 3). Note that there originally exists a conditional independence relationship in the graph, but since we remove the vertex in advance, there is no longer a separation set of and in the set of . Therefore, cannot be removed from further and the output , that is, , which is a descendant of but not a child of , is included in .

Figure 5.

An example illustrating the PCD set obtained by Algorithm 3.

Example 3 shows that the PCD set obtained by Algorithm 3 may indeed contain non-child descendants of the target variable. Below, we show that these non-child descendants can be identified by applying Algorithm 3 repeatedly. For example, in Example 3, the PCD set of is . Then, we can apply Algorithm 3 to and find that the PCD set of is . It can be seen that is not in . Hence, we can conclude that is a non-child descendant of ; otherwise, must be in . In this way, we can delete the non-child descendants from the PCD set so that it contains only the parents and children of the target variable. Based on this idea, we propose a step-by-step algorithm to learn all causes of a functional dynamic target locally, as shown in Algorithm 4.

Algorithm 4 PC-by-PC: Finding all causes of the target set within a given distance

Require: a target set, a data set over the variables, and the maximum distance m. Ensure: all causes of the target set within distance m.

In Algorithm 4, represents the n-th variable in the set , and represents the first to the -th variable in the set . denotes the shortest path from V to L in graph . Many methods can compute the shortest path, such as Dijkstra's algorithm [30]. Algorithm 4 uses the symmetric validation method described above to remove non-child descendants from the PCD set (Lines 7–13), and hence we directly write the PCD set as the PC set (Line 6). When our task is to learn all causes of a functional dynamic target, the input target set consists of all direct causes of the target, which can be obtained by Algorithm 2, and the auxiliary node L is the functional dynamic target itself (Line 2). The correctness of this procedure is established in the following theorem.

Theorem 4.

If the faithfulness assumption holds and all independence tests are correct, then Algorithm 4 correctly learns all causes of the input target set within a given distance m. Further, if all assumptions in Theorem 1 hold, the input target set is the set of direct causes of the functional dynamic target, and the auxiliary node L in Algorithm 4 is this target, then Algorithm 4 correctly learns all causes of the target within a given distance.

Note that the above algorithm spreads outward step by step from the direct causes of the target, and at each step, the newly added nodes all belong to the PC sets of previously processed nodes (Line 18). The algorithm therefore involves only the local structure around the causes of the target, which greatly improves its efficiency and accuracy. Moreover, Algorithm 4 identifies the shortest path between each cause variable and the target. When the m-th edge on a path from the target cannot be oriented, the algorithm continues to expand only from that path instead of expanding all paths (Line 18 in Algorithm 4), which simplifies the algorithm and avoids learning redundant structures.
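The outward, layer-by-layer expansion can be pictured with the following simplified Python sketch. It assumes the edges around each visited node have already been learned and oriented, which Algorithm 4 actually does on the fly through local search, symmetric validation, v-structures, and Meek's rules; `parents_of` is a hypothetical callable standing in for that local learning, and the special handling of unoriented edges at Line 18 is not reproduced. Because every edge counts as distance 1, breadth-first search yields the same shortest paths that Dijkstra's algorithm would.

```python
from typing import Callable, Dict, List, Set


def causes_within_distance(
    target: str, parents_of: Callable[[str], Set[str]], m: int
) -> Dict[str, List[str]]:
    """Collect ancestors of `target` within m directed edges, layer by layer.

    Returns a map from each cause to one shortest directed path
    [cause, ..., target].
    """
    paths: Dict[str, List[str]] = {target: [target]}
    layer = [target]
    for _ in range(m):
        next_layer: List[str] = []
        # The whole current layer is processed before moving outward, so a
        # node is first reached along one of its shortest paths.
        for node in layer:
            for parent in sorted(parents_of(node)):
                if parent not in paths:
                    paths[parent] = [parent] + paths[node]
                    next_layer.append(parent)
        layer = next_layer
    del paths[target]
    return paths
```

For parents Y ← {B, C}, B ← {A}, and A ← {D} with m = 2, the sketch returns B and C at distance 1 and A at distance 2, and leaves out D, which is three edges away.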

6. Experiments

In this section, we compare the effectiveness of different methods for learning the direct causes and all causes of a functional dynamic target through simulation experiments. As mentioned before, to our knowledge, existing structural learning algorithms are not designed to identify the causes of functional dynamic targets, so we compare only the two methods we propose:

- SSL algorithm: The screening and structural learning-based algorithm given in Algorithm 1, which can learn both direct and all causes of a dynamic target simultaneously;

- S-Local algorithm: First, use the screening-based algorithm given in Algorithm 2, which can learn direct causes of a functional dynamic target, and then use the PC-by-PC algorithm given in Algorithm 4, which can learn all causes of a functional dynamic target.

In fact, our proposed SSL algorithm combines the screening method with traditional constraint-based structural learning techniques, as described in Algorithm 1. After screening, the SSL algorithm runs a modified version of the PC algorithm that can handle the extra directed or bidirected edges introduced by the screening process. Because it is an extension of the PC algorithm tailored to the causes of the dynamic target, the SSL algorithm is a natural benchmark.

In this simulation experiment, we randomly generate a causal graph consisting of a functional dynamic target and p ∈ {15, 100, 1000, 10,000} potential factors. Additionally, we randomly select one or two of these potential factors to serve as direct causes of the target. The potential factors are all discrete with finite levels, while the functional dynamic target is a continuous vector whose mean function is a Double-Logistic function, that is,

where

and . The subscript in the above equations indicates that the parameters depend on the direct causes of the target. For each generated causal graph, we randomly generate the corresponding causal mechanism, that is, the marginal and conditional distributions of the potential factors and the functional dynamic target, and generate the simulation data from it. We use different sample sizes and repeat the experiment 100 times for each sample size. In addition, we adopt adaptive significance levels in the experiment, because screening must become stricter as the number of potential factors increases. In other words, as the number of potential factors p increases, the significance level of the (conditional) independence tests decreases. For example, the significance level is 0.05 when , while it is 0.0005 when p = 10,000.
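To make the simulated mechanism concrete, the Python sketch below evaluates one commonly used Double-Logistic form, the sum of two logistic phases, with parameters that depend on the level of a single discrete direct cause. Because the paper's own equations are not reproduced in this text, the functional form, the parameter names (`a1`, `b1`, `c1`, `a2`, `b2`, `c2`), and any example values are assumptions for illustration only, not the parameterization used in the experiments.

```python
import math
from typing import Dict, List, Sequence, Tuple

# (a1, b1, c1, a2, b2, c2): asymptote, rate and midpoint of each phase.
# This parameterization is an assumption, not the paper's exact form.
Params = Tuple[float, float, float, float, float, float]


def _logistic(z: float) -> float:
    """Numerically stable 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def double_logistic(t: float, params: Params) -> float:
    """Sum of two logistic phases, a common two-stage growth curve."""
    a1, b1, c1, a2, b2, c2 = params
    return a1 * _logistic(b1 * (t - c1)) + a2 * _logistic(b2 * (t - c2))


def mean_curve(
    times: Sequence[float], level: int, params_by_level: Dict[int, Params]
) -> List[float]:
    """Mean trajectory when the (single) direct cause takes value `level`."""
    params = params_by_level[level]
    return [double_logistic(t, params) for t in times]
```

Letting each level of the direct cause carry its own parameter tuple is one simple way to make the curve parameters depend on the direct causes; noise around the mean curve would be added separately when generating data.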

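To evaluate the effectiveness of the different methods, let $\hat{D}$ denote the set of direct causes of the target learned by an algorithm and $D$ the set of true direct causes of the target in the generated graph. Then, let $\mathrm{TP} = |\hat{D} \cap D|$, $\mathrm{FP} = |\hat{D} \setminus D|$, and $\mathrm{FN} = |D \setminus \hat{D}|$, and we have

$$\mathrm{Recall} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}}, \qquad \mathrm{Precision} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP}}, \qquad \mathrm{Accuracy} = \frac{p - \mathrm{FP} - \mathrm{FN}}{p},$$

where p is the number of potential factors. Recall measures the fraction of the true direct causes that the algorithm recovers, precision measures the fraction of the learned direct causes that are correct, and accuracy measures the proportion of correct judgments on whether each variable is a direct cause. The evaluation indicators for learning all causes are defined similarly.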
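The three indicators can be computed directly from the learned and true sets. The Python sketch below reads them as recall = TP/|true set|, precision = TP/|learned set|, and accuracy = (p − FP − FN)/p, where TP, FP, and FN count correctly learned, wrongly learned, and missed direct causes; the function name and the NaN convention for empty sets are our own choices.

```python
from typing import Set, Tuple


def recall_precision_accuracy(
    learned: Set[str], truth: Set[str], p: int
) -> Tuple[float, float, float]:
    """Recall, precision and accuracy of a learned set of direct causes.

    p is the number of potential factors; NaN is returned for recall or
    precision when the corresponding denominator is zero (our convention).
    """
    tp = len(learned & truth)  # correctly learned direct causes
    fp = len(learned - truth)  # learned but not true direct causes
    fn = len(truth - learned)  # true direct causes that were missed
    recall = tp / len(truth) if truth else float("nan")
    precision = tp / len(learned) if learned else float("nan")
    # Every potential factor is judged once: direct cause or not.
    accuracy = (p - fp - fn) / p
    return recall, precision, accuracy
```

For example, learning {X1, X2, X5} when the true direct causes are {X1, X2} among p = 15 factors gives recall 1, precision 2/3, and accuracy 14/15.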

The experimental results are shown in Table 1, in which represents the total time (in seconds) consumed by the algorithm, and represent the average values of recall, precision, and accuracy over 100 experiments, respectively. In addition, different subscripts represent different methods. and indicate the results for learning direct causes and all causes, respectively.

Table 1.

Experimental results of SSL algorithm and S-Local algorithm under different settings.

In Table 1, since the SSL algorithm obtains direct causes and all causes simultaneously through complete structural learning, for fairness we report only the total time for both algorithms. The running time of both algorithms is approximately linear in the number of potential factors p. Moreover, when p is fixed, both algorithms take longer as the sample size n increases. For the SSL algorithm, most of the time is spent on learning the complete graph structure; as n increases, the (conditional) independence tests become more accurate, so more variables pass the screening, and the larger graph naturally takes longer to learn. For the S-Local algorithm, more than 99% of the time is spent on optimizing the log-likelihood function in the (conditional) independence tests of the screening stage. As n increases, each optimization takes longer, and the total time increases accordingly. This also explains why the time of the S-Local algorithm grows linearly with the number of variables: the number of required independence tests grows roughly linearly. In addition, the S-Local algorithm takes less time than the SSL algorithm in most cases, especially when p is small, whereas for large p the two algorithms take similar time. This is mainly because, in this experimental setting, the mechanism of the functional dynamic target is relatively complex: its mean function is a Double-Logistic function with many parameters, whose optimization is time-consuming. Even with a single binary direct cause, the mean function has 13 parameters. When the mechanism of the functional dynamic target is simpler, the time required by the S-Local algorithm will also be greatly reduced.
Moreover, since the S-Local algorithm spends more than 99% of its time on the independence tests in the screening step, and these tests can be performed in parallel in practice, its running time can be greatly reduced.
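Because the screening tests for different variables do not depend on each other, they can be dispatched concurrently. The Python sketch below is one way to do this with the standard `concurrent.futures` module; `marginal_pvalue` is a hypothetical per-variable test (for example, a likelihood-ratio test of a variable against the target), and `alpha` plays the role of the significance level. Threads keep the example self-contained; when each test is CPU-bound Python code, `ProcessPoolExecutor`, which offers the same `map` interface, is usually the better choice.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def parallel_screen(
    variables: Sequence[str],
    marginal_pvalue: Callable[[str], float],
    alpha: float,
    max_workers: int = 4,
) -> List[str]:
    """Run independent screening tests concurrently and keep p < alpha.

    Returns the retained variables ordered from strongest to weakest
    association (smallest to largest p-value).
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map preserves input order, so p-values line up with variables.
        pvals = list(pool.map(marginal_pvalue, variables))
    kept = [(pv, v) for v, pv in zip(variables, pvals) if pv < alpha]
    return [v for pv, v in sorted(kept)]
```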

When learning direct causes, the S-Local algorithm achieves much higher recall, precision, and accuracy than the SSL algorithm, and the gap is largest for precision. The precision values of the SSL algorithm are very small, mainly because learning the complete graph structure is relatively inaccurate, so that many non-direct-cause variables are learned in the local structure of the target. Particularly when p is large, it is difficult to correctly recover the local structure of the target. Moreover, under the same sample size, the recall, precision, and accuracy obtained by the S-Local algorithm when p is small are not as good as those obtained when p is large. For example, when , , we have , and , but when p = 10,000, n = 50, we have = 1.000, = 1.000 and = 1.000. The recall and accuracy values of the SSL algorithm show similar results. This result is consistent with intuition, because we use adaptive significance levels in the experiment. When p is large, we use a smaller significance level to strengthen the screening and facilitate the subsequent learning of all causes. The algorithm is therefore more rigorous in deciding whether a variable is a direct cause of the target, which makes it easier to exclude non-direct-cause variables.

When learning all causes, the recall and accuracy values of both the SSL and S-Local algorithms increase monotonically with the sample size, and both algorithms achieve very good results even with many potential factors. For example, when p = 10,000, the accuracy values of both algorithms are above . Overall, the results of the S-Local algorithm are significantly better than those of the SSL algorithm. However, the precision values of the two algorithms show different trends. The precision of the SSL algorithm increases monotonically with n when p is large, but shows no clear trend when p is small. This is because the SSL algorithm is affected by the distance effect: as n increases, the (conditional) independence tests become more accurate, so more causes that are far from the target can be identified. When p is large, there are also many causes far from the target, so the precision of the SSL algorithm gradually increases. When p is small, however, most variables are close to the target. Although the SSL algorithm also obtains more causes (its recall increases), it also includes in the set of causes some noncause variables that are strongly related to the target, so its precision shows no clear trend. In contrast, the precision of the S-Local algorithm increases monotonically with n when p is small, and this trend gradually turns into a monotonic decrease as p increases. This is because, when p is small, the more accurate (conditional) independence tests at larger n allow the S-Local algorithm to identify more causes. When p is large, however, the additional variables identified by the S-Local algorithm as n increases include more noncause variables than causes, so the recall still increases while the precision gradually decreases.
In other words, in this case there is a trade-off between the recall and precision of the S-Local algorithm. Nevertheless, although the precision trends differ, the accuracy values of both algorithms increase with the sample size.

It should be noted that the primary objective of the models and algorithms introduced in this paper is to identify the causes of functional dynamic targets, addressing the "Cause of Effect" (CoE) challenge, rather than directly predicting the target. However, under the causal graphical model for these targets, correctly identifying the target's direct causes is sufficient for making accurate predictions. In the simulation experiment with 15 nodes and 1000 samples, the Mean Squared Error (MSE) of prediction is 0.281 in simulations where the target's direct causes are learned incorrectly, and it drops to 0.185 when they are identified correctly, a reduction in prediction error of approximately 34%. Additionally, as shown in Table 1, the S-Local algorithm identifies the direct causes with accuracy above 98% in most settings. This high accuracy suggests that our algorithms also support accurate prediction of the target.
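The reported reduction in prediction error follows directly from the two MSE values:

$$\frac{0.281 - 0.185}{0.281} = \frac{0.096}{0.281} \approx 0.342,$$

that is, a reduction of roughly 34%.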

7. Discussion and Conclusions

In this paper, we first establish a causal graphical model for functional dynamic targets and discuss hypothesis testing methods for testing the (conditional) independence between random variables and functional dynamic targets. To deal with situations with too many potential factors, we propose a screening algorithm that selects, from a large number of potential factors, the variables significantly related to the functional dynamic target. On this basis, we propose the SSL algorithm and the S-Local algorithm to learn the direct causes and all causes within a given distance of functional dynamic targets. The former combines the screening algorithm with structural learning methods to learn both the direct causes and all causes of functional dynamic targets simultaneously by recovering the complete graph structure of the screened variables. Its disadvantage is that learning the complete graph structure is very difficult and largely redundant, and it is also affected by the distance effect, resulting in low accuracy in learning causes. The latter first uses a screening-based algorithm to learn the direct causes of functional dynamic targets and then uses our proposed PC-by-PC algorithm, a step-by-step local learning algorithm, to learn all causes within a given distance. The advantage of this approach is that every learning step is confined to the local structure of the current nodes, so the algorithm is not affected by the distance effect. Because it focuses only on the local structure of each cause variable rather than on the complete graph structure, it greatly saves time and space.
Moreover, the algorithm not only accounts for the distance but also identifies the shortest path between each cause variable and the functional dynamic target, so that it does not need to learn the whole structure of any part of the graph, only the part of the local structure involving the cause variables, which further reduces the learning of redundant structures.

It should be noted that when the causal mechanism of functional dynamic targets is very complex, the time required by the S-Local algorithm may increase greatly. In addition, the choice of significance level also affects the precision of the algorithm. Thus, simplifying the causal model of functional dynamic targets and choosing an appropriate significance level are two directions for our future work.

Author Contributions

Conceptualization, R.Z. and X.Y.; methodology, R.Z. and X.Y.; software, R.Z. and X.Y.; validation, R.Z. and Y.H.; formal analysis, R.Z., X.Y. and Y.H.; investigation, R.Z., X.Y. and Y.H.; resources, Y.H.; data curation, R.Z. and X.Y.; writing—original draft preparation, R.Z.; writing—review and editing, R.Z. and Y.H.; visualization, R.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China, grant number 2022ZD0160300.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The simulated data can be regenerated using the code, which is available from the corresponding author upon request by email.

Acknowledgments

We thank Qingyuan Zheng for providing technical support.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Appendix A.1

In Algorithm 1, we first screen the variables significantly related to the target through the hypothesis tests defined in Equations (2) and (3), and then learn the causal structure of these variables via a structural learning method. However, because some variables are absent from some separation sets, the graph learned here is not the true CPDAG but may contain some extra edges, which may be directed or bidirected. Therefore, we need to make some modifications to the structural learning algorithm. As shown in Algorithm 1, we first learn the skeleton with the original structural learning method and then find v-structures using a variant algorithm (Lines 2–5 in Algorithm 1). Taking the PC algorithm as an example, we give the skeleton-learning procedure below.

Algorithm A1 PC algorithm to learn the skeleton

Require: a set of variables and data sets about these variables. Ensure: the skeleton and all separation sets.
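For reference, the textbook PC adjacency search on which Algorithm A1 is based can be sketched in Python as follows. It is not a transcription of Algorithm A1: `ci` is a hypothetical conditional independence oracle, and the modified v-structure step of Algorithm 1 is not included. This is the original, order-dependent PC skeleton search; the PC-stable variant would instead fix each node's adjacency set at the start of every level k.

```python
from itertools import combinations
from typing import Callable, Dict, FrozenSet, List, Set, Tuple

# Hypothetical oracle: True if a is judged independent of b given cond.
CI = Callable[[str, str, FrozenSet[str]], bool]


def pc_skeleton(
    nodes: List[str], ci: CI
) -> Tuple[Dict[str, Set[str]], Dict[FrozenSet[str], FrozenSet[str]]]:
    """Textbook PC adjacency search: start complete, delete edges."""
    adj: Dict[str, Set[str]] = {v: set(nodes) - {v} for v in nodes}
    sepsets: Dict[FrozenSet[str], FrozenSet[str]] = {}
    k = 0
    # Continue while some node still has at least k other neighbours.
    while any(len(adj[x]) - 1 >= k for x in nodes):
        for x in nodes:
            for y in sorted(adj[x]):
                # Condition on size-k subsets of x's other current neighbours.
                others = sorted(adj[x] - {y})
                for subset in combinations(others, k):
                    cond = frozenset(subset)
                    if ci(x, y, cond):
                        adj[x].discard(y)
                        adj[y].discard(x)
                        sepsets[frozenset((x, y))] = cond
                        break
        k += 1
    return adj, sepsets
```

For a chain A → B → C, an oracle reporting only A independent of C given {B} yields the skeleton A − B − C with separation set {B} for the pair (A, C).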

Appendix A.2

Proof of Theorem 1.

We know that all causes of and the descendants of these causes are d-connected with . Since the faithfulness assumption holds for the causal graphical model , we have that all causes of and the descendants of these causes are not independent of . From the definition , we have that contains all causes of and the descendants of these causes. Meanwhile, according to the definition of the causal graphical model , the functional dynamic target has no children. Therefore, all d-connected paths from a vertex X to should be either or . Clearly, the vertex X is either a cause of or a descendant of a cause of . Now, we have shown that the vertices in are either causes of or descendants of causes of . Statement 1 is proved.

Now, we prove Statement 2. The faithfulness assumption ensures that and are not adjacent in if there exists a set such that . We just need to prove the “only if” part, that is, there exists a set such that if and are not adjacent in .

- Consider the case that both and are causes of . Since and are not adjacent in , either is a nondescendant of or is a nondescendant of . Without loss of generality, we assume that is a nondescendant of . Since is a cause of , according to Statement 1, we have . Let , we can obtain the result .

- Consider the case that is a cause of and is not a cause of . If is not a descendant of , similar to the discussion in the previous case, we have where . If is a descendant of , we have that all paths from to with the first directed edge as are d-separated, otherwise, there should be a directed cycle. Let consist of all parents of that are d-connected with in . Clearly, since is a cause of . Then, let . Since blocks all d-connected paths between and through , and the set blocks all paths like , we have that the set blocks all d-connected paths between and in , that is, holds.

- Consider the case that is a cause of and is not a cause of , which is symmetric to the second case and can be discussed similarly.

Therefore, the “only if” part of Statement 2 is proven.

Statement 3 holds directly since the faithfulness assumption holds for the causal graphical model . □

Proposition A1.

The edges in , if they exist, do not appear between two ancestors of in .

Proof of Proposition A1.

For any two nonadjacent vertices , if there is no directed path from to , then is a separation set relative to the pair , and hence there is no edge between and in the graph . Therefore, without loss of generality, we assume . In this case, since is an ancestor of , is a separation set relative to the pair , in which is the set of all intermediate nodes on the directed paths from to in . Hence, there is no edge between and in the graph . □

Proof of Theorem 2.

According to Proposition A1, the edge in may have two cases:

Case 1. .

Case 1.1. . Since is a nondescendant of , is a separation set relative to . Hence, there is no edge between and in graph , which is a contradiction.

Case 1.2. . In this case, there are four possible paths between and in the graph :

Case 1.2.1. Causal path . In this case, given any vertex Z on this path different from and , this path can be blocked, implying that and are not adjacent in , which is a contradiction.

Case 1.2.2. Non-causal-path . Since , all parents of are in the set . Hence, conditioning on the parent of on this path can block this path, implying that and are not adjacent in , which is a contradiction.

Case 1.2.3. Non-causal-path . There must exist at least one v-structure in the path, and the path can be blocked given an empty set. Suppose the nearest v-structure to is ; then the collider Z in this v-structure cannot appear on any causal path from to ; otherwise, there would be a directed cycle in graph . Hence, and are not adjacent in , which is a contradiction.

Case 1.2.4. Non-causal-path . There must exist at least one v-structure in the path, and the path can be blocked given an empty set. However, different from Case 1.2.3, the colliders of the v-structures in this path may occur on some causal paths from to . According to Case 1.2.1, these vertices may need to be adjusted to block the causal paths. We use Figure A1 to make an illustration in detail. In Figure A1, without loss of generality, we suppose . If not, let the point in the path that is farthest from and belongs to the set be the new . In this case, in the non-causal path , all colliders are in the causal path , and . In order to block the causal path , it is necessary to adjust some vertices in , say . But at this time, the path cannot be blocked. In fact, even if all are adjusted, the non-causal-path still cannot be blocked.

Figure A1.

Illustration for Case 1.2.4.

In general, in Case 1, the edges in appear only when the situation in Case 1.2.4 (Figure A1) occurs. Then we have and . Note that Rules 1–3 of Meek’s rules only orient edges backward. In other words, in Case 1, no matter how the edges in are oriented, they do not affect the orientation of the edges between vertices in . For instance, when applying Rule 3 of Meek’s rules, as shown in Figure A2, if is the edge in due to Case 1.2.4, then the following hold:

- If , then because of the directed edge , we can obtain , implying that the new oriented edge is the directed edge out of the set , which does not affect the orientation of edges between vertices in .

- If , then because of the directed edge , we have . Note that if , then we have and . Since in Case 1.2.4, only paths between and cannot be blocked, and all such paths have an arrow pointing to . Hence, in the process of learning the graph , we have and , implying that a v-structure occurs in the graph before applying Meek’s rules, which is a contradiction. Hence, , implying that the newly oriented edge is the directed edge between vertices in the set , which does not affect the orientation of edges between vertices in .

Figure A2.

Rule 3 of Meek’s rules as an example to illustrate Case 1.

Other cases of Meek’s rules can be similarly proved. In fact, for Case 1 as shown in Figure A1, if there exists a vertex such that the edge between and W may be misoriented in due to the new edges in , we have ; otherwise, forms a v-structure and the edge can be oriented correctly in both and . And then, we have ; otherwise, can be oriented by v-structure and can be oriented correctly in both and by applying Rule 2 of Meek’s rules if the edge between and is directed and using Lemma 1 in [24] if the edge between and is undirected. Similarly, we have and . Note that the vertices and W form a triangle, implying that the edge between W and cannot be oriented by applying Meek’s rules to the edge between and in , which contradicts the assumption.

Case 2. .

Case 2.1. and . In this case, there are three possible paths between and in the graph :

Case 2.1.1. Non-causal-path . The specific discussion is similar to Case 1.2.3.

Case 2.1.2. Non-causal-path (or ). There must exist at least one v-structure in the path, and the path can be blocked given an empty set. Note that, different from Case 1.2.4, there is no causal path between and at this time, implying that the collider of the v-structure closest to (or ) will not be adjusted. Therefore, and are not adjacent in , which is a contradiction.

Case 2.1.3. Non-causal-path . Since some parents of or may not belong to the set , this path cannot be blocked. For example, in the fork , when , an edge in appears between and . Similar to the discussion at the end of Case 1, no matter how this edge is oriented, Meek’s rules are backward-oriented, so the orientation of this edge only happens inside the set and does not affect the orientation within the set .

Case 2.2. (the case of can be discussed similarly). In this case, there are four possible paths between and in the graph :

Case 2.2.1. Causal path . The discussion is the same as Case 1.2.1.

Case 2.2.2. Non-causal-path or . The discussion is the same as Case 1.2.3.

Case 2.2.3. Non-causal-path . The discussion is the same as Case 1.2.4.

Case 2.2.4. Non-causal-path . The discussion is the same as Case 2.1.3.

Case 2.3. This case is symmetric to Case 2.2 and can be discussed similarly.

We have proved that the edges in do not affect the skeleton or orientation of edges between ancestors of in . In fact, it is worth mentioning that, in the above proof, all discussions focus on graph , implying that the orientation of edges between vertices in is not affected by the new edges in . In other words, the ancestors of in the two graphs are the same, while the possible ancestors of in are also the possible ancestors of in , but not vice versa. □

Proof of Theorem 3.

We need to prove that for any , X is a direct cause of if and only if there is no subset such that . According to Theorem 1, the “only if” part holds obviously.

Now, we prove the “if” part. According to Theorem 1, for any , X is a direct cause of if and only if there is no subset such that . Hence, using the definition of in Algorithm 2, it suffices to prove that if we have , then for each non-direct-cause variable , there exists at least one separation set of V and that does not contain X. In fact, paths between V and can be divided into two types: paths that go through X and paths that do not go through X. For paths that do not go through X, the separation set naturally does not contain X. Then, for any path that goes through X, the path can be represented as , where has already been blocked by some variables in set . Therefore, whether the subpath is blocked or not, the path can be separated by a set that does not contain X. □

Proof of Theorem 4.

We first prove that Algorithm 3 can learn the PCD set of a target node T correctly. Similar to the definition of , let be the set of variables associated with T. As shown in Line 1 in Algorithm 3, the initial value of is , which is a subset of . In fact, if the non-PC set is empty, then we have . According to the Markov condition and the faithfulness assumption, for any , X is a parent or child of T if and only if there is no subset such that . Hence, contains all parents and children of T. For any nondescendant variable of T, the set of T’s parents separates them from T. Due to the lack of a separation set, some descendant variables of T may be included in , as shown in Example 3. Therefore, obtained by Algorithm 3 consists of T’s parents, children, and some descendants.

Now, we prove that Algorithm 4 can learn all causes of within a given distance m. First, according to the discussion following Example 3, Algorithm 4 can learn the PC set of each variable correctly by using Algorithm 3 and symmetric validation method (Lines 7–13 in Algorithm 4). In other words, Algorithm 4 can learn the skeleton of the local structure of each variable correctly. Next, note that once the skeleton of the local structure of a variable is determined, its separation sets from other variables are also obtained at the same time (Line 6 in Algorithm 4). Therefore, all v-structures can be learned correctly because they are determined by local structures and separation sets (Line 14 in Algorithm 4). Combined with Meek’s rules, Algorithm 4 learns the orientation of the local structure of each variable correctly. Finally, we show that continuing the algorithm cannot obtain more causes of within a distance m. Notice that we learn the local structure of nodes layer by layer, and we only learn the next layer after all the nodes of a certain layer have been learned (Line 15 in Algorithm 4). Hence, once Algorithm 4 is stopped, it means that all directed paths pointing to with a distance less than or equal to m have been found, and the m-th edge of these paths has been directed. As shown above, we can correctly obtain all edges and v-structures and their orientations. Hence, continuing the algorithm can only orient new edges that are farther away from (), which is not what we care about.

We have shown that Algorithm 4 correctly learns all causes of a given node within distance m of that node. Note that the distance between a functional dynamic target and each of its direct causes is always 1. Hence, if the input set is exactly the set of the target's direct causes obtained from Algorithm 2, and the node L in Line 2 of Algorithm 4 is exactly the functional dynamic target, then by Theorem 3 and the proof above, Algorithm 4 correctly learns all causes of the functional dynamic target within a given distance m. □
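The layer-by-layer expansion in the proof can be pictured as a breadth-first search over learned parent sets, starting from the target's direct causes at distance 1. The sketch below is a minimal illustration under the assumption that the oriented local structures are already available as a parent dictionary; `causes_within_distance` and the example graph are hypothetical and only mirror the stopping argument, not the paper's implementation.

```python
def causes_within_distance(parents, target, m):
    """Collect causes of `target` layer by layer, up to directed distance m.

    parents: dict node -> set of learned parents (hypothetical input, e.g. the
             oriented local structures). Layer 1 holds the target's direct causes.
    Returns a dict mapping each cause to the length of its shortest directed
    path into `target`.
    """
    dist = {}
    layer = set(parents.get(target, set()))  # direct causes: distance 1
    d = 1
    while layer and d <= m:
        for v in layer:
            dist[v] = d
        # The next layer is expanded only after the current layer is complete.
        nxt = set()
        for v in layer:
            nxt |= parents.get(v, set())
        layer = nxt - set(dist) - {target}
        d += 1
    return dist

# Hypothetical oriented structure: B -> A -> X1 -> Y and A -> X2 -> Y.
parents = {"Y": {"X1", "X2"}, "X1": {"A"}, "X2": {"A"}, "A": {"B"}, "B": set()}
```

With m = 2 the search stops before expanding B, which lies at distance 3; raising m to 3 adds B, matching the claim that further iterations only reach nodes farther than m from the target.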

References

- Karkach, A.S. Trajectories and models of individual growth. Demogr. Res. 2006, 15, 347–400.
- Richards, F.J. A flexible growth function for empirical use. J. Exp. Bot. 1959, 10, 290–301.
- Zimmerman, D.L.; Núñez-Antón, V. Parametric modelling of growth curve data: An overview. Test 2001, 10, 1–73.
- Murre, J.M.; Chessa, A.G. Power laws from individual differences in learning and forgetting: Mathematical analyses. Psychon. Bull. Rev. 2011, 18, 592–597.
- Wixted, J.T. On Common Ground: Jost’s (1897) law of forgetting and Ribot’s (1881) law of retrograde amnesia. Psychol. Rev. 2004, 111, 864–879.
- Sachs, K.; Perez, O.; Pe’er, D.; Lauffenburger, D.A.; Nolan, G.P. Causal protein-signaling networks derived from multiparameter single-cell data. Science 2005, 308, 523–529.
- Pearl, J. Causality: Models, Reasoning and Inference, 2nd ed.; Cambridge University Press: Cambridge, UK, 2009.
- Han, B.; Park, M.; Chen, X.W. A Markov blanket-based method for detecting causal SNPs in GWAS. BMC Bioinform. 2010, 11, S5.
- Duren, Z.; Wang, Y. A systematic method to identify modulation of transcriptional regulation via chromatin activity reveals regulatory network during mESC differentiation. Sci. Rep. 2016, 6, 22656.
- Heckman, J.J. Comment on “Identification of causal effects using instrumental variables”. J. Am. Stat. Assoc. 1996, 91, 459–462.
- Winship, C.; Morgan, S.L. The estimation of causal effects from observational data. Annu. Rev. Sociol. 1999, 25, 659–706.
- Yin, J.; Zhou, Y.; Wang, C.; He, P.; Zheng, C.; Geng, Z. Partial Orientation and Local Structural Learning of Causal Networks for Prediction. In Proceedings of the Causation and Prediction Challenge at WCCI, Hong Kong, China, 1–6 June 2008; pp. 93–105.
- Wang, C.; Zhou, Y.; Zhao, Q.; Geng, Z. Discovering and Orienting the Edges Connected to a Target Variable in a DAG via a Sequential Local Learning Approach. Comput. Stat. Data Anal. 2014, 77, 252–266.
- Pena, J.M.; Nilsson, R.; Bjorkegren, J.; Tegner, J. Towards Scalable and Data Efficient Learning of Markov Boundaries. Int. J. Approx. Reason. 2007, 45, 211–232.
- Gao, T.; Ji, Q. Efficient Markov Blanket Discovery and Its Application. IEEE Trans. Cybern. 2017, 47, 1169–1179.
- Wang, H.; Ling, Z.; Yu, K.; Wu, X. Towards Efficient and Effective Discovery of Markov Blankets for Feature Selection. Inf. Sci. 2020, 509, 227–242.
- Gao, T.; Ji, Q. Local Causal Discovery of Direct Causes and Effects. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 2512–2520.
- Ling, Z.; Yu, K.; Wang, H.; Liu, L.; Li, J. Any Part of Bayesian Network Structure Learning. IEEE Trans. Neural Netw. Learn. Syst. 2019, 14, 1–14.
- Yu, L.; Liu, H. Efficient Feature Selection via Analysis of Relevance and Redundancy. J. Mach. Learn. Res. 2004, 5, 1205–1224.
- Pearl, J.; Shafer, G. Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference; Morgan Kaufmann: San Mateo, CA, USA, 1988.
- Verma, T.; Pearl, J. Equivalence and synthesis of causal models. In Proceedings of the 6th Conference on Uncertainty in Artificial Intelligence, Cambridge, MA, USA, 27–29 July 1990; pp. 220–227.
- Pearl, J.; Geiger, D.; Verma, T. Conditional independence and its representations. Kybernetika 1989, 25, 33–44.
- Andersson, S.A.; Madigan, D.; Perlman, M.D. A characterization of Markov equivalence classes for acyclic digraphs. Ann. Stat. 1997, 25, 505–541.
- Meek, C. Causal inference and causal explanation with background knowledge. In Proceedings of the Eleventh Conference on Uncertainty in Artificial Intelligence, Montreal, QC, Canada, 18–20 August 1995; pp. 403–410.
- Fekedulegn, D.; Mac Siúrtáin, M.P.; Colbert, J.J. Parameter estimation of nonlinear models in forestry. Silva Fenn. 1999, 33, 327–336.
- Grossman, M.; Koops, W. Multiphasic analysis of growth curves in chickens. Poult. Sci. 1988, 67, 33–42.
- Xu, M.J.; Zhu, L.B.; Zhou, S.; Ye, C.G.; Mao, M.X.; Sun, K.; Su, L.D.; Pan, X.H.; Zhang, H.X.; Huang, S.G.; et al. A computational framework for mapping the timing of vegetative phase change. New Phytol. 2016, 211, 750–760.
- Spirtes, P.; Glymour, C. An algorithm for fast recovery of sparse causal graphs. Soc. Sci. Comput. Rev. 1991, 9, 62–72.
- Chickering, D.M. Optimal structure identification with greedy search. J. Mach. Learn. Res. 2002, 3, 507–554.
- West, D. Introduction to Graph Theory; Prentice Hall: Upper Saddle River, NJ, USA, 1996.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).