A Broken Duet: Multistable Dynamics in Dyadic Interactions

Abstract

1. Introduction

2. Dyadic Exchanges and Bayesian Inference

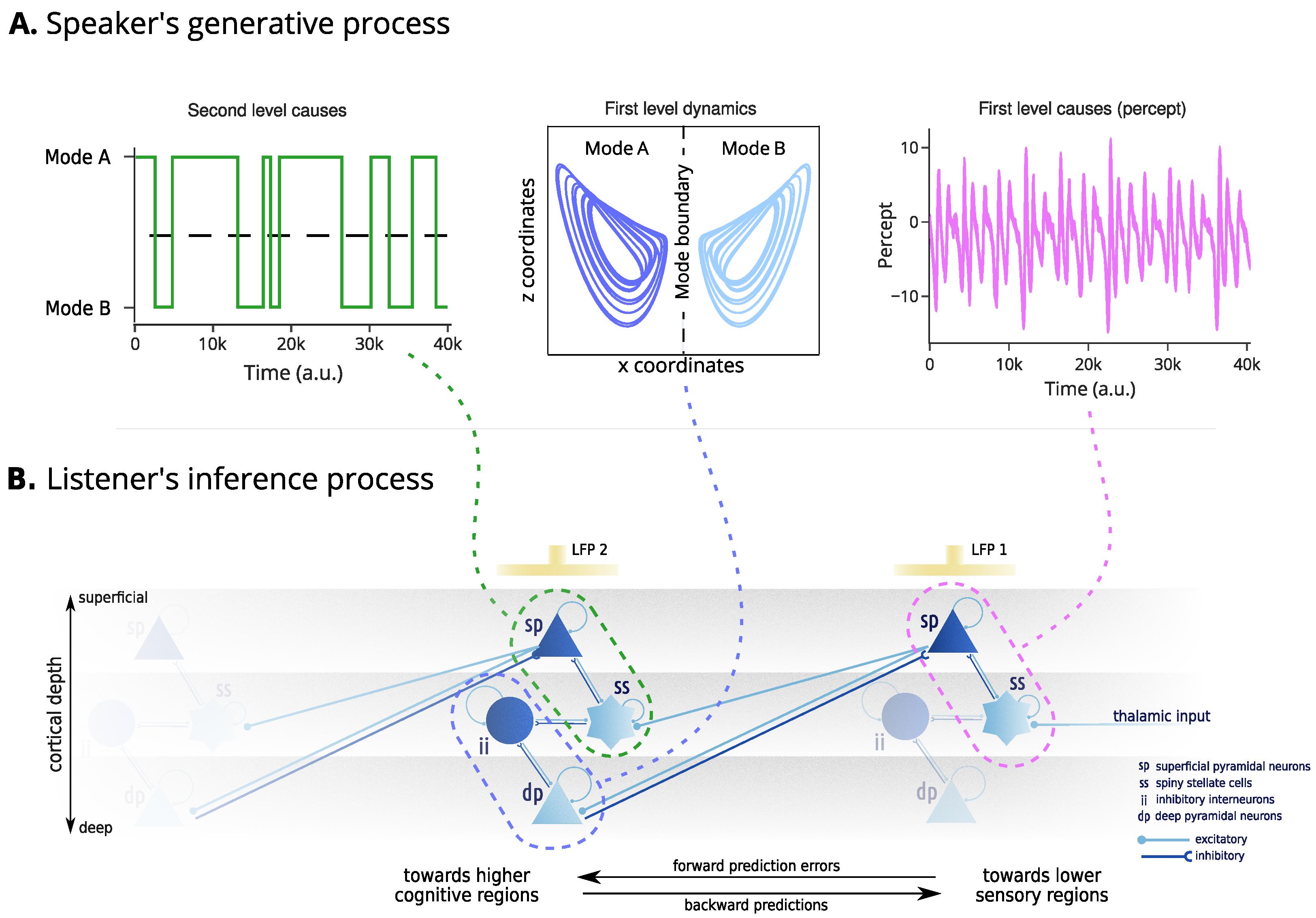

2.1. Hierarchical Processing of Speech

2.2. Predictive Coding and Dyadic Exchanges

2.3. Communication between Native and Non-Native Speakers

3. Methods

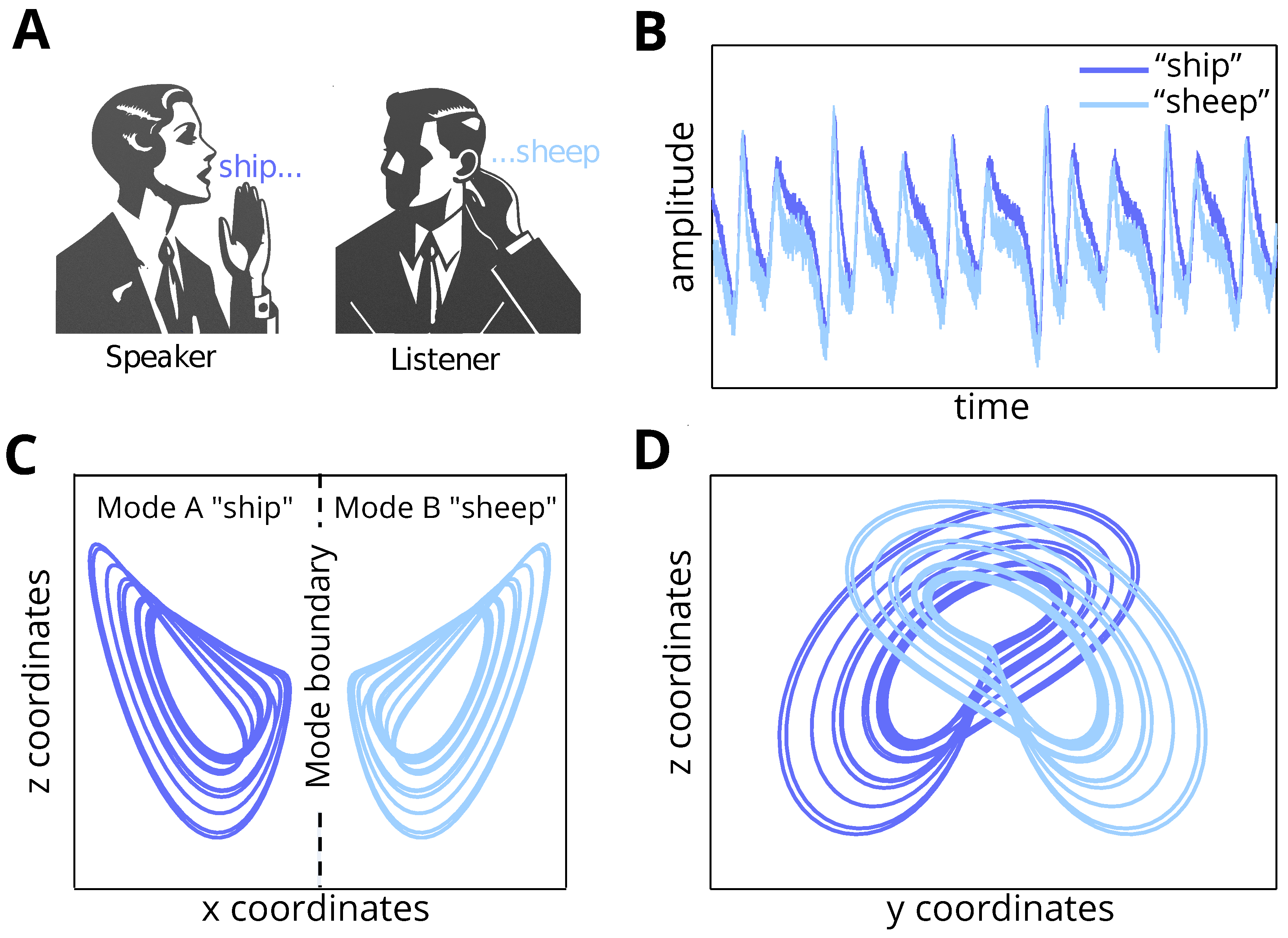

3.1. Bistable Internal Dynamics

3.2. Ambiguous Outcome

3.3. Relevance for Dyadic Exchanges

3.4. Inversion Scheme

3.5. Modelling In Silico Evoked Responses

4. Results

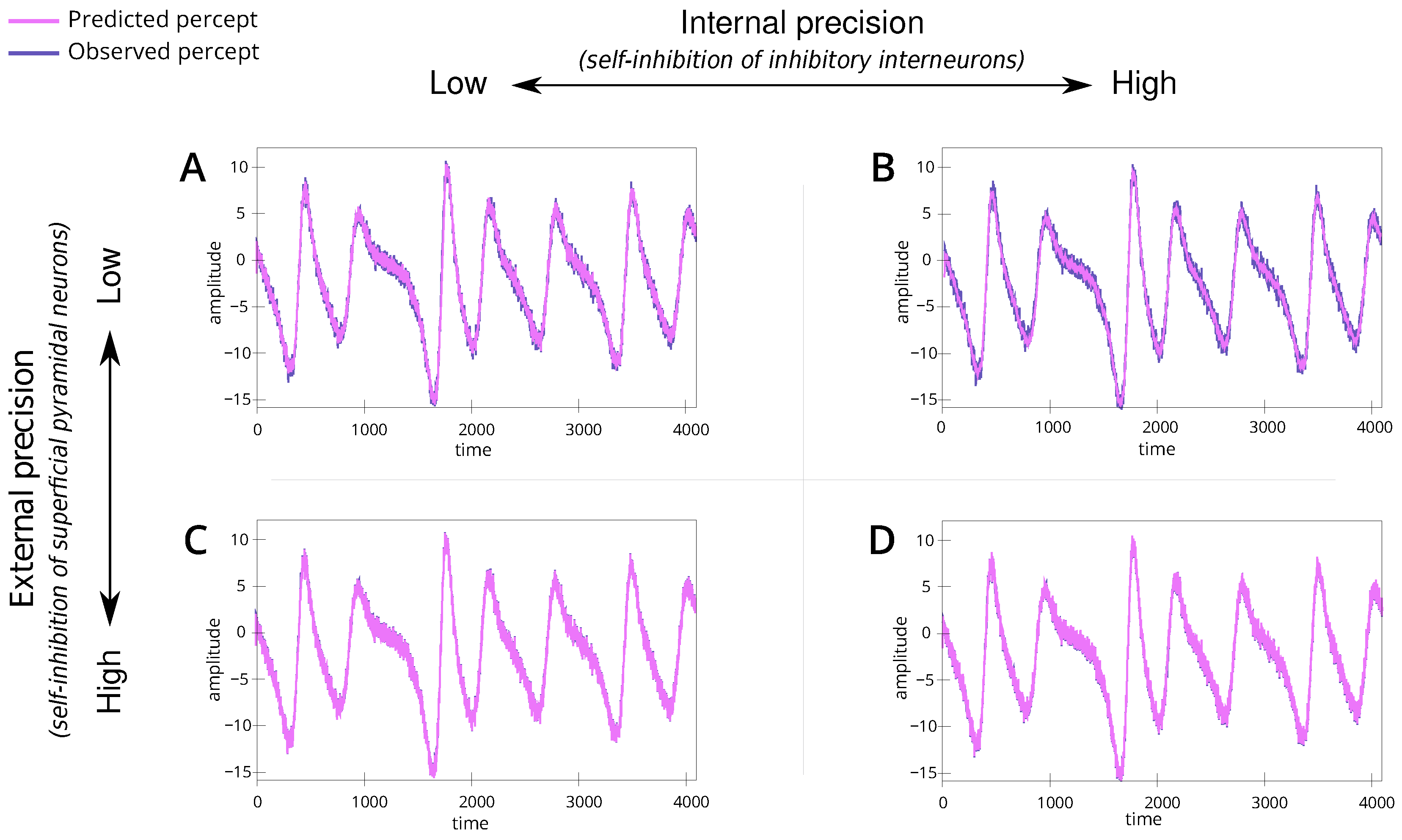

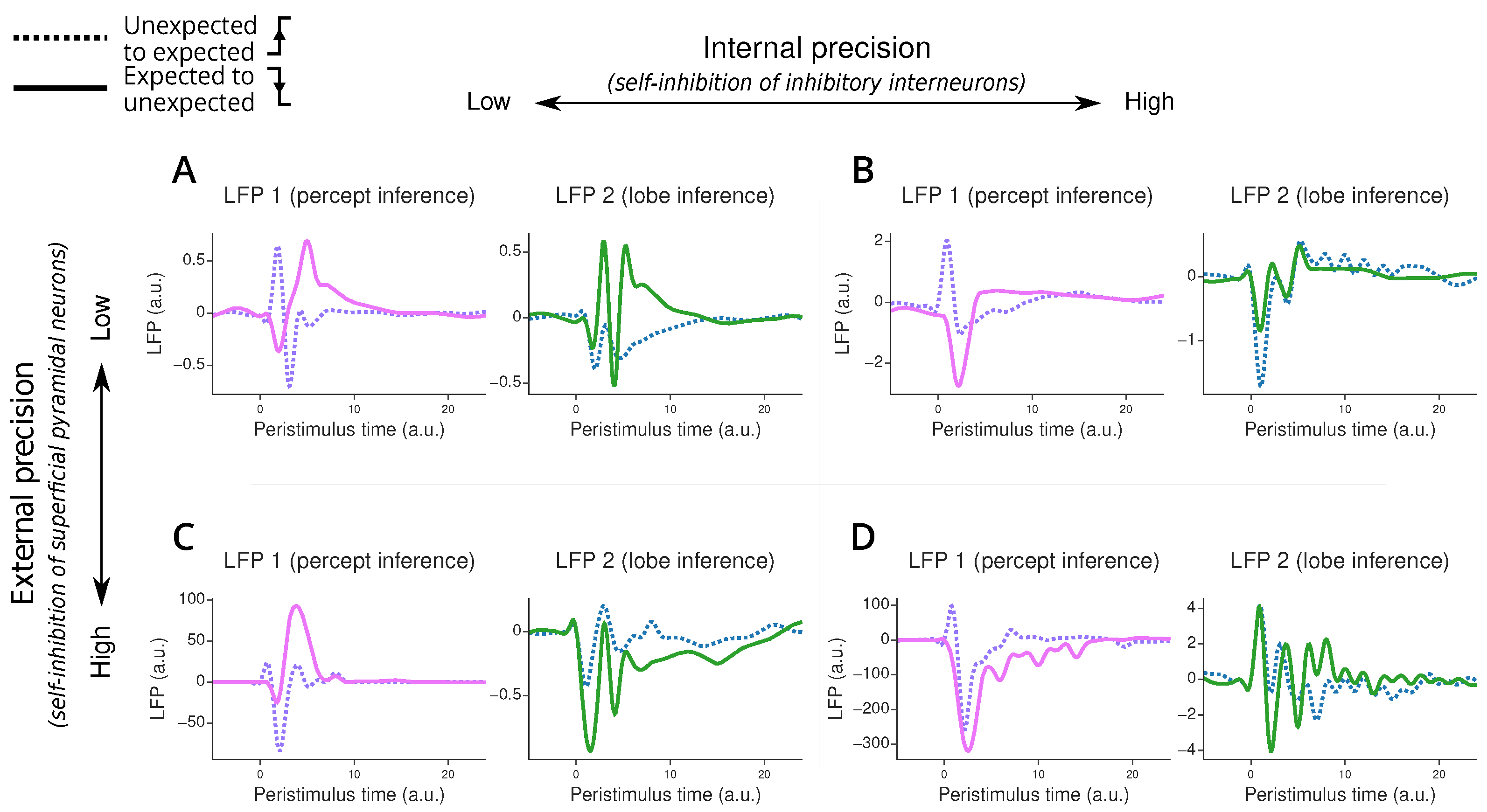

4.1. Low Internal and External Precision

4.2. High Internal Precision with Low External Precision

4.3. Low Internal Precision with High External Precision

4.4. High Internal and External Precision

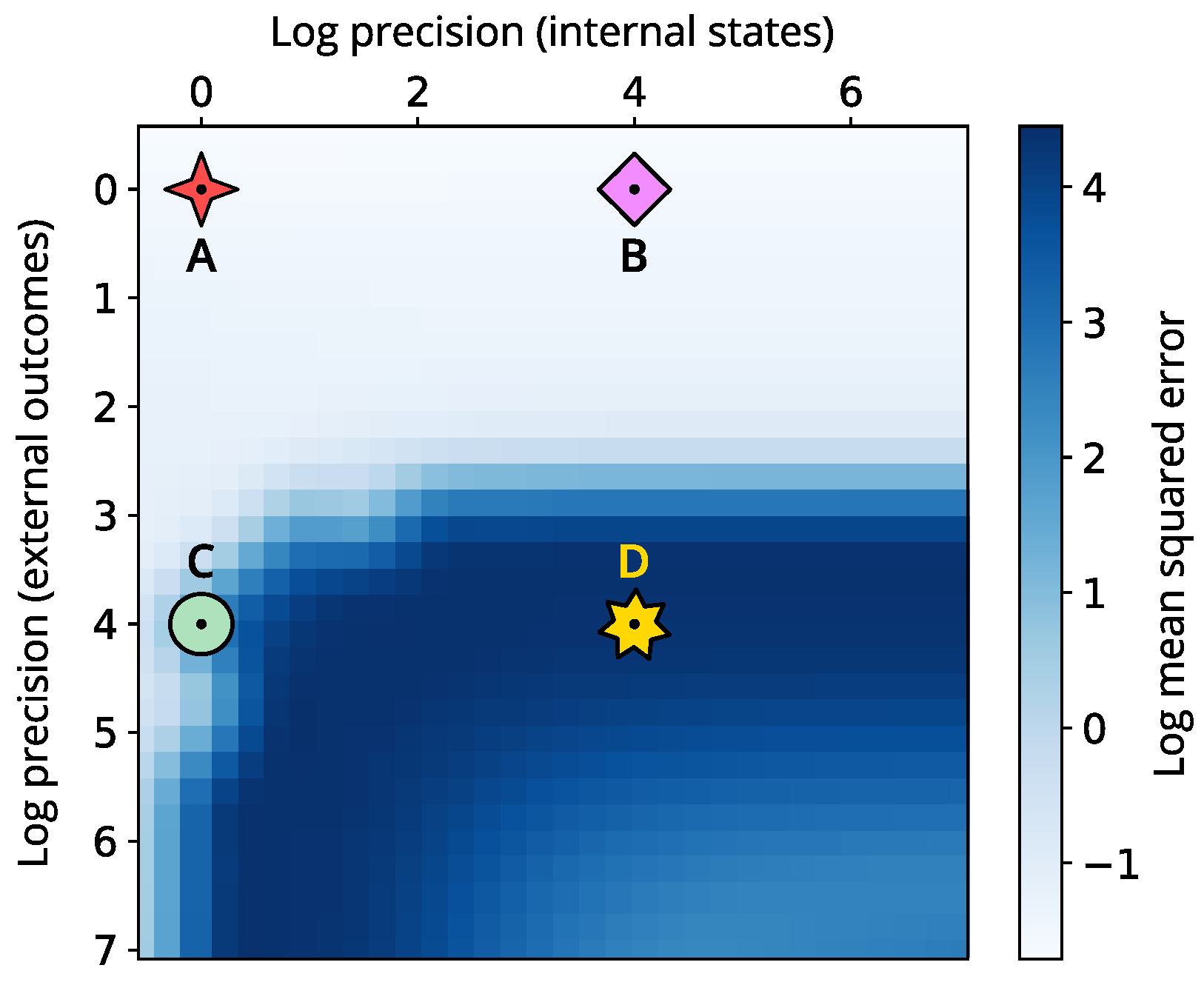

4.5. Trade-Off between Internal and External Precision

4.6. Simulated In Silico Evoked Responses

5. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Simulation | Internal Precision | External Precision |
|---|---|---|
| A | | |
| B | | |
| C | | |
| D | | |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Medrano, J.; Sajid, N. A Broken Duet: Multistable Dynamics in Dyadic Interactions. Entropy 2024, 26, 731. https://doi.org/10.3390/e26090731
