Feasibility of a Personal Neuromorphic Emulation

Abstract

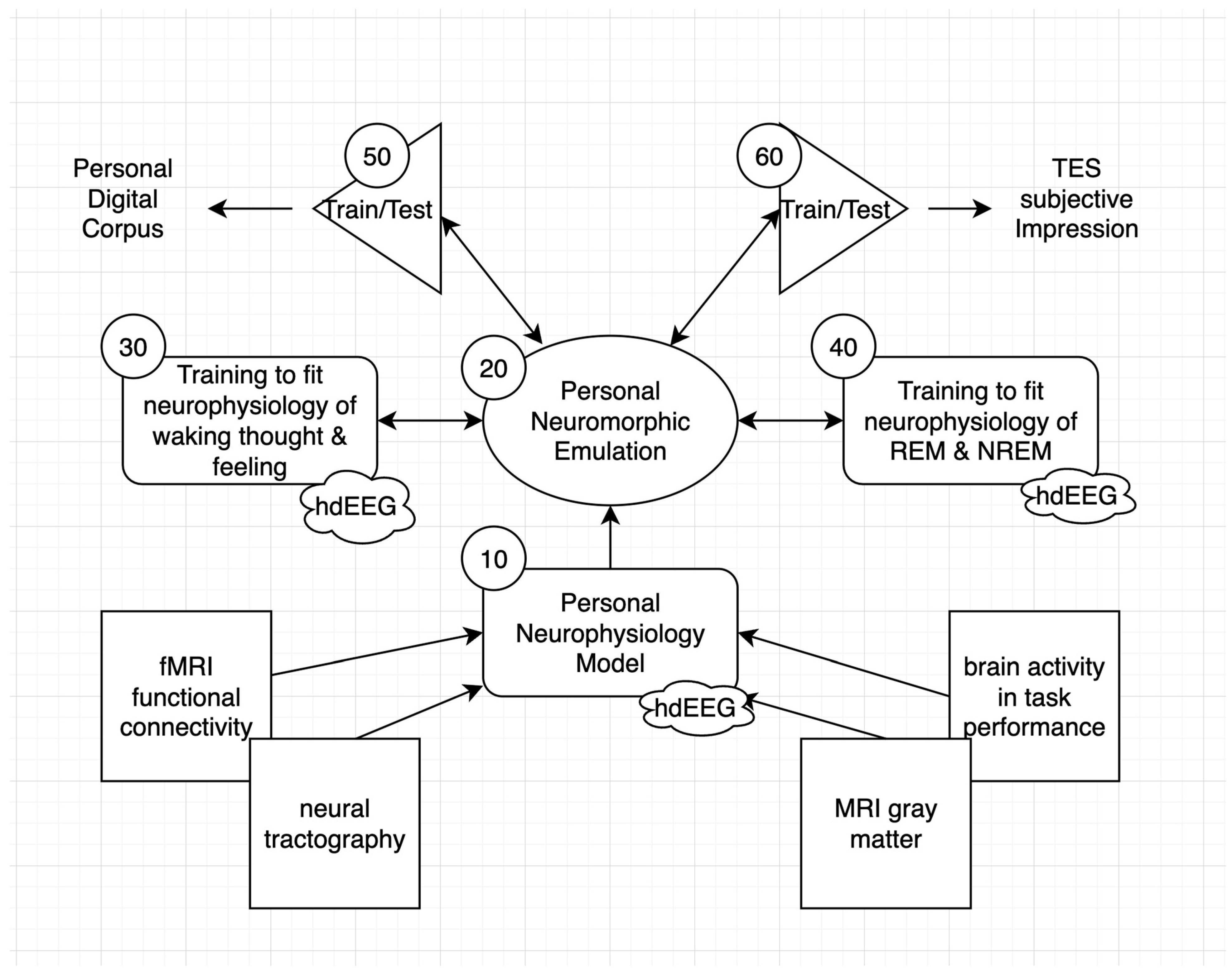

1. Introduction

2. Formulating Principles on the Physical, Informatic Basis of Intelligence

2.1. Overview of the Principles

2.2. The Identity of Mind and Developing the Brain Anatomy

2.3. The Informatic Basis of Organisms Is Computable

2.4. Inferring the Weights of a Mortal Computer

2.5. Hypothesis: Active Inference Is Consolidated in Sleep and Dreams

3. Observing and Emulating the Consolidation of Experience

3.1. Inferring Neural Connection Weights through Very High-Definition EEG

3.2. A Personal Neuromorphic Interface to Evolving AI Architectures

3.3. Phenomenology of the AI Interface: Is This Still Me?

4. Consolidating Experience through Active Inference

4.1. Growing a Mind through Active Inference

4.2. The Limbic Base of Adaptive Bayes

4.3. The Phenomenology of Active Inference

4.4. The Differential Precision of Variational Bayesian Inference

4.5. The Challenge of Neural Self-Regulation through Vertical Integration

4.6. Self-Regulation of Active Inference and the Predictable Consciousness of a Good Self-Regulator

4.7. Limitations

4.8. Conclusions: Human Experience Is Computable, and the Self Is Implicit in Our Dreams

Author Contributions

Funding

Conflicts of Interest

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tucker, D.M.; Luu, P. Feasibility of a Personal Neuromorphic Emulation. Entropy 2024, 26, 759. https://doi.org/10.3390/e26090759
