Abstract

Purpose in systems is considered to be beyond the purview of science since it is thought to be intrinsically personal. However, just as Claude Shannon was able to define an impersonal measure of information, so we formally define the (impersonal) ‘entropic purpose’ of an information system (using the theoretical apparatus of Quantitative Geometrical Thermodynamics) as the line integral of an entropic “purposive” Lagrangian defined in hyperbolic space across the complex temporal plane. We verify that this Lagrangian is well-formed: it has the appropriate variational (Euler-Lagrange) behaviour. We also discuss the teleological characteristics of such variational behaviour (featuring both thermodynamically reversible and irreversible temporal measures), so that a “Principle of Least (entropic) Purpose” can be adduced for any information-producing system. We show that entropic purpose is (approximately) identified with the information created by the system: an empirically measurable quantity. Exploiting the relationship between the entropy production of a system and its energy Hamiltonian, we also show how Landauer’s principle also applies to the creation of information; any purposive system that creates information will also dissipate energy. Finally, we discuss how ‘entropic purpose’ might be applied in artificial intelligence contexts (where degrees of system ‘aliveness’ need to be assessed), and in cybersecurity (where this metric for ‘entropic purpose’ might be exploited to help distinguish between people and bots).

1. Introduction

1.1. Entropic Purpose and Complex Time

This paper will show that a consistent technical definition may be made of a quantity that, because it is defined entirely in time, may be considered an attenuated form of purpose. We will call this quantity “entropic purpose”. It is necessary to emphasise that this narrow definition of “entropic purpose” is a scientific and impersonal quantity, entirely devoid of the philosophical properties of the purposes that dominate human affairs. It is true that there exist teleological implications of this scientific development which will be discussed, at length and separately, in an Appendix. But we note that the Principle of Least Action (which underpins much of the physical description of the universe and is elegantly described by Jennifer Coopersmith [1]) has teleological overtones (see Michael Stöltzner, 2003 [2]) yet is still unquestioningly considered to be rigorously scientific in nature.

What is additionally required for our new technical definition of entropic purpose is the systematic complexification of time (as well as space) that was demonstrated in 2023 (by Parker & Jeynes, PJ23 [3]). This demonstration shows that complex time may coherently be thought to exist, a conclusion that has also been reached independently by others, as we will discuss.

1.2. Life and Artificial Intelligence

Raymond and Denis Noble [4] have asserted recently that, ”Agency and purposeful action is a defining property of all living systems,” (p. 47). This summarises the massive recent work in molecular biology and genetics that they discuss.

In the rapidly evolving landscape created by the transformative application of Artificial Intelligence (AI) techniques across a diverse set of domains, not only has the efficiency of complex systems been enhanced, but also the way has been paved for innovative engineering solutions to otherwise intractable problems (see for example Joksimovic et al. 2023 [5]). AI is therefore not just a powerful theoretical and practical tool in current engineering problems but is also now becoming a driving force shaping future technological advances.

One intriguing AI application investigates artificial life, seeking to emulate the performance of living systems and employing AI algorithms in an attempt to replicate biological processes (see for example Chan, 2019 [6]). The synergy of AI and artificial life investigations not only enriches our understanding of the complexities of life but also fuels the development of innovative technologies with the capability of profoundly changing the way we live our lives. The fusion of AI and life sciences (including molecular biology and biochemistry) could even offer new scientific insights into the origin of life studies (perhaps, see Yampolskiy 2017 [7]).

As society becomes increasingly reliant on interconnected digital communications, information, and computational systems, the importance of securing these networks against evolving threats is also becoming ever more critical. AI is emerging as a pivotal technology to help address the challenges posed by cybersecurity, and offering sophisticated solutions that adapt to the dynamic nature of cyber threats. The application of AI in security measures involves predictive analysis, anomaly detection, and intelligent response mechanisms, contributing to the creation of resilient and adaptive defence systems. In this context, one key aspect of identification and authentication is the ability to distinguish between real people (personalities) and the multiplicity of bots that attempt to impersonate and replicate the functions of actual people. Were it available, the ability to distinguish between animate and inanimate entities would be considered an important weapon in the arsenal of cybersecurity.

It is in this context that the work presented here offers an impersonal approach to measuring what we call the “entropic purpose” of a system. It is our expectation that the “entropic purpose” metric presented in this paper will be appropriate to biological and social applications since our ‘narrow’ definition allows it to be grounded in a mathematically rigorous and physical description appropriate for scientific application.

It is interesting to note that previous attempts to define the fundamental attributes of life have suggested various characteristics, including the following: cellular organisation; response to stimuli; growth; reproduction; metabolism; homeostasis; evolutionary adaptation; and heredity (see for example, Trifonov 2011 [8]). It is immediately obvious that purpose is currently (in general) not considered one of the attributes of life, perhaps because of the assumption that purpose cannot be considered a physically describable characteristic, being intrinsically metaphysical in nature and therefore not amenable to scientific methods of enquiry. However, everyone knows that living things have purposes (see [4] for example), and since living things are both real and physical then physics must be capable of describing these purposes.

1.3. Aristotelian Teleology

In this paper, we use “metaphysical” literally (not pejoratively, and not meaning “ontological”): that is, indicating “the metanarrative of physics” (with the broad Aristotelian meaning of physics: “pertaining to the natural world”). Jeynes et al. (2023) [9] have demonstrated the necessary existence of a metaphysical context of any physical discussion: although drawing attention to metaphysics is not currently considered scientifically proper, it must be acknowledged that every physical discussion is embedded in a metaphysical context.

“Purpose” is central to the Aristotelian physics that was overturned in the 17th century by Newtonian (mechanistic) physics: since then, any appeal to “purpose” has been regarded as scientifically illegitimate (with good reason!). However, purposes are manifestly a characteristic of life in general, and human activities in particular, so it seems perverse to deny their existence. It should be pointed out that many strands of research are pointing to a (partial) rehabilitation of Aristotelian ideas.

In the Graphical Abstract, Aristotle is looking at a Nautilus shell, obtained from the Indian Ocean. Surprisingly, we know he discussed this creature since it is documented in his “Historia animalium” (see von Lieven & Humar, 2008 [10]). Nautilus is of specific interest to us since it embodies the logarithmic spiral (on which see §2.4) and it is perhaps not surprising that the geometrical entropy description of such a (double) logarithmic spiral structure shares key mathematical features with the “purposive Lagrangian” (employed in this paper) that is associated with the creation of information. Although not the subject of this paper, there appears to be a deep and intriguing connection between the structural form of a living organism and its (entropic) purposeful function.

1.4. Shannon Information

When Claude Shannon developed his mathematical theory of information (1948 [11]), his fundamental underlying assumption was that such (essentially semantic) information already existed. Somewhat unexpectedly at the time (and his papers were immediately recognised as a critically important breakthrough), he was also able to show that such information could, in effect, be de-personalised and defined in a “scientific” (essentially syntactic) manner: the Shannon metric (known as “Shannon information” or “Shannon entropy”). In his studies, Shannon was interested in the engineering issues of the quantification of information and the conditions under which information can be faithfully transferred from one location in spacetime to another. In particular, Shannon defined the basis for a high-fidelity communications channel (consisting of a transmitter and a receiver, with a noisy channel in between), together with the conditions by which information at the transmitter end can be perfectly conveyed to the receiver end. It is clear that, ultimately, any such information must be created by a person who wishes to send it to another person able (and willing) to receive it: the information presupposes metaphysical purposes, but these purposes are irrelevant both to ours and to Shannon’s: his eponymous information was defined (impersonally) for the purposes of communications engineers, and our entropic purpose is defined (impersonally) for more general physical ends.

It is well known that information and noise have the same physical properties: both are intrinsically acausal (unpredictable and non-deterministic, see Hermann Haus, 2000 [12]). The key distinction between a string of noisy bits and a string of information bits is whether an algorithm exists (particularly at the receive end of a communications channel) to decode the received string. The existence of an algorithm (to first encode and then decode the information, making it robust against noise) bespeaks the existence of an intelligent (living) entity; but we are not here interested in intelligence per se, nor in its associated purposes.

1.5. Entropic Purpose

It is the aim of this paper to construct an impersonal (and therefore restricted) description of “entropic purpose”, in much the same way that a (restricted) concept of “information” was described in an impersonal way by Shannon. In effect, we will define an “entropic purpose” as being equivalent to the creation of such (Shannon) information, proving that any entity that creates (Shannon) information thereby exhibits entropic purpose.

The formalism presented here is based on the Quantitative Geometrical Thermodynamics of Parker and Jeynes (2019) [13]: the “prior art” is now very extensive and is summarised in §2, where the work itself is found in §3 with proofs of important relations given separately in Appendix A and Appendix B.

1.6. Teleonomy

Teleonomy is a neologism of Colin Pittendrigh of 1958, defined as “evolved biological purposiveness” by Corning et al. (2023) [14]. It is a circumlocution by the biologists to avoid using the term Teleology, which was thought to have too many Aristotelian overtones. Here, we intend to show how the teleological behaviour of systems (obvious in living systems) can be adequately represented in a valid physical account, thus making the term teleonomy redundant. These wider issues (not strictly scientific ones, although much science is discussed) are addressed more conveniently and at useful length in Appendix C.

1.7. Non-Technical Overview

This paper establishes the non-trivial result that a purposive Lagrangian exists. Roger Penrose, in his comprehensive work “The Road to Reality” (2004) [15], has a section entitled “The magical Lagrangian formalism” (his §20.1) in which he gives a beautiful account of the far-reaching power of this powerful mathematical framework. Of course, the difficulty is that constructing a Lagrangian for any given system is typically hard to do (the easy cases for ideal situations are rather rare). But here we give a “purposive Lagrangian” (as an example of our mathematical analysis) to act as a ‘template’ function. This may be adapted to the specific application in view: whether it be biological function, an emergent phenomenon due to the actions of multiple living organisms; the behaviour of a living entity as it goes about its purposeful activities (such as foraging for food); or indeed information creation due to some non-living (such as AI-based) system process.

However, we also show that the entropic purpose of a system (obtained from the Lagrangian) is essentially the same as the information created (not noise) by the system. That is, the physical measure of the entropic purpose of any system is simply the new information created by that system. Therefore, it is not actually necessary to construct the appropriate Lagrangian in order to calculate the entropic purpose. That is to say, if we can study a biological process (for example a foraging strategy) and calculate the information that is generated as the organism collects and processes sensory data (used to inform its decisions), then we will have also calculated the entropic purpose being exhibited by that organism. Thus, observation of the external phenomenological behaviour of the organism allows us to make a calculation of its entropic purpose without the need for knowing the underlying purposive Lagrangian that might be underpinning its actions: we can therefore acquire a quantitative evaluation in addition to the qualitative assessment of its ‘purposive behaviour’. It is true that we only treat the idealised case (in which noise is ignored or assumed zero) but we expect further work to show that the conclusion is generally valid (at least approximately).

The paper is quite long and technically difficult because the result that an impersonal entropic purpose can be mathematically defined is a radical surprise (going against deeply ingrained scientific assumptions), but rests on substantial previous mathematical works, while also requiring certain detailed technical criteria be established (Appendix A and Appendix B).

However, Appendix C considers a wide range of (mostly) recent work pointing to those same conclusions for which we now provide a solid physical basis. In particular, in Appendix C §C.1, we discuss how Samuel Butler (1835–1902) was convinced that “life is matter that chooses”: that is, the “entropic purpose” of an organism can be measured by its choices (effectively the information it generates). Similarly, in Appendix C §C.3, we discuss new observations of foraging birds (gannets and albatrosses) detailing how they choose their path to where the food is. Such choices are literally a matter of life and death.

The use of the word ‘path’ in the context of entropic purpose is intentional here since the key mathematical object employed in this paper is the line-integral of the purposive Lagrangian along a trajectory across the complex temporal plane. There is a phase associated with each incremental ‘infinitesimal purpose’ along the path of such a purposive trajectory, whose contributions to the overall integration are destructive or constructive according to the value of the phase at each point, such that the overall value of the integral is not a trivial result. Indeed, the purposive Lagrangian satisfies the Euler-Lagrange equation of variational calculus, so that there are an infinity of potential paths that a system could possibly follow, each associated with an overall entropic purpose. Yet, according to the teleological characteristic of the purposive Lagrangian, the actual path adopted by the system is the one that minimises the entropic purpose and its associated information creation. We see here the Principle of Least (entropic) Purpose, such that a biological organism or purposive system exhibits an economy of behaviour, requiring the least amount of created information (that is, expending the least intellectual effort—an alternate rendering of the principle of Occam’s Razor) as it chooses to adopt a particular line of action or strategy in its attempt to achieve a desired objective (the least entropic purpose).

Interestingly, the purposive “line integral” is also an expression of a “non-local” constraint on the system, representing a “holistic” rather than a “reductionist” approach to finding how the system evolves (according to the variational calculus as exemplified by the Euler-Lagrange equation). Therefore, the purposive metric presented here implicitly describes (and is underpinned by) a highly complex and sophisticated mathematical mechanism with powerful analytical properties (although in a simple view it also merely represents the amount of information created by a system).

The reader may suspect that entropic purpose has something to do with consciousness, on the admittedly plausible grounds that our purposes are inextricably linked with our consciousness, and information is generated by conscious systems. There is enormous current interest in trying to express consciousness as the creation of information and its processing in complex neural systems. However, the present work sidesteps questions of consciousness by insisting that the purpose in view (entropic purpose) is entirely impersonal. These issues are addressed more fully in Appendix D.

However, by highlighting here the intuitively attractive idea that consciousness is closely related to the creation of information, we prove in this paper that as a physical phenomenon, information creation has a mathematical description that is both teleological (future-orientated) in nature and also obeys an underlying variational principle. That is to say, this variational principle (the principle of least entropic purpose) also implies the existence of deep symmetry relationships (and conservation laws, due to Noether’s theorem) within the broader concept of information creation. None of these critically important technical aspects associated with the creation of information have so far been discussed in the context of consciousness studies. Although this paper does not discuss these issues directly, we are now setting the scene in allowing consciousness research studies to be discussed more quantitatively within the context of these important mathematical principles, which are already known to underpin most (if not all) physical phenomena. It is in the context of these powerful principles that our paper breaks new ground in the literature of consciousness studies.

2. Technical Background

2.1. Holomorphism and the Lagrangian-Hamiltonian Representation

Parker and Walker (2004 [16]) have shown that a holomorphic function (a complex function analytic everywhere) cannot carry Shannon information, whereas a meromorphic function (piece-wise holomorphic) has poles with non-zero Cauchy residues and can carry information. Parker and Walker (2010) [17] have also considered the simplest case of a single pole (“point of non-analyticity”) in a restricted spacetime and shown that it obeys the Paley-Wiener [18] criterion for causality. Using geometric algebra methods [19], Parker and Jeynes (PJ19 Appendix A [13]) formally generalise Parker and Walker’s 2010 treatment to Minkowski 4-space.

Quantitative Geometrical Thermodynamics (QGT: PJ19 [13]) systematically uses the well-known “elegant unifying picture” (Ch.20 in Penrose 2004 [15]) of the Lagrangian-Hamiltonian formulation, which Penrose explains in some detail saying that it leads to a “mathematical structure of imposing splendour”. In particular, QGT sets up an entropic Lagrangian-Hamiltonian system, proving that the required canonical equations of state are satisfied (see Equation (11) passim of PJ19 [13]).

Moreover, using this system, Parker and Jeynes (2023) [3] elegantly prove an equivalence between energy (represented by the Hamiltonian) and entropy production, using a systematic complexification of the formalism (including complexifying time itself), noting that, like energy, entropy production is a Noether-conserved quantity (proved by Parker and Jeynes, 2021 [20]). This is a startling development since it explicitly mixes up (or indeed, unifies) the reversible (where entropy production is identically zero) and the irreversible (with non-zero entropy production).

We will rely on this Hamiltonian-Lagrangian formulation, in particular setting up a “purposive Lagrangian” to enable the definition and discussion of “entropic purpose”. This is despite Roger Penrose saying, following a detailed technical discussion of the limitations of Lagrangians, “I remain uneasy about relying on [Lagrangians] too strongly in our search for improved fundamental physical theories” (ibid. §20.6, p. 491).

In classical mechanics, the Lagrangian represents a balance between potential and kinetic energy, and the Least Action is a minimisation of a temporal line integral along the Lagrangian. Such variational Principles are recognised as fundamental in physics: “Least Action” and “Maximum Entropy” have long been recognised, and “Least Exertion” was proved by Parker and Jeynes in 2019 [13], who also proved it to be equivalent to Jaynes’ “Maximum Caliber” (1980 [21], see Parker & Jeynes 2023 [22]). We should point out that although the entropic Lagrangian is in effect an entropic “balance” between the potential and kinetic entropies, this balance is not exact as in the case of classical mechanics. The situation is even more complicated for quantum mechanics: Paul Dirac addressed the issue in 1933 [23] and Richard Feynman famously took it up in his thesis [24] (see discussion by Hari Dass [25]). But note that Feynman explicitly says that his analysis is non-relativistic throughout, and Penrose points out (ibid. §20.6, p. 489) that “strictly speaking”, Lagrangian methods “do not work” for relativistic fields. However, the present QGT treatment is relativistic in principle [20].

2.2. Shannon Information and Info-Entropy

The impersonal definition of ‘Entropic Purpose’ that we will propose here relies on the mathematical and physical properties of the Shannon information, which is based on the mathematical functional object ρlnρ, where ρ is the probability distribution |Ψ|2 of a (complex) meromorphic wavefunction Ψ in Minkowski spacetime. The basic functional equations are given by Parker and Walker (2010, Equations (2) and (3) [17]) for the Shannon entropy S and the Shannon information I, and they give an integral over space x:

and a corresponding integral over time t:

where ρ is a meromorphic function with a simple pole at x + it (i ≡ √−1 as usual), the Boltzmann constant kB is the quantum of entropy, and c is the speed of light. We generalise to natural logarithms and apply the proper metric [+++−] to the space/time co-ordinates (the Shannon information is imaginary compared to the Shannon entropy). Parker and Walker (2014) [26] also proved that a system in thermal equilibrium cannot produce Shannon information.

The logarithmic relations on the RHS of Equations (1a) and (1b) is a signature of hyperbolic spacetime which is also the theatre of QGT’s Hamiltonian-Lagrangian framework. Within the framework of QGT, Parker and Jeynes [13] also define the quantity info-entropy f of a system, proving that f is holomorphic:

where “holomorphic” means that the appropriate canonical spacetime-based Cauchy-Riemann relations are satisfied (see Annex to Appendix B of PJ19 [13]).

Considering a system where the geometric variation in 3D space occurs in a transverse plane described by the x1 and x2 co-ordinates along an axial co-ordinate x3, then the respective information and entropy of the system are simply the logarithms of its Euclidean co-ordinates [13], which can be written (somewhat informally):

The info-entropy f of such a x3-axial system is given by:

where the logarithm argument is now formally correct, being explicitly dimensionless.

For a system comprising a double-helical geometry in 3D space, which is parametrically represented by the transverse co-ordinates x1 and x2 such that x1 = Rcos(κx3) and x2 = Rsin(κx3); the two helices are coupled by a pair of differential equations, where x3 represents the axial co-ordinate of the system and κ ≡ 2π/λ is the wavenumber of the double-helix with λ its pitch and R its radius. Then we have:

where the prime symbol indicates differentiation with respect to the spatial x3 axial co-ordinate. It is clear that using the coupled equations Equations (5a) and (5b), the info-entropy function f for such a double-helix eigenvector of QGT can be represented as:

It is equally clear that the quantity (for n = 1,2) represents an important parameter for the double-helix eigenvector in QGT.

2.3. The QGT Equations of State

Quantitative Geometrical Thermodynamics (QGT) is a comprehensive and coherent description of the entropic behaviour of any system, entirely isomorphic to the well-known (kinematical) Hamiltonian and Lagrangian equations of classical mechanics. In constructing any such entropic equations of state, suitable “position” (q) and “momentum” (p) quantities need to be defined. In the application of QGT to creating a mathematical framework suitable for describing entropic purpose, these are defined for n ∈ {t,τ} in the complex temporal domain, where Z(≡R/c) is a temporal scaling factor analogous to the radius R of the double-helix in QGT (see Equation (9a) of [13]):

where mS ≡ iκkB is the entropic mass (Equation (9c) of [13]), c is the speed of light, and the prime symbols indicate differentiation with respect to the temporal parameter T (where T ≡ √(t2 + τ2), see formal treatment in §3 below and also Appendix A and Appendix B). The parameter κ is analogous to that described earlier, where κ = 2π/λ is the wavenumber with λ a pitch (see formal treatment in §3 below). The hyperbolic velocity q’ is dimensionless and q’* ≡ 1/q’: the ‘group’ velocity q’ is the inverse of the ‘phase’ velocity q’*, as normal (see Equation (9) of PJ19 [13], and §7 of Parker & Jeynes 2021 [27]), Note that Equations (7a)–(7c) means that equations of state for entropic purpose are defined in hyperbolic (not Euclidean) spacetime, and Equation (7c) shows how the hyperbolic velocity q′ relates to the associated Euclidean temporal derivative n′.

Considering the system entropy previously calculated using the Shannon entropy and indicated in Equation (1), it is clear that the hyperbolic time quantity q of Equation (7a) is functionally equivalent to the system Shannon entropy of Equation (1). That is to say, whereas we earlier merely noted that calculation of the Shannon entropy of a meromorphic point of non-analyticity is equivalent to the logarithm of the Euclidean spacetime co-ordinate, in QGT the hyperbolic position parameter q is functionally identical to the system Shannon information (except for the scaling factor Z and the quantum of entropy, kB). Thus, whereas the conventional mechanical equations of state are set within the theatre of Euclidean spacetime, the equations of state for entropic purpose and QGT are set within hyperbolic (logarithmic) space, and the transformation between the Euclidean and hyperbolic domains for a system can be seen to be effected by calculating the spatio-temporal Shannon information of the system (see Equations (1a) and (1b)).

The associated entropic momentum p as indicated in Equation (7b) is also intrinsically dependent upon the properties of hyperbolic time, but now via its temporal derivative q′; as determined by the axial co-ordinate T. In conventional mechanics, the momentum is given by the product of the inertial mass and the velocity; similarly, in QGT the entropic momentum is given by the product of the entropic mass mS (which includes the speed of light c for dimensionality reasons) and the (phase) hyperbolic velocity ≡ 1/.

We note the functional similarity of Equation (7c) to the quantity already identified in Equation (6). It is clear that to completely define the quantities seen in Equations (6) and (7c) only two parameters of a double-helix (a fundamental eigenvector of QGT) are required: the radius R (in this case the temporal parameter Z and the wavenumber κ). That is, these two parameters are sufficient to define the key equations of state that comprise the basis of QGT.

Thus, we emphasise that the Shannon information is completely intrinsic to (and permeates) the definitions and natures of both the entropic position q and the entropic momentum p in the hyperbolic spacetime of QGT. The corollary to this is that a universe exhibiting a hyperbolic geometry (such as ours, with its “hyperbolic overall geometry” as per Penrose’s assertion, §2.7 p.48, [15]) is also intrinsically informational in nature.

2.4. Irreversibility in QGT

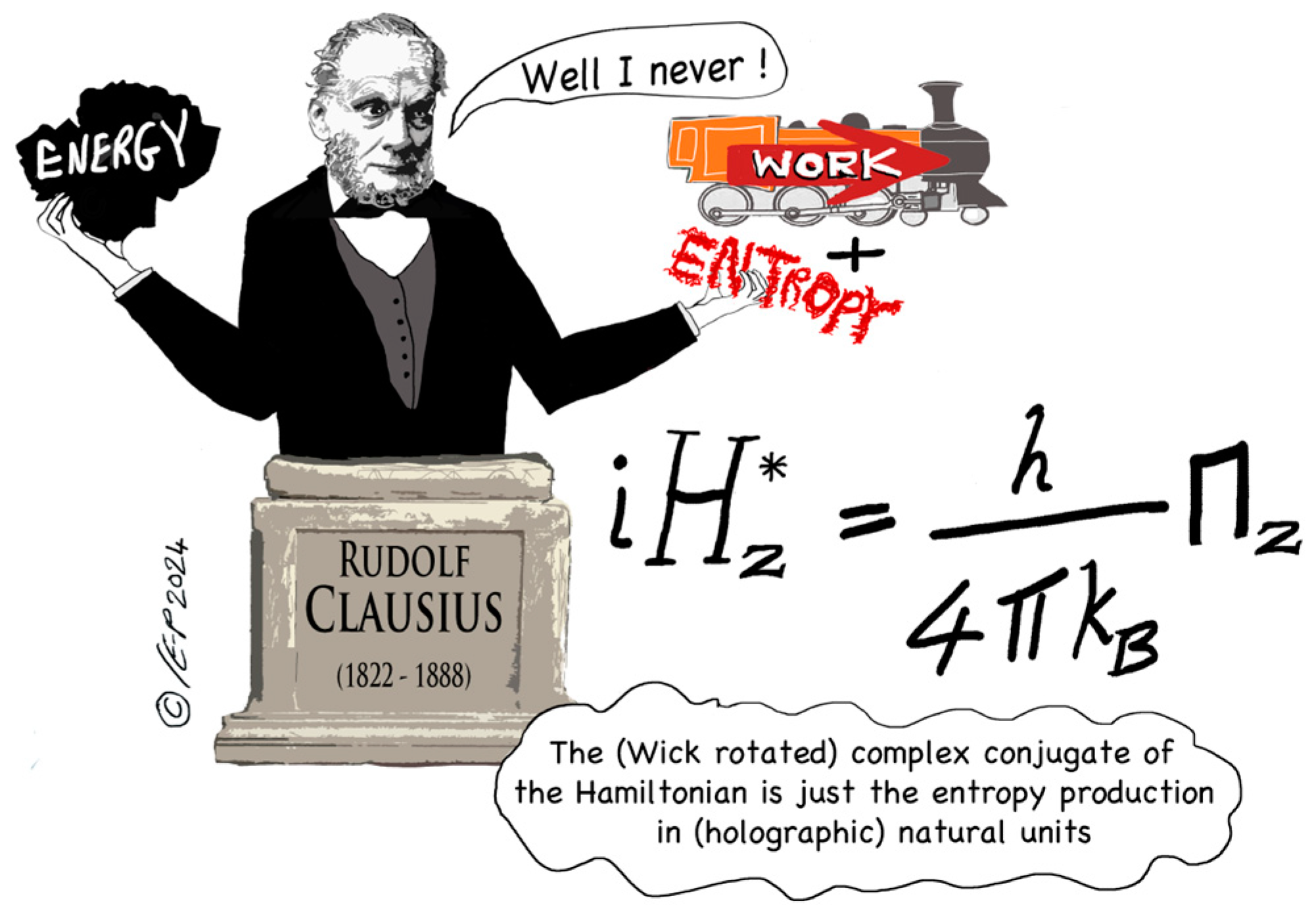

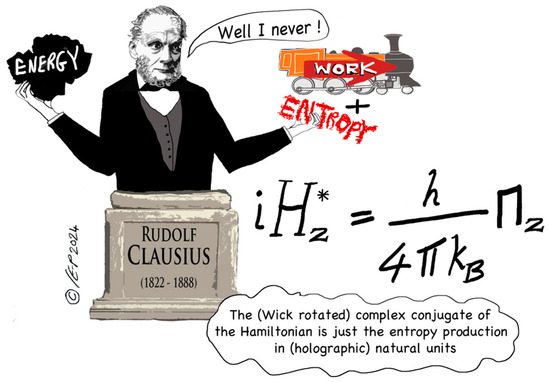

Irreversible systems have positive (non-zero) entropy production. Parker and Jeynes (2019, [13]) have shown that the double logarithmic spiral (DLS, of which the double-helix is a special case) is a fundamental eigenfunction of the entropic Hamiltonian. But a DLS entails positive entropy production, as was shown by Parker and Jeynes’ (2021 [20]) QGT treatment of black holes (also proving that entropy production is a Noether-conserved quantity): this black hole treatment was confirmed subsequently in a more fundamental treatment (Parker and Jeynes 2023 [3]) which also established the essential physical equivalence of energy (as represented by the Hamiltonian) and entropy production (see Figure 1).

Figure 1.

Graphical Abstract of “Relating a System’s Hamiltonian to its Entropy Production using a Complex Time Approach” (Parker and Jeynes 2023 [3]); picture credit: Christine Evans-Pughe (www.howandwhy.com).

The double-helix has zero entropy production, which accounts for the stability of both DNA (PJ19 [13]) and Buckminsterfullerene (Parker and Jeynes 2020 [28]). In fact, a QGT analysis shows that the alpha particle (in its ground state) behaves as a unitary entity (than which exists nothing simpler: Parker et al. 2022 [29]). Where there is zero entropy production, there is no change—such cases are trivially reversible. But irreversible cases are where there is positive (non-zero) entropy production: all real processes involve change, and there is also a QGT treatment of the simplest such example—that of beta-decay (Parker and Jeynes 2023 [30]).

It is remarkable that QGT treats reversibility and irreversibility entirely commensurately: in fact, in a fully complexified treatment, the Hamiltonian (representing the system energy) and the entropy production are seen to be equivalent. This is a remarkable result: stated more precisely, the (Wick rotated) complex conjugate of the Hamiltonian is just the entropy production in (holographic) natural units ([3]; see Figure 1). But this result is only obtained in a fully complexified system, that is, where time is also complexified. Ivo Dinov’s Michigan group refers to the resulting 5-D spacetime as “spacekime”, where “kime” refers to “complex time” (see for example Wang et al. [31]).

The world is irreversible. Therefore, the fundamental equations of physics cannot be reversible. Consequently, QGT takes the (irreversible) Second Law of Thermodynamics as axiomatic. Purpose is an intrinsically irreversible concept, a truncated form of which (“entropic purpose”) we will express here using the (QGT) apparatus of complex time.

2.5. Other Comments

Information and noise are physically indistinguishable in a Shannon communication channel: this is why “cryptographically secure pseudo-random number generators” are of such importance in establishing secure communications. Formally, “entropy” is added to the information at the transmit end, and then subtracted at the receive end to retrieve the information. Therefore, information production (the rate of information creation) and entropy production (the rate of increase in entropy) are also closely related.

The ”fundamental equations of physics” are usually considered to be those of Quantum Mechanics (QM) and General Relativity (GR), which have both been demonstrated correct by multiple very high-precision experiments. The trouble is that QM is apparently in “fundamental conflict” with GR (Penrose [15] §30.11): Roger Penrose is convinced that QM “has no credible ontology, so that it must be seriously modified for the physics of the world to make sense” (ibid. §30.13 p. 860, emphasis original). Many physicists do not take Penrose’s view; nevertheless, there is still no consensus on a theory of quantum gravity. We take the view that “fundamental equations” should treat reversibility and irreversibility commensurately (which neither QM nor GR do) on the grounds that irreversibility is a phenomenon ubiquitously observed.

In fact, there are no real systems that are reversible! QM has looked for the “fundamental particles”, but the smaller they are, the higher the energy needed for the relevant experiments—at such high energies the assumption of reversibility is an exceedingly good approximation. But QGT has shown that there is another approach to the fundamental: Parker et al. 2022 [29] have shown that it is reasonable to consider the alpha particle (in its ground state) as a unitary entity (than which exists nothing simpler): that is, at its unitary length scale the alpha as such should also be considered “fundamental” (since there exists nothing simpler)—even though we know it is composed of two protons and two neutrons (when considered from the reference frame of a smaller length scale); a system of four nucleons which is complicated compared to the unitary entity. Hyperbolic space emphasises the relativity of scale (see Auffray & Nottale’s useful review 2008) [32]. Of course, if the alpha is excited (not in its ground state), it behaves as a system, not as a unitary entity (which has no parts).

3. A Formal Expression for a System with a Non-Zero Entropic Purpose

3.1. Overview

We will take an engineering approach, idealising the problem to facilitate an analytic solution. Centrally, we use the result of PJ23 ([3], see Figure 1) that time must be represented as a complex number to adequately express the Second Law (in turn allowing the expression of the irreversibility ubiquitously observed for all real systems), finding that it is possible to construct a well-formed purposive Lagrangian in the complex temporal plane: that is, without a spatial component. We call this Lagrangian “purposive” on the grounds that purpose is a temporal phenomenon without a spatial component. Of course, we cannot set up a physical system to adequately represent the full scope of purposes (including mental phenomena): our formalism is necessarily restricted to the purely physical.

To underline this, we, therefore, refer here to “entropic purpose”. We acknowledge that, since the Newtonian revolution of the 17th century, assigning “purpose” (of any sort) to things is regarded as illegitimate in physics. However, the introduction of complex time means that the classical Lagrangian-Hamiltonian apparatus can be extended to irreversible cases, including ones that can be interpreted as purposive.

We will define the Entropic Purpose P [J/K] as an appropriate line integral along the relevant (“purposive”) Lagrangian LP, in the same way that the action (or the “exertion”, see Equation (12) of PJ19 [13]) are line integrals on the appropriate Lagrangians. We will also show that just as there is a Principle of Least Action and a Principle of Least Exertion [13], there is also a Principle of Least (entropic) Purpose (see Appendix A). Note parenthetically that Parker and Jeynes (2023 [22]) have shown that the “exertion” is proportional to what Edwin Jaynes called “caliber” (1980 [21]), and Pressé et al. [33] have emphasised the general nature of the variational Principle of “Maximum Caliber”.

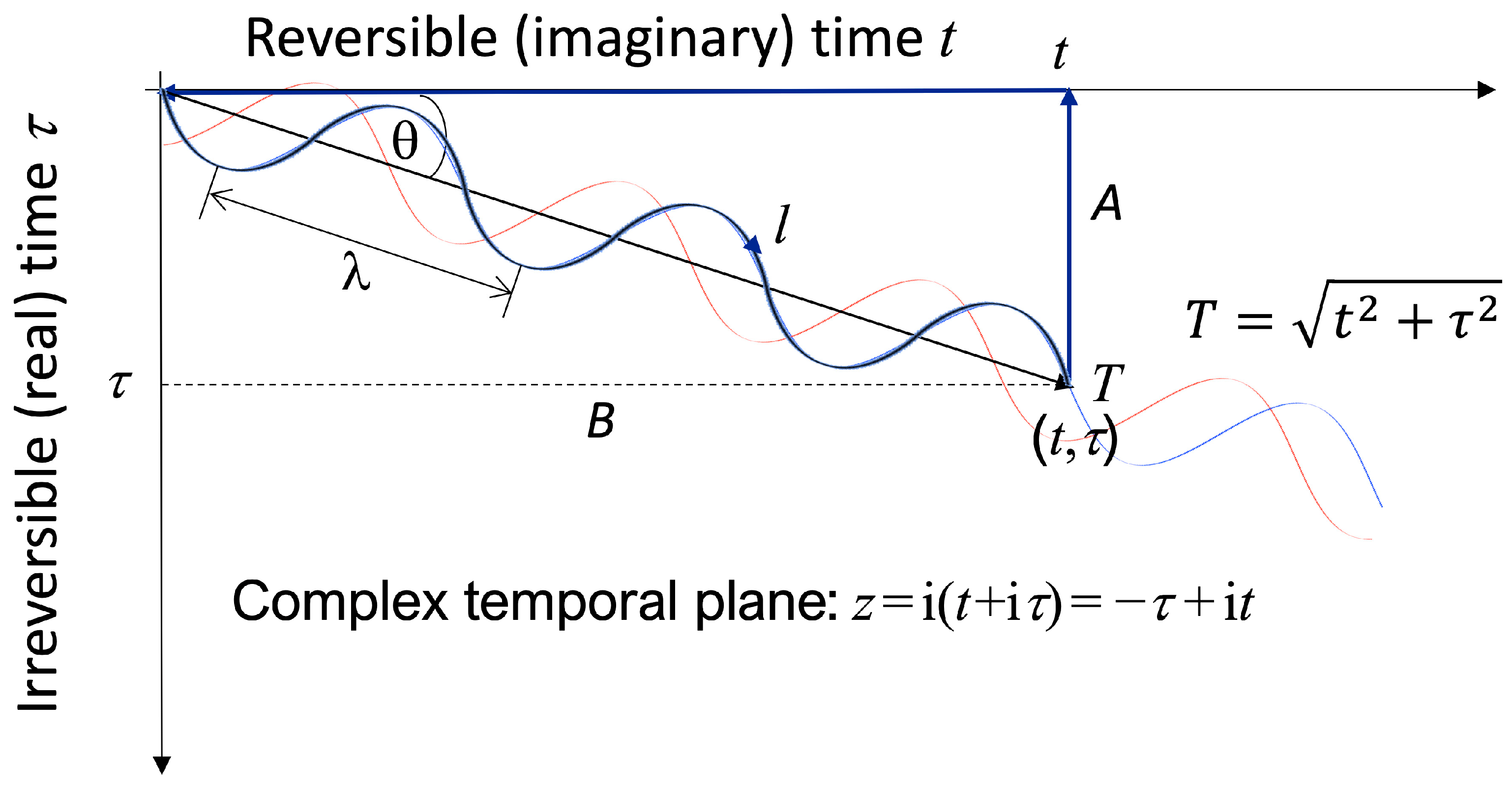

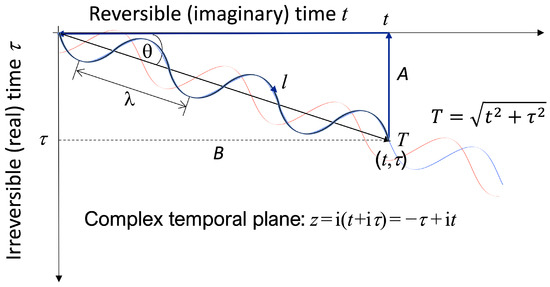

Purpose involves time, and purposive systems must follow some sort of trajectory through time. The treatment of information in QGT is predicated on the existence of meromorphic functions which are piece-wise holomorphic, with the (particle-like) poles of the function behaving like pieces of information. Figure 2 sketches an example trajectory l through complex time of one such pole. This trajectory may readily be constructed holomorphic: see Appendix A Equation (A1a).

Figure 2.

Description of a holomorphic trajectory l (represented by the red-blue pair of lines) across the complex z temporal plane. Note that because z = −τ + it, the real-time (tau) axis is inverted, implying an intrinsic handedness (chirality) to the complex time plane.

As well as defining the holomorphic trajectory l (see Equation (A1a) of Appendix A) also shows that the associated “purposive Lagrangian” (see §§3B,3C) is well-formed; that is, it satisfies the appropriate (entropic) Euler-Lagrange equation in which the conjugate variables {p,q} of Equation (7) are defined in a hyperbolic complex time {t,τ} (QGT is always defined in an information space that is necessarily hyperbolic). Entropic position q and entropic momentum p = cmSq’* (with entropic mass mS ≡ iκkB) are all defined as previously (see Equations (7a)–(7c) passim), except that the prime now indicates partial differentiation by the temporal parameter T shown in Figure 2 (q’ ≡ ∂q/∂T: but note also that q’* ≡ 1/q’ as before).

We can calculate the entropic purpose directly (§3.4) by interpreting the line integral along the “purposive Lagrangian” across the complex temporal plane as the entropic purpose of the system. We can also complexify the Lagrangian to make it analytic (see Appendix B) so as to conveniently exploit the mathematical apparatus of complex algebra and calculate a closed path integral (with zero residue).

Finally, we calculate the Information created (§3.5). Here, we consider an idealised system unaffected by noise: that is, we assume that all the entropy produced by the purposive system is informative. The entropy produced by the system (in this idealisation, all in the form of information) is calculated as usual by a line integral across the purposive Hamiltonian, which is obtained from the Lagrangian as is conventional by the appropriate Legendre transformation.

The aim is to obtain a formal expression of entropic purpose, see Equations (19a,b); together with the corresponding Shannon information, see Equation (25). We discuss the metaphysics associated with entropic purpose (that is, this truncated treatment of purpose) in light of our new physical results in Appendix C.

3.2. The Purposive Lagrangian LP

A new “Purposive Lagrangian” LP is defined in the complex temporal plane (thus having no spatial component, just as purpose is purely temporal), using the Lagrangian for the double-logarithmic spiral (shown in PJ19 [13] to be a fundamental eigenfunction of the entropic Hamiltonian) as a template: see Equation (A10a,b) of Appendix A:

where as usual c is the speed of light, kB is the Boltzmann constant, and κ ≡ 2π/λ is a wavenumber corresponding to the parameter λ representing the length scale of the system trajectory. Kn (n ∈ {t,τ}) are entropic constants. Here, mS is the “entropic mass” which is a constant of the purposive system that scales with κ (mS ≡ iκkB: see Equation (9c) of [13] passim). Time is expressed as a complex number with real (τ) and imaginary (t) parts expressing respectively the irreversible and reversible behaviours of the system.

The parameter Λ was used previously to represent the logarithmically varying radius of the double logarithmic spiral (in a hyperbolic 4-D Minkowski space, see Equations (20) and (D.6) of PJ19 [13]). Here, it is used in a way that is formally similar but now in the (hyperbolic) 2-D space given by the complex time plane. Here we interpret it as characterising the “entropic purpose” of the system, such that Λ ≥ 0, and when Λ = 0 the system has zero entropic purpose.

In addition, the complex quantity T, which in Figure 2 represents a temporal point on the holomorphic trajectory across the complex time plane, is a critically important aspect of the geometry. Its main physical interpretation, as a scalar quantity, is that it represents the least required time (apparently known in advance) to produce a given amount of information by a system. Thus, a system creating a certain amount of information will require a system-determined minimum period of time T (calculable in advance) to produce that information.

The time T can also be understood to be the empirically measurable time that elapses over the course of the information-producing process. That is to say, the measured time T (which must be monotonically increasing according to the 2nd Law) is a (vectorial) function of the (mutually orthogonal) reversible and irreversible times. Any information-producing process proceeds along a holomorphic trajectory l in the temporal plane, but it is the monotonically increasing resultant time T that is measured, and which also represents the key variational parameter of the Lagrangian calculus. Just as the Principle of Least Time (or more generally, the Principle of Least Action) offers a minimum time interpretation for any physical phenomenon, so the time T can also be (loosely) understood to represent the minimum time to produce a given amount of information according to a Principle of Least (entropic) Purpose (see Appendix A).

The key canonical relationships (of the Euler-Lagrange variational equation involving Hamiltonian and Lagrangian quantities) are defined using QGT in hyperbolic spacetime, as discussed above and as appropriate for an analysis based on the Shannon information. The Euclidean complex temporal plane is given by z = i(t + iτ) (see Equation (3) of [3]) as indicated in Figure 2. The associated hyperbolic complex time is then given by qn/Z = ln(n/Z): n ∈ {t,τ} (see Equation (7a) above) where in this context Z is the holographic temporal scaling factor (akin to a ‘temporal radius’) of the system (the equivalent holographic spatial radius R is discussed above, see Equation (5a,b); and q′ is the derivative of hyperbolic time with respect to T, given by q′n ≡ ∂qn/∂T: n ∈ {t,τ} (see Equation (7b): combined with the entropic mass mS, these are effectively the entropic momenta). Finally, the quantity Λ in Equation (8) is the parameter that determines the strength of entropic purpose: Λ = 0 for a system with zero entropic purpose.

The first term on the RHS of Equation (8) can be considered to be a ‘kinetic’ term since it is composed of temporal derivatives, whereas the second term on the RHS can be considered as the ‘potential’ term of the Lagrangian since it is determined by non-derivative (i.e., “field”) considerations. The final term on the RHS is related to the granularity or scale of the physical system that is exhibiting entropic purpose (again noting that the entropic purpose in a hyperbolic representation requires the frame of scale to be explicitly referenced, see Auffray and Nottale [32]), and is a constant, akin to a constant of integration or an offset term (as is generally present in considerations of entropy).

Appendix A shows the purposive Lagrangian of Equation (8) to be valid by demonstrating that it satisfies the Euler-Lagrange equation describing the appropriate variational principle, which we will call the Principle of Least (entropic) Purpose:

for both temporal dimensions n ∈ {t,τ}. That is, across the complex temporal plane z, given by z = −τ + it, the Principle of Least (entropic) Purpose is obeyed by an information-producing system exhibiting the Lagrangian of Equation (8), such that the system adopts a holomorphic trajectory l across the complex temporal plane. Therefore, the entropic purpose P of the system is given by the line integral of the purposive Lagrangian along the trajectory across the complex time plane as the information-creating process evolves:

where dz represents the infinitesimal time increment along the trajectory as it traverses the complex temporal plane. Since the trajectory l obeys the variational calculus of the Euler-Lagrange equation of Equation (9), it also indisputably has properties that appear to be teleological. That is to say, before the process starts, the minimised entropic purpose P of the system already determines what the (empirically-measurable) temporal duration T of the process will be.

3.3. Calculating LP

We now calculate the entropic purpose P of a system that exhibits the purposive Lagrangian of Equation (8). The hyperbolic derivatives in the complex temporal plane are given by:

where the angle θ, seen in Figure 2, is the local orientation of the trajectory across the temporal plane; see Appendix A, Equations (A6a) and (A6b). The characteristic holographic temporal parameter (analogous to the geometric radius) of the system is given by Z0, assumed constant. This allows us to simplify the purposive Lagrangian of Equation (8) (see Equation (A10b) in Appendix A):

Here we see that the purposive Lagrangian is composed of a variable component (depending on the angle θ) and an essentially overall constant component (the two final terms in Equation (12) on the RHS). In a dimensionally consistent expression, the angle θ may be given by:

which exhibits plausible behaviour as the value of Λ varies. In particular, the angle θ may reasonably be supposed proportional to the information production parameter Λ, see Equation (20), and we therefore employ the wavenumber κ as the dimensionally equivalent system parameter to normalise Λ. For example, when Λ = 0 then the angle θ = 0, and the trajectory of the system simply proceeds along the reversible t-axis: no information is being produced and zero thermodynamic irreversibility (as represented by the temporal parameter τ) is present. However, for finite Λ > 0 then the angle is also θ > 0, and the irreversible temporal parameter τ is also finite. We need only consider the varying part of Equation (12), the purposive Lagrangian that explicitly depends on the information production, given by:

However, in the subsequent analysis, we consider the analytically-continued (complexified according to the handedness of the z = −τ + it plane) version of the purposive Lagrangian, which is given by (see Equation (A15b) of Appendix B):

This complexified purposive Lagrangian can then be immediately simplified, using the Euler identity sin2θ + icos2θ = iexp(−i2θ), to:

which we use in the subsequent analysis, and where we have ignored the constant term associated with ln(i) in the purposive Lagrangian of Equation (14c) (and also other constant terms in Equation (12) on the RHS), since constant aspects to any Lagrangian (or Hamiltonian) play no part in the system dynamics as described by the relevant variational calculus and canonical differential equations. That the purposive Lagrangian is analytic in the complex temporal z-plane (see Appendix B) is also helpful.

3.4. Calculating the Entropic Purpose P

With reference to Figure 2 and given there are no poles or points of non-analyticity enclosed within the closed contour described across the complex temporal plane; using the fundamental results of complex calculus, we can see that a closed contour (line) integral of the purposive Lagrangian must equal zero, tracing from the origin along the trajectory l to the temporal point T at (it,τ), and then ascending vertically upwards (parallel to the τ-axis) along the line A, and then backwards (along the t-axis) on the line B back to the origin:

Of course, this requires the purposive Lagrangian to represent an analytic function in the temporal complex z-plane. Our previous work (PJ23 [3]) allows us to assume that the Cauchy-Riemann conditions hold as appropriate for the handedness of the z-plane (see Appendix B): and , where the complexified purposive Lagrangian is composed of two real functions: . That is to say, just as QGT’s entropic Hamiltonian of [3] is complex, whose real (dissipative and entropic) and imaginary (reversible and energetic) components are Hilbert transforms of each other, the QGT entropic Lagrangian (the simple Legendre transformation of the entropic Hamiltonian) is also complex and analytic. Given that the purposive Lagrangian LP is based on equivalent QGT quantities (although now defined in the complex temporal plane), Appendix B shows that LP is also analytic.

Fortunately, the two line-integrals A and B can be calculated analytically, so that the entropic purpose integral of Equation (10) can be evaluated. We consider the two integrals along the paths A and B in turn.

Line integral A is calculated using Equations (14c) and (13):

Line integral B is also calculated using Equations (14c) and (13):

Thus:

and therefore, the entropic purpose P has real and imaginary components given by:

It is clear from Equations (19a,19b) that the dimensionality of entropic purpose is given by entropy [J/K] and (given the definition of the entropic mass mS) that it is quantised by the Boltzmann constant kB. In addition, since the complexification of the (real) purposive Lagrangian of Equation (14a) led to a complex-valued entropic purpose, it is clear that it is the real part of the entropic purpose, Equation (19a), that we are interested in.

That is to say, we need only consider the real component, Pr (Equation (19a)) which is simply the result of the line integral B along the reversible time axis (but not independent of the line integral A along the irreversible time axis due to the Hilbert transform relationship between the real and imaginary components of the complexified purposive Lagrangian). This is because for a system with zero information production (θ = 0 and τ = 0), then Pr = 0. However, as the information production increases, such that θ > 0 and τ > 0, then Pr > 0, as expected for a system that is creating information. In addition, it is clear that the entropic purpose increases over time as more information is created by the system.

As a first-order heuristic approximation, and because when no information is being produced, both θ and Λ are zero, we can conjecture that the angle θ is related to the information production parameter Λ (dimensioned correctly with the factor κ) by:

3.5. Calculating the Information

Having calculated the entropic purpose of an information-producing system, the next question that immediately comes to mind is, how much information is this entropic purpose associated with? Whereas in QGT the exertion (as associated with the Principle of Least Exertion) is found by calculating the line integral of the entropic Lagrangian, the entropy associated with the system (assumed to be all in the form of information in this idealised system) is found by calculating the line integral of the entropic Hamiltonian over the same trajectory. Thus, whereas the entropic purpose (with the dimensionality of entropy, [J/K]) is calculated by the temporal line integral of the purposive Lagrangian, the associated increase in Shannon information (itself also an entropic quantity with the same dimensionality, [J/K]) corresponds to the line integral of the purposive Hamiltonian over the same trajectory across the complex temporal plane.

The purposive Hamiltonian HP and purposive Lagrangian LP are related to each other by the Legendre transformation:

The identity (see Equation (7b) above, and Equation (9b) of PJ19 [13]) shows that the first term on the RHS of Equation (21) does not vary over time. Then it is clear from Equation (14a) that the varying part (that depends on the angle θ as the temporal trajectory crosses the complex time plane) of the purposive Hamiltonian is simply given by the purposive Lagrangian:

Any constant aspect to the purposive Hamiltonian simply contributes to the (constant) offset associated with the information entropy and can be ignored, since we are only interested in the change in information (i.e., the information production). Therefore we can deploy the same complex analysis as for the calculation of the entropic purpose P above, and we complexify the purposive Hamiltonian of Equation (22) in the same way as Equations (14a)–(14c):

In the same way that we found that the real part of the entropic purpose was simply the line integral along the reversible time axis, we calculate the information I along the same line integral. Note, that from an info-entropy perspective, where information and entropy are in quadrature to each other (that is, using the language of geometrical algebra, their basis vectors are Hodge duals of each other [13]) and the line integral of an entropic Hamiltonian gives the entropy of a system [3], so we take the complex-conjugate of the purposive Hamiltonian of Equation (23) and multiply by the pseudoscalar i so as to yield the appropriate information term I arising from the integration:

and realise that we obtain exactly the same result as for Pr see Equation (19a):

It is clear that the created information I of Equation (25) behaves as expected: in conjunction with Equation (20), it is zero for Λ = 0, and increases with Λ just as the entropic purpose P increases with Λ. We also see that the entropic purpose and the created information are identical. That is to say, they imply each other: the creation of information implies entropic purpose, and vice-versa.

Note that entropy production is a conserved quantity, just like energy. One way of understanding this is to remember that the energy Hamiltonian H and the entropy production Π are mutually complex conjugates (Equations (23) of PJ23 [3]) and since the Hamiltonian is Noether-conserved, so is the entropy production. But the entropy production of a purposive system is also given by the sum of its information production and the associated ’noise production’ (which all have the same units). The sum of the information production and noise production is therefore conserved, but the interplay between the information and noise means that the productions of each are not individually conserved at each instant in time. This is similar to the process of transformations over time between kinetic energy and potential energy in a dynamic system, where the total energy is a constant (conserved) but at each instant in time the amount of KE and PE in the system is variable. Similarly, we can speak of the generation (creation) of information as part of a dynamic process where the overall entropy production of the system is conserved (a constant), but the relative allocations to information production and noise generation can vary with time.

Given that the time to create the information is T, then using Equations (A2a) and (20), the information production Π is obtained from Equation (25):

From Equation (23c) of PJ23 [3], the associated energy Hamiltonian, using mS ≡ iκkB, is then:

where is the complex conjugate of Π and c = fλ as usual. Note that for an angle θ = 90° across the temporal plane, the energy H associated with the information production is therefore simply that of a photon of frequency f.

Equation (27) indicates that any system exhibiting entropic purpose (that is, creating Shannon information) will also dissipate energy. This is an interesting extension to Landauer’s principle [34,35] (see also [17] and a recent review [36]), which conventionally states that the deletion (erasure) of information requires energy; that is, it must be a dissipative process. In earlier work, Parker and Walker [16] already showed that the transfer of information is also dissipative. However, now we can also see that the creation of information is equally dissipative. Thus, the physical processing of any information (be it its creation, copying, transfer, or erasure) is always accompanied by the dissipation of energy.

Note that although the sign of the information production can change according to the physical process (so that the creation of information is associated with a positive sign for ∂I/∂T, whereas its destruction or erasure will have a negative sign), yet because the information production is the Wick-rotated complex-conjugate of the energy Hamiltonian, the sign of the energy change is ambiguous, as indeed, is the sign of the associated temporal change. However, given that the 2nd Law is fundamental, the energy change must always manifest itself as a dissipation event, accompanied by an overall increase in entropy.

3.6. Communication Systems Power Requirements

From an engineering perspective, there is always an interest in minimising the energy dissipation or powering requirements for any communications system. The quotient of Equations (26) and (27) indicates that the energy required for a given information production is:

The dimensionality of Equation (28) needs a comment, in that the information production rate [s−1] is quantised by the Boltzmann constant [J/K], such that the information production Π has overall dimensionality [J/Ks], whereas that of the energy Hamiltonian H is simply [J]. Thus Equation (28) offers a theoretical minimum energy dissipated per rate of created information; however, the energy is expressed in Kelvin-seconds. In order to express it in a more familiar form, Landauer’s principle specifies the minimum energy dissipation per (erased) bit:

That is to say, we multiply Equation (28) by the thermodynamic (entropic) unit value for a bit of information, kBln2, such that the minimum energy for a given information creation rate is:

It is interesting that the minimum energy for a given information production depends on the Planck constant, rather than the Boltzmann constant; particularly since ℏ (1.055 × 10−34 Js) is numerically considerably smaller than kB, (1.381 × 10−23 J/K) albeit they are different physical quantities. However, only temperatures in the nanokelvin range (for example) that are more associated with black holes or very advanced optical cooling techniques would yield energy values equivalent to 1 b/s information creation rate, or at room temperature (300 K), an information production of 39.3 Tb/s would require equivalent energies. The implications of this for telecom efficiencies are still being worked out.

4. Discussion

Purpose is necessarily irreversible, and there exists today a growing interest in irreversible systems. For recent examples, Jeynes [37] reviews a standard approach to certain non-adiabatic systems, and Aslani et al. [38] discuss micro-rotation in (non-Newtonian) micropolar fluids (where a “Newtonian fluid” has an idealised viscosity and is therefore intrinsically irreversible).

Living things are characterised by purpose—at a minimum they want to survive. Although as humans we are very good at discerning purposes, it is generally assumed that talk of “purpose” is not properly “scientific”, for the very good reason that what we mean by “purpose” is inescapably metaphysical. And metaphysics (the metanarrative of physics) is necessarily inexpressible in physical terms.

However, we have found a way of mathematically expressing a cut-down version of “purpose” (that is, shorn of its metaphysical aspects: “entropic purpose”) that will certainly help to physically distinguish the animate from the inanimate, which we expect to shed light on critical issues of practical engineering concern related to assessments of ‘aliveness’, personality aspects of artificial intelligence (AI) systems, and tests to distinguish actual human actors from bots in a cybersecurity context.

Our definition of “entropic purpose” relies heavily on Shannon’s information metric, an important aspect of thermodynamics. That is, our treatment of entropic purpose derives from recent progress in the study of Quantitative Geometrical Thermodynamics (QGT) that represents a new and powerful approach to the understanding of system entropy, as expressed by a fully canonical Lagrangian-Hamiltonian formulation that treats information and entropy as mutually orthogonal (whose spacetime base axes are Hodge duals) in a holomorphic entity: info-entropy. QGT is a general formalism with wide-ranging consequences, in particular, that entropy production (the rate of entropy increase) is demonstrably an isomorph of energy, and both are Noether-conserved. Since information production (characteristic of life) is very closely related to entropy production, we can derive important results (presented here) on the mathematical characteristics of entropic purpose. Its application in a range of current AI-based technologies remains to be developed.

One important aspect to highlight is that intrinsic to the Euler-Lagrange formalism of Equation (2) is the teleological character of the purposive Lagrangian. This is an important attribute that is essential to any discussion of entropic purpose since purpose is necessarily orientated to the future. As is already well recognised for the Principle of Least Action [1,2], there is a strong teleological element to its interpretation (the PLA was after all the original stimulus for the development of the mathematical apparatus of the kinematic Lagrangian and the associated Euler-Lagrange equation). For example, in adopting a trajectory across spacetime, a geometric light ray apparently ‘chooses’ in advance which trajectory (with its initial angle of departure) will ensure the least time is taken to reach the ‘intended’ destination. The purposive Lagrangian is equally teleological for the same reason. That is to say, the entropic purpose of a system is minimised (according to the variational calculus of the Euler-Lagrange equation) by its trajectory across the complex temporal plane, in accordance with the amount of information created by the process.

Thus, the entropic purpose of a system is equivalent (in metric) to the quantity of information created by the system. On the one hand, the creation of such information is straightforwardly and simply measured using the Shannon metric; which might imply that the measurement of entropic purpose as a distinct quantity is therefore superfluous or even a tautology. However, this is to overlook the essential feature of purpose which is its future orientation. The Shannon metric does not consider the causality, future orientation, or even the teleological nature of the information it is measuring: Shannon assumed that the information already existed. However, when considering the (ex-nihilo) creation of such information, then its (entropic) purposive nature comes to the fore and needs to be explicitly considered. In this paper, we show that the creation of such Shannon information can be described using a purposive Lagrangian that obeys the Euler-Lagrange equation so that its teleological or future-orientation characteristics are intrinsic; albeit implicit and also frequently ignored in epistemological considerations of the variational calculus. Here, we have only proven that such a theoretical Lagrangian framework for entropic purpose exists in a coherent mathematical formalism and that it successfully describes the creation of Shannon information. The explication of an appropriate (or specific) purposive Lagrangian for any particular information-creating system is the subject of future research. Here, we are only indicating the fact that such a purposive Lagrangian can be coherently defined, see Equation (8); and that it is also consistent with and fits into the current physical and mathematical state of knowledge.

Another critical insight is that the complex (energy) Hamiltonian of a system ([3]) is equivalent to the complex conjugate of the system’s entropy production. That is, the real entropy production is isomorphic to the imaginary energy, and the imaginary entropy production is isomorphic to the real energy; such a relation is predicated on a description of time in the complex temporal plane, thereby providing a consistent physical description of thermodynamically reversible and irreversible processes. This means that as a system evolves, it describes a temporal trajectory across the complex temporal plane that satisfies this description of entropic purpose.

QGT was originally developed to treat Maximum Entropy (MaxEnt) entities, which are necessarily stable in time, ranging from the sub-atomic to the cosmic, from nuclear isotopes and DNA to black holes. Curiously, being “stable” does not necessarily mean being “unchanging”, since black holes are MaxEnt (actually, they are the archetypal MaxEnt entity) but they also necessarily grow. Surprisingly, in the QGT formalism, it is the geometry of MaxEnt entities that incorporates the Second Law.

Now, whenever living entities are not dormant (that is, when they are actively exhibiting purposes) they cannot be MaxEnt—rather, they are in a far-from-equilibrium state, as discussed authoritatively by Pressé et al. [33] and Pachter et al. [39], and note that Parker and Jeynes [22] have shown that what these authors call “caliber” is identified with “exertion” in QGT, where “exertion” is defined as a line integral on an appropriate (entropic) Lagrangian. Here, we have found a temporal (rather than a geometrical) entropic Lagrangian to express this case (naturally limited to entropic purpose). The (non-trivial) issue here is that in the context of complex time (enabling a unified approach to reversible and irreversible processes), a valid Lagrangian is fully complexified: its real and imaginary components essentially represent respectively the energetic and entropic aspects of any such system.

It is also relevant to note that the time T associated with the physical process of producing (creating) information is also larger than if the process created zero information, where the conventional elapsed time (for the non-creation of information) would be the thermodynamically reversible time t. That is to say, arguably, the presence of an information-producing phenomenon causes time to dilate (lengthen). The implications of this in the physical world are intriguing. A black hole (or, indeed, a supermassive BH) which is arguably the most entropic as well as the most entropy-producing (and thereby, energetic) object in a galaxy should therefore cause time dilation as per the discussion centred on Figure 2. Of course, this is already well known from General Relativity on account of the BH’s mass. However, whether there is an additional effect on the dilation of time due to a BH’s entropy production (over and above that due to its mass), or whether the time dilation due to entropy production is simply an alternative (thermodynamic description) but still the same (i.e., an exactly equivalent), explanation for time dilation due to the presence of mass is the subject of further research.

Similarly, as conscious beings producing both entropy and information, we are all familiar with the passage of time. But could the passage of time be variable due to differing amounts of entropy production being associated with different living entities? Again, this is a fundamental question that requires additional research.

Scientists have been claiming for well over a century that purpose is essentially illusory, which seems to be in gross contradiction to our common sense; and one would not expect such a long and well-founded tradition to be easily overturned. However, the quantitative treatment of entropy is now at last promising useful progress, since we have shown that an entropic purpose can be expressed in properly physical terms: we expect that this will have far-reaching implications for the study of AI and information systems.

5. Summary and Conclusions

Inanimate things do not have purposes. But animate things (which do have purposes) are both real and material so physics should apply to them. Therefore, physics must incorporate quantities (defined impersonally of course) that in some sense look like “purposes”, and that can in principle be used to impersonally distinguish animate from inanimate things.

We have defined the “entropic purpose” of a heavily idealised system using the formalism of QGT (Quantitative Geometrical Thermodynamics), showing that systems with zero information production also have zero entropic purpose.

This has been possible only because QGT can be expressed in a fully general way which depends on the full complexification of the formalism [3], allowing us to bring to bear the powerful mathematics of complex analysis. It is noteworthy that Ivo Dinov’s group in Michigan also uses complex time (“kime”) in a 5-D “spacekime” to solve big data problems (see for example Wang et al. [31]). This fully general (complexified) formalism also treats reversible and irreversible systems commensurately—and living systems are all irreversible! This is the answer to the longstanding Loschmidt Paradox that has puzzled physicists since Ludwig Boltzmann replied to Joseph Loschmidt (see Olivier Darrigol’s helpfully commented translation [40]): how does the real (irreversible) world ‘emerge’ from the (apparently all reversible) equations of physics? Our QGT resolution shows constructively that physics coherently treats reality, whether or not it is reversible.

It is surprising how much useful physics can be done using only reversible theories (perhaps with some perturbation theory), but this QGT treatment underlines that it is the reversible theories that are all approximations: fundamentally, they are idealisations from the irreversible generality! This is now starting to be recognised with an increasing interest in non-Hermitian systems (recently reviewed [37]); and of course, Ilya Prigogine’s “Brussels-Austin Group” has long been systematically approaching non-equilibrium thermodynamics.

QGT is an analytical theory. So far, we have treated only simple (high symmetry) systems that yield readily to an analytic approach, having previously shown very simple (and demonstrably correct) QGT treatments of known systems that are nearly intractable using traditional approaches (including DNA and spiral galaxies [13]; fullerenes [28]; alpha particle size [29]; and free neutron lifetime [30]).

Here, we have demonstrated that mainstream physics also applies in a non-trivial way even to living beings: in particular that it is now possible in principle to recognise (at least in part) the purposefulness of life.

Specifically, we have proved that a “purposive Lagrangian” exists and, moreover, that the “entropic purpose” of a system may be measured by the Shannon information it creates. That is, it is not necessary to construct the purposive Lagrangian to quantify the entropic purpose.

Author Contributions

Conceptualisation, M.C.P. and C.J.; methodology, M.C.P. and C.J.; validation, M.C.P., C.J. and S.D.W.; formal analysis, M.C.P.; writing—original draft preparation, M.C.P. and C.J.; writing—review and editing, M.C.P., C.J. and S.D.W.; funding acquisition, M.C.P. and S.D.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partly funded by the Engineering and Physical Sciences Research Council (EPSRC) under grant EP/W03560X/1 (SAMBAS: Sustainable and Adaptive Ultra-High Capacity Micro Base Stations).

Data Availability Statement

The original contributions presented in this study are included in the article material. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A. Principle of Least (Entropic) Purpose

We confirm, using the same method as in Appendix C of PJ19 [13], that the Euler-Lagrange equation (Equation (9) is indeed satisfied for the trajectory l (associated with the sample purposive Lagrangian LP of Equation (8) across the complex plane (see Figure 2). We note that the path of the trajectory is particularly entailed by the time T required to produce a given amount of information, such that the calculus of variations analysis must use T as the key differentiating variable.

Note that this variational principle (which mirrors the Principle of Least Action) concerns the scientific quantity of “entropic purpose”, not the philosophical idea of “purpose”.

The two orthogonal dimensions in the complex temporal plane (see Figure 2) are the (reversible time) t axis and the (irreversible time) τ axis. We consider the expression for a generalised holomorphic trajectory across the temporal z = −τ + it plane (such a trajectory is analogous to a double-helix in QGT), where in our formalism we also continue to explicitly conform to the handedness of the z-plane:

Equation (A1a) approximately represents a double-helix with an axis located along the temporal T direction which is rotated at an angle θ in the complex temporal plane (see Figure 2). When θ = 45° then the double-helical trajectory description is equal to the ‘simple’ equation, Equation (8) of PJ19 [13] (where using QGT concepts, the τ axis is equivalent to the x1 axis, and t is equivalent to the x2 axis, while T is equivalent to x3).

When θ = 0 or 90° then we only have a single-helix (rather than a double-helix) trajectory geometry with the additional value of Z along one of the temporal axes. The values of Z for these two extreme cases θ = 0, 90° both act as (constant) offsets which, when invoking the (differential) canonical relations (the Euler-Lagrange, Lagrangian/Hamiltonian, or the Cauchy-Riemann equations), differentiate to zero.

In Equation (A2b) (for an information-producing system), we define the system’s characteristic holographic temporal parameter Z (equivalent to the spatial holographic radius in QGT) to vary with the parameter Λ along its temporal T axis. This is equivalent to the diminution of radius in a double logarithmic spiral along the axis of the spiral (see Appendix B of PJ19, Equation (B.32) passim [13]). The parameter Z0 represents the characteristic (holographic) time at the beginning of the (possibly information-producing) process.

The holomorphic trajectory l has a description in the (Euclidean) temporal plane, with the two co-ordinate functionals zt, zτ describing the evolution of the holomorphic trajectory across the complex time plane:

Appendix A.1. Conjugate Parameters in Hyperbolic Space

The entropic Lagrangian is defined in hyperbolic (not Euclidean) space (see Equation (7c) earlier, and Equation (9) passim of PJ19 [13]), and the hyperbolic position q is given by the transformation:

where n ∈ {t,τ} and Z (Equation (A2b)) is the instantaneous temporal holographic parameter. Taking the two co-ordinate functionals zt, zτ in turn using the Equation (A3a)–(A3c), and substituting them for n in Equation (A4), we transform into the following two hyperbolic temporal co-ordinates:

We then differentiate the expressions for qn (Equations (A5a,b) by the axial temporal parameter T, to obtain the (conjugate) hyperbolic velocities and (which are proportional to the momenta):

The entropic Euler-Lagrange equation that describes the Principle of Least (entropic) Purpose is then given by:

Appendix A.2. Obtaining the Purposive Lagrangian

We call the Lagrangian appropriate to the complex temporal plane the “Purposive Langrangian” LP.

Lagrangians (including LP) are obtained quite generally in hyperbolic (information) space from PJ19 (Equations (C23)–(C25) of Appendix C [13]), comprising a ‘kinetic’ and a ‘potential’ term:

A general derivation of the purposive potential terms (corresponding to an inverse-square law force relationship in Euclidean space) is given in PJ19 Appendix B (summarised in Equation (C24) [13]):

The purposive Lagrangian is therefore given by:

where are constants of integration and where mS is the entropic mass as before (see Equation (7b), passim). Substituting Equation (A6a,b) into Equation (A10a), it is straightforward to rearrange and additionally derive:

as per Equation (12) in the main text.

Appendix A.3. Confirming the Variational Properties on the Reversible t Axis

Partial differentiating the purposive Lagrangian LP of Equation (A10a) with respect to the differential (hyperbolic velocity) quantity :

Substituting Equation (A6a) into Equation (A11a) we have:

Differentiating with respect to T we have:

The second term of the Euler-Lagrange equation, Equation (A7) is found as follows, using Equations (A8) and (A9a) as the preceding parts of Equation (A10):

Thus, by inspection (Equations (A11c,d)), the Euler-Lagrange equation for the n = t time co-ordinate is satisfied:

Appendix A.4. Confirming the Variational Properties on the Irreversible τ Axis

Similarly, for the other n = τ temporal coordinate. Partial differentiating the purposive Lagrangian LP with respect to :

Substituting Equation (A6b) into Equation (A12a) we have:

Differentiating with respect to T we have:

The second term of the Euler-Lagrange equation, Equation (A7) is found as follows, using Equations (A8) and (A9b):

Thus, by inspection (Equations (A12c,d)), the Euler-Lagrange equation for the n = τ time co-ordinate is satisfied:

confirming that the trajectory l (Equations (A1)) obeys the variational principle (Equation (A7)), as required.

Appendix B. Analyticity of the Purposive Lagrangian in the Complex Temporal Plane

We briefly indicate the validity of the Cauchy-Riemann equations as applied to the complexified purposive Lagrangian LP in the complex temporal plane, . In particular, we assume that the purposive Lagrangian can be expressed by a pair of purely real functions, F and G, which together form an analytic function, Σ:

Note that it is a standard result of complex analysis that F and G are mutually Hilbert Transforms. For the z =−τ + it plane, the relevant Cauchy-Riemann equations are then:

and

Employing the purposive Lagrangian of Equation (12), substituting in Equation (13) and only considering the varying part of LP, we have Equation (14a):

This function is purely real and therefore cannot constitute an analytic function. To make it analytic, we must complexify it, therefore, requiring the Hilbert Transform of sin2θ (i.e., −cos 2θ). However, the appropriate complexified version of the purposive Lagrangian appropriate to the handedness of the z=−τ + it plane is then given (with some simple algebraic manipulation) by: