Short-Term Traffic State Prediction Based on Mobile Edge Computing in V2X Communication

Abstract

1. Introduction

- How to perceive and predict the traffic state in real time. Traditional traffic sensors struggle to meet the requirements of short-term traffic state prediction, especially the accuracy requirements of intelligent connected vehicles (ICVs).

- How to perceive and analyze the dynamic data of vehicles. Vehicles on the road may encounter all kinds of sudden traffic incidents. However, traditional strategies for collecting vehicular dynamic data (such as floating vehicles) struggle to meet the requirements of accurate real-time prediction.

- How to effectively analyze and filter the spatiotemporal features of the traffic state. These features exhibit highly nonlinear correlations, which remain a focus of urban traffic research. For example, a change in traffic flow at one intersection affects the traffic state at adjacent intersections and, over time, the future traffic state.

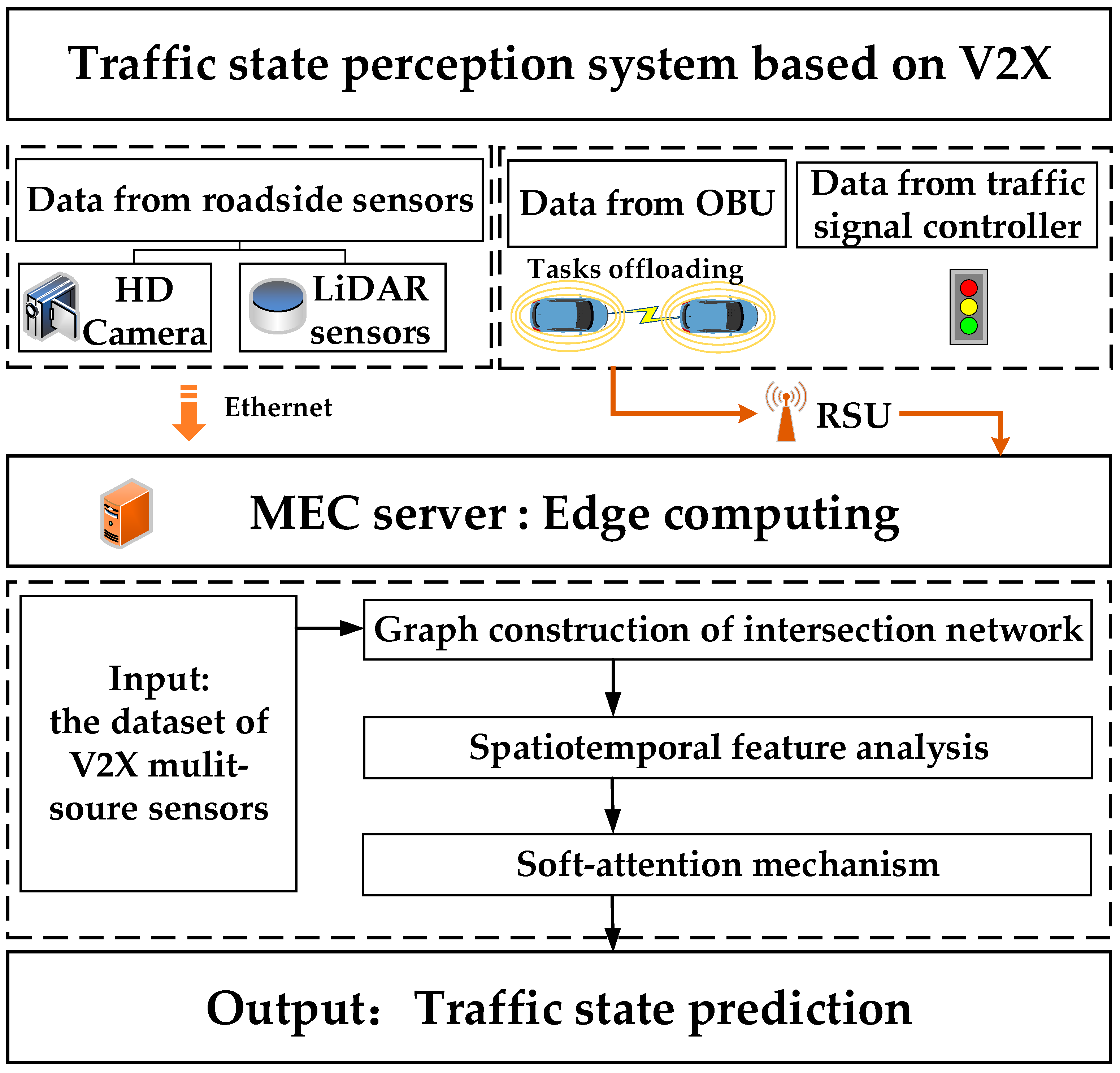

- Firstly, this paper proposes a traffic perception and computation system based on the mobile edge computing (MEC) architecture, in which each edge server manages the data uploaded by vehicles within its service scope. The MEC server then predicts the traffic state from the perceived information.

- Secondly, this paper uses on-board unit (OBU) data from ICVs to predict the traffic state by tracking vehicle driving states in real time, which improves the accuracy of prediction.

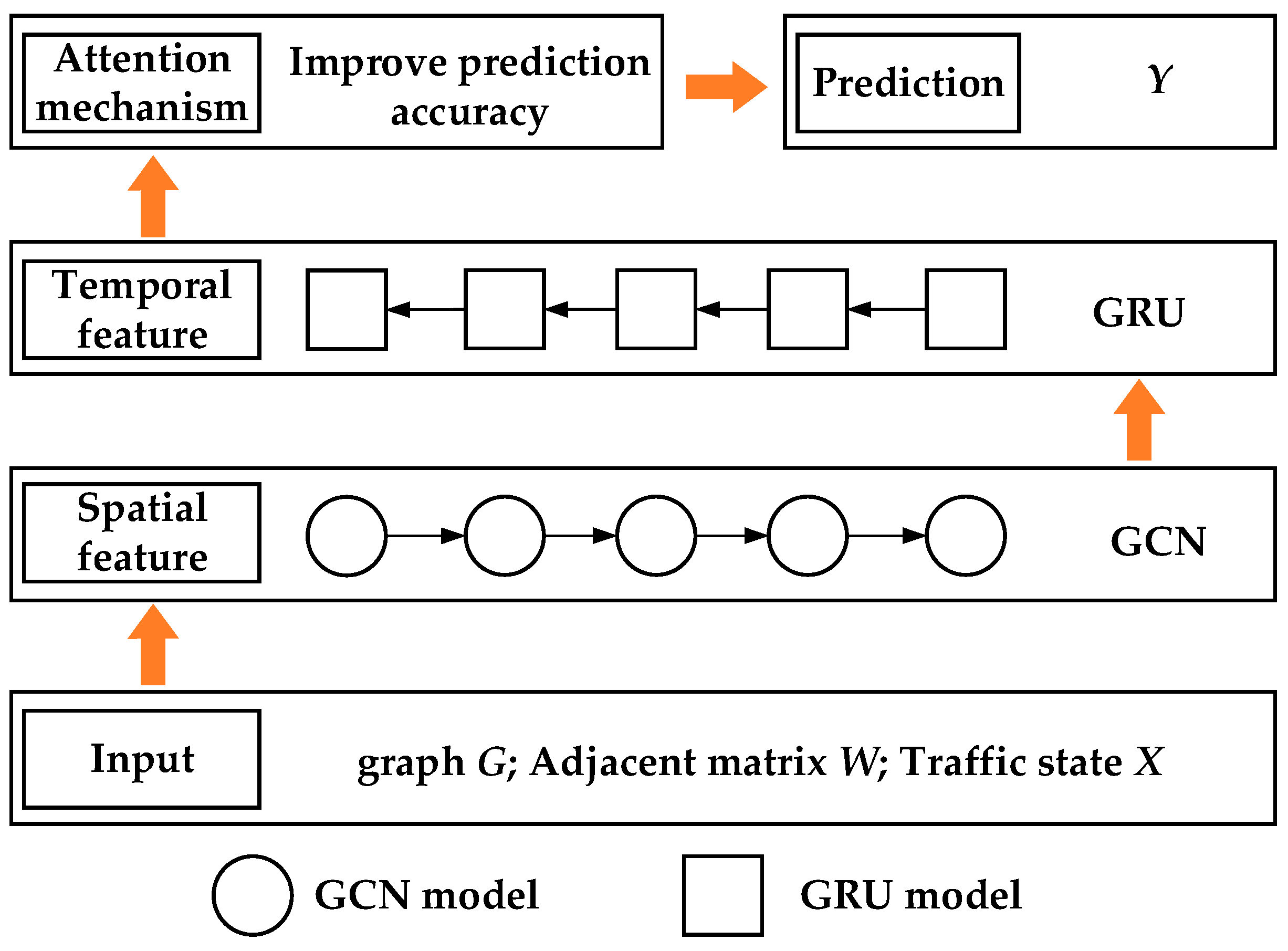

- Thirdly, exploiting the characteristics of MEC, this paper designs a traffic prediction model to analyze and evaluate the traffic state at an intersection in the V2X environment. The model combines a graph convolutional network (GCN) and a gated recurrent unit (GRU) to extract the spatial and temporal features of traffic data, respectively, and then uses a soft-attention mechanism to integrate the extracted features.
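The third contribution can be illustrated with a minimal NumPy sketch of a forward pass. It assumes a T-GCN-style design in which each GRU gate is computed by a graph convolution over the symmetrically normalized adjacency matrix $\tilde{D}^{-1/2}(A+I)\tilde{D}^{-1/2}$, and a soft-attention layer weights the hidden states of all time steps before a linear output layer. The class and function names, the single-layer graph convolution, the attention scoring form, and the random weights are illustrative assumptions, not the authors' implementation; training is omitted.

```python
import numpy as np


def normalized_adjacency(adj: np.ndarray) -> np.ndarray:
    """Return D^-1/2 (A + I) D^-1/2, the symmetric GCN normalization."""
    a_self = adj + np.eye(adj.shape[0])            # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_self.sum(axis=1))
    return a_self * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


class GCNGRUAttention:
    """Forward pass of a GCN-gated GRU with soft attention over time steps.

    Weights are random placeholders (hypothetical); no training is shown.
    """

    def __init__(self, adj: np.ndarray, in_dim: int, hidden_dim: int, seed: int = 0):
        rng = np.random.default_rng(seed)

        def init(*shape):
            return rng.normal(0.0, 0.1, size=shape)

        self.a_norm = normalized_adjacency(adj)
        self.hidden_dim = hidden_dim
        d = in_dim + hidden_dim
        # Graph-convolution weights for the update gate, reset gate and candidate state.
        self.w_u, self.w_r, self.w_c = init(d, hidden_dim), init(d, hidden_dim), init(d, hidden_dim)
        self.b_u, self.b_r, self.b_c = np.zeros(hidden_dim), np.zeros(hidden_dim), np.zeros(hidden_dim)
        # Soft-attention scoring parameters and output projection.
        self.w_att = init(hidden_dim, hidden_dim)
        self.v_att = init(hidden_dim)
        self.w_out = init(hidden_dim, 1)

    def graph_conv(self, x: np.ndarray, w: np.ndarray) -> np.ndarray:
        # Aggregate each vertex's neighbourhood, then project: A_norm @ X @ W.
        return self.a_norm @ x @ w

    def gru_step(self, x_t: np.ndarray, h: np.ndarray) -> np.ndarray:
        xh = np.concatenate([x_t, h], axis=1)                      # (N, in + hidden)
        u = sigmoid(self.graph_conv(xh, self.w_u) + self.b_u)      # update gate
        r = sigmoid(self.graph_conv(xh, self.w_r) + self.b_r)      # reset gate
        xrh = np.concatenate([x_t, r * h], axis=1)
        c = np.tanh(self.graph_conv(xrh, self.w_c) + self.b_c)     # candidate state
        return u * h + (1.0 - u) * c

    def forward(self, x_seq: np.ndarray):
        """x_seq: (T, N, in_dim) -> (prediction (N, 1), attention weights (T, N))."""
        _, n_vertices, _ = x_seq.shape
        h = np.zeros((n_vertices, self.hidden_dim))
        states = []
        for x_t in x_seq:                                          # temporal recurrence
            h = self.gru_step(x_t, h)
            states.append(h)
        hs = np.stack(states)                                      # (T, N, hidden)
        scores = np.tanh(hs @ self.w_att) @ self.v_att             # (T, N)
        scores = scores - scores.max(axis=0, keepdims=True)        # numerical stability
        alpha = np.exp(scores) / np.exp(scores).sum(axis=0, keepdims=True)  # softmax over time
        context = (alpha[..., None] * hs).sum(axis=0)              # (N, hidden)
        return context @ self.w_out, alpha


if __name__ == "__main__":
    # Hypothetical 4-vertex ring, e.g. the four approaches of one intersection.
    adj = np.array([[0, 1, 0, 1],
                    [1, 0, 1, 0],
                    [0, 1, 0, 1],
                    [1, 0, 1, 0]], dtype=float)
    model = GCNGRUAttention(adj, in_dim=2, hidden_dim=8)
    x_seq = np.random.default_rng(1).random((12, 4, 2))  # 12 steps, 4 vertices, 2 features
    pred, alpha = model.forward(x_seq)
    print(pred.shape, alpha.shape)  # (4, 1) (12, 4)
```

Computing the gates with graph convolutions, rather than running a GCN and a GRU in separate stages, is one common way to couple spatial and temporal extraction; a two-stage pipeline would be an equally valid reading of the text.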

2. Materials and Methods

2.1. Traffic State Perception

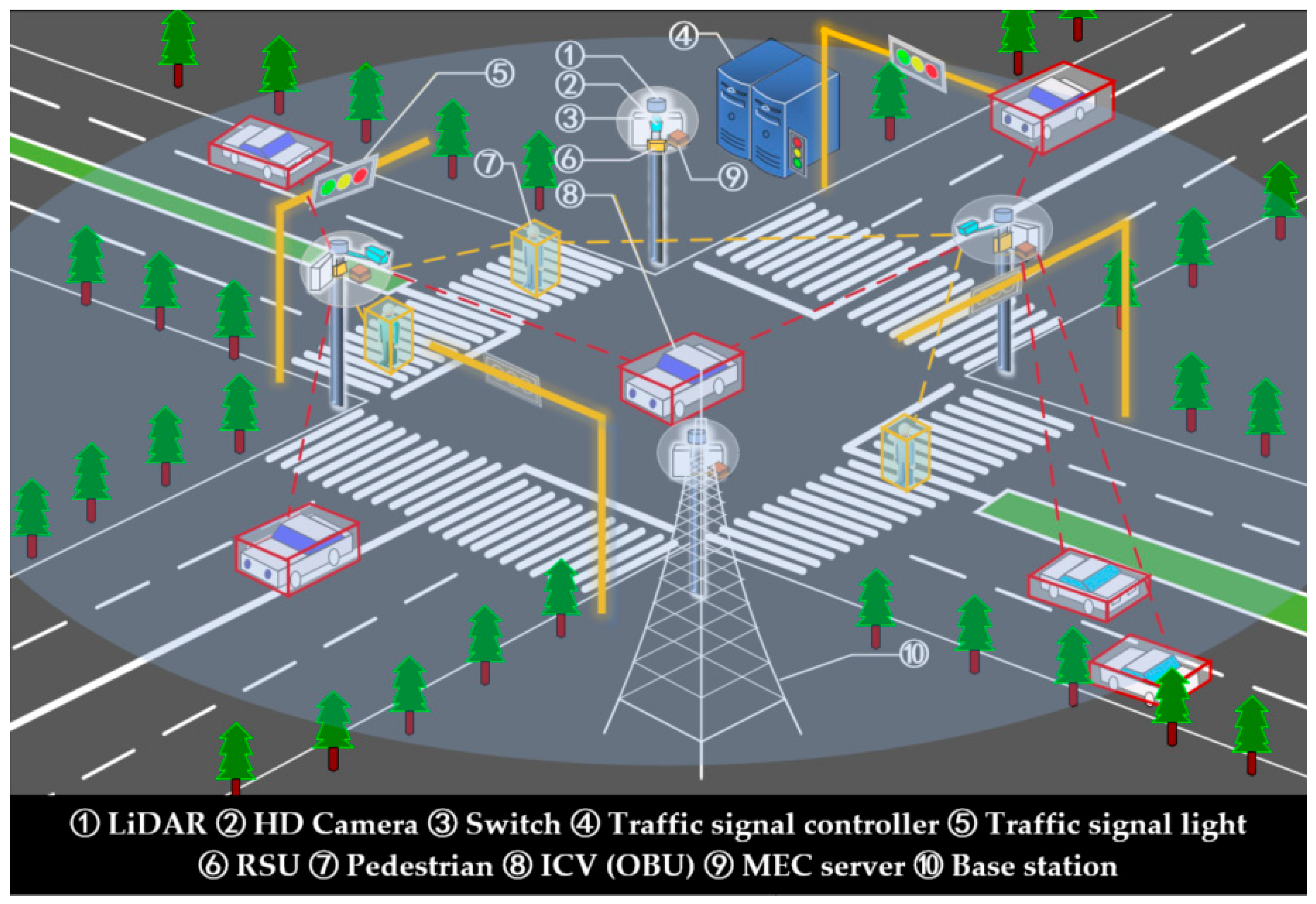

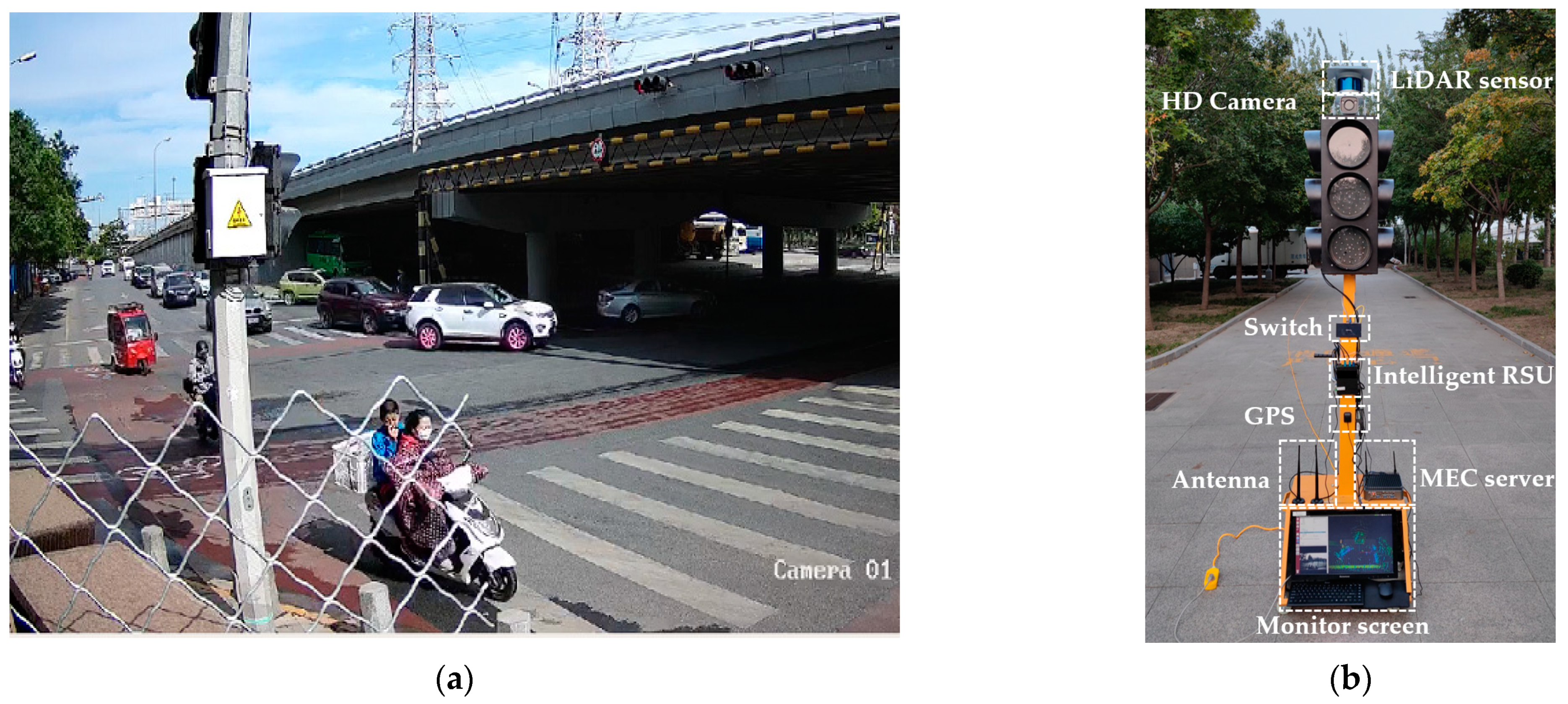

2.1.1. Traffic Perception System Based on V2X Communication

2.1.2. Traffic Perception Data

1. Data from Roadside Sensors
2. Data from OBU
3. Data from Traffic Signal Controller

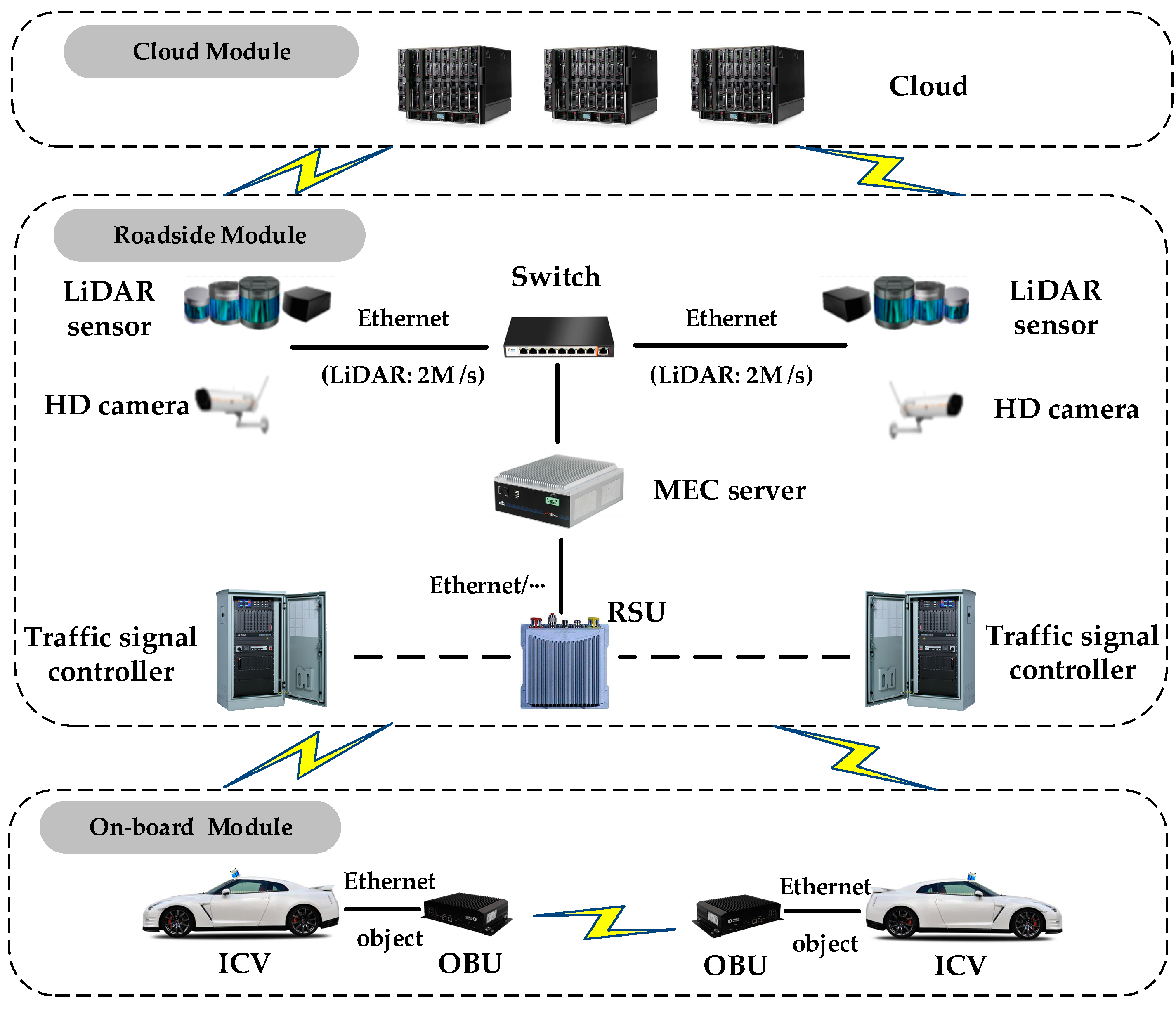

2.2. MEC Architecture

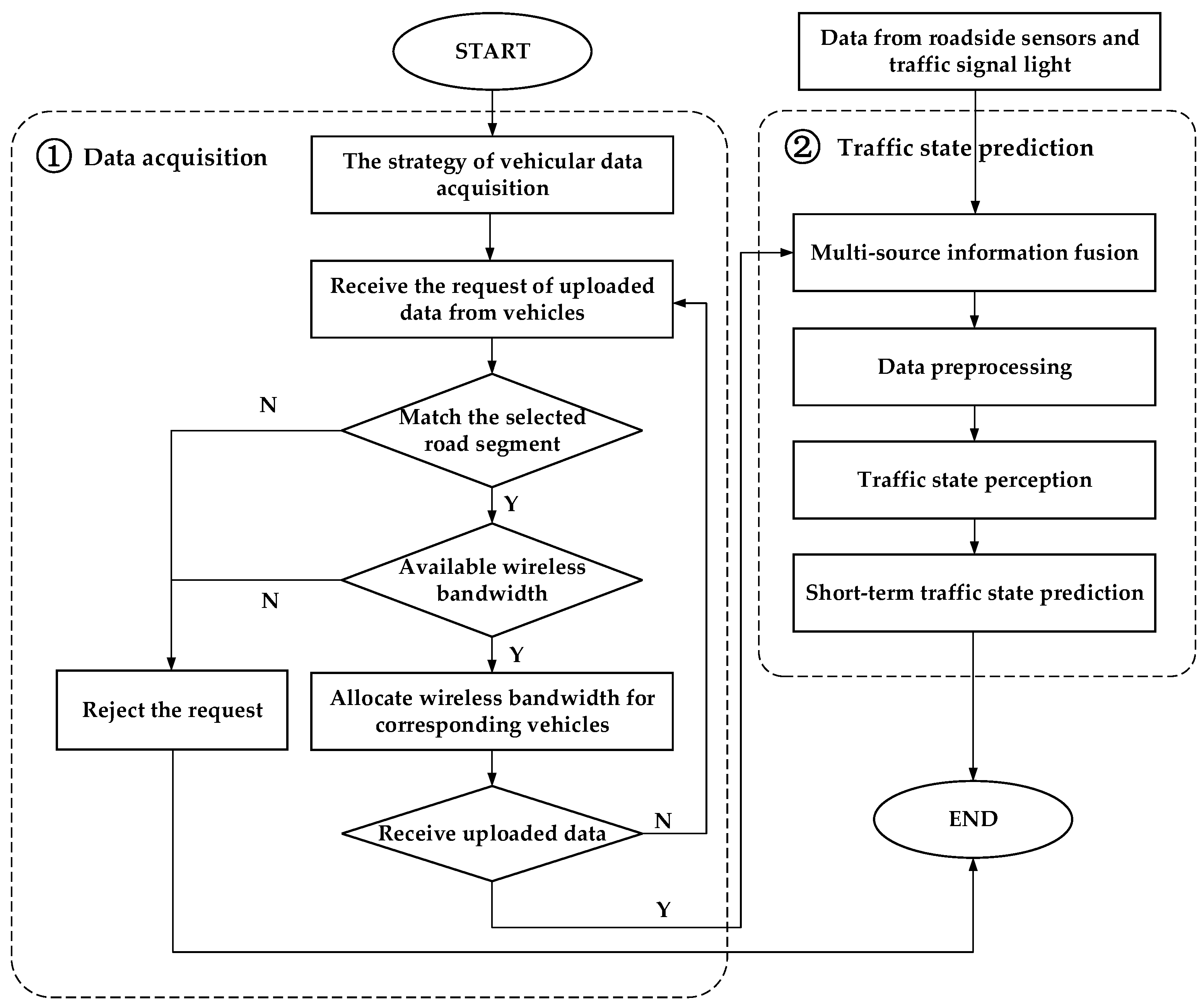

- Firstly, the MEC server decides whether a data-upload request can be accepted by listening to the messages broadcast by vehicles. These messages include the bandwidth required by the vehicle and the ID of the road section associated with the vehicle.

- The MEC server checks whether the ID of the corresponding section has been selected. If the ID does not match, the MEC server rejects the request; otherwise, it proceeds to the next step.

- The MEC server then checks whether the required bandwidth can be met. If not, the MEC server rejects the request; if so, the MEC server allocates bandwidth to the vehicle.

- Finally, the MEC server prepares to receive the uploaded vehicle data. If the data are uploaded successfully, the MEC server updates the allocated bandwidth of the corresponding road section; otherwise, the allocated bandwidth is not updated.
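The four upload-admission steps above can be sketched in Python as follows. Step 1 (listening to a vehicle's broadcast) is represented by an `UploadRequest` object carrying the required bandwidth and the section ID. The server-wide bandwidth budget `capacity`, the bandwidth unit, and all class, field, and method names are hypothetical; the text does not specify how the bandwidth budget is defined or when allocated bandwidth is released.

```python
from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class UploadRequest:
    """Contents of a vehicle's broadcast message, as described in the text."""
    vehicle_id: str
    section_id: str
    required_bandwidth: float  # unit not given in the text; Mbit/s assumed


@dataclass
class MECServer:
    selected_sections: set[str]  # section IDs this server accepts uploads for
    capacity: float              # hypothetical total bandwidth budget
    allocated: dict[str, float] = field(default_factory=dict)  # per-section allocation

    def free_bandwidth(self) -> float:
        return self.capacity - sum(self.allocated.values())

    def handle(self, req: UploadRequest, upload: Callable[[], bool]) -> str:
        # Step 2: the section ID must match a selected section.
        if req.section_id not in self.selected_sections:
            return "rejected: section mismatch"
        # Step 3: the requested bandwidth must fit in the remaining budget.
        if req.required_bandwidth > self.free_bandwidth():
            return "rejected: insufficient bandwidth"
        # Step 4: receive the data; record the allocation only if the upload succeeded.
        if upload():
            self.allocated[req.section_id] = (
                self.allocated.get(req.section_id, 0.0) + req.required_bandwidth
            )
            return "accepted"
        return "upload failed"


if __name__ == "__main__":
    server = MECServer(selected_sections={"S1"}, capacity=10.0)
    print(server.handle(UploadRequest("v1", "S2", 1.0), upload=lambda: True))
    print(server.handle(UploadRequest("v1", "S1", 4.0), upload=lambda: True))
    print(server.allocated)
```

Passing the upload as a callable means data are only received after both checks pass, mirroring the order of the steps.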

- Firstly, the MEC server fuses multi-source information.

- Then, the MEC server processes the raw vehicular data, which includes eliminating invalid data and deriving traffic state information from the vehicular data.

- Finally, using a short-term traffic state prediction model designed for this purpose, the MEC server predicts the traffic state of the intersection covered by the system.
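The first two processing steps above can be sketched in Python under stated assumptions: records from roadside sensors and OBUs are keyed by license plate, OBU values take precedence for V2X vehicles, and a record is treated as invalid when its speed is missing or outside a hypothetical 0 to 40 m/s range. The function names and the threshold are illustrative, and signal-controller data are omitted for brevity.

```python
from statistics import mean

MAX_SPEED_MPS = 40.0  # hypothetical plausibility threshold (about 144 km/h)


def fuse_records(roadside: dict, obu: dict) -> dict:
    """Merge per-vehicle records keyed by license plate.

    Vehicles reported by an OBU are flagged as V2X; OBU fields override
    roadside fields for the same plate (an assumed precedence rule).
    """
    fused = {plate: {**rec, "v2x": False} for plate, rec in roadside.items()}
    for plate, rec in obu.items():
        fused[plate] = {**fused.get(plate, {}), **rec, "v2x": True}
    return fused


def is_valid(rec: dict) -> bool:
    """Treat missing or physically implausible speeds as invalid data."""
    speed = rec.get("speed")
    return speed is not None and 0.0 <= speed <= MAX_SPEED_MPS


def sense_state(fused: dict) -> dict:
    """Derive simple traffic state indicators from the valid vehicle records."""
    valid = [rec for rec in fused.values() if is_valid(rec)]
    return {
        "vehicle_count": len(valid),
        "average_speed": mean(rec["speed"] for rec in valid) if valid else None,
    }


if __name__ == "__main__":
    # Speeds (m/s) borrowed from the example vehicle table; "Z 00000" is a hypothetical bad record.
    roadside = {"N 46735": {"speed": 1.26}, "U B3957": {"speed": 4.98}, "Z 00000": {"speed": None}}
    obu = {"N C5530": {"speed": 0.10, "acceleration": -0.06},
           "A V3210": {"speed": 4.32, "acceleration": 0.33}}
    print(sense_state(fuse_records(roadside, obu)))
```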

2.3. The Model of Short-Term Traffic State Prediction

2.3.1. Graph Construction of Intersection Network

2.3.2. Spatial Feature Extraction

2.3.3. Temporal Feature Extraction

2.3.4. Attention Mechanism Module

3. Experimental Results and Discussion

3.1. Field Test and Data Analysis

3.2. Evaluation Index

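- (1) Root mean squared error (RMSE):

$$\mathrm{RMSE}=\sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i-\hat{y}_i\right)^2}$$

- (2) Mean absolute error (MAE):

$$\mathrm{MAE}=\frac{1}{n}\sum_{i=1}^{n}\left|y_i-\hat{y}_i\right|$$

- (3) Accuracy

where $y_i$ and $\hat{y}_i$ represent the real and predicted traffic state values, respectively, and $n$ is the number of samples.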
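The evaluation indices can be computed as below. RMSE and MAE use their standard definitions; for Accuracy, this sketch assumes the definition $1-\lVert Y-\hat{Y}\rVert_F/\lVert Y\rVert_F$ commonly used in graph-based traffic forecasting, because the formula for Accuracy is not given here. The function names are hypothetical.

```python
import math
from collections.abc import Sequence


def _check(y_true: Sequence[float], y_pred: Sequence[float]) -> None:
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    _check(y_true, y_pred)
    return math.sqrt(sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    _check(y_true, y_pred)
    return sum(abs(y - p) for y, p in zip(y_true, y_pred)) / len(y_true)


def accuracy(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Assumed definition: 1 - ||y - y_hat|| / ||y|| (Euclidean/Frobenius norm)."""
    _check(y_true, y_pred)
    err = math.sqrt(sum((y - p) ** 2 for y, p in zip(y_true, y_pred)))
    ref = math.sqrt(sum(y ** 2 for y in y_true))
    return 1.0 - err / ref


if __name__ == "__main__":
    observed = [1.38, 0.83, 3.44, 2.85]   # average speeds (m/s) from the example traffic state table
    predicted = [1.50, 0.90, 3.20, 2.70]  # hypothetical model output
    print(rmse(observed, predicted), mae(observed, predicted), accuracy(observed, predicted))
```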

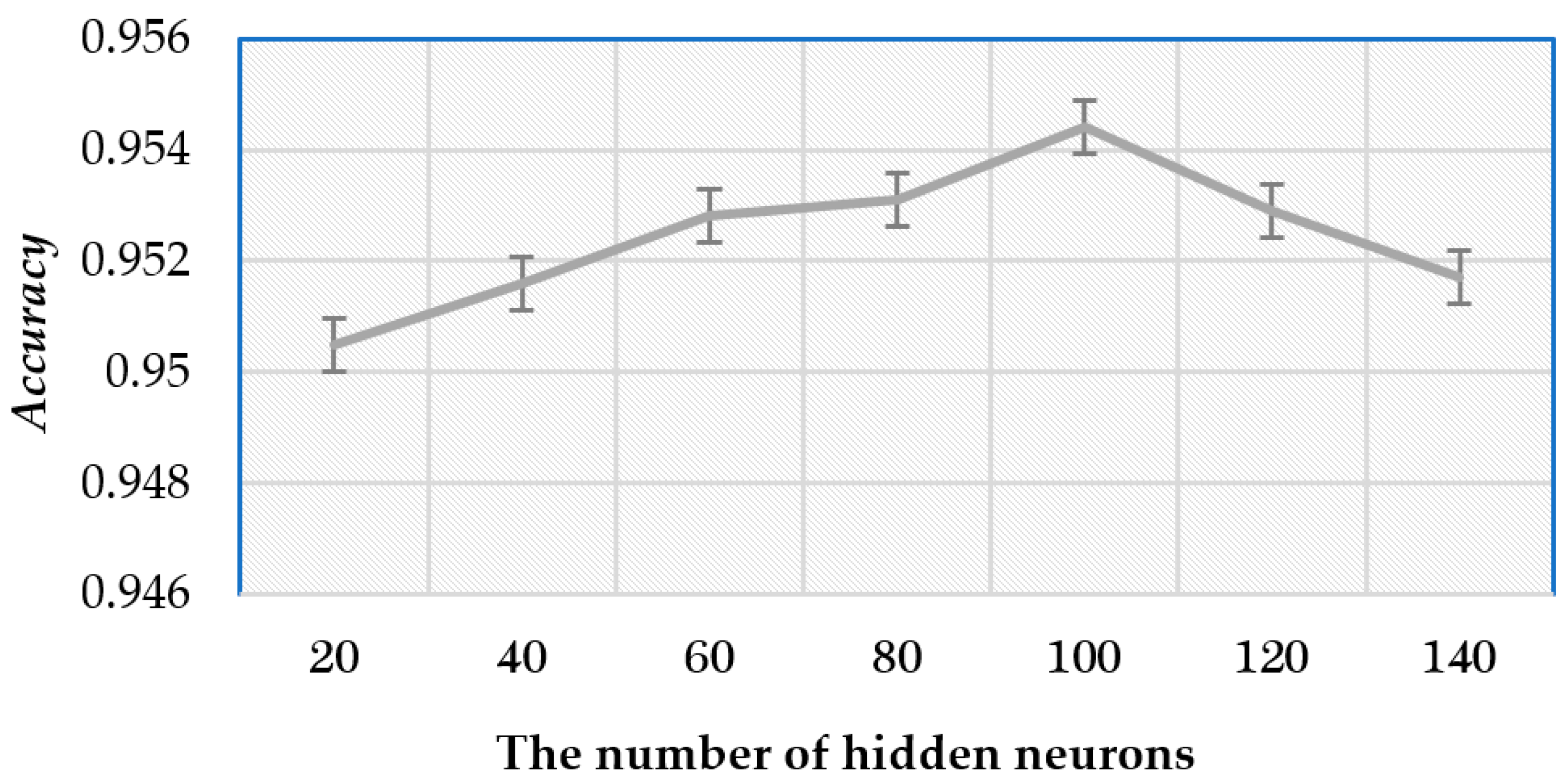

3.3. Parameter Settings

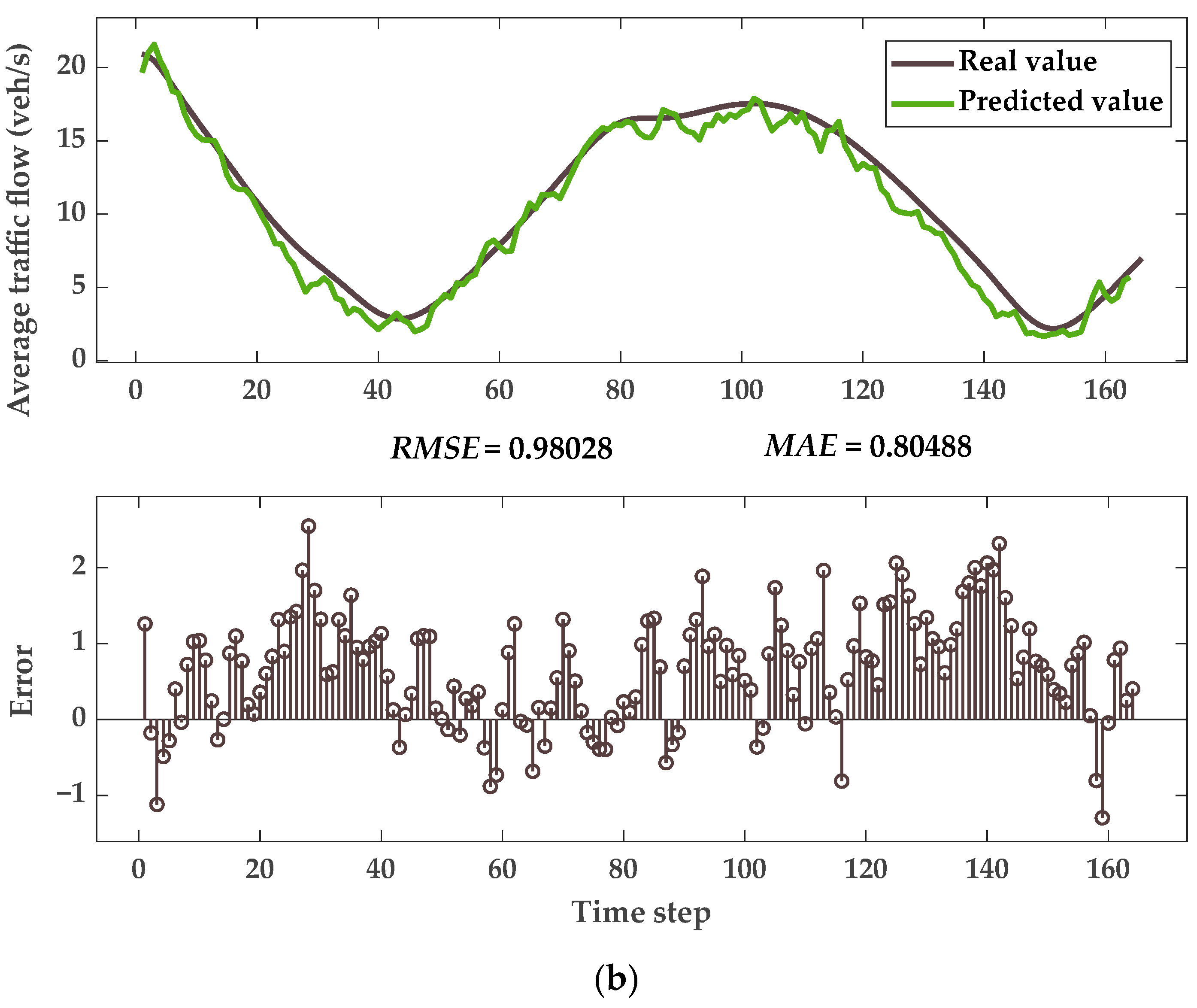

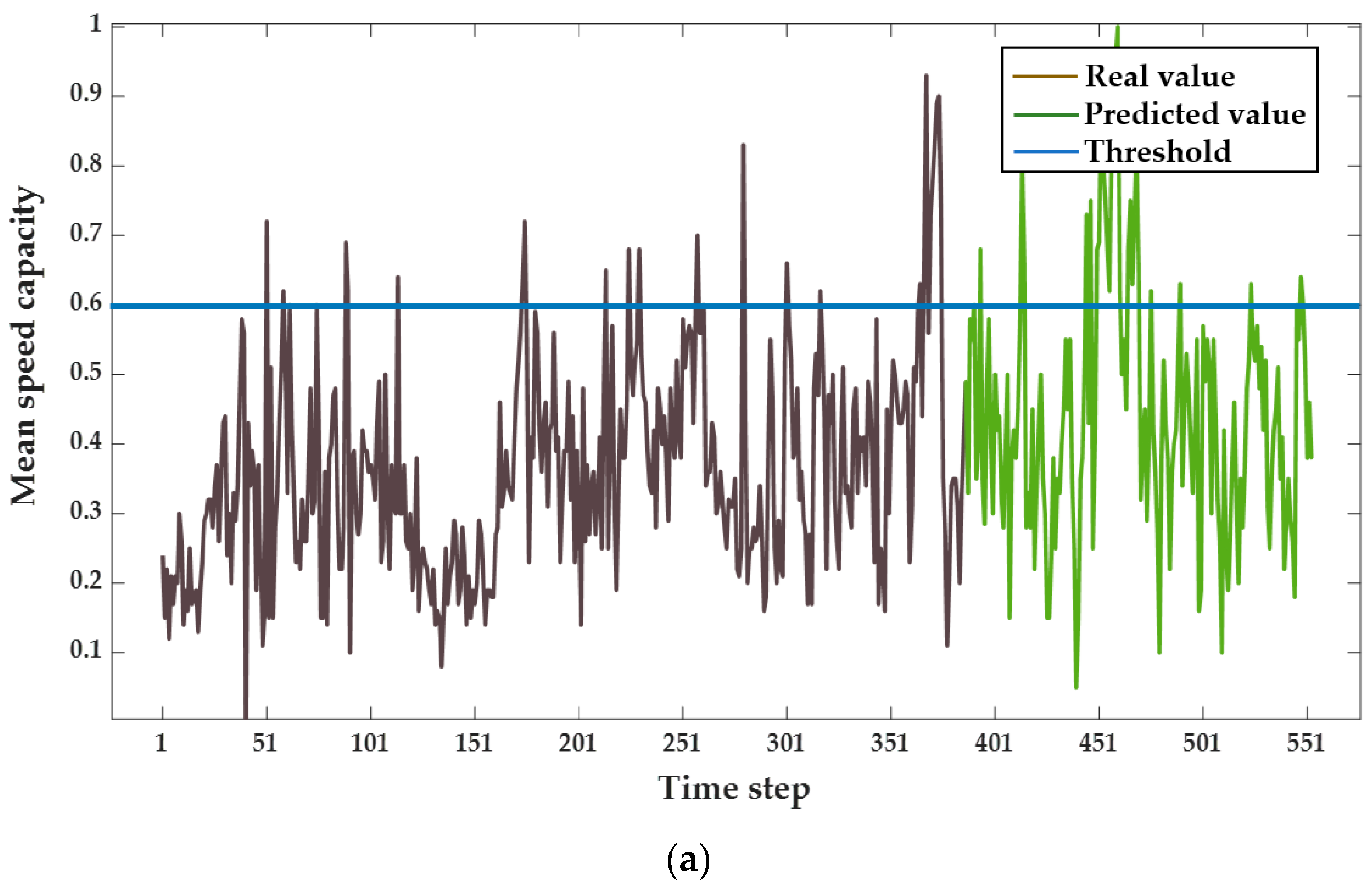

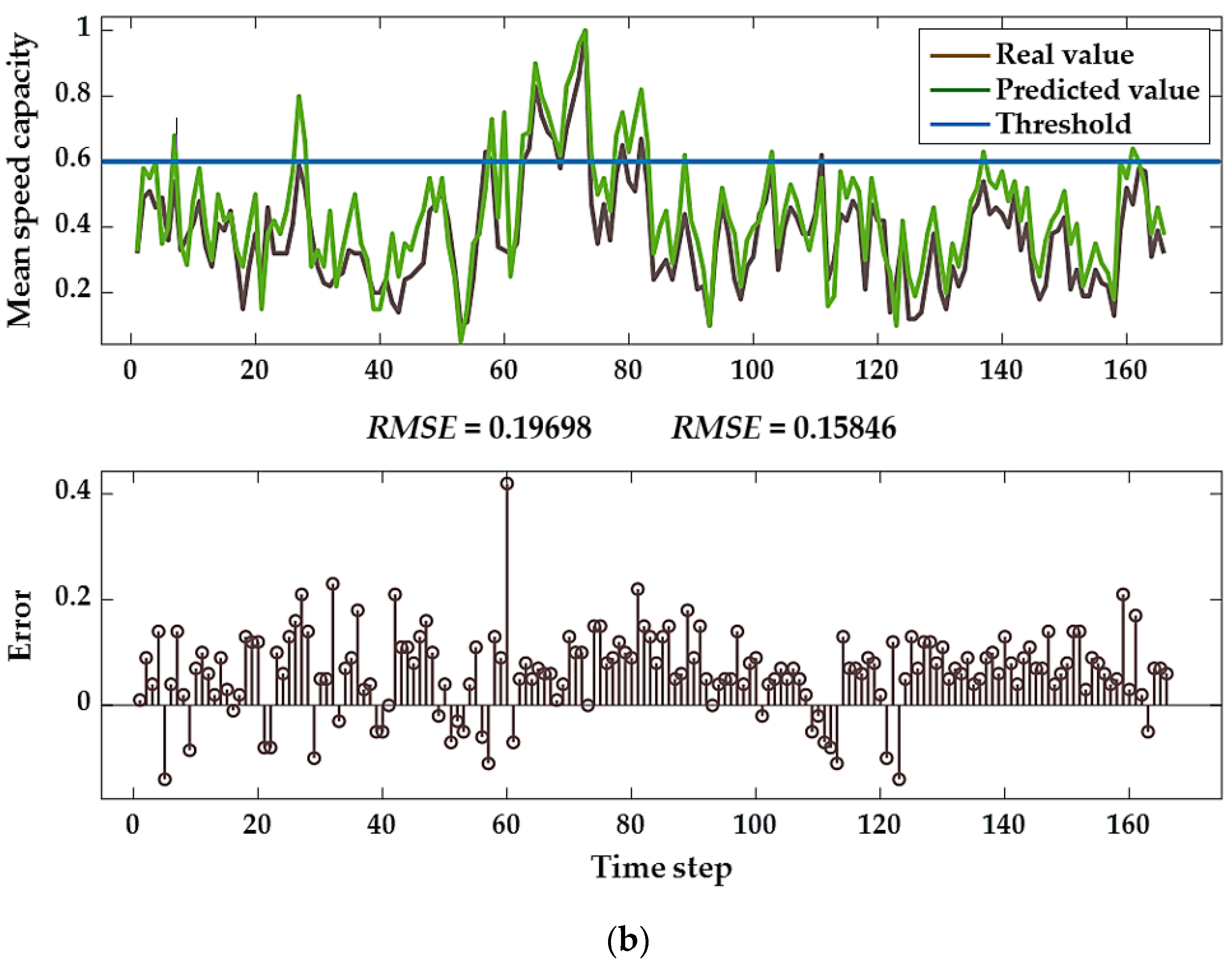

3.4. Performance of Prediction Model

3.5. Comparison Experiment Results

4. Conclusions

- (1) This paper fuses multi-source information from intelligent OBUs, roadside sensors, and traffic signal controllers to accurately perceive the traffic state through V2X communication.

- (2) The prediction model considers the spatiotemporal dependence of all vehicles at the vertices of the intersection network. The proposed model effectively extracts vertex features from the intersection, which greatly improves its prediction accuracy. Comparative experiments based on data acquired through the MEC-assisted V2X network demonstrate the effectiveness of the proposed model: GCN–GRU achieved the lowest MAE and RMSE and the highest accuracy of all compared models at every prediction horizon.

- (3) This paper mainly analyzes the traffic operation state of a single intersection, which limits the use of the proposed model in extended scenarios and may challenge its adaptability. In future research, we will study traffic state prediction for regional intersections, exploring the efficiency and effectiveness of data support and implementation schemes in cooperative vehicle infrastructure systems (CVIS), as well as the adaptability of the model in these scenarios.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Data Sources | Data Types |
|---|---|
| Roadside sensors | Vehicle information: license plate number, latitude, longitude, speed, horizontal distance, heading angle, etc. |
| | Traffic state information: average vehicular speed, average traffic flow, average queue length, stop line location, etc. |
| OBU | Timestamp, latitude, longitude, speed, acceleration, license plate number, wheel speed, steering angle, braking status, etc. |
| Traffic signal controller | Signal cycle, signal phase, traffic light color, remaining green time, etc. |

| V2X Communication | License Plate | Longitude | Latitude | Rev (r/s) | Steering Angle (°) | Speed (m/s) | Acceleration (m/s²) | Horizontal Distance (m) | Heading Angle (°) |
|---|---|---|---|---|---|---|---|---|---|
| Yes | N C5530 | 116.201054 | 39.923663 | 0.05 | 15 | 0.10 | −0.06 | 5.82 | 7.30 |
| No | N 46735 | 116.200968 | 39.923662 | N/A | N/A | 1.26 | N/A | 11.71 | 6.52 |
| ︙ | ︙ | ︙ | ︙ | ︙ | ︙ | ︙ | ︙ | ︙ | ︙ |
| Yes | A V3210 | 116.2011 | 39.923624 | 2.4 | 43 | 4.32 | 0.33 | 20.15 | 13.49 |
| No | U B3957 | 116.201055 | 39.9236244 | N/A | N/A | 4.98 | N/A | 12.43 | 8.03 |

| Time Stamp | Traffic Flow (veh/s) | Average Speed (m/s) | Average Queue Length (m) | Signal Cycle (s) | Signal Light (E→W) | Signal Remaining Time (s) |
|---|---|---|---|---|---|---|
| 1609232611 | 5 | 1.38 | 6.80 | 105 | Red | 10 |
| 1609232612 | 7 | 0.83 | 14.80 | 105 | Red | 9 |
| ︙ | ︙ | ︙ | ︙ | ︙ | ︙ | ︙ |
| 1609234429 | 13 | 3.44 | 0 | 105 | Green | 5 |
| 1609234430 | 11 | 2.85 | 0 | 105 | Green | 4 |

| Number of Hidden Neurons | RMSE | MAE | Accuracy |
|---|---|---|---|
| 20 | 2.8942 | 1.9852 | 0.9505 |
| 40 | 2.7863 | 1.9723 | 0.9516 |
| 60 | 2.7021 | 1.9623 | 0.9528 |
| 80 | 2.6967 | 1.9483 | 0.9531 |
| 100 | 2.6547 | 1.9372 | 0.9544 |
| 120 | 2.7654 | 1.9521 | 0.9529 |
| 140 | 2.7735 | 1.9652 | 0.9517 |

| Time | Evaluation Index | GCN–GRU | LSTM | RNN | CNN | BP |
|---|---|---|---|---|---|---|
| 5 min | MAE | 1.0002 | 1.9823 | 3.5521 | 4.3511 | 3.8552 |
| | RMSE | 1.2354 | 2.9940 | 5.3215 | 6.5362 | 4.5113 |
| | Accuracy | 0.949 | 0.832 | 0.775 | 0.682 | 0.751 |
| 10 min | MAE | 0.9303 | 1.9823 | 3.3241 | 4.3012 | 3.8463 |
| | RMSE | 1.1532 | 2.9940 | 4.9215 | 6.4621 | 4.4963 |
| | Accuracy | 0.954 | 0.832 | 0.779 | 0.682 | 0.758 |
| 15 min | MAE | 0.8049 | 1.9838 | 3.4843 | 4.2984 | 3.8466 |
| | RMSE | 0.9803 | 2.9843 | 5.0122 | 6.4551 | 4.4985 |
| | Accuracy | 0.958 | 0.845 | 0.780 | 0.671 | 0.756 |
| 30 min | MAE | 0.7842 | 1.8856 | 3.5012 | 4.3123 | 3.8512 |
| | RMSE | 0.9053 | 2.7650 | 5.1230 | 6.5010 | 4.5013 |
| | Accuracy | 0.967 | 0.883 | 0.789 | 0.663 | 0.758 |

| Time | Evaluation Index | GCN–GRU | LSTM | RNN | CNN | BP |
|---|---|---|---|---|---|---|
| 5 min | MAE | 0.2911 | 0.5451 | 0.9528 | 1.4213 | 0.9984 |
| | RMSE | 0.2935 | 0.6654 | 1.0981 | 1.7520 | 1.2135 |
| | Accuracy | 0.965 | 0.852 | 0.781 | 0.625 | 0.773 |
| 10 min | MAE | 0.2133 | 0.5431 | 0.9413 | 1.5312 | 0.9988 |
| | RMSE | 0.2465 | 0.6641 | 1.0005 | 1.7640 | 1.2141 |
| | Accuracy | 0.967 | 0.864 | 0.788 | 0.631 | 0.771 |
| 15 min | MAE | 0.1835 | 0.5394 | 0.9641 | 1.4812 | 0.9985 |
| | RMSE | 0.2004 | 0.6604 | 1.2154 | 1.7024 | 1.2031 |
| | Accuracy | 0.966 | 0.865 | 0.791 | 0.640 | 0.773 |
| 30 min | MAE | 0.1585 | 0.5388 | 0.9721 | 1.5531 | 0.9971 |
| | RMSE | 0.1970 | 0.6541 | 1.3412 | 1.7233 | 1.1998 |
| | Accuracy | 0.965 | 0.859 | 0.799 | 0.627 | 0.772 |

| | LSTM | RNN | CNN | BP | GCN–GRU |
|---|---|---|---|---|---|
| Training time | 183.2 s | 105.4 s | 309.7 s | 123.4 s | 112.3 s |
| Prediction time | 1.353 s | 0.932 s | 2.332 s | 1.234 s | 0.995 s |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, P.; Liu, X.; Wang, Y.; Wang, T.; Zhang, J. Short-Term Traffic State Prediction Based on Mobile Edge Computing in V2X Communication. Appl. Sci. 2021, 11, 11530. https://doi.org/10.3390/app112311530
