A Lightweight Pathological Gait Recognition Approach Based on a New Gait Template in Side-View and Improved Attention Mechanism

Abstract

1. Introduction

2. Related Work

- By analyzing the advantages and disadvantages of the gait energy image (GEI), gait entropy image (GEnI), and active energy image (AEI), along with the relationship between their generation principles and abnormal gait characteristics, we design a comprehensive gait template that is robust and contains rich gait information.

- We propose an improved convolutional block attention module (ICBAM) that replaces the ordinary convolution in the spatial attention module with depthwise separable convolution (DSC) and connects it in parallel to capture key features and richer discriminative information.

- We construct an abnormal gait dataset that covers not only the festinating and shuffling gaits of Parkinson’s disease, but also the pathological gaits of hemiplegia and spastic paraplegia.

- We use a lightweight deep learning model, NSVGT-ICBAM-FACN, to classify pathological gait. Ablation studies, attention-module comparison experiments, and recognition results on the self-constructed abnormal gait dataset and the GAIT-IST dataset show that the method achieves higher accuracy than the compared methods (98.43% and 98.69%, respectively) while keeping the model lightweight (about 3 million parameters).
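The three base templates named in the first contribution have standard definitions in the cited literature [16,20,21], and a small sketch can make their differences concrete. The pure-Python example below assumes each frame is a binary silhouette stored as a nested list, that one gait cycle has already been segmented, and that the AEI is taken as the mean absolute difference between consecutive frames. The function names are illustrative and do not come from the paper. The fused template (FEI) is not reproduced here, because its fusion weights are not given in this section.

```python
import math


def gait_energy_image(frames):
    """GEI: pixel-wise mean of the binary silhouettes in one gait cycle."""
    n = len(frames)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(f[r][c] for f in frames) / n for c in range(cols)]
            for r in range(rows)]


def binary_entropy(p):
    """Shannon entropy (in bits) of a pixel that is foreground with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a pixel that never changes carries no uncertainty
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def gait_entropy_image(frames):
    """GEnI: per-pixel entropy, using the GEI value as foreground probability."""
    return [[binary_entropy(p) for p in row]
            for row in gait_energy_image(frames)]


def active_energy_image(frames):
    """AEI (assumed form): mean absolute difference of consecutive frames."""
    n = len(frames) - 1
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(abs(frames[t + 1][r][c] - frames[t][r][c]) for t in range(n)) / n
             for c in range(cols)]
            for r in range(rows)]


# Hypothetical 2x2 silhouettes over four frames (not data from the paper).
frames = [
    [[1, 0], [1, 1]],
    [[1, 0], [0, 1]],
    [[1, 1], [0, 1]],
    [[1, 1], [1, 1]],
]

print(gait_energy_image(frames))    # static pixels stay at 1.0, moving ones at 0.5
print(gait_entropy_image(frames))   # entropy peaks where the GEI equals 0.5
print(active_energy_image(frames))  # non-zero only where the silhouette changes
```

In this toy cycle the two moving pixels have the same GEI and GEnI values but different AEI values (1/3 and 2/3), because the AEI responds to how often a pixel changes rather than to how long it is occupied. That difference is one reason the templates can carry complementary information.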

3. Materials and Methods

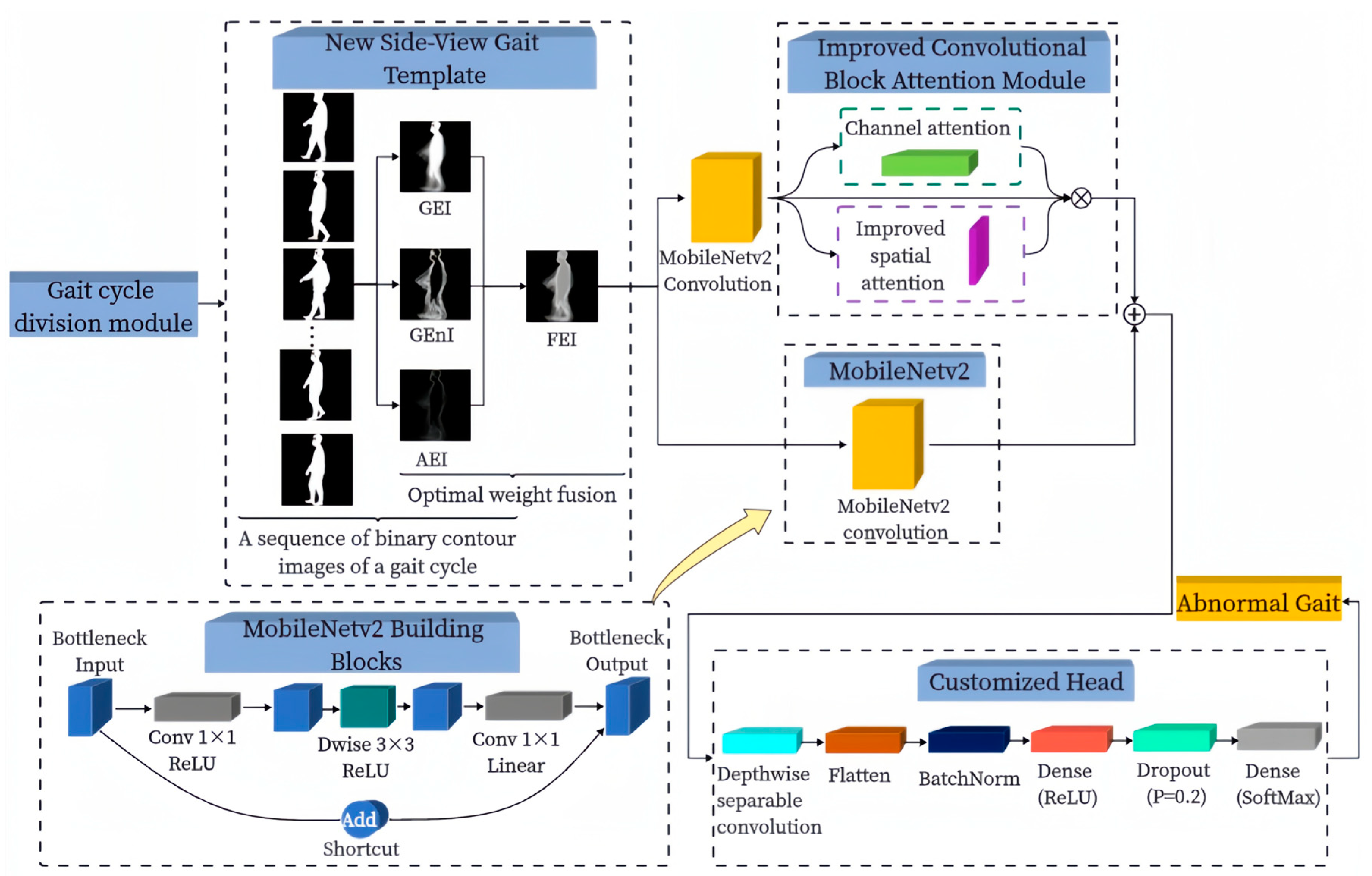

3.1. Overall Architecture of the Proposed Algorithm

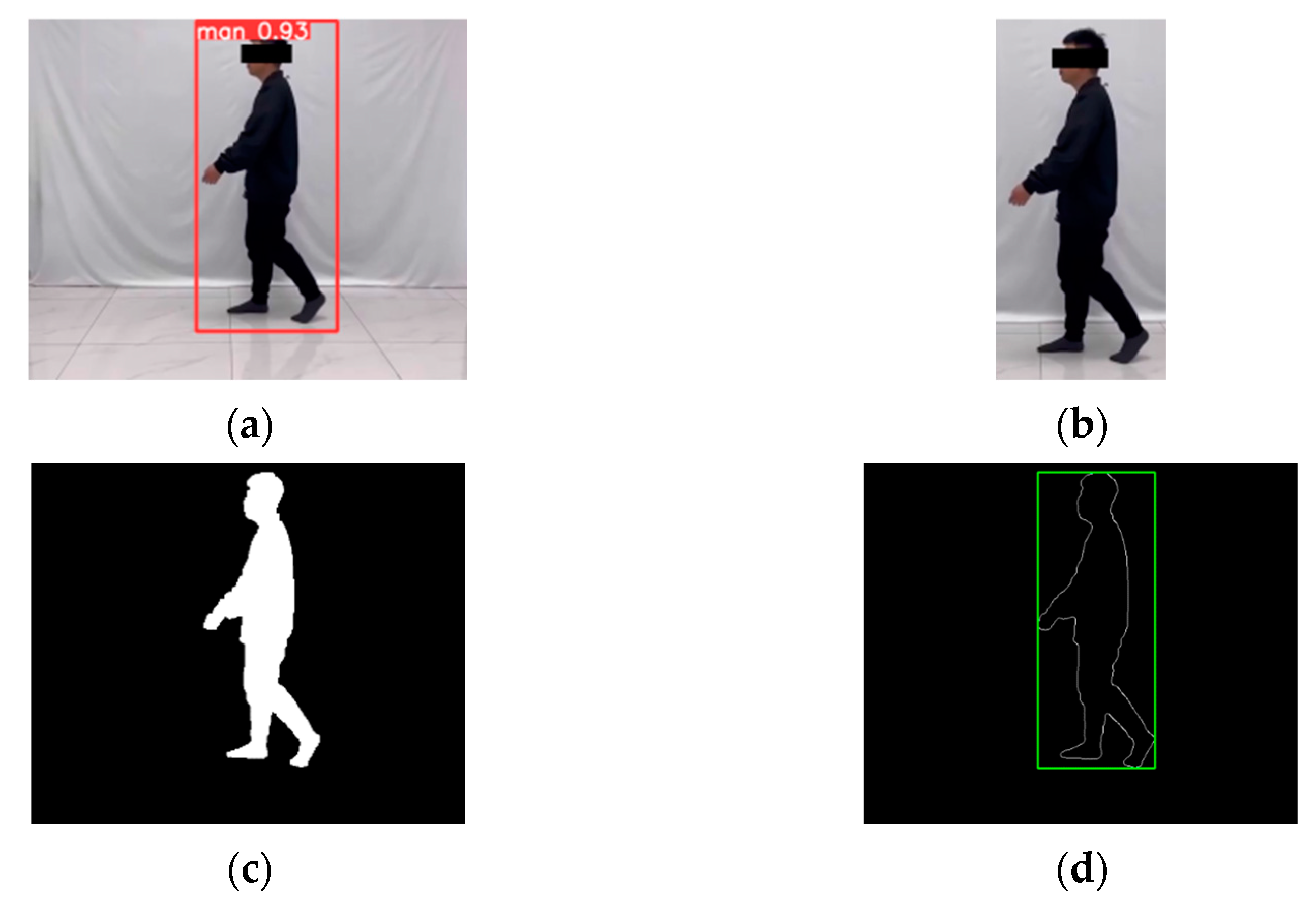

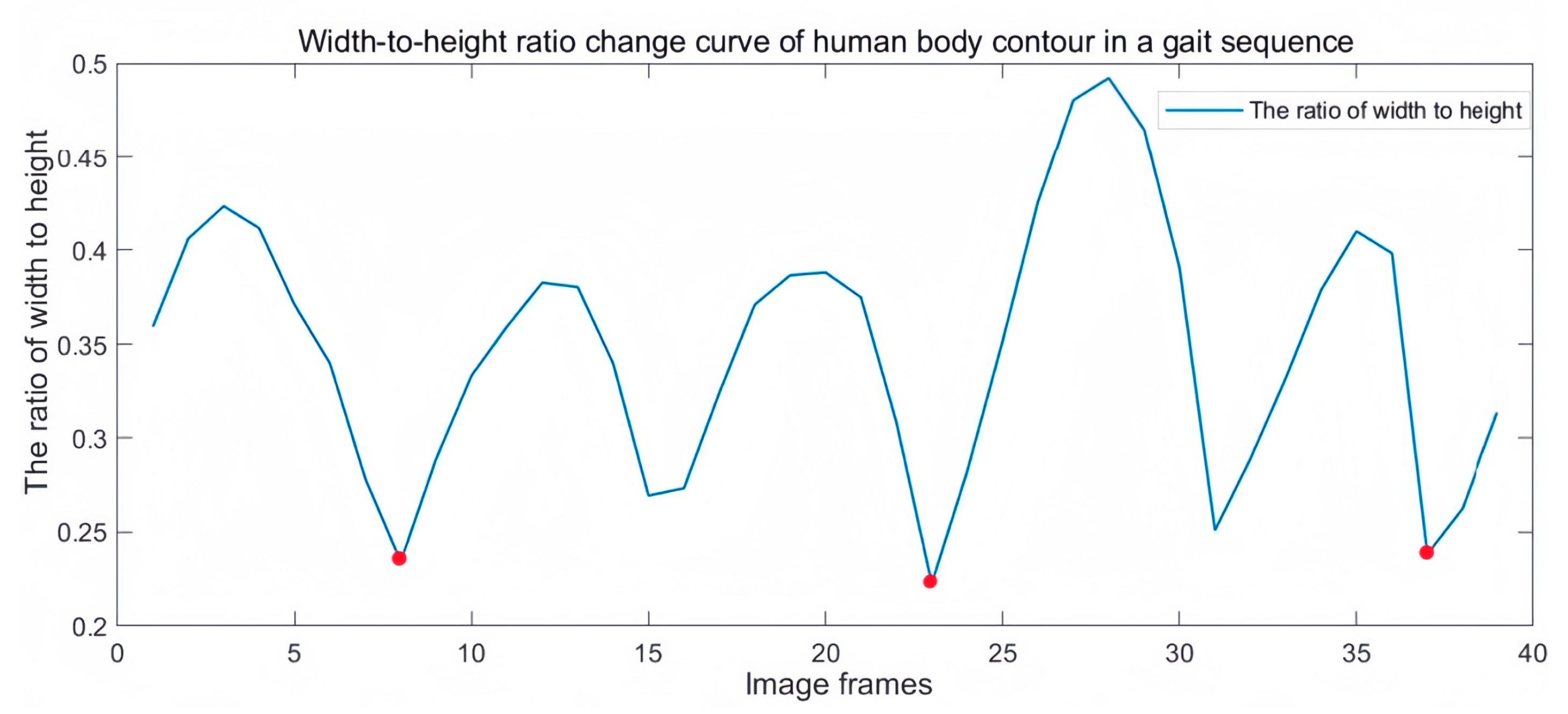

3.2. Gait Cycle Division Module

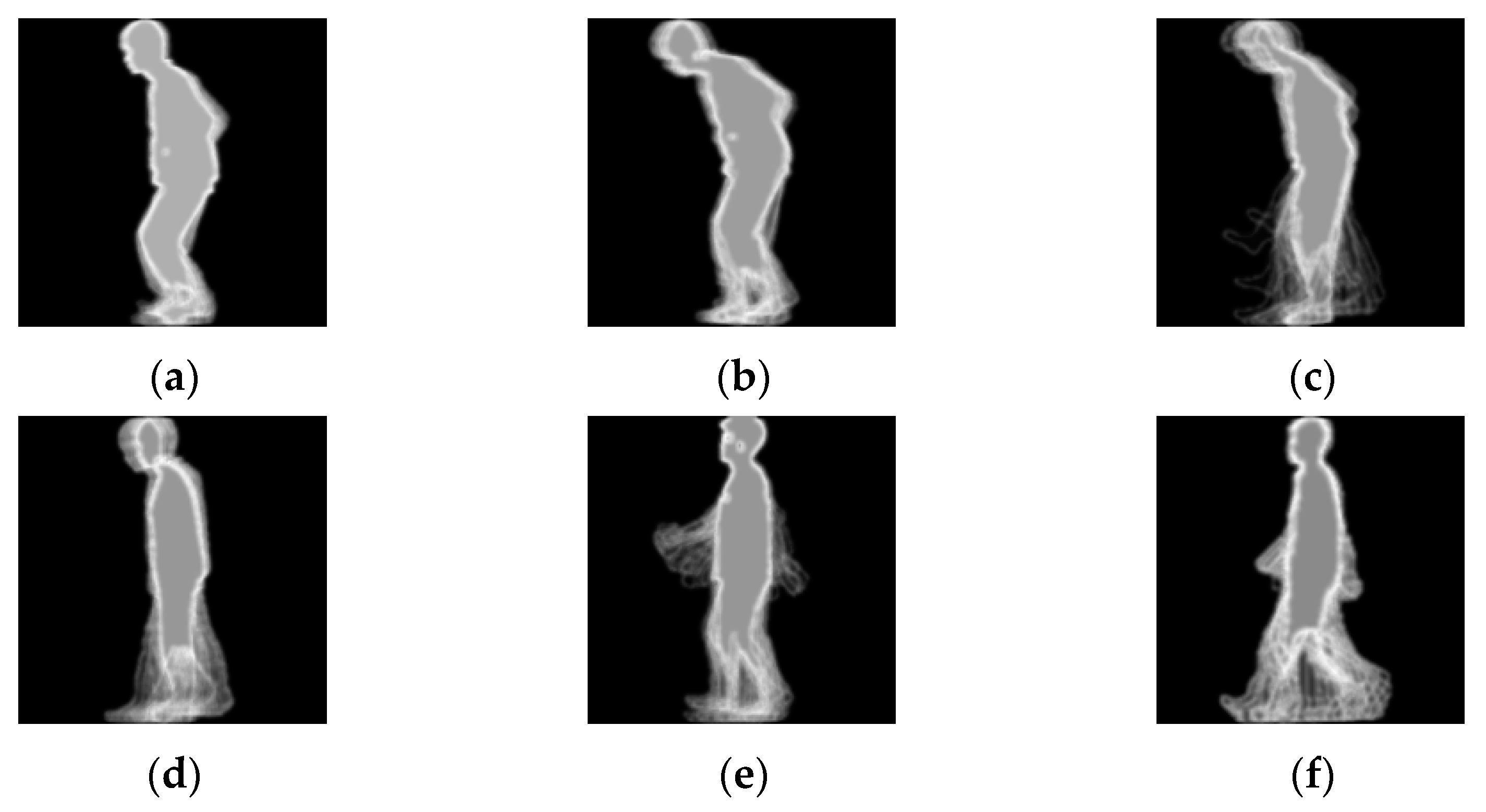

3.3. New Gait Template

3.3.1. Gait Energy Image (GEI)

3.3.2. Gait Entropy Image (GEnI)

3.3.3. Active Energy Image (AEI)

3.3.4. Batch Gradient Descent (BGD)

3.3.5. Fusion Energy Image (FEI)

3.4. NSVGT-ICBAM-FACN

3.5. MobileNetV2

3.6. Improved Convolutional Block Attention Module

3.7. Transfer Learning

4. Experiment and Result Analysis

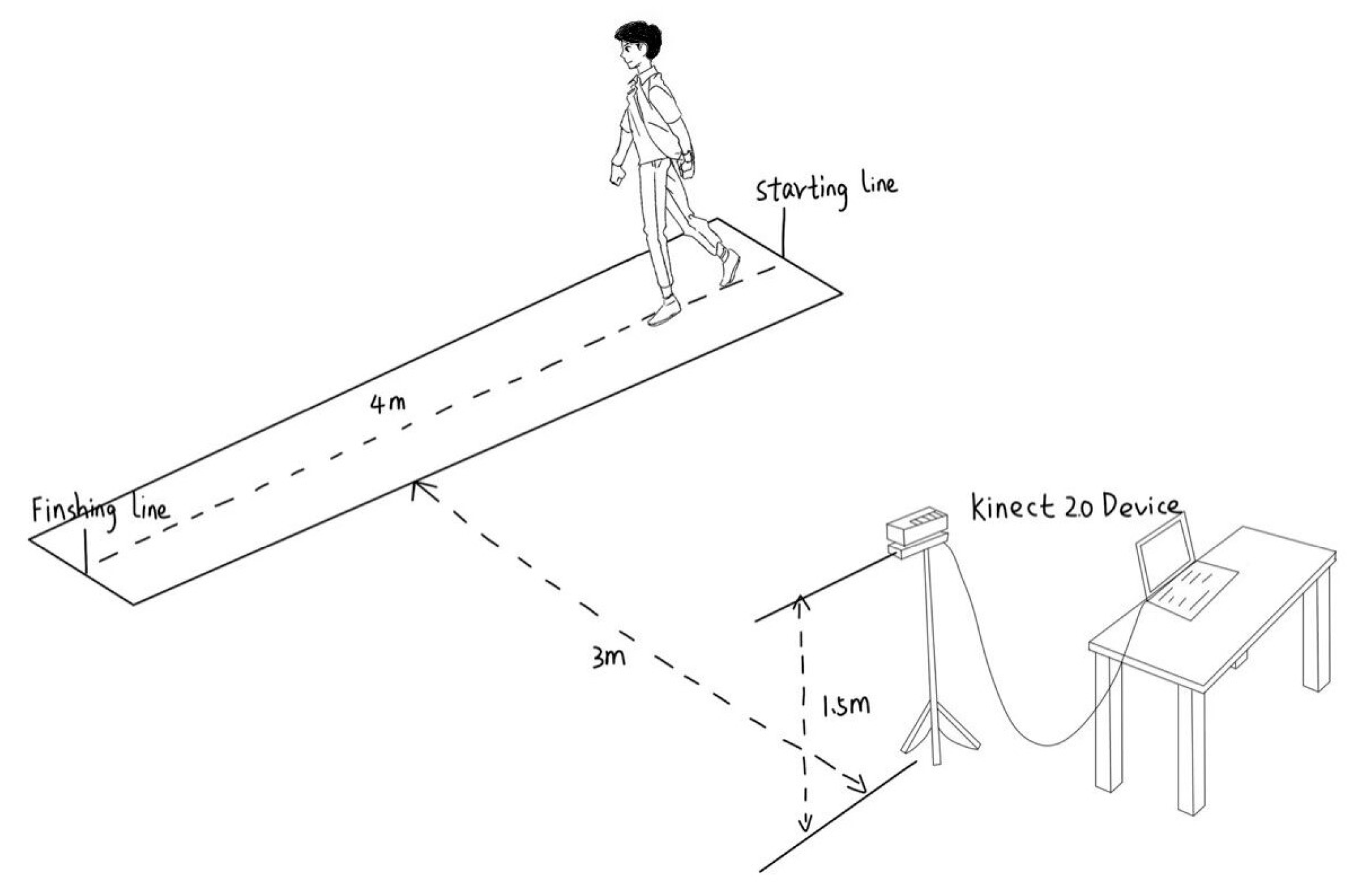

4.1. Dataset Construction

4.1.1. Characterization of the Five Gait Types

4.1.2. Experimental Scheme

4.2. Evaluation Indicators

4.3. Settings

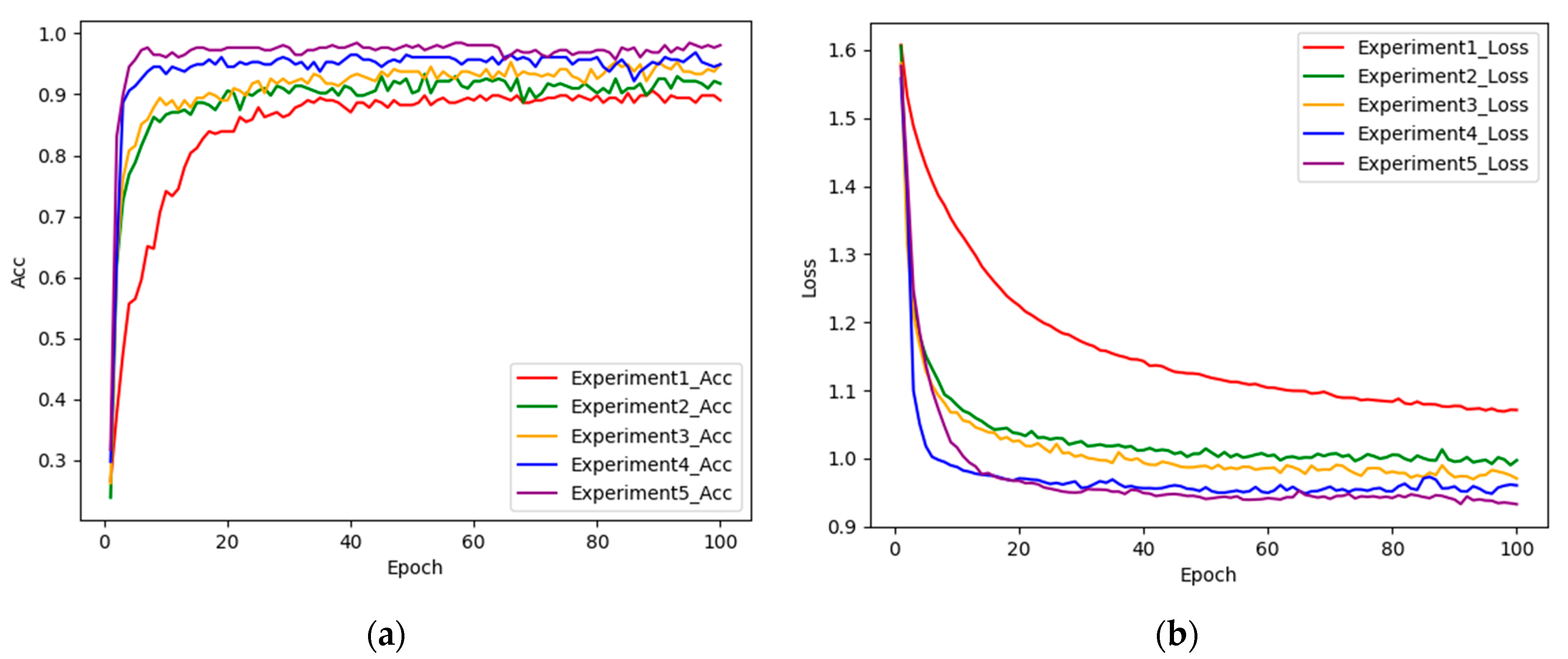

4.4. Ablation Study

4.5. Comparative Performance Experiments with Attention Modules

4.6. Comparative Experiments on the Validity of Different Components in the New Gait Template

4.7. Comparison with State-of-the-Art Models

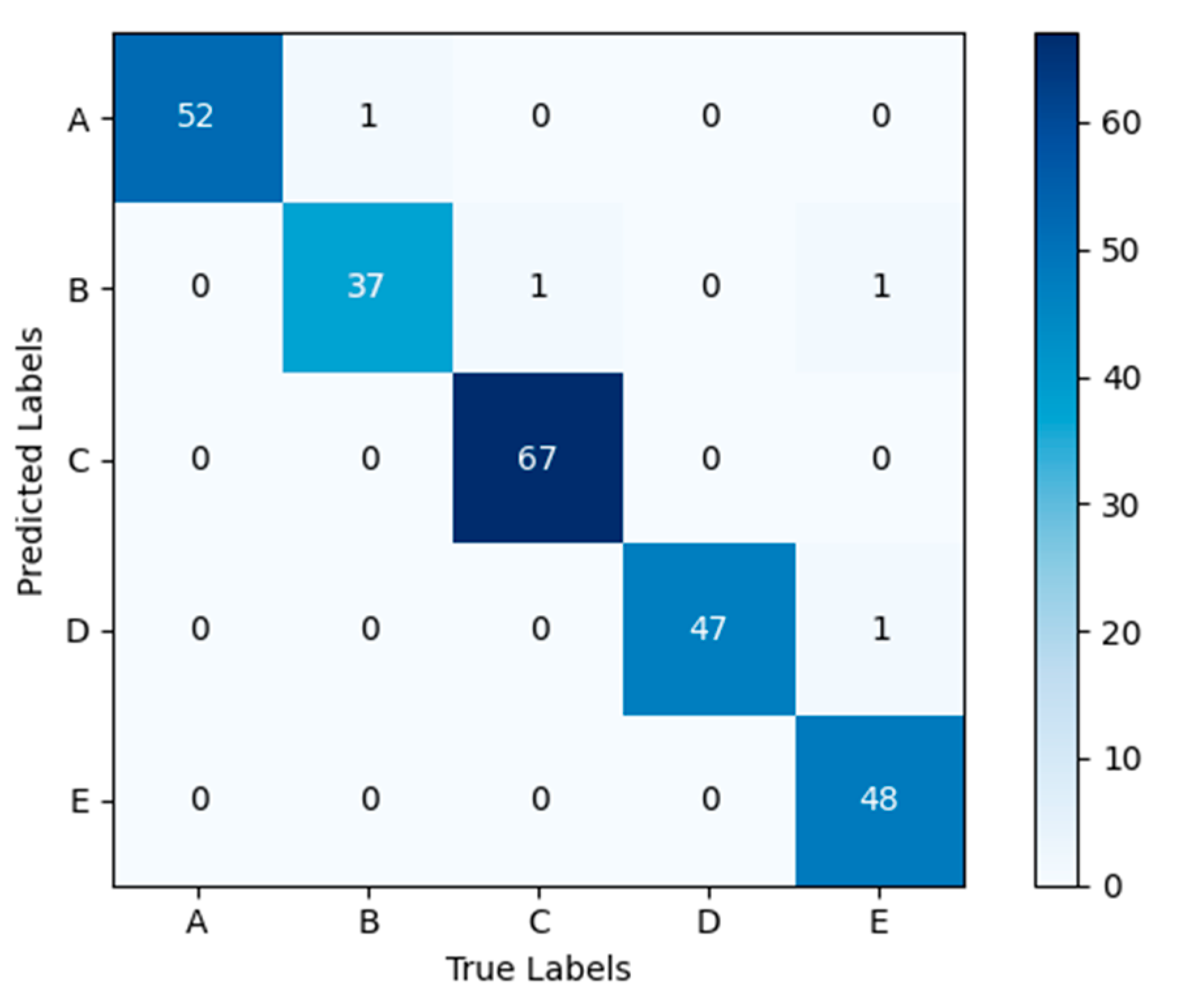

4.8. Experimental Results of the Model on the Self-Constructed Dataset

4.9. Experimental Results of the Model on the GAIT-IST Dataset

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Pirker, W.; Katzenschlager, R. Gait Disorders in Adults and the Elderly: A Clinical Guide. Wien. Klin. Wochenschr. 2017, 129, 81–95.

2. Wu, J.; Huang, J.; Wu, X.; Dai, H. A Novel Graph-Based Hybrid Deep Learning of Cumulative GRU and Deeper GCN for Recognition of Abnormal Gait Patterns Using Wearable Sensors. Expert Syst. Appl. 2023, 233, 120968.

3. Connor, P.; Ross, A. Biometric Recognition by Gait: A Survey of Modalities and Features. Comput. Vis. Image Underst. 2018, 167, 1–27.

4. FitzGerald, J.J.; Lu, Z.; Jareonsettasin, P.; Antoniades, C.A. Quantifying Motor Impairment in Movement Disorders. Front. Neurosci. 2018, 12, 202.

5. Rida, I.; Almaadeed, N.; Almaadeed, S. Robust Gait Recognition: A Comprehensive Survey. IET Biom. 2019, 8, 14–28.

6. Sijobert, B.; Denys, J.; Coste, C.A.; Geny, C. IMU Based Detection of Freezing of Gait and Festination in Parkinson’s Disease. In Proceedings of the 2014 IEEE 19th International Functional Electrical Stimulation Society Annual Conference (IFESS), Kuala Lumpur, Malaysia, 17–19 September 2014; pp. 1–3.

7. Zhao, H.; Wang, Z.; Qiu, S.; Shen, Y.; Wang, J. IMU-Based Gait Analysis for Rehabilitation Assessment of Patients with Gait Disorders. In Proceedings of the 2017 4th International Conference on Systems and Informatics (ICSAI), Hangzhou, China, 11–13 November 2017; pp. 622–626.

8. Wang, L.; Sun, Y.; Li, Q.; Liu, T.; Yi, J. IMU-Based Gait Normalcy Index Calculation for Clinical Evaluation of Impaired Gait. IEEE J. Biomed. Health Inform. 2021, 25, 3–12.

9. Potluri, S.; Ravuri, S.; Diedrich, C.; Schega, L. Deep Learning Based Gait Abnormality Detection Using Wearable Sensor System. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 3613–3619.

10. Wang, M.; Yong, S.; He, C.; Chen, H.; Zhang, S.; Peng, C.; Wang, X. Research on Abnormal Gait Recognition Algorithms for Stroke Patients Based on Array Pressure Sensing System. In Proceedings of the 2019 IEEE 3rd Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chengdu, China, 15–17 March 2019; pp. 1560–1563.

11. Tian, H.; Ma, X.; Wu, H.; Li, Y. Skeleton-Based Abnormal Gait Recognition with Spatio-Temporal Attention Enhanced Gait-Structural Graph Convolutional Networks. Neurocomputing 2022, 473, 116–126.

12. Lee, D.-W.; Jun, K.; Lee, S.; Ko, J.-K.; Kim, M.S. Abnormal Gait Recognition Using 3D Joint Information of Multiple Kinects System and RNN-LSTM. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 542–545.

13. Chen, Y.-Y. A Vision-Based Regression Model to Evaluate Parkinsonian Gait from Monocular Image Sequences. Expert Syst. Appl. 2012, 39, 520–526.

14. Albuquerque, P.; Verlekar, T.T.; Correia, P.L.; Soares, L.D. A Spatiotemporal Deep Learning Approach for Automatic Pathological Gait Classification. Sensors 2021, 21, 6202.

15. Zhou, C.; Feng, D.; Chen, S.; Ban, N.; Pan, J. Portable Vision-Based Gait Assessment for Post-Stroke Rehabilitation Using an Attention-Based Lightweight CNN. Expert Syst. Appl. 2024, 238, 122074.

16. Han, J.; Bhanu, B. Individual Recognition Using Gait Energy Image. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 316–322.

17. Elkholy, A.; Makihara, Y.; Gomaa, W.; Rahman Ahad, M.A.; Yagi, Y. Unsupervised GEI-Based Gait Disorders Detection From Different Views. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 5423–5426.

18. Zhou, C.; Mitsugami, I.; Yagi, Y. Detection of Gait Impairment in the Elderly Using patch-GEI. IEEJ Trans. Electr. Electron. Eng. 2015, 10, S69–S76.

19. Albuquerque, P.; Machado, J.P.; Verlekar, T.T.; Correia, P.L.; Soares, L.D. Remote Gait Type Classification System Using Markerless 2D Video. Diagnostics 2021, 11, 1824.

20. Bashir, K.; Xiang, T.; Gong, S. Gait Recognition Using Gait Entropy Image. In Proceedings of the 3rd International Conference on Imaging for Crime Detection and Prevention (ICDP 2009), London, UK, 3 December 2009; p. P2.

21. Zhang, E.; Zhao, Y.; Xiong, W. Active Energy Image plus 2DLPP for Gait Recognition. Signal Process. 2010, 90, 2295–2302.

22. Verlekar, T.T.; Lobato Correia, P.; Soares, L.D. Using Transfer Learning for Classification of Gait Pathologies. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 2376–2381.

23. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

24. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11531–11539.

25. Ortells, J.; Herrero-Ezquerro, M.T.; Mollineda, R.A. Vision-Based Gait Impairment Analysis for Aided Diagnosis. Med. Biol. Eng. Comput. 2018, 56, 1553–1564.

26. Nieto-Hidalgo, M.; Ferrández-Pastor, F.J.; Valdivieso-Sarabia, R.J.; Mora-Pascual, J.; García-Chamizo, J.M. Vision Based Extraction of Dynamic Gait Features Focused on Feet Movement Using RGB Camera. In Proceedings of the Ambient Intelligence for Health; Springer: Cham, Switzerland, 2015; pp. 155–166.

27. Nieto-Hidalgo, M.; García-Chamizo, J.M. Classification of Pathologies Using a Vision Based Feature Extraction. In Proceedings of the Ubiquitous Computing and Ambient Intelligence; Springer: Cham, Switzerland, 2017; pp. 265–274.

28. Loureiro, J.; Correia, P.L. Using a Skeleton Gait Energy Image for Pathological Gait Classification. In Proceedings of the 2020 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020), Buenos Aires, Argentina, 16–20 November 2020; pp. 503–507.

29. Sukkar, M.; Kumar, D.; Sindha, J. Real-Time Pedestrians Detection by YOLOv5. In Proceedings of the 2021 12th International Conference on Computing Communication and Networking Technologies (ICCCNT), Kharagpur, India, 6 July 2021; pp. 1–6.

30. Žilinskas, A. Review of Practical Mathematical Optimization: An Introduction to Basic Optimization Theory and Classical and New Gradient-Based Algorithms. Interfaces 2006, 36, 613–615.

31. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

32. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Computer Vision – ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2018; Volume 11211, pp. 3–19. ISBN 978-3-030-01233-5.

33. Iman, M.; Arabnia, H.R.; Rasheed, K. A Review of Deep Transfer Learning and Recent Advancements. Technologies 2023, 11, 40.

34. Fei-Fei, L.; Deng, J.; Li, K. ImageNet: Constructing a Large-Scale Image Database. J. Vis. 2010, 9, 1037.

35. Ebersbach, G.; Moreau, C.; Gandor, F.; Defebvre, L.; Devos, D. Clinical Syndromes: Parkinsonian Gait. Mov. Disord. 2013, 28, 1552–1559.

36. Wick, J.Y.; Zanni, G.R. Tiptoeing Around Gait Disorders: Multiple Presentations, Many Causes. Consult. Pharm. 2010, 25, 724–737.

37. Schaafsma, J.D.; Balash, Y.; Gurevich, T.; Bartels, A.L.; Hausdorff, J.M.; Giladi, N. Characterization of Freezing of Gait Subtypes and the Response of Each to Levodopa in Parkinson’s Disease. Eur. J. Neurol. 2003, 10, 391–398.

38. Yu, S.; Tan, D.; Tan, T. A Framework for Evaluating the Effect of View Angle, Clothing and Carrying Condition on Gait Recognition. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; pp. 441–444.

| Gait Type | Gait Characteristics |
|---|---|
| Festinating gait | The subject’s body leaned forward, with increasing speed and progressively smaller steps [35]. |
| Scissor gait | The subject’s legs flexed slightly at the hips and knees, with the knees and thighs hitting or crossing in a scissors-like movement [36]. |
| Hemiparetic gait | The subject’s affected leg was swung outward in a semicircle [36]. |
| Shuffling gait | The subject dragged the feet and took small steps [37]. |
| Normal gait | The subject walked normally without abnormal movements. |

| Number | Single Branch | Dual Branch | ICBAM | DSC | Customized Head (Except DSC) |
|---|---|---|---|---|---|
| 1 | √ | × | × | × | × |
| 2 | √ | × | √ | × | × |
| 3 | × | √ | √ | × | × |
| 4 | × | √ | √ | √ | × |
| 5 | × | √ | √ | √ | √ |

| Number | Acc (%) | Prec (%) | Sens (%) | Spec (%) | MF1 |
|---|---|---|---|---|---|
| 1 | 90.59 | 90.55 | 89.63 | 97.64 | 89.84 |
| 2 | 93.33 | 93.08 | 92.78 | 98.34 | 92.82 |
| 3 | 95.29 | 95.06 | 94.96 | 98.83 | 95 |
| 4 | 96.86 | 96.60 | 96.85 | 99.23 | 96.70 |
| 5 | 98.43 | 98.18 | 98.38 | 99.62 | 98.26 |
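The column headers in the result tables are not defined in this section, so the following sketch shows one common way to compute such indicators from a multi-class confusion matrix. It assumes that rows are true classes and columns are predicted classes, that Prec, Sens, and Spec are macro-averaged one-vs-rest values, and that MF1 denotes the mean of the per-class F1 scores. The function names and the 3-class matrix are made up for illustration and are not data from the paper.

```python
def per_class_counts(cm, k):
    """One-vs-rest counts (TP, FP, FN, TN) for class k of a square confusion matrix."""
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                    # true class k, predicted as another class
    fp = sum(row[k] for row in cm) - tp     # another class, predicted as k
    tn = sum(sum(row) for row in cm) - tp - fn - fp
    return tp, fp, fn, tn


def macro_metrics(cm):
    """Accuracy plus macro-averaged precision, sensitivity, specificity, and F1."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    acc = sum(cm[k][k] for k in range(n)) / total
    precs, senss, specs, f1s = [], [], [], []
    for k in range(n):
        tp, fp, fn, tn = per_class_counts(cm, k)
        prec = tp / (tp + fp) if tp + fp else 0.0
        sens = tp / (tp + fn) if tp + fn else 0.0
        spec = tn / (tn + fp) if tn + fp else 0.0
        f1 = 2 * prec * sens / (prec + sens) if prec + sens else 0.0
        precs.append(prec)
        senss.append(sens)
        specs.append(spec)
        f1s.append(f1)
    return {
        "acc": acc,
        "prec": sum(precs) / n,
        "sens": sum(senss) / n,
        "spec": sum(specs) / n,
        "mf1": sum(f1s) / n,
    }


# Hypothetical confusion matrix: 3 classes, 10 test samples each.
example_cm = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 0, 10],
]
for name, value in macro_metrics(example_cm).items():
    print(f"{name}: {100 * value:.2f}")  # reported as percentages, as in the tables
```

Under these assumptions, specificity is usually the highest of the indicators in a multi-class setting, because every correctly classified sample of the other classes counts as a true negative for class k.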

| Type | Dataset | Parameters (Million) | Acc (%) |
|---|---|---|---|
| ECA | Our Dataset | 2.8 | 96.86 |
| SE | Our Dataset | 3.01 | 97.25 |
| CBAM | Our Dataset | 3.01 | 97.65 |
| Proposed | Our Dataset | 3.01 | 98.43 |
| ECA | GAIT-IST | 2.68 | 98.04 |
| SE | GAIT-IST | 2.89 | 98.37 |
| CBAM | GAIT-IST | 2.89 | 98.37 |
| Proposed | GAIT-IST | 2.89 | 98.69 |

| Type | Acc (%) | Prec (%) | Sens (%) | Spec (%) | MF1 |
|---|---|---|---|---|---|
| AEI | 90.59 | 90.71 | 89.98 | 97.67 | 90.11 |
| GEnI | 96.08 | 95.80 | 95.60 | 99.03 | 95.67 |
| GEI | 95.69 | 95.59 | 94.77 | 98.93 | 95 |
| FEI | 98.43 | 98.18 | 98.38 | 99.62 | 98.26 |

| Method | Dataset | Data Type | Parameters (Million) | FLOPs (Billion) | Memory Usage (MB) | FPS (frames/s) | Acc (%) |
|---|---|---|---|---|---|---|---|
| GhostNet [15] | GAIT-IST | SEI | 2.6 | 0.176 | - | - | 98.10 |
| VGG-19 [28] | GAIT-IST | SEI | 139 | 19.6 | 532 | 76.38 | 97.40 |
| Proposed | GAIT-IST | SEI | 2.89 | 0.322 | 11.2 | 111.25 | 98.04 |
| Proposed | GAIT-IST | FEI | 2.89 | 0.322 | 11.2 | 112.23 | 98.69 |
| VGG-19 [28] | Our Dataset | FEI | 139 | 19.6 | 652 | 67.05 | 97.66 |
| Proposed | Our Dataset | FEI | 3.01 | 0.42 | 11.7 | 111 | 98.43 |

| Gait Type | Prec (%) | Sens (%) | Spec (%) |
|---|---|---|---|
| Festinating Gait | 98.11 | 100 | 99.51 |
| Scissor Gait | 94.87 | 97.37 | 99.08 |
| Normal Gait | 100 | 98.53 | 100 |
| Hemiparetic Gait | 97.92 | 100 | 99.52 |
| Shuffling Gait | 100 | 96 | 100 |

| Gait Type | Prec (%) | Sens (%) | Spec (%) |
|---|---|---|---|
| Diplegic Gait | 98.31 | 96.67 | 99.59 |
| Hemiplegic Gait | 98.44 | 100 | 99.59 |
| Neuropathic Gait | 98.44 | 98.44 | 99.59 |
| Normal Gait | 100 | 100 | 100 |
| Parkinsonian Gait | 98.95 | 98.95 | 99.53 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, C.; Wang, B.; Li, Y.; Liu, B. A Lightweight Pathological Gait Recognition Approach Based on a New Gait Template in Side-View and Improved Attention Mechanism. Sensors 2024, 24, 5574. https://doi.org/10.3390/s24175574
