Robust Infrared–Visible Fusion Imaging with Decoupled Semantic Segmentation Network

Abstract

1. Introduction

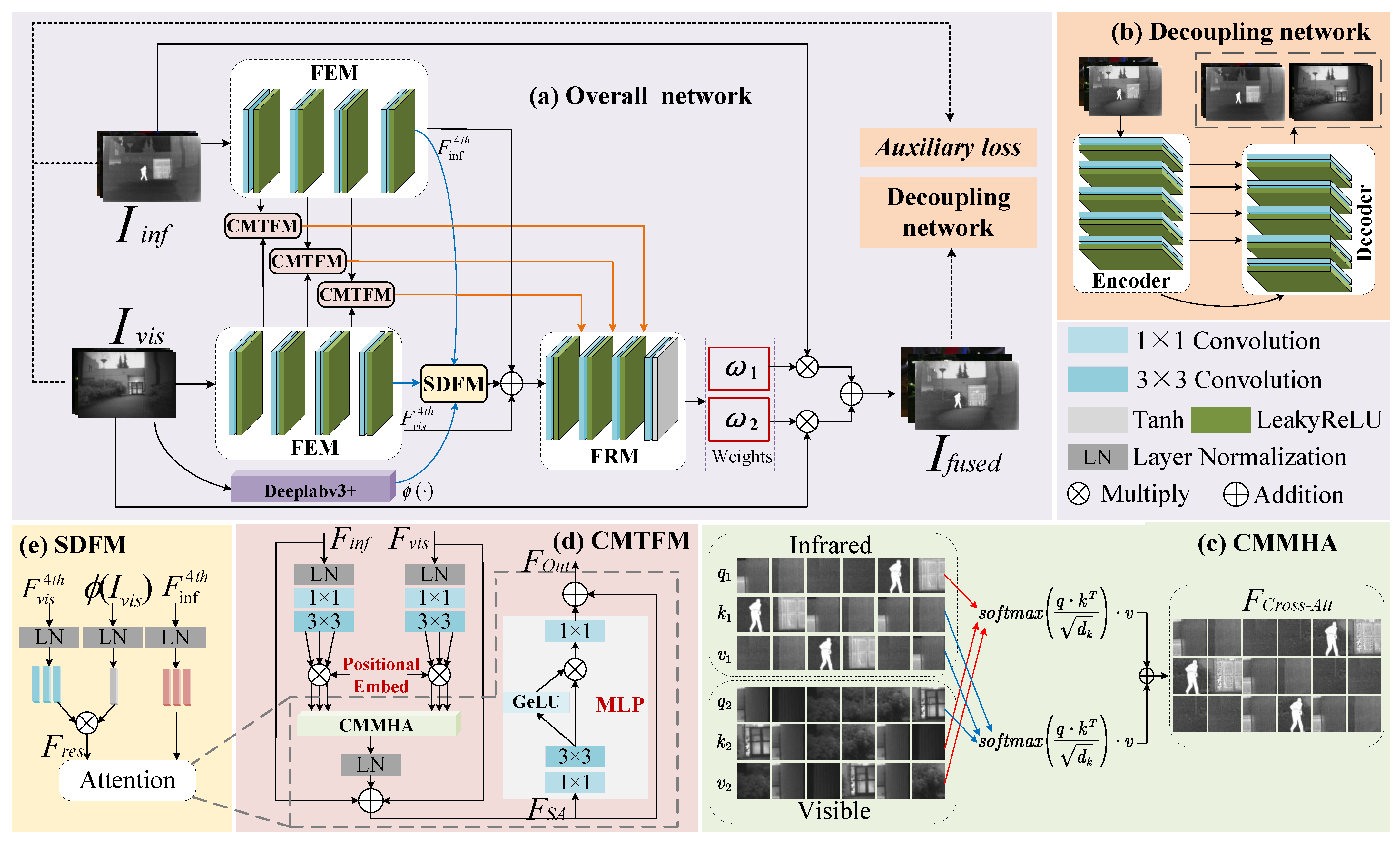

- We propose an infrared and visible image fusion (IVIF) method built on a decoupled, semantic-segmentation-driven network that automatically adjusts the fusion weights and integrates meaningful information from the infrared and visible images (IVIs).

- We developed two fusion modules: the Cross-Modality Transformer Fusion Module (CMTFM) and the Semantic-Driven Fusion Module (SDFM). They integrate information from the two modalities by combining the transformer cross-attention mechanism with semantic segmentation, thereby embedding semantic information into the fusion process.

- We designed a refined loss function to improve the quality of the fused images: auxiliary losses introduced through the decoupling network guide the training of the fusion network.

- We constructed a new benchmark dataset, the Maritime Infrared and Visible (MIV) dataset, for evaluating infrared and visible image fusion in maritime scenes. The dataset is available from https://github.com/xhzhang0377/MIV-Dataset (accessed on 19 April 2025).
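To make the cross-attention idea in the second contribution concrete, the following is a minimal NumPy sketch of generic scaled dot-product cross-attention between infrared and visible feature tokens. It is not the authors' CMTFM: the token count, feature width, random projection matrices, the sharing of projections between both directions, and the residual-plus-concatenation fusion are all assumptions chosen for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(query_feats, context_feats, w_q, w_k, w_v):
    """Tokens of one modality (queries) attend to tokens of the other (keys/values)."""
    q = query_feats @ w_q
    k = context_feats @ w_k
    v = context_feats @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])  # scaled dot-product similarity
    attn = softmax(scores, axis=-1)          # each query row sums to 1
    return attn @ v, attn


# Hypothetical sizes and random weights, for illustration only.
rng = np.random.default_rng(0)
n_tokens, dim = 16, 8
ir_tokens = rng.standard_normal((n_tokens, dim))   # stand-in infrared features
vis_tokens = rng.standard_normal((n_tokens, dim))  # stand-in visible features
w_q, w_k, w_v = (rng.standard_normal((dim, dim)) * 0.1 for _ in range(3))

# Each modality queries the other; projections are shared here for brevity.
ir_from_vis, attn_iv = cross_attention(ir_tokens, vis_tokens, w_q, w_k, w_v)
vis_from_ir, attn_vi = cross_attention(vis_tokens, ir_tokens, w_q, w_k, w_v)

# Assumed fusion rule: residual connection per branch, then channel concatenation.
fused = np.concatenate([ir_tokens + ir_from_vis, vis_tokens + vis_from_ir], axis=-1)
```

In this sketch the attention weights play the role of data-dependent fusion weights: each infrared token decides how much of every visible token to absorb, and vice versa.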

2. Related Work

2.1. Deep-Learning-Based IVIF Method

2.2. Transformer-Based IVIF

2.3. Advanced Task-Guided IVIF

3. Proposed Method

3.1. Network Construction

3.2. Modules

3.2.1. Cross-Modality Transformer Fusion Module

3.2.2. Semantic-Driven Fusion Module

3.2.3. Decoupling Network

3.3. Loss Function

3.3.1. Loss Function of the Decoupling Network

3.3.2. Joint Loss of DSSFusion

4. Experiments

4.1. Experimental Setup

4.2. Dataset Preparation

4.3. Comparison Method and Evaluation Metrics

4.4. Training Details

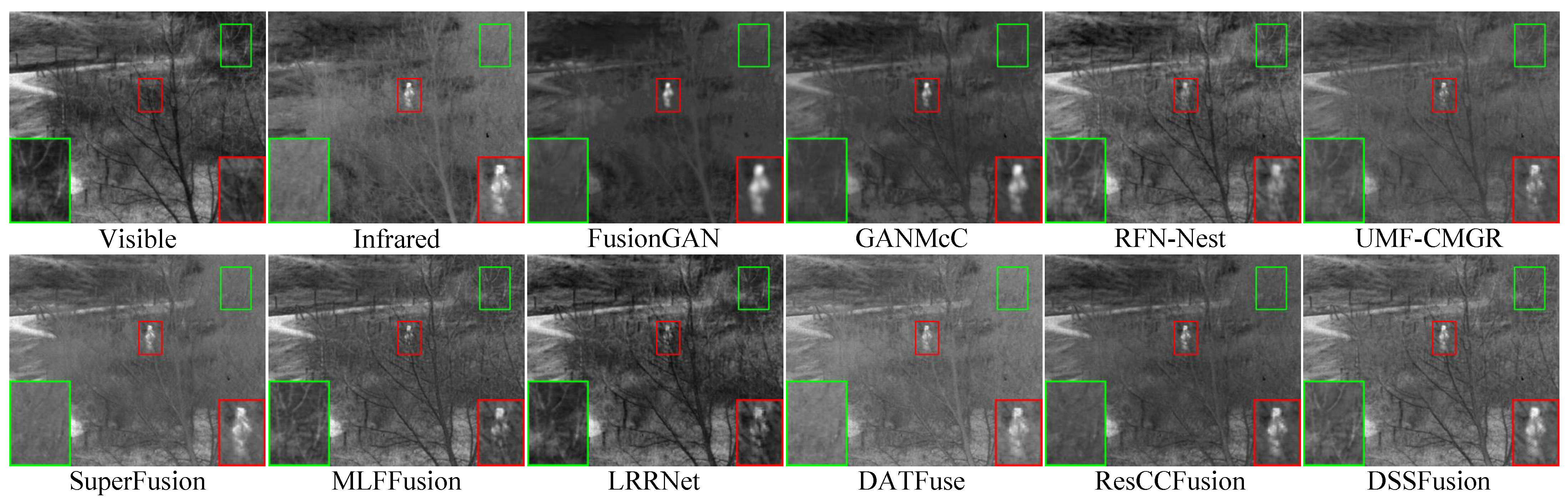

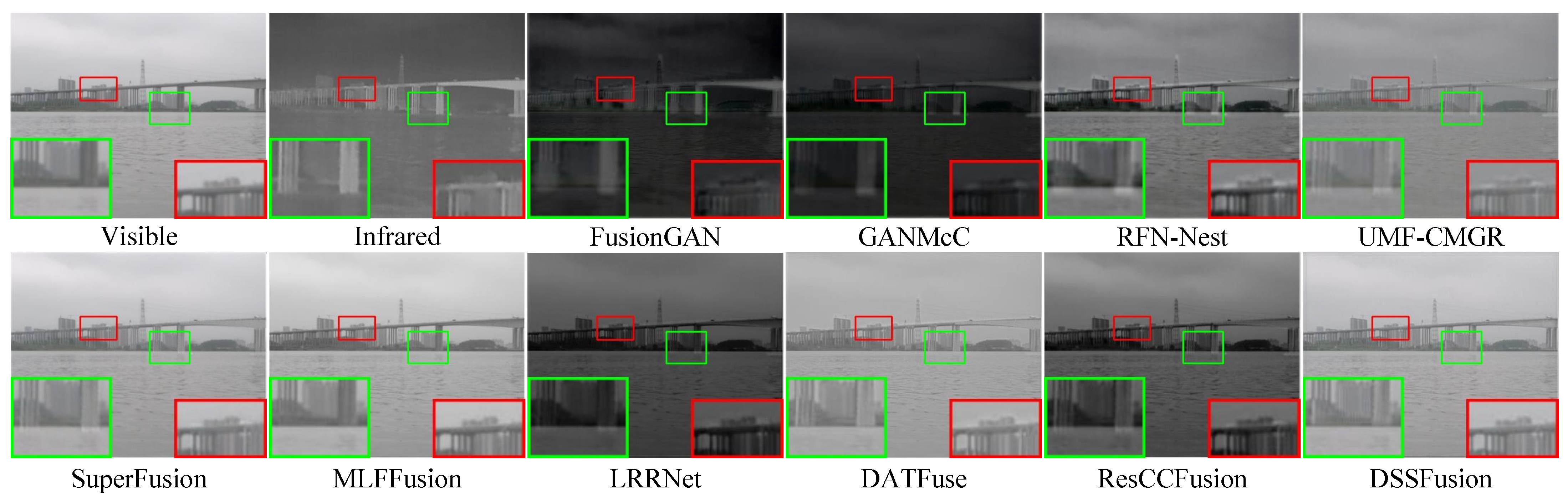

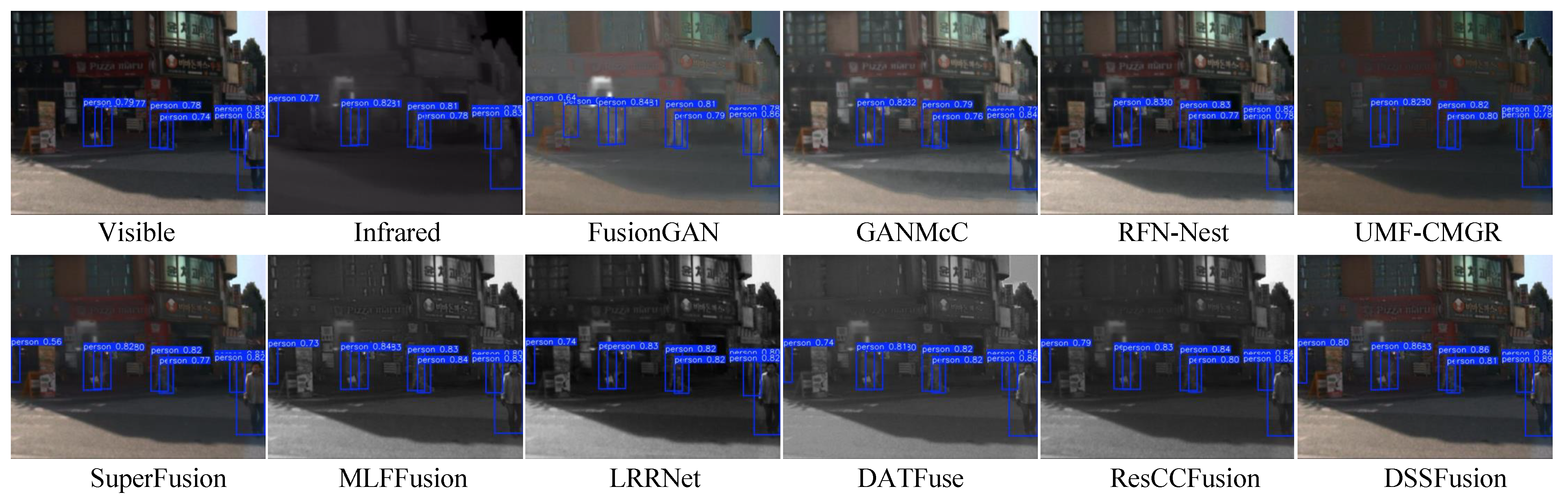

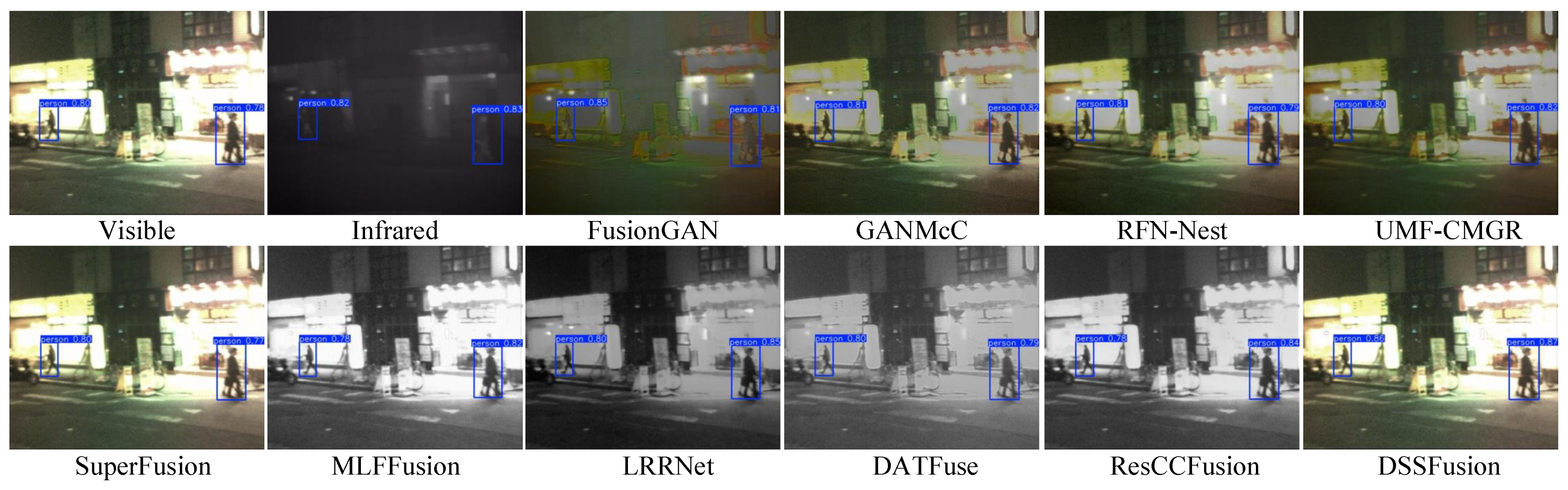

4.5. Experimental Results on Public Datasets

4.5.1. Fusion Results on LLVIP Dataset

4.5.2. Fusion Results on MSRS Dataset

4.5.3. Fusion Results on TNO Dataset

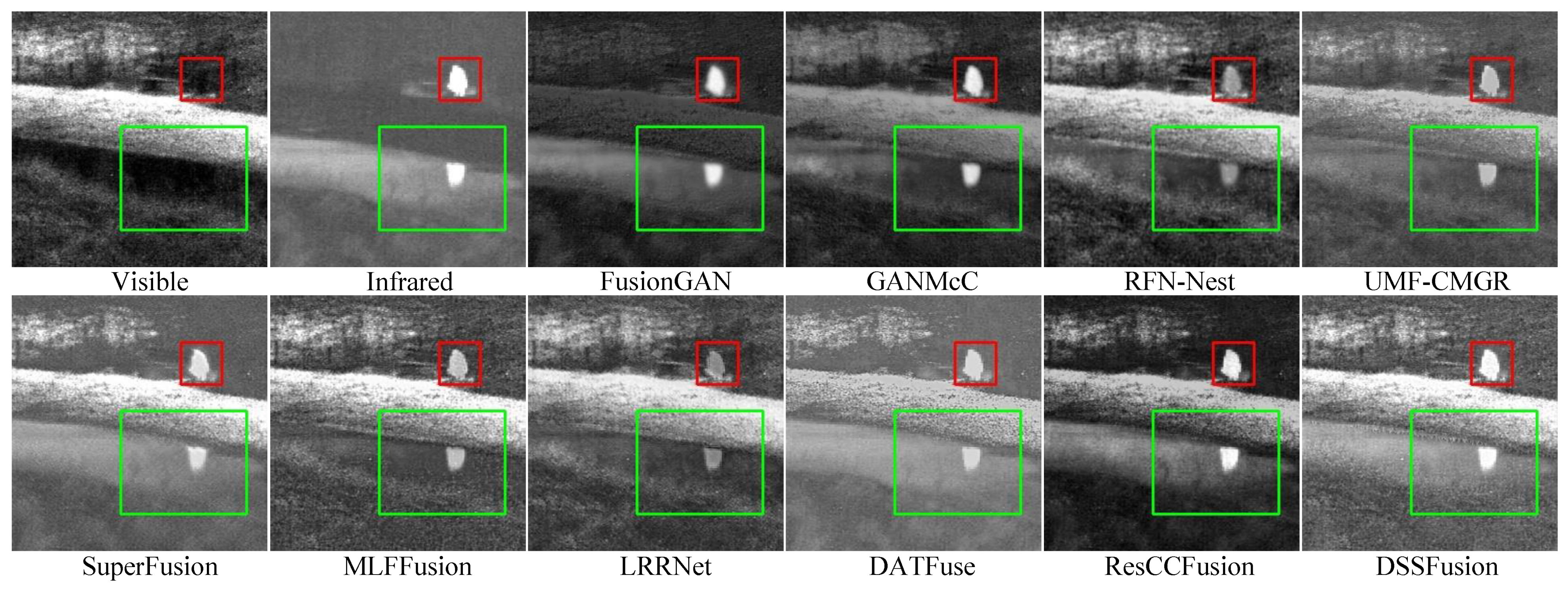

4.6. Experimental Results on Actual Marine Datasets

4.7. Ablation Study

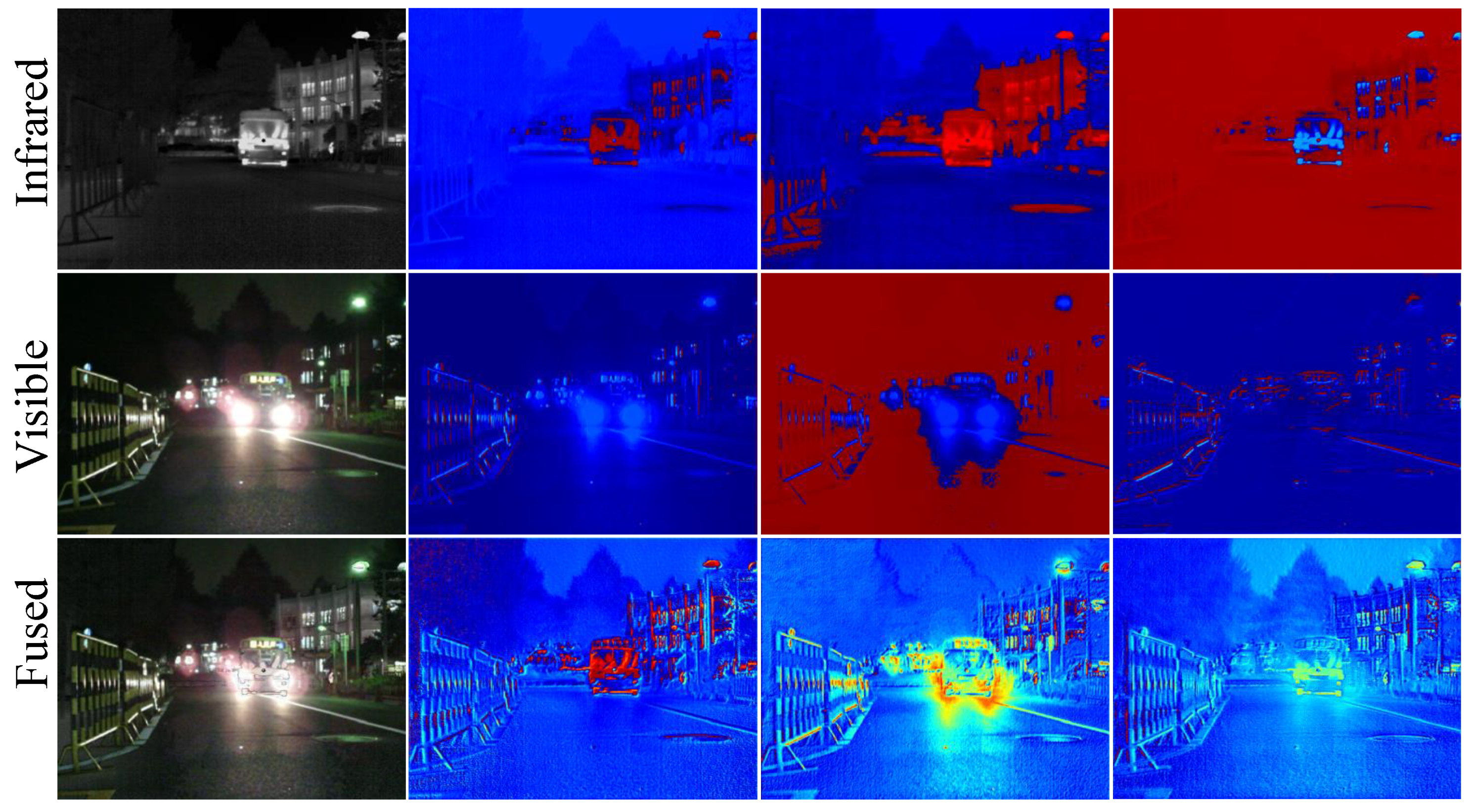

4.8. Feature Visualization of Fusion Module

4.9. Visualization of the Decoupling Network

5. Target Detection

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Methods | SD | MI | VIF | AG | EN | Qabf | SF |
|---|---|---|---|---|---|---|---|
| FusionGAN | 24.821 | 2.817 | 0.476 | 1.947 | 6.308 | 0.254 | 6.919 |
| GANMcC | 32.113 | 2.682 | 0.613 | 2.123 | 6.690 | 0.297 | 6.814 |
| RFN-Nest | 34.646 | 2.554 | 0.669 | 2.158 | 6.862 | 0.313 | 6.321 |
| UMF-CMGR | 29.379 | 2.682 | 0.521 | 2.504 | 6.462 | 0.347 | 9.915 |
| SuperFusion | 42.350 | 4.040 | 0.818 | 3.120 | 7.128 | 0.528 | 11.139 |
| MLFFusion | 46.561 | 3.739 | 0.960 | 4.085 | 7.227 | 0.675 | 13.953 |
| LRRNet | 24.867 | 2.348 | 0.564 | 2.467 | 6.145 | 0.424 | 9.108 |
| DATFuse | 39.850 | 4.436 | 0.839 | 3.036 | 7.082 | 0.514 | 12.331 |
| ResCCFusion | 41.006 | 3.920 | 0.849 | 2.841 | 7.093 | 0.519 | 10.851 |
| DSSFusion | 44.708 | 4.268 | 0.985 | 4.134 | 7.225 | 0.697 | 14.384 |

| Methods | SD | MI | VIF | AG | EN | Qabf | SF |
|---|---|---|---|---|---|---|---|
| FusionGAN | 17.145 | 1.843 | 0.413 | 1.209 | 5.250 | 0.138 | 3.875 |
| GANMcC | 24.349 | 2.376 | 0.682 | 1.835 | 5.832 | 0.338 | 5.227 |
| RFN-Nest | 21.588 | 2.141 | 0.574 | 1.266 | 5.389 | 0.242 | 4.337 |
| UMF-CMGR | 16.889 | 1.587 | 0.308 | 1.738 | 5.058 | 0.232 | 6.033 |
| SuperFusion | 32.159 | 3.173 | 0.917 | 2.570 | 5.903 | 0.589 | 8.504 |
| MLFFusion | 33.189 | 3.372 | 0.988 | 3.056 | 5.787 | 0.640 | 9.621 |
| LRRNet | 20.323 | 2.065 | 0.436 | 1.692 | 5.183 | 0.308 | 6.089 |
| DATFuse | 27.089 | 3.183 | 0.849 | 2.618 | 5.809 | 0.542 | 8.873 |
| ResCCFusion | 29.882 | 3.719 | 0.942 | 2.500 | 5.836 | 0.629 | 8.357 |
| DSSFusion | 32.714 | 4.312 | 1.052 | 3.062 | 6.041 | 0.684 | 9.810 |

| Methods | SD | MI | VIF | AG | EN | Qabf | SF |
|---|---|---|---|---|---|---|---|
| FusionGAN | 30.781 | 2.333 | 0.424 | 2.418 | 6.557 | 0.234 | 6.272 |
| GANMcC | 33.423 | 2.280 | 0.532 | 2.520 | 6.734 | 0.279 | 6.111 |
| RFN-Nest | 36.940 | 2.129 | 0.561 | 2.654 | 6.966 | 0.333 | 5.846 |
| UMF-CMGR | 30.117 | 2.229 | 0.598 | 2.969 | 6.537 | 0.411 | 8.178 |
| SuperFusion | 37.082 | 3.417 | 0.686 | 3.566 | 6.763 | 0.476 | 9.235 |
| MLFFusion | 41.412 | 3.281 | 0.755 | 4.020 | 6.907 | 0.519 | 10.009 |
| LRRNet | 40.987 | 2.530 | 0.564 | 3.762 | 6.991 | 0.353 | 9.510 |
| DATFuse | 28.351 | 3.452 | 0.730 | 3.697 | 6.551 | 0.523 | 10.047 |
| ResCCFusion | 40.712 | 3.658 | 0.828 | 3.805 | 7.000 | 0.519 | 10.003 |
| DSSFusion | 39.215 | 4.538 | 0.809 | 4.501 | 6.994 | 0.602 | 11.887 |

| Methods | SD | MI | VIF | AG | EN | Qabf | SF |
|---|---|---|---|---|---|---|---|
| FusionGAN | 30.005 | 3.242 | 0.403 | 2.248 | 6.550 | 0.252 | 8.288 |
| GANMcC | 30.345 | 3.273 | 0.459 | 1.911 | 6.425 | 0.246 | 6.364 |
| RFN-Nest | 27.932 | 3.095 | 0.536 | 2.057 | 6.510 | 0.319 | 6.576 |
| UMF-CMGR | 30.349 | 3.596 | 0.486 | 2.500 | 6.548 | 0.368 | 9.655 |
| SuperFusion | 31.060 | 4.966 | 0.508 | 2.816 | 6.571 | 0.504 | 10.233 |
| MLFFusion | 35.264 | 4.401 | 0.672 | 3.449 | 6.690 | 0.531 | 12.744 |
| LRRNet | 23.095 | 3.484 | 0.453 | 2.301 | 6.159 | 0.353 | 9.352 |
| DATFuse | 27.033 | 3.783 | 0.570 | 3.307 | 6.381 | 0.471 | 12.479 |
| ResCCFusion | 35.756 | 4.329 | 0.641 | 3.264 | 6.885 | 0.486 | 12.338 |
| DSSFusion | 36.050 | 4.871 | 0.658 | 3.463 | 6.909 | 0.569 | 12.814 |
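Four of the no-reference metrics reported in the tables above (EN, SD, SF, and AG) have widely used textbook definitions, sketched below in NumPy for an 8-bit grayscale image. This is an illustrative sketch only: the evaluation code behind the reported numbers is not shown in this text, so details such as the gradient operator, border handling, and the function names used here are assumptions.

```python
import numpy as np


def entropy(img):
    """EN: Shannon entropy (bits) of the 256-bin gray-level histogram."""
    hist = np.bincount(img.ravel(), minlength=256).astype(np.float64)
    p = hist / hist.sum()
    p = p[p > 0]  # skip empty bins to avoid log2(0)
    return float(-(p * np.log2(p)).sum())


def standard_deviation(img):
    """SD: spread of pixel intensities around the mean."""
    return float(img.astype(np.float64).std())


def spatial_frequency(img):
    """SF: sqrt(RF^2 + CF^2) from horizontal and vertical first differences."""
    x = img.astype(np.float64)
    rf = np.sqrt(np.mean((x[:, 1:] - x[:, :-1]) ** 2))  # row frequency
    cf = np.sqrt(np.mean((x[1:, :] - x[:-1, :]) ** 2))  # column frequency
    return float(np.sqrt(rf ** 2 + cf ** 2))


def average_gradient(img):
    """AG: mean of sqrt((gx^2 + gy^2) / 2) using forward differences."""
    x = img.astype(np.float64)
    gx = x[:-1, 1:] - x[:-1, :-1]
    gy = x[1:, :-1] - x[:-1, :-1]
    return float(np.mean(np.sqrt((gx ** 2 + gy ** 2) / 2.0)))


# Toy image: left half black, right half white (uint8).
toy = np.zeros((4, 4), dtype=np.uint8)
toy[:, 2:] = 255
metrics = {
    "EN": entropy(toy),
    "SD": standard_deviation(toy),
    "SF": spatial_frequency(toy),
    "AG": average_gradient(toy),
}
```

For all four metrics a larger value is usually read as more information, contrast, or edge detail in the fused image. MI, VIF, and Qabf also need the source images as references and are omitted from this sketch.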

| Metric | FusionGAN | GANMcC | RFN-Nest | UMF-CMGR | SuperFusion | MLFFusion | LRRNet | DATFuse | ResCCFusion | DSSFusion |
|---|---|---|---|---|---|---|---|---|---|---|
| Run time (s per image pair) | 0.126 | 0.268 | 0.243 | 0.067 | 0.265 | 0.137 | 0.295 | 0.058 | 0.330 | 0.015 |
| Params (M) | 1.326 | 1.860 | 2.730 | 0.629 | 0.196 | 1.181 | 0.049 | 0.011 | 0.817 | 0.801 |

| Model | Map | CMTFM | Loss | SDFM | SD (TNO) | MI (TNO) | VIF (TNO) | AG (TNO) | EN (TNO) | Qabf (TNO) | SF (TNO) | SD (MSRS) | MI (MSRS) | VIF (MSRS) | AG (MSRS) | EN (MSRS) | Qabf (MSRS) | SF (MSRS) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| I | × | × | × | × | 36.509 | 3.014 | 0.626 | 3.760 | 6.804 | 0.472 | 8.841 | 31.460 | 2.654 | 0.776 | 2.669 | 5.538 | 0.527 | 8.672 |
| II | × | ✓ | ✓ | ✓ | 39.193 | 4.403 | 0.781 | 4.003 | 6.853 | 0.533 | 10.453 | 32.138 | 4.103 | 1.011 | 2.501 | 5.903 | 0.607 | 8.584 |
| III | ✓ | × | ✓ | ✓ | 37.724 | 2.703 | 0.684 | 3.894 | 6.827 | 0.499 | 9.577 | 31.032 | 2.704 | 0.796 | 2.612 | 5.651 | 0.537 | 8.648 |
| IV | ✓ | ✓ | × | ✓ | 36.046 | 3.028 | 0.627 | 3.740 | 6.771 | 0.473 | 8.891 | 30.812 | 2.548 | 0.762 | 2.642 | 5.435 | 0.529 | 8.769 |
| V | ✓ | ✓ | ✓ | × | 37.659 | 2.717 | 0.588 | 3.601 | 6.840 | 0.436 | 8.276 | 35.151 | 1.905 | 0.657 | 2.143 | 5.664 | 0.388 | 8.384 |
| Ours | ✓ | ✓ | ✓ | ✓ | 39.215 | 4.538 | 0.809 | 4.501 | 6.924 | 0.602 | 11.887 | 32.714 | 4.312 | 1.052 | 3.062 | 6.041 | 0.684 | 9.810 |

| Methods | Precision | Recall | mAP@50 | mAP@[50:95] |
|---|---|---|---|---|
| Visible | 0.945 | 0.931 | 0.974 | 0.674 |
| Infrared | 0.946 | 0.944 | 0.978 | 0.689 |
| FusionGAN | 0.954 | 0.934 | 0.978 | 0.677 |
| GANMcC | 0.948 | 0.918 | 0.970 | 0.665 |
| RFN-Nest | 0.953 | 0.921 | 0.973 | 0.675 |
| UMF-CMGR | 0.945 | 0.930 | 0.974 | 0.672 |
| SuperFusion | 0.951 | 0.936 | 0.976 | 0.682 |
| MLFFusion | 0.948 | 0.934 | 0.973 | 0.681 |
| LRRNet | 0.955 | 0.928 | 0.977 | 0.679 |
| DATFuse | 0.952 | 0.937 | 0.977 | 0.683 |
| ResCCFusion | 0.955 | 0.923 | 0.975 | 0.679 |
| DSSFusion | 0.961 | 0.949 | 0.983 | 0.709 |
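The precision and recall columns above rest on matching predicted boxes to ground-truth boxes by intersection over union (IoU). The sketch below shows one common way to do this at a single IoU threshold of 0.5, the threshold behind mAP@50. It is a simplified illustration and not the detector's evaluation code: the `(x1, y1, x2, y2)` box format, the greedy highest-score-first matching, and the function names are assumptions.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    x1 = max(box_a[0], box_b[0])
    y1 = max(box_a[1], box_b[1])
    x2 = min(box_a[2], box_b[2])
    y2 = min(box_a[3], box_b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, gts, iou_thr=0.5):
    """preds: list of (score, box); gts: list of boxes. Greedy one-to-one matching."""
    matched = set()
    tp = 0
    # Visit predictions from most to least confident.
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            if j in matched:
                continue  # each ground-truth box can be claimed only once
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_iou >= iou_thr:
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp
    fn = len(gts) - tp
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall


# Toy example: one correct detection, one false alarm, one missed target.
gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60))]
result = precision_recall(preds, gts)
```

mAP@[50:95] repeats this kind of matching at IoU thresholds from 0.5 to 0.95 and averages the resulting average-precision values, which is why it is consistently lower than mAP@50 in the table.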

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, X.; Yin, Y.; Wang, Z.; Wu, H.; Cheng, L.; Yang, A.; Zhao, G. Robust Infrared–Visible Fusion Imaging with Decoupled Semantic Segmentation Network. Sensors 2025, 25, 2646. https://doi.org/10.3390/s25092646