Educational Scoring System in Laparoscopic Cholecystectomy: Is It the Right Time to Standardize?

Abstract

1. Introduction

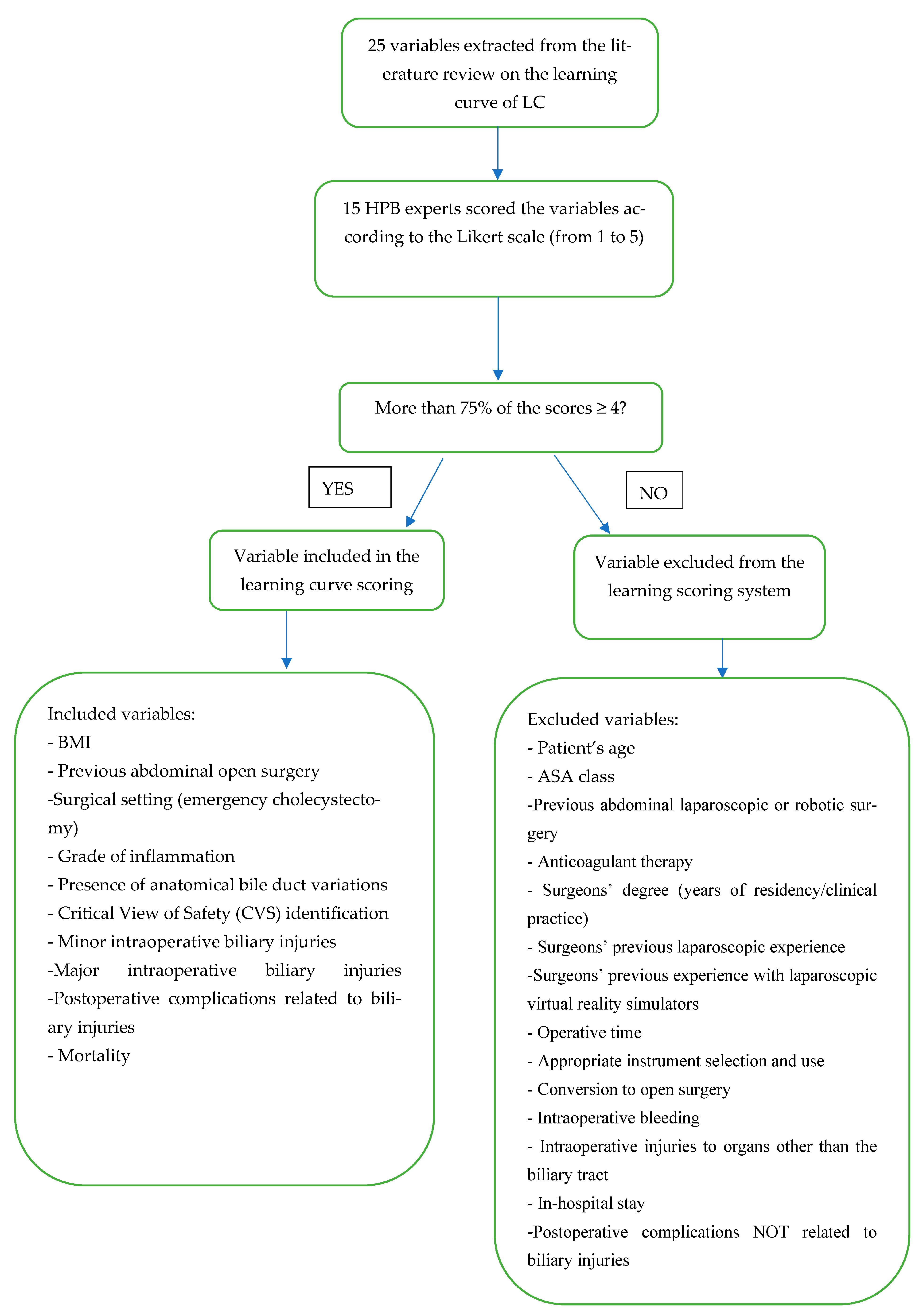

2. Materials and Methods

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| A surgical learning curve is defined as “the time taken and/or the number of procedures an average surgeon needs in order to be able to perform a procedure independently with a reasonable outcome”. With respect to the learning curve of LC, what is a reasonable outcome in your opinion? | No. (%) of experts who chose the corresponding definition |
|---|---|
| (1) Completing the surgery with no intraoperative complications. | 3 (20) |
| (2) Completing the surgery as quickly as possible (short operative time). | 1 (6.7) |
| (3) Having no early postoperative complications. | 0 |
| (4) Having no early or long-term postoperative complications. | 1 (6.7) |
| (5) Having no intraoperative or postoperative complications. | 10 (66.7) |

| Variables | Likert Score 1–3 [N (%)] | Likert Score ≥4 [N (%)] | To Be Included in the Scoring System (Y/N) |
|---|---|---|---|
| **Preoperative factors** | | | |
| Patient’s age | 15 (100) | - | N |
| BMI | - | 15 (100) | Y |
| ASA class | 13 (86.7) | 2 (13.3) | N |
| Previous laparoscopic or robotic abdominal surgery | 11 (73.3) | 4 (26.7) | N |
| Previous open abdominal surgery | 2 (13.3) | 13 (86.7) | Y |
| Anticoagulant therapy | 12 (80) | 3 (20) | N |
| Surgery setting (elective or emergency cholecystectomy) | - | 15 (100) | Y |
| Grade of inflammation according to Tokyo Guidelines (in emergency cholecystectomy) | - | 15 (100) | Y |
| Surgeons’ degree (years of residency/clinical practice) | 7 (46.7) | 8 (53.3) | N |
| Surgeons’ previous laparoscopic experience (other than cholecystectomy) | 4 (26.7) | 11 (73.3) | N |
| Surgeons’ previous experience with laparoscopic virtual reality simulators | 11 (73.3) | 4 (26.7) | N |
| Presence of anatomical bile duct variations | 2 (13.3) | 13 (86.7) | Y |
| **Intraoperative factors** | | | |
| Operative time (from the first incision to port removal) | 8 (53.3) | 7 (46.7) | N |
| Critical view of safety (CVS) identification | - | 15 (100) | Y |
| Appropriate instrument selection and use | 6 (40) | 9 (60) | N |
| Conversion to open surgery | 7 (46.7) | 8 (53.3) | N |
| Intraoperative bleeding | 6 (40) | 9 (60) | N |
| Minor intraoperative injuries to the BT | 2 (13.3) | 13 (86.7) | Y |
| Major intraoperative injuries to the BT | 1 (6.7) | 14 (93.3) | Y |
| Intraoperative injuries to organs other than the BT | 4 (26.7) | 11 (73.3) | N |
| **Postoperative factors** | | | |
| In-hospital stay | 10 (66.7) | 5 (33.3) | N |
| Postoperative complications related to biliary injuries | 3 (20) | 12 (80) | Y |
| Postoperative complications NOT related to biliary injuries | 7 (46.7) | 8 (53.3) | N |
| Mortality | 2 (13.3) | 13 (86.7) | Y |
| Readmissions | 5 (33.3) | 10 (66.7) | N |
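The Y/N column in the table above is consistent with a consensus rule in which a variable is retained when at least 80% of the 15 experts rate it 4 or higher. That threshold is inferred from the reported counts rather than stated in the table, so it should be read as an assumption. A minimal Python sketch of such a rule, applied to a few rows copied from the table (the function and variable names are illustrative):

```python
# Assumed consensus rule, inferred from the Y/N column rather than stated in the table:
# a variable is kept when at least 80% of the experts give it a Likert score of 4 or 5.
N_EXPERTS = 15
THRESHOLD_PERCENT = 80  # assumption


def is_included(votes_high, n_experts=N_EXPERTS, threshold_percent=THRESHOLD_PERCENT):
    """Return True when the share of high (>= 4) ratings reaches the threshold."""
    # Integer arithmetic avoids floating-point edge cases at exactly 80%.
    return votes_high * 100 >= threshold_percent * n_experts


# Number of experts rating each variable 4 or higher, copied from the table.
high_votes = {
    "BMI": 15,
    "ASA class": 2,
    "Previous open abdominal surgery": 13,
    "Surgeons' previous laparoscopic experience": 11,
    "Postoperative complications related to biliary injuries": 12,
    "Readmissions": 10,
}

decisions = {name: ("Y" if is_included(v) else "N") for name, v in high_votes.items()}

for name, decision in decisions.items():
    print(f"{name}: {decision}")
```

Under this assumed rule, 12 of 15 votes (80%) is the smallest count that leads to inclusion, which matches the row for postoperative complications related to biliary injuries, while 11 of 15 (73.3%) falls short.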

| Variables | Mean (±SD) | Score | Subcategory |
|---|---|---|---|
| **Preoperative** | | | |
| BMI | 4.07 (0.799) | 0 to +4 | BMI 18–24.9: +0; BMI 25–29.9: +1; BMI 30–35: +2; BMI 35–40: +3; BMI > 40: +4 |
| Previous abdominal open surgery | 4.27 (0.884) | +4 | |
| Surgical setting (emergency cholecystectomy) | 4.60 (0.507) | +5 | |
| Grade of inflammation according to Tokyo Guidelines (in emergency cholecystectomy) | 4.87 (0.352) | +4 or +5 | Grade I: +4; Grade II: +5; Grade III excluded |
| Presence of anatomical bile duct variations | 4.20 (1.08) | +4 | |
| **Intraoperative** | | | |
| Critical view of safety (CVS) identification | 4.67 (0.72) | +5 | |
| Minor intraoperative injuries to the BT | 4.07 (1.33) | −4 | |
| Major intraoperative injuries to the BT | 4.73 (1.03) | −5 | |
| **Postoperative** | | | |
| Postoperative complications related to biliary injuries | 4.07 (1.62) | −4 | |
| Mortality | 4.40 (1.40) | −4 | |
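The point values in the table above can be turned into a per-case score. The sketch below is illustrative only and rests on several assumptions that this section does not confirm: that the individual points are simply summed, that elective surgery and absent factors contribute 0, that a BMI of exactly 35 falls in the +3 band (the table lists 35 in two bands), and that a BMI below 18 is out of scope. The `Case` class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Case:
    """One laparoscopic cholecystectomy, described by the variables in the table."""
    bmi: float
    previous_open_surgery: bool = False
    emergency: bool = False
    tokyo_grade: Optional[int] = None  # 1 or 2; only meaningful for emergency cases
    bile_duct_variation: bool = False
    cvs_identified: bool = False
    minor_bt_injury: bool = False
    major_bt_injury: bool = False
    postop_biliary_complication: bool = False
    mortality: bool = False


def bmi_points(bmi: float) -> int:
    """Map BMI to 0..+4 points; band edges at 35 and below 18 are assumptions."""
    if bmi < 18:
        raise ValueError("BMI below 18 is not covered by the table")
    if bmi < 25:
        return 0
    if bmi < 30:
        return 1
    if bmi < 35:
        return 2
    if bmi <= 40:
        return 3
    return 4


def case_score(case: Case) -> int:
    """Sum the table's points for one case (simple summation is an assumption)."""
    total = bmi_points(case.bmi)
    total += 4 if case.previous_open_surgery else 0
    if case.emergency:
        total += 5
        if case.tokyo_grade == 1:
            total += 4
        elif case.tokyo_grade == 2:
            total += 5
        elif case.tokyo_grade is not None:
            raise ValueError("Tokyo Grade III cases are excluded from the score")
    total += 4 if case.bile_duct_variation else 0
    total += 5 if case.cvs_identified else 0
    # Adverse events subtract points.
    total -= 4 if case.minor_bt_injury else 0
    total -= 5 if case.major_bt_injury else 0
    total -= 4 if case.postop_biliary_complication else 0
    total -= 4 if case.mortality else 0
    return total


# Emergency case, BMI 32, Tokyo Grade II, CVS identified: 2 + 5 + 5 + 5
difficult = Case(bmi=32, emergency=True, tokyo_grade=2, cvs_identified=True)
# Elective case, BMI 22, CVS identified, minor biliary tract injury: 0 + 5 - 4
simple = Case(bmi=22, cvs_identified=True, minor_bt_injury=True)

print(case_score(difficult))  # 17
print(case_score(simple))     # 1
```

Keeping the case description in a dataclass and the point values in one function makes it easy to swap in a different aggregation rule if the summation assumption does not hold.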

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Reitano, E.; Famularo, S.; Dallemagne, B.; Mishima, K.; Perretta, S.; Riva, P.; Addeo, P.; Asbun, H.J.; Conrad, C.; Demartines, N.; et al. Educational Scoring System in Laparoscopic Cholecystectomy: Is It the Right Time to Standardize? Medicina 2023, 59, 446. https://doi.org/10.3390/medicina59030446