1. Introduction

With the increasing demands of road traffic safety and the decreasing cost of data acquisition equipment, driving behavior identification has become a research hotspot in recent years. Real-time driving behavior identification is one of the most important basic modules of driving assistance systems [1,2], which has a wide range of applications in driver state monitoring [3,4,5], driving style analysis [6,7,8,9], automobile insurance [1], and fuel consumption optimization [10].

Conceptually, there are two types of driving behaviors: macroscopic driving behavior and microscopic driving behavior. The macroscopic driving behavior refers to the overall driving states or patterns of the vehicle during a relatively long period of driving, such as normal, fatigue, aggressive, and other stages [11,12], while the microscopic driving behavior is more specific to short-range operations, usually including stop, acceleration, deceleration, and others, and is the focus of this paper. Referring to previous studies [13,14,15,16,17], the five most typical driving behaviors were selected in this paper: lane keeping, acceleration, deceleration, turning, and lane change.

Currently, the methods of driving behavior identification can be divided into two main categories. The first uses thresholds of vehicle kinematic parameters to identify the start and end of driving behaviors. Ma et al. [18] identified three kinds of driving behaviors, namely, turning, acceleration, and deceleration, by thresholds of vehicle angular velocity and longitudinal acceleration, with three styles, aggressive, normal, and cautious, to explore the differences in driving styles during different driving stages of online car-hailing. Although this method is simple and easy to comprehend, most of the thresholds in existing research are determined empirically, leading to weak identification performance, and the identified driving behaviors require manual verification in most cases. The second category, machine learning and deep learning-based methods, has recently become more popular in transportation studies. Since driving behaviors usually last for a period of time, identification is essentially a time series classification problem, where each sample is a two-dimensional matrix with a time dimension and a feature dimension. Classic machine learning algorithms usually solve such problems by flattening features or dividing the time series into different time windows to extract statistical features, such as the mean, standard deviation, or other metrics, as input [19,20]. However, these extracted features cannot reflect the chronological characteristics of driving behaviors; thus, some researchers have used the dynamic time warping (DTW) algorithm to measure the sequence-to-sequence distance more reasonably [14]. Zheng et al. [1] used the KNN-DTW algorithm to identify two kinds of driving behaviors, left lane change and right lane change, with identification accuracies of 81.97% and 71.77%, respectively. Considering that this was a simple binary classification problem, such accuracies were relatively low, which can be attributed to the weakness of the algorithm itself. A more effective approach is to use deep learning algorithms to improve the nonlinearity and generalization ability [21]. Xie et al. [22] used the UAH-DriveSet public dataset, selected seven features, namely, velocity, tri-axis accelerations, and tri-axis angular velocities, and employed a convolutional neural network (CNN) to identify six kinds of driving behavior: lane keeping, acceleration, braking, turning, left lane change, and right lane change, with an average F1 of 0.87. Similarly, Xie et al. [16] compared three window-based feature extraction methods (statistical values, principal component analysis, and stacked sparse auto-encoders) and used the random forest method to classify driving behaviors on three public datasets: UAH, UW, and IMS.
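The contrast between window statistics and DTW drawn above can be illustrated with a minimal sketch. It is not the implementation used in any of the cited studies: the function names `window_stats` and `dtw_distance`, the absolute-difference local cost, and the toy speed profiles are all assumptions made for illustration.

```python
from statistics import mean, pstdev


def window_stats(seq):
    """Summarise a window by its mean and (population) standard deviation."""
    return (mean(seq), pstdev(seq))


def dtw_distance(a, b):
    """Classic dynamic-programming DTW with an absolute-difference local cost.

    This is the textbook O(len(a) * len(b)) recursion, without any
    warping-window constraint (an assumption made for brevity).
    """
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # a[i-1] aligned with an already-used b[j-1]
                cost[i][j - 1],      # b[j-1] aligned with an already-used a[i-1]
                cost[i - 1][j - 1],  # both sequences advance together
            )
    return cost[n][m]


# Hypothetical toy profiles: a speed ramp-up and the same ramp reversed.
speeding_up = [0.0, 1.0, 2.0, 3.0]
slowing_down = [3.0, 2.0, 1.0, 0.0]
```

Here `window_stats` returns identical values for `speeding_up` and `slowing_down`, so a classifier fed only the mean and standard deviation could not tell them apart, whereas `dtw_distance` between the two is strictly positive. DTW also tolerates small time shifts: two copies of the same ramp that start one sample apart still have a distance of zero.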

The data used in the above research were all vehicle kinematic data only, and the limited information from a single source leads to poorer identification results. Driving behavior is not only closely related to vehicle motion but is also expressed through driver states. Therefore, some researchers have incorporated driver states, such as eye-movement features [23] and physiological features [24], to analyze driving behaviors. Guo et al. [25] identified the driving intention to change lanes using a bidirectional LSTM network based on an attention mechanism with vehicle kinematic data, driver maneuver data, driver eye-movement data, and head rotation data as input, and achieved an accuracy of 93.33% at 3 s prior to the lane change. However, eye-movement data and physiological data are mostly collected in an intrusive manner, which can interfere with driving. To the authors’ knowledge, there is currently no research on real-time driving behavior identification considering drivers’ facial expressions. Fortunately, it has been shown that drivers’ driving behaviors and emotions are closely related [26,27,28]. A study by Precht et al. [29] showed that drivers’ anger emotions can increase the frequency of their aggressive driving behaviors, such as rapid deceleration and rapid turning, while such aggressive driving behaviors are intentional rather than potentially influenced by emotions. Kadoya et al. [30] found that negative emotions (anger and sadness) of taxi drivers had significant impacts on increased driving speed, while a neutral emotional state was related to decreased speed. It is therefore intuitive to use driver expressions to identify driving behaviors. For example, drivers need to look at both side-mirrors when turning or changing lanes, which may lead to changes in expressions (such as eyebrow shapes), and in fact the eye-movement features in some studies were also deduced from driver expressions [31].

This study aims to integrate driver expressions into driving behavior identification without interfering with drivers, and to monitor vehicle movement and driver states in real time for better analysis of driving style and risks. To this end, an online car-hailing naturalistic driving test is conducted to collect vehicle kinematic data and driver expression data in a non-intrusive way, and a sliding time window is applied to the whole dataset to generate driving behavior identification samples. The stacked long short-term memory (S-LSTM) network is employed to identify five kinds of driving behaviors, namely, lane keeping, acceleration, deceleration, turning, and lane change, while the artificial neural network (ANN) and XGBoost algorithms are used as a comparison to validate the effectiveness of the S-LSTM algorithm.
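The sliding time window used for sample generation can be sketched as follows. This is a minimal illustration rather than the procedure used in this study: the function name `sliding_windows` and the 0.5 s step are assumptions, while the 10 Hz sampling frequency and the 3.5 s window (reported as optimal in the conclusions) come from the paper. How each window is assigned a behavior label is not covered here.

```python
def sliding_windows(records, window_s=3.5, step_s=0.5, hz=10):
    """Cut a time-ordered list of feature rows into fixed-length windows.

    records  : list of per-timestep feature rows, sampled at `hz`
    window_s : window length in seconds (3.5 s is reported as optimal)
    step_s   : shift between consecutive windows in seconds (assumed value)
    Returns a list of windows; each window is a list of `window_s * hz` rows,
    i.e. a two-dimensional (time x feature) sample.
    """
    size = round(window_s * hz)
    step = round(step_s * hz)
    if size <= 0 or step <= 0:
        raise ValueError("window and step must span at least one sample")
    return [
        records[start:start + size]
        for start in range(0, len(records) - size + 1, step)
    ]


# Hypothetical input: 10 s of data at 10 Hz with two features per row.
demo_records = [[float(t), 0.1 * t] for t in range(100)]
demo_windows = sliding_windows(demo_records)
```

With these settings each sample contains 35 time steps, and consecutive samples overlap by 30 steps. A smaller step yields more, but more strongly correlated, samples.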

2. Data Collection and Pre-Processing

An online car-hailing naturalistic driving test was conducted in Nanjing, China, where drivers drove in real scenarios without any test-related interference. By posting recruitment information on the Nanjing online car-hailing platform, a total of 22 drivers were recruited. However, the faces of 10 drivers were occluded or too far from the camera for their expression data to be recognized reliably, so 12 drivers were ultimately retained. Considering the long test time and high sampling frequency, a dataset with the test results from 12 drivers should already be sufficient [32,33,34]. Due to the specificity of this occupation, the 12 drivers were all male, with an average age of 36 years, and they all had three or more years of driving experience. These drivers were informed of the specific requirements of the test and received sufficient training before the formal test. The test was conducted during the daytime (08:00–20:00) under good weather conditions. In order to ensure the generality of the road environment, the test vehicles were free to take orders in the Nanjing area, with no restrictions on the test routes, which included urban expressways, major arterial roads, minor arterial roads, and local streets. The data were collected in a non-intrusive way during the test so as to ensure their validity.

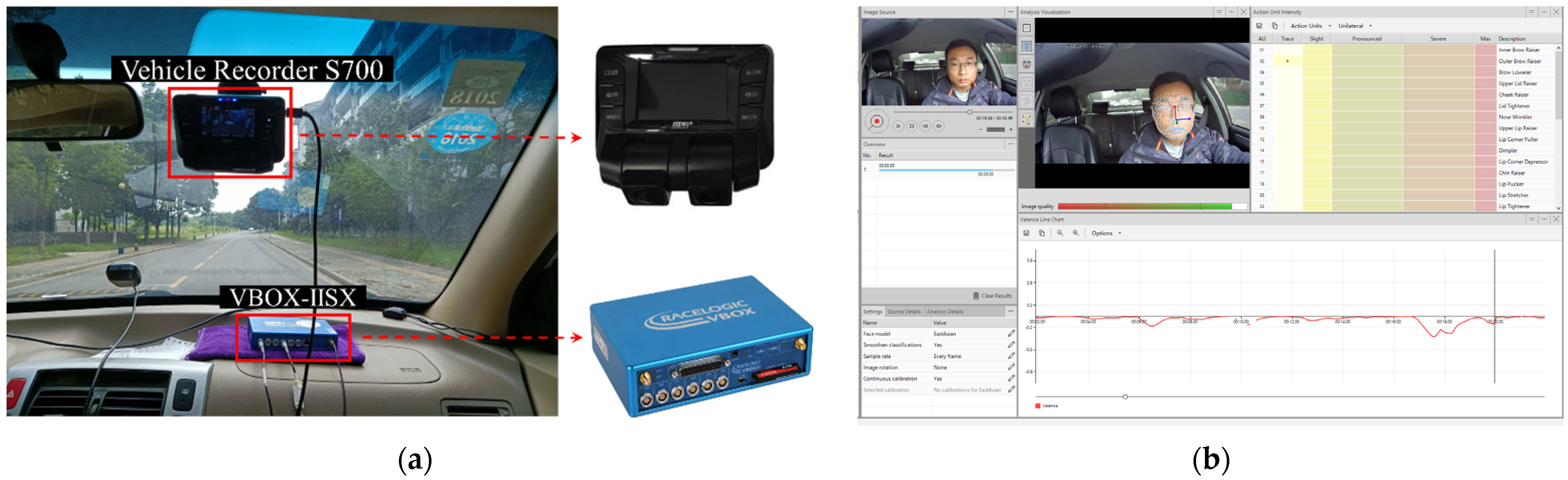

In this test, the vehicle kinematic data were collected by VBOX-IISX GPS data loggers with a sampling frequency of 10 Hz. The outside ambient video and driver video information were collected by the S700 Vehicle Recorder, while the driver expression data were obtained by processing the driver videos with FaceReader 8.0, a tool that automatically analyzes facial expressions and provides an objective assessment of a driver’s emotion. The test equipment is shown in Figure 1.

The data were collected over 12 days from the end of 2018 to early 2019 and consisted of 3,120,578 pieces of vehicle kinematic data and 905.5 GB of video clips. The vehicle kinematic data contained 12 features, which are described in Table 1. The video clip data include the information from outside ambient videos and driver videos.

Driver expression data were obtained by processing driver videos using FaceReader 8.0, including the action intensity of the 20 most common facial action units with a frequency of 10 Hz and a value range of 0–1, where larger values indicate greater action intensity. The description of each action unit is shown in Table 2.

Due to equipment problems, both the vehicle kinematic data and the driver expression data had a small portion of missing values. As the data sampling frequency is relatively high (10 Hz), linear interpolation was considered sufficient to fill in these missing values.
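A minimal sketch of such gap filling is given below. It assumes that missing samples are marked as `None` in an evenly sampled series and that gaps at the very start or end of a series are left untouched; both conventions, as well as the function name `interpolate_missing`, are assumptions rather than details reported in this study.

```python
def interpolate_missing(values):
    """Fill interior None gaps by linear interpolation between known neighbors.

    Assumes evenly spaced samples (e.g. 10 Hz), so interpolating over the
    sample index is equivalent to interpolating over time.
    Leading and trailing gaps have only one known neighbor and stay None.
    """
    filled = list(values)
    known = [i for i, v in enumerate(filled) if v is not None]
    for left, right in zip(known, known[1:]):
        gap = right - left
        for k in range(1, gap):
            fraction = k / gap
            filled[left + k] = filled[left] + (filled[right] - filled[left]) * fraction
    return filled


# Hypothetical speed trace (km/h) with two dropped samples.
demo_speed = interpolate_missing([30.0, None, None, 36.0, 37.0])
```

In the example, the two dropped samples are replaced by 32.0 and 34.0, the values lying on the straight line between the neighboring measurements.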

Yaw rate plays an important role in driving behavior analysis [14,15,16] and was therefore calculated from the heading information. First, the heading data were converted to a cumulative heading angle so that the angle could take arbitrary values rather than wrapping around. Then, the cumulative heading angle was differentiated with respect to time to obtain the yaw rate in °/s.
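The two steps above can be sketched as follows. The sketch assumes that the heading is recorded in degrees within [0, 360), that the vehicle turns by less than 180° between consecutive samples, and that a simple forward difference is used for differentiation; the function names are hypothetical and the study's exact numerical scheme is not specified here.

```python
def cumulative_heading(heading_deg):
    """Unwrap a heading series in degrees so it no longer jumps at 0/360."""
    if not heading_deg:
        return []
    cumulative = [heading_deg[0]]
    for previous, current in zip(heading_deg, heading_deg[1:]):
        # Map the raw difference into [-180, 180) to remove wrap-around jumps.
        delta = (current - previous + 180.0) % 360.0 - 180.0
        cumulative.append(cumulative[-1] + delta)
    return cumulative


def yaw_rate(heading_deg, hz=10.0):
    """Forward-difference yaw rate in deg/s from a heading series sampled at hz."""
    unwrapped = cumulative_heading(heading_deg)
    return [(b - a) * hz for a, b in zip(unwrapped, unwrapped[1:])]


# Hypothetical steady turn that crosses north (359 deg -> 0 deg).
demo_heading = [358.0, 359.0, 0.0, 1.0]
demo_yaw = yaw_rate(demo_heading)
```

Without the unwrapping step, the transition from 359° to 0° would be read as a jump of −359° in 0.1 s; after unwrapping, the heading continues to 360° and 361° and the yaw rate stays at a constant 10 °/s.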

In addition, to reduce the noise and improve the stability, the vehicle kinematic data were smoothed using a Savitzky–Golay filter, and the specific steps and parameters were taken from a study by Brombacher et al. [6].
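To make the smoothing step concrete, the sketch below applies a Savitzky–Golay filter with a 5-point window and a quadratic polynomial, for which the convolution coefficients are the well-known (−3, 12, 17, 12, −3)/35. These settings are illustrative assumptions only; the parameters actually used follow Brombacher et al. [6] and are not restated here. Leaving the first and last two samples unsmoothed is likewise a simplification.

```python
# Savitzky-Golay smoothing coefficients for a 5-point window and a
# quadratic (or cubic) local polynomial. ASSUMED settings for illustration.
SG_COEFFS_5 = (-3.0, 12.0, 17.0, 12.0, -3.0)
SG_NORM_5 = 35.0


def savgol_smooth_5(signal):
    """Smooth a 1-D signal with a 5-point quadratic Savitzky-Golay filter.

    Interior samples are replaced by the value of the least-squares quadratic
    fitted to the 5 surrounding points; the two samples at each edge are
    copied unchanged (a simplifying assumption).
    """
    smoothed = list(signal)
    half = len(SG_COEFFS_5) // 2
    for i in range(half, len(signal) - half):
        window = signal[i - half:i + half + 1]
        smoothed[i] = sum(c * x for c, x in zip(SG_COEFFS_5, window)) / SG_NORM_5
    return smoothed


# Hypothetical acceleration trace with a single-sample noise spike.
demo_noisy = [0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0]
demo_smooth = savgol_smooth_5(demo_noisy)
```

The filter attenuates the isolated spike from 1.0 to 17/35 while leaving any locally quadratic trend unchanged, which is why it tends to preserve the shape of acceleration and braking profiles better than a plain moving average. In practice, a library routine such as `scipy.signal.savgol_filter` would normally be used with the chosen window length and polynomial order.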

5. Conclusions

In this paper, high-precision vehicle kinematic data and high-definition video data were collected in a non-intrusive way through an online car-hailing naturalistic driving test in Nanjing. This approach captured rich information on driving behaviors without interfering with driving, improved the identification of driving behaviors, and provides theoretical support for analyzing driving behavior using multi-source data.

In order to determine a reasonable time dimension, 10 different time windows were compared for generating driving behavior samples. The S-LSTM model was constructed to identify five kinds of driving behaviors: lane keeping, acceleration, deceleration, turning, and lane change, while two machine learning algorithms, the ANN and XGBoost, were used as comparisons to verify the effectiveness of the S-LSTM model. The results show that the S-LSTM identifies driving behaviors better than the ANN and XGBoost algorithms and that the driver expression data enhance the identification results, which implies that the S-LSTM model could mine richer information from multi-source data and is suitable for solving such time series classification problems with many features and large data volumes. It has also been demonstrated that time windows that are too long or too short are not conducive to driving behavior identification, with 3.5 s being optimal. In addition, the relatively poorer identification of lane-change behaviors is attributed to the fact that lane-change behaviors are more complex.

In summary, the main contribution of this paper is to propose a real-time driving behavior identification framework based on the fusion of multi-source data, which innovatively integrates driver expressions into driving behavior identification. While driving, the driving behavior identification results are fed back to the driving monitoring platform in real time for driving style analysis and driving risk prediction, and can also be fed back to insurance companies for analysis, allowing them to provide more preferential policies for cautious and steady drivers. In addition, scores for drivers with respect to their driving behaviors can even be provided on a real-time basis to foster good driving habits.

In future work, a more diverse sample of drivers will be employed (different driving skills, genders, and ages) to improve the generality of the research. Extra data sources, such as road alignment data, could be considered to further improve the identification results, and driving behavior categories can be refined. For example, turning behaviors can be further divided into left-turn and right-turn behaviors. In addition, the real-time identification can be upgraded to real-time prediction, so as to monitor driver states and predict driving risks in a more timely and effective manner.