1. Introduction

The growth of the electric vehicle (EV) industry has captivated governments, automakers, and energy companies. EVs are seen as a viable solution to the depletion of fossil resources and rising pollution [1]. It is widely believed that the widespread adoption of EVs can reduce greenhouse gas emissions (mainly carbon dioxide) [2]. Meanwhile, falling battery prices and government incentives are expected to drive rapid growth in the EV fleet [3]. However, the increased charging demand resulting from the rapid development of EVs also poses various challenges to the grid. EV charging load has a significant impact on the stable operation of the distribution network [4], including degraded power quality and greater difficulty in optimizing and controlling grid operation [5,6]. Research on EV charging load forecasting is therefore carried out not only to ensure the economical and stable operation of the power system [7] but also to support the development of EVs [8].

EV charging load forecasting approaches can currently be divided into probabilistic models, time series models, and machine learning models. The probabilistic modeling method uses statistics and queuing theory to establish probabilistic models of residents’ charging and travel behavior and then forecasts the load by Monte Carlo simulation. Taylor et al. [9] used the Monte Carlo method to establish a large-scale charging demand model that considers EV type, penetration rate, charging scenario, and other factors. In [10], the arrival times of EVs at the charging station are assumed to follow a Poisson distribution, and the charging load is predicted based on queuing theory. As research has deepened, the temporal and spatial distribution of EV charging load has attracted the interest of many researchers. Shun et al. [11] established a probabilistic model of the temporal and spatial distribution of EVs based on travel chains and Markov decision processes. Chen et al. [12] applied origin–destination (OD) matrix analysis to plan the driving paths of logistics EVs and solved for the charging demand through a mixed-integer programming model. Xing et al. [13] proposed a data-driven EV charging load prediction method that uses Didi user travel data to establish a traffic network model, a vehicle spatiotemporal transfer model, and a resident travel probability model.

Currently, time series and machine learning algorithms are commonly employed to forecast EV charging load in the short term. The exponential smoothing model [14], the linear regression (LR) model [15], and the autoregressive integrated moving average (ARIMA) model [16] are the most commonly used time series models. Although time series models have simple structures and require little training, they cannot capture the nonlinear properties of load series. With the rapid advancement of artificial intelligence, intelligent algorithms such as artificial neural networks (ANNs) and deep neural networks are increasingly used to forecast EV charging load. Neural networks have strong feature extraction capability and can form nonlinear mapping relationships [17], which effectively addresses the shortcomings of time series models. In [18], support vector regression (SVR) based on an evolutionary algorithm is proposed for electric bus charging load forecasting. Yi et al. [19] proposed a multi-step EV load prediction model based on long short-term memory (LSTM), and the results suggest that the model can accurately predict sequence data. In [20], LSTM models perform better and provide higher accuracy than ANNs. The gated recurrent unit (GRU) is an efficient variant of LSTM with a simpler network structure. Zhu et al. [21] introduced GRU into short-term forecasting of EV charging load. To further improve short-term load forecasting performance, hybrid forecasting methods built on LSTM and other recurrent neural networks (RNNs) have also been proposed. Feng et al. [22] proposed an EV charging load prediction method that combines a multivariate residual corrected grey model (EMGM) with an LSTM network. Dabbaghjamanesh et al. [23] applied a Q-learning technique based on ANN and RNN to improve the short-term prediction accuracy of EV charging load. Models based on LSTM and GRU can learn long-term temporal correlations; however, because they lack convolution, their feature extraction capability still needs to be enhanced. Therefore, it is difficult for the above models to effectively extract and utilize the feature information in EV charging load.

Feature extraction approaches are considered one of the most viable solutions to this problem. Convolutional neural networks (CNNs) have excellent feature extraction capability [24] and are often used for feature extraction in short-term load forecasting. Li et al. [25] applied a CNN model optimized by an evolutionary algorithm to EV charging load prediction. In addition, the CNN-LSTM model, which combines CNN and LSTM, is often used in conventional short-term power load forecasting [26]. In the CNN-LSTM model, the CNN extracts feature information from load-related influencing factors, and the LSTM learns the temporal dependency between the feature sequence extracted by the CNN and the output [27]. Yan et al. [28] proposed a hybrid model based on CNN and LSTM to predict the short-term electricity load of a single household. However, most of these methods ignore the long-term temporal relationships of the input variables, so the load forecasting model lacks adequate prior knowledge.

Furthermore, EVs are abundant in urban areas, and EV users’ travel behavior is influenced by many random factors, resulting in increasingly complex fluctuations in EV charging load. Given this problem, it is difficult to forecast accurately with a short-term load forecasting model that operates on a single time scale [29]. Short-term load forecasting can be improved by decomposing the load into multiple intrinsic mode functions, predicting each component separately, and reconstructing the sub-model predictions [30]. Wang et al. [31] proposed a “decomposition–prediction–reconstruction” model based on empirical mode decomposition (EMD) and LSTM, which effectively improved load prediction accuracy.

One-dimensional convolutional neural networks (1DCNNs) can extract features from one-dimensional sequences and are commonly used to extract feature information from time series. Wang et al. [32] used a 1DCNN to extract fused features of bearing vibration and sound signals for bearing fault diagnosis. In [33], the influent load is first decomposed by EMD, and a 1DCNN then extracts the latent features of the periodic signal of each intrinsic mode function. However, although a 1DCNN can extract features at various time scales by adjusting the size of its receptive field, it cannot capture the temporal dependencies within the sequence. The temporal convolutional network (TCN), which combines the feature processing strengths of CNNs with the time-domain modeling strengths of RNNs, makes it possible to extract dependencies across long intervals of historical data [34]. Yin et al. [35] proposed a feature fusion TCN structure that fuses model output features at multiple time delay scales. Because the TCN is built on convolutions, it can process data in parallel on a large scale and computes faster than RNNs such as LSTM [36]. Although signal decomposition methods can obtain the components of EV charging load at various time scales, they still require selecting and constructing low-dimensional, highly discriminative features, which not only adds subjectivity and complexity to the method but also risks losing important information.
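The dilated causal convolution that lets a TCN reach far back into the load history can be illustrated with a minimal pure-Python sketch. It is not the TCN configuration used in this paper: the kernel size, the doubling dilations (1, 2, 4, 8), the fixed averaging weights, and the helper names `causal_dilated_conv1d`, `receptive_field`, and `tcn_stack` are all illustrative assumptions.

```python
def relu(v):
    return v if v > 0.0 else 0.0


def causal_dilated_conv1d(x, weights, dilation):
    """Dilated causal 1D convolution on a single-channel sequence.

    y[t] = sum_k weights[k] * x[t - k * dilation], with x treated as zero
    before the first sample, so y[t] never depends on future inputs.
    """
    y = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(weights):
            idx = t - k * dilation
            if idx >= 0:  # implicit left zero-padding keeps the layer causal
                acc += w * x[idx]
        y.append(acc)
    return y


def receptive_field(kernel_size, dilations):
    """Time steps (including the current one) seen by a stack of dilated layers."""
    return 1 + (kernel_size - 1) * sum(dilations)


def tcn_stack(x, kernel_size=2, dilations=(1, 2, 4, 8)):
    """Residual stack of dilated causal convolutions with doubling dilation.

    Fixed averaging weights stand in for learned ones; a real TCN block also
    uses multiple filters, weight normalization, and dropout.
    """
    h = list(x)
    weights = [1.0 / kernel_size] * kernel_size
    for d in dilations:
        conv = causal_dilated_conv1d(h, weights, d)
        h = [a + relu(b) for a, b in zip(h, conv)]  # residual connection
    return h
```

With kernel size 2 and dilations 1, 2, 4, and 8, each output sees 1 + (2 - 1)(1 + 2 + 4 + 8) = 16 samples, i.e. 4 h of 15 min data, while the number of layers grows only logarithmically with the covered history.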

Building on the foregoing research, this paper proposes an EV charging load forecasting model based on a multi-channel convolutional neural network and temporal convolution network (MCCNN-TCN). The MCCNN model mines the fluctuation features of EV charging load at multiple time scales, and the TCN model establishes global temporal dependencies among the local features extracted by the MCCNN at different time scales. In addition, accurate load forecasting often relies on a thorough understanding of the factors that increase or decrease consumer demand [37]. EV charging load is affected by numerous factors, including temperature, date type, traffic conditions, and user travel behavior [8]. Therefore, this paper introduces the maximum information coefficient (MIC) and the Spearman rank correlation coefficient and proposes a similar day method based on weighted grey relational analysis to screen historical loads. The main contributions of this paper are as follows:

- (1) MIC was applied to eliminate input data redundancy and reduce model complexity. It was used to select the meteorological variables that are strongly associated with EV charging load, and the selected variables were used as inputs to both the prediction model and the similar day selection model;

- (2) A similar day selection model based on weighted grey relational analysis was proposed. The Spearman rank correlation coefficient of the weekly average daily load was used to calculate week type similarity. Then, with the meteorological variables selected by MIC and the week type similarity as inputs, the similar day selection model was used to choose similar day loads as inputs to the forecasting model;

- (3) An MCCNN-TCN model framework was built. Combining the multi-channel 1DCNN with the TCN establishes global temporal dependencies among time series features at multiple time scales, which effectively improves prediction performance.

The remainder of this paper is organized as follows. Section 2 introduces a short-term EV charging load forecasting framework based on the MCCNN-TCN model. Section 3 presents experiments on a real dataset from a grid company and compares the proposed model with other models. Section 4 discusses the experimental results of the proposed model in comparison with other state-of-the-art methods. Section 5 gives the conclusions and directions for future research.

3. Results

This paper studies short-term forecasting of EV charging load in the urban area of a city in northern China. The dataset was collected from 38 public DC charging stations in the city’s urban area from 1 January 2019 to 31 March 2020. Of these stations, 8, 12, 11, and 7 are located in residential, commercial, work, and leisure areas, respectively. The stations have 298 charging poles in total, each with a maximum charging power of 60 kW. The dataset includes the active power of the charging poles, the transaction power, the charging start time, the charging end time, and other records. The active power of the charging poles was sampled at 15 min intervals.

Meteorological data, obtained from the China Meteorological Data Network, include temperature, humidity, precipitation, visibility, wind speed, and weather type. Temperature, humidity, and precipitation were interpolated with splines so that their samples align in time with the load samples. Other data include date type, season, etc.
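The spline alignment step could look roughly like the pure-Python sketch below. It assumes hourly meteorological observations (the source resolution is not stated here) and uses a natural cubic spline, which is one common choice; the paper does not specify the spline type, and `natural_cubic_spline` and `hourly_to_15min` are hypothetical helpers.

```python
import bisect


def natural_cubic_spline(xs, ys):
    """Return a callable evaluating the natural cubic spline through (xs, ys).

    xs must be strictly increasing; the second derivative is zero at both ends.
    """
    n = len(xs) - 1
    h = [xs[i + 1] - xs[i] for i in range(n)]
    # Right-hand side of the tridiagonal system for the quadratic coefficients c.
    alpha = [0.0] * n
    for i in range(1, n):
        alpha[i] = 3.0 / h[i] * (ys[i + 1] - ys[i]) - 3.0 / h[i - 1] * (ys[i] - ys[i - 1])
    # Forward sweep of the Thomas algorithm (natural boundary: c[0] = c[n] = 0).
    l, mu, z = [1.0] * (n + 1), [0.0] * (n + 1), [0.0] * (n + 1)
    for i in range(1, n):
        l[i] = 2.0 * (xs[i + 1] - xs[i - 1]) - h[i - 1] * mu[i - 1]
        mu[i] = h[i] / l[i]
        z[i] = (alpha[i] - h[i - 1] * z[i - 1]) / l[i]
    # Back substitution for the per-interval coefficients b, c, d.
    b, c, d = [0.0] * n, [0.0] * (n + 1), [0.0] * n
    for j in range(n - 1, -1, -1):
        c[j] = z[j] - mu[j] * c[j + 1]
        b[j] = (ys[j + 1] - ys[j]) / h[j] - h[j] * (c[j + 1] + 2.0 * c[j]) / 3.0
        d[j] = (c[j + 1] - c[j]) / (3.0 * h[j])

    def evaluate(x):
        # Locate the interval [xs[j], xs[j + 1]] containing x (clamped to the ends).
        j = min(max(bisect.bisect_right(xs, x) - 1, 0), n - 1)
        dx = x - xs[j]
        return ys[j] + b[j] * dx + c[j] * dx ** 2 + d[j] * dx ** 3

    return evaluate


def hourly_to_15min(hourly_values):
    """Resample an hourly series onto a 15 min grid (4 samples per hour)."""
    hours = [float(k) for k in range(len(hourly_values))]
    spline = natural_cubic_spline(hours, hourly_values)
    n_steps = 4 * (len(hourly_values) - 1) + 1
    return [spline(k * 0.25) for k in range(n_steps)]
```

The spline passes exactly through the original hourly observations and fills the three intermediate 15 min points of each hour with a smooth curve.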

All experimental models were implemented in Python 3.6 with the PyTorch framework. The experiments were run on a PC with an Intel Core i7-10300H CPU, an NVIDIA RTX 2060 GPU, and 32 GB of RAM.

3.1. Input Variables Selection and Processing

Based on an investigation of the factors influencing EV charging load, this paper divides them into meteorological factors, date features, and similar daily load. The three types of features are selected and processed as follows.

The MIC between EV charging load and each meteorological factor except weather type was calculated.

Table 1 shows the MIC and Pearson correlation coefficient between EV charging load and temperature, humidity, precipitation, visibility, and wind speed. As shown in Table 1, EV charging load is strongly correlated with temperature, humidity, and rainfall but weakly correlated with visibility and wind speed. At the same time, the influence of weather conditions on EV charging load cannot be ignored [25]. Min–max normalization was used to linearly transform the raw temperature, humidity, and rainfall data to [0, 1]. The index mapping values for weather types follow Ref. [18]. In this paper, the mapping values were set to 0.1, 0.2, and 0.3 for sunny, cloudy, and overcast weather, respectively, and 0.7, 0.1, and 1.5 for light rain or snow, rain or snow, and heavy rain or snow, respectively. Therefore, this paper selected weather type, temperature, humidity, and rainfall as the meteorological features affecting EV charging load and used them as inputs to both the similar day selection model and the prediction model.
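The min–max normalization and the Pearson coefficient reported alongside MIC in Table 1 can be sketched in a few lines of standard-library Python. MIC itself relies on a grid search over mutual information and is usually computed with a dedicated implementation (for example, the minepy package), so it is not reproduced here; the helper names are illustrative.

```python
import math


def min_max_normalize(values):
    """Linearly rescale values to [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def pearson(x, y):
    """Pearson linear correlation coefficient between two equal-length series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)
```

Pearson captures only linear association, which is one reason a nonlinear measure such as MIC is used for feature screening.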

Since month, season, and week type affect the fluctuation characteristics of EV charging load, the season, month, day, week type, weekday, and holiday were selected as date features and used as inputs to the prediction model. Table 2 depicts the date features.
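Because Table 2 is not reproduced in this text, the encoding below is only a hypothetical sketch of how the listed date features could be derived with the standard `datetime` module. It uses the meteorological seasons defined later in this section (March to May spring, June to August summer, September to November autumn, December to February winter); the integer codes and the caller-supplied holiday set are assumptions.

```python
from datetime import date


def meteorological_season(month):
    """Hypothetical codes: 1 spring (MAM), 2 summer (JJA), 3 autumn (SON), 4 winter (DJF)."""
    if month in (3, 4, 5):
        return 1
    if month in (6, 7, 8):
        return 2
    if month in (9, 10, 11):
        return 3
    return 4


def date_features(day, holidays=frozenset()):
    """Encode the date features of one day; `holidays` is a caller-supplied set."""
    is_holiday = day in holidays
    return {
        "season": meteorological_season(day.month),
        "month": day.month,
        "day": day.day,
        "week_type": day.isoweekday(),  # 1 = Monday ... 7 = Sunday
        "weekday": int(day.isoweekday() <= 5 and not is_holiday),  # working-day flag
        "holiday": int(is_holiday),
    }
```

A production version would also need the official calendar's make-up working days, which fall on weekends and are not handled by this simple weekday flag.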

Similar daily loads were obtained from the similar day model. Min–max normalization was adopted to scale EV charging load to [0, 1]. The forecasted load values were then exponentiated to establish a nonlinear relationship between the exponentially mapped forecasts and the historical loads. This mapping eliminates the lag that occurs when the model simply takes the last value of the input sequence as the forecast.

3.2. Performance Evaluation

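The paper considered the root mean square error (RMSE), the mean absolute error (MAE), and the mean absolute percentage error (MAPE) to assess the performance of the forecasting model. These statistical metrics are defined as follows:

$$\mathrm{RMSE}=\sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(y_i-\hat{y}_i\right)^2}$$

$$\mathrm{MAE}=\frac{1}{N}\sum_{i=1}^{N}\left|y_i-\hat{y}_i\right|$$

$$\mathrm{MAPE}=\frac{100\%}{N}\sum_{i=1}^{N}\left|\frac{y_i-\hat{y}_i}{y_i}\right|$$

where $N$ is the number of validation or testing instances, and $y_i$ and $\hat{y}_i$ represent the actual load and forecasted load of the $i$-th instance, respectively.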

Each metric has its own advantages and disadvantages. The RMSE is the square root of the mean squared deviation between predicted and actual loads; because the deviations are squared, it is sensitive to outliers. The MAE is the mean absolute deviation between forecasted and actual loads. It is more robust to outliers than the RMSE but does not show the size of the prediction bias relative to the load level. The MAPE measures forecast accuracy by the relative difference between forecasted and actual loads; however, it is undefined when the actual load is zero. It is therefore important to use multiple metrics to assess prediction performance.
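The three metrics defined above translate directly into code. The sketch below is a plain standard-library version with illustrative function names; raising an error for zero loads in `mape` is a design choice made here to surface the limitation noted above, not something the paper specifies.

```python
import math


def rmse(actual, forecast):
    """Root mean square error: square root of the mean squared deviation."""
    return math.sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))


def mae(actual, forecast):
    """Mean absolute error."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def mape(actual, forecast):
    """Mean absolute percentage error, in percent; undefined for zero actual loads."""
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual load value is zero")
    return 100.0 * sum(abs((a - f) / a) for a, f in zip(actual, forecast)) / len(actual)
```

For actual loads [10, 20, 40] kW and forecasts [12, 18, 40] kW, the MAE is 4/3 kW, the RMSE is sqrt(8/3), about 1.63 kW, and the MAPE is 10%.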

3.3. Similar Daily Load Selection Based on Weighted Grey Correlation Analysis

In this paper, weather condition, temperature, humidity, rainfall, and week type are selected as the daily features for similar day selection. Because weather condition and week type similarity are coarse-grained (daily) features, whereas temperature, humidity, and rainfall are fine-grained (15 min) features, the latter must be aggregated into coarse-grained daily quantities. This paper used the daily maximum, mean, and minimum temperature, the daily mean humidity, and the daily mean rainfall as coarse-grained characteristics. Therefore, weather condition, daily maximum temperature, daily mean temperature, daily minimum temperature, humidity, rainfall, and week type similarity were selected as daily features. Based on these daily features and the weighted grey relational degree, the similar day set of the forecasting day was obtained.

Taking the EV charging load forecast on 15 December 2019 as an example, the weather forecast parameters for that day are shown in Table 3. Because December falls in winter, the week type similarity obtained by Spearman correlation analysis for this season is shown in Table 4.
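How the week type similarity in Table 4 is constructed is not detailed in this section. A plausible reading, assumed here, is that the similarity between two day types (for example, Monday and Saturday) is the Spearman rank correlation between their average daily load profiles within a season. The sketch computes Spearman's coefficient as the Pearson correlation of average ranks, which handles ties; the helper names are illustrative.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))
```

Because it depends only on ranks, the coefficient compares the shape of two load profiles and is insensitive to any monotonic rescaling of either one.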

Based on the historical meteorological data and week types before the forecasting day (1 December 2019 to 14 December 2019), the weighted grey relational degrees between the forecasting day and each historical day were calculated to obtain the similar day set, which is shown in Table 5.
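The weighted grey relational step can be sketched as follows, assuming the daily features of every day have already been normalized (for example, by min–max scaling). The code uses the standard grey relational coefficient with distinguishing coefficient rho = 0.5 and a weighted sum over features. The feature weights are not given in this section, so they are a caller-supplied assumption, and `weighted_grey_relational_degrees` and `top_k_similar_days` are hypothetical names.

```python
def weighted_grey_relational_degrees(reference, candidates, weights, rho=0.5):
    """Weighted grey relational degree of each candidate day to the reference day.

    reference:  normalized daily features of the forecasting day
    candidates: normalized daily features of each historical day (same order)
    weights:    one weight per feature, summing to 1 (hypothetical values)
    rho:        distinguishing coefficient, conventionally 0.5
    """
    # Absolute differences between the reference and each candidate, per feature.
    deltas = [[abs(r - c) for r, c in zip(reference, cand)] for cand in candidates]
    d_min = min(min(row) for row in deltas)
    d_max = max(max(row) for row in deltas)
    if d_max == 0.0:  # every candidate matches the reference exactly
        return [1.0] * len(candidates)
    degrees = []
    for row in deltas:
        # Grey relational coefficient of each feature, then the weighted sum.
        coeffs = [(d_min + rho * d_max) / (d + rho * d_max) for d in row]
        degrees.append(sum(w * xi for w, xi in zip(weights, coeffs)))
    return degrees


def top_k_similar_days(degrees, k):
    """Indices of the k historical days with the highest relational degree."""
    return sorted(range(len(degrees)), key=lambda i: degrees[i], reverse=True)[:k]
```

A candidate identical to the forecasting day on every feature receives a degree of 1, and the days with the highest degrees form the similar day set.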

3.4. Validating the Multi-Channel Convolutional Neural Network and Temporal Convolution Network Model

3.4.1. Hyperparameters of the Multi-Channel Convolutional Neural Network and Temporal Convolution Network Model

The similar day model results show that the similar day historical load sequence for the forecasting day has a length of 384. In this paper, the number of channels of the multi-channel 1DCNN was set to 4 to fully exploit the characteristics of EV charging load at different time scales. In the multi-channel 1DCNN, the convolution stride of each channel was set to 1, and the Tanh activation function was applied to the output of each convolution for nonlinear mapping. The hyperparameters of the multi-channel 1DCNN are shown in Table 6, those of the TCN model in Table 7, and those of the BP model and output layer in Table 8. Meteorological features, date features, and similar daily loads were selected as input variables of the MCCNN-TCN model, as shown in Table 9.
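The multi-channel idea (four parallel 1D convolutions with stride 1, each followed by Tanh, applied to the 384-point similar day load sequence) can be illustrated with a minimal standard-library sketch. The kernel sizes (4, 16, 48, 96 samples, i.e. 1 h, 4 h, 12 h, and 24 h at 15 min resolution) and the moving-average weights are placeholders rather than the Table 6 values; in the real model the kernels are learned and each channel has several filters.

```python
import math


def conv1d_valid(x, kernel, stride=1):
    """'Valid' 1D convolution (cross-correlation, as in deep learning frameworks)."""
    k = len(kernel)
    return [sum(w * x[t + i] for i, w in enumerate(kernel))
            for t in range(0, len(x) - k + 1, stride)]


def multi_channel_features(x, kernel_sizes=(4, 16, 48, 96)):
    """One convolution per channel, each with a different receptive field, then Tanh."""
    features = []
    for k in kernel_sizes:
        kernel = [1.0 / k] * k  # placeholder weights (moving average); learned in practice
        features.append([math.tanh(v) for v in conv1d_valid(x, kernel)])
    return features
```

Short kernels respond to local, short-term fluctuations, while long kernels smooth them out and expose the daily trend; the TCN then relates these per-scale feature sequences over time.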

3.4.2. Comparative Analysis of Single-Channel and Multi-Channel Convolutional Neural Network and Temporal Convolution Network Model

To verify the advantage of the proposed MCCNN-TCN, it was compared with single-channel 1DCNN-TCN models on the same dataset. Each single-channel 1DCNN-TCN and the MCCNN-TCN had the same TCN structure, differing only in the number of 1DCNN channels. The single-channel 1DCNN-TCN models were set as follows: Model 1: C1-TCN; Model 2: C2-TCN; Model 3: C3-TCN; Model 4: C4-TCN. All single-channel 1DCNN-TCN models and the MCCNN-TCN model used the MSE loss function and were trained with the Adam optimizer, a learning rate of 0.001, and a batch size of 512.

The data from 1 June 2019 to 31 August 2019 were divided into training, validation, and test sets at a ratio of 8:1:1. Each model outputs the load forecast for one time point at a time, and the one-day forecast is obtained by repeating this forecast cyclically for the 96 time points of the day. The RMSE, MAPE, and MAE of each single-channel 1DCNN-TCN model and the MCCNN-TCN model on the test set are shown in Table 10.
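The 8:1:1 division can be sketched as a chronological split, which is an assumption here (the text does not state whether samples were shuffled); keeping the order avoids leaking future information into training. `chronological_split` is a hypothetical helper.

```python
def chronological_split(samples, ratios=(8, 1, 1)):
    """Split an ordered sequence into train/validation/test parts by ratio.

    Order is preserved, so the test set always follows the training data in time.
    Rounding leftovers go to the test set.
    """
    total = sum(ratios)
    n = len(samples)
    n_train = n * ratios[0] // total
    n_val = n * ratios[1] // total
    return samples[:n_train], samples[n_train:n_train + n_val], samples[n_train + n_val:]
```

For 1 June to 31 August 2019 (92 days × 96 samples per day = 8832 points), this yields 7065 training, 883 validation, and 884 test points.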

Table 10 shows that the prediction performance of Models 1 to 4 decreases as the extracted time scale increases. This is because a single-channel 1DCNN-TCN operating at a long time scale loses the local short-term variation features of EV charging load. Conversely, Model 1 performs worse than the MCCNN-TCN model because it does not capture the long-term trend features of EV charging load. The advantage of the MCCNN-TCN model is that it can extract both the local short-term variation features and the long-term trend features of EV charging load. Therefore, its RMSE, MAPE, and MAE are lower than those of the single-channel 1DCNN-TCN models, which shows that extracting multi-scale features of EV charging load can significantly improve prediction accuracy.

3.4.3. Comparative Analysis of Different Forecasting Models

To evaluate the forecasting accuracy and superiority of the proposed model, ANN, LSTM, CNN-LSTM, and TCN prediction models, whose structures are shown in Appendix B Figures A4, A5, A6, and A7, were chosen for comparison.

Table 11 shows the inputs of the ANN, LSTM, and CNN-LSTM models. The TCN model uses the same inputs as the MCCNN-TCN model. The ANN, LSTM, CNN-LSTM, and TCN models all used the MSE loss function and were trained with the Adam optimizer, a learning rate of 0.001, and a batch size of 512. The dataset covered 1 January 2019 to 31 March 2020 and was divided into training, validation, and test sets at a ratio of 8:1:1.

The forecast load curves of the above models on the test set from 1 March to 7 March 2020 are shown in Figure 7. As Figure 7 shows, the actual load is approximately constant from 0:00 to 6:00 every day. During this period, the forecasts of all models except the ANN (BP) model fluctuate and deviate from the actual value; although the ANN forecast remains constant, it deviates significantly from the actual value. The MCCNN-TCN forecast fluctuates less than those of the other models and stays close to the actual value. At the peak of the load curve, the forecasts of the LSTM, ANN, and CNN-LSTM models all deviate to some extent and lag significantly behind the actual values, and the TCN model deviates significantly from the actual values. By comparison, the trend of the MCCNN-TCN forecast agrees with the actual load, and its values are closer to the actual values. In the rising stage of the load curve, the MCCNN-TCN forecast also follows a trend similar to the actual load. Analyzing the forecasting performance of each model in these three stages shows that the MCCNN-TCN model can improve the accuracy of short-term EV charging load forecasting. This is because the MCCNN-TCN model can both learn the variation pattern of EV load on a long time scale and attend to the short-term fluctuation characteristics of EV charging load.

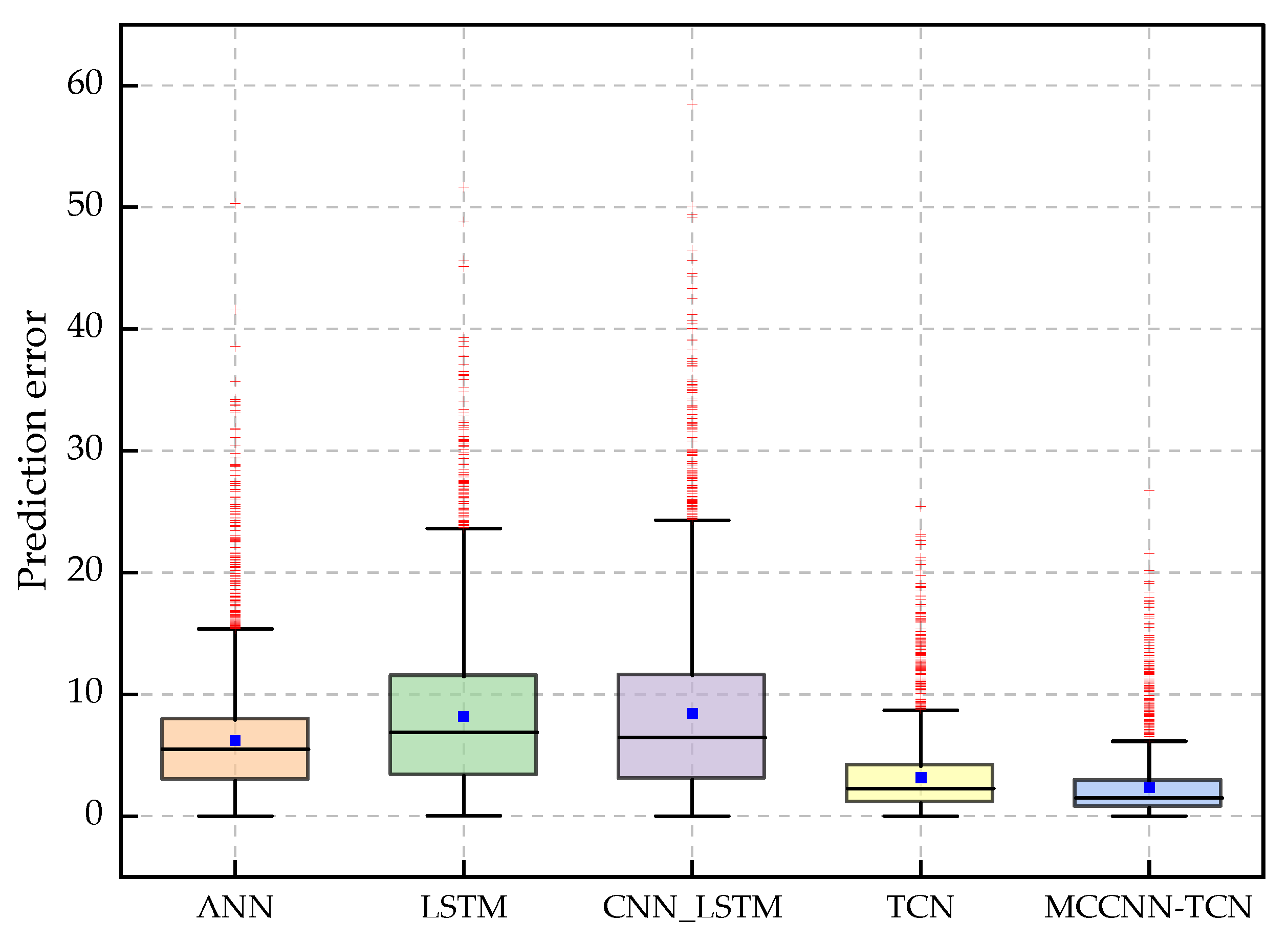

The RMSE, MAPE, and MAE of each model on the test set are shown in Table 12. As Table 12 shows, the MAPE of the MCCNN-TCN model is 13.24%, which is 14.09%, 25.13%, 27.32%, and 4.48% lower than that of the ANN, LSTM, CNN-LSTM, and TCN models, respectively. The RMSE of the MCCNN-TCN model is 4.92 kW, which is also significantly lower than that of the other models. The absolute prediction error boxplots of the five models on the test dataset are shown in Figure 8; the larger the box, the more dispersed the prediction errors. Figure 8 shows that the prediction error range of the MCCNN-TCN model is the narrowest and that of the LSTM is the widest, and the median absolute error of the MCCNN-TCN model is smaller than those of the ANN, LSTM, CNN-LSTM, and TCN models. These results indicate that the MCCNN-TCN model is more effective than the ANN, LSTM, and CNN-LSTM models for forecasting time series with complex fluctuations.

In addition, Appendix A Figure A2 shows that EV charging load exhibits different characteristics in different seasons, so the performance of the proposed model needs to be evaluated further for each season. Following the meteorological definition of the four seasons, spring is March 2019 to May 2019, summer is June 2019 to August 2019, autumn is September 2019 to November 2019, and winter is December 2019 to February 2020. For each season, the historical load and meteorological data were selected and divided into training, validation, and test sets at a ratio of 8:1:1. The prediction errors of the different models on each season’s test set are presented in Table 13.

As shown in Table 13, comparing the prediction results of the five models in each season clearly demonstrates the advantage of the proposed model. Although the performance of each model varies across seasons, the MCCNN-TCN model achieves markedly lower MAPE, RMSE, and MAE than the other models in every season. Taking the spring test set as an example, compared with the other models, the MAPE of the MCCNN-TCN model decreased by 22.62%, 17.98%, 15.73%, and 6.66%, and the MAE decreased by 6.48, 5.43, 5.39, and 1.67 kW, respectively. In addition, on each season’s test set, the RMSE, MAE, and MAPE of both the MCCNN-TCN and TCN models are smaller than those of the other models. However, because the TCN model cannot extract features at multiple time scales, its RMSE, MAE, and MAPE in each season are higher than those of the MCCNN-TCN model. Additionally, as illustrated in Figure 9, the absolute errors of the MCCNN-TCN model are relatively concentrated and much lower than those of the other models in every season. The comparison across the seasonal test sets demonstrates that the proposed MCCNN-TCN model has stable prediction performance, indicating that it can meet the load forecasting demand of every season in a year and has good robustness and engineering application value.

4. Discussion

The comparison with the single-channel 1DCNN-TCN models demonstrates that extracting EV charging load features at different time scales through multiple parallel 1DCNN channels can significantly improve short-term load prediction performance.

The results in Table 12 show that the MCCNN-TCN model can effectively improve short-term load prediction by extracting EV charging load features at multiple scales and relying on the TCN to establish long-term dependencies between these features. The ANN model can only establish shallow nonlinear mappings, which weakens its ability to extract the temporal correlations of EV charging load. Recurrent neural network models such as LSTM have memory and can learn long-term temporal correlations, but their feature extraction is weak because they lack convolution. This makes them less effective at predicting EV charging loads that fluctuate substantially over short periods. The TCN model predicts better than the LSTM and CNN-LSTM models because its convolutional units both extract shallow temporal features and establish temporal dependencies. However, the TCN model can only extract features at a single scale, so its prediction performance is poorer than that of the MCCNN-TCN. Further, the results in Table 13 show that the predictive performance of the proposed MCCNN-TCN model is stable and better than that of the comparison models in every season.

Combined with the above analysis, the proposed EV charging load prediction model achieves high prediction accuracy. However, it relies on accurate meteorological data and EV charging load data to do so, and several issues should therefore be noted when applying it in engineering practice. On the one hand, deviations in the measured meteorological data of the forecasting day will affect the selection of similar daily loads. This paper uses several meteorological and date factors as day features when selecting similar day loads, and the loads of days adjacent to the forecasting day are also added to the similar day set, which makes the similar day selection model somewhat fault-tolerant. On the other hand, power load data from the measurement system contain disturbances caused by errors in the electric power system, outliers due to data encoding errors, and EV charging start and end times that fall between load sampling points. If the deviation of the measured load from the actual value is slight, the resulting deviation of the forecast will also be slight. Conversely, if the deviation is significant, the actual values need to be estimated using data preprocessing techniques such as mean filling, interpolation, and arithmetic mean filtering.
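The preprocessing described above can be sketched under simple assumptions: samples outside a plausible range [lower, upper], or missing (`None`), are treated as outliers and refilled by linear interpolation between the nearest valid neighbors, and a centered moving arithmetic mean filter then smooths residual noise. The bounds, the window length, and the helper names `repair_series` and `moving_mean_filter` are hypothetical.

```python
def repair_series(values, lower, upper):
    """Replace missing or out-of-range samples by linear interpolation."""
    v = [x if x is not None and lower <= x <= upper else None for x in values]
    known = [i for i, x in enumerate(v) if x is not None]
    if not known:
        raise ValueError("no valid samples to interpolate from")
    out = list(v)
    for i, x in enumerate(v):
        if x is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:  # leading gap: copy the first valid value
            out[i] = v[right]
        elif right is None:  # trailing gap: copy the last valid value
            out[i] = v[left]
        else:
            frac = (i - left) / (right - left)
            out[i] = v[left] + frac * (v[right] - v[left])
    return out


def moving_mean_filter(values, window=3):
    """Centered arithmetic mean filter; the window shrinks at the edges."""
    half = window // 2
    out = []
    for i in range(len(values)):
        seg = values[max(0, i - half): i + half + 1]
        out.append(sum(seg) / len(seg))
    return out
```

For example, `repair_series([10.0, 12.0, -5.0, 16.0, None, 20.0], 0.0, 100.0)` replaces the negative reading and the gap with 14.0 and 18.0.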

5. Conclusions

Because of the randomness of EV charging behavior, EV charging load shows pronounced short-term fluctuations within a day. To improve load prediction accuracy, this paper proposes the MCCNN-TCN load forecasting model, which accounts for the multi-time-scale characteristics of EV charging load. The multi-channel 1DCNN was used to extract features of EV charging load at multiple time scales, and the TCN was used to establish global temporal dependencies between these features.

Considering the influence of various factors on the load, MIC was used to reduce the dimension of the meteorological features, and the Spearman coefficient was used to establish the similarity of date types. Then, taking the selected meteorological features and the date type similarity as daily features, a similar day selection model based on the weighted grey relational degree was established to select similar daily loads. The selected meteorological features, date features, and similar daily loads were used as inputs to the MCCNN-TCN model.

The comparative experiments between the single-channel 1DCNN-TCN and MCCNN-TCN models show that the MCCNN-TCN improves the prediction accuracy of EV charging load, indicating that prediction performance can be improved by extracting time series features at different time scales and establishing global temporal dependencies.

Compared with the ANN, LSTM, CNN-LSTM, and TCN models, the MCCNN-TCN network, owing to its structure, can learn the multi-scale features of the EV charging load time series and capture the variation patterns of EV charging load.

The MCCNN-TCN network constructed in this paper does not consider real-time electricity prices. In future work, richer feature data and big data can be exploited to further improve load forecasting accuracy.