Forecasting the Return of Carbon Price in the Chinese Market Based on an Improved Stacking Ensemble Algorithm

Abstract

1. Introduction

1.1. Literature Review

1.1.1. Progress in Carbon Market Prediction

1.1.2. Development of Ensemble Learning

1.1.3. Literature Review Summary

1.2. Objectives and Contributions

- How can the common stacking algorithm be modified to preserve the sequential order of time series training data while also improving predictive power?

- How can the improved stacking ensemble algorithm be applied to carbon return prediction?

- How can the practical value of the improved algorithm be evaluated, and what investment guidance do the results provide?

- This study modifies the common stacking algorithm so that it is better suited to time series forecasting; the results show that the modified algorithm significantly improves forecast accuracy and increases economic gains;

- The two ensemble forecasting frameworks we constructed are robust and accurate in predicting carbon price returns;

- We offer a novel exploration of the predictability of carbon option returns with the stacking ensemble algorithm, from both statistical and economic perspectives, and the characteristics of carbon assets that we identify are informative for practitioners and academics;

- We link the carbon return forecast to the volatility of the carbon market, which opens a new perspective for capturing how return predictability varies under different market conditions.

2. Methodology and Models

2.1. Improvement on the Stacking Ensemble Algorithm

2.1.1. The Common Stacking Algorithm

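| Algorithm 1: Common stacking algorithm with k-fold cross-validation |
|---|
| Input: training set D, test set, base learners, meta-learner η |
| Output: final predictions for the test set |
| 1: Level-1: Train the base learners with k-fold cross-validation |
| 2: for each of the k folds do |
| 3: Divide D into a training part and a held-out part |
| 4: for each base learner do |
| 5: Train the base learner on the training part |
| 6: end for |
| 7: end for |
| 8: for each sample in the held-out part do |
| 9: Use the trained base learner to make a prediction for that sample |
| 10: end for |
| 11: for each sample x in the test set do |
| 12: Use the trained base learner to make a prediction for x |
| 13: end for |
| 14: Level-2: Train the meta-learner |
| 15: for each training sample do |
| 16: Generate a new sample from the base learners' predictions and the true value to train the meta-learner η |
| 17: end for |
| 18: Make predictions on the test set |
| 19: for each sample x in the test set do |
| 20: (1) Generate new features for x with the trained base learners |
| 21: (2) Input the new features into the trained meta-model to obtain the final prediction |
| 22: end for |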

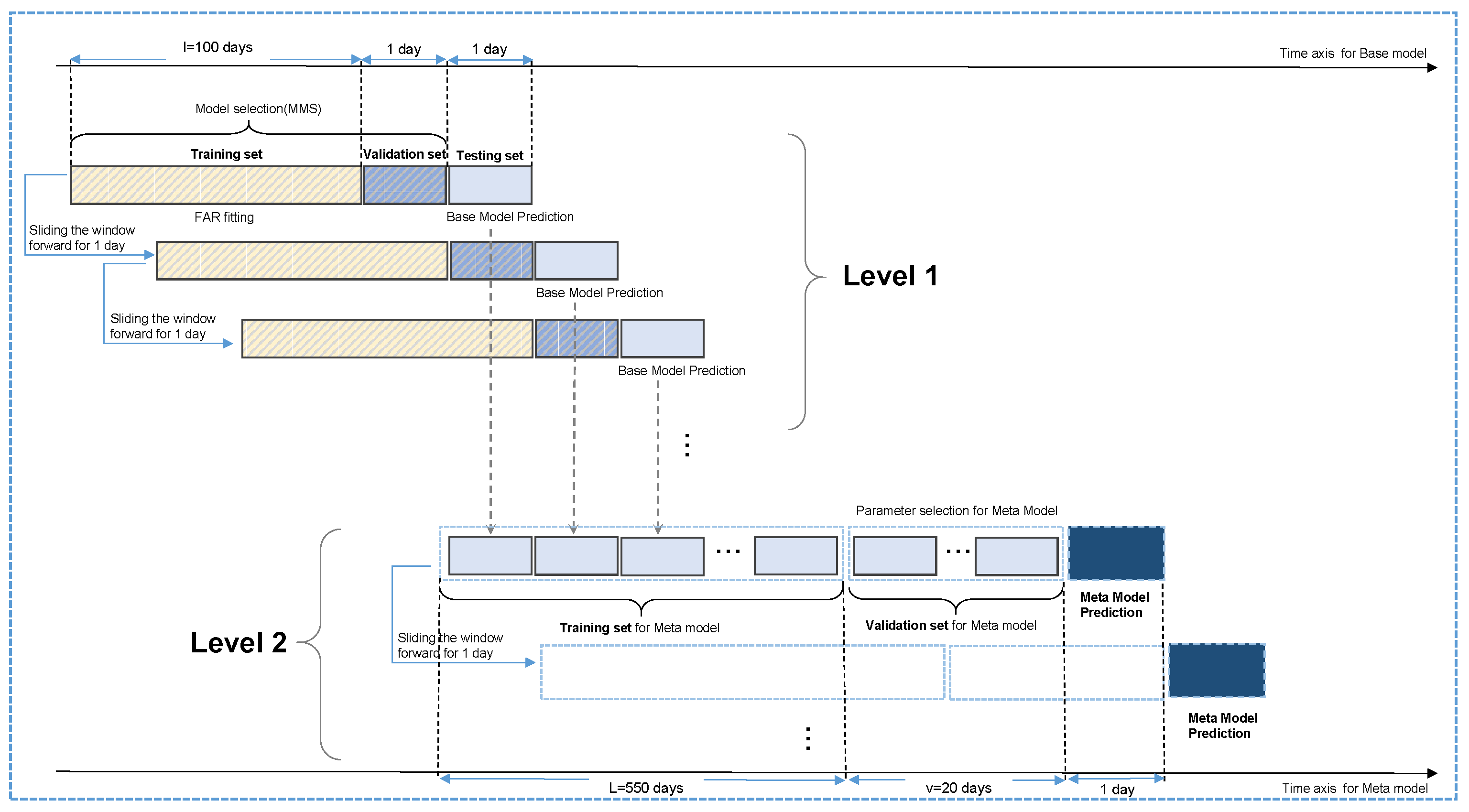
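The two levels of the common stacking algorithm can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the implementation used in this study: the two base learners (`fit_line`, `fit_mean`), the convex-weight meta-learner (`fit_meta_weight`), and the toy data are all hypothetical stand-ins.

```python
from statistics import mean


def kfold_indices(n, k):
    """Yield (train_idx, val_idx) for k contiguous folds over range(n)."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        val = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, val
        start += size


def fit_mean(xs, ys):
    """Hypothetical base learner: always predicts the training mean."""
    m = mean(ys)
    return lambda x: m


def fit_line(xs, ys):
    """Hypothetical base learner: one-feature least-squares line."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx if sxx else 0.0
    return lambda x: my + slope * (x - mx)


def out_of_fold_features(xs, ys, fitters, k):
    """Level-1: each sample is predicted by models that did not train on it."""
    z = [[0.0] * len(fitters) for _ in xs]
    for train, val in kfold_indices(len(xs), k):
        for j, fit in enumerate(fitters):
            model = fit([xs[i] for i in train], [ys[i] for i in train])
            for i in val:
                z[i][j] = model(xs[i])
    return z


def fit_meta_weight(z, ys):
    """Level-2 (illustrative): weight a on learner 0 and 1 - a on learner 1."""
    grid = [i / 10 for i in range(11)]
    return min(grid, key=lambda a: sum(
        (a * zi[0] + (1 - a) * zi[1] - y) ** 2 for zi, y in zip(z, ys)))


xs = [float(i) for i in range(10)]
ys = [2.0 * x + 1.0 for x in xs]          # toy data, not carbon returns
fitters = [fit_line, fit_mean]
z = out_of_fold_features(xs, ys, fitters, k=5)
a = fit_meta_weight(z, ys)

# Final prediction for a new point: refit the base learners on all data,
# then combine their predictions with the learned weight.
models = [fit(xs, ys) for fit in fitters]
x_new = 10.0
y_hat = a * models[0](x_new) + (1 - a) * models[1](x_new)
```

Note that the first fold is predicted by models trained only on later observations. On time-ordered data this means future information reaches the level-1 features, which is the concern that motivates a validation scheme respecting the timeline.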

2.1.2. Our Improved Stacking Algorithm

| Algorithm 2: Improved stacking algorithm with walk-forward validation |
|---|
| Input: dataset, base learners, meta-learner η |
| Output: |
| 1: Level-1: Train the base learners with walk-forward validation |
| 2: for do |
| 3: for do |
| 4: |
| 5: end for |
| 6: end for |
| 7: Level-2: Train the meta-learner and make the final prediction |
| 8: for do |
| 9: Connect to obtain |
| 10: Train η using to obtain trained meta-learner |
| 11: while do |
| 12: |
| 13: end while |
| 14: Adjust the hyperparameters of according to |
| 15: if Find the best hyperparameter of then |
| 16: Retrain using on to obtain retrained meta-learner |
| 17: end if |
| 18: Predict using the retrained meta-learner |
| 19: end for |
| 20: Isolating the test set on the timeline |
| 21: |
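The idea of walk-forward validation at both levels can be sketched in plain Python. This is a simplified illustration under stated assumptions rather than a transcription of Algorithm 2: it omits the hyperparameter adjustment and retraining steps, and the base learners (`predict_last`, `predict_mean`), the convex-weight meta-learner, the window sizes `w` and `m`, and the toy series are hypothetical.

```python
from statistics import mean


def walk_forward_windows(n, w):
    """Yield (train_idx, t): the w observations immediately before time t."""
    for t in range(w, n):
        yield list(range(t - w, t)), t


def predict_last(window):
    """Hypothetical base learner: naive forecast (last observed value)."""
    return window[-1]


def predict_mean(window):
    """Hypothetical base learner: mean of the rolling window."""
    return mean(window)


def level1_predictions(y, w, learners):
    """Level-1: one-step-ahead base forecasts, each using only past data."""
    preds = {}
    for train, t in walk_forward_windows(len(y), w):
        window = [y[i] for i in train]
        preds[t] = [learn(window) for learn in learners]
    return preds


def level2_forecasts(y, preds, m):
    """Level-2 (illustrative): at each t, choose the weight a on learner 0
    from the m base forecasts before t, then forecast time t."""
    grid = [i / 10 for i in range(11)]
    times = sorted(preds)
    out = {}
    for pos in range(m, len(times)):
        t, hist = times[pos], times[pos - m:pos]
        a = min(grid, key=lambda c: sum(
            (c * preds[s][0] + (1 - c) * preds[s][1] - y[s]) ** 2 for s in hist))
        out[t] = a * preds[t][0] + (1 - a) * preds[t][1]
    return out


y = [float(t) for t in range(20)]         # toy trending series
preds = level1_predictions(y, w=5, learners=[predict_last, predict_mean])
final = level2_forecasts(y, preds, m=4)
```

Every training index precedes the time being forecast, so neither the base learners nor the meta-learner sees future observations. The cost is that the first `w + m` observations produce no final forecast.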

2.1.3. Forecasting Framework for Empirical Experiments

2.2. Forecasting Models

2.3. Statistical and Economic Evaluation

2.3.1. Judgment on Different Volatile Intervals

2.3.2. Prediction Accuracy Metrics

2.3.3. Model Confidence Set

2.3.4. Investment Portfolio

3. Data Description

- Commodity variables, including energy and non-energy commodity futures, based on settlement prices and a price index from the Chinese market. The energy commodities were (1) the China Liquefied Natural Gas Price Index (LNG); (2) thermal coal (SPcoa); and (3) INE crude oil (SPcru). The non-energy commodities were subdivided into non-ferrous metals and agricultural products. The non-ferrous metals were (1) aluminum (SPalu); (2) zinc (SPzin); (3) lead (SPlea); (4) nickel (SPnic); (5) tin (SPtin); (6) silver (SPsil); (7) gold (SPgol); and (8) cathode copper (SPcop). The agricultural products were (1) yellow corn (SPcor); (2) egg (SPegg); (3) cotton (SPcot); and (4) high-quality strong gluten wheat (SPwhe). Except for LNG, all variables were futures settlement prices of the active contract;

- Stock and bond market variables, including composite indexes and rate variables. For the stock market, the predictors were (1) SSE average P/E ratio (SSEPE); (2) SSE Composite Index (SSECI); (3) CSI 300 Index (CSI300); (4) SSE 180 Index (SSE180); (5) SZSE Composite Index (SZSECI); (6) CSI 100 Index (CSI100); and (7) CSI 500 Index (CSI500). For the bond market, the predictors were (1) SSE Government Bond Index (SSEGBI); (2) SSE Corporate Bond Index (SSECBI); (3) SSE Enterprise Bond Index (SSEEBI); (4) CCDC government bond yield: 3-months (Gb3M); (5) CCDC government bond yield: 10-years (Gb10Y); (6) CCDC corporate bond yield (AAA): 3-months (Cb3M); (7) CCDC corporate bond yield (AAA): 10-years (Cb10M); (8) CCDC coal industry bond yield (AAA): 3-months (coalb3M); and (9) CCDC coal industry bond yield (AAA): 5-years (coalb5Y);

- Economic and industry composite variables, including (1) Financial Conditions Index (FCI); (2) China Securities Industry Index: energy (CSIIene); and (3) Wind Industry Index: energy (Windene). These three indexes describe the financial and energy sectors as a whole, both of which are closely related to the carbon option return.

4. Empirical Results

4.1. Out-of-Sample Accuracy Performance

4.1.1. Accuracy for the Whole Test Set

4.1.2. Accuracy for the Different Volatility Intervals

4.1.3. Robustness Analysis for Different Rolling Window Sizes

4.2. Analysis of MCS

4.3. Investment Gains from a Portfolio Perspective

4.3.1. Influence of Risk Preference on Portfolio Construction

4.3.2. Functionality of Ensemble Strategy in Improving Economic Gains

5. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Support Vector Regression (SVR)

Appendix A.2. Random Forest (RF)

Appendix A.3. eXtreme Gradient Boosting (XGBoost)

Appendix A.4. Three Penalty Regression

Appendix B

| Variables | Mean | Std. Dev. | Skew. | Kurt. | ADF Test | Jarque–Bera |

|---|---|---|---|---|---|---|

| SZA | 0.00034 | 0.18722 | 0.28 | 11.27 | −18.85 *** | 4857.24 *** |

| LNG | 0.00068 | 0.00047 | 0.34 | 33.09 | −9.76 *** | 41764.82 *** |

| SPcoa | 0.00042 | 0.00050 | −2.14 | 24.06 | −8.86 *** | 22770.72 *** |

| SPcru | 0.00044 | 0.00052 | −0.33 | 10.50 | −25.58 *** | 4217.38 *** |

| SPcor | 0.00046 | 0.00005 | 0.68 | 4.42 | −26.42 *** | 815.51 *** |

| SPegg | 0.00020 | 0.00045 | 4.68 | 63.46 | −8.15 *** | 156892.57 *** |

| SPcot | −0.00013 | 0.00018 | 0.06 | 8.94 | −25.61 *** | 3045.48 *** |

| SPwhe | 0.00031 | 0.00015 | 3.16 | 43.93 | −8.06 *** | 75088.17 *** |

| SPalu | 0.00032 | 0.00013 | −0.58 | 17.67 | −7.01 *** | 11956.52 *** |

| SPzin | −0.00001 | 0.00017 | 0.60 | 9.79 | −26.96 *** | 3712.28 *** |

| SPlea | −0.00021 | 0.00010 | −0.31 | 4.56 | −16.78 *** | 806.90 *** |

| SPnic | 0.00063 | 0.00028 | 0.14 | 5.36 | −12.91 *** | 1096.74 *** |

| SPtin | 0.00035 | 0.00020 | 0.16 | 12.29 | −6.73 *** | 5760.94 *** |

| SPsil | 0.00020 | 0.00023 | −0.98 | 11.20 | −15.31 *** | 4924.58 *** |

| SPgol | 0.00038 | 0.00006 | −0.82 | 10.13 | −9.97 *** | 4013.54 *** |

| SPcop | 0.00020 | 0.00013 | 2.12 | 49.51 | −27.06 *** | 94123.69 *** |

| SSEPE | −0.00032 | 0.00024 | −4.40 | 49.17 | −9.80 *** | 95125.33 *** |

| SSECI | 0.00003 | 0.00015 | −0.39 | 3.76 | −30.72 *** | 563.26 *** |

| CSI300 | 0.00008 | 0.00018 | −0.41 | 3.33 | −11.46 *** | 448.50 *** |

| SSE180 | 0.00005 | 0.00017 | −0.25 | 3.18 | −11.65 *** | 394.53 *** |

| SZSECI | 0.00021 | 0.00023 | −0.62 | 3.52 | −10.88 *** | 532.07 *** |

| CSI100 | 0.00003 | 0.00018 | −0.35 | 2.87 | −10.28 *** | 331.63 *** |

| CSI500 | 0.00007 | 0.00022 | −0.59 | 3.79 | −29.92 *** | 599.16 *** |

| SSEGBI | 0.00021 | 0.00000 | 4.96 | 57.39 | −5.88 *** | 129320.39 *** |

| SSECBI | 0.00023 | 0.00000 | 3.96 | 27.77 | −5.29 *** | 31781.76 *** |

| SSEEBI | 0.00021 | 0.00000 | 2.68 | 20.55 | −5.10 *** | 17187.30 *** |

| Gb3M | −0.00081 | 0.00075 | −1.71 | 17.74 | −10.91 *** | 12447.21 *** |

| Gb10Y | −0.00034 | 0.00008 | −1.76 | 18.40 | −7.98 *** | 13377.39 *** |

| Cb3M | −0.00108 | 0.00031 | −1.36 | 16.33 | −15.98 *** | 10454.93 *** |

| Cb10M | −0.00044 | 0.00002 | −2.11 | 27.65 | −6.90 *** | 29825.27 *** |

| coalb3M | −0.00108 | 0.00033 | −1.17 | 15.50 | −16.51 *** | 9368.78 *** |

| coalb5Y | −0.00060 | 0.00006 | −0.81 | 10.93 | −8.55 *** | 4653.14 *** |

| FCI | −0.00450 | 0.01904 | −0.81 | 17.78 | −8.76 *** | 12151.94 *** |

| CSIIene | 0.00018 | 0.00034 | 0.20 | 5.04 | −31.51 *** | 974.00 *** |

| Windene | 0.00025 | 0.00032 | 0.05 | 4.83 | −31.17 *** | 888.71 *** |
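The skewness, kurtosis, and Jarque–Bera columns can be related to each other with a short sketch. It assumes population (biased) central moments and excess kurtosis; the function names are illustrative and the estimator actually used for the table is not stated here. The reported Jarque–Bera values do appear consistent with the kurtosis column being excess kurtosis.

```python
def central_moment(xs, order):
    """Population central moment of the given order."""
    m = sum(xs) / len(xs)
    return sum((x - m) ** order for x in xs) / len(xs)


def skewness(xs):
    """Third standardized moment."""
    return central_moment(xs, 3) / central_moment(xs, 2) ** 1.5


def excess_kurtosis(xs):
    """Fourth standardized moment minus 3 (zero for a normal distribution)."""
    return central_moment(xs, 4) / central_moment(xs, 2) ** 2 - 3.0


def jarque_bera(xs):
    """JB = n/6 * (S^2 + K^2/4), with K the excess kurtosis."""
    n = len(xs)
    return n / 6.0 * (skewness(xs) ** 2 + excess_kurtosis(xs) ** 2 / 4.0)


sample = [1.0, 2.0, 3.0, 4.0, 5.0]        # toy data, not a series from the table
jb = jarque_bera(sample)
```

Large positive excess kurtosis, as reported for most series, inflates the statistic quadratically, which is why normality is rejected so strongly throughout the table.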

| Label | Variable | Transform. |

|---|---|---|

| SZA | Option Settlement Price: Carbon Emission Right (Shenzhen) | LD |

| Panel A: Energy and non-energy commodities | ||

| LNG | China Liquefied Natural Gas Price Index | LD |

| SPcoa | Futures settlement price (active contract): Coal | LD |

| SPcru | Futures settlement price (active contract): Crude oil | LD |

| SPcor | Futures settlement price (active contract): Corn | LD |

| SPegg | Futures settlement price (active contract): Egg | LD |

| SPcot | Futures settlement price (active contract): Cotton | LD |

| SPwhe | Futures settlement price (active contract): Wheat | LD |

| SPalu | Futures settlement price (active contract): Aluminium | LD |

| SPzin | Futures settlement price (active contract): Zinc | LD |

| SPlea | Futures settlement price (active contract): Lead | LD |

| SPnic | Futures settlement price (active contract): Nickel | LD |

| SPtin | Futures settlement price (active contract): Tin | LD |

| SPsil | Futures settlement price (active contract): Silver | LD |

| SPgol | Futures settlement price (active contract): Gold | LD |

| SPcop | Futures settlement price (active contract): Copper | LD |

| Panel B: Financial variables | ||

| SSEPE | SSE Average P/E ratio | LD |

| SSECI | SSE Composite Index | LD |

| CSI300 | CSI 300 Index | LD |

| SSE180 | SSE 180 Index | LD |

| SZSECI | SZSE Composite Index | LD |

| CSI100 | CSI 100 Index | LD |

| CSI500 | CSI 500 Index | LD |

| SSEGBI | SSE Government Bond Index | LD |

| SSECBI | SSE Corporate Bond Index | LD |

| SSEEBI | SSE Enterprise Bond Index | LD |

| Gb3M | CCDC government bond yield: 3-months | LD |

| Gb10Y | CCDC government bond yield: 10-years | LD |

| Cb3M | CCDC corporate bond yield (AAA): 3-months | LD |

| Cb10M | CCDC corporate bond yield (AAA): 10-years | LD |

| coalb3M | CCDC coal industry bond yield (AAA): 3-months | LD |

| coalb5Y | CCDC coal industry bond yield (AAA): 5-years | LD |

| Panel C: Economic and industry index | ||

| FCI | Financial Conditions Index | FD |

| CSIIene | China Securities Industry Index: Energy | LD |

| Windene | WIND Industry Index: Energy | LD |
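The Transform. column presumably abbreviates log difference (LD) and first difference (FD). That reading is an assumption, since the abbreviations are not expanded here. Under it, the two transformations can be sketched as follows, with illustrative function names and toy inputs.

```python
import math


def log_difference(prices):
    """LD (assumed): r_t = ln(P_t) - ln(P_{t-1}); needs strictly positive inputs."""
    return [math.log(b / a) for a, b in zip(prices, prices[1:])]


def first_difference(values):
    """FD (assumed): d_t = x_t - x_{t-1}; also valid for zero or negative levels."""
    return [b - a for a, b in zip(values, values[1:])]


returns = log_difference([100.0, 110.0, 99.0])   # toy price path
changes = first_difference([1.5, 1.0, 2.0])      # toy index levels
```

A first difference is the natural choice for a series such as an index that can take non-positive values, where the logarithm is undefined.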

| Model Name | Explanations | Hyperparameters |

|---|---|---|

| FAR | Factor-augmented regression | |

| FAR+MMS | Mallows Model Selection | - |

| MMA | Mallows Model Averaging | - |

| E-net | Elastic-net | |

| lasso | Lasso regression | - |

| ridge | Ridge regression | - |

| svr | Support Vector Regression | kernel:rbf, gamma:auto, C:[0.5,1] |

| XGBoost | eXtreme Gradient Boosting | max_depth:3, learning_rate:0.04, subsample:0.3, colsample_bytree:0.8, reg_alpha:0.05, reg_lambda:0.05, n_estimators:50 |

| rf | Random Forest | n_estimators:50, max_features:sqrt, max_depth:4, min_samples_split:2, min_samples_leaf:4 |

| homo_rf | RF as meta-model for homogeneous ensemble | n_estimators:180, max_features:sqrt, max_depth:2, min_samples_split:2, min_samples_leaf:4 |

| homo_svr | SVR as meta-model for homogeneous ensemble | C:0.5 |

| homo_xgb | XGBoost as meta-model for homogeneous ensemble | max_depth:2, learning_rate:0.1, subsample:0.95, colsample_bytree:0.7, reg_alpha:0.2, reg_lambda:0.05, n_estimators:50 |

| hete_rf | RF as meta-model for heterogeneous ensemble | n_estimators:70, max_features:sqrt, max_depth:3, min_samples_split:2, min_samples_leaf:2 |

| hete_svr | SVR as meta-model for heterogeneous ensemble | C:8 |

| hete_xgb | XGBoost as meta-model for heterogeneous ensemble | max_depth:2, learning_rate:0.06, subsample:0.6, colsample_bytree:0.6, reg_alpha:0.2, reg_lambda:0.06, n_estimators:80 |
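The meta-learner settings in the table map directly onto keyword arguments of common Python libraries. The sketch below assumes scikit-learn and xgboost as the implementations, which is not confirmed here; `META_PARAMS` and `build_meta_learner` are hypothetical names. For the SVR meta-learners only `C` is listed, so any other SVR setting is left at the library default.

```python
# Hyperparameters transcribed from the table above.
META_PARAMS = {
    "homo_rf": dict(n_estimators=180, max_features="sqrt", max_depth=2,
                    min_samples_split=2, min_samples_leaf=4),
    "homo_svr": dict(C=0.5),
    "homo_xgb": dict(max_depth=2, learning_rate=0.1, subsample=0.95,
                     colsample_bytree=0.7, reg_alpha=0.2, reg_lambda=0.05,
                     n_estimators=50),
    "hete_rf": dict(n_estimators=70, max_features="sqrt", max_depth=3,
                    min_samples_split=2, min_samples_leaf=2),
    "hete_svr": dict(C=8),
    "hete_xgb": dict(max_depth=2, learning_rate=0.06, subsample=0.6,
                     colsample_bytree=0.6, reg_alpha=0.2, reg_lambda=0.06,
                     n_estimators=80),
}


def build_meta_learner(name):
    """Instantiate a meta-learner by table name (assumes the libraries exist)."""
    params = META_PARAMS[name]
    family = name.split("_")[1]
    if family == "rf":
        from sklearn.ensemble import RandomForestRegressor
        return RandomForestRegressor(**params)
    if family == "svr":
        from sklearn.svm import SVR
        return SVR(**params)
    if family == "xgb":
        from xgboost import XGBRegressor
        return XGBRegressor(**params)
    raise ValueError(f"unknown meta-learner family: {family}")
```

Importing inside the function keeps the configuration usable even when only one of the two libraries is installed.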

| w = 200 | |||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| | RMSE | | | SMAPE | | | MAE | | | U1 | | | | | | ||||

| | Whole | High | Low | Whole | High | Low | Whole | High | Low | Whole | High | Low | Whole | High | Low | ||||

| Base Model | |||||||||||||||||||

| FAR | 0.4369 | 0.5620 | 0.2568 | 0.2595 | 0.3481 | 0.1708 | 0.2749 | 0.3758 | 0.1740 | 0.1630 | 0.2569 | 0.0935 | 0.2942 | 0.3363 | −0.0142 | ||||

| FAR+MMS | 0.4362 | 0.5604 | 0.2578 | 0.2704 | 0.3601 | 0.1807 | 0.2851 | 0.3866 | 0.1836 | 0.1632 | 0.2595 | 0.0942 | 0.2965 | 0.3400 | −0.0222 | ||||

| MMA | 0.4378 | 0.5641 | 0.2549 | 0.2713 | 0.3634 | 0.1792 | 0.2862 | 0.3903 | 0.1821 | 0.1631 | 0.2601 | 0.0926 | 0.2913 | 0.3311 | 0.0003 | ||||

| E-net | 0.4658 | 0.6127 | 0.2419 | 0.2552 | 0.3551 | 0.1553 | 0.2742 | 0.3903 | 0.1582 | 0.1641 | 0.2681 | 0.0793 | 0.1977 | 0.2111 | 0.0996 | ||||

| lasso | 0.4756 | 0.6280 | 0.2410 | 0.2573 | 0.3578 | 0.1569 | 0.2776 | 0.3956 | 0.1596 | 0.1690 | 0.2767 | 0.0784 | 0.1634 | 0.1711 | 0.1067 | ||||

| ridge | 0.4692 | 0.6157 | 0.2473 | 0.2598 | 0.3571 | 0.1626 | 0.2791 | 0.3926 | 0.1656 | 0.1662 | 0.2704 | 0.0843 | 0.1859 | 0.2032 | 0.0590 | ||||

| SVR | 0.4507 | 0.5916 | 0.2372 | 0.2477 | 0.3430 | 0.1524 | 0.2652 | 0.3754 | 0.1551 | 0.1564 | 0.2523 | 0.0771 | 0.2488 | 0.2643 | 0.1346 | ||||

| XGBoost | 0.4759 | 0.6242 | 0.2518 | 0.2763 | 0.3786 | 0.1740 | 0.2955 | 0.4144 | 0.1767 | 0.1647 | 0.2611 | 0.0916 | 0.1623 | 0.1811 | 0.0248 | ||||

| RF | 0.4884 | 0.6469 | 0.2420 | 0.2645 | 0.3670 | 0.1621 | 0.2861 | 0.4075 | 0.1647 | 0.1620 | 0.2615 | 0.0795 | 0.1180 | 0.1206 | 0.0990 | ||||

| Ensemble Model | |||||||||||||||||||

| homo_rf | 0.4268 | 0.5528 | 0.2426 | 0.2608 | 0.3509 | 0.1707 | 0.2751 | 0.3770 | 0.1732 | 0.1530 | 0.2433 | 0.0841 | 0.3262 | 0.3578 | 0.0950 | ||||

| homo_svr | 0.4059 | 0.5231 | 0.2365 | 0.2402 | 0.3188 | 0.1616 | 0.2533 | 0.3426 | 0.1640 | 0.1372 | 0.2109 | 0.0802 | 0.3906 | 0.4250 | 0.1394 | ||||

| homo_xgb | 0.4210 | 0.5472 | 0.2347 | 0.2508 | 0.3395 | 0.1622 | 0.2650 | 0.3654 | 0.1646 | 0.1456 | 0.2316 | 0.0788 | 0.3445 | 0.3707 | 0.1529 | ||||

| hete_rf | 0.4311 | 0.5668 | 0.2248 | 0.2540 | 0.3468 | 0.1613 | 0.2693 | 0.3752 | 0.1633 | 0.1536 | 0.2449 | 0.0778 | 0.3126 | 0.3249 | 0.2227 | ||||

| hete_svr | 0.4169 | 0.5422 | 0.2317 | 0.2413 | 0.3260 | 0.1565 | 0.2555 | 0.3520 | 0.1590 | 0.1442 | 0.2267 | 0.0788 | 0.3572 | 0.3821 | 0.1744 | ||||

| hete_xgb | 0.4196 | 0.5500 | 0.2226 | 0.2511 | 0.3446 | 0.1576 | 0.2653 | 0.3708 | 0.1597 | 0.1477 | 0.2333 | 0.0788 | 0.3489 | 0.3641 | 0.2375 | ||||

| Percentage of improvement (%) | |||||||||||||||||||

| homogeneous | 4.3389 | 3.7271 | 7.3429 | 3.4248 | 3.3781 | 3.5201 | 3.7968 | 3.7642 | 3.8672 | 10.8676 | 11.0036 | 13.2982 | - | - | - | ||||

| heterogeneous | 9.3613 | 9.6233 | 7.6670 | 4.9431 | 5.8698 | 2.8976 | 6.1368 | 7.3688 | 3.2041 | 9.2448 | 11.1046 | 5.7464 | - | - | - | ||||

| w = 300 | |||||||||||||||||||

| | RMSE | | | SMAPE | | | MAE | | | U1 | | | | | | ||||

| | Whole | High | Low | Whole | High | Low | Whole | High | Low | Whole | High | Low | Whole | High | Low | ||||

| Base Model | |||||||||||||||||||

| FAR | 0.4432 | 0.5723 | 0.2555 | 0.2631 | 0.3554 | 0.1708 | 0.2792 | 0.3844 | 0.1740 | 0.1614 | 0.2574 | 0.0900 | 0.2737 | 0.3117 | −0.0043 | ||||

| FAR+MMS | 0.4363 | 0.5592 | 0.2609 | 0.2723 | 0.3573 | 0.1872 | 0.2870 | 0.3837 | 0.1902 | 0.1636 | 0.2572 | 0.0964 | 0.2959 | 0.3428 | −0.0473 | ||||

| MMA | 0.4378 | 0.5609 | 0.2620 | 0.2721 | 0.3557 | 0.1884 | 0.2869 | 0.3825 | 0.1914 | 0.1637 | 0.2568 | 0.0958 | 0.2912 | 0.3387 | −0.0561 | ||||

| E-net | 0.4657 | 0.6120 | 0.2431 | 0.2549 | 0.3537 | 0.1561 | 0.2740 | 0.3889 | 0.1591 | 0.1645 | 0.2671 | 0.0804 | 0.1981 | 0.2127 | 0.0907 | ||||

| lasso | 0.4681 | 0.6163 | 0.2415 | 0.2544 | 0.3531 | 0.1557 | 0.2737 | 0.3890 | 0.1585 | 0.1654 | 0.2695 | 0.0792 | 0.1898 | 0.2016 | 0.1031 | ||||

| ridge | 0.4685 | 0.6158 | 0.2447 | 0.2573 | 0.3553 | 0.1592 | 0.2766 | 0.3909 | 0.1622 | 0.1661 | 0.2696 | 0.0825 | 0.1881 | 0.2031 | 0.0788 | ||||

| SVR | 0.4473 | 0.5868 | 0.2363 | 0.2471 | 0.3420 | 0.1523 | 0.2643 | 0.3737 | 0.1549 | 0.1559 | 0.2514 | 0.0774 | 0.2601 | 0.2764 | 0.1410 | ||||

| XGBoost | 0.4838 | 0.6417 | 0.2376 | 0.2681 | 0.3808 | 0.1554 | 0.2886 | 0.4193 | 0.1580 | 0.1715 | 0.2752 | 0.0837 | 0.1343 | 0.1346 | 0.1316 | ||||

| RF | 0.4916 | 0.6485 | 0.2504 | 0.2742 | 0.3710 | 0.1775 | 0.2960 | 0.4119 | 0.1802 | 0.1791 | 0.2781 | 0.0967 | 0.1064 | 0.1161 | 0.0355 | ||||

| Ensemble Model | |||||||||||||||||||

| homo_rf | 0.4299 | 0.5564 | 0.2450 | 0.2588 | 0.3432 | 0.1744 | 0.2736 | 0.3702 | 0.1769 | 0.1527 | 0.2381 | 0.0867 | 0.3166 | 0.3494 | 0.0766 | ||||

| homo_svr | 0.4234 | 0.5492 | 0.2387 | 0.2464 | 0.3278 | 0.1651 | 0.2612 | 0.3547 | 0.1676 | 0.1445 | 0.2230 | 0.0813 | 0.3369 | 0.3660 | 0.1235 | ||||

| homo_xgb | 0.4255 | 0.5496 | 0.2448 | 0.2543 | 0.3393 | 0.1694 | 0.2688 | 0.3656 | 0.1721 | 0.1477 | 0.2316 | 0.0831 | 0.3305 | 0.3650 | 0.0780 | ||||

| hete_rf | 0.4343 | 0.5667 | 0.2367 | 0.2569 | 0.3381 | 0.1757 | 0.2725 | 0.3670 | 0.1779 | 0.1546 | 0.2377 | 0.0860 | 0.3026 | 0.3250 | 0.1384 | ||||

| hete_svr | 0.4169 | 0.5417 | 0.2328 | 0.2408 | 0.3262 | 0.1553 | 0.2549 | 0.3521 | 0.1578 | 0.1440 | 0.2221 | 0.0786 | 0.3572 | 0.3833 | 0.1664 | ||||

| hete_xgb | 0.4276 | 0.5586 | 0.2319 | 0.2526 | 0.3439 | 0.1614 | 0.2675 | 0.3713 | 0.1637 | 0.1494 | 0.2334 | 0.0813 | 0.3237 | 0.3443 | 0.1731 | ||||

| Percentage of improvement (%) | |||||||||||||||||||

| homogeneous | 3.8109 | 3.5846 | 4.9584 | 3.7821 | 5.2603 | 0.7060 | 4.0542 | 5.4235 | 1.0293 | 8.1069 | 10.2943 | 6.9572 | - | - | - | ||||

| heterogeneous | 8.5464 | 9.1647 | 4.6173 | 4.2287 | 6.3325 | −0.3874 | 5.3811 | 7.6877 | −0.0795 | 10.3723 | 13.3881 | 3.6899 | - | - | - | ||||
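For reference, the four labeled error metrics can be computed as below. These are common textbook definitions and are assumptions here: the exact formulas of Section 2.3.2, in particular the SMAPE variant, are not reproduced in this text. `percent_improvement` is a generic relative reduction; the baseline and averaging behind the "Percentage of improvement" rows are not stated, so the helper is not claimed to reproduce them.

```python
import math


def rmse(y, f):
    """Root mean squared error."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y, f)) / len(y))


def mae(y, f):
    """Mean absolute error."""
    return sum(abs(a - b) for a, b in zip(y, f)) / len(y)


def smape(y, f):
    """Symmetric MAPE (one common variant); 0/0 pairs contribute zero."""
    terms = [2.0 * abs(a - b) / (abs(a) + abs(b))
             for a, b in zip(y, f) if abs(a) + abs(b) > 0]
    return sum(terms) / len(y)


def theil_u1(y, f):
    """Theil's U1: RMSE scaled into [0, 1] by the root mean squares of y and f."""
    rms_y = math.sqrt(sum(a * a for a in y) / len(y))
    rms_f = math.sqrt(sum(b * b for b in f) / len(f))
    return rmse(y, f) / (rms_y + rms_f)


def percent_improvement(base_error, ensemble_error):
    """Relative error reduction in percent (positive means the ensemble is better)."""
    return (base_error - ensemble_error) / base_error * 100.0


actual = [1.0, 2.0, 3.0]                  # toy values, not carbon returns
forecast = [1.0, 2.0, 5.0]
scores = {"RMSE": rmse(actual, forecast), "MAE": mae(actual, forecast),
          "SMAPE": smape(actual, forecast), "U1": theil_u1(actual, forecast)}
```

For all four metrics lower is better, so a positive percentage in the improvement rows indicates that the ensemble reduces the error.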

| | homo_rf | homo_svr | homo_xgb | hete_rf | hete_svr | hete_xgb |

|---|---|---|---|---|---|---|

| 0.1 | 127.7197 | 123.8112 | 130.1952 | 124.2137 | 125.9954 | 123.8034 |

| 0.2 | 106.8630 | 101.8535 | 105.7327 | 103.7007 | 105.0748 | 105.3883 |

| 0.3 | 88.2185 | 85.6709 | 87.1185 | 83.8264 | 86.4316 | 85.9962 |

| 0.4 | 70.2675 | 68.8990 | 70.2145 | 65.6354 | 68.6525 | 67.9815 |

| 0.5 | 52.7011 | 59.8317 | 54.7938 | 47.4687 | 49.0159 | 50.8217 |

| 0.6 | 38.3261 | 49.1146 | 45.4186 | 33.7303 | 30.2269 | 37.5260 |

| 0.7 | 29.1157 | 37.5849 | 34.7880 | 23.8302 | 20.6855 | 28.0542 |

| 0.8 | 19.5876 | 26.3511 | 24.5970 | 14.0808 | 11.0149 | 18.0043 |

| 0.9 | 10.2042 | 15.6767 | 14.6974 | 3.9732 | 1.5389 | 7.7412 |

| 1.0 | 0.8623 | 5.4678 | 4.9580 | −6.3192 | −7.4124 | −1.6894 |

| 1.1 | −8.2981 | −4.2715 | −4.6567 | −15.5063 | −16.6081 | −10.8347 |

| 1.2 | −17.4042 | −13.3336 | −14.0189 | −24.3991 | −25.6488 | −19.2512 |

| 1.3 | −26.3873 | −22.1643 | −23.2896 | −33.1668 | −34.0418 | −27.5019 |

| 1.4 | −35.2305 | −30.8520 | −32.3527 | −41.2748 | −42.2087 | −35.9446 |

| 1.5 | −43.9335 | −39.4210 | −41.2813 | −48.5382 | −49.5585 | −44.2988 |

| 1.6 | −52.3764 | −47.8206 | −49.8819 | −55.5433 | −56.5896 | −52.5452 |

| 1.7 | −59.5221 | −56.0732 | −57.9944 | −62.5579 | −63.4440 | −60.4958 |

| 1.8 | −66.6184 | −63.7761 | −65.4391 | −62.2680 | −67.9305 | −68.0212 |

| 1.9 | −67.0725 | −71.0538 | −70.1967 | −61.9225 | −72.4949 | −71.0244 |

| 2.0 | −66.7368 | −78.2603 | −74.9988 | −61.8104 | −77.0745 | −67.9159 |

| | homo_rf | homo_svr | homo_xgb | hete_rf | hete_svr | hete_xgb |

|---|---|---|---|---|---|---|

| 0.1 | 10.5833 | 11.0648 | 9.6583 | 21.7402 | 14.0093 | 6.2470 |

| 0.2 | 9.2922 | 8.0546 | 9.5888 | 19.7474 | 9.3324 | 6.7526 |

| 0.3 | 7.1480 | 5.2682 | 8.1578 | 16.5444 | 5.6646 | 6.6055 |

| 0.4 | 4.1519 | 3.3513 | 5.8763 | 12.7961 | 2.8451 | 5.2168 |

| 0.5 | 1.6063 | 2.2058 | 3.1016 | 9.3113 | 1.1212 | 3.6444 |

| 0.6 | −0.2334 | 1.1163 | 0.3678 | 7.7594 | −0.2119 | 2.3696 |

| 0.7 | −0.2521 | −0.1230 | −1.8165 | 7.5543 | −1.7435 | 1.4899 |

| 0.8 | −0.2480 | −1.4857 | −2.2543 | 7.3756 | −3.3473 | 0.5143 |

| 0.9 | −0.2202 | −2.8608 | −2.3471 | 6.8545 | −3.4884 | −0.1160 |

| 1.0 | −0.4539 | −4.2558 | −2.5065 | 6.2759 | −3.6066 | −0.6956 |

| 1.1 | −0.8144 | −5.6323 | −2.5351 | 5.6874 | −3.4685 | −0.8434 |

| 1.2 | −1.2150 | −6.6567 | −2.6040 | 5.0897 | −3.4482 | −0.9459 |

| 1.3 | −1.6539 | −6.5332 | −2.9180 | 4.4465 | −3.5440 | −1.1259 |

| 1.4 | −2.1931 | −6.5012 | −3.3178 | 3.7551 | −3.7335 | −1.3668 |

| 1.5 | −2.6683 | −6.5235 | −3.7421 | 3.0814 | −3.9281 | −1.6564 |

| 1.6 | −3.1688 | −6.6074 | −4.2389 | 2.4251 | −4.1701 | −1.9855 |

| 1.7 | −3.7310 | −6.7433 | −4.7852 | 1.7832 | −4.4465 | −2.3472 |

| 1.8 | −4.2997 | −6.9287 | −5.3504 | 1.1336 | −4.7509 | −2.7454 |

| 1.9 | −4.8519 | −7.1635 | −5.8327 | 0.4947 | −5.0776 | −3.1775 |

| 2.0 | −5.3919 | −7.4461 | −6.3234 | −0.0607 | −5.3202 | −3.6242 |


- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Hoerl, A.; Kennard, R. Ridge regression—Biased estimation for nonorthogonal problems. Technometrics 1970, 12, 55–67. [Google Scholar] [CrossRef]

- Tibshirani, R. Regression shrinkage and selection via the Lasso. J. R. Stat. Soc. Ser. B-Methodol. 1996, 58, 267–288. [Google Scholar] [CrossRef]

- Zou, H.; Hastie, T. Regularization and variable selection via the elastic net. J. R. Stat. Soc. Ser. B-Stat. Methodol. 2005, 67, 301–320. [Google Scholar] [CrossRef]

| Evaluation Index | Definition | Equation |
|---|---|---|
| RMSE | Root mean square error | |
| SMAPE | Symmetric mean absolute percentage error | |
| MAE | Mean absolute error | |
| U1 | Theil U statistic 1 | |
| $R^2_{OS}$ | Out-of-sample $R^2$ statistic | |
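
Because the Equation column of the table above did not survive extraction, the following is only a minimal sketch of how these evaluation indices are commonly computed, not the authors' exact definitions. It assumes the textbook forms: SMAPE with the denominator $(|y_t| + |\hat{y}_t|)/2$ and no scaling by 100, Theil's U1 as the RMSE divided by the sum of the root mean squares of the actual and predicted series, and the out-of-sample statistic as $R^2_{OS} = 1 - \sum_t (y_t - \hat{y}_t)^2 / \sum_t (y_t - \bar{y}_t)^2$ with a caller-supplied benchmark forecast $\bar{y}_t$. All function names are hypothetical.

```python
import numpy as np


def _as_arrays(*series):
    """Convert inputs to float arrays (hypothetical helper)."""
    return tuple(np.asarray(s, dtype=float) for s in series)


def rmse(y, y_hat):
    """Root mean square error."""
    y, y_hat = _as_arrays(y, y_hat)
    return float(np.sqrt(np.mean((y - y_hat) ** 2)))


def mae(y, y_hat):
    """Mean absolute error."""
    y, y_hat = _as_arrays(y, y_hat)
    return float(np.mean(np.abs(y - y_hat)))


def smape(y, y_hat):
    """Symmetric MAPE (assumed variant: denominator (|y| + |y_hat|) / 2)."""
    y, y_hat = _as_arrays(y, y_hat)
    num = np.abs(y - y_hat)
    denom = (np.abs(y) + np.abs(y_hat)) / 2.0
    # Terms where both values are zero contribute 0 instead of 0/0.
    ratio = np.divide(num, denom, out=np.zeros_like(num), where=denom > 0)
    return float(np.mean(ratio))


def theil_u1(y, y_hat):
    """Theil U1 (assumed variant: bounded between 0 and 1)."""
    y, y_hat = _as_arrays(y, y_hat)
    scale = np.sqrt(np.mean(y ** 2)) + np.sqrt(np.mean(y_hat ** 2))
    return rmse(y, y_hat) / float(scale)


def r2_os(y, y_hat, y_bench):
    """Out-of-sample R^2 relative to a benchmark forecast (assumed form)."""
    y, y_hat, y_bench = _as_arrays(y, y_hat, y_bench)
    sse_model = np.sum((y - y_hat) ** 2)
    sse_bench = np.sum((y - y_bench) ** 2)
    return float(1.0 - sse_model / sse_bench)
```

Under this assumed form, a negative $R^2_{OS}$ means the model's squared forecast error exceeds that of the benchmark, which is how negative entries in the result tables would be read.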

| Model | RMSE | SMAPE | MAE | U1 | $R^2_{OS}$ |
|---|---|---|---|---|---|
| Homogeneous ensemble | | | | | |
| Base Model | | | | | |
| FAR | 0.4701 | 0.2774 | 0.2959 | 0.1738 | 0.1828 |
| FAR+MMS | 0.4513 | 0.2706 | 0.2872 | 0.1671 | 0.2469 |
| Ensemble Model | | | | | |
| homo_rf | 0.4317 | 0.2544 | 0.2698 | 0.1530 | 0.3107 |
| homo_svr | 0.4229 | 0.2458 | 0.2605 | 0.1475 | 0.3386 |
| homo_xgb | 0.4270 | 0.2510 | 0.2660 | 0.1512 | 0.3257 |
| Heterogeneous ensemble | | | | | |
| Base Model | | | | | |
| MMA | 0.4616 | 0.2733 | 0.2910 | 0.1712 | 0.2120 |
| E-net | 0.4832 | 0.2616 | 0.2827 | 0.1679 | 0.1364 |
| lasso | 0.4868 | 0.2683 | 0.2895 | 0.1662 | 0.1238 |
| ridge | 0.4825 | 0.2647 | 0.2855 | 0.1677 | 0.1390 |
| SVR | 0.4668 | 0.2542 | 0.2734 | 0.1602 | 0.1943 |
| XGBoost | 0.5022 | 0.2863 | 0.3087 | 0.1713 | 0.0671 |
| RF | 0.4992 | 0.2708 | 0.2936 | 0.1629 | 0.0783 |
| Ensemble Model | | | | | |
| hete_rf | 0.4387 | 0.2541 | 0.2702 | 0.1544 | 0.2884 |
| hete_svr | 0.4340 | 0.2445 | 0.2602 | 0.1535 | 0.3034 |
| hete_xgb | 0.4393 | 0.2446 | 0.2611 | 0.1536 | 0.2862 |

| Model | RMSE (High) | RMSE (Low) | SMAPE (High) | SMAPE (Low) | MAE (High) | MAE (Low) | U1 (High) | U1 (Low) | $R^2_{OS}$ (High) | $R^2_{OS}$ (Low) |
|---|---|---|---|---|---|---|---|---|---|---|
| Base Model | | | | | | | | | | |
| FAR | 0.6041 | 0.2775 | 0.3691 | 0.1858 | 0.4020 | 0.1897 | 0.2757 | 0.0945 | 0.2330 | −0.1846 |
| FAR+MMS | 0.5864 | 0.2518 | 0.3657 | 0.1755 | 0.3960 | 0.1784 | 0.2732 | 0.0862 | 0.2773 | 0.0251 |
| Ensemble Model | | | | | | | | | | |
| homo_rf | 0.5617 | 0.2392 | 0.3408 | 0.1680 | 0.3692 | 0.1704 | 0.2451 | 0.0797 | 0.3368 | 0.1199 |
| homo_svr | 0.5482 | 0.2390 | 0.3285 | 0.1630 | 0.3554 | 0.1656 | 0.2311 | 0.0790 | 0.3683 | 0.1213 |
| homo_xgb | 0.5542 | 0.2399 | 0.3373 | 0.1648 | 0.3646 | 0.1673 | 0.2416 | 0.0799 | 0.3545 | 0.1148 |
| Percentage of improvement (%) | 8.1735 | 13.7447 | 9.0900 | 11.0440 | 9.6952 | 11.5317 | 13.2126 | 15.8577 | - | - |

| Model | RMSE (High) | RMSE (Low) | SMAPE (High) | SMAPE (Low) | MAE (High) | MAE (Low) | U1 (High) | U1 (Low) | $R^2_{OS}$ (High) | $R^2_{OS}$ (Low) |
|---|---|---|---|---|---|---|---|---|---|---|
| Base Model | | | | | | | | | | |
| MMA | 0.6056 | 0.2437 | 0.3753 | 0.1713 | 0.4081 | 0.1740 | 0.2815 | 0.0855 | 0.2292 | 0.0863 |
| E-net | 0.6376 | 0.2460 | 0.3654 | 0.1579 | 0.4045 | 0.1609 | 0.2750 | 0.0807 | 0.1456 | 0.0688 |
| lasso | 0.6384 | 0.2575 | 0.3683 | 0.1683 | 0.4075 | 0.1715 | 0.2685 | 0.0857 | 0.1434 | −0.0197 |
| ridge | 0.6345 | 0.2511 | 0.3642 | 0.1652 | 0.4026 | 0.1683 | 0.2737 | 0.0845 | 0.1539 | 0.0298 |
| SVR | 0.6154 | 0.2388 | 0.3530 | 0.1555 | 0.3887 | 0.1581 | 0.2592 | 0.0758 | 0.2040 | 0.1231 |
| XGBoost | 0.6573 | 0.2692 | 0.3900 | 0.1827 | 0.4313 | 0.1860 | 0.2724 | 0.0910 | 0.0919 | −0.1143 |
| RF | 0.6643 | 0.2390 | 0.3775 | 0.1642 | 0.4207 | 0.1666 | 0.2605 | 0.0800 | 0.0724 | 0.1214 |
| Ensemble Model | | | | | | | | | | |
| hete_rf | 0.5769 | 0.2283 | 0.3458 | 0.1625 | 0.3759 | 0.1646 | 0.2470 | 0.0772 | 0.3006 | 0.1986 |
| hete_svr | 0.5686 | 0.2311 | 0.3362 | 0.1527 | 0.3652 | 0.1551 | 0.2418 | 0.0776 | 0.3205 | 0.1784 |
| hete_xgb | 0.5765 | 0.2318 | 0.3323 | 0.1569 | 0.3628 | 0.1593 | 0.2430 | 0.0786 | 0.3016 | 0.1734 |
| Percentage of improvement (%) | 9.7761 | 7.5941 | 8.7461 | 5.4426 | 10.0423 | 5.7167 | 9.7016 | 6.6027 | - | - |

| Model | RMSE (Whole) | RMSE (High) | RMSE (Low) | SMAPE (Whole) | SMAPE (High) | SMAPE (Low) | MAE (Whole) | MAE (High) | MAE (Low) | U1 (Whole) | U1 (High) | U1 (Low) | $R^2_{OS}$ (Whole) | $R^2_{OS}$ (High) | $R^2_{OS}$ (Low) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Ensemble Model | | | | | | | | | | | | | | | |
| homo_rf | 0.4317 | 0.5617 | 0.2392 | 0.2544 | 0.3408 | 0.1680 | 0.2698 | 0.3692 | 0.1704 | 0.1530 | 0.2451 | 0.0797 | 0.3107 | 0.3368 | 0.1199 |
| homo_svr | 0.4229 | 0.5482 | 0.2390 | 0.2458 | 0.3285 | 0.1630 | 0.2605 | 0.3554 | 0.1656 | 0.1475 | 0.2311 | 0.0790 | 0.3386 | 0.3683 | 0.1213 |
| homo_xgb | 0.4270 | 0.5542 | 0.2399 | 0.2510 | 0.3373 | 0.1648 | 0.2660 | 0.3646 | 0.1673 | 0.1512 | 0.2416 | 0.0799 | 0.3257 | 0.3545 | 0.1148 |
| hete_rf | 0.4387 | 0.5769 | 0.2283 | 0.2541 | 0.3458 | 0.1625 | 0.2702 | 0.3759 | 0.1646 | 0.1544 | 0.2470 | 0.0772 | 0.2884 | 0.3006 | 0.1986 |
| hete_svr | 0.4340 | 0.5686 | 0.2311 | 0.2445 | 0.3362 | 0.1527 | 0.2602 | 0.3652 | 0.1551 | 0.1535 | 0.2418 | 0.0776 | 0.3034 | 0.3205 | 0.1784 |
| hete_xgb | 0.4393 | 0.5765 | 0.2318 | 0.2446 | 0.3323 | 0.1569 | 0.2611 | 0.3628 | 0.1593 | 0.1536 | 0.2430 | 0.0786 | 0.2862 | 0.3016 | 0.1734 |
| Deep Learning Model | | | | | | | | | | | | | | | |
| LSTM | 0.4415 | 0.5280 | 0.3334 | 0.2943 | 0.3373 | 0.2513 | 0.3086 | 0.3607 | 0.2566 | 0.1972 | 0.2155 | 0.1861 | 0.2790 | 0.4141 | −0.7100 |
| GRU | 0.4696 | 0.5471 | 0.3763 | 0.3401 | 0.3765 | 0.3038 | 0.3552 | 0.4000 | 0.3104 | 0.2059 | 0.2218 | 0.1964 | 0.1846 | 0.3709 | −1.1785 |
| EMD+LSTM | 0.4560 | 0.5358 | 0.3588 | 0.3123 | 0.3436 | 0.2809 | 0.3270 | 0.3672 | 0.2868 | 0.1631 | 0.1879 | 0.1467 | 0.2311 | 0.3966 | −0.9798 |

| Model | MSE | | MAE | | Huber Loss | |
|---|---|---|---|---|---|---|
| Homogeneous ensemble | | | | | | |
| FAR | 0.1075 | 0.0969 | 0.0416 | 0.0801 | 0.0862 | 0.0541 |
| FAR+MMS | 0.2132 | 0.2082 | 0.0752 | 0.0801 | 0.2302 | 0.1569 |
| homo_rf | 0.6814 | 0.6707 | 0.2739 | 0.163 | 0.8326 | 0.8531 |
| homo_xgb | 0.6814 | 0.6707 | 0.3961 | 0.3961 | 0.8326 | 0.8531 |
| homo_svr | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |
| Heterogeneous ensemble | | | | | | |
| MMA | 0.3683 | 0.3922 | 0.0876 | 0.3015 | 0.2629 | 0.3739 |
| E-net | 0.3683 | 0.3922 | 0.2946 | 0.3015 | 0.2629 | 0.3739 |
| lasso | 0.3683 | 0.3776 | 0.1881 | 0.3015 | 0.2509 | 0.2619 |
| ridge | 0.3683 | 0.3776 | 0.2921 | 0.3015 | 0.2629 | 0.3158 |
| SVR | 0.3244 | 0.3922 | 0.3658 | 0.3676 | 0.2509 | 0.3739 |
| XGBoost | 0.0778 | 0.0536 | 0.0104 | 0.0184 | 0.0443 | 0.0316 |
| RF | 0.3683 | 0.3550 | 0.0926 | 0.3015 | 0.1578 | 0.2619 |
| hete_rf | 0.8630 | 0.7634 | 0.2921 | 0.3676 | 0.8959 | 0.7860 |
| hete_xgb | 0.8630 | 0.6902 | 0.8846 | 0.8846 | 0.8959 | 0.7514 |
| hete_svr | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |

| Model | UG (Whole) | UG (High) | UG (Low) | SR (Whole) | SR (High) | SR (Low) |
|---|---|---|---|---|---|---|
| Base Model | | | | | | |
| FAR | 40.0720 | 77.1260 | 5.1235 | 0.2445 | 0.3445 | 0.0876 |
| FAR+MMS | 45.7422 | 88.4737 | 5.6559 | 0.2650 | 0.3759 | 0.0899 |
| MMA | 42.4660 | 79.2613 | 7.9127 | 0.2526 | 0.3485 | 0.1066 |
| E-net | 42.3854 | 80.5625 | 6.3027 | 0.2551 | 0.3581 | 0.0947 |
| lasso | 41.8589 | 80.7737 | 4.9107 | 0.2572 | 0.3668 | 0.0837 |
| ridge | 40.2578 | 81.0734 | 1.8021 | 0.2466 | 0.3582 | 0.0615 |
| svr | 41.7817 | 71.9543 | 13.1367 | 0.2549 | 0.3344 | 0.1537 |
| XGBoost | 9.6912 | 19.3971 | 0.3235 | 0.1320 | 0.1810 | 0.0520 |
| rf | 27.1812 | 41.1122 | 13.6153 | 0.2007 | 0.2448 | 0.1538 |
| Ensemble Model | | | | | | |
| homo_rf | 46.4293 | 88.2185 | 7.1480 | 0.2689 | 0.3774 | 0.1010 |
| homo_svr | 44.2339 | 85.6709 | 5.2682 | 0.2608 | 0.3702 | 0.0867 |
| homo_xgb | 46.4102 | 87.1185 | 8.1578 | 0.2681 | 0.3730 | 0.1086 |
| hete_rf | 49.2886 | 83.8264 | 16.5444 | 0.2810 | 0.3678 | 0.1690 |
| hete_svr | 44.8457 | 86.4316 | 5.6646 | 0.2638 | 0.3742 | 0.0899 |
| hete_xgb | 45.1008 | 85.9962 | 6.6055 | 0.2649 | 0.3725 | 0.0968 |
| buy&hold | - | - | - | 0.0119 | 0.0050 | 0.0187 |
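
The UG and SR columns above are economic-value measures. As a rough illustration only, the sketch below assumes that UG denotes a utility gain measured as a difference in certainty-equivalent returns for a mean-variance investor, and that SR denotes the Sharpe ratio. The risk-aversion coefficient `gamma`, the weight bounds, the risk-free rate `rf`, and all function names are hypothetical choices that do not come from the tables; the authors' actual settings, benchmark, and scaling may differ.

```python
import numpy as np


def portfolio_returns(r, r_hat, var_hat, rf=0.0, gamma=3.0, w_min=0.0, w_max=1.5):
    """Realized returns of a mean-variance investor (illustrative assumption).

    The weight on the risky asset is (forecast excess return) / (gamma * variance
    forecast), clipped to [w_min, w_max]; the remainder earns the risk-free rate.
    """
    r, r_hat, var_hat = (np.asarray(a, dtype=float) for a in (r, r_hat, var_hat))
    w = np.clip((r_hat - rf) / (gamma * var_hat), w_min, w_max)
    return w * r + (1.0 - w) * rf


def certainty_equivalent(rp, gamma=3.0):
    """Certainty-equivalent return: mean minus (gamma / 2) times sample variance."""
    rp = np.asarray(rp, dtype=float)
    return float(np.mean(rp) - 0.5 * gamma * np.var(rp, ddof=1))


def utility_gain(rp_model, rp_bench, gamma=3.0):
    """Utility gain of a model-based strategy over a benchmark strategy."""
    return certainty_equivalent(rp_model, gamma) - certainty_equivalent(rp_bench, gamma)


def sharpe_ratio(rp, rf=0.0):
    """Sharpe ratio: mean excess return divided by its sample standard deviation."""
    excess = np.asarray(rp, dtype=float) - rf
    return float(np.mean(excess) / np.std(excess, ddof=1))
```

In use, `portfolio_returns` would be called once with a model's forecasts and once with the benchmark's forecasts, and the two resulting return series passed to `utility_gain`. Any annualization or percentage scaling would be applied afterwards.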

| | homo_rf | homo_svr | homo_xgb | hete_rf | hete_svr | hete_xgb | Observation |
|---|---|---|---|---|---|---|---|
| Whole | 0.0715 | 0.0656 | 0.0775 | 0.0547 | 0.0884 | 0.0624 | 0.2715 |
| High | 0.1166 | 0.1041 | 0.1264 | 0.0970 | 0.1508 | 0.1048 | 0.4800 |
| Low | 0.0258 | 0.0256 | 0.0278 | 0.0108 | 0.0246 | 0.0171 | 0.0655 |

| Model | UG (Whole) | UG (High) | UG (Low) |
|---|---|---|---|
| Base Model | | | |
| FAR | 144.9971 | 263.4732 | 25.9002 |
| FAR+MMS | 150.6673 | 268.8209 | 26.4326 |
| MMA | 147.3911 | 265.6085 | 28.6894 |
| E-net | 147.3105 | 266.9096 | 27.0794 |
| lasso | 146.7839 | 267.1209 | 25.6873 |
| ridge | 145.1829 | 267.4206 | 22.5788 |
| svr | 146.7068 | 258.3015 | 33.9134 |
| XGBoost | 114.6163 | 205.7443 | 21.1002 |
| rf | 132.1063 | 227.4593 | 34.3919 |
| Ensemble Model | | | |
| homo_rf | 151.3544 | 274.5656 | 27.9247 |
| homo_svr | 149.1590 | 272.0181 | 26.0448 |
| homo_xgb | 151.3353 | 273.4657 | 28.9345 |
| hete_rf | 154.2137 | 270.1735 | 37.3211 |
| hete_svr | 149.7708 | 272.7787 | 26.4413 |
| hete_xgb | 150.0259 | 272.3434 | 27.3822 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ye, P.; Li, Y.; Siddik, A.B. Forecasting the Return of Carbon Price in the Chinese Market Based on an Improved Stacking Ensemble Algorithm. Energies 2023, 16, 4520. https://doi.org/10.3390/en16114520
