Electrical Load Forecasting Using LSTM, GRU, and RNN Algorithms

Abstract

1. Introduction

- Forecasting electrical loads as accurately as possible, so that the forecasts reflect the actual development of the loads.

- Assisting electricity companies in developing short- and medium-term plans for designing electrical networks and estimating infrastructure needs.

- Improving the electricity service in Palestine and helping to solve the problem of power outages there.

- Helping electricity companies secure energy sources that match the loads without exceeding them, since any surplus is wasted energy that cannot be used.

2. Literature Review

2.1. Background

2.2. Electrical Load Forecasting

2.3. Short-Term Load Forecasting (STLF)

2.3.1. Short-Term Load Forecasting for Medium and Large Electrical Networks

2.3.2. Short-Term Load Forecasting for Small Electrical Networks

2.4. Research Questions

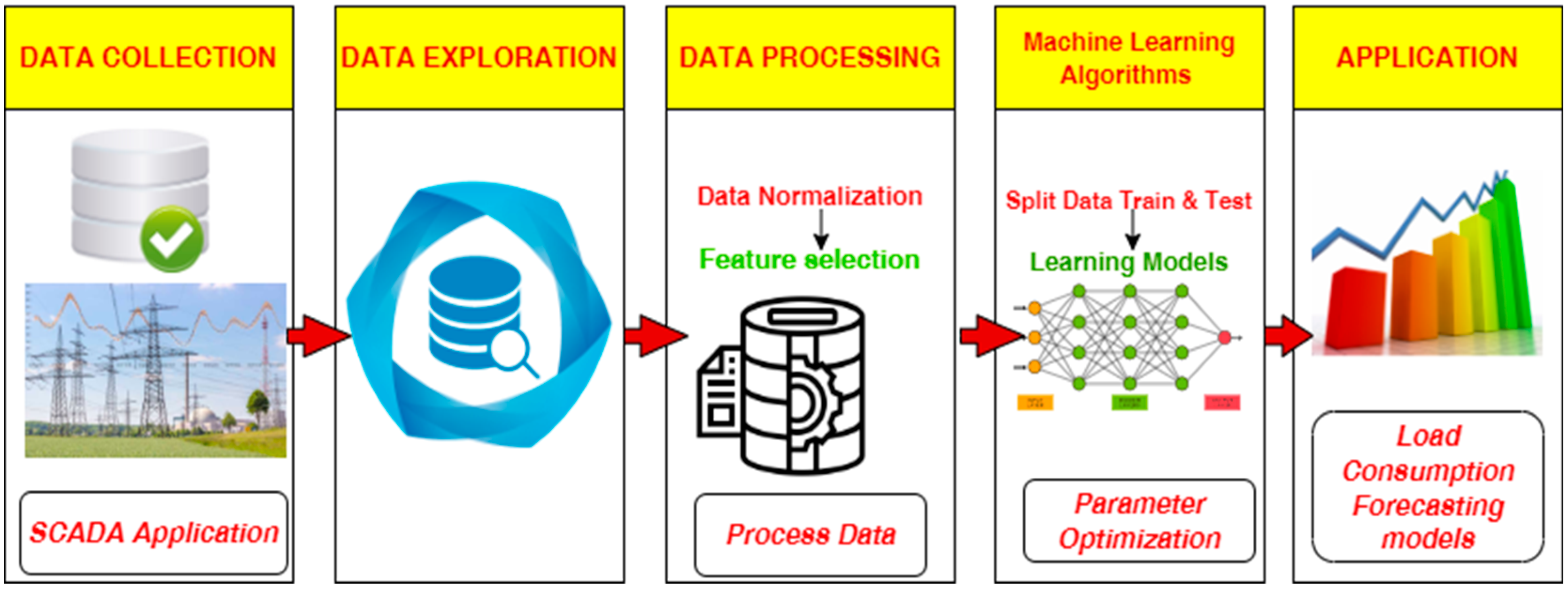

3. Methodology

3.1. Data Collection and Description

3.2. Exploratory Data Analysis (EDA)

3.2.1. Correlation

3.2.2. Electrical Demand Behavior Analysis

3.2.3. Time Series Analysis for Electricity Loads

3.3. Forecasting Methodology

3.3.1. Data Preprocessing

Data Normalization
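The text of this subsection is not reproduced here. The error magnitudes in the result tables (MSE of about 0.003 for loads of roughly 200 kWh) suggest that the target was normalized before training; min-max scaling to [0, 1] is assumed below purely for illustration, and the function names are hypothetical. Fitting the bounds on the training portion only keeps test information out of the scaler.

```python
from typing import List, Tuple


def fit_min_max(train: List[float]) -> Tuple[float, float]:
    """Learn the scaling bounds (min, max) from the training portion only."""
    lo, hi = min(train), max(train)
    if hi == lo:
        raise ValueError("cannot min-max scale a constant series")
    return lo, hi


def scale(values: List[float], lo: float, hi: float) -> List[float]:
    """Map values to [0, 1] relative to the training bounds."""
    return [(v - lo) / (hi - lo) for v in values]


def unscale(scaled: List[float], lo: float, hi: float) -> List[float]:
    """Invert the transform, e.g. to express forecasts in kWh again."""
    return [s * (hi - lo) + lo for s in scaled]
```

Because the transform is linear, RMSE and MAE computed on scaled values convert back to kWh by multiplying by (hi − lo), MSE by (hi − lo)², and R-squared is unchanged.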

Feature Selection

3.3.2. Machine Learning Algorithms

Long Short-Term Memory Model

Recurrent Neural Network Model

Gated Recurrent Unit Model

- Variable $x_t$ is the network input at moment $t$.

- Variables $\tilde{h}_t$ and $h_t$ are information vectors that reflect the temporary (candidate) output and the hidden layer output at instant $t$, respectively.

- Variables $z_t$ and $r_t$ are gate vectors that reflect the output of the update gate and the reset gate at moment $t$, respectively.

- The sigmoid and tanh activation functions are represented by $\sigma(x)$ and $\tanh(x)$, respectively.
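A minimal sketch of one GRU time step in plain Python, using the symbols defined above. It assumes a common formulation: $z_t = \sigma(W_z x_t + U_z h_{t-1} + b_z)$, $r_t = \sigma(W_r x_t + U_r h_{t-1} + b_r)$, $\tilde{h}_t = \tanh(W_h x_t + U_h (r_t \odot h_{t-1}) + b_h)$ and $h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t$. Conventions differ on whether $z_t$ weights the candidate or the previous state, and the weight names and random initialisation are hypothetical, not the configuration used in this study.

```python
import math
import random
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]
Params = Dict[str, list]


def sigmoid(x: float) -> float:
    """Logistic activation sigma(x) = 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def affine(W: Matrix, x: Vector, U: Matrix, h: Vector, b: Vector) -> Vector:
    """Return W x + U h + b on plain lists (one entry per hidden unit)."""
    return [
        sum(w * xi for w, xi in zip(w_row, x))
        + sum(u * hi for u, hi in zip(u_row, h))
        + bias
        for w_row, u_row, bias in zip(W, U, b)
    ]


def init_params(n_in: int, n_hidden: int, scale: float = 0.5, seed: int = 0) -> Params:
    """Hypothetical uniform initialisation of W_g, U_g, b_g for g in {z, r, h}."""
    rng = random.Random(seed)

    def matrix(rows: int, cols: int) -> Matrix:
        return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

    params: Params = {}
    for g in ("z", "r", "h"):
        params["W_" + g] = matrix(n_hidden, n_in)
        params["U_" + g] = matrix(n_hidden, n_hidden)
        params["b_" + g] = [0.0] * n_hidden
    return params


def gru_step(x_t: Vector, h_prev: Vector, p: Params) -> Vector:
    """Advance the hidden state h_{t-1} -> h_t for one input vector x_t."""
    # Update gate z_t and reset gate r_t.
    z_t = [sigmoid(v) for v in affine(p["W_z"], x_t, p["U_z"], h_prev, p["b_z"])]
    r_t = [sigmoid(v) for v in affine(p["W_r"], x_t, p["U_r"], h_prev, p["b_r"])]
    # Temporary (candidate) output, computed from the reset-scaled previous state.
    reset_h = [r * h for r, h in zip(r_t, h_prev)]
    h_tilde = [math.tanh(v) for v in affine(p["W_h"], x_t, p["U_h"], reset_h, p["b_h"])]
    # Hidden output: element-wise interpolation between h_{t-1} and the candidate.
    return [(1.0 - z) * h + z * c for z, h, c in zip(z_t, h_prev, h_tilde)]


def run_gru(sequence: List[Vector], n_hidden: int, p: Params) -> Vector:
    """Unroll the cell over a sequence from a zero state; return the last h_t."""
    h = [0.0] * n_hidden
    for x_t in sequence:
        h = gru_step(x_t, h, p)
    return h
```

For example, `run_gru([[0.1, 0.2, 0.3]] * 5, 4, init_params(3, 4))` returns a four-element hidden state whose entries stay strictly between -1 and 1, because each step is a convex combination of the previous state and a tanh output.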

3.3.3. Hyperparameters Tuning for Machine Learning Models

- Optimizer.

- Activation function.

- Learning rate.

- The number of epochs.

- Batch size.

- The number of hidden layers.

- Dropout.
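Section 4 compares four optimizers (Adam, AdaGrad, RMSprop and Adadelta), and its result tables vary the learning rate (0.01 and 0.001) and the number of hidden layers (one to three) for each model. A sketch of enumerating that grid is shown below; the class and function names are hypothetical, and the remaining hyperparameters listed above (activation function, epochs, batch size, dropout) are left out because their values are not given here.

```python
from dataclasses import dataclass
from itertools import product
from typing import List

# Values that vary across the result tables in Section 4.
OPTIMIZERS = ("Adam", "AdaGrad", "RMSprop", "Adadelta")
HIDDEN_LAYERS = (1, 2, 3)
MODELS = ("LSTM", "GRU", "RNN")
LEARNING_RATES = (0.01, 0.001)


@dataclass(frozen=True)
class TrialConfig:
    """One hypothetical training run; other hyperparameters are held fixed elsewhere."""
    optimizer: str
    hidden_layers: int
    model: str
    learning_rate: float


def build_grid() -> List[TrialConfig]:
    """Every combination, nested in the same order as the rows of the result tables."""
    return [
        TrialConfig(*combo)
        for combo in product(OPTIMIZERS, HIDDEN_LAYERS, MODELS, LEARNING_RATES)
    ]
```

This yields 4 × 3 × 3 × 2 = 72 runs; each `TrialConfig` would be passed to a training routine (not shown) and its MSE, R-squared, RMSE and MAE recorded in the matching table row.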

3.3.4. Metrics Selection

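- Mean Square Error (MSE) is the mean of the squared deviations between observed and predicted values. Equation (3) shows how to calculate MSE:

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2 \qquad (3)$$

where $y_i$ is the actual data value, $\hat{y}_i$ is the predicted data value, and $n$ is the number of observations.

- Root Mean Square Error (RMSE) is the square root of the average squared error. Equation (4) shows how to calculate RMSE:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2} \qquad (4)$$

- Mean Absolute Error (MAE) is the mean of the absolute values of the errors. Equation (5) shows how to calculate MAE:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right| \qquad (5)$$

- The coefficient of determination (R-squared) measures how accurately a model reproduces the observed outcome; values closer to 1 indicate a better fit. Equation (6) shows how to calculate R-squared:

$$R^2 = \frac{SSR}{SST} = \frac{\sum_{i=1}^{n}\left(\hat{y}_i - \bar{y}\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2} \qquad (6)$$

where:

- $SSR$ is the regression sum of squares (explained sum of squares);

- $SST$ is the total sum of squares;

- $\bar{y}$ is the mean of the actual values.

For a least-squares fit, Equation (6) lies between 0 and 1 and equals $1 - \sum_{i=1}^{n}(y_i - \hat{y}_i)^2 / SST$. The latter form, which most libraries use, becomes negative when a model fits worse than the constant prediction $\bar{y}$, which is consistent with the negative R-squared values in some of the result tables below.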
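The four metrics follow directly from Equations (3) to (6). A minimal sketch in plain Python is given below; `r_squared` uses the $1 - SSE/SST$ form, which coincides with Equation (6) for a least-squares fit and, unlike $SSR/SST$, can go negative for a poor forecaster. The function names are illustrative.

```python
import math
from typing import Sequence


def _check(y: Sequence[float], y_hat: Sequence[float]) -> None:
    """Reject empty or mismatched inputs."""
    if len(y) != len(y_hat) or len(y) == 0:
        raise ValueError("y and y_hat must be non-empty and of equal length")


def mse(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Equation (3): mean of the squared errors."""
    _check(y, y_hat)
    return sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y)


def rmse(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Equation (4): square root of the MSE."""
    return math.sqrt(mse(y, y_hat))


def mae(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Equation (5): mean of the absolute errors."""
    _check(y, y_hat)
    return sum(abs(a - b) for a, b in zip(y, y_hat)) / len(y)


def r_squared(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """1 - SSE/SST: equals Equation (6) for a least-squares fit, may be negative otherwise."""
    _check(y, y_hat)
    mean_y = sum(y) / len(y)
    sse = sum((a - b) ** 2 for a, b in zip(y, y_hat))
    sst = sum((a - mean_y) ** 2 for a in y)
    if sst == 0:
        raise ValueError("R-squared is undefined for a constant series")
    return 1.0 - sse / sst
```

For `y = [1, 2, 3, 4]` and predictions `[1, 2, 3, 5]`, the sketch gives MSE = 0.25, RMSE = 0.5, MAE = 0.25 and R-squared = 0.8; reversing the predictions to `[4, 3, 2, 1]` drives R-squared to -3.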

4. Result and Discussion

4.1. Forecasting Results

4.1.1. Forecasting Using LSTM, RNN, and GRU Algorithms with Adam Optimizer

4.1.2. Forecasting Using LSTM, RNN, and GRU Algorithms with AdaGrad Optimizer

4.1.3. Forecasting Using LSTM, RNN, and GRU Algorithms with RMSprop Optimizer

4.1.4. Forecasting Using LSTM, RNN, and GRU Algorithms with Adadelta Optimizer

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Yohanandhan, R.V.; Elavarasan, R.M.; Pugazhendhi, R.; Premkumar, M.; Mihet-Popa, L.; Zhao, J.; Terzija, V. A specialized review on outlook of future Cyber-Physical Power System (CPPS) testbeds for securing electric power grid. Int. J. Electr. Power Energy Syst. 2022, 136, 107720.

2. Azarpour, A.; Mohammadzadeh, O.; Rezaei, N.; Zendehboudi, S. Current status and future prospects of renewable and sustainable energy in North America: Progress and challenges. Energy Convers. Manag. 2022, 269, 115945.

3. Huang, N.; Wang, S.; Wang, R.; Cai, G.; Liu, Y.; Dai, Q. Gated spatial-temporal graph neural network based short-term load forecasting for wide-area multiple buses. Int. J. Electr. Power Energy Syst. 2023, 145, 108651.

4. Liu, C.-L.; Tseng, C.-J.; Huang, T.-H.; Yang, J.-S.; Huang, K.-B. A multi-task learning model for building electrical load prediction. Energy Build. 2023, 278, 112601.

5. Xia, Y.; Wang, J.; Wei, D.; Zhang, Z. Combined framework based on data preprocessing and multi-objective optimizer for electricity load forecasting. Eng. Appl. Artif. Intell. 2023, 119, 105776.

6. Jena, T.R.; Barik, S.S.; Nayak, S.K. Electricity Consumption & Prediction using Machine Learning Models. Acta Tech. Corviniensis-Bull. Eng. 2020, 9, 2804–2818.

7. Mansouri, S.A.; Jordehi, A.R.; Marzband, M.; Tostado-Véliz, M.; Jurado, F.; Aguado, J.A. An IoT-enabled hierarchical decentralized framework for multi-energy microgrids market management in the presence of smart prosumers using a deep learning-based forecaster. Appl. Energy 2023, 333, 120560.

8. Oprea, S.-V.; Bâra, A.; Puican, F.C.; Radu, I.C. Anomaly Detection with Machine Learning Algorithms and Big Data in Electricity Consumption. Sustainability 2021, 13, 10963.

9. Lei, L.; Chen, W.; Wu, B.; Chen, C.; Liu, W. A building energy consumption prediction model based on rough set theory and deep learning algorithms. Energy Build. 2021, 240, 110886.

10. Liu, T.; Xu, C.; Guo, Y.; Chen, H. A novel deep reinforcement learning based methodology for short-term HVAC system energy consumption prediction. Int. J. Refrig. 2019, 107, 39–51.

11. Al-Bayaty, H.; Mohammed, T.; Wang, W.; Ghareeb, A. City scale energy demand forecasting using machine learning based models: A comparative study. ACM Int. Conf. Proceeding Ser. 2019, 28, 1–9.

12. Ahmad, T.; Chen, H.; Huang, R.; Yabin, G.; Wang, J.; Shair, J.; Akram, H.M.A.; Mohsan, S.A.H.; Kazim, M. Supervised based machine learning models for short, medium and long-term energy prediction in distinct building environment. Energy 2018, 158, 17–32.

13. Geetha, R.; Ramyadevi, K.; Balasubramanian, M. Prediction of domestic power peak demand and consumption using supervised machine learning with smart meter dataset. Multimedia Tools Appl. 2021, 80, 19675–19693.

14. Chen, C.; Liu, Y.; Kumar, M.; Qin, J.; Ren, Y. Energy consumption modelling using deep learning embedded semi-supervised learning. Comput. Ind. Eng. 2019, 135, 757–765.

15. Khan, Z.; Adil, M.; Javaid, N.; Saqib, M.; Shafiq, M.; Choi, J.-G. Electricity Theft Detection Using Supervised Learning Techniques on Smart Meter Data. Sustainability 2020, 12, 8023.

16. Kaur, H.; Kumari, V. Predictive modelling and analytics for diabetes using a machine learning approach. Appl. Comput. Inform. 2022, 18, 90–100.

17. Kim, T.-Y.; Cho, S.-B. Predicting residential energy consumption using CNN-LSTM neural networks. Energy 2019, 182, 72–81.

18. Wang, Z.; Srinivasan, R.S. A Review of Artificial Intelligence Based Building Energy Use Prediction: Contrasting the Capabilities of single and Ensemble Prediction Models. Renew. Sustain. Energy Rev. 2017, 75, 796–808.

19. Ivanov, D.; Tsipoulanidis, A.; Schönberger, J. Global Supply Chain and Operations Management; Springer International Publishing: Cham, Switzerland, 2017.

20. Kuster, C.; Rezgui, Y.; Mourshed, M. Electrical load forecasting models: A critical systematic review. Sustain. Cities Soc. 2017, 35, 257–270.

21. Arora, S.; Taylor, J.W. Rule-based autoregressive moving average models for forecasting load on special days: A case study for France. Eur. J. Oper. Res. 2018, 266, 259–268.

22. Takeda, H.; Tamura, Y.; Sato, S. Using the ensemble Kalman filter for electricity load forecasting and analysis. Energy 2016, 104, 184–198.

23. Maldonado, S.; González, A.; Crone, S. Automatic time series analysis for electric load forecasting via support vector regression. Appl. Soft Comput. 2019, 83, 105616.

24. Rendon-Sanchez, J.F.; de Menezes, L.M. Structural combination of seasonal exponential smoothing forecasts applied to load forecasting. Eur. J. Oper. Res. 2019, 275, 916–924.

25. Lindberg, K.; Seljom, P.; Madsen, H.; Fischer, D.; Korpås, M. Long-term electricity load forecasting: Current and future trends. Util. Policy 2019, 58, 102–119.

26. Hong, T.; Fan, S. Probabilistic electric load forecasting: A tutorial review. Int. J. Forecast. 2016, 32, 914–938.

27. Kloker, S.; Straub, T.; Weinhardt, C.; Maedche, A.; Brocke, J.V.; Hevner, A. Designing a Crowd Forecasting Tool to Combine Prediction Markets and Real-Time Delphi. In Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2017; Volume 10243, pp. 468–473.

28. Goehry, B.; Goude, Y.; Massart, P.; Poggi, J.-M. Aggregation of Multi-Scale Experts for Bottom-Up Load Forecasting. IEEE Trans. Smart Grid 2019, 11, 1895–1904.

29. Chafi, Z.S.; Afrakhte, H. Short-Term Load Forecasting Using Neural Network and Particle Swarm Optimization (PSO) Algorithm. Math. Probl. Eng. 2021, 2021, 5598267.

30. Gao, X.; Li, X.; Zhao, B.; Ji, W.; Jing, X.; He, Y. Short-Term Electricity Load Forecasting Model Based on EMD-GRU with Feature Selection. Energies 2020, 12, 1140.

31. Yuan, B.; He, B.; Yan, J.; Jiang, J.; Wei, Z.; Shen, X. Short-term electricity consumption forecasting method based on empirical mode decomposition of long-short term memory network. IOP Conf. Ser. Earth Environ. Sci. 2022, 983, 12004.

32. He, F.; Zhou, J.; Feng, Z.-K.; Liu, G.; Yang, Y. A hybrid short-term load forecasting model based on variational mode decomposition and long short-term memory networks considering relevant factors with Bayesian optimization algorithm. Appl. Energy 2019, 237, 103–116.

33. Zhou, F.; Zhou, H.; Li, Z.; Zhao, K. Multi-Step Ahead Short-Term Electricity Load Forecasting Using VMD-TCN and Error Correction Strategy. Energies 2022, 15, 5375.

34. Nasiri, H.; Ebadzadeh, M.M. Multi-step-ahead Stock Price Prediction Using Recurrent Fuzzy Neural Network and Variational Mode Decomposition. arXiv 2022, arXiv:2212.14687.

35. Biswas, M.R.; Robinson, M.D.; Fumo, N. Prediction of residential building energy consumption: A neural network approach. Energy 2016, 117, 84–92.

36. Bendaoud, N.M.M.; Farah, N. Using deep learning for short-term load forecasting. Neural Comput. Appl. 2020, 32, 15029–15041.

37. Thokala, N.K.; Bapna, A.; Chandra, M.G. A deployable electrical load forecasting solution for commercial buildings. In Proceedings of the 2018 IEEE International Conference on Industrial Technology (ICIT), Lyon, France, 20–22 February 2018; pp. 1101–1106.

38. Nasiri, H.; Ebadzadeh, M.M. MFRFNN: Multi-Functional Recurrent Fuzzy Neural Network for Chaotic Time Series Prediction. Neurocomputing 2022, 507, 292–310.

39. Alobaidi, M.H.; Chebana, F.; Meguid, M.A. Robust ensemble learning framework for day-ahead forecasting of household based energy consumption. Appl. Energy 2018, 212, 997–1012.

40. Fekri, M.N.; Patel, H.; Grolinger, K.; Sharma, V. Deep learning for load forecasting with smart meter data: Online Adaptive Recurrent Neural Network. Appl. Energy 2020, 282, 116177.

41. Somu, N.; MR, G.R.; Ramamritham, K. A hybrid model for building energy consumption forecasting using long short term memory networks. Appl. Energy 2020, 261, 114131.

42. Li, L.; Ota, K.; Dong, M. Everything is Image: CNN-based Short-Term Electrical Load Forecasting for Smart Grid. In Proceedings of the 2017 14th International Symposium on Pervasive Systems, Algorithms and Networks & 2017 11th International Conference on Frontier of Computer Science and Technology & 2017 Third International Symposium of Creative Computing (ISPAN-FCST-ISCC), Exeter, UK, 21–23 June 2017; Volume 99, pp. 344–351.

43. Shi, H.; Xu, M.; Li, R. Deep learning for household load forecasting—A novel pooling deep RNN. IEEE Trans. Smart Grid 2017, 8, 133–190.

44. Amarasinghe, K.; Marino, D.L.; Manic, M. Deep neural networks for energy load forecasting. In Proceedings of the 2017 IEEE 26th International Symposium on Industrial Electronics (ISIE), Edinburgh, UK, 19–21 June 2017; Volume 14, pp. 1483–1488.

45. Bache, K.; Lichman, M. UCI machine learning repository. IEEE Access 2018, 206, 23.

46. Bessani, M.; Massignan, J.A.; Santos, T.; London, J.B.; Maciel, C.D. Multiple households very short-term load forecasting using bayesian networks. Electr. Power Syst. Res. 2020, 189, 106733.

47. Gong, L.; Yu, M.; Jiang, S.; Cutsuridis, V.; Pearson, S. Deep Learning Based Prediction on Greenhouse Crop Yield Combined TCN and RNN. Sensors 2021, 21, 4537.

48. Kong, W.; Dong, Z.Y.; Jia, Y.; Hill, D.J.; Xu, Y.; Zhang, Y. Short-Term Residential Load Forecasting Based on LSTM Recurrent Neural Network. IEEE Trans. Smart Grid 2017, 10, 841–851.

49. Javed, U.; Ijaz, K.; Jawad, M.; Ansari, E.A.; Shabbir, N.; Kütt, L.; Husev, O. Exploratory Data Analysis Based Short-Term Electrical Load Forecasting: A Comprehensive Analysis. Energies 2021, 14, 5510.

50. Zhang, J.; Xu, Z.; Wei, Z. Absolute logarithmic calibration for correlation coefficient with multiplicative distortion. Commun. Stat. Comput. 2023, 52, 482–505.

51. Aggarwal, C.C. Data Mining: The Textbook; Springer: Berlin/Heidelberg, Germany, 2015; Volume 1.

52. Punyani, P.; Gupta, R.; Kumar, A. A multimodal biometric system using match score and decision level fusion. Int. J. Inf. Technol. 2022, 14, 725–730.

53. Vafaie, H.; De Jong, K. Genetic algorithms as a tool for feature selection in machine learning. ICTAI 2018, 200–203.

54. Norouzi, A.; Aliramezani, M.; Koch, C.R. A correlation-based model order reduction approach for a diesel engine NOx and brake mean effective pressure dynamic model using machine learning. Int. J. Engine Res. 2020, 22, 2654–2672.

55. Gers, F.A.; Schmidhuber, J.; Cummins, F. Learning to Forget: Continual Prediction with LSTM. Neural Comput. 2000, 12, 2451–2471.

56. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

57. Fan, C.; Wang, J.; Gang, W.; Li, S. Assessment of deep recurrent neural network-based strategies for short-term building energy predictions. Appl. Energy 2019, 236, 700–710.

58. Cho, K.; Van Merrienboer, B.; Bahdanau, D.; Bengio, Y. On the properties of neural machine translation: Encoder-decoder approaches. arXiv 2014, arXiv:1409.1259.

59. Britz, D. Recurrent neural network tutorial, part 4: Implementing a GRU/LSTM RNN with Python and Theano. Inf. Syst. E-bus. Manag. 2015, 256, 560–587.

60. Ravanelli, M.; Brakel, P.; Omologo, M.; Bengio, Y. Light Gated Recurrent Units for Speech Recognition. IEEE Trans. Emerg. Top. Comput. Intell. 2018, 2, 92–102.

61. Su, Y.; Kuo, C.-C.J. On extended long short-term memory and dependent bidirectional recurrent neural network. Neurocomputing 2019, 356, 151–161.

62. Gruber, N.; Jockisch, A. Are GRU Cells More Specific and LSTM Cells More Sensitive in Motive Classification of Text? Front. Artif. Intell. 2020, 3, 40.

63. Veloso, B.; Gama, J.; Malheiro, B.; Vinagre, J. Hyperparameter self-tuning for data streams. Inf. Fusion 2021, 76, 75–86.

64. Plevris, V.P.; Solorzano, G.S.; Bakas, N.B.; Seghier, M.E.A.B.S. Investigation of performance metrics in regression analysis and machine learning-based prediction models. IEEE Trans. Emerg. Top. Comput. Intell. 2022, 13, 1–40.

| Date (yyyy-mm-dd hh:mm:ss) | Temperature (°C) | Hour | Weekday | Week | Month | Year | Energy (kWh) |
|---|---|---|---|---|---|---|---|
| 2021-09-01 00:00:54 | 31.0 | 0 | 3 | 35 | 9 | 2021 | 284.10560 |
| 2021-09-01 00:01:55 | 31.0 | 0 | 3 | 35 | 9 | 2021 | 279.18033 |
| 2021-09-01 00:02:55 | 31.0 | 0 | 3 | 35 | 9 | 2021 | 278.64350 |
| 2021-09-01 00:03:56 | 31.0 | 0 | 3 | 35 | 9 | 2021 | 280.11516 |
| 2021-09-01 00:04:56 | 31.0 | 0 | 3 | 35 | 9 | 2021 | 280.37660 |
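The Hour, Weekday, Week, Month and Year columns in the sample above can all be derived from the timestamp. The sketch below assumes ISO 8601 week numbering with Monday numbered 1; these conventions reproduce the sample rows (1 September 2021 was a Wednesday in ISO week 35), but the source does not state which convention was used, and the function name is hypothetical.

```python
from datetime import datetime
from typing import Dict


def calendar_features(timestamp: str) -> Dict[str, int]:
    """Derive the calendar columns from a 'yyyy-mm-dd hh:mm:ss' timestamp."""
    ts = datetime.strptime(timestamp, "%Y-%m-%d %H:%M:%S")
    # ISO 8601 calendar: weeks start on Monday, and Monday is weekday 1.
    _, iso_week, iso_weekday = ts.isocalendar()
    return {
        "Hour": ts.hour,
        "Weekday": iso_weekday,
        "Week": iso_week,
        "Month": ts.month,
        "Year": ts.year,
    }
```

Applied to the first timestamp, `calendar_features("2021-09-01 00:00:54")` returns `{"Hour": 0, "Weekday": 3, "Week": 35, "Month": 9, "Year": 2021}`, matching the first row.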

| Electrical Load Statistic | Daily (kWh) | Weekly (kWh) | Monthly (kWh) |
|---|---|---|---|
| Standard Deviation | 35.59 | 30.15 | 25.13 |
| Mean | 199.013 | 200.51 | 202.18 |
| Median | 200.36 | 198.31 | 202.25 |
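The table above summarizes the load at daily, weekly and monthly resolution. One way to produce such figures, sketched below, is to average the readings within each period and then take the standard deviation, mean and median across periods. Whether the original analysis averaged or summed within each period, and which standard-deviation estimator it used, is not stated; the choices here (per-period mean, sample standard deviation, ISO weeks) and all names are assumptions.

```python
import statistics
from collections import defaultdict
from datetime import datetime
from typing import Callable, Dict, Hashable, List, Tuple

Reading = Tuple[datetime, float]  # (timestamp, load in kWh)

# Hypothetical grouping keys: calendar day, ISO (year, week), and (year, month).
PERIODS: Dict[str, Callable[[datetime], Hashable]] = {
    "Daily": lambda ts: ts.date(),
    "Weekly": lambda ts: tuple(ts.isocalendar()[:2]),
    "Monthly": lambda ts: (ts.year, ts.month),
}


def period_means(readings: List[Reading], key: Callable[[datetime], Hashable]) -> List[float]:
    """Average all readings that fall into the same period, in period order."""
    buckets: Dict[Hashable, List[float]] = defaultdict(list)
    for ts, load in readings:
        buckets[key(ts)].append(load)
    return [statistics.mean(values) for _, values in sorted(buckets.items())]


def summarize(readings: List[Reading]) -> Dict[str, Dict[str, float]]:
    """Standard deviation, mean and median of the per-period averages."""
    summary: Dict[str, Dict[str, float]] = {}
    for name, key in PERIODS.items():
        means = period_means(readings, key)
        summary[name] = {
            # Sample standard deviation; undefined for one period, reported as 0.0.
            "Standard Deviation": statistics.stdev(means) if len(means) > 1 else 0.0,
            "Mean": statistics.mean(means),
            "Median": statistics.median(means),
        }
    return summary
```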

| Learning Rate | Model | MSE | R-Squared | RMSE | MAE |
|---|---|---|---|---|---|
| One hidden layer | | | | | |
| 0.01 | LSTM | 0.00282 | 0.87239 | 0.05310 | 0.03937 |
| 0.001 | LSTM | 0.00400 | 0.81900 | 0.06324 | 0.04786 |
| 0.01 | GRU | 0.00374 | 0.83063 | 0.06118 | 0.04731 |
| 0.001 | GRU | 0.00280 | 0.87323 | 0.05293 | 0.03790 |
| 0.01 | RNN | 0.00295 | 0.86647 | 0.05432 | 0.04115 |
| 0.001 | RNN | 0.00307 | 0.86104 | 0.05541 | 0.04065 |
| Two hidden layers | | | | | |
| 0.01 | LSTM | 0.00293 | 0.8672 | 0.05417 | 0.04001 |
| 0.001 | LSTM | 0.002988 | 0.864808 | 0.054662 | 0.04107 |
| 0.01 | GRU | 0.00215 | 0.90228 | 0.04647 | 0.03266 |
| 0.001 | GRU | 0.0028 | 0.8727 | 0.0530 | 0.0384 |
| 0.01 | RNN | 0.01529 | 0.30793 | 0.12367 | 0.10960 |
| 0.001 | RNN | 0.0038 | 0.8275 | 0.0617 | 0.0490 |
| Three hidden layers | | | | | |
| 0.01 | LSTM | 0.00378 | 0.82861 | 0.06154 | 0.04779 |
| 0.001 | LSTM | 0.00312 | 0.85855 | 0.05591 | 0.04233 |
| 0.01 | GRU | 0.00265 | 0.88001 | 0.05149 | 0.03738 |
| 0.001 | GRU | 0.00275 | 0.87547 | 0.05246 | 0.03790 |
| 0.01 | RNN | 0.01614 | 0.26963 | 0.12705 | 0.10554 |
| 0.001 | RNN | 0.00432 | 0.80448 | 0.06573 | 0.05348 |
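Reading a table like the one above is easier when the configurations are ranked programmatically. The sketch below transcribes the rows of the first results table (MAE omitted), selects the configuration with the lowest MSE and the one with the highest R-squared, and checks that each reported RMSE agrees with the square root of its MSE up to rounding. The helper names and the tolerance are hypothetical.

```python
import math
from typing import List, NamedTuple


class Result(NamedTuple):
    hidden_layers: int
    model: str
    learning_rate: float
    mse: float
    r2: float
    rmse: float


# Rows transcribed from the first results table (MAE column omitted).
FIRST_TABLE: List[Result] = [
    Result(1, "LSTM", 0.01, 0.00282, 0.87239, 0.05310),
    Result(1, "LSTM", 0.001, 0.00400, 0.81900, 0.06324),
    Result(1, "GRU", 0.01, 0.00374, 0.83063, 0.06118),
    Result(1, "GRU", 0.001, 0.00280, 0.87323, 0.05293),
    Result(1, "RNN", 0.01, 0.00295, 0.86647, 0.05432),
    Result(1, "RNN", 0.001, 0.00307, 0.86104, 0.05541),
    Result(2, "LSTM", 0.01, 0.00293, 0.8672, 0.05417),
    Result(2, "LSTM", 0.001, 0.002988, 0.864808, 0.054662),
    Result(2, "GRU", 0.01, 0.00215, 0.90228, 0.04647),
    Result(2, "GRU", 0.001, 0.0028, 0.8727, 0.0530),
    Result(2, "RNN", 0.01, 0.01529, 0.30793, 0.12367),
    Result(2, "RNN", 0.001, 0.0038, 0.8275, 0.0617),
    Result(3, "LSTM", 0.01, 0.00378, 0.82861, 0.06154),
    Result(3, "LSTM", 0.001, 0.00312, 0.85855, 0.05591),
    Result(3, "GRU", 0.01, 0.00265, 0.88001, 0.05149),
    Result(3, "GRU", 0.001, 0.00275, 0.87547, 0.05246),
    Result(3, "RNN", 0.01, 0.01614, 0.26963, 0.12705),
    Result(3, "RNN", 0.001, 0.00432, 0.80448, 0.06573),
]


def best_by_mse(rows: List[Result]) -> Result:
    """Configuration with the lowest mean squared error."""
    return min(rows, key=lambda r: r.mse)


def best_by_r2(rows: List[Result]) -> Result:
    """Configuration with the highest coefficient of determination."""
    return max(rows, key=lambda r: r.r2)


def rmse_mismatches(rows: List[Result], tol: float = 1e-3) -> List[Result]:
    """Rows whose reported RMSE differs from sqrt(MSE) by more than tol (rounding slack)."""
    return [r for r in rows if abs(r.rmse - math.sqrt(r.mse)) > tol]
```

On these values both rankings select the two-hidden-layer GRU with learning rate 0.01 (MSE 0.00215, R-squared 0.90228), and every row passes the consistency check. Applied to the last results table, the same check flags the three-hidden-layer RNN at learning rate 0.01: its MSE of 0.00339 implies an RMSE of about 0.0582 rather than the listed 0.05492, and its R-squared, RMSE and MAE repeat those of the one-hidden-layer RNN row in that table, which may indicate a transcription error.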

| Learning Rate | Model | MSE | R-Squared | RMSE | MAE |
|---|---|---|---|---|---|
| One hidden layer | | | | | |
| 0.01 | LSTM | 0.00295 | 0.86627 | 0.05436 | 0.04305 |
| 0.001 | LSTM | 0.00822 | 0.62783 | 0.09069 | 0.07237 |
| 0.01 | GRU | 0.00319 | 0.85533 | 0.05654 | 0.04119 |
| 0.001 | GRU | 0.00300 | 0.86413 | 0.05479 | 0.04042 |
| 0.01 | RNN | 0.00303 | 0.86273 | 0.05508 | 0.04251 |
| 0.001 | RNN | 0.00320 | 0.86399 | 0.05489 | 0.04171 |
| Two hidden layers | | | | | |
| 0.01 | LSTM | 0.0030 | 0.8600 | 0.0556 | 0.0436 |
| 0.001 | LSTM | 0.0215 | 0.0263 | 0.1466 | 0.1171 |
| 0.01 | GRU | 0.0035 | 0.8378 | 0.0598 | 0.0444 |
| 0.001 | GRU | 0.0029 | 0.8672 | 0.0541 | 0.0399 |
| 0.01 | RNN | 0.0040 | 0.8148 | 0.0639 | 0.0522 |
| 0.001 | RNN | 0.0031 | 0.8587 | 0.0558 | 0.0429 |
| Three hidden layers | | | | | |
| 0.01 | LSTM | 0.02191 | 0.00837 | 0.14804 | 0.11889 |
| 0.001 | LSTM | 0.02224 | −0.0066 | 0.14916 | 0.11908 |
| 0.01 | GRU | 0.00405 | 0.81659 | 0.06366 | 0.04953 |
| 0.001 | GRU | 0.00301 | 0.86373 | 0.05487 | 0.04083 |
| 0.01 | RNN | 0.00908 | 0.58907 | 0.09530 | 0.08068 |
| 0.001 | RNN | 0.00329 | 0.85094 | 0.05739 | 0.04482 |

| Learning Rate | Model | MSE | R-Squared | RMSE | MAE |
|---|---|---|---|---|---|
| One hidden layer | | | | | |
| 0.01 | LSTM | 0.00349 | 0.84209 | 0.05907 | 0.04313 |
| 0.001 | LSTM | 0.00350 | 0.84130 | 0.05922 | 0.04489 |
| 0.01 | GRU | 0.00270 | 0.87749 | 0.05203 | 0.03904 |
| 0.001 | GRU | 0.00354 | 0.83976 | 0.05951 | 0.04310 |
| 0.01 | RNN | 0.00329 | 0.85114 | 0.05735 | 0.04446 |
| 0.001 | RNN | 0.00335 | 0.84833 | 0.05789 | 0.04609 |
| Two hidden layers | | | | | |
| 0.01 | LSTM | 0.0080 | 0.6367 | 0.0895 | 0.0749 |
| 0.001 | LSTM | 0.0039 | 0.8216 | 0.0627 | 0.0493 |
| 0.01 | GRU | 0.0026 | 0.8804 | 0.0513 | 0.0378 |
| 0.001 | GRU | 0.0032 | 0.8520 | 0.0571 | 0.0410 |
| 0.01 | RNN | 0.0046 | 0.7915 | 0.0678 | 0.0562 |
| 0.001 | RNN | 0.0046 | 0.7889 | 0.0683 | 0.0556 |
| Three hidden layers | | | | | |
| 0.01 | LSTM | 0.00422 | 0.80874 | 0.06501 | 0.04828 |
| 0.001 | LSTM | 0.00683 | 0.69075 | 0.08267 | 0.06936 |
| 0.01 | GRU | 0.00288 | 0.86941 | 0.05372 | 0.04146 |
| 0.001 | GRU | 0.00334 | 0.84857 | 0.05785 | 0.04172 |
| 0.01 | RNN | 0.01341 | 0.39317 | 0.11581 | 0.09153 |
| 0.001 | RNN | 0.00961 | 0.56479 | 0.09807 | 0.08433 |

| Learning Rate | Model | MSE | R-Squared | RMSE | MAE |
|---|---|---|---|---|---|
| One hidden layer | | | | | |
| 0.01 | LSTM | 0.00416 | 0.81143 | 0.06455 | 0.05274 |
| 0.001 | LSTM | 0.01577 | 0.28612 | 0.12561 | 0.10147 |
| 0.01 | GRU | 0.00292 | 0.86781 | 0.05405 | 0.04006 |
| 0.001 | GRU | 0.00959 | 0.56599 | 0.09794 | 0.07986 |
| 0.01 | RNN | 0.00301 | 0.86348 | 0.05492 | 0.04120 |
| 0.001 | RNN | 0.00586 | 0.73461 | 0.07658 | 0.06180 |
| Two hidden layers | | | | | |
| 0.01 | LSTM | 0.0060 | 0.7262 | 0.0777 | 0.0603 |
| 0.001 | LSTM | 0.0188 | 0.1487 | 0.1371 | 0.1092 |
| 0.01 | GRU | 0.0029 | 0.8676 | 0.0540 | 0.0397 |
| 0.001 | GRU | 0.0129 | 0.4138 | 0.1138 | 0.0923 |
| 0.01 | RNN | 0.0034 | 0.8426 | 0.0589 | 0.0441 |
| 0.001 | RNN | 0.0132 | 0.3993 | 0.1152 | 0.0912 |
| Three hidden layers | | | | | |
| 0.01 | LSTM | 0.02018 | 0.03696 | 0.14205 | 0.11361 |
| 0.001 | LSTM | 0.02253 | −0.0198 | 0.15013 | 0.11925 |
| 0.01 | GRU | 0.00292 | 0.86749 | 0.05411 | 0.04024 |
| 0.001 | GRU | 0.01215 | 0.45025 | 0.11022 | 0.09018 |
| 0.01 | RNN | 0.00339 | 0.86348 | 0.05492 | 0.04120 |
| 0.001 | RNN | 0.01373 | 0.37867 | 0.11718 | 0.09260 |

| Reference | Algorithms | Result | Location |
|---|---|---|---|
| [29] | NN with PSO algorithm | MAPE = 0.0338, MAE = 0.02191. | Iran |
| [30] | EMD-GRU-FS | Accuracy on four data sets was 96.9%, 95.31%, 95.72%, and 97.17%, respectively. | Public |
| [31] | LSTM with EMD | MAPE = 2.6249% in the winter and 2.3047% in the summer. | Public |
| [32] | VMD, LSTM with optimizer BOA, SVR, LR, RF, and EMD-LSTM | The LSTM with the BOA optimizer performed best, with MAPE = 0.4186%. | China |
| [33] | VMD, TCN | MAPE for 6-, 12-, and 24-step forecasting is 0.274%, 0.326%, and 0.405%, respectively. | Global Energy Forecasting Competition 2014 |
| [35] | ANN based on the Levenberg-Marquardt and Newton algorithms | The model fits well, explaining 90% of the variance in power consumption from the independent variable. | Public |
| [36] | NARX and ANN | MAPE and RMSE of 3.16% and 270.60, respectively. | Algeria |
| [37] | NARX, SVR | SVR outperformed the NARX neural network; for day-ahead, week-ahead, and month-ahead forecasting, the average prediction accuracy is approximately 91%, 88–90%, and 85–87%, respectively. | Public |
| [38] | MFRFNN | RMSE for wind speed, Google stock price, and air quality index prediction decreased by 35.12%, 13.95%, and 49.62%, respectively. | Real datasets |
| [39] | EANN, BANN | EANN performed best, with RMSE = 296.3437 and MAPE = 15.9396; BANN gave RMSE = 309.6022 and MAPE = 16.236. | France |
| [40] | RNN | MAE = 0.24 and 0.12 for 50 h ago and one hour ago, respectively. | London |
| [41] | LSTM, ISCOA | STLF gives MAE = 0.0733, MAPE = 5.1882, MSE = 0.0115, RMSE = 0.1076. | India (Mumbai) |
| [42] | DLSF, SVM | DLSF outperformed SVM, with accuracies of 90% and 70%, respectively. | China |
| [43] | PDRNN, ARIMA, SVR, and RNN | PDRNN outperforms ARIMA, SVR, and RNN, with RMSE (kWh) = 0.4505, 0.5593, 0.518, and 0.528, respectively. | Ireland |
| [45] | CNN, SVM, ANN, and LSTM | The proposed CNN outperforms SVM, ANN, and LSTM, with RMSE = 0.677, 0.814, 0.691, and 0.7, respectively. | Public |
| [46] | NN with Bayesian networks | MAE is 1.0085, and MAAPE is 0.5035. | Ireland |
| [48] | LSTM, BPNN, KNN, | The LSTM with ELM performed best, with MAPE = 8.18%. | China |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Abumohsen, M.; Owda, A.Y.; Owda, M. Electrical Load Forecasting Using LSTM, GRU, and RNN Algorithms. Energies 2023, 16, 2283. https://doi.org/10.3390/en16052283
