Abstract

Lithium-ion batteries are widely used in electric vehicles, energy storage power stations, and many other applications. Accurate and reliable monitoring of battery health status and remaining capacity is key to establishing a lithium-ion cell management system. In this paper, a deep neural network whose hyperparameters are tuned by a Bayesian optimization algorithm is constructed to estimate the whole charging curve of the battery using partial charging curve data as input. A 0.74 Ah battery is used for experiments, and the effect of different input data lengths is also investigated to check the flexibility of the approach. The results show that, using only 20 points of partial charging data as input, the whole charging profile of a cell can be accurately predicted with a root-mean-square error (RMSE) of less than 19.16 mAh (2.59% of the nominal capacity of 0.74 Ah) and a mean absolute percentage error (MAPE) of less than 1.84%. In addition, critical information including battery state of charge (SOC) and state of health (SOH) can be extracted in this way to provide a basis for safe and long-lasting battery operation.

1. Introduction

Lithium-ion batteries are used in a variety of applications, including electric vehicles, portable electronics, and energy storage stations [1]. Capacity fade is a well-recognized measure of battery aging, and it severely impacts the range of battery-powered devices [2]. Meanwhile, critical battery states such as state of charge and state of health affect the energy balance and economic operation of the system, for instance, the driving range and operating cost of electric vehicles [3,4]. Accurately assessing the maximum capacity of a battery is important for the design of the battery and its management strategy, and it also influences the development of a battery service plan and the selection of a battery pack manufacturer [5]. Assessing the maximum capacity of a battery requires a complete charge or discharge curve from the lower voltage limit to the upper voltage limit [6]. In electric vehicles and some portable electronics, however, complete charge and discharge profiles are seldom obtained, because the charging process may start in a different state or at a different voltage, and it may not end immediately after reaching a fully charged state. Analysis of actual EV battery management data shows that the battery management system (BMS) records only a small portion of the charging or discharging curve [7]. Traditional model-based approaches [8] can accurately simulate the state of a lithium-ion battery. Nevertheless, it is extremely difficult to design a perfect estimation model for lithium-ion batteries.

As artificial intelligence technology continues to evolve, machine learning and neural network methods are increasingly used for various cell state estimation tasks, with good results. An optimized machine learning technique is proposed in [9] to improve Li-ion battery state of charge estimation. Gong et al. [10] proposed a deep neural network (DNN) model consisting of a convolutional layer, an ultra-lightweight subspace attention mechanism layer, a simple recurrent unit layer, and a dense layer. The model takes measured battery signals such as voltage, current, and temperature as inputs and outputs state of charge (SOC) estimates with high accuracy. Chen et al. [11] proposed a state of charge estimation method based on long short-term memory (LSTM) network modeling, which uses an LSTM network to estimate the SOC from voltage, current, operating temperature, and state of health, and then smooths the estimation results using an adaptive H-infinity filter, providing higher estimation accuracy. Jiao et al. [12] proposed a GRU-based neural network and used the momentum gradient algorithm to improve the training speed and optimization process of the network by integrating the historical and current trends of weight changes. The measured voltages and currents are used as inputs and the SOC estimates are the outputs. Noise processing was also applied to the sampled data to improve the overall SOC estimation accuracy. Liu et al. [13] proposed a temporal convolutional network (TCN) model that maps voltage, current, and temperature directly to the SOC of the battery and shows excellent capability in processing battery time-series data through its specially designed dilated causal convolution structure. The trained model has good accuracy. However, all these methods require data collection in a specific voltage range.
The approach presented in this article allows data gathered in any voltage window of the charging profile to be used as input to the neural network, which improves the flexibility of estimation while still achieving the desired estimation accuracy.

The main contributions of this paper are as follows. For Li-ion batteries, a Bayesian-CNN model for estimating the complete charging profile is established using only partial charging data with a voltage width of 200 mV (20 data points) as input. To prevent overfitting and improve the generalization ability of the CNN, its structure, activation function, and other components are improved, and the optimal hyperparameters are selected using Bayesian optimization. Critical battery states such as SOC and SOH are extracted from the estimated charging curves. The simulation results verify that the proposed Bayesian-CNN algorithm can accurately and efficiently estimate the complete charging curves and battery states of Li-ion batteries. All development was carried out in Python (version 3.10.12).

The remainder of the paper is organized as follows. Section 2 introduces the principles of the Bayesian optimization method and the CNN and establishes the Bayesian-CNN estimation model; Section 3 performs simulations using the optimized Bayesian-CNN model, analyzes the results under different voltage windows, and compares them with other machine learning methods; Section 4 extracts key state information such as SOC and SOH from the estimated complete charging curve; finally, the conclusions are presented in Section 5.

2. Research Methods

2.1. Bayesian Optimization

The success of neural networks relies heavily on the choice of hyperparameters. Determining which hyperparameters to tune, defining their value ranges, and then selecting the best set of values requires a careful design and experimental process. For complex neural network structures with a very large hyperparameter space, trying all possible combinations requires a great deal of computation and time [14]. Random search (RS) [15] and grid search (GS) [16] are the two most commonly used hyperparameter optimization methods. In contrast, Bayesian optimization builds a surrogate function (a probabilistic model) from past evaluations of the objective function in order to locate the value that minimizes the objective function. It differs from RS and GS in that it consults previous evaluations when choosing the next set of hyperparameters to try [17]. As a result, many unproductive evaluations can be avoided, which yields a large saving in time and space.

Bayesian optimization is an approximate global optimization algorithm. It implements hyperparameter optimization by estimating the posterior distribution of the objective function according to Bayes' theorem, Equation (1), and it finds the hyperparameter combination that minimizes the objective function by constructing a mapping between the sample points and the corresponding objective function values.

$$p(\theta \mid D) = \frac{p(D \mid \theta)\, p(\theta)}{p(D)} \tag{1}$$

In Equation (1), $\theta$ represents the unknown objective function, and $D$ denotes the set of sampled parameters and their observations. $p(\theta \mid D)$ and $p(\theta)$ denote the posterior probability and the prior probability of $\theta$, respectively. $p(D \mid \theta)$ indicates the probability distribution of $D$ given $\theta$ (the likelihood), and $p(D)$ stands for the marginal probability distribution of $D$.

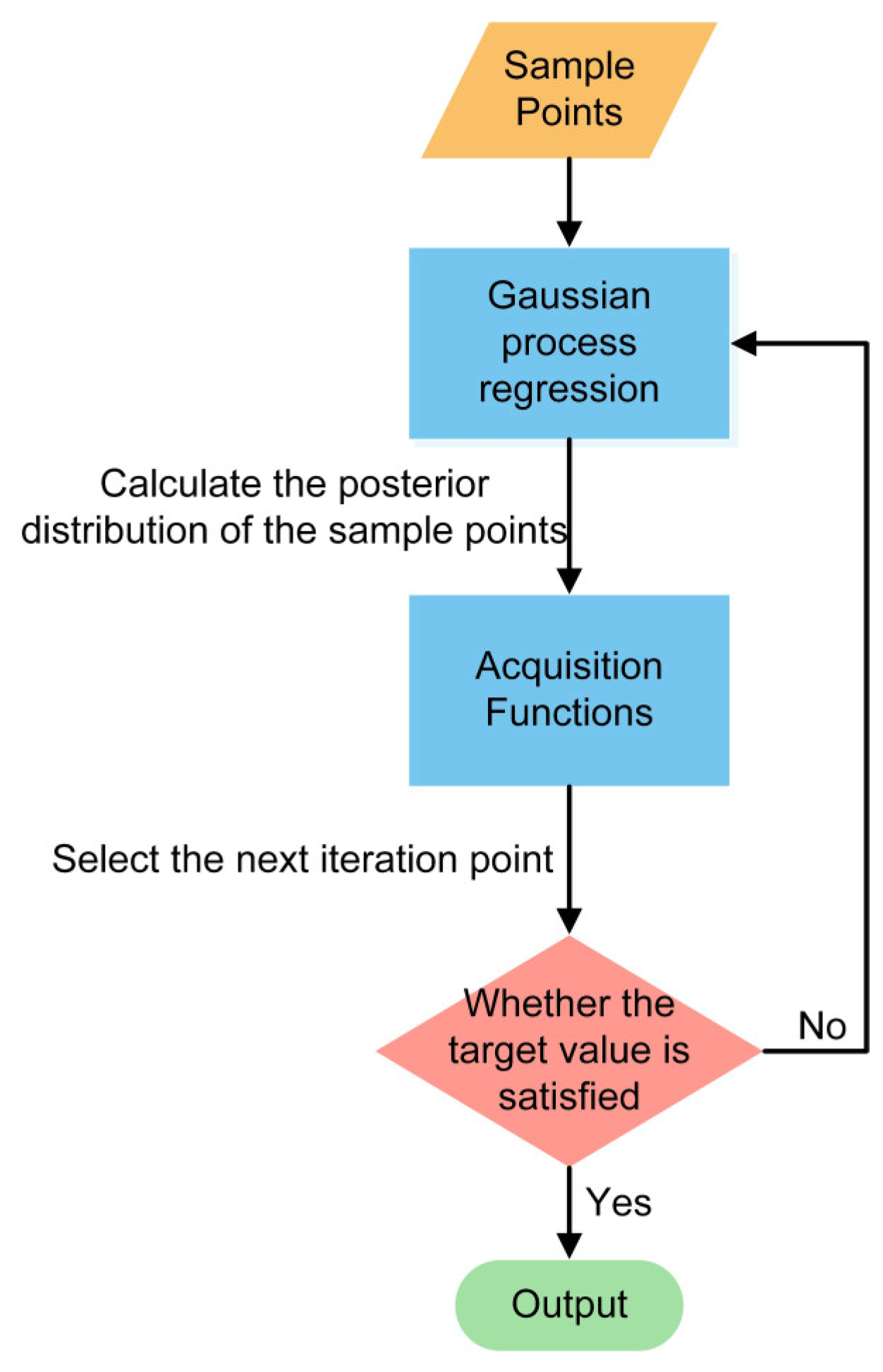

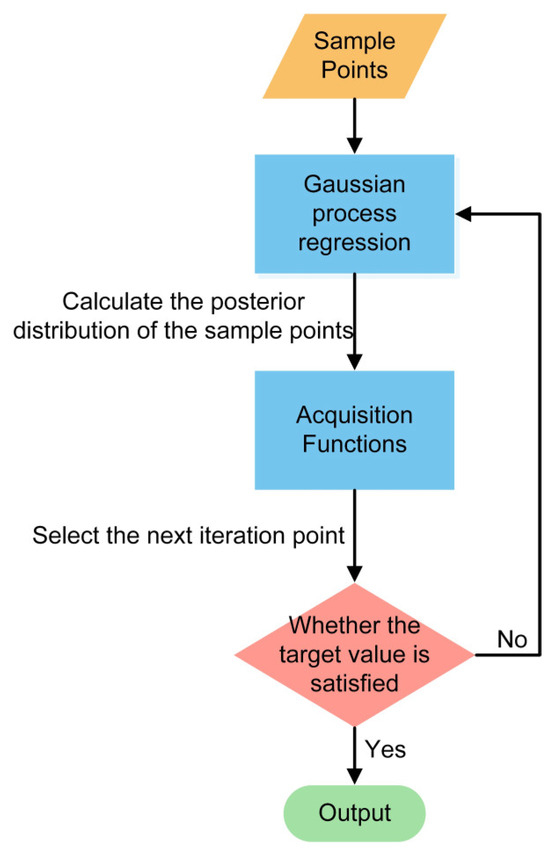

A brief flow of Bayesian optimization is illustrated in Figure 1. Bayesian optimization has two core components. The first is the probabilistic surrogate model, which models the probability distribution of the unknown objective function. During Bayesian optimization, the probabilistic surrogate model is updated by adding sample points and continuously correcting the prior probabilities [18]. One widely used probabilistic surrogate model is the Gaussian process. Since a Gaussian process is a collection of random variables that follow a normal distribution, the normal distribution of the objective value at any candidate point can be estimated, so that the next sample point can be chosen by the acquisition function. The hyperparameter optimization in this paper models the objective function with a Gaussian process. The second core component is the acquisition function, which is constructed from the posterior probability distribution and uses the existing information to find the next sample point. The most important role of the acquisition function is to balance exploration and exploitation in Bayesian optimization and to keep the search from falling into a local optimum [18].
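The two components described above can be sketched in a few lines. The example below is a minimal illustration and not the authors' implementation: it assumes a one-dimensional search space on [0, 1], a zero-mean Gaussian process with a squared-exponential kernel, and the expected-improvement acquisition function, none of which are specified in the paper. The names `toy_loss`, `bayesian_minimize`, and all numeric settings are hypothetical.

```python
import math
import numpy as np

def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between two 1-D arrays of points."""
    diff = a[:, None] - b[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)

def gp_posterior(x_train, y_train, x_query, noise=1e-6):
    """Posterior mean and standard deviation of a zero-mean, unit-variance GP."""
    k_tt = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_tq = rbf_kernel(x_train, x_query)
    weights = np.linalg.solve(k_tt, k_tq)          # K^-1 k(x_train, x_query)
    mean = weights.T @ y_train
    var = 1.0 - np.sum(k_tq * weights, axis=0)     # prior variance is 1
    return mean, np.sqrt(np.clip(var, 1e-12, None))

def expected_improvement(mean, std, best):
    """Expected improvement over the best observed value (minimization)."""
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return (best - mean) * cdf + std * pdf

def bayesian_minimize(objective, n_init=3, n_iter=12, seed=0):
    """Alternate between refitting the surrogate and sampling where EI is largest."""
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, 201)              # candidate sample points
    x = rng.uniform(0.0, 1.0, size=n_init)         # initial random evaluations
    y = np.array([objective(v) for v in x])
    for _ in range(n_iter):
        y_mean, y_std = y.mean(), y.std() + 1e-12  # standardize observations
        mean, std = gp_posterior(x, (y - y_mean) / y_std, grid)
        ei = expected_improvement(mean, std, (y.min() - y_mean) / y_std)
        x_next = grid[int(np.argmax(ei))]          # acquisition picks the next point
        x = np.append(x, x_next)
        y = np.append(y, objective(x_next))
    best = int(np.argmin(y))
    return x[best], y[best]

def toy_loss(v):
    """Hypothetical stand-in for a validation loss over one hyperparameter."""
    return (v - 0.3) ** 2 + 0.05 * math.sin(15.0 * v)

best_x, best_y = bayesian_minimize(toy_loss)
```

In a real hyperparameter search, each call to the objective would train and validate a network, so the small number of evaluations is where the saving over grid or random search would come from.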

Figure 1.

A brief Bayesian optimization process.

2.2. Neural Network Architecture and Description

2.2.1. Principle

In this article, we adopt a convolutional neural network (CNN) architecture, whose local connectivity and weight sharing [19] significantly reduce the number of network parameters and thereby overcome the parameter explosion problem of traditional neural networks. Local connectivity means that each neuron is connected only to a small local group of neurons in the previous layer, which makes the number of parameters substantially lower. At the same time, local connectivity lets each neuron in a later layer process information from only a small number of related neurons in the former layer, so that each neuron is more targeted and the division of labor between neurons is more explicit. Weight sharing means that the CNN groups the neurons in the same layer according to the correlation of their connections and lets the same group of neurons adopt the same weights through the convolution operation, which further reduces the number of network parameters substantially. The convolution operation is shown in Equation (2).

$$H_j^{l} = f\left(X^{l-1} * W_j^{l} + b_j^{l}\right) \tag{2}$$

where $H_j^{l}$ and $W_j^{l}$ denote the $j$-th convolutional plane and convolutional kernel (also called weights) on the $l$-th layer, respectively, $X^{l-1}$ is the input to the layer, $b_j^{l}$ is the offset, and $f$ is the activation function. Suppose the sizes of $X^{l-1}$ and $W_j^{l}$ are $M \times N$ and $m \times n$, respectively; moreover, $M \ge m$, $N \ge n$, and the sliding step is $s$. Then all elements of the $j$-th convolutional plane are given by Equations (3)–(5), where the convolutional layer consists of all the convolutional planes $H_j^{l}$.
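To make the roles of the kernel size and the sliding step concrete, the following sketch applies one kernel to one input plane. It is an illustration under stated assumptions rather than the paper's layer: it uses no padding (the network described later uses same padding), it takes an input of size M × N, a kernel of size m × n, and an integer stride, and the function name `conv2d_valid` is hypothetical. Under these assumptions the output has floor((M - m) / stride) + 1 rows and floor((N - n) / stride) + 1 columns.

```python
import numpy as np

def conv2d_valid(x, w, b=0.0, stride=1, activation=lambda z: z):
    """Apply one kernel to one 2-D input plane without padding.

    x: input plane of shape (M, N); w: kernel of shape (m, n), m <= M, n <= N.
    Like most deep learning libraries, this computes a cross-correlation
    (the kernel is not flipped).
    """
    M, N = x.shape
    m, n = w.shape
    out_rows = (M - m) // stride + 1
    out_cols = (N - n) // stride + 1
    out = np.empty((out_rows, out_cols))
    for p in range(out_rows):
        for q in range(out_cols):
            patch = x[p * stride:p * stride + m, q * stride:q * stride + n]
            # Weight sharing: the same w and b are reused at every position.
            out[p, q] = np.sum(patch * w) + b
    return activation(out)

x = np.arange(16, dtype=float).reshape(4, 4)   # toy 4 x 4 input plane
w = np.ones((2, 2))                            # toy 2 x 2 kernel
y = conv2d_valid(x, w, b=1.0, stride=2)        # 2 x 2 output plane
```

The whole 4 × 4 plane is processed with only four weights and one offset, which is the parameter saving that local connectivity and weight sharing provide.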

2.2.2. Architecture

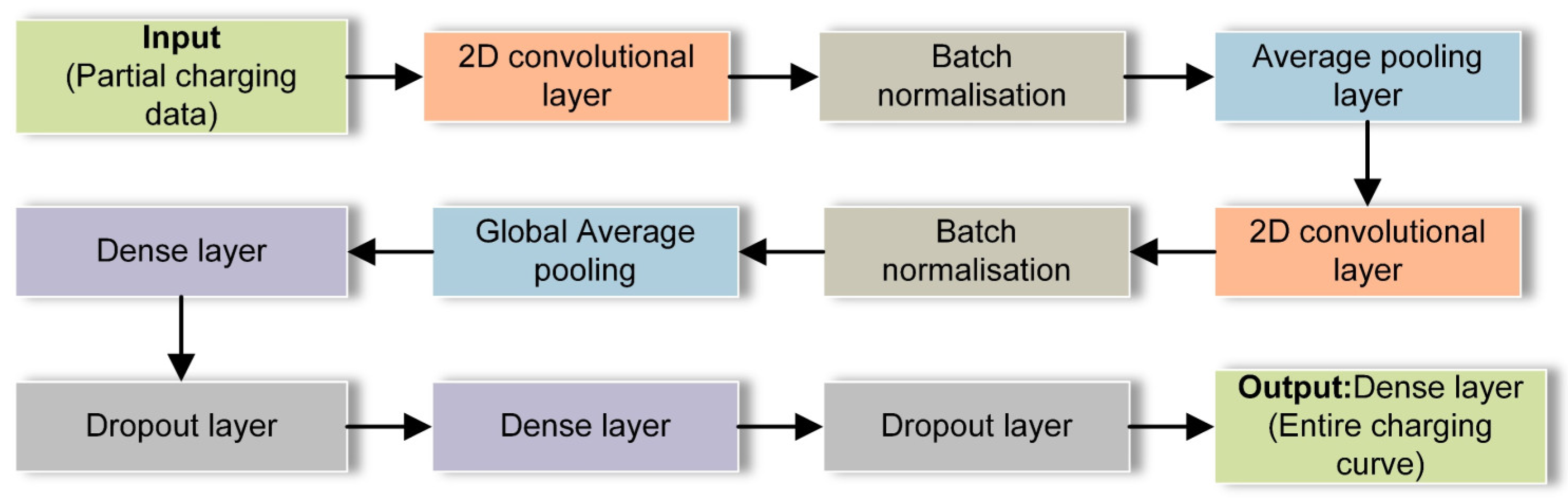

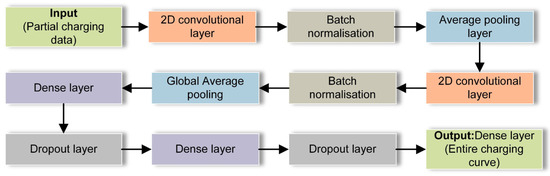

The developed deep neural network, illustrated in Figure 2, is composed of five main layer types, namely the 2D Convolution Layer, Batch Normalization Layer, Global Average Pooling Layer, Dense Layer, and Dropout Layer. The 2D Convolution Layer uses same padding to reduce the loss of boundary information in the convolution operation. Meanwhile, the Mish activation function, stated in Equation (6), is selected for the convolutional layer; it is smooth at every point and offers a regularization effect that reduces the risk of overfitting.

$$\mathrm{Mish}(x) = x \tanh\left(\ln\left(1 + e^{x}\right)\right) \tag{6}$$
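As a concrete reference for the activation described above, the following sketch evaluates Mish, that is, x multiplied by tanh(softplus(x)), in plain Python. The function names are illustrative, and the numerically stable form of softplus is an implementation choice made here, not something taken from the paper.

```python
import math

def softplus(x):
    """Numerically stable ln(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def mish(x):
    """Mish activation: smooth, non-monotonic, and unbounded above."""
    return x * math.tanh(softplus(x))

# Small negative inputs give small negative outputs instead of a hard zero,
# and large positive inputs pass through almost unchanged.
samples = [mish(v) for v in (-1.0, 0.0, 1.0, 20.0)]
```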

Figure 2.

The deep neural network structure designed in this study.

In addition, the he_uniform initialization method is introduced to improve the convergence rate and performance of the network and to further alleviate the problem of exploding and vanishing gradients. Finally, an L2 regularizer is added to regularize the weights of the neural network. It prevents overfitting by penalizing the sum of the squares of the elements of the weight matrix, which contributes to better generalization and enhances the performance and stability of the model.
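The two ingredients in this paragraph can be written out directly. The sketch below is a plain-Python illustration and not the framework code used in the paper: it assumes the usual definition of He uniform initialization (weights drawn from a uniform distribution on [-limit, limit] with limit = sqrt(6 / fan_in)) and an L2 penalty equal to a factor times the sum of squared weights. The layer sizes and the factor value are hypothetical.

```python
import math
import random

def he_uniform(fan_in, fan_out, seed=0):
    """Draw a fan_in x fan_out weight matrix from U(-limit, limit)."""
    rng = random.Random(seed)
    limit = math.sqrt(6.0 / fan_in)
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)]
            for _ in range(fan_in)]

def l2_penalty(weights, factor):
    """L2 regularization term: factor times the sum of squared weights."""
    return factor * sum(w * w for row in weights for w in row)

weights = he_uniform(fan_in=24, fan_out=8)     # illustrative layer size
penalty = l2_penalty(weights, factor=1e-3)     # illustrative factor, added to the loss
```

During training, the penalty would be added to the data loss, so larger weights cost more and the optimizer is nudged toward smaller ones.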

Batch normalization is a technique commonly used in deep learning to increase the speed of model convergence and reduce the risk of overfitting. Specifically, the Batch Normalization Layer computes the mean and standard deviation of each batch of incoming data and uses them to normalize every input, which makes the distribution of the layer inputs more stable. This helps the model learn from the data and also reduces its sensitivity to the specific initialization method. The process is given in Equations (7)–(10).

$$\mu = \frac{1}{m}\sum_{i=1}^{m} x_i \tag{7}$$

$$\sigma^{2} = \frac{1}{m}\sum_{i=1}^{m}\left(x_i - \mu\right)^{2} \tag{8}$$

$$\hat{x}_i = \frac{x_i - \mu}{\sqrt{\sigma^{2} + \varepsilon}} \tag{9}$$

$$y_i = \gamma \hat{x}_i + \beta \tag{10}$$

where $x_i$ is the $i$-th input to the Batch Normalization Layer, $m$ is the batch size, and $y_i$ is the output of the Batch Normalization Layer. $\gamma$ and $\beta$ are two learnable parameters, and $\varepsilon$ is a constant set to 0.001 to ensure that the denominator is positive [20].
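The normalization step can be checked on a small batch. The sketch below is a minimal training-mode illustration for a single feature, assuming the standard batch normalization formulas (batch mean, biased batch variance, a scale gamma, and a shift beta) with the constant 0.001 mentioned in the text; the function name and the toy batch are hypothetical, and running statistics for inference are omitted.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-3):
    """Normalize one feature across a batch, then scale and shift."""
    m = len(batch)
    mean = sum(batch) / m
    var = sum((x - mean) ** 2 for x in batch) / m      # biased batch variance
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]

out = batch_norm([1.0, 2.0, 3.0, 4.0])                 # zero mean, near-unit variance
shifted = batch_norm([1.0, 3.0], gamma=2.0, beta=1.0)  # learnable scale and shift
```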

The Global Average Pooling Layer is extensively used for compressing the features extracted by the convolutional layer [20]. For an input sequence $x_1, \ldots, x_N$ of length $N$, the corresponding output of the global average pooling layer is given by Equation (11).

$$y = \frac{1}{N}\sum_{i=1}^{N} x_i \tag{11}$$

It reduces the dimension of the input data, thereby decreasing the number of parameters and the computational cost of the model, and it reduces the risk of overfitting and improves the generalization of the model.

The Dense Layer uses the ReLU activation function, shown in Equation (12):

$$f(x) = \max(0, x) \tag{12}$$

where the derivative of $f(x)$ is 1 or 0 (at $x = 0$, the derivative may be defined as either 0 or 1); this alleviates the vanishing gradient problem [20]. Moreover, ReLU leads to zero output for some neurons, which results in a sparse network and decreases the interdependence of parameters, alleviating overfitting. The Dropout Layer, a regularization technique used in deep neural networks to avoid overfitting, is added after the Dense Layer. It operates by randomly dropping a certain percentage of the neurons in that layer in each training iteration, which obliges the remaining neurons to learn more independent features and reduces the network's over-reliance on particular neurons. The final output layer is also a Dense Layer, whose dimension is equal to the length of the regression target. This layer does not use an activation function and only outputs the estimates.
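The pooling, ReLU, and dropout operations described in this subsection are simple enough to write out. The sketch below is illustrative only and uses hypothetical function names. It assumes the common "inverted dropout" convention, in which surviving activations are rescaled by 1 / (1 - rate) during training so that no rescaling is needed at inference; the paper does not state which convention its framework applies.

```python
import random

def global_average_pool(sequence):
    """Collapse a feature sequence of length N into its mean."""
    return sum(sequence) / len(sequence)

def relu(x):
    """Rectified linear unit: passes positive inputs, zeroes the rest."""
    return max(0.0, x)

def dropout(values, rate, rng, training=True):
    """Inverted dropout: zero each value with probability `rate`, rescale survivors."""
    if not training or rate == 0.0:
        return list(values)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]

pooled = global_average_pool([1.0, 2.0, 3.0, 6.0])
activated = [relu(v) for v in (-2.0, 0.0, 1.5)]
dropped = dropout([1.0] * 1000, rate=0.5, rng=random.Random(0))
```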

2.3. Neural Network Training under Bayesian Optimization

There are 10 hyperparameters to be determined, covering the number of CNN filters, the size of the convolutional kernel, the sliding step, the L2 regularization factor, and the number of neurons in the fully connected layer. Their ranges are shown in Table 1. The remaining parameters are set empirically: the training loss function is the mean squared error (MSE), the optimizer is Adam, the learning rate is 0.0001, the batch size is 512, and the proportion of the validation set is 35%.

Table 1.

Hyperparameters and their ranges.

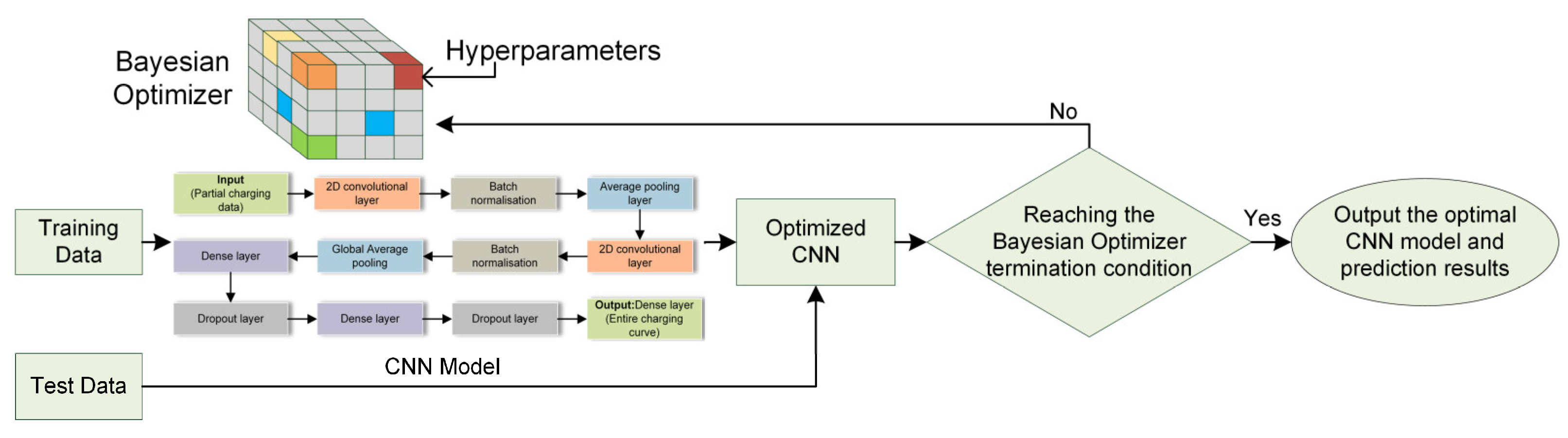

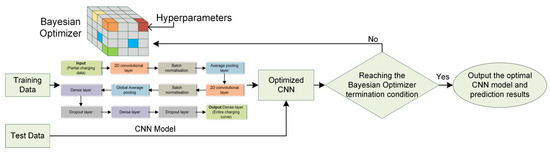

The flow of the CNN prediction model under Bayesian optimization is illustrated in Figure 3. The hyperparameter space $X$ can be considered as a hypercube [21], where each dimension is a hyperparameter. Any particular combination $x \in X$ identifies a single network $f_x$, which can be trained and then evaluated based on a loss function $L$. The goal is to find, as quickly as possible, the combination $x^{*}$ that minimizes the loss: $x^{*} = \arg\min_{x \in X} L(f_x)$.

Figure 3.

Bayesian optimization CNN prediction model process.

This paper uses data from the Oxford Battery Degradation Dataset 1, which consists of degradation test data from eight 0.74 Ah pouch cells whose voltage window ranges from 2.7 V to 4.2 V. The 1C constant-current charging curves recorded during cycling at 40 °C are used to characterize the charging of the battery in different states. The battery management system (BMS) samples the voltage and current during constant-current charging and then calculates the cell capacity as a function of voltage [22].

Because some cells cannot accurately reach the upper or lower voltage limits under certain circumstances, linear interpolation was used to extract the discrete charging profile over the voltage interval from 2.8 V to 4.19 V with a sampling interval of 10 mV, giving an output vector containing 140 elements [22]. The training dataset is composed of data from six cells, and the data from the other two cells form the test dataset, which is used for model evaluation.
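The resampling step can be illustrated as follows. This is a sketch under assumptions, not the authors' preprocessing code: it assumes a measured curve given as strictly increasing voltages with matching capacities, uses a synthetic linear curve in place of real measurements, and slices the resampled curve into 20-point windows as one plausible way to form partial-curve inputs. The function names and the toy data are hypothetical.

```python
def interpolate_curve(voltages, capacities, v_start=2.80, v_stop=4.19, step_mv=10):
    """Resample a charging curve onto a fixed voltage grid by linear interpolation."""
    n_points = round((v_stop - v_start) * 1000 / step_mv) + 1
    grid = [round(v_start + k * step_mv / 1000, 3) for k in range(n_points)]
    resampled = []
    j = 0
    for v in grid:
        # Advance to the measured segment that brackets the grid voltage.
        while j < len(voltages) - 2 and voltages[j + 1] < v:
            j += 1
        v0, v1 = voltages[j], voltages[j + 1]
        q0, q1 = capacities[j], capacities[j + 1]
        resampled.append(q0 + (q1 - q0) * (v - v0) / (v1 - v0))
    return grid, resampled

def sliding_windows(curve, length=20):
    """All contiguous partial curves of the given number of points."""
    return [curve[i:i + length] for i in range(len(curve) - length + 1)]

# Synthetic stand-in for one measured curve: 2.7 V to 4.2 V, 0 Ah to 0.74 Ah.
voltages = [2.7 + 0.05 * k for k in range(31)]
capacities = [0.74 * (v - 2.7) / 1.5 for v in voltages]

grid, resampled = interpolate_curve(voltages, capacities)
windows = sliding_windows(resampled, length=20)   # 200 mV windows at 10 mV spacing
```

With a 10 mV grid from 2.80 V to 4.19 V, the resampled curve has 140 points, and a 20-point window can start at 121 different positions.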

3. Empirical Analysis

3.1. Complete Charging Curve Estimation

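The optimal neural network hyperparameter combination obtained after Bayesian optimization is shown in Table 2. To assess the reliability of the prediction model, the root-mean-square error (RMSE) and the mean absolute percentage error (MAPE) were used, calculated as follows.

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^{2}} \tag{13}$$

$$\mathrm{MAPE} = \frac{1}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right| \times 100\% \tag{14}$$

where $y_i$ is the real value, $\hat{y}_i$ is the estimated value, and $n$ is the number of estimated samples.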
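Both metrics are straightforward to compute. The sketch below uses the standard definitions of RMSE and MAPE on a small made-up example and also shows how an RMSE in mAh converts to a percentage of the 0.74 Ah nominal capacity; the function names and the sample values are illustrative and are not results from the paper.

```python
import math

def rmse(actual, estimated):
    """Root-mean-square error between actual and estimated values."""
    n = len(actual)
    return math.sqrt(sum((a - e) ** 2 for a, e in zip(actual, estimated)) / n)

def mape(actual, estimated):
    """Mean absolute percentage error, in percent (actual values must be nonzero)."""
    n = len(actual)
    return 100.0 * sum(abs((a - e) / a) for a, e in zip(actual, estimated)) / n

def percent_of_nominal(error_mah, nominal_mah=740.0):
    """Express a capacity error as a percentage of the nominal capacity."""
    return 100.0 * error_mah / nominal_mah

actual = [0.10, 0.20, 0.40]        # made-up capacities in Ah
estimated = [0.11, 0.19, 0.40]
example_rmse = rmse(actual, estimated)
example_mape = mape(actual, estimated)
share = percent_of_nominal(19.16)  # 19.16 mAh relative to 740 mAh
```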

Table 2.

Optimized neural network hyperparameters.

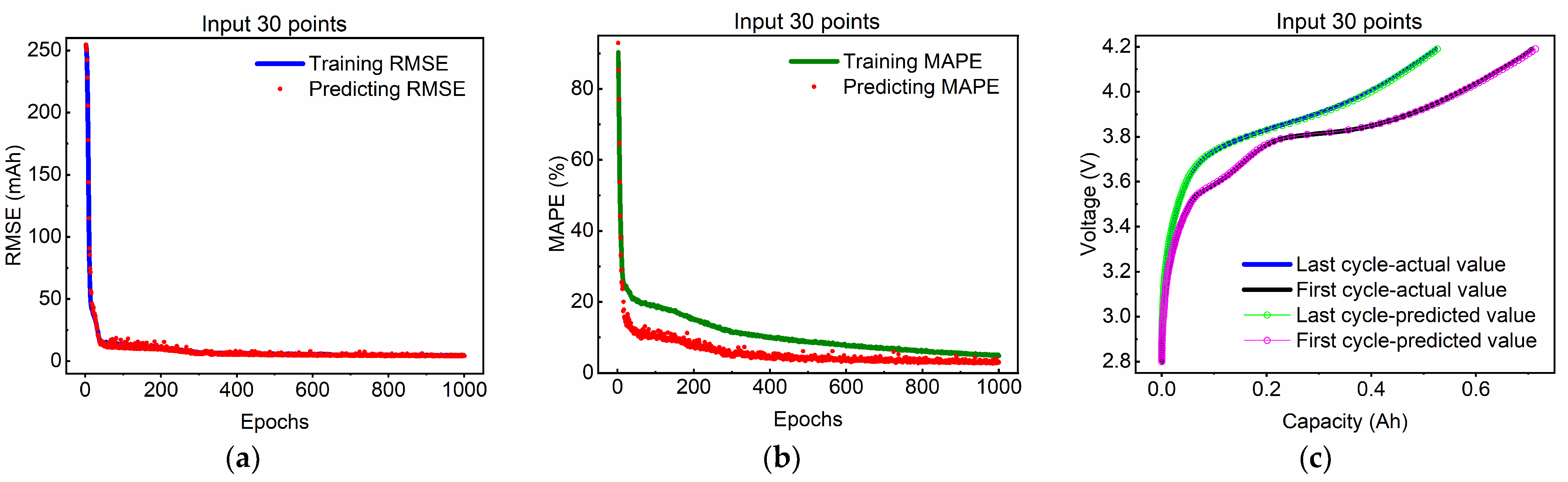

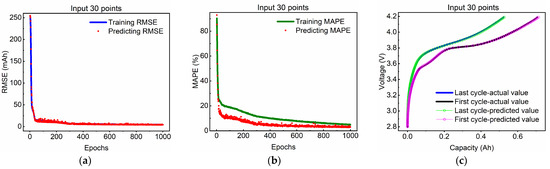

Following Reference [22], we first considered charging profile prediction for an input window of 300 mV (30 input elements). The results suggest that the model can accurately predict the complete charging curve with an RMSE of less than 19.3 mAh (2.608% of the nominal capacity of 0.74 Ah) and a MAPE of less than 1.77%. To examine the estimated results intuitively, the best estimated charging curves for the first and last cycles of the test dataset were also plotted. As can be seen in Figure 4, the estimation results are consistent with the input starting voltage over the entire cell voltage range.

Figure 4.

The model evaluation metrics and training results for voltage window of 300 mV: (a) RMSE; (b) MAPE; (c) estimation curves.

3.2. Different Input Data Length Validation

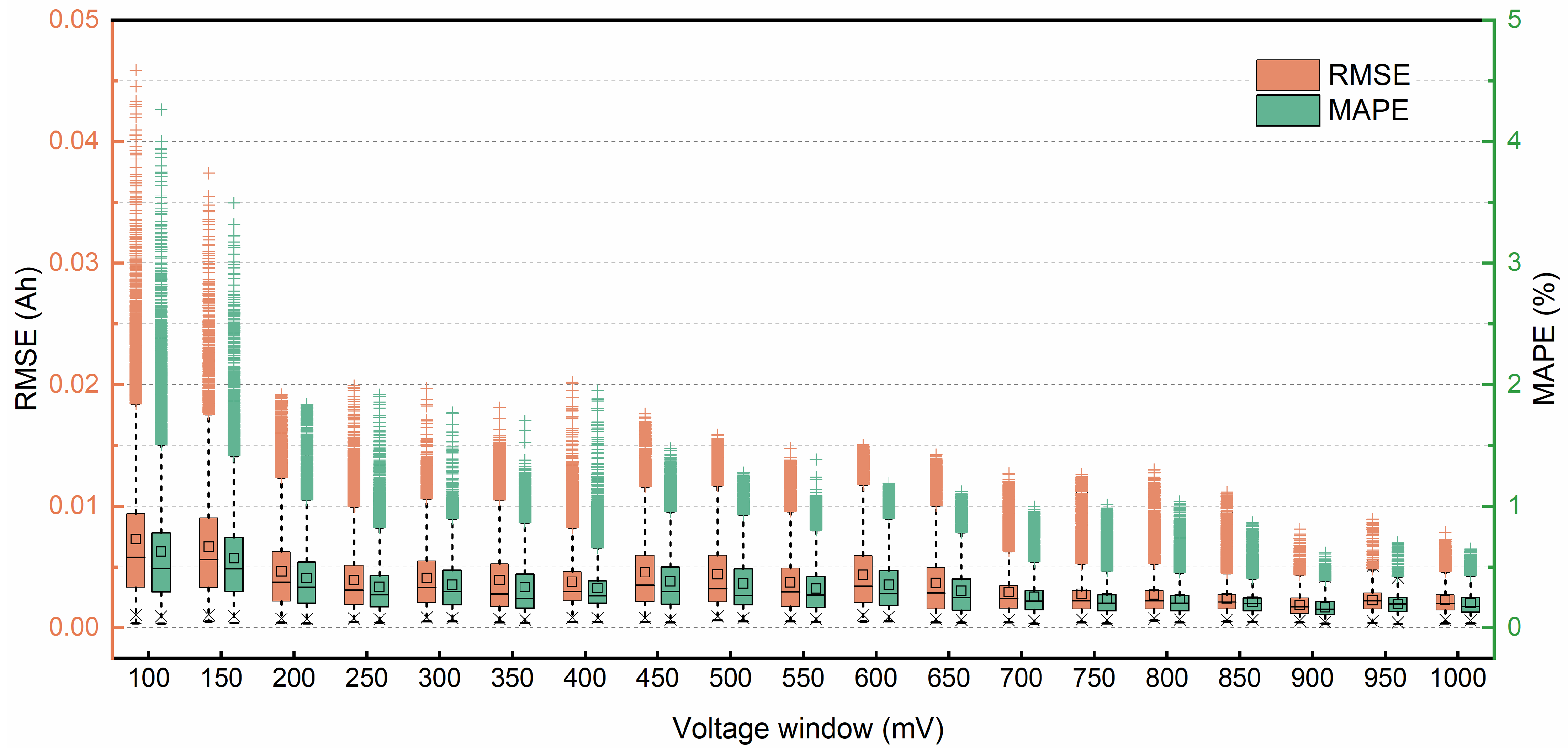

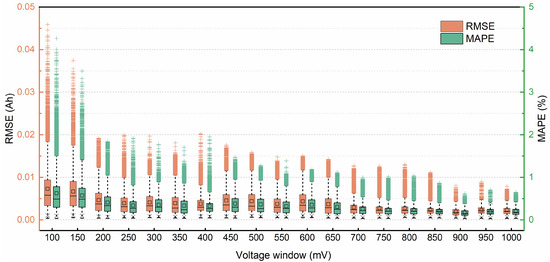

In general, a wider window decreases the overall root-mean-square error, eliminates outliers, and narrows the spread of the error. In this paper, the effect of the input data length is investigated by varying the window size from 100 mV to 1000 mV in steps of 50 mV, and the results are shown in Figure 5. When the window size exceeds 300 mV, further increasing it brings only a limited improvement in accuracy, even though the overall RMSE is reduced. Moreover, a longer voltage window also increases the sampling time of the input sequence.

Figure 5.

Effect of different input lengths on model accuracy.

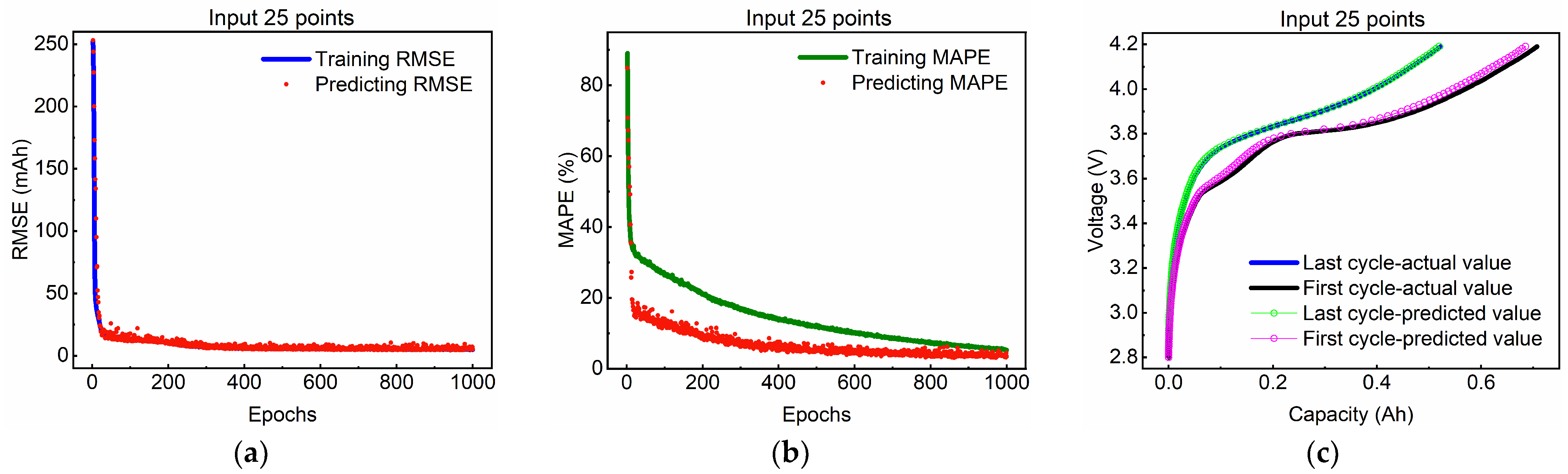

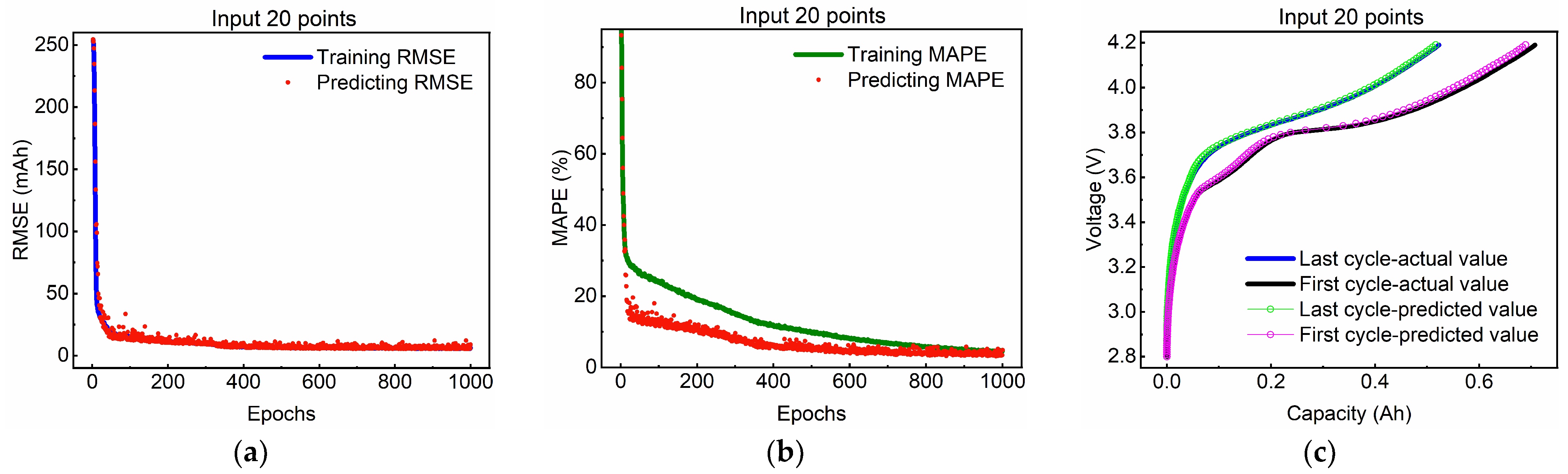

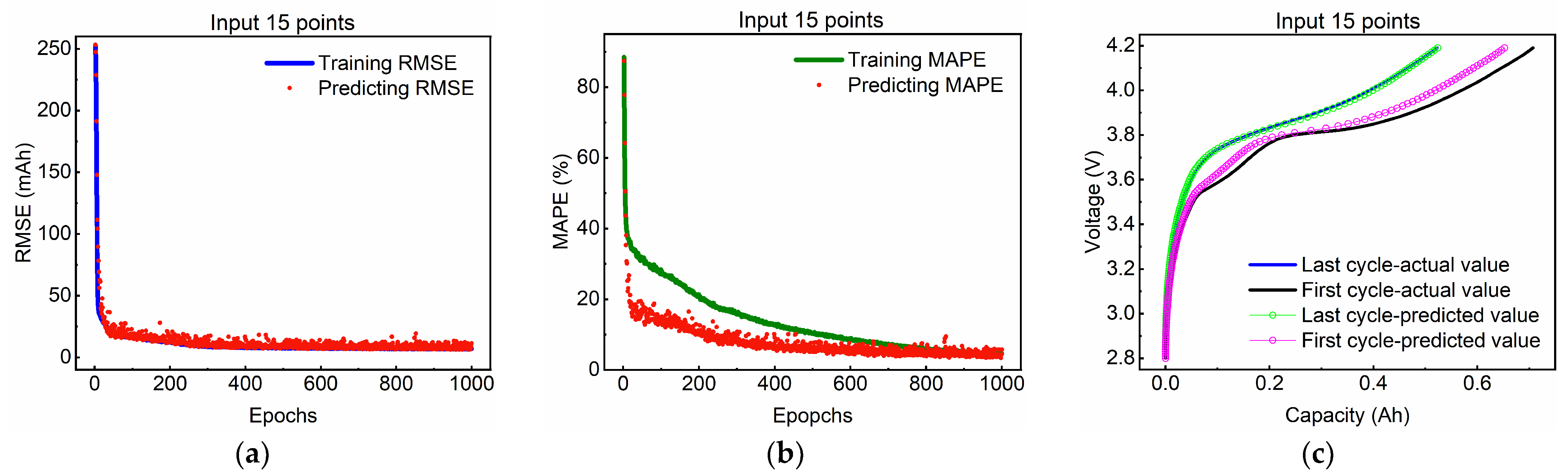

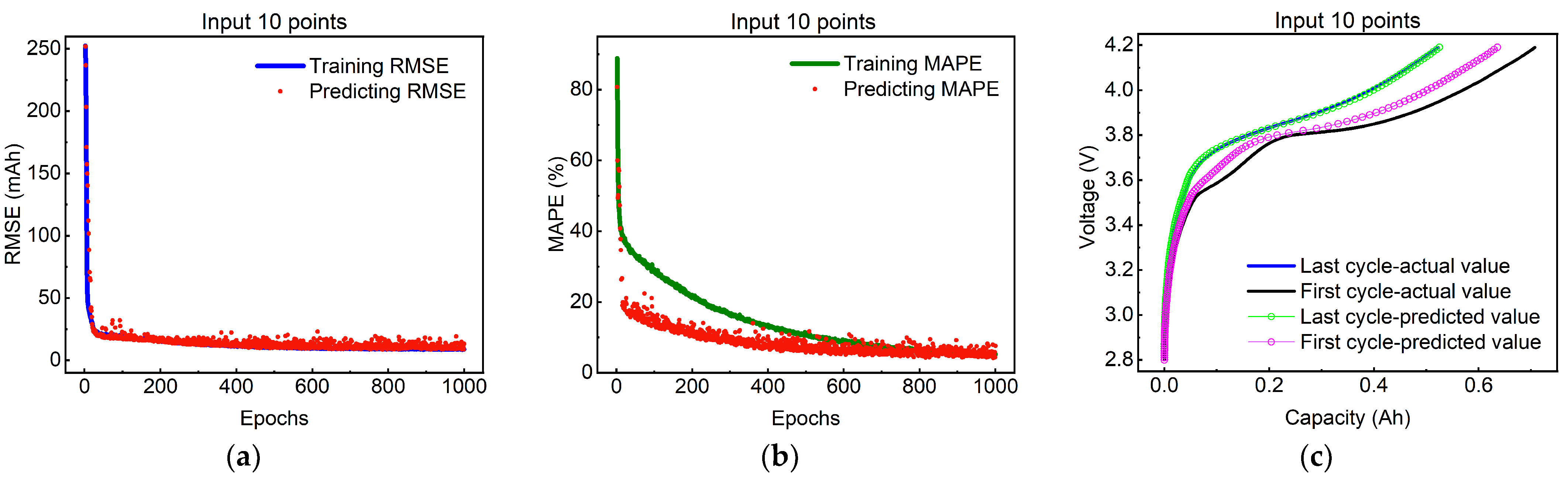

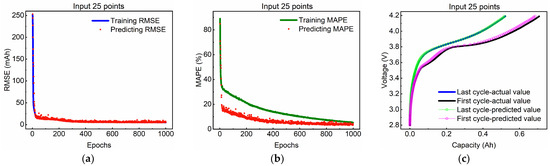

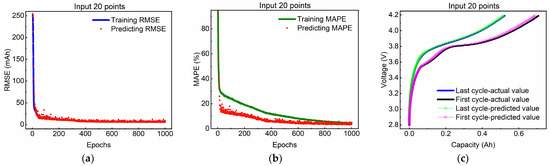

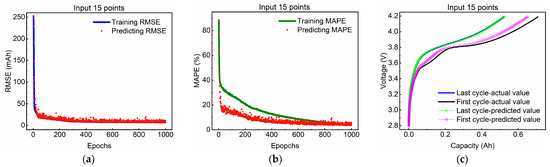

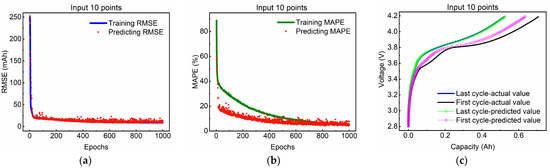

On this basis, in order to accurately estimate the complete charging curve with the fewest data points, we focus on the effect of changing the window size from 100 mV to 300 mV in steps of 50 mV, and the results are shown in Figures 6–9. For input lengths of 100 mV and 150 mV, the best charging curve estimate for the first cycle of the test dataset deviates slightly from the actual complete charging curve. However, as the number of charging cycles increases, the best estimated charging profile for the last cycle is generally consistent with the actual complete charging profile. As can be seen, a window of 100 mV (only 10 points) is also adequate for a reasonably accurate prediction, with a MAPE of less than 4.5%, as indicated in Figure 5. In particular, at an input size of 200 mV (an input data length of only 20 points), the RMSE is less than 19.16 mAh (2.59% of the rated capacity of 0.74 Ah) and the MAPE is less than 1.84%, and the best charging curve estimates for the first and last cycles in the test dataset are consistent with the input starting voltage over the whole cell voltage range.

Figure 6.

The model evaluation metrics and training results for voltage window of 250 mV: (a) RMSE; (b) MAPE; (c) estimation curves.

Figure 7.

The model evaluation metrics and training results for voltage window of 200 mV: (a) RMSE; (b) MAPE; (c) estimation curves.

Figure 8.

The model evaluation metrics and training results for voltage window of 150 mV: (a) RMSE; (b) MAPE; (c) estimation curves.

Figure 9.

The model evaluation metrics and training results for voltage window of 100 mV: (a) RMSE; (b) MAPE; (c) estimation curves.

The results show that the Bayesian-optimized deep neural network designed in this paper can accurately predict the complete charging curve from a partial charging curve of only 20 points, which makes the input data easier to use and strikes a balance between sampling time and estimation accuracy.

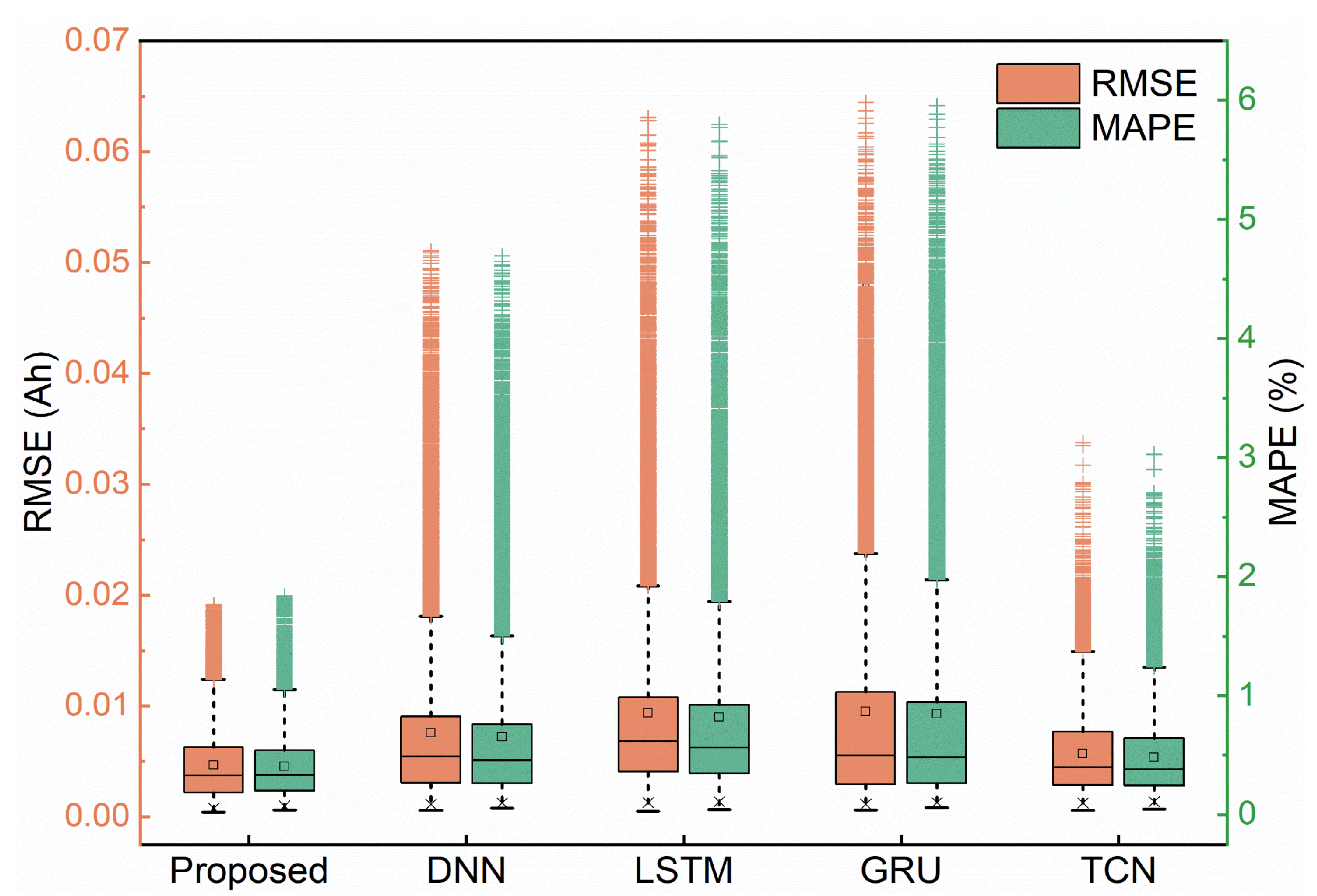

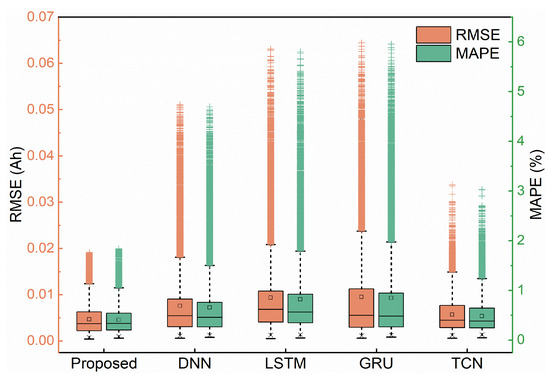

3.3. Comparison of Different Approaches

To demonstrate the capability of the proposed method, four learning techniques are used as baselines, namely a general deep neural network (DNN), long short-term memory (LSTM), gated recurrent units (GRU), and a temporal convolutional network (TCN). Each method uses the same 20-point input data as the proposed method. Their estimation error distributions are shown in Figure 10. The common DNN, LSTM, and GRU cannot provide estimates as reliable as those of the proposed method; the maximum MAPE of LSTM and GRU exceeds 5%. Although TCN has better prediction performance, its maximum MAPE still reaches 3%. In summary, the proposed method can reliably and accurately predict the overall charging curve using 20 points of input data.

Figure 10.

Estimation error of different methods.

4. Maximum and Remaining Capacity Estimates

An accurate estimate of the maximum capacity can help determine the health of the battery for scheduling maintenance and can provide a basis for battery modeling [23]. State of health (SOH) is generally defined as the ratio of the current maximum capacity of the cell to its initial maximum capacity, which directly reflects the aging process of the cell. It can be expressed by the following formula:

$$\mathrm{SOH}_i = \frac{C_{\max,i}}{C_{\max,0}} \tag{15}$$

where $C_{\max,i}$ is the maximum cell capacity at the $i$-th cycle and $C_{\max,0}$ is the initial maximum cell capacity.

In the meantime, precisely predicting the remaining capacity allows the charge stored in the cell to be determined directly and can serve as a guide for assessing the mileage of an electric vehicle and for other battery management measures [20]. State of charge (SOC) is considered a major characteristic of the battery management system (BMS). It is defined as the ratio of the residual capacity to the rated capacity and indicates how long the cell can last before it needs to be charged [24]. It can be expressed by the following equation:

$$\mathrm{SOC}_i(t) = \frac{C_{\mathrm{rem},i}(t)}{C_{\mathrm{rated}}} \tag{16}$$

where $C_{\mathrm{rem},i}(t)$ is the remaining capacity of the cell at time $t$ at the $i$-th cycle and $C_{\mathrm{rated}}$ is the rated capacity. Accurate knowledge of the cell SOC is not only important for electric vehicle mileage utilization and travel scheduling but is also critical for battery management systems to provide consistency and correct cell operation [25].
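The two ratios can be read off an estimated charging curve as sketched below. This is an illustration under assumptions rather than the paper's extraction procedure: it assumes the estimated curve lists charged capacity in Ah at successive voltage grid points, so that the last element approximates the maximum capacity and the element at the present voltage approximates the remaining capacity. The function names and the four-point toy curve are hypothetical; 0.707 Ah and 0.74 Ah are the initial and rated capacities quoted in the text.

```python
def capacities_from_curve(curve_ah, index_now):
    """Read maximum and remaining capacity off an estimated capacity-voltage curve.

    Assumption for this sketch: curve_ah[k] is the charged capacity (Ah) at the
    k-th voltage grid point, so the last element approximates the maximum capacity.
    """
    return curve_ah[-1], curve_ah[index_now]

def state_of_health(max_capacity_ah, initial_max_capacity_ah):
    """SOH as the ratio of current to initial maximum capacity."""
    return max_capacity_ah / initial_max_capacity_ah

def state_of_charge(remaining_capacity_ah, rated_capacity_ah=0.74):
    """SOC as the ratio of remaining capacity to rated capacity."""
    return remaining_capacity_ah / rated_capacity_ah

toy_curve = [0.0, 0.2, 0.4, 0.521]                 # made-up estimated curve in Ah
max_cap, remaining = capacities_from_curve(toy_curve, index_now=2)
soh = state_of_health(max_cap, initial_max_capacity_ah=0.707)
soc = state_of_charge(remaining)
```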

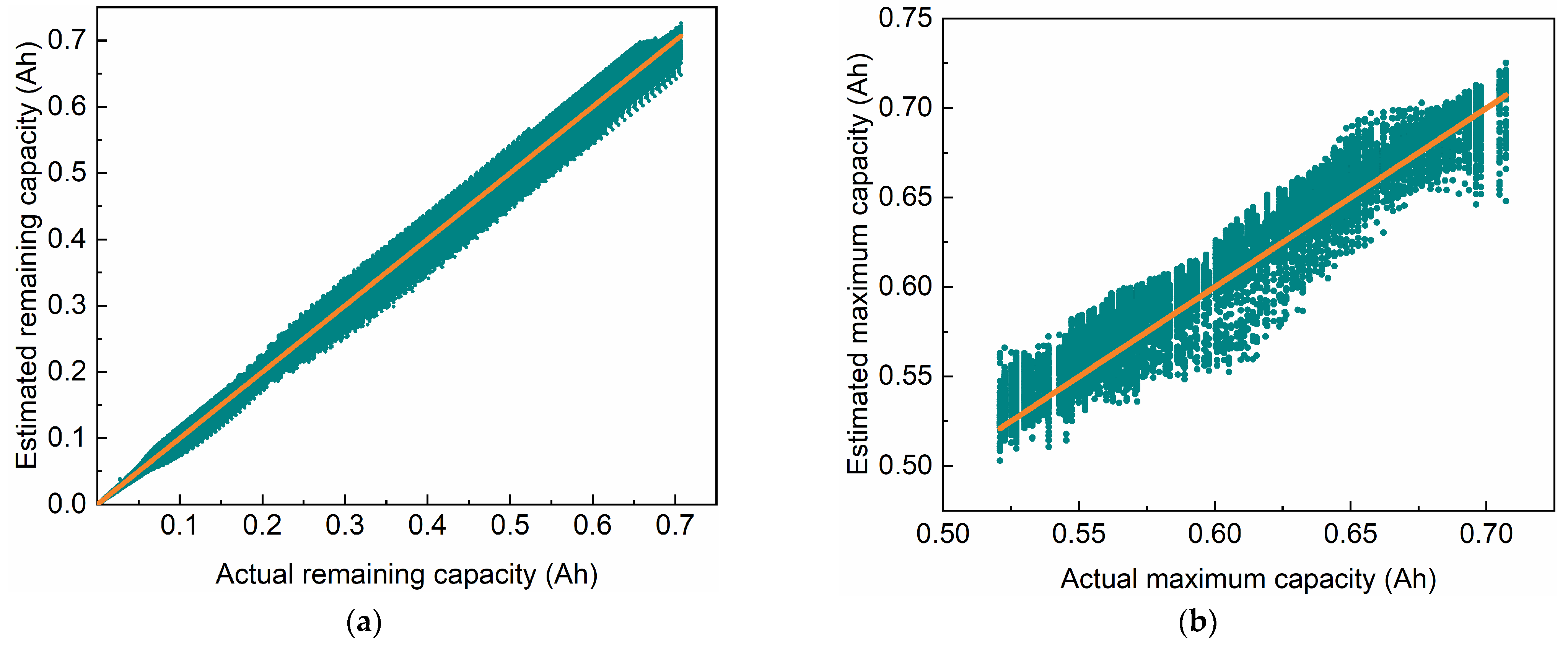

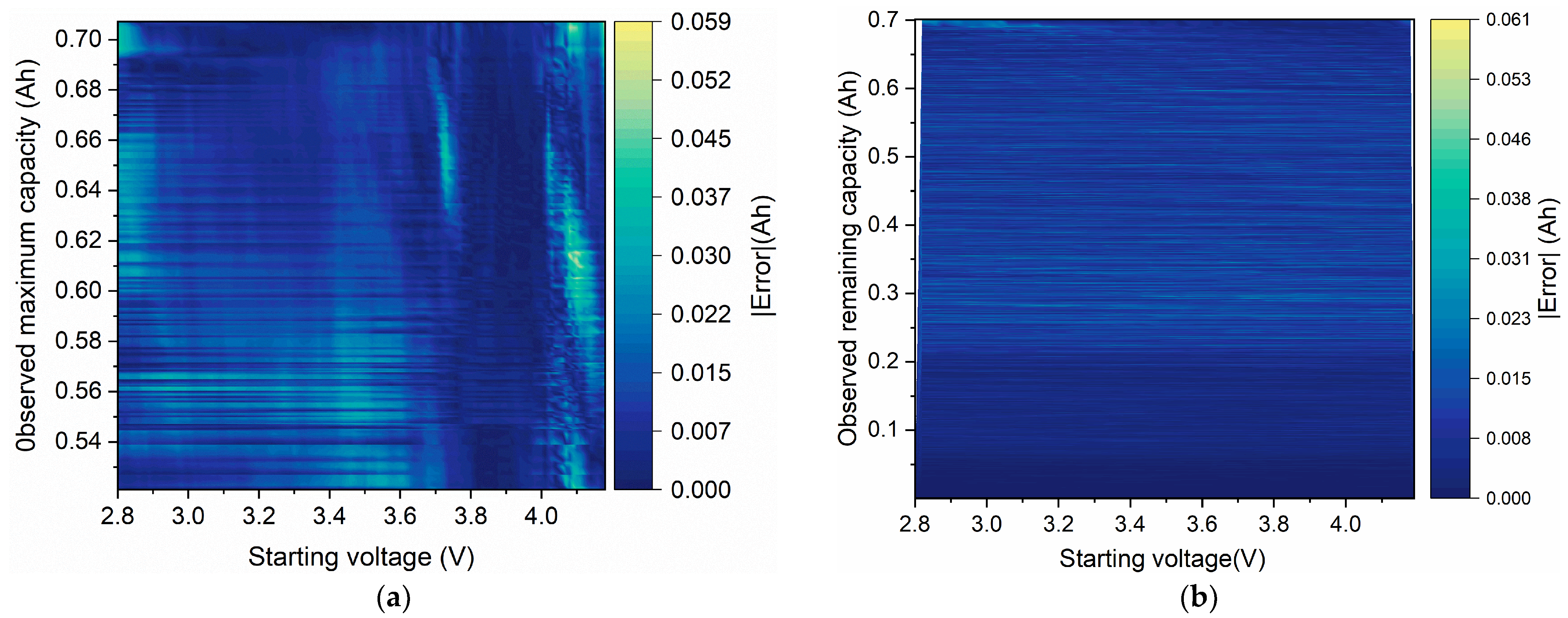

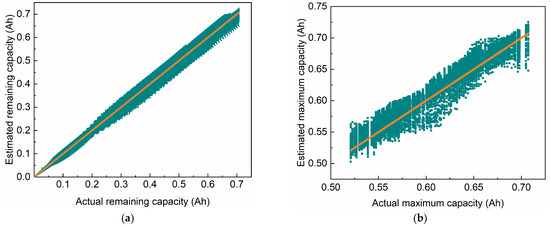

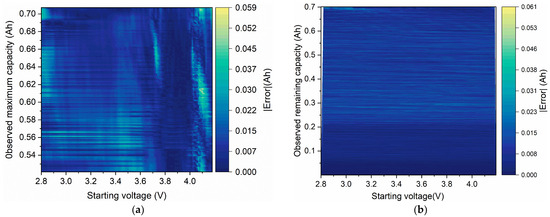

SOH and SOC are associated with the maximum capacity and the remaining capacity of the cell, respectively, so the changes in the state of health (SOH) and state of charge (SOC) of the cell can be visualized in terms of the maximum capacity and the remaining capacity. In this paper, by estimating the charging curve, the maximum capacity and the remaining capacity during cell cycling can be extracted accurately, and the predicted outcomes and errors are displayed in Figures 11 and 12. Figure 11a,b compares the estimated maximum and remaining capacities with the actual values. It is evident that the capacity loss of the cell is severe; the capacity drops from 0.707 Ah to 0.521 Ah, a capacity loss of 26%. Figure 12a,b plots the estimation errors of the maximum cell capacity and the remaining capacity. The results show that the absolute value of the maximum capacity estimation error extracted from the complete charging curve predicted using only 20 points of partial charging data is less than 0.059 Ah (7.97% of the nominal capacity of 0.74 Ah), with an average error of 0.011 Ah (1.48% of the nominal capacity of 0.74 Ah). The absolute value of the remaining capacity prediction error is less than 0.061 Ah (8.24% of the rated capacity of 0.74 Ah), and the average error is 0.00385 Ah (0.52% of the rated capacity of 0.74 Ah).

Figure 11.

Estimation compared to actual values for voltage window of 200 mV: (a) remaining capacity; (b) maximum capacity.

Figure 12.

The estimation error of capacity for voltage window of 200 mV: (a) maximum capacity error; (b) remaining capacity error.

5. Conclusions

In this paper, a Bayesian optimization algorithm and a deep neural network are combined. On the one hand, Bayesian optimization tunes the configuration of hyperparameters in the deep neural network design, so that the network better adapts to the data and the estimation precision of the model improves. On the other hand, using data from different voltage windows of the charging profile as network inputs, the complete charging curve can be accurately predicted using only partial charging data containing 20 points (a 200 mV section of the charging curve). The RMSE is less than 19.16 mAh (2.59% of the nominal capacity of 0.74 Ah) and the MAPE is less than 1.84%. The proposed approach ensures both the accuracy of the estimation and the flexibility of the input data. At the same time, the maximum capacity and remaining capacity are obtained from the estimated charging curves, which visualizes the cell aging and charging processes, achieves a comprehensive and accurate prediction of battery life and range, and provides support for intelligent battery management.

Author Contributions

Methodology, C.D. and Q.G.; validation, Q.G.; supervision, L.Z. and T.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 52072217, and supported by the Joint Funds of the Hubei Natural Science Foundation Innovation and Development, grant number 2022CFD034.

Data Availability Statement

All data generated or analyzed during this study are included in this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Shen, L.; Cheng, Q.; Cheng, Y.; Wei, L.; Wang, Y. Hierarchical Control of DC Micro-Grid for Photovoltaic EV Charging Station Based on Flywheel and Battery Energy Storage System. Electr. Power Syst. Res. 2020, 179, 106079.

- Wang, S.-L.; Tang, W.; Fernandez, C.; Yu, C.-M.; Zou, C.-Y.; Zhang, X.-Q. A Novel Endurance Prediction Method of Series Connected Lithium-Ion Batteries Based on the Voltage Change Rate and Iterative Calculation. J. Clean. Prod. 2019, 210, 43–54.

- Wróblewski, P.; Kupiec, J.; Drożdż, W.; Lewicki, W.; Jaworski, J. The Economic Aspect of Using Different Plug-In Hybrid Driving Techniques in Urban Conditions. Energies 2021, 14, 3543.

- Wróblewski, P.; Drożdż, W.; Lewicki, W.; Miązek, P. Methodology for Assessing the Impact of Aperiodic Phenomena on the Energy Balance of Propulsion Engines in Vehicle Electromobility Systems for Given Areas. Energies 2021, 14, 2314.

- Lu, J.; Xiong, R.; Tian, J.; Wang, C.; Hsu, C.-W.; Tsou, N.-T.; Sun, F.; Li, J. Battery Degradation Prediction against Uncertain Future Conditions with Recurrent Neural Network Enabled Deep Learning. Energy Storage Mater. 2022, 50, 139–151.

- Barai, A.; Uddin, K.; Dubarry, M.; Somerville, L.; McGordon, A.; Jennings, P.; Bloom, I. A Comparison of Methodologies for the Non-Invasive Characterisation of Commercial Li-Ion Cells. Prog. Energy Combust. Sci. 2019, 72, 1–31.

- Song, L.; Zhang, K.; Liang, T.; Han, X.; Zhang, Y. Intelligent State of Health Estimation for Lithium-Ion Battery Pack Based on Big Data Analysis. J. Energy Storage 2020, 32, 101836.

- Xia, B.; Zhang, Z.; Lao, Z.; Wang, W.; Sun, W.; Lai, Y.; Wang, M. Strong Tracking of a H-Infinity Filter in Lithium-Ion Battery State of Charge Estimation. Energies 2018, 11, 1481.

- Hannan, M.A.; Lipu, M.S.H.; Hussain, A.; Ker, P.J.; Mahlia, T.M.I.; Mansor, M.; Ayob, A.; Saad, M.H.; Dong, Z.Y. Toward Enhanced State of Charge Estimation of Lithium-Ion Batteries Using Optimized Machine Learning Techniques. Sci. Rep. 2020, 10, 4687.

- Chen, Z.; Zhao, H.; Shu, X.; Zhang, Y.; Shen, J.; Liu, Y. Synthetic State of Charge Estimation for Lithium-Ion Batteries Based on Long Short-Term Memory Network Modeling and Adaptive H-Infinity Filter. Energy 2021, 228, 120630.

- Jiao, M.; Wang, D.; Qiu, J. A GRU-RNN Based Momentum Optimized Algorithm for SOC Estimation. J. Power Sources 2020, 459, 228051.

- Liu, Y.; Li, J.; Zhang, G.; Hua, B.; Xiong, N. State of Charge Estimation of Lithium-Ion Batteries Based on Temporal Convolutional Network and Transfer Learning. IEEE Access 2021, 9, 34177–34187.

- Yuan, H.; Liu, J.; Zhou, Y.; Pei, H. State of Charge Estimation of Lithium Battery Based on Integrated Kalman Filter Framework and Machine Learning Algorithm. Energies 2023, 16, 2155.

- Gulcu, A.; Kus, Z. Hyper-Parameter Selection in Convolutional Neural Networks Using Microcanonical Optimization Algorithm. IEEE Access 2020, 8, 52528–52540.

- Bergstra, J.; Bengio, Y. Random Search for Hyper-Parameter Optimization. J. Mach. Learn. Res. 2012, 13, 281–305.

- Yang, G.; Tian, X.; Li, H.; Deng, H.; Li, H. Target Recognition Using of PCNN Model Based on Grid Search Method. dtcse 2017.

- Wang, J.; Xu, J.; Wang, X. Combination of Hyperband and Bayesian Optimization for Hyperparameter Optimization in Deep Learning. arXiv 2018, arXiv:1801.01596.

- Shahriari, B.; Swersky, K.; Wang, Z.; Adams, R.P.; De Freitas, N. Taking the Human Out of the Loop: A Review of Bayesian Optimization. Proc. IEEE 2016, 104, 148–175.

- Zhao, F.; Li, P.; Li, Y.; Li, Y. The Li-Ion Battery State of Charge Prediction of Electric Vehicle Using Deep Neural Network. In Proceedings of the 2019 Chinese Control and Decision Conference (CCDC), Nanchang, China, 3–5 June 2019; IEEE: Piscataway, NJ, USA; pp. 773–777.

- Tian, J.; Xiong, R.; Shen, W.; Lu, J.; Sun, F. Flexible Battery State of Health and State of Charge Estimation Using Partial Charging Data and Deep Learning. Energy Storage Mater. 2022, 51, 372–381.

- Bertrand, H.; Ardon, R.; Perrot, M.; Bloch, I. Hyperparameter Optimization of Deep Neural Networks: Combining Hyperband with Bayesian Model Selection; Hindustan Aeronautics Limited: Bangalore, India, 2019.

- Tian, J.; Xiong, R.; Shen, W.; Lu, J.; Yang, X.-G. Deep neural network battery charging curve prediction using 30 points collected in 10 min. Joule 2021, 5, 1521–1534.

- Xu, L.; Lin, X.; Xie, Y.; Hu, X. Enabling High-Fidelity Electrochemical P2D Modeling of Lithium-Ion Batteries via Fast and Non-Destructive Parameter Identification. Energy Storage Mater. 2022, 45, 952–968.

- Hannan, M.A.; Lipu, M.S.H.; Hussain, A.; Mohamed, A. A Review of Lithium-Ion Battery State of Charge Estimation and Management System in Electric Vehicle Applications: Challenges and Recommendations. Renew. Sustain. Energy Rev. 2017, 78, 834–854.

- Yang, F.; Zhang, S.; Li, W.; Miao, Q. State-of-Charge Estimation of Lithium-Ion Batteries Using LSTM and UKF. Energy 2020, 201, 117664.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).