Analysis of Anthropogenic Waste Heat Emission from an Academic Data Center

Abstract

1. Introduction

2. Materials and Methods

2.1. Server Room Selected for Simulation

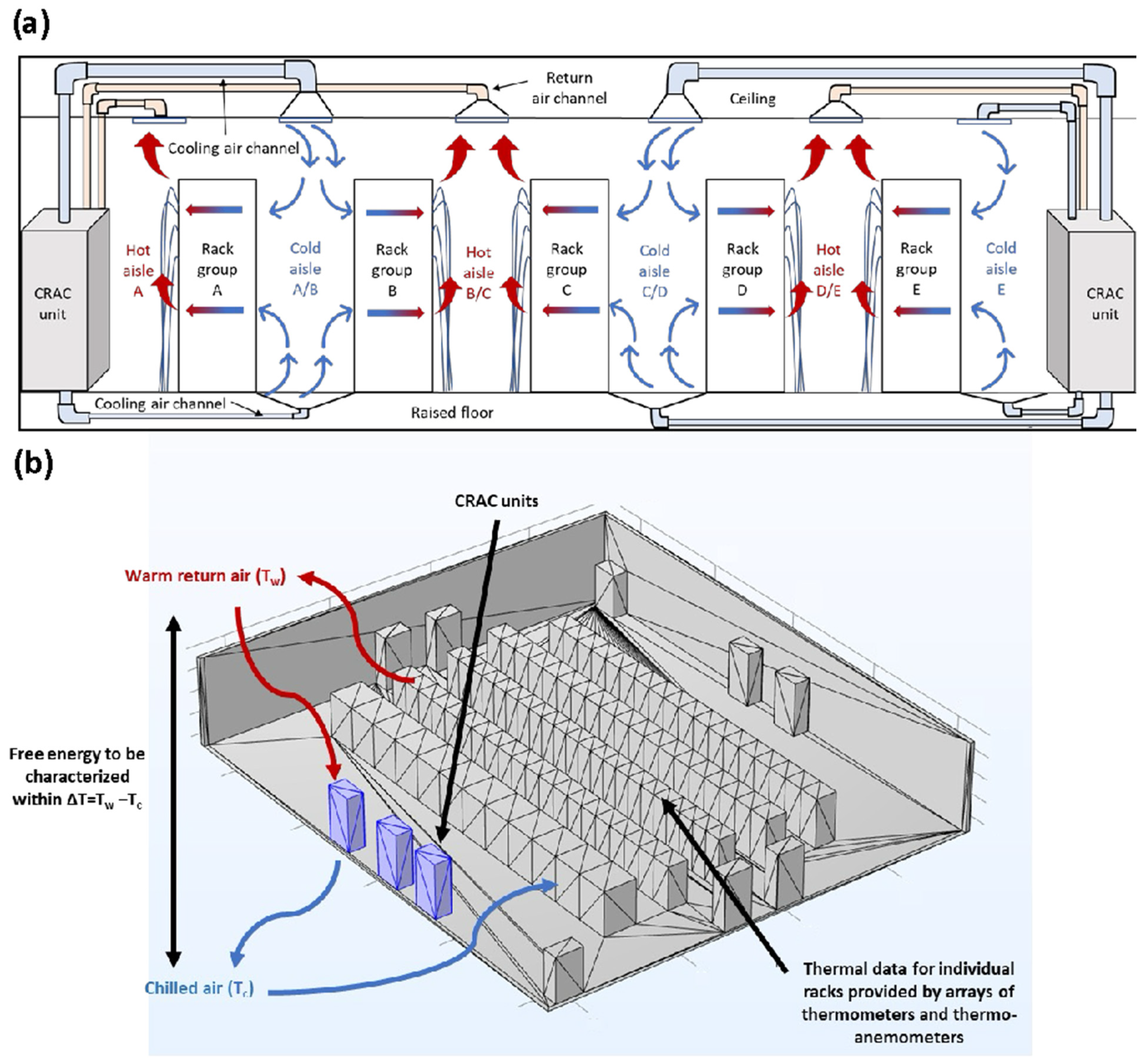

2.2. Air-Cooling System in the Server Room

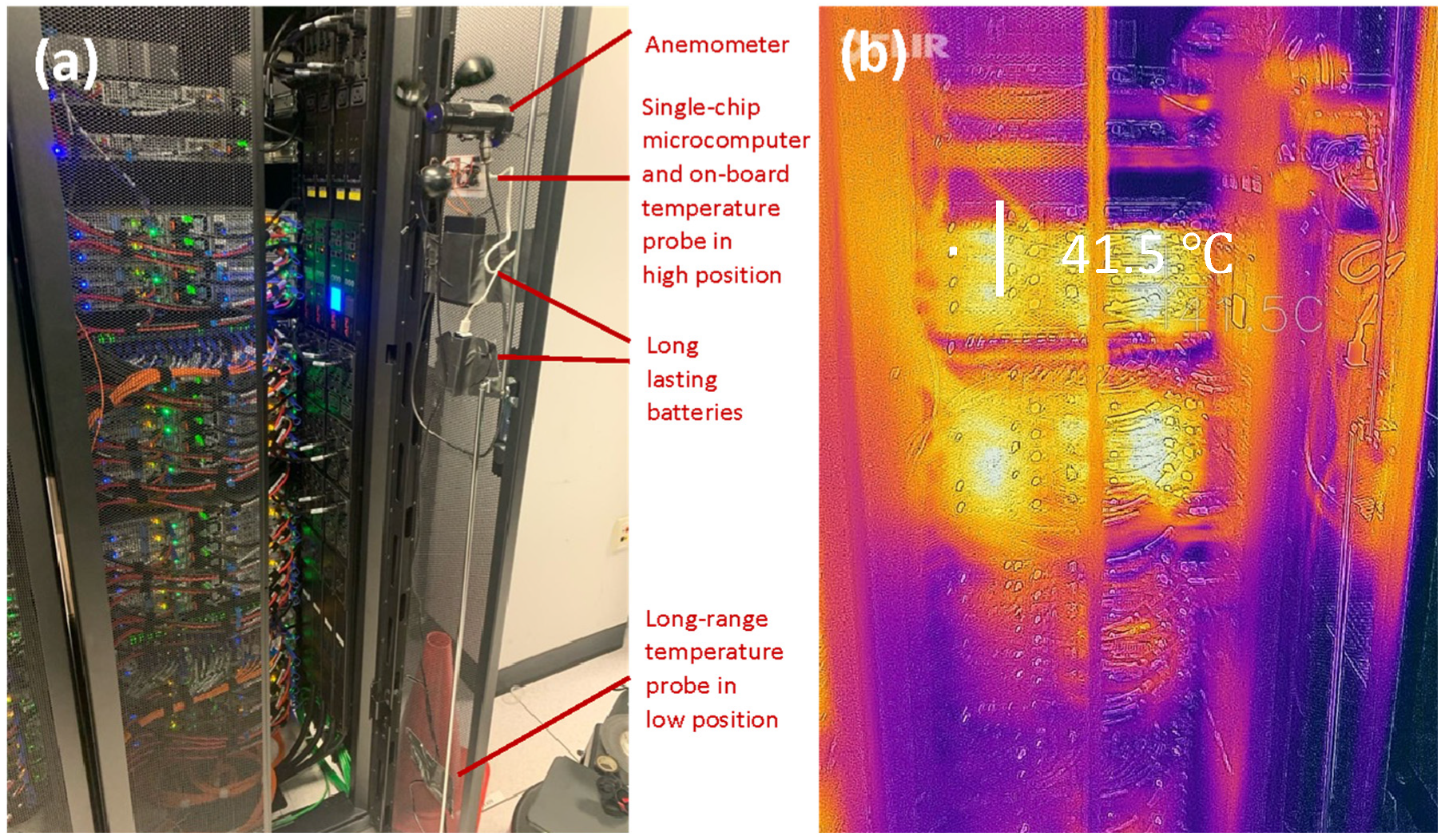

2.3. Analysis Framework and Collection of Primary Data

2.4. Calculation of Cooling and Energy Efficiency Indices

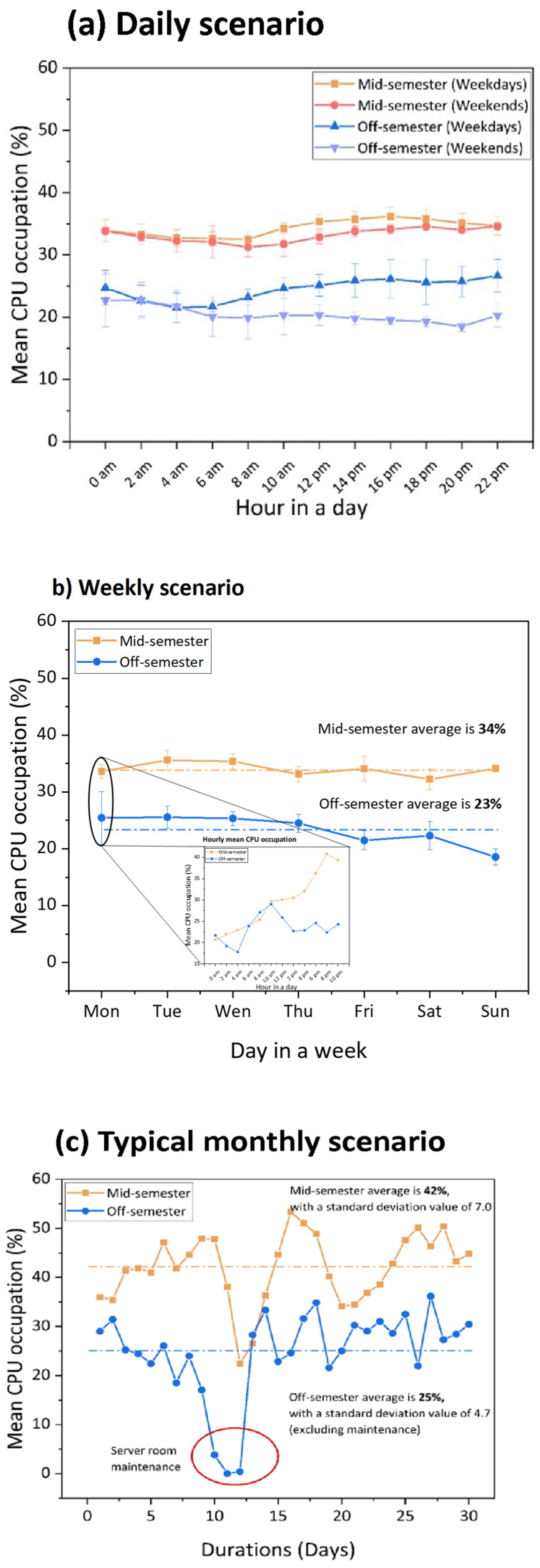
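PUE is one of the indices evaluated for the server room (Section 3.1). The following is a hedged sketch that assumes the energy-based definition in ISO/IEC 30134-2: total facility energy divided by IT equipment energy over the same period. The `EnergyReading` structure and its split into IT, cooling, and other loads are hypothetical. This section does not describe the paper's actual metering boundaries.

```python
from dataclasses import dataclass


@dataclass
class EnergyReading:
    """Energy over one measurement period in kWh (hypothetical input structure)."""
    it_kwh: float           # energy delivered to IT equipment (servers, storage, network)
    cooling_kwh: float      # energy used by cooling, e.g. CRAC units (assumed category)
    other_kwh: float = 0.0  # lighting, power distribution losses, etc. (assumed category)

    @property
    def total_kwh(self) -> float:
        # Total facility energy for this period
        return self.it_kwh + self.cooling_kwh + self.other_kwh


def pue(readings: list[EnergyReading]) -> float:
    """PUE = total facility energy / IT equipment energy, summed over all periods."""
    it = sum(r.it_kwh for r in readings)
    if it <= 0:
        raise ValueError("IT energy must be positive")
    return sum(r.total_kwh for r in readings) / it
```

The function sums energy over all periods before dividing, rather than averaging per-period ratios. This keeps periods with larger IT load properly weighted. For example, 100 kWh of IT energy plus 40 kWh of cooling and 10 kWh of other overhead gives a PUE of 1.5.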

2.5. Characterization of Operating Trends and Thermal Conditions

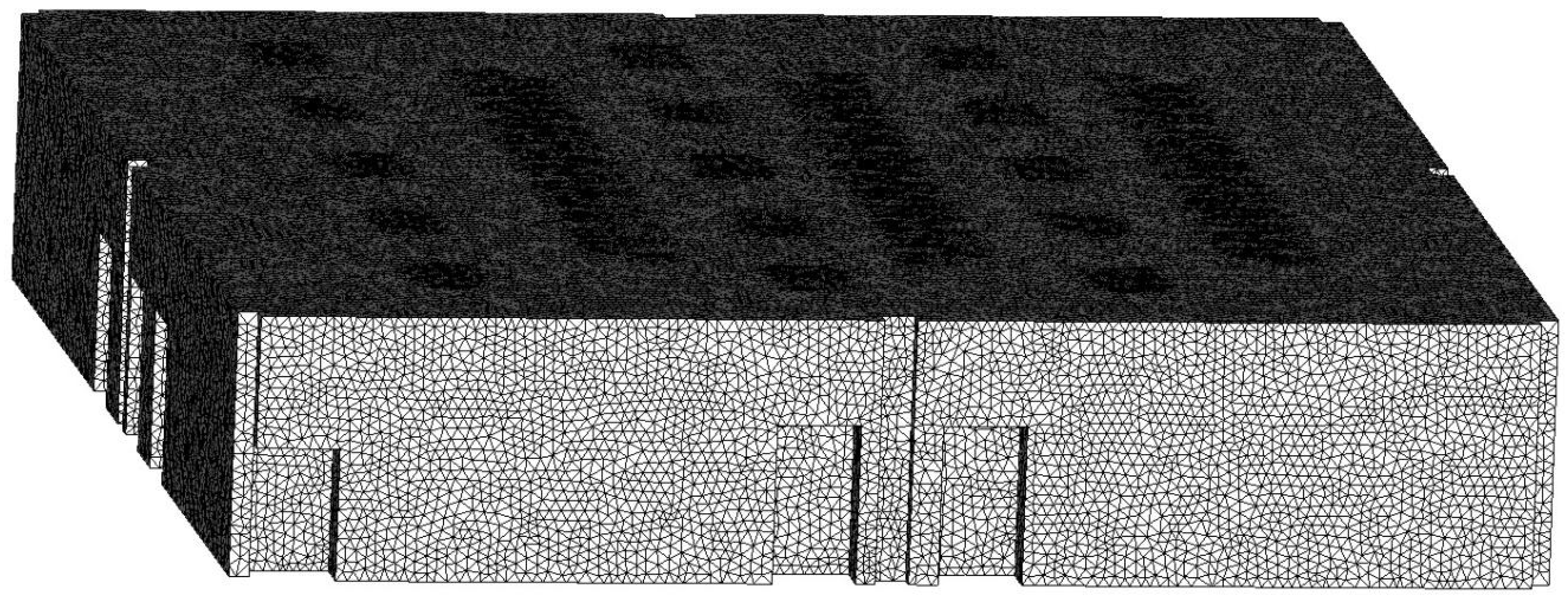

2.6. Development of CFD Model

2.7. Estimation of Server Room Waste Heat Quality and Quantity
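Hot-aisle temperature and airflow measurements like those tabulated in Appendix A can be used to estimate both the quantity and the quality of the waste heat. The sketch below illustrates one way to do this, with two parts:

- Quantity comes from a standard sensible-heat balance, Q = ρ c_p V̇ (T_hot − T_ref).
- Quality is expressed as the ratio of flow exergy to sensible heat, treating air as an ideal gas at constant pressure.

The air properties (ρ = 1.2 kg/m³, c_p = 1.005 kJ/(kg·K)), the use of a single reference temperature as the dead state, and the function names are assumptions for illustration. This is not necessarily the authors' procedure.

```python
import math

# Assumed air properties near room conditions (illustrative defaults, not from the paper)
RHO_AIR = 1.2    # density, kg/m^3
CP_AIR = 1.005   # specific heat, kJ/(kg*K)


def waste_heat_kw(airflow_m3s: float, t_hot_c: float, t_ref_c: float,
                  rho: float = RHO_AIR, cp: float = CP_AIR) -> float:
    """Sensible heat rate (kW) released if the air stream is cooled from t_hot_c to t_ref_c."""
    m_dot = rho * airflow_m3s                 # mass flow rate, kg/s
    return m_dot * cp * (t_hot_c - t_ref_c)   # kg/s * kJ/(kg*K) * K = kW


def exergy_kw(airflow_m3s: float, t_hot_c: float, t_ref_c: float,
              rho: float = RHO_AIR, cp: float = CP_AIR) -> float:
    """Flow exergy rate (kW) of an ideal-gas air stream relative to a dead state at t_ref_c."""
    t_h = t_hot_c + 273.15                    # absolute temperatures, K
    t_0 = t_ref_c + 273.15
    m_dot = rho * airflow_m3s
    return m_dot * cp * ((t_h - t_0) - t_0 * math.log(t_h / t_0))


def heat_quality(airflow_m3s: float, t_hot_c: float, t_ref_c: float,
                 rho: float = RHO_AIR, cp: float = CP_AIR) -> float:
    """Exergy-to-heat ratio; 0.0 when the stream is not hotter than the reference."""
    q = waste_heat_kw(airflow_m3s, t_hot_c, t_ref_c, rho, cp)
    if q <= 0:
        return 0.0
    return exergy_kw(airflow_m3s, t_hot_c, t_ref_c, rho, cp) / q
```

For example, 1 m³/s of air returned at 30 °C against a 20 °C reference carries about 12 kW of sensible heat. Its quality index is below 0.02, consistent with the low-grade character of air-side waste heat.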

3. Results and Discussion

3.1. Server Room CSE and PUE

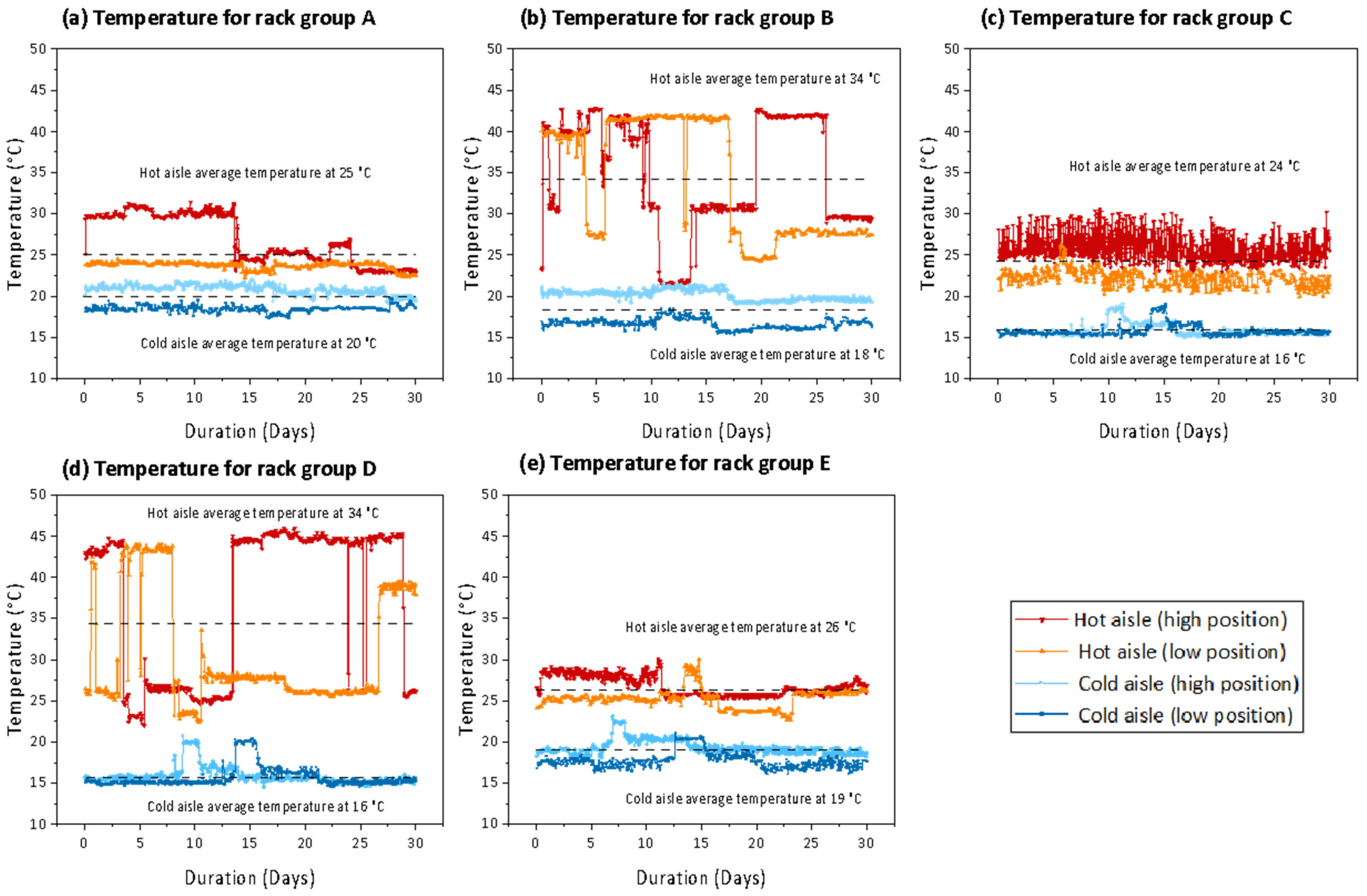

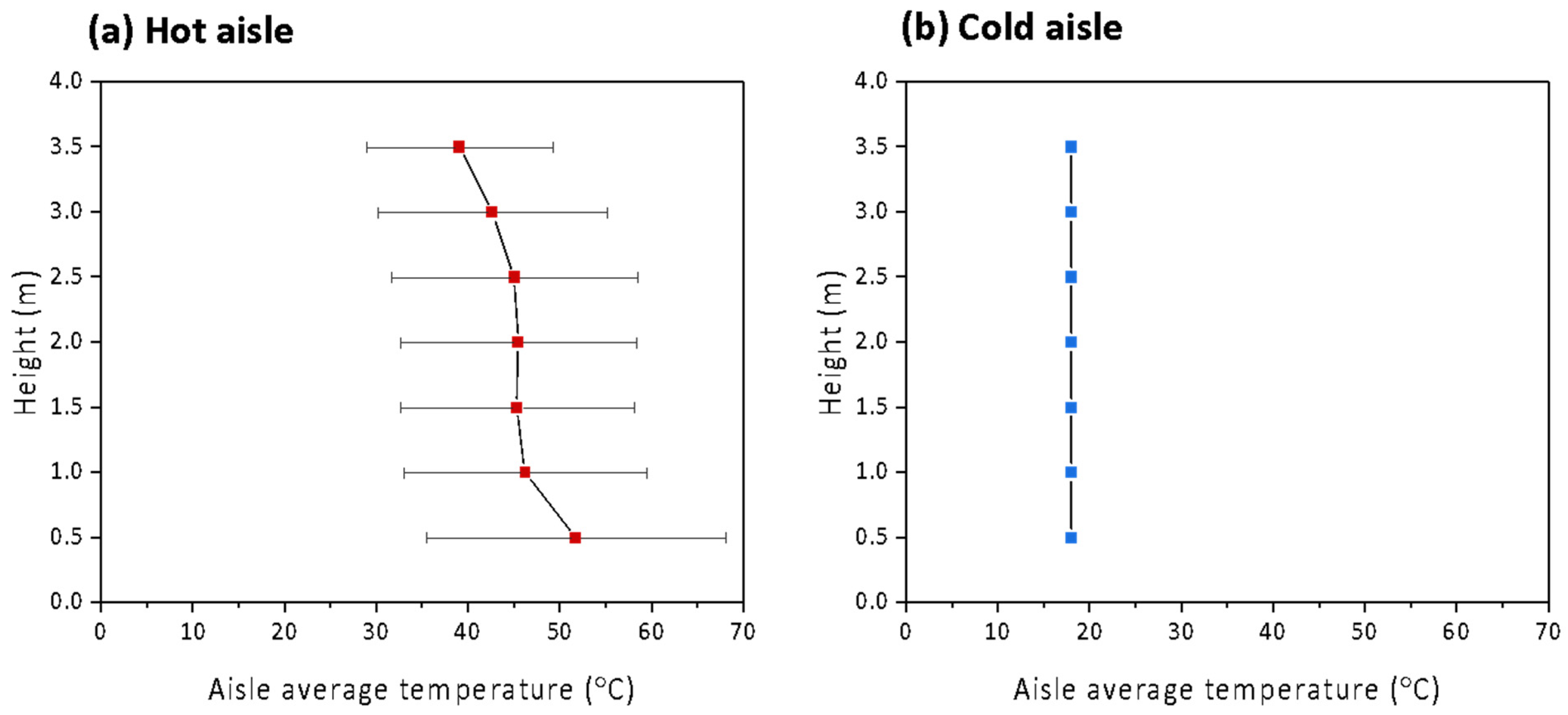

3.2. Server Room Operating Trends and Temperature Distribution

3.3. Server Room Waste Heat Estimation and Reuse Analysis from CFD Model Results

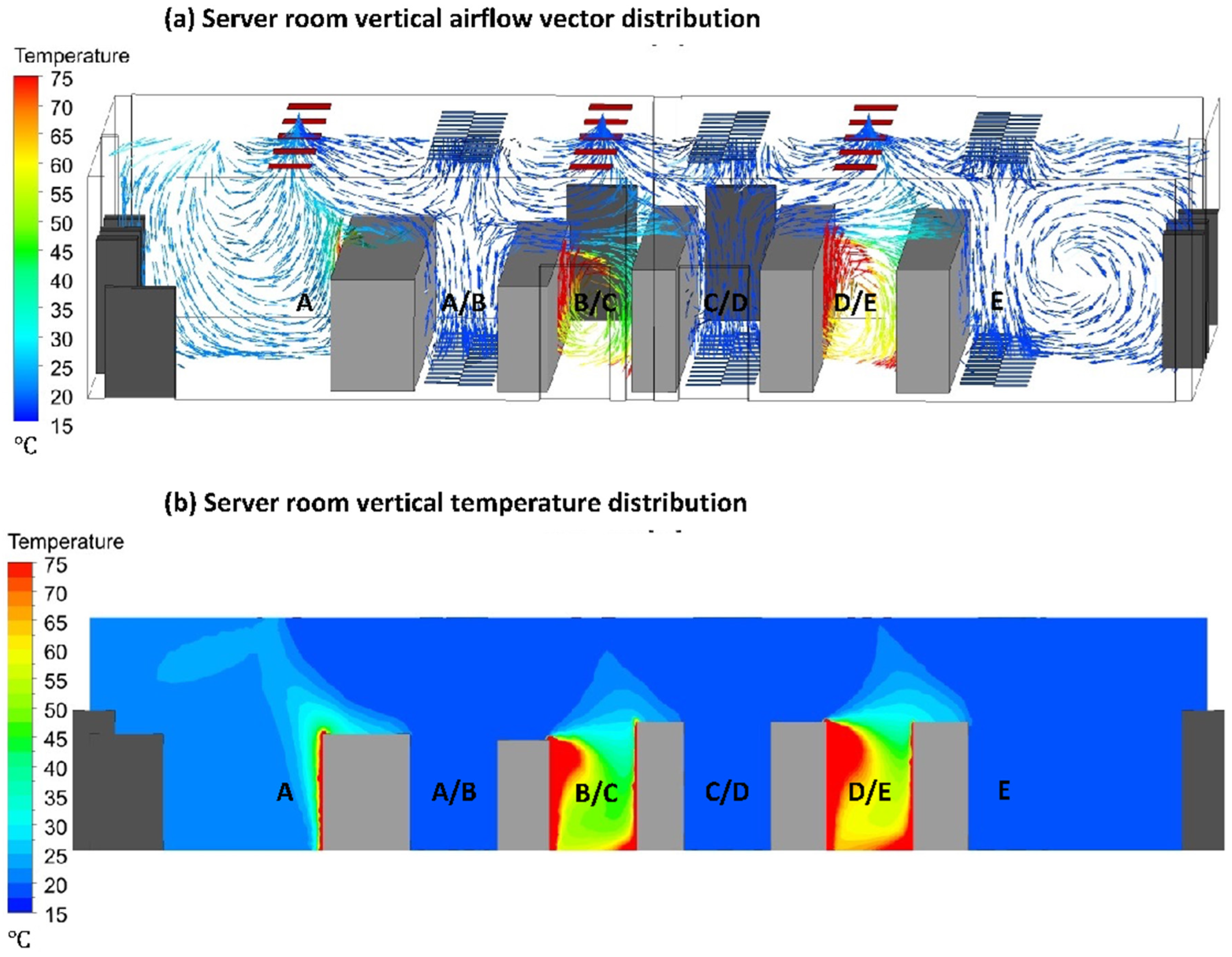

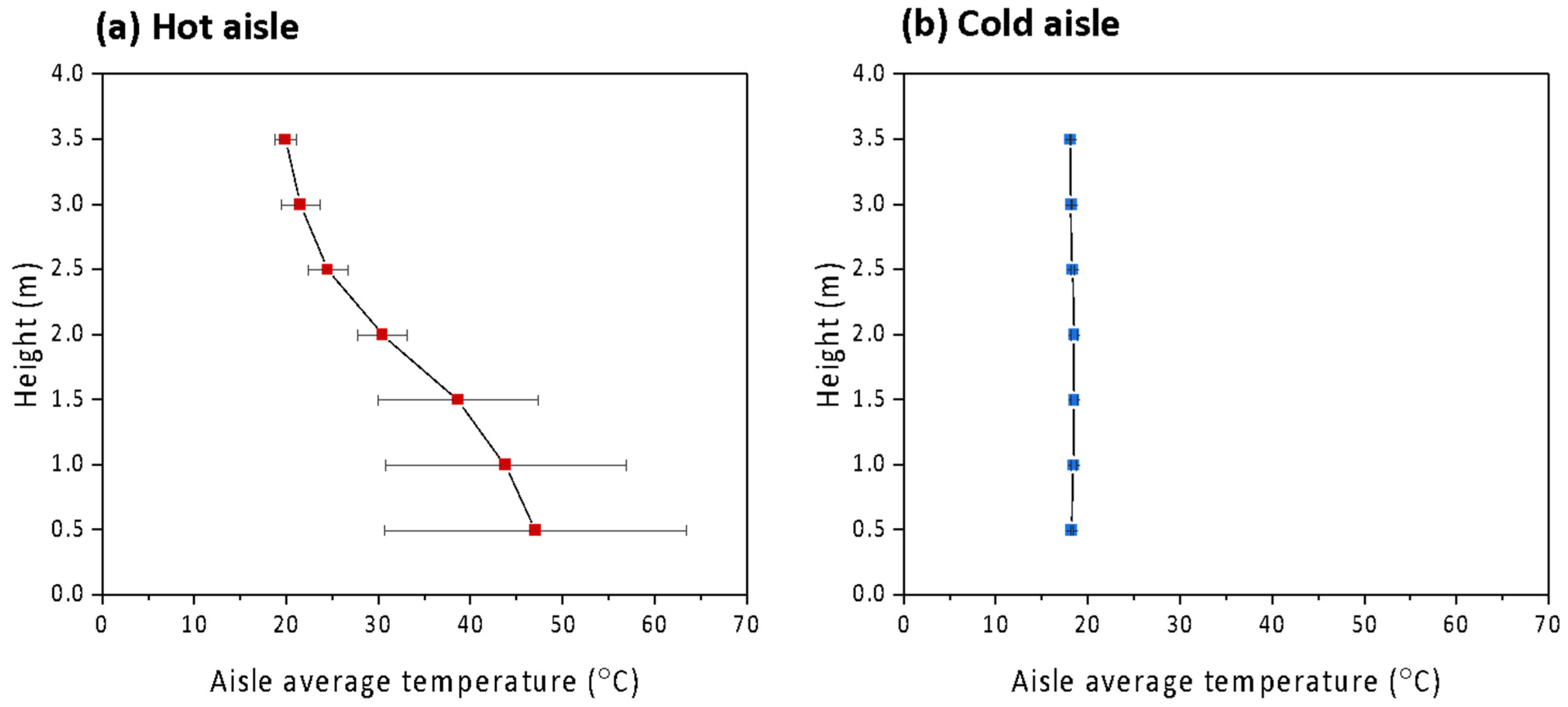

3.3.1. Current Server Room Configuration with Open Aisles

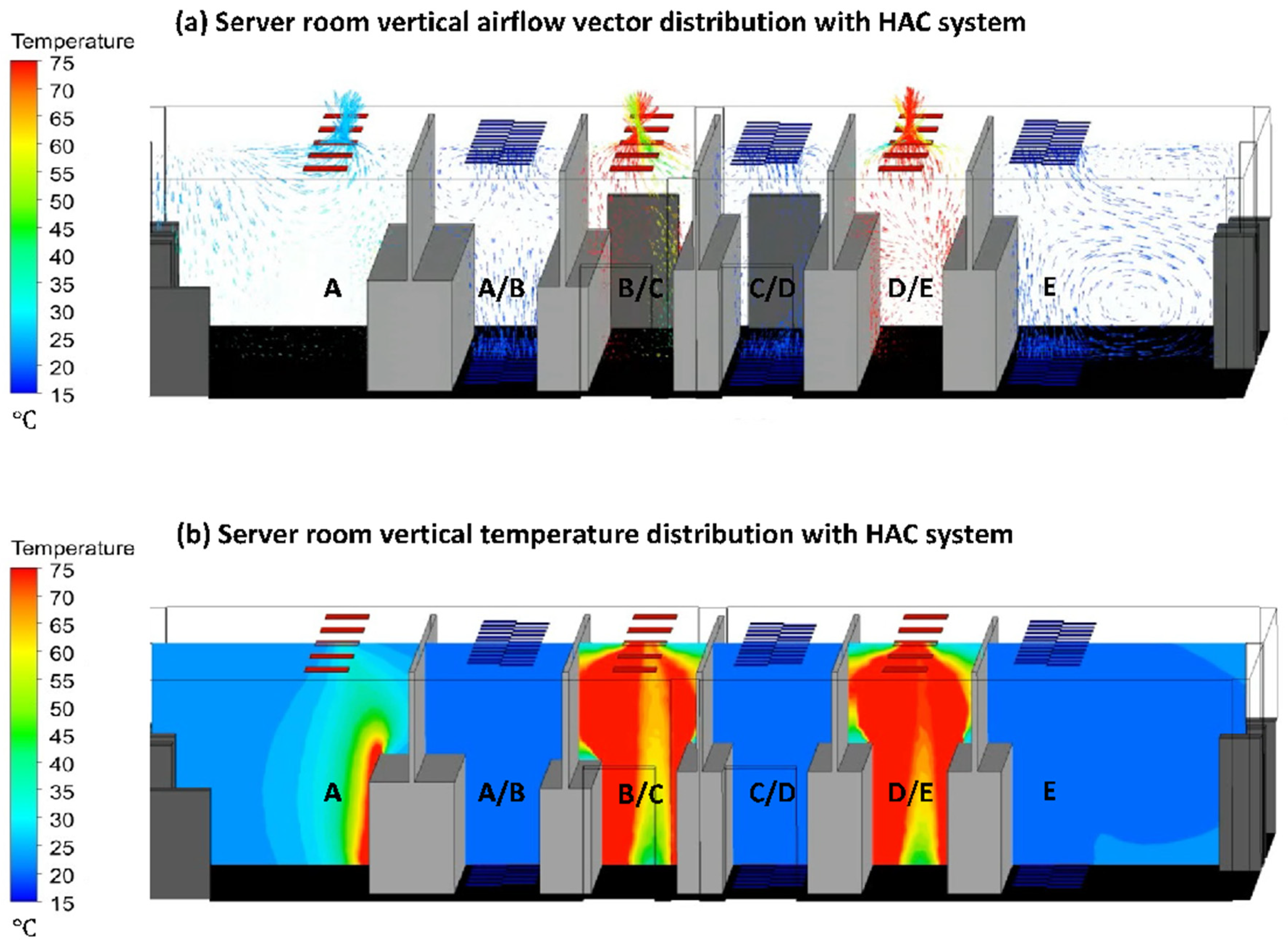

3.3.2. Example of Improved Server Room Configuration with HAC System

4. Conclusions and Implications

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Rack Group | Servers per Rack Group | CRAC Number | Operating Status |
|---|---|---|---|
| A | 268 | CRAC-1 | ON |
|  |  | CRAC-2 | ON |
| B | 592 | CRAC-3 | OFF |
|  |  | CRAC-4 | ON |
| C | 588 | CRAC-5 | ON |
|  |  | CRAC-6 | ON |
| D | 436 | CRAC-7 | OFF |
|  |  | CRAC-8 | ON |
| E | 594 | CRAC-9 | ON |

| Location | Hot Aisle Temperature (°C) | Airflow Rate (m³/s) |
|---|---|---|
| Location 1 (in rack group A) | 22.6 | 7.61 |
| Location 2 (in rack group B) | 31.0 | 16.4 |
| Location 3 (in rack group C) | 30.0 | 6.54 |
| Location 4 (in rack group D) | 45.4 | 3.66 |
| Location 5 (in rack group E) | 31.4 | 11.2 |
| Average | 32.1 | 9.07 |

| Vent | Temperature (°C) | Airflow Rate (m³/s) |
|---|---|---|
| Hot vents (ceiling) | 24.1 | 0.93 |
| Cold vents (floor) | 13.0 | 0.91 |
| Cold vents (ceiling) | 15.0 | 0.74 |


| Height (m) | Aisle A (°C) | Aisle A/B (°C) | Aisle B/C (°C) | Aisle C/D (°C) | Aisle D/E (°C) | Aisle E (°C) |
|---|---|---|---|---|---|---|
| 0.5 | 25.1 | 18.3 | 51.4 | 18.3 | 64.5 | 18.0 |
| 1.0 | 25.8 | 18.6 | 49.0 | 18.5 | 56.4 | 18.0 |
| 1.5 | 26.7 | 18.8 | 41.8 | 18.6 | 47.2 | 18.0 |
| 2.0 | 27.9 | 18.8 | 29.1 | 18.6 | 34.1 | 18.0 |
| 2.5 | 27.5 | 18.5 | 22.4 | 18.4 | 23.4 | 18.0 |
| 3.0 | 24.4 | 18.2 | 19.9 | 18.1 | 20.1 | 18.0 |
| 3.5 | 21.5 | 18.1 | 19.0 | 18.0 | 19.0 | 18.0 |

| Height (m) | Aisle A (°C) | Aisle A/B (°C) | Aisle B/C (°C) | Aisle C/D (°C) | Aisle D/E (°C) | Aisle E (°C) |
|---|---|---|---|---|---|---|
| 0.5 | 31.1 | 18.0 | 53.1 | 18.0 | 70.9 | 18.0 |
| 1.0 | 28.1 | 18.0 | 50.8 | 18.0 | 59.7 | 18.0 |
| 1.5 | 27.3 | 18.0 | 53.0 | 18.0 | 55.7 | 18.0 |
| 2.0 | 27.2 | 18.0 | 55.7 | 18.0 | 53.4 | 18.0 |
| 2.5 | 26.2 | 18.0 | 56.4 | 18.0 | 52.6 | 18.0 |
| 3.0 | 25.2 | 18.0 | 53.7 | 18.0 | 49.0 | 18.0 |
| 3.5 | 24.7 | 18.0 | 47.5 | 18.0 | 44.9 | 18.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ding, W.; Ebrahimi, B.; Kim, B.-D.; Devenport, C.L.; Childress, A.E. Analysis of Anthropogenic Waste Heat Emission from an Academic Data Center. Energies 2024, 17, 1835. https://doi.org/10.3390/en17081835
