Classification of Visual Smoothness Standards Using Multi-Scale Areal Texture Parameters and Low-Magnification Coherence Scanning Interferometry

Abstract

1. Introduction

1.1. Challenges in Quantifying Surface Quality

1.2. Paper Description and Organization

2. Samples and Measurements

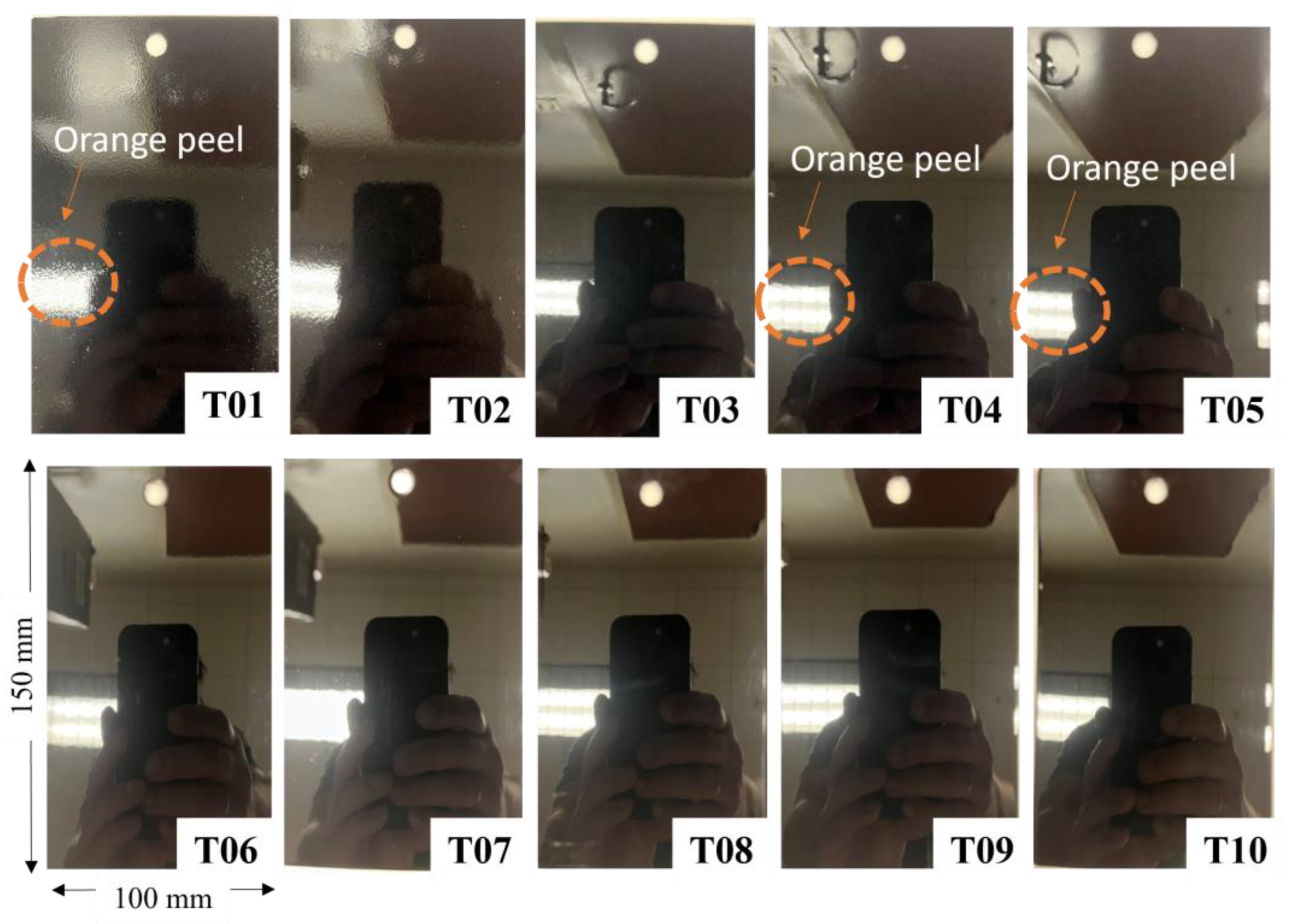

2.1. Visual Smoothness Standards

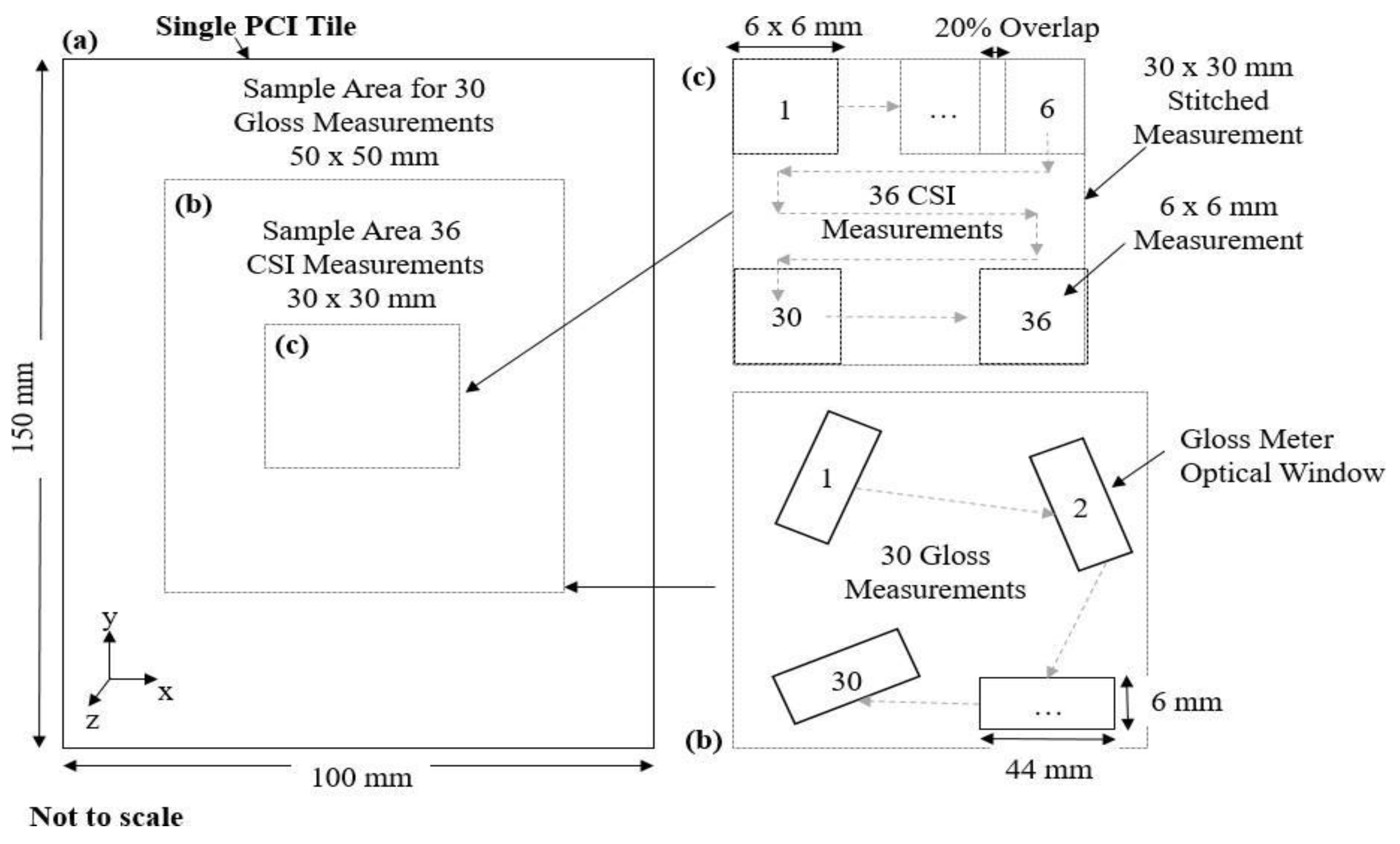

2.2. Gloss Meter and CSI Measurements

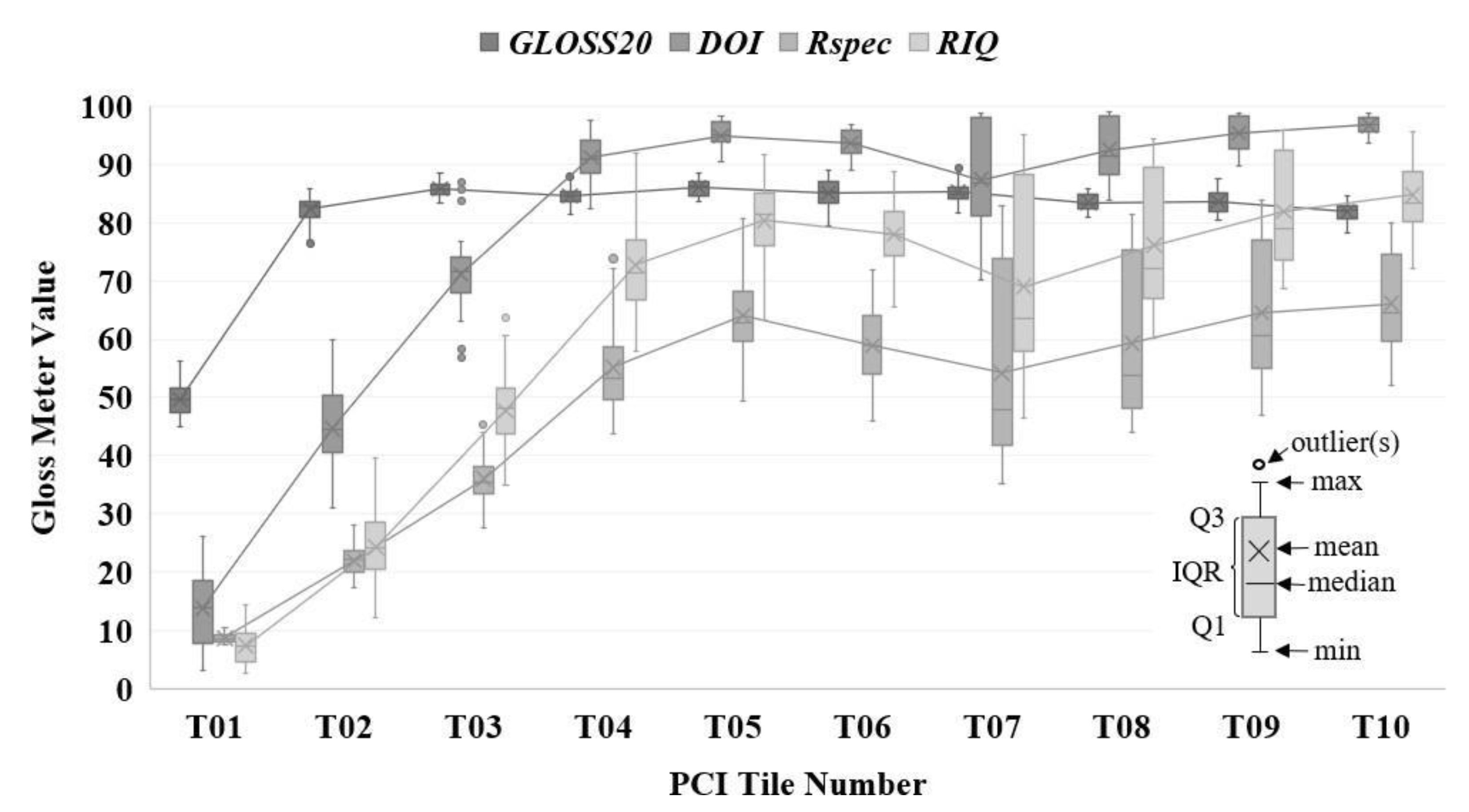

2.2.1. Measured Gloss Metric Values

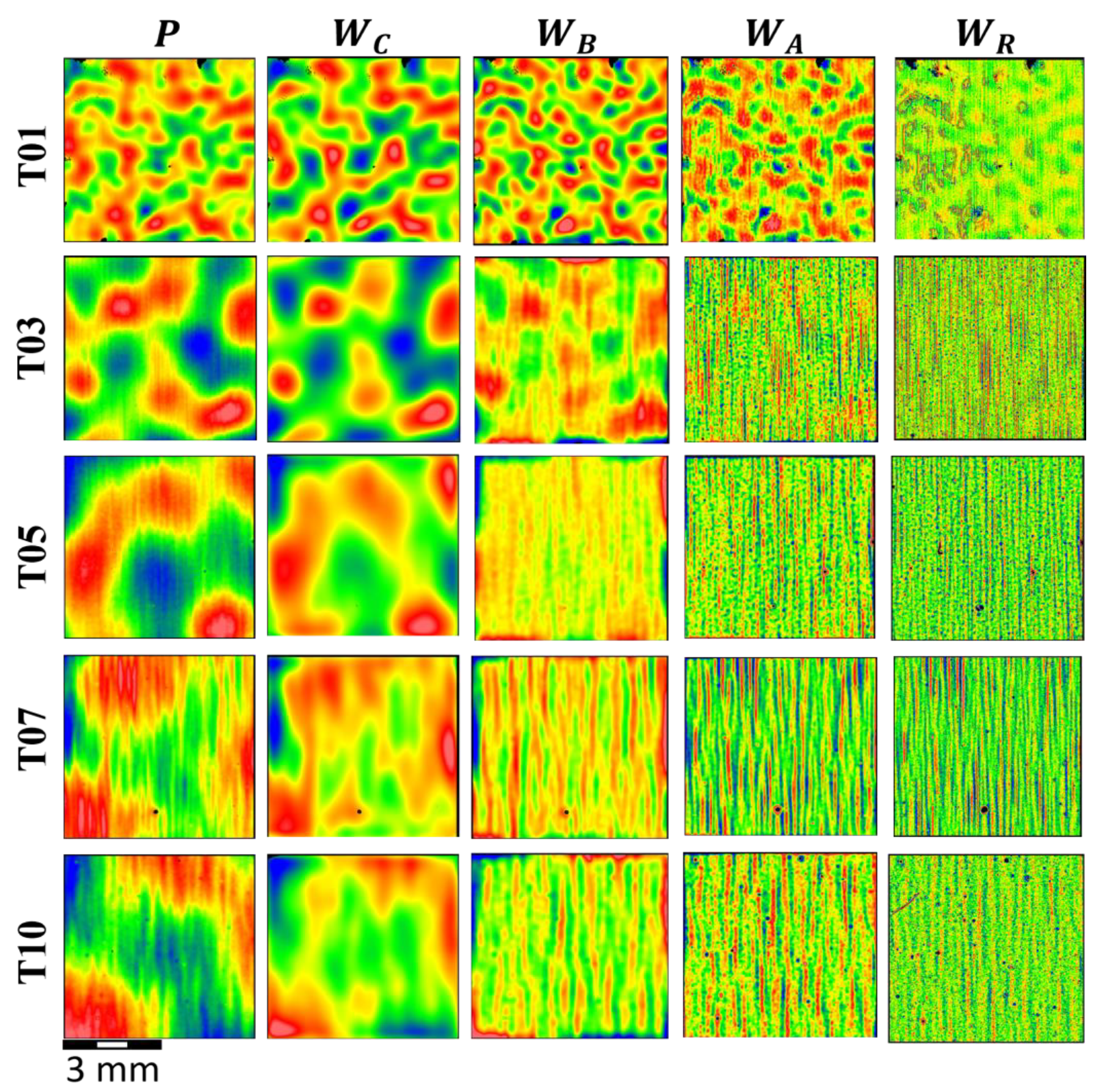

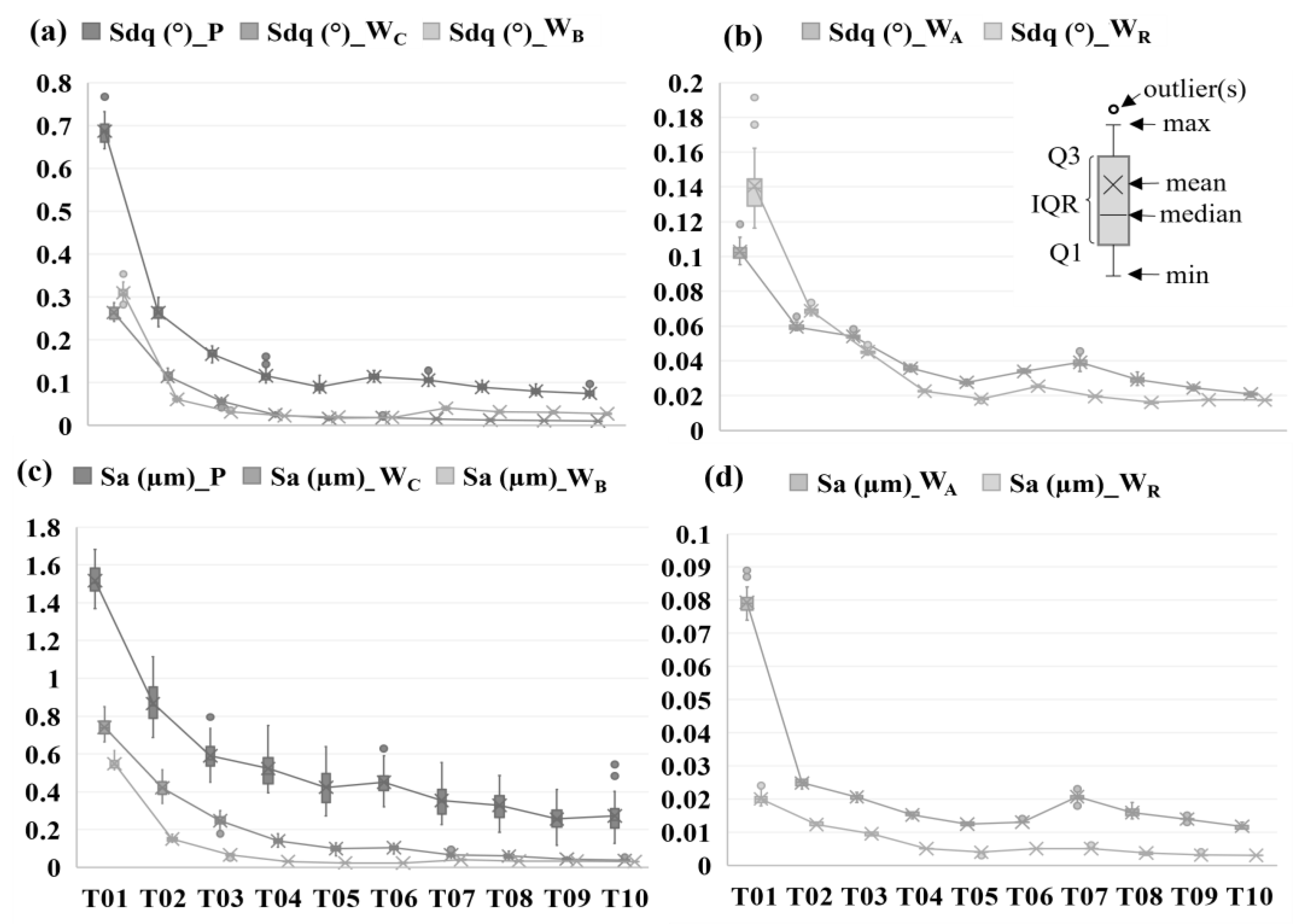

2.2.2. Measured CSI Data and the Power Spectral Density Curves

2.3. Dataset Generation

3. Parameter Selection and Classification Methodology

4. Results

4.1. Summary of d′ Matrix

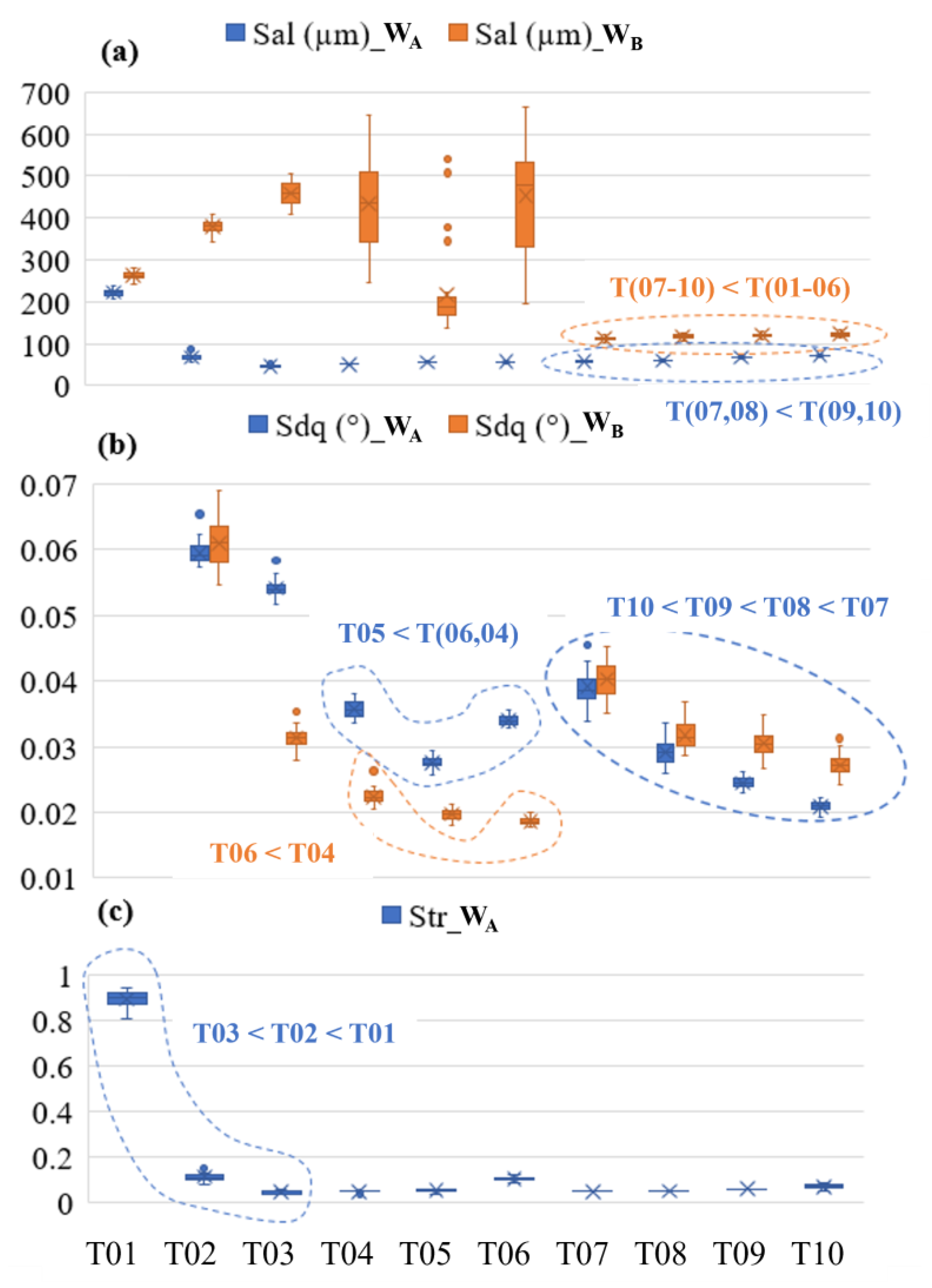
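
As a rough illustration of what a pairwise d′ matrix contains, the sketch below computes a discriminability index for every pair of classes for a single parameter. It assumes the common signal-detection definition, d′ = |μ₁ − μ₂| / sqrt((σ₁² + σ₂²)/2); the exact formulation used for the matrix summarized here may differ. The function names and the example values are hypothetical.

```python
import math
from statistics import mean, variance


def d_prime(a, b):
    """Discriminability of two samples (assumed definition: equal-weight pooled variance)."""
    pooled_sd = math.sqrt((variance(a) + variance(b)) / 2.0)
    return abs(mean(a) - mean(b)) / pooled_sd


def d_prime_matrix(groups):
    """Return {(class_i, class_j): d'} for every unordered pair of classes."""
    names = list(groups)
    return {
        (p, q): d_prime(groups[p], groups[q])
        for i, p in enumerate(names)
        for q in names[i + 1:]
    }


# Hypothetical values of one texture parameter measured on three tiles.
example_groups = {
    "tile_1": [1.0, 1.2, 0.8],
    "tile_2": [2.0, 2.2, 1.8],
    "tile_3": [2.1, 2.3, 1.9],
}
example_matrix = d_prime_matrix(example_groups)
```

A large d′ for a pair of tiles suggests that the parameter separates them well; a value near zero suggests that it does not.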

4.2. Identified Parameters and Classification Performance

4.3. Interpretation of Classification Criteria

5. Summary and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Shi, Y.; Jiang, Z.; Cao, J.; Ehmann, K.F. Texturing of metallic surfaces for superhydrophobicity by water jet guided laser micro-machining. Appl. Surf. Sci. 2020, 500, 144286.

2. Nakae, H.; Inui, R.; Hirata, Y.; Saito, H. Effects of surface roughness on wettability. Acta Mater. 1998, 46, 2313–2318.

3. Kubiak, K.J.; Wilson, M.C.; Mathia, T.G.; Carval, P. Wettability versus roughness of engineering surfaces. Wear 2011, 271, 523–528.

4. Arumugam, K.; Smith, S.T.; Her, T.-H. Limitations caused by rough surfaces when used as the mirror in displacement measurement interferometry using a microchip laser source. In Proceedings of the American Society of Precision Engineering 33rd Annual Meeting, Las Vegas, NV, USA, 4–9 November 2018.

5. Vessot, K.; Messier, P.; Hyde, J.M.; Brown, C.A. Correlation between gloss reflectance and surface texture in photographic paper. Scanning 2015, 37, 204–217.

6. Hilbert, L.R.; Bagge-Ravn, D.; Kold, J.; Gram, L. Influence of surface roughness of stainless steel on microbial adhesion and corrosion resistance. Int. Biodeterior. Biodegrad. 2003, 52, 175–185.

7. Grzesik, W. Prediction of the functional performance of machined components based on surface topography: State of the art. J. Mater. Eng. Perform. 2016, 25, 4460–4468.

8. Gockel, J.; Sheridan, L.; Koerper, B.; Whip, B. The influence of additive manufacturing processing parameters on surface roughness and fatigue life. Int. J. Fatigue 2019, 124, 380–388.

9. Mandloi, K.; Allen, A.; Cherukuri, H.; Miller, J.; Duttrer, B.; Raquet, J. CFD and experimental investigation of AM surfaces with different build orientations. Surf. Topogr. Metrol. Prop. 2023, 11, 034001.

10. Fox, J.C.; Evans, C.; Mandloi, K. Characterization of laser powder bed fusion surfaces for heat transfer applications. CIRP Ann. 2021, 70, 467–470.

11. Mullany, B.; Savio, E.; Haitjema, H.; Leach, R. The implication and evaluation of geometrical imperfections on manufactured surfaces. CIRP Ann. 2022, 71, 717–739.

12. Hekkert, P. Design aesthetics: Principles of pleasure in design. Psychol. Sci. 2006, 48, 157.

13. Yanagisawa, H.; Takatsuji, K. Effects of visual expectation on perceived tactile perception: An evaluation method of surface texture with expectation effect. Int. J. Des. 2015, 9, 39–51.

14. Tymms, C.; Zorin, D.; Gardner, E.P. Tactile perception of the roughness of 3D-printed textures. J. Neurophysiol. 2018, 119, 862–876.

15. Stylidis, K.; Wickman, C.; Söderberg, R. Defining perceived quality in the automotive industry: An engineering approach. Procedia CIRP 2015, 36, 165–170.

16. Simonot, L.; Elias, M. Color change due to surface state modification. Color Res. Appl. 2003, 28, 45–49.

17. Dalal, E.N.; Natale-Hoffman, K.M. The effect of gloss on color. Color Res. Appl. 1999, 24, 369–376.

18. Briones, V.; Aguilera, J.M.; Brown, C. Effect of surface topography on color and gloss of chocolate samples. J. Food Eng. 2006, 77, 776–783.

19. Jiang, X.J.; Whitehouse, D.J. Technological shifts in surface metrology. CIRP Ann. 2012, 61, 815–836.

20. Whitehouse, D.J. Handbook of Surface and Nanometrology; Taylor & Francis: New York, NY, USA, 2002.

21. Redford, J.; Mullany, B. Construction of a multi-class discrimination matrix and systematic selection of areal texture parameters for quantitative surface and defect classification. J. Manuf. Syst. 2023, 71, 131–143.

22. Brown, C.A.; Hansen, H.N.; Jiang, X.J.; Blateyron, F.; Berglund, J.; Senin, N.; Bartkowiak, T.; Dixon, B.; Le Goic, G.; Quinsat, Y.; et al. Multiscale analyses and characterizations of surface topographies. CIRP Ann. 2018, 67, 839–862.

23. Das, J.; Linke, B. Evaluation and systematic selection of significant multi-scale surface roughness parameters (SRPs) as process monitoring index. J. Mater. Process. Technol. 2017, 244, 157–165.

24. Hunter, R.S. Methods of determining gloss. NBS Res. Pap. RP 1937, 18, 958.

25. ISO 2813:2014; Paints and Varnishes—Determination of Gloss Value at 20°, 60° and 85°. International Organization for Standardization: Geneva, Switzerland, 2014. Available online: https://www.iso.org/standard/56807.html (accessed on 1 January 2024).

26. ASTM D523; Standard Test Method for Specular Gloss. ASTM International: West Conshohocken, PA, USA, 2018. Available online: https://www.astm.org/d0523-14r18.html (accessed on 1 January 2024).

27. ASTM E284-17; Standard Terminology of Appearance. ASTM International: West Conshohocken, PA, USA, 2017. Available online: https://cdn.standards.iteh.ai/samples/97708/7e9a7cafb4bb432f9458a1eeff471dcd/ASTM-E284-17.pdf (accessed on 1 January 2024).

28. Weber, C.F.; Spiehl, D.; Dörsam, E. Comparing measurement principles of three gloss meters and using them for measuring gloss on metallic embellishments produced by the printing industry. Lux Jr. 2021, 15, 327–341.

29. Rhopoint Instruments. Rhopoint IQ Goniophotometer Datasheet. 2023. Available online: https://www.rhopointamericas.com/download/rhopoint-iq-goniophotometer-datasheet-english/ (accessed on 1 January 2024).

30. Leach, R. (Ed.) Optical Measurement of Surface Topography; Springer: Berlin/Heidelberg, Germany, 2011.

31. Conroy, M.; Armstrong, J. A comparison of surface metrology techniques. J. Phys. Conf. Ser. 2005, 13, 458.

32. ISO 25178-2; Geometrical Product Specifications (GPS)—Surface Texture: Areal—Part 2: Terms, Definitions and Surface Texture Parameters. ISO: Geneva, Switzerland, 2021. Available online: https://www.iso.org/obp/ui/en/#!iso:std:74591:en (accessed on 1 January 2024).

33. Todhunter, L.D.; Leach, R.K.; Lawes, S.D.; Blateyron, F. Industrial survey of ISO surface texture parameters. CIRP J. Manuf. Sci. Technol. 2017, 19, 84–92.

34. Gadelmawla, E.S.; Koura, M.M.; Maksoud, T.M.; Elewa, I.M.; Soliman, H.H. Roughness parameters. J. Mater. Process. Technol. 2002, 123, 133–145.

35. Whitehouse, D.J. The parameter rash—Is there a cure? Wear 1982, 83, 75–78.

36. ASTM D3451-06; Standard Guide for Testing Coating Powders and Powder Coatings. ASTM International: West Conshohocken, PA, USA, 2006. Available online: https://compass.astm.org/document/?contentCode=ASTM%7CD3451-06%7Cen-US&proxycl=https%3A%2F%2Fsecure.astm.org&fromLogin=true (accessed on 1 January 2024).

37. John Deere. Appearance Standard JDHZ610. Available online: https://studylib.net/doc/25779977/610 (accessed on 9 November 2023).

38. Muralikrishnan, B.; Raja, J. Computational Surface and Roundness Metrology; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2008.

39. Michigan Metrology, LLC. Multiscale Surface Texture Analysis in Action. Available online: https://michmet.com/multiscale-analysis-in-action/ (accessed on 9 November 2023).

40. BYK Instruments. Appearance of a Class A Surface: Orange Peel and Brilliance. Available online: https://www.byk-instruments.com/en/t/knowledge/Orange-Peel-Paint (accessed on 9 November 2023).

41. DigitalMetrology. Bandify Multi-Band Surface Texture Analysis. Available online: https://digitalmetrology.com/solution/bandify/ (accessed on 9 November 2023).

42. Zygo. Mx™ Surface Texture Parameters. Available online: https://www.zygo.com/insights/blog-posts/-/media/ccf08d090bbe4c8eb98c1292e17d1d65.ashx?la=en&revision=662471a5-53c8-4839-beaa-8c00f88e6238 (accessed on 9 November 2023).

43. Blateyron, F. The areal field parameters. In Characterisation of Areal Surface Texture; Springer: Berlin/Heidelberg, Germany, 2013; pp. 15–43.

44. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. sklearn.model_selection.StratifiedShuffleSplit. Available online: http://scikit-learn.org/stable/modules/generated/sklearn.model_selection.StratifiedShuffleSplit.html (accessed on 9 November 2023).

| Instrument | Zygo NexView | Rho-Point IQ |
|---|---|---|
| Objective | 2.75× | n/a |
| Tube Lens | 0.5× | n/a |
| Field of View (FOV) | 6.05 mm × 6.05 mm | 6.04 mm × 6 mm; 6 mm × 12 mm; 4 mm × 44 mm |
| Sampling Interval | 5.9 µm | n/a |
| Sampling Area | 30.25 mm × 30.25 mm | 50.0 mm × 50.0 mm |
| Number of Measurements | 36/Sample | 30/Sample |
| Metrics | Sa, Sq, Ssk, Sku, Sp, Sv, Sz, Sdq, Sdr, Sal, Str, Std, Sk, Spk, Smr1, Smr2, Svk, Vmc, Vmp, Vvc, Vvv, Sxp | Gloss20, Gloss60, Gloss85, Haze, LogHaze, DOI, RIQ, Rspec |
| References | [32,42] | [27,29] |
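
The CSI settings in the table can be cross-checked with a few lines of arithmetic. The sketch below takes the objective, tube lens, field of view, and sampling interval from the table. The 18.0 µm low-pass cutoff comes from the dataset filter settings. The variable names are illustrative, and treating system magnification as the product of the objective and tube lens magnifications is the usual convention, not something stated here.

```python
# Values taken from the instrument table (Zygo NexView column).
objective_mag = 2.75
tube_lens_mag = 0.5
fov_mm = 6.05
sampling_interval_um = 5.9

# Shortest low-pass cutoff listed in the dataset filter settings.
low_pass_cutoff_um = 18.0

# Overall optical magnification (assumed: objective x tube lens).
system_mag = objective_mag * tube_lens_mag

# Approximate number of lateral samples across one side of the field of view.
pixels_per_side = fov_mm * 1000.0 / sampling_interval_um

# Shortest spatial wavelength the sampling grid can represent (Nyquist limit).
nyquist_wavelength_um = 2.0 * sampling_interval_um

print(f"system magnification: {system_mag:.3f}x")
print(f"pixels per side: {pixels_per_side:.0f}")
print(f"Nyquist wavelength: {nyquist_wavelength_um:.1f} um "
      f"(low-pass cutoff: {low_pass_cutoff_um:.1f} um)")
```

Under these assumptions the 18.0 µm low-pass cutoff sits above the sampling-limited wavelength, so the filtered data are not limited by the pixel grid.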

| Dataset | Low-Pass | Bandpass | Form Removal | Spike Removal | Edge Clipping |
|---|---|---|---|---|---|
| P | 18.0 µm | - | Best-fit plane | 6σ from mean plane | No |
| WC | 18.0 µm | 1.0 mm–3.0 mm | Best-fit plane | 6σ from mean plane | 24 pixels |
| WB | 18.0 µm | 0.3 mm–1.0 mm | Best-fit plane | 6σ from mean plane | 24 pixels |
| WA | 18.0 µm | 0.1 mm–0.3 mm | Best-fit plane | 6σ from mean plane | 24 pixels |
| WR | 18.0 µm | 0.018 mm–0.1 mm | Best-fit plane | 6σ from mean plane | 24 pixels |
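
To make the band definitions concrete, the sketch below evaluates how strongly each band would pass a given spatial wavelength. It assumes Gaussian filters with 50% amplitude transmission at the cutoff (as in ISO 16610-style filtering) and a bandpass formed as the difference of two low-pass filters. The table does not name the filter type, so both choices are assumptions, as are the function and variable names.

```python
import math

# Bandpass limits in mm, copied from the dataset table (short cutoff, long cutoff).
BANDS_MM = {
    "WC": (1.0, 3.0),
    "WB": (0.3, 1.0),
    "WA": (0.1, 0.3),
    "WR": (0.018, 0.1),
}


def gaussian_low_pass(wavelength_mm, cutoff_mm):
    """Amplitude transmission of an assumed Gaussian low-pass filter.

    With alpha = sqrt(ln 2 / pi), exp(-pi * (alpha * cutoff / wavelength)**2)
    simplifies to 2 ** -((cutoff / wavelength) ** 2), i.e. 0.5 at the cutoff.
    """
    return 2.0 ** (-((cutoff_mm / wavelength_mm) ** 2))


def band_pass(wavelength_mm, short_mm, long_mm):
    """Assumed bandpass: low-pass at the short cutoff minus low-pass at the long cutoff."""
    return gaussian_low_pass(wavelength_mm, short_mm) - gaussian_low_pass(wavelength_mm, long_mm)


# Transmission at the geometric centre of each band.
centre_transmission = {
    name: band_pass(math.sqrt(short * long), short, long)
    for name, (short, long) in BANDS_MM.items()
}

# Width removed from each edge by the 24-pixel clip at a 5.9 um sampling interval.
edge_clip_mm = 24 * 5.9e-3

for name, value in centre_transmission.items():
    print(f"{name}: transmission at band centre = {value:.2f}")
print(f"edge clip per side: {edge_clip_mm:.4f} mm")
```

Because adjacent bands share cutoffs (for example, 0.3 mm closes WA and opens WB), the bands tile the range from 0.018 mm to 3.0 mm without gaps under this filter model.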

| Dataset | # Input Features | Selected Features | Test Accuracy * Stratified 5-Fold Shuffle Split, Training/Testing 0.1/0.9 | Test Accuracy * Stratified 5-Fold Shuffle Split, Training/Testing 0.5/0.5 | Test Accuracy * Stratified 5-Fold Shuffle Split, Training/Testing 0.9/0.1 |
|---|---|---|---|---|---|
| Gloss | 8 | Gloss20, Gloss60, Gloss85, Haze, LogHaze, DOI, RIQ, Rspec | 0.58 ± 0.045 | 0.74 ± 0.013 | 0.75 ± 0.063 |
| P | 22 | Sv, Sp, Sdq, Std, Sz | 0.56 ± 0.04 | 0.65 ± 0.025 | 0.65 ± 0.075 |
| WC | 22 | Sz, Vmc, Sa, Vvc, Sv, Sq, Sdq | 0.61 ± 0.063 | 0.68 ± 0.013 | 0.73 ± 0.025 |
| WB | 22 | Sp, Vmc, Sdq, Sal, Sdr, Str, Sz, Sk | 0.78 ± 0.02 | 0.81 ± 0.02 | 0.82 ± 0.02 |
| WA | 22 | Sz, Vvv, Vmp, Sp, Sal, Sa, Vvc, Sv, Sdq, Sdr | 0.91 ± 0.04 | 0.97 ± 0.01 | 1.00 ± 0.00 |
| WR | 22 | Spk, Sdq, Sdr, Sal, Vvv, Sxp, Str, Svk, Vvc, Sk, Vmp | 0.91 ± 0.02 | 0.98 ± 0.02 | 0.99 ± 0.01 |
| P + WC + WB + WA + WR | 88 | Sdq (WB), Sal (WB), Str (WA), Sdq (WA), Sal (WA) | 0.91 ± 0.02 | 0.99 ± 0.01 | 0.99 ± 0.01 |
| ** Number of Samples (Training/Testing) | | | 40/320 | 180/180 | 320/40 |
| ** Number of Samples per Tile (Training/Testing) | | | 4/32 | 18/18 | 32/4 |
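
The per-tile sample counts in the table follow from splitting each tile's measurements separately. The sketch below is a plain-Python stand-in for a stratified shuffle split, not the scikit-learn `StratifiedShuffleSplit` class cited in the references. The layout of 10 tiles with 36 measurements each is inferred from the table's 360 total samples, and rounding the training count within each tile is an assumption chosen because it reproduces the listed counts. The function name is hypothetical.

```python
import random
from collections import defaultdict


def stratified_shuffle_split(labels, train_fraction, n_splits=5, seed=0):
    """Yield (train_indices, test_indices), shuffling and splitting within each class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    for _ in range(n_splits):
        train, test = [], []
        for members in by_class.values():
            shuffled = members[:]
            rng.shuffle(shuffled)
            # Assumed rounding rule: nearest integer per class (e.g. 3.6 -> 4 of 36).
            n_train = round(train_fraction * len(shuffled))
            train.extend(shuffled[:n_train])
            test.extend(shuffled[n_train:])
        yield train, test


# Assumed layout: 10 tiles (classes), 36 measurements per tile.
labels = [tile for tile in range(10) for _ in range(36)]

split_sizes = {}
for fraction in (0.1, 0.5, 0.9):
    train, test = next(stratified_shuffle_split(labels, fraction))
    split_sizes[fraction] = (len(train), len(test))

print(split_sizes)
```

Splitting within each tile keeps every class equally represented in the training and test sets, which matters most for the 0.1/0.9 split where only four measurements per tile are available for training.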


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Redford, J.; Mullany, B. Classification of Visual Smoothness Standards Using Multi-Scale Areal Texture Parameters and Low-Magnification Coherence Scanning Interferometry. Materials 2024, 17, 1653. https://doi.org/10.3390/ma17071653
