Abstract

Forest fires are among the most critical natural tragedies threatening forest lands and resources. The accurate and early detection of forest fires is essential to reduce losses and improve firefighting. Conventional firefighting techniques, based on ground inspection and limited by the field-of-view, lead to insufficient monitoring capabilities for large areas. Recently, due to their excellent flexibility and ability to cover large regions, unmanned aerial vehicles (UAVs) have been used to combat forest fire incidents. An essential step for an autonomous system that monitors fire situations is first to locate the fire in a video. State-of-the-art forest-fire segmentation methods based on vision transformers (ViTs) and convolutional neural networks (CNNs) use a single aerial image. Nevertheless, fire has an inconsistent scale and form, and small fires from long-distance cameras lack salient features, so accurate fire segmentation from a single image has been challenging. In addition, the techniques based on CNNs treat all image pixels equally and overlook global information, limiting their performance, while ViT-based methods suffer from high computational overhead. To address these issues, we proposed a spatiotemporal architecture called FFS-UNet, which exploited temporal information for forest-fire segmentation by combining a transformer into a modified lightweight UNet model. First, we extracted a keyframe and two reference frames using three different encoder paths in parallel to obtain shallow features and perform feature fusion. Then, we used a transformer to perform deep temporal-feature extraction, which enhanced the feature learning of the fire pixels and made the feature extraction more robust. Finally, we combined the shallow features of the keyframe for de-convolution in the decoder path via skip-connections to segment the fire. We evaluated empirical outcomes on the UAV-collected video and Corsican Fire datasets. 
The proposed FFS-UNet demonstrated enhanced performance with fewer parameters by achieving an F1-score of 95.1% and an IoU of 86.8% on the UAV-collected video, and an F1-score of 91.4% and an IoU of 84.8% on the Corsican Fire dataset, which were higher than previous forest fire techniques. Therefore, the suggested FFS-UNet model effectively resolved fire-monitoring issues with UAVs.

1. Introduction

For humans, forests provide a variety of resources and play an essential role in daily life. Forests are also considered our planet’s lungs; they filter the air by adding oxygen (O₂) and lowering carbon dioxide (CO₂) levels. Additionally, they purify water of most pollution-causing agents. Over the last few years, forest fires have become a recurring natural tragedy for forest lands and wild habitats, severely affecting the ecosystem. In 2018, a forest fire in California destroyed over 150,000 acres, leaving 85 people dead and about 1000 people missing [1]. Wildfire outbreaks occurred in eastern Australia, in New South Wales and Victoria, in September 2019. The fires continued for over five months, destroying 5.8 million hectares of forest and killing nearly 3 billion animals and at least 23 people [2]. According to statistics from the European Forest Fire Information System [3,4,5], fires destroyed 4260 hectares of forest land in Spain, more than 150,000 in Italy, and 93,600 in Greece in 2021. These occurrences resulted in the death of people and animals and significant damage to the environmental and financial infrastructures. Hence, minimizing the time needed to accurately locate a wildfire after ignition and to alert the relevant agencies and officers is vital. Doing so can help extinguish fires quickly and reduce, or even avoid altogether, the loss of forest resources.

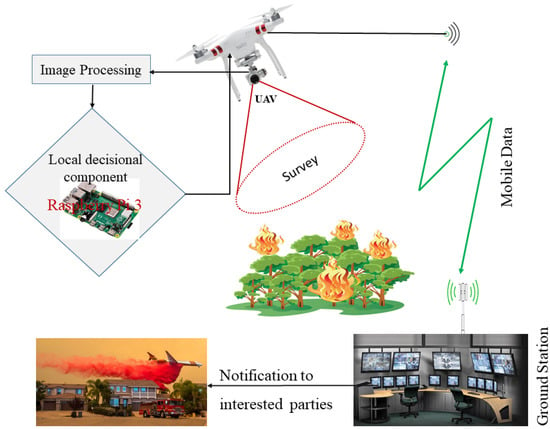

Numerous conventional approaches have been used to detect and control forest fires, such as human-operated watchtowers, patrolling helicopters, and remote-sensing satellites for forest surveillance [6,7]. Watchtowers are equipped with multiple wireless sensors in the forest, such as temperature, gas, smoke, and optical sensors, to identify fires. These detectors have limitations, such as a restricted field-of-view, delayed responses, and high manufacturing costs. Human-operated helicopters can be costly if detection activities are carried out often and can be easily affected by unfavorable conditions. Remote-sensing satellite methods provide a broad picture but demand enormous resources and offer low spatial and temporal image resolution, making it hard to ensure quality monitoring in the early stages. In recent years, application scenarios for UAV surveillance have included traffic monitoring, wildlife monitoring, and radio surveillance [8]. UAVs collect real-time images with flexible mobility and variable views and are able to enter dangerous areas and communicate in heterogeneous environments [9]. Furthermore, UAVs have been explored for detecting forest fires in aerial images [10,11,12,13]. However, recognizing forest fires using aerial imagery is challenging due to the fires’ various shapes, sizes, and spectral overlaps.

Image-based fire-detection algorithms primarily depend on one of two aspects: (1) shallow features; or (2) automatic feature extraction using deep neural networks. Traditional UAV-based forest fire detection techniques utilize distinguishable shallow features such as color, form, texture, and motion features. For instance, in [14], Marbach et al. employed the YUV color space and motion features to determine whether an image contained a fire region. Celik et al. [15] introduced a background subtraction method for segmenting fire candidate pixels and a generalized statistical model for enhanced fire-pixel detection. De et al. [10] utilized a rule-based color model that used RGB and YCbCr color spaces, allowing the detection of multiple fires simultaneously. In addition, they created a geolocation-based algorithm to estimate the fire location in terms of latitude, longitude, and altitude. Sudhakar et al. [11] proposed a rule-based technique that included color-code identification and motion information. Despite achieving some progress in forest fire detection, these techniques have been characterized by complex processes, low accuracy in area detection, and difficulties in quantifying the scales and changes of forest fires.

Recently, deep-learning methods have become the preferred approach for data-driven learning in various applications, such as disease classification [16], behavior recognition [17], and vehicle detection [18]. These methods have enabled automatic feature extraction using convolutional neural networks (CNNs). Similarly, in the field of forest fire detection, deep-learning-based techniques have also been evolving, including classification-based [12,19,20,21] and segmentation-based [22,23,24] methods using aerial images. Detecting fires using only image cues has resulted in false alarms since objects such as artificial light, paint, sunsets, clouds, and lamps have been mistaken for fires. Very few studies have investigated the detection and localization of forest fires using UAV videos. Nguyen et al. [25] proposed a multi-stage fire detection method that combined CNN and LSTM networks for video sequences. Cao et al. [26] introduced a bidirectional LSTM to extract discriminative features by patching specific frames together with a distinct focus on neighboring patches in a frame sequence. However, their model had a high computational cost and suffered from vanishing gradients. Therefore, there is a need for an effective emergency response system to avoid and control fire disasters. We devised a model that segments fires from UAV video in the context of an IoT application (Figure 1), with lower computational cost and higher accuracy.

Figure 1.

A conceptual model for early forest fire detection.

This study proposed FFS-UNet, which had a three-branch encoder path that exploited temporal smoothness by combining a transformer with a UNet for fire segmentation in videos. In the FFS-UNet architecture, the transformer processed tokenized image patches from the multi-branch CNN’s feature map to extract rich global associations between the frames. Multi-headed self-attention was implemented in a transformer block [27] with the help of depth-wise convolution, which reduced memory costs while maintaining diverse capabilities. Instead of the traditional approach in [28], the authors of [27] modified the patch-embedding by performing a stack of overlapping convolutional operations on a two-dimensional rearranged token map. The decoder combined global and local semantic information to recover the full spatial resolution of the feature map with precise fire localization by up-sampling the encoded features and fusing them with the multi-scale features of the encoder at the corresponding level through skip connections. In addition, we presented a combined loss function to reduce the impact of class imbalance. As a result, FFS-UNet received rich context correlations along with critical shallow-feature information, which enhanced the model’s performance and reduced its sensitivity to environmental interference.

To summarize, our main contributions were the following:

- To benefit from the temporal correlation in UAV video semantic segmentation, the approaches currently in use rely upon the ConvLSTM/optical flow, which is a computational burden for edge devices. Therefore, we proposed a new encoding path using a transformer and UNet, which consisted of three parallel branches for extracting temporal and spatial features for localizing fire from video. Through the UNet structure with parallel streams, the proposed model achieved a full aerial view by combining late-fusion techniques.

- We investigated the significance of our modeling structure through a series of ablation studies and illustrated the efficacy of our network for UAV video-based fire segmentation on the collected datasets. The experimental outcomes showed that the suggested model enhanced performance, as compared to other techniques.

- Finally, we examined the robustness of the trained network’s fire segmentation against interference.

This paper is organized as follows. Section 2 discusses related work and highlights representative studies closely related to our research. We explain our approach in Section 3, describe the dataset and experiments and present the results in Section 4, and provide a discussion and prospects for future research in Section 5, before arriving at our conclusions in Section 6.

2. Related Work

In this section, we present preliminary research directions and the latest advancements towards advancing the state-of-the-art. Additionally, we provide a brief introduction to CNNs and self-attention mechanisms.

2.1. Shallow Models for Fire Detection

Early vision-based fire recognition techniques were primarily based on color. For instance, in [29], Chen et al. used the red, green, and blue (RGB) color space to identify the area that corresponded to the fire. They applied decision rules based on the saturation and intensity weight of the red color feature. In addition, the growth dynamic and disorder of the conflagration were demonstrated to indicate the occurrence of a fire. Toreyin et al. [30] detected fire flicker by analyzing the wavelet domain. Wavelet transforms identified quasi-periodic forms at the boundaries of the flame and color variations in flame regions. In [31], Zhang et al. integrated Fourier and wavelet transforms to identify forest fires. In [32], Chino et al. introduced a method for detecting fire in still images that combined color features with texture features computed on super-pixel areas. These methods relied on numerous manually defined thresholds, which necessitated the selection of appropriate parameters for diverse environments. The reliance on such parameters limited their application in real-world scenarios.

In addition, fire-detection algorithms based on machine-learning approaches have gained popularity. For instance, Ko et al. [33] first extracted fire features such as frame differences, local luminance variation, and color characteristics. Then, a two-class support vector machine (SVM) classifier with a radial basis function kernel was used to determine fire. In [34], Chenebert et al. utilized a decision tree and a shallow network to verify fire pixels in images based on combined color and texture features. Liang et al. [35] proposed a hybrid method for detecting fire based on a back-propagation neural network and random-forest feature selection. Duong et al. [36] presented a Bayes classifier that focused on the transitions in the statistical characteristics of the image. In [37], Gao et al. used an improved artificial fish-swarm algorithm with twin SVMs for fire recognition. These machine-learning-based approaches require manually designed feature extraction, which is time-consuming and requires domain expertise.

2.2. Deep-Learning Models for Forest Fire Detection with UAVs

In recent years, deep learning has become the preferred choice over traditional machine learning because of its ability to handle complex tasks with high accuracy and its flexibility in working with a wide range of data types. Convolutional neural networks (CNNs) are used in deep-learning-based algorithms to automatically extract high-level fire features. In [19], Zhang et al. presented a deep CNN technique for forest fire detection that operated in two stages: first, the full UAV image was classified, and subsequently, if a fire was found, a fine-grained patch classifier was employed to pinpoint its exact location. In [20], Lee et al. classified pictures taken by UAVs into fire and non-fire categories using different CNN structures, such as VGG13, GoogLeNet, and AlexNet. In [12], Chen et al. presented a CNN-based alarm system for the early phase of a forest fire by employing a hexacopter with a Sony A7 optical camera. They applied pre-processing techniques, such as non-linear filters and histogram equalization, before implementing a CNN model, to minimize the noise and improve the data quality. However, they used a synthetic dataset for training and testing, which did not match real-world circumstances. Novac et al. [21] built a low-cost system using deep CNNs to detect wildfire sizes. They evaluated video streams at 19.2 FPS with an accuracy of 60.76 to determine the size and location of the fire. Zhang et al. [13] exploited the ResNet50 model, based on transfer learning, to improve fire detection performance in UAV-collected images. They evaluated the use of the Mish activation function and the focal loss function to optimize the network. In [38], Li et al. proposed a method based on a modified ShuffleNetv2 for real-time forest fire image recognition. In [39], Namburu et al. extended MobileNet (X-MobileNet) to detect wildfires. As compared to shallow approaches, the performance of fire detection significantly increased with CNNs.
However, these methods only provided information about whether or not a fire was present in an image or video frame, and they could not provide information about the precise location or the extent of a fire. This limitation reduces their usefulness for locating and tracking a fire, for example in firefighting and wildfire-monitoring applications. Additionally, fire classification methods can be less accurate, particularly in cases where the fire is small or partially obscured, and may be more prone to false-positives and false-negatives, as they rely on classifying entire frames as either fire or non-fire, which can be affected by factors such as lighting conditions, smoke, and other visual distractions.

Comparatively, segmentation methods have been more precise when identifying the specific pixels that belonged to a fire, which assisted in reducing the risk of false detection. In [40], Barmpoutis et al. developed a remote-sensing system that used RGB images captured by a UAV to detect smoke and fire in a 360-degree coverage area. They utilized two deep-learning methods, DeepLabV3 and atrous spatial pyramid pooling, to locate flame and smoke regions. They then applied an adaptive post-validation technique to reject regions that merely resembled smoke and flames, thereby eliminating false-positive results. Shamsoshoara et al. [22] developed a method based on a UNet encoder–decoder structure to capture a fire region in UAV video frames. In [23], Harkat et al. created a DeepLabv3+ architecture for fire segmentation. They evaluated different backbone encoders with varying loss functions on various aerial images. Hossain et al. [41] investigated forest fire images captured by UAVs using new up-sampling techniques called compression–expansion transposed convolution and reversed depth-wise separable transposed convolution in the UNet architecture, with a specific focus on the decoder side. In [24], Ghali et al. considered vision transformers, such as the MedT and TransUNet networks, for segmenting UAV images into fire and non-fire classes. Muksimova et al. [42] presented a two-pathway encoder–decoder model based on depth-wise convolutions to detect and accurately segment wildfires and smoke from images captured using UAVs. They attempted to improve the segmentation accuracy by adopting an attention-gating mechanism and nested decoders that used pre-activated residual blocks.

2.3. UNet

Currently, UNet [43] is a widely used framework in many computer vision applications, most notably in image segmentation. Due to the hierarchical representation of feature maps, UNet obtains detailed multi-scaled contextual information. Additionally, it improves image reconstruction by utilizing skip connections between the contracting (encoder) and expanding (decoder) paths. Furthermore, it has different advanced versions, such as SegFormer [44], Swin-UNet [45], and TransUNet [46]. Therefore, a robust adaptive feature extractor backbone could be applied to the UNet architecture to enhance the model’s overall performance.

2.4. Convolution Operation

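Let the input feature map be $X \in \mathbb{R}^{C \times H \times W}$, where $C$, $H$, and $W$ denote its input channels, height, and width, respectively, and let the $C_o$ kernels of dimension $K \times K$ be expressed as $F \in \mathbb{R}^{C_o \times C \times K \times K}$. Here, every kernel $F_k \in \mathbb{R}^{C \times K \times K}$, $k = 1, \ldots, C_o$, holds one $K \times K$ slice $F_{k,c}$ per input channel. The kernel uses a sliding window to perform multiply-add operations on the given feature map to produce the output feature map $Y \in \mathbb{R}^{C_o \times H \times W}$, which is described as:

$$Y_{k,i,j} = \sum_{c=1}^{C} \sum_{(u,v) \in \Delta_K} F_{k,c,\,u+\lfloor K/2 \rfloor,\,v+\lfloor K/2 \rfloor}\; X_{c,\,i+u,\,j+v} \tag{1}$$

where $\Delta_K$ indicates the collection of offsets in the neighborhood taken into account when convolution is performed on the center pixel, and is given as

$$\Delta_K = \left[-\lfloor K/2 \rfloor, \ldots, \lfloor K/2 \rfloor\right] \times \left[-\lfloor K/2 \rfloor, \ldots, \lfloor K/2 \rfloor\right] \tag{2}$$

The presence of inter-channel redundancy inside the convolution kernel is well known. In addition, the lack of long-range spatial interactions within a single layer limits the flexibility of the convolution operation. In contrast to CNNs, self-attention mechanisms capture global dependencies by relating different pixel positions in an image.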
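The sliding-window multiply-add described in this subsection can be sketched in plain Python. This is only an illustrative sketch, not the implementation used in the paper: the function name `conv2d`, the nested-list layout (`[C][H][W]` for the input, `[C_o][C][K][K]` for the kernels), zero padding, and a stride of 1 are all assumptions made for the example.

```python
def conv2d(x, kernels):
    """Naive convolution with zero padding and stride 1 (assumed settings).

    x:       input feature map as nested lists, shape [C][H][W]
    kernels: filters as nested lists, shape [C_o][C][K][K], with K odd
    returns: output feature map as nested lists, shape [C_o][H][W]
    """
    C, H, W = len(x), len(x[0]), len(x[0][0])
    K = len(kernels[0][0])
    half = K // 2
    # Offsets of the K x K neighbourhood around the centre pixel.
    offsets = [(u, v) for u in range(-half, half + 1)
               for v in range(-half, half + 1)]
    out = []
    for f in kernels:  # one output channel per filter
        plane = [[0.0] * W for _ in range(H)]
        for i in range(H):
            for j in range(W):
                acc = 0.0
                for c in range(C):
                    for u, v in offsets:
                        ii, jj = i + u, j + v
                        if 0 <= ii < H and 0 <= jj < W:  # zero padding
                            acc += f[c][u + half][v + half] * x[c][ii][jj]
                plane[i][j] = acc
        out.append(plane)
    return out


# A 3 x 3 box filter applied to a single-channel 3 x 3 map of ones.
x = [[[1.0] * 3 for _ in range(3)]]
box = [[[[1.0] * 3 for _ in range(3)]]]
y = conv2d(x, box)
```

Each output value depends only on a fixed local window, which is the locality limitation that motivates the self-attention mechanism discussed next.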

2.5. Self Attention

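Given input $X \in \mathbb{R}^{N \times d}$, the generalized form of the softmax attention [47] can be written as:

$$Q = XW_Q, \quad K = XW_K, \quad V = XW_V, \qquad O_i = \frac{\sum_{j=1}^{N} \mathrm{sim}(Q_i, K_j)\, V_j}{\sum_{j=1}^{N} \mathrm{sim}(Q_i, K_j)} \tag{3}$$

where $W_Q$, $W_K$, and $W_V$ are the learnable projection matrices, $O_i$ represents the $i$-th row of matrix $O$, and $\mathrm{sim}(\cdot, \cdot)$ is the similarity function. When using $\mathrm{sim}(Q_i, K_j) = \exp\left(Q_i K_j^{\top} / \sqrt{d}\right)$, Equation (3) becomes the softmax attention.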
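As a rough illustration of attention with a pluggable similarity function, the following sketch computes each output row as a similarity-weighted average of the value rows. The names `attention` and `softmax_sim` are hypothetical, and the learnable projections are omitted: `Q`, `K`, and `V` are assumed to be already projected.

```python
import math


def attention(Q, K, V, sim):
    """Generalized attention: row i of the output is a sim-weighted mean of V."""
    out = []
    for q in Q:
        weights = [sim(q, k) for k in K]
        total = sum(weights)
        row = [sum(w * v[d] for w, v in zip(weights, V)) / total
               for d in range(len(V[0]))]
        out.append(row)
    return out


def softmax_sim(q, k):
    """exp(q . k / sqrt(d)); with this choice the result is softmax attention."""
    d = len(q)
    return math.exp(sum(a * b for a, b in zip(q, k)) / math.sqrt(d))


# Two tokens with two features each (toy values).
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
O = attention(Q, K, V, softmax_sim)
```

Because every query is compared with every key, each output row can draw on all positions at once, in contrast to the fixed local window of a convolution.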

3. Method

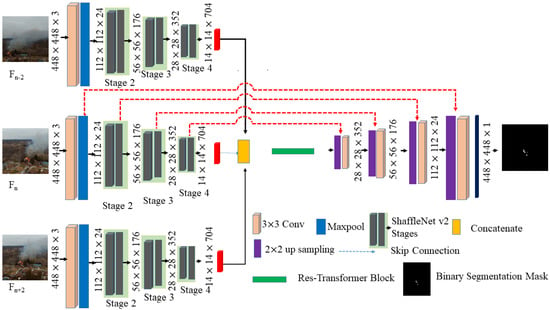

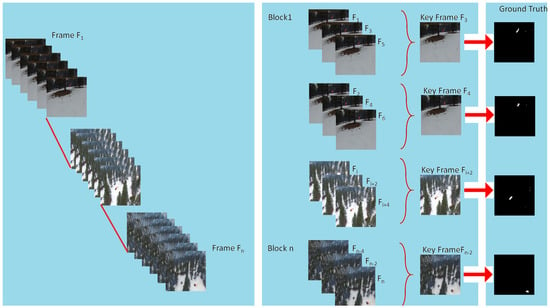

In this section, we discuss the FFS-UNet, which was based on the classic UNet [43] segmentation network, as shown in Figure 2. First, we extracted a keyframe and two reference frames using different encoder paths in parallel to obtain shallow features and perform the feature fusion. Then, we used a transformer to perform deep feature extraction, enhancing the feature learning of the fire pixels and making the feature extraction more robust. Finally, we combined the shallow features for de-convolution in the decoder path to segment the fire.

Figure 2.

The architecture of our proposed FFS-UNet network. It comprised two paths: the encoder path was accountable for extracting spatiotemporal features for understanding view transformation. At the same time, the decoder path was used to retrieve progressively more refined attributes by combining information from the peer-encoder path.

3.1. Encoder Path

In the encoder path of FFS-UNet, each UAV video frame had a spatial dimension of $H \times W$ and $C$ channels. First, we employed CNNs as feature extractors to capture deep feature maps from the three-frame set. Then, we concatenated these feature maps to obtain a deep context that was supplied to the multi-headed self-attention layers in the transformer. Before the CNN feature maps were fed into the multi-headed self-attention, patch-embedding was performed, which projected the feature map into a one-dimensional sequence of tokens.

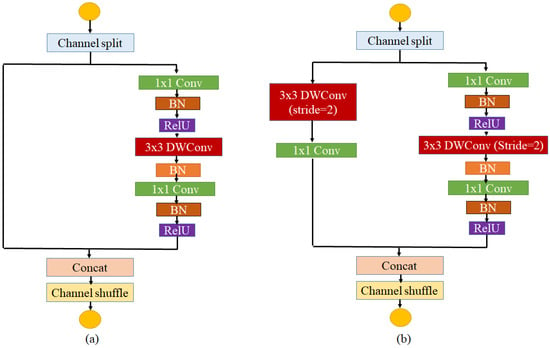

3.1.1. CNN Feature Extractor

In recent years, researchers have paid much attention to creating lightweight backbone networks for resource-constrained devices, such as for real-time fire segmentation with UAVs, which must have fewer parameters and perform fewer computations while retaining high precision. ShuffleNet v2 [48] is an efficient model that utilizes a grouping strategy to create a lightweight network. Therefore, we used ShuffleNet v2 in three parallel encoder paths to extract deep feature maps from the individual frames. As shown in Figure 3, the core operations of ShuffleNet v2 were Channel Split, Concat, and Channel Shuffle. The channel-split operation was used at the beginning and evenly divided the input feature map’s channel dimension into two parts, where the first passed through three convolutional layers and the second was combined with the output of the first through the concatenation operation. To ensure that feature information was shared between the two parts, Channel Shuffle was used to mix the output channels and promote the flow of feature information between them. Unlike the unit in Figure 3a, the down-sampling unit in Figure 3b did not include the channel-split operation; it increased the number of output channels and down-sampled the spatial dimension through depth-wise convolution with a stride of 2. Table 1 depicts the parts of the ShuffleNet v2 structure, where each stage comprised the fundamental units (a) and (b), as displayed in Figure 3.
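The channel-split, concatenation, and channel-shuffle steps can be illustrated on a plain list of channels. This is a simplified sketch, not the paper's code: it assumes the usual reshape-and-transpose form of channel shuffle with two groups, and the `branch` argument is a hypothetical stand-in for the three-convolution branch.

```python
def channel_split(channels):
    """Evenly divide the channel list into two halves."""
    half = len(channels) // 2
    return channels[:half], channels[half:]


def channel_shuffle(channels, groups=2):
    """Interleave channels drawn from `groups` equal-sized groups.

    Equivalent to reshaping to [groups][per_group], transposing, and flattening.
    """
    per_group = len(channels) // groups
    return [channels[g * per_group + i]
            for i in range(per_group) for g in range(groups)]


def basic_unit(channels, branch):
    """Split, transform one half, concatenate, then shuffle."""
    untouched, processed = channel_split(channels)
    processed = [branch(c) for c in processed]  # stand-in for the conv branch
    return channel_shuffle(untouched + processed)


# Toy example: four "channels" represented by numbers.
shuffled = channel_shuffle(["a0", "a1", "b0", "b1"])
unit_out = basic_unit([0, 1, 2, 3], lambda c: c * 10)
```

After the shuffle, channels from the untouched half and the processed half alternate, so the next unit's split sees a mixture of both.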

Figure 3.

ShuffleNet v2 basic units: (a) basic unit, (b) basic unit for spatial down-sampling.

Table 1.

Encoder path in our network.

We concatenated the outputs of the fourth stage of ShuffleNet v2 from the three encoder paths. The resulting feature maps were used as the input for the temporal transformer.

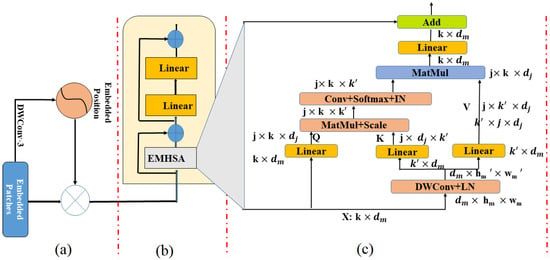

3.1.2. Temporal Transformer Module

Single-image-based forest-fire segmentation is challenging due to fast-moving UAVs, the irregular shapes of fires, and cluttered backgrounds. The video’s temporal information could be used to handle these issues. To benefit from the temporal correlation in the semantic segmentation of UAV videos, we developed a temporal transformer module (TTM), which was inspired by [27,28]. TTM assessed the relevance of all the locations in the three frames of a time sequence. As illustrated in Figure 4, TTM mainly comprised a patch-embedding and a temporal REST-block encoder. First, the extracted CNN feature maps of the frames were concatenated. TTM then used the concatenated feature map X as the input that was fed into the temporal-encoder layer.

Figure 4.

REST transformer block [27]: (a) patch-embedding, (b) stack of L encoder layers, and (c) efficient self-attention.

Patch-Embedding: ViT indicated that positional encoding was necessary to exploit the order of a sequence. The length of the positional encoding, however, had to be exactly identical to the length of the input sequence, which restricted its application potential. To solve this problem, the authors of [27] applied a depth-wise convolution to the input, passed the result through a sigmoid function $\sigma(\cdot)$, and multiplied it with the input. The positional encoding with a pixel-attention block (PAB) could be defined as:

$$\mathrm{PAB}(x) = x \odot \sigma\left(\mathrm{DWConv}(x)\right)$$

We incorporated the positional encoding into the patch-embedding block because the input sequence of each stage was acquired through a convolution function.
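A minimal sketch of the pixel-attention idea, gating each pixel by the sigmoid of a depth-wise convolution of the same feature map, is given below. The 3 × 3 kernel size, zero padding, nested-list layout `[C][H][W]`, and function names are assumptions chosen for the example rather than details taken from [27].

```python
import math


def depthwise_conv3x3(x, kernels):
    """Depth-wise 3 x 3 convolution with zero padding; one kernel per channel."""
    out = []
    for plane, k in zip(x, kernels):
        H, W = len(plane), len(plane[0])
        res = [[0.0] * W for _ in range(H)]
        for i in range(H):
            for j in range(W):
                for u in (-1, 0, 1):
                    for v in (-1, 0, 1):
                        if 0 <= i + u < H and 0 <= j + v < W:
                            res[i][j] += k[u + 1][v + 1] * plane[i + u][j + v]
        out.append(res)
    return out


def pixel_attention(x, kernels):
    """x * sigmoid(DWConv(x)): each pixel is rescaled by a gate in (0, 1)."""
    gate = depthwise_conv3x3(x, kernels)
    return [[[x[c][i][j] / (1.0 + math.exp(-gate[c][i][j]))
              for j in range(len(x[c][i]))]
             for i in range(len(x[c]))]
            for c in range(len(x))]


# One channel, 2 x 2 map of ones, all-zero kernel -> gate is sigmoid(0) = 0.5.
x = [[[1.0, 1.0], [1.0, 1.0]]]
zero_kernel = [[[0.0] * 3 for _ in range(3)]]
encoded = pixel_attention(x, zero_kernel)
```

Because the gate is computed by a convolution, it adapts to any input size, which is the property that removes the fixed-length restriction of a learned positional table.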

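REST-Block: This comprised two sub-layers: an efficient multi-headed self-attention (EMHSA) layer and a feed-forward (FF) layer. Similar to ViT, a skip connection was applied around both the EMHSA and FF layers. A normalization layer (NL) [49] was also used before the EMHSA and FF layers. For an input token sequence $x$ and block output $y$, the whole operation could be defined as:

$$x' = x + \mathrm{EMHSA}\left(\mathrm{NL}(x)\right), \qquad y = x' + \mathrm{FF}\left(\mathrm{NL}(x')\right)$$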

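EMHSA: This component of the REST block is depicted in Figure 4 and was used to compute the attention operation for the individual elements of the input $x$. For this purpose, it yielded three values, $Q$, $K$, and $V$, through the trainable weight matrices $W_Q$, $W_K$, and $W_V$, respectively. Similar to ViT, $Q$ was obtained directly from the input tokens. For the key and the value, however, the two-dimensional input sequence was first rearranged into a three-dimensional feature map along the spatial dimension (i.e., $\mathbb{R}^{n \times d} \rightarrow \mathbb{R}^{d \times h \times w}$, with $n = h \times w$). Next, we applied a depth-wise Conv operation to decrease the dimensions of the feature map by a factor of $r$. After the spatial squeezing, the reduced feature map was rearranged back into a two-dimensional input token, i.e., $\mathbb{R}^{d \times h' \times w'} \rightarrow \mathbb{R}^{n' \times d}$, where $h'$ and $w'$ are the reduced height and width and $n' = h' \times w'$. Then, the reduced tokens were linearly transformed into two sets, $K$ and $V$, to acquire the key and the value, respectively. Therefore, the whole operation of efficient self-attention was expressed by the following equation:

$$\mathrm{EMHSA}(Q, K, V) = \mathrm{Softmax}\left(\mathrm{Conv}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)\right)V$$

where $d_k$ is the dimension of each head and Conv(.), in this case, is a convolutional operation that simulated the interactions between various heads.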

The transformer block output was created by concatenating and linearly projecting the output values of each head.
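The key idea of the efficient self-attention, keeping all tokens as queries while computing keys and values from a spatially reduced token map, can be sketched as follows. This is a deliberately simplified, single-head illustration under several assumptions: the learnable projections and the head-interaction convolution are omitted, the strided depth-wise convolution is replaced by uniform `r × r` averaging, `h` and `w` are assumed divisible by `r`, and all function names are hypothetical.

```python
import math


def reduce_tokens(tokens, h, w, r):
    """Reduce an h x w token grid by a factor r along each spatial axis.

    Uniform r x r weights (average pooling) stand in for a learned
    depth-wise convolution with stride r.
    """
    d = len(tokens[0])
    reduced = []
    for i in range(0, h, r):
        for j in range(0, w, r):
            window = [tokens[(i + u) * w + (j + v)]
                      for u in range(r) for v in range(r)]
            reduced.append([sum(t[c] for t in window) / len(window)
                            for c in range(d)])
    return reduced


def efficient_attention(tokens, h, w, r):
    """Single-head attention: queries from all tokens, keys/values reduced."""
    d = len(tokens[0])
    kv = reduce_tokens(tokens, h, w, r)  # (h / r) * (w / r) key-value tokens
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in kv]
        m = max(scores)  # subtract the maximum for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(wt * v[c] for wt, v in zip(weights, kv)) / total
                    for c in range(d)])
    return out


# A 4 x 4 grid of two-dimensional tokens, reduced by r = 2.
tokens = [[float(i), 1.0] for i in range(16)]
kv_tokens = reduce_tokens(tokens, 4, 4, 2)
attended = efficient_attention(tokens, 4, 4, 2)
```

With `r = 2`, each of the 16 queries attends to only 4 key-value tokens instead of 16, which is where the reduction in attention cost comes from.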

3.2. Decoder Path

Similar to the UNet in [43], the decoder path contained four distinct modules. Each module consisted of an up-sampling operation (up(x), a 2 × 2 transposed convolution) and convolution operations, followed by a ReLU activation function. We utilized skip-connections to fuse the multi-scaled information from the encoder with the up-sampled features in each module; that is, we concatenated (cat operation) the shallow and deep features to reduce the loss of spatial information caused by down-sampling. Next, we applied convolution and ReLU activation operations to halve the channel size. Writing $E_i$ for the encoder features at level $i$ and $D_{i+1}$ for the output of the next-deeper decoder module, this was defined as the following:

$$D_i = \mathrm{ReLU}\left(\mathrm{Conv}\left(\mathrm{cat}\left(\mathrm{up}(D_{i+1}),\, E_i\right)\right)\right)$$

Finally, the UNet design used a convolution operation to convert the number of output channels from the last layer in the decoder path into a single channel for the fire segmentation.

3.3. Loss Function

Due to the significant variations in the sizes and shapes of fires, their segmentation under uncertain conditions was challenging. Therefore, FFS-UNet did not rely on binary cross-entropy (BCE) loss alone; instead, it employed a combination of BCE and dice-loss to improve the effectiveness of the multi-scaled fire segmentation. The combined loss was expressed as the following:

$$L(P, G) = \alpha\, L_{\mathrm{BCE}}(P, G) + \beta\, L_{\mathrm{Dice}}(P, G)$$

where $P$ and $G$ are the prediction and ground-truth, respectively, and $\alpha$ and $\beta$ are the scalar weights of the BCE and dice-loss, respectively. These values determined the relative contributions of each loss function to the overall loss. The values of $\alpha$ and $\beta$ were chosen based on an empirical evaluation.
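A weighted combination of BCE and dice-loss over flattened pixel lists might look like the sketch below. The weights `w_bce` and `w_dice` default to 0.5 purely as placeholders (the values used in the paper are not stated here), and the smoothing constant and the clamping epsilon are likewise assumptions.

```python
import math


def bce_loss(pred, target, eps=1e-7):
    """Mean binary cross-entropy over pixels; pred holds probabilities."""
    total = 0.0
    for p, g in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= g * math.log(p) + (1.0 - g) * math.log(1.0 - p)
    return total / len(pred)


def dice_loss(pred, target, smooth=1.0):
    """1 - Dice coefficient, with a smoothing term for empty masks."""
    inter = sum(p * g for p, g in zip(pred, target))
    return 1.0 - (2.0 * inter + smooth) / (sum(pred) + sum(target) + smooth)


def combined_loss(pred, target, w_bce=0.5, w_dice=0.5):
    """Weighted sum of BCE and dice-loss (placeholder weights)."""
    return w_bce * bce_loss(pred, target) + w_dice * dice_loss(pred, target)


# Flattened toy masks: 1 = fire pixel, 0 = background.
truth = [1.0, 0.0, 1.0, 0.0]
good = combined_loss([1.0, 0.0, 1.0, 0.0], truth)
bad = combined_loss([0.1, 0.9, 0.2, 0.8], truth)
```

The dice term is computed from region overlap rather than per-pixel averages, so it stays informative when fire pixels are a small fraction of the frame, while the BCE term keeps per-pixel gradients well behaved.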

4. Experiments

First, this section describes the dataset, the implementation details, and the evaluation criteria. Second, the performance of our approach is demonstrated through a series of ablation experiments. Third, we compare it with other fire recognition methods based on single- and multi-frame approaches. Lastly, we evaluate the robustness of our suggested approach.

4.1. Dataset

Deep-learning methods learn their weights from examples through iterative processes. Therefore, building extensive data collections with rich backgrounds has become a prerequisite.

Collected UAV fire video dataset: No publicly available fire segmentation dataset of UAV video was large enough. Therefore, we collected our data from various sources, including the ERA dataset [50] and the FLAME dataset [51]. From the ERA dataset, we selected 46 UAV videos (each with 150 frames and a spatial size of 640 × 640 pixels), of which 20 had fire scenes and the remaining did not. Furthermore, we manually constructed ground-truth segmentation masks for each frame associated with fire. From the FLAME dataset, we selected 2 UAV videos (with a resolution of 3840 × 2160); one showed pile-burn fires and contained 2003 annotated frames. Figure 5 shows examples of the fire and non-fire frames.

For FFS-UNet, the middle frame of each image series of temporal length n was assumed to be the keyframe; for example, if n = 5, the keyframe was the third frame of the series. Two other frames from the series were supplied as reference frames, together with the keyframe, as the input to FFS-UNet for fire segmentation (Figure 6). We used one ground-truth mask for three inputs to maximize scenery variety. The number of samples is vital for training deep networks with reasonable accuracy. To satisfy the requirements of our investigation, we applied data augmentation by flipping the data to increase the sample size. The dataset was divided as follows: 80% to train and 20% to test our network.

Figure 5.

Portions of the video clips from our UAV fire dataset: The first two rows contain fire samples, and the bottom two rows have non-fire samples.

Figure 6.

Example of training sample.

4.2. Implementation Details

The FFS-UNet experiments were implemented using the PyTorch library and the Python programming language in an Ubuntu environment. To increase the data diversity, data augmentation by flipping was used for training. The input images were first resized to a fixed resolution. A machine equipped with an NVIDIA GTX 1080Ti GPU and 64 GB of RAM was used to train the FFS-UNet model. The CNN feature extractor was initialized with weights pre-trained on ImageNet [52]. For the training, our model was optimized using the Adam optimizer with a batch size of 8. The model was trained until convergence.

4.3. Evaluation Metrics

In order to evaluate the quantitative performance of the suggested FFS-UNet model, we considered the intersection-over-union (IoU), precision, recall, F1-score, and accuracy metrics.

IoU was described as:

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN}$$

Precision was described as:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

Recall was described as:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

F1-score was described as:

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

Accuracy was described as:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where $TP$ represents true-positives, the number of fire pixels that the model correctly identified as fire; $TN$ represents true-negatives, the number of normal pixels that the model correctly identified as normal; $FN$ represents false-negatives, the number of fire pixels that the model incorrectly identified as normal; and $FP$ denotes false-positives, the number of normal pixels that the model incorrectly identified as fire.
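For concreteness, the five metrics can be computed from a predicted and a ground-truth binary mask as in the sketch below. The function names and the flattened 0/1 list representation are assumptions made for the example, and returning 0.0 when a denominator is zero is one possible convention rather than something specified in the text.

```python
def confusion_counts(pred, truth):
    """Count TP, FP, FN, TN over flattened binary masks (1 = fire)."""
    tp = fp = fn = tn = 0
    for p, g in zip(pred, truth):
        if p and g:
            tp += 1
        elif p and not g:
            fp += 1
        elif not p and g:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def safe_div(num, den):
    """Return 0.0 instead of failing when the denominator is zero."""
    return num / den if den else 0.0


def segmentation_metrics(pred, truth):
    """IoU, precision, recall, F1-score, and accuracy for one mask pair."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "iou": safe_div(tp, tp + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
    }


# Toy masks: 2 true-positives, 1 false-positive, 1 false-negative, 2 true-negatives.
pred = [1, 1, 0, 0, 1, 0]
truth = [1, 0, 0, 0, 1, 1]
metrics = segmentation_metrics(pred, truth)
```

Note that IoU and F1 ignore true-negatives, which makes them more informative than accuracy when background pixels dominate the frame.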

4.4. Ablation Study

In the following section, we highlight the contributions and demonstrate the usefulness of the components of the FFS-UNet network. We measured the performance using different metrics on the collected dataset. We performed several ablation experiments to explore the improvements from the multi-branch encoder and the temporal transformer module.

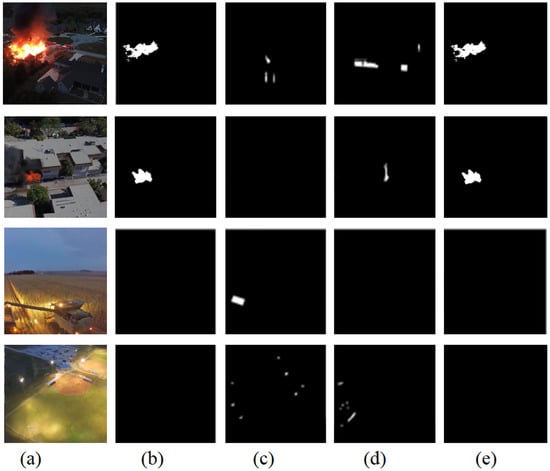

4.4.1. Impact of Encoders with TTM

The suggested encoder, which comprised three branches, extracted features from three frames of a video simultaneously. To assess its performance, we compared the suggested architecture with a single-stream encoder, Shuffle-UNet (baseline), and with a two-branch encoder. We show the quantitative results in Table 2 and a visual comparison of the fire segmentation results in Figure 7. For the segmentation of the fire areas, we trained Shuffle-UNet on the collected dataset; it proved to be incapable of extracting temporal features and yielded labels that were inconsistent with the ground-truth mask, as shown in Figure 7c. It reached an IoU of 0.725, a precision of 0.701, a recall of 0.837, an F1-score of 0.762, and an accuracy of 78.3%.

In contrast, the suggested network with a three-branch encoder yielded more accurate results by capturing the temporal features from the keyframe and the two reference frames and correctly categorizing the fire pixels. Consequently, the segmentation outcomes became smoother, as shown in Figure 7e, and the results improved on all metrics (Table 2). FFS-UNet showed an improvement on the IoU by 10.8%, on the precision by 37.3%, on the recall by 12.3%, on the F1-score by 3.1%, and on the accuracy by 24.1% over the single-stream baseline. The two-branch encoder erroneously segmented a few pixels belonging to the fire region, as shown in Figure 7d, and its F1-score and accuracy were lower than those of the three-branch encoder (Table 2).

Table 2.

Experimental outcome on the effect of the parallel encoder with TTM.

Figure 7.

Qualitative comparison of the fire segmentation. From left: (a) images from the top two rows include fire and the bottom two rows are normal, (b) ground-truth, (c) single-stream encoder, (d) dual-stream encoder, (e) triple-stream encoder.

4.4.2. Impact of Positional Encoding

As shown in Table 3, when we did not apply PAB, FFS-UNet had scores of 0.8 for IoU, 0.935 for precision, 0.915 for recall, and 0.924 for the F1-score (the accuracy is listed in Table 3). After applying PAB, FFS-UNet improved on IoU, precision, recall, F1-score, and accuracy. This demonstrated the need for positional encoding in FFS-UNet.

Table 3.

Effect of positional encoding.

4.4.3. Impact of Up-Sampling

Corresponding to the encoder, we designed the decoder to perform up-sampling and increase the feature dimension. To explore the effectiveness of the proposed method, we ran experiments on FFS-UNet with bilinear interpolation and with transposed convolution. The experimental results in Table 4 indicated that the proposed FFS-UNet with transposed convolution obtained better segmentation.
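The difference between the two up-sampling options can be illustrated in one dimension. The sketch below is a simplified 1D analogue, not the decoder used in FFS-UNet: interpolation applies a fixed rule, whereas a transposed convolution scatters each input sample through a kernel whose weights are learned during training. The kernel `[0.5, 1.0, 0.5]` is hand-picked here only to show that interpolation is one special case of the learnable operation.

```python
from typing import List, Sequence


def linear_upsample_x2(x: Sequence[float]) -> List[float]:
    """Fixed rule: insert the midpoint between each pair of neighbours."""
    out: List[float] = []
    for i, value in enumerate(x):
        out.append(float(value))
        if i + 1 < len(x):
            out.append((value + x[i + 1]) / 2.0)
    return out


def transposed_conv1d(x: Sequence[float], kernel: Sequence[float], stride: int = 2) -> List[float]:
    """Scatter each input sample, scaled by the kernel, into a longer output."""
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, value in enumerate(x):
        for j, weight in enumerate(kernel):
            out[i * stride + j] += value * weight
    return out


signal = [0.0, 2.0, 4.0]
interp = linear_upsample_x2(signal)
# With this hand-picked kernel the transposed convolution reproduces the
# interpolation once the two border samples are cropped; a trained kernel
# is free to deviate from it.
deconv = transposed_conv1d(signal, kernel=[0.5, 1.0, 0.5], stride=2)[1:-1]
```

Because the kernel weights are free parameters, a transposed convolution can learn an up-sampling filter tailored to the data, which is one plausible reading of the advantage reported in Table 4.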

Table 4.

Effect of up-sampling technique.

4.4.4. Impact of Loss Functions

Table 5 reports further experiments on the impact of the combined loss function on the suggested technique. First, with the BCE loss alone, the model reached 0.757 on IoU, 0.795 on precision, 0.824 on recall, and 0.809 on the F1-score (the accuracy is listed in Table 5). The BCE loss generated false alarms and received worse index values than the suggested combination. Replacing the BCE loss with the second loss term led to an obvious performance gain (+5.5% in IoU), because that loss improved the predicted masks by lowering the pixel-wise false-positives. Combining the two losses further improved the performance on all measures: the weighted sum outperformed each independent loss function. The integrated loss function was 6%–8% better in terms of accuracy, and it increased the model’s IoU by 10%–11% compared with the BCE loss alone. Overall, the integrated loss function increased the model’s generalizability.
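A weighted sum of a pixel-wise loss and an overlap-based loss can be sketched as follows. This is only an illustration under stated assumptions: the second term is taken to be a Dice-style loss, and the weight of 0.5 is arbitrary; neither is confirmed by the text here, and the function names are hypothetical.

```python
import math
from typing import Sequence


def bce_loss(probs: Sequence[float], targets: Sequence[int], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy over pixels; probs are predicted fire probabilities."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(probs)


def dice_loss(probs: Sequence[float], targets: Sequence[int], smooth: float = 1.0) -> float:
    """Assumed second term: 1 minus the (smoothed) Dice overlap of prediction and mask."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denom = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + smooth) / (denom + smooth)


def combined_loss(probs: Sequence[float], targets: Sequence[int], weight: float = 0.5) -> float:
    """Weighted sum of the two losses; the weight is an illustrative value."""
    return weight * bce_loss(probs, targets) + (1.0 - weight) * dice_loss(probs, targets)


mask = [1, 0, 1, 0]
loss_good = combined_loss([1.0, 0.0, 1.0, 0.0], mask)  # perfect prediction
loss_bad = combined_loss([0.0, 1.0, 0.0, 1.0], mask)   # fully inverted prediction
```

The pixel-wise term penalizes every misclassified pixel equally, while the overlap term is driven by the agreement on the fire region as a whole, which is why such combinations are often used when fire pixels are a small fraction of the frame.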

Table 5.

Effect of different loss functions.

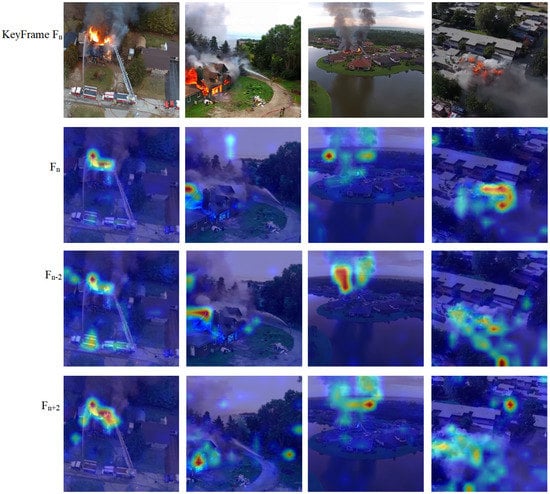

4.4.5. Visual Analysis

To further explore the significance of our technique, we utilized the method in [53] to visualize the attention maps rendered by our FFS-UNet on the collected dataset. Figure 8 depicts the attention map where each column was sampled from the same video, allowing the network to obtain helpful information that agreed with the fire ground-truth. FFS-UNet followed and segmented fire well under challenging conditions, such as (a) occlusion, (b) variations in locations, and (c) differentiating objects that appeared similar to fire.

Figure 8.

Attention map visualization of our model for fire pixels in the keyframe, where we discovered the correlation with the other two reference frames in the temporal window (n). Columns 1–4 are shown as examples.

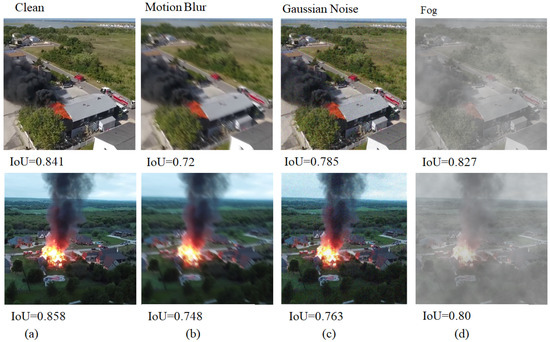

4.4.6. Adaptability to Natural Corruption

To assess the robustness of our approach in the presence of background noise, we expanded our test set to include algorithmically generated motion blur, Gaussian noise, and fog corruptions in each frame of the UAV fire datasets to simulate natural degradation. Samples are shown in Figure 9. We compared the IoU values in both clean and corrupted environments. Our approach demonstrated strong adaptability: the IoU decreased by 2%–4% under fog, 6%–8% under Gaussian noise, and 10%–14% under motion blur. These results demonstrated the robustness of FFS-UNet, which indicated it may be beneficial for safety-critical applications.

Figure 9.

Samples of robustness evaluation, which consisted of (a) normal, (b) motion blur, (c) Gaussian noise, and (d) fog.
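The three corruption types can be generated with simple per-frame operations. The sketch below is a minimal pure-Python illustration on a grayscale frame stored as nested lists; the parameter names (`sigma`, `length`, `density`) and the specific models (additive noise, horizontal box blur, blending toward white) are assumptions, since the exact corruption settings are not given in the text.

```python
import random
from typing import List

Image = List[List[float]]  # grayscale frame, values in [0, 255]


def _clip(value: float) -> float:
    return max(0.0, min(255.0, value))


def add_gaussian_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Add zero-mean Gaussian noise to every pixel and clip to the valid range."""
    return [[_clip(v + rng.gauss(0.0, sigma)) for v in row] for row in img]


def add_motion_blur(img: Image, length: int) -> Image:
    """Horizontal box blur: average each pixel with its neighbours in a window."""
    half = length // 2
    blurred: Image = []
    for row in img:
        new_row = []
        for x in range(len(row)):
            window = row[max(0, x - half): x + half + 1]
            new_row.append(sum(window) / len(window))
        blurred.append(new_row)
    return blurred


def add_fog(img: Image, density: float) -> Image:
    """Blend toward white: density 0 leaves the frame unchanged, 1 is fully white."""
    return [[(1.0 - density) * v + density * 255.0 for v in row] for row in img]


frame = [[0.0, 0.0, 255.0, 0.0, 0.0]]
noisy = add_gaussian_noise(frame, sigma=10.0, rng=random.Random(0))
blurred = add_motion_blur(frame, length=3)
foggy = add_fog(frame, density=0.5)
```

Evaluating the same trained model on the clean and corrupted copies of each test frame then gives the IoU gap per corruption type.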

4.5. Comparative Method

To verify the effectiveness of the proposed FFS-UNet model, we compared it with the following deep-learning methods:

(a) In [22], Shamsoshoara et al. used a traditional UNet [43] semantic segmentation network; UNet used a skip connection between the encoder and the decoder to minimize performance degradation. Moreover, UNet was used as a benchmark for the segmentation of medical images.

(b) In [23], Harkat et al. exploited Deeplabv3+ResNet-50 to achieve fire segmentation with UAVs by combining spatial pyramid pooling and an encoder–decoder. Their Deeplabv3 extracted multi-scale information.

(c) In [54], Ghali et al. customized TransUNet [46] to take advantage of the benefits of both UNet and transformers. In the encoder, tokenized patches from the CNN feature map fed into the transformer to generate long-range contextual information. On the other side, to facilitate accurate localization, the decoder up-sampled the encoded features. Then, it combined them with the high-resolution CNN feature maps. We used this model as a benchmark method.

(d) In [45], non-overlapping image tokens were fed into the Swin-UNet transformer, with shifted windows as the encoder–decoder via a skip link, for local–global feature representation. The encoder performed a down-sampling operation to capture contextual information. The decoder conducted the up-sampling process to recover the spatial resolution in order to achieve segmentation.

(e) In [44], SegFormer was developed without positional encoding and using a hierarchical transformer as the encoder and all-MLP as the decoder to generate semantic predictions.

(f) In [55], Shahid et al. proposed a two-stage fire detection, STNet+DenseFire. In the first stage, they identified the proposed fire regions based on a given keyframe in a video sequence. In the second stage, they classified the proposed regions.

4.5.1. Comparison on Our Collected Dataset

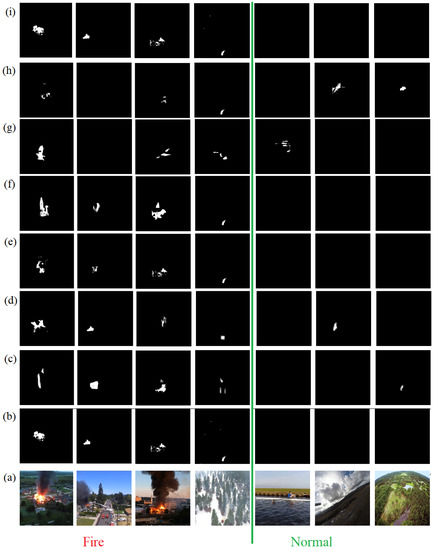

For the dataset, a visual comparison of our method against alternative techniques is presented in Figure 10. When there were some minute areas (rows three and four) whose color or form was similar to the fire regions, the UNet segmentation model segmented these small regions as fire while also overlooking smaller fires (row one) due to poor contrast. Deeplabv3+ResNet50 performed better than UNet owing to its atrous convolution, which was helpful for analyzing global scenes. In both models, false-negatives could occur due to shadows, as marked by the yellow circle in the figure. SegFormer and Swin-UNet were effective at separating the fire from the diverse environment, but their outcomes frequently exceeded boundary lines. TransUNet performed well at inference; however, it still showed some limitations. Our segmentation model, with its multi-branch approach via temporal context aggregation, fused the dynamic features of the target fire regions to eliminate these issues. Our method’s segmentation results were substantially closer to the ground-truth mask: they precisely located the fire area while effectively suppressing background interference. Even when the fire regions were covered by shadows, all three encoders could detect the fire regions accurately.

Figure 10.

Qualitative comparison of the fire segmentation. From left: (a) first four are fire examples and the rest are normal, (b) corresponding ground-truth mask, and predicted masks by (c) TransUNet, (d) Swin-UNet, (e) STNet+DenseFire, (f) SegFormer, (g) Deeplabv3+ResNet50, (h) UNet, and (i) FFS-UNet.

Beyond being more visually compelling, our strategy surpassed all rival approaches in terms of IoU, recall, precision, F1-score, and accuracy. Table 6 shows the comparison results with other methods on the fire dataset. Based on these results, our FFS-UNet approach had the best performance, including an F1-score of 95.1% and an IoU of 86.8%; the remaining values for every method are listed in Table 6. UNet had a lower F1-score than our method, which had been anticipated because it relies on convolutional layers alone. Deeplabv3+ResNet50 added an atrous convolution that brought some improvement. TransUNet, Swin-UNet, and SegFormer improved further owing to their transformer modules. SegFormer was the best method in terms of recall. Swin-UNet achieved a precision of 0.944. Swin-UNet and TransUNet had similar accuracy values, both lower than that of SegFormer, and all three transformer-based models had lower F1-scores than our method. Owing to their temporal features, STNet+DenseFire and our FFS-UNet showed significant improvements in IoU values, although the accuracy, recall, precision, and F1-score of STNet+DenseFire were still below FFS-UNet’s results. Therefore, our method outperformed the others.

Table 6.

Fire detection results, as compared to different methods, on UAV fire dataset.

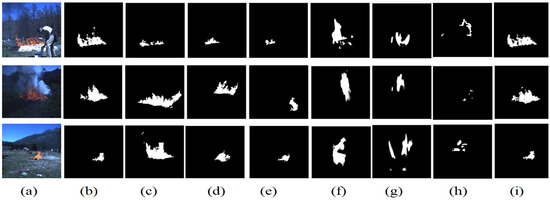

4.5.2. Comparison on Cross-Dataset

To verify our suggested technique, we also evaluated all methods on the Corsican Fire dataset [56]. The collection comprised video images of forest fires taken in various locations and under varied conditions. We did not use images from the Corsican Fire dataset to train our proposed method or the baseline methods. As shown in Figure 11, the transformer-based techniques SegFormer and Swin-UNet had clear advantages over UNet in recognizing fire, but they still over-segmented fire areas and did not produce precise edges. As shown in the first row, a few false-positives occurred on pixels covering the smoke. UNet produced many false-negatives for the fire pixels owing to its weaker features. TransUNet performed effectively in the majority of situations at the expense of extra computation, as it relied on the intricate architecture of its encoder module. STNet+DenseFire did not deliver competitive outcomes, showing there was still room for improvement in this model. FFS-UNet, however, was more sensitive to fire pixels in these cases, owing to its multi-branch temporal transformer module.

Figure 11.

Qualitative comparison of fire segmentation results on the Corsican Fire dataset [56]. From left: (a) fire image, (b) corresponding ground-truth mask, and predicted masks by (c) Swin-UNet, (d) TransUNet, (e) SegFormer, (f) STNet+DenseFire, (g) Deeplabv3+ResNet50, (h) UNet, and (i) FFS-UNet.

Table 7 shows the comparison results of all the methods on the Corsican Fire dataset. UNet obtained an accuracy of 70.16%, and Deeplabv3+ResNet50 attained an accuracy of 85.11%; the IoU, precision, recall, and F1-score of both models, and all five metrics for TransUNet, Swin-UNet, and SegFormer, are listed in Table 7. Our FFS-UNet approach reached an F1-score of 91.4% and an IoU of 84.8%. Compared with the next-best results, FFS-UNet had a gain of 2.9% on IoU, 2.3% on precision, 2.1% on recall, 3.1% on F1-score, and 1.3% on accuracy. Table 7 thus shows that our FFS-UNet achieved the best results on the five evaluation metrics.

Table 7.

Fire detection results, as compared to different methods, on the Corsican Fire dataset [56].

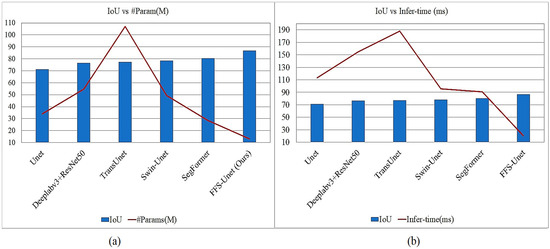

4.5.3. Analysis of Running Time

In Figure 12a,b, we compared the IoU metric vs. the number of parameters (#Param) and the IoU vs. the inference time of the various techniques. The #Param of FFS-UNet was 14.17 M, which was lower than the others, while its segmentation IoU and inference speed consistently outperformed them. In practical applications, real-time fire segmentation refers to processing each test image in roughly 33 milliseconds (ms) or less, which is equivalent to a frame rate of at least 30 FPS. The inference time of our network was significantly lower than that of the other techniques, at only 14.12 ms (Figure 12b), and the inference rate was as high as 66.13 FPS. Overall, our FFS-UNet achieved outstanding detection performance with fewer parameters, maintaining a better trade-off between speed and IoU.

Figure 12.

(a) Represents the relationship between the intersection-over-union (IoU) metric and the number of parameters (#Param (million (M))) of different models, and (b) represents the relationship between IoU and the inference time (i.e., the time duration of the model’s predictions) in milliseconds. The performance of the models varied as a function of these key variables.
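The relationship between per-frame latency and frame rate used in the real-time criterion is a simple reciprocal. The helper names below are hypothetical, and the 30 FPS threshold is the real-time target stated in the text; this is a sketch of the arithmetic, not a benchmarking procedure.

```python
def fps_from_latency_ms(latency_ms: float) -> float:
    """Frames per second achievable when each frame takes latency_ms milliseconds."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


def latency_ms_from_fps(fps: float) -> float:
    """Per-frame time budget, in milliseconds, needed to sustain a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def is_real_time(latency_ms: float, target_fps: float = 30.0) -> bool:
    """True when the per-frame latency is low enough to sustain target_fps."""
    return fps_from_latency_ms(latency_ms) >= target_fps


budget_ms = latency_ms_from_fps(30.0)  # time budget per frame at 30 FPS
meets_target = is_real_time(14.12)     # latency reported for FFS-UNet
```

A model therefore qualifies as real-time at 30 FPS only if it spends no more than about 33.3 ms per frame.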

5. Discussion and Future Work

Vision-based surveillance systems are gaining popularity through the application of remote sensors. These systems have been developed by integrating computer-vision techniques with advanced computation and storage capacities to achieve precise object recognition and localization. The distinguishing characteristic of FFS-UNet was its architecture, which enabled the segmentation of video frames by leveraging temporal information from both preceding and succeeding frames to accurately identify and isolate fire regions in the current frame. Additionally, we utilized long-skip connections to connect the encoder and decoder layers. We concatenated the output of the lower-level layers with the higher-level layers to merge low-level features with the high-level semantic information obtained by the deeper layers. This technique facilitated the process of obtaining clearly defined fire regions. Once the model was trained, it could extract relevant information from the preceding and succeeding frames. We noticed that SegFormer and Swin-UNet, which were transformer-based models, showed advantages over the UNet model for detecting fires, but they tended to overestimate the fire regions and lacked precise edge detection. While the TransUNet model performed well in most cases, its encoder module’s complex architecture led to increased computation times. Our method’s segmentation results were significantly closer to the ground-truth mask. The approach used the concept of a temporal transformer in constructing the features.
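The selection of a keyframe together with one preceding and one succeeding reference frame can be sketched as index arithmetic over a video clip. This is an illustration under assumptions: the default interval `n = 2` follows the two-frame interval mentioned in the conclusions, while clamping at the clip boundaries and the function name `frame_triplets` are choices made here for the example only.

```python
from typing import List, Tuple


def frame_triplets(num_frames: int, n: int = 2) -> List[Tuple[int, int, int]]:
    """Return (preceding, keyframe, succeeding) indices for every frame of a clip.

    Reference frames lie n frames before and after the keyframe; indices that
    would fall outside the clip are clamped to the first or last frame
    (an assumption, not a documented behaviour of FFS-UNet).
    """
    if num_frames <= 0 or n <= 0:
        raise ValueError("num_frames and n must be positive")
    last = num_frames - 1
    return [(max(0, t - n), t, min(last, t + n)) for t in range(num_frames)]


triplets = frame_triplets(num_frames=5, n=2)
```

Each triplet would feed the three encoder branches in parallel, with the middle index identifying the frame whose mask is predicted.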

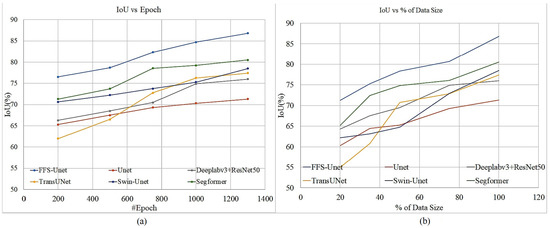

As shown in Figure 13a,b, we formed two training settings to better comprehend the effects of the temporal features and to compare our FFS-UNet with transformer- and CNN-based forest-fire-segmentation methods: (a) training with the augmented dataset for 200, 500, 750, 1000, and 1300 epochs, and (b) training with 35%, 50%, 70%, and 100% of the dataset for 1300 epochs. As shown in Figure 13a, Deeplabv3+ResNet50 and UNet achieved an IoU of 0.713 and 0.76, respectively, after 1300 epochs, while our FFS-UNet, after being trained for only 500 epochs, achieved a 78.7% IoU; it also outperformed Swin-UNet, which had been trained for all available epochs. Similarly, in Figure 13b, when the amount of training data was reduced, TransUNet showed the worst performance. Our approach, with 80% of the training examples, offered competitive performance compared with Swin-UNet and SegFormer, both of which had been trained on the entire (100%) training data. Therefore, explicitly learning fire features from a temporal transformer is more promising than implicitly learning from a single image.

Figure 13.

Comparison of (a) the training effect and (b) the data-size effect for different models.

To verify our claim, we utilized the method in [53] to visualize the attention maps rendered by our FFS-UNet on the collected dataset. The visualization showed that the model’s response differed on each frame, indicating that the network had learned to combine optimal temporal features for spatial segmentation. We also evaluated our model on the Corsican Fire dataset, which the model had never before encountered, and the visual outcomes indicated that FFS-UNet successfully segmented the fire-related areas in the frames. FFS-UNet could also be trained for additional classes (e.g., for rescue operations). During the evaluation of the network, it was challenging to identify suitable datasets, since most available segmentation datasets contained only single frames; nevertheless, the datasets typically utilized for locating fire were well suited to assessing our model. In the future, our objective is to enhance our model’s training by incorporating more than one class and expanding the temporal window, which involves analyzing a longer sequence of frames to extract even more comprehensive temporal features.

6. Conclusions

This study proposed a forest-fire segmentation model that utilized a new encoder, which comprised three feature-extracting streams operating at an interval of two frames of UAV video. The model learned temporal information via a transformer from the features retrieved from the three encoding streams. FFS-UNet achieved better results than other techniques without temporal context aggregation on forest-fire segmentation in the UAV video, specifically when multiple other minute areas with colors and shapes similar to fire were present. We supplied further analysis to better comprehend the temporal transformer module. The suggested model was lightweight and had a processing speed of 66.13 FPS with an IoU of 86.8%. We also evaluated its adaptability against natural corruption; our proposed FFS-UNet model demonstrated strong robustness. Our investigation on a collected dataset and on Corsican Fire (cross-dataset) showed that the suggested approach could be implemented in real-time with reliable accuracy in UAV-monitoring contexts.

Author Contributions

Conceptualization, M.S.; Formal analysis, M.S. and Y.-L.H.; Funding acquisition, K.-L.H.; Methodology, M.S., Y.-Y.C., Y.-L.C. and K.-L.H.; Project administration, K.-L.H.; Software, M.S. and S.-F.C.; Supervision, K.-L.H.; Validation, S.-F.C.; Writing—original draft, M.S.; Writing—review and editing, Y.-L.H., Y.-Y.C. and Y.-L.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Science and Technology Council of Taiwan under Grant NSTC111-2221-E-011-128-MY3, Grant NSTC112-2622-8-011-015-TD1, Grant NSTC111-2623-E-011-001, and in part by the Wang Jhan-Yang Charitable Trust Fund, Taiwan under Contract WJY 2022-HR-02.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Cisneros, R.; Schweizer, D.; Navarro, K.; Veloz, D.; Procter, C.T. Climate change, forest fires, and health in California. In Climate Change and Air Pollution; Springer: Berlin/Heidelberg, Germany, 2018; pp. 99–130.

2. Boer, M.M.; Resco de Dios, V.; Bradstock, R.A. Unprecedented burn area of Australian mega forest fires. Nat. Clim. Chang. 2020, 10, 171–172.

3. Sadowska, B.; Grzegorz, Z.; Stępnicka, N. Forest Fires and Losses Caused by Fires–An Economic Approach. WSEAS Trans. Environ. Dev. 2021, 17, 181–191.

4. Davide, A.; Jose, V.M.; Marco, M.; Lorenzo, S. Land use change towards forests and wooded land correlates with large and frequent wildfires in Italy. Ann. Silvic. Res. 2021, 46, 177–188.

5. Peñuelas, J.; Sardans, J. Global change and forest disturbances in the Mediterranean basin: Breakthroughs, knowledge gaps, and recommendations. Forests 2021, 12, 603.

6. Alkhatib, A.A. A review on forest fire detection techniques. Int. J. Distrib. Sens. Netw. 2014, 10, 597368.

7. Szpakowski, D.M.; Jensen, J.L. A review of the applications of remote sensing in fire ecology. Remote Sens. 2019, 11, 2638.

8. Li, X.; Savkin, A.V. Networked unmanned aerial vehicles for surveillance and monitoring: A survey. Future Internet 2021, 13, 174.

9. Sharma, V.; Srinivasan, K.; Chao, H.C.; Hua, K.L.; Cheng, W.H. Intelligent deployment of UAVs in 5G heterogeneous communication environment for improved coverage. J. Netw. Comput. Appl. 2017, 85, 94–105.

10. De Sousa, J.V.R.; Gamboa, P.V. Aerial forest fire detection and monitoring using a small UAV. KnE Eng. 2020, 5, 242–256.

11. Sudhakar, S.; Vijayakumar, V.; Kumar, C.S.; Priya, V.; Ravi, L.; Subramaniyaswamy, V. Unmanned Aerial Vehicle (UAV) based Forest Fire Detection and monitoring for reducing false alarms in forest-fires. Comput. Commun. 2020, 149, 1–16.

12. Chen, Y.; Zhang, Y.; Xin, J.; Yi, Y.; Liu, D.; Liu, H. A UAV-based forest fire-detection algorithm using convolutional neural network. In Proceedings of the 2018 37th Chinese Control Conference (CCC), Wuhan, China, 25–27 July 2018; pp. 10305–10310.

13. Zhang, L.; Wang, M.; Fu, Y.; Ding, Y. A Forest Fire Recognition Method Using UAV Images Based on Transfer Learning. Forests 2022, 13, 975.

14. Marbach, G.; Loepfe, M.; Brupbacher, T. An image processing technique for fire detection in video images. Fire Saf. J. 2006, 41, 285–289.

15. Celik, T.; Demirel, H.; Ozkaramanli, H.; Uyguroglu, M. Fire detection using statistical color model in video sequences. J. Vis. Commun. Image Represent. 2007, 18, 176–185.

16. Tanveer, M.; Rashid, A.H.; Ganaie, M.; Reza, M.; Razzak, I.; Hua, K.L. Classification of Alzheimer’s disease using ensemble of deep neural networks trained through transfer learning. IEEE J. Biomed. Health Inform. 2021, 26, 1453–1463.

17. Sun, S.W.; Mou, T.C.; Fang, C.C.; Chang, P.C.; Hua, K.L.; Shih, H.C. Baseball player behavior classification system using long short-term memory with multimodal features. Sensors 2019, 19, 1425.

18. Chang, C.W.; Srinivasan, K.; Chen, Y.Y.; Cheng, W.H.; Hua, K.L. Vehicle detection in thermal images using deep neural network. In Proceedings of the 2018 IEEE Visual Communications and Image Processing (VCIP), Taichung, Taiwan, 9–12 December 2018; pp. 1–4.

19. Zhang, Q.; Xu, J.; Xu, L.; Guo, H. Deep convolutional neural networks for forest fire detection. In Proceedings of the 2016 International Forum on Management, Education and Information Technology Application, Guangzhou, China, 30–31 January 2016; Atlantis Press: Amsterdam, The Netherlands, 2016; pp. 568–575.

20. Lee, W.; Kim, S.; Lee, Y.T.; Lee, H.W.; Choi, M. Deep neural networks for wild fire detection with unmanned aerial vehicle. In Proceedings of the 2017 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 8–10 January 2017; pp. 252–253.

21. Novac, I.; Geipel, K.R.; de Domingo Gil, J.E.; de Paula, L.G.; Hyttel, K.; Chrysostomou, D. A Framework for Wildfire Inspection Using Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/SICE International Symposium on System Integration (SII), Honolulu, HI, USA, 12–15 January 2020; pp. 867–872.

22. Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fulé, P.Z.; Blasch, E. Aerial imagery pile burn detection using deep learning: The FLAME dataset. Comput. Netw. 2021, 193, 108001.

23. Harkat, H.; Nascimento, J.M.; Bernardino, A.; Thariq Ahmed, H.F. Assessing the Impact of the Loss Function and Encoder Architecture for Fire Aerial Images Segmentation Using Deeplabv3+. Remote Sens. 2022, 14, 2023.

24. Ghali, R.; Akhloufi, M.A.; Jmal, M.; Souidene Mseddi, W.; Attia, R. Wildfire Segmentation Using Deep Vision Transformers. Remote Sens. 2021, 13, 3527.

25. Nguyen, M.D.; Vu, H.N.; Pham, D.C.; Choi, B.; Ro, S. Multistage Real-Time Fire Detection Using Convolutional Neural Networks and Long Short-Term Memory Networks. IEEE Access 2021, 9, 146667–146679.

26. Cao, Y.; Yang, F.; Tang, Q.; Lu, X. An attention enhanced bidirectional LSTM for early forest fire smoke recognition. IEEE Access 2019, 7, 154732–154742.

27. Zhang, Q.; Yang, Y.B. ResT: An efficient transformer for visual recognition. Adv. Neural Inf. Process. Syst. 2021, 34, 15475–15485.

28. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

29. Chen, T.H.; Wu, P.H.; Chiou, Y.C. An early fire-detection method based on image processing. In Proceedings of the 2004 International Conference on Image Processing, Singapore, 24–27 October 2004; Volume 3, pp. 1707–1710.

30. Töreyin, B.U.; Dedeoğlu, Y.; Güdükbay, U.; Cetin, A.E. Computer vision based method for real-time fire and flame detection. Pattern Recognit. Lett. 2006, 27, 49–58.

31. Zhang, Z.; Zhao, J.; Zhang, D.; Qu, C.; Ke, Y.; Cai, B. Contour based forest fire detection using FFT and wavelet. In Proceedings of the 2008 International Conference on Computer Science and Software Engineering, Wuhan, China, 12–14 December 2008; Volume 1, pp. 760–763.

32. Chino, D.Y.; Avalhais, L.P.; Rodrigues, J.F.; Traina, A.J. Bowfire: Detection of fire in still images by integrating pixel color and texture analysis. In Proceedings of the 2015 28th SIBGRAPI Conference on Graphics, Patterns and Images, Bahia, Brazil, 26–29 August 2015; pp. 95–102.

33. Ko, B.C.; Cheong, K.H.; Nam, J.Y. Fire detection based on vision sensor and support vector machines. Fire Saf. J. 2009, 44, 322–329.

34. Chenebert, A.; Breckon, T.P.; Gaszczak, A. A non-temporal texture driven approach to real-time fire detection. In Proceedings of the 2011 18th IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 1741–1744.

35. Liang, J.X.; Zhao, J.F.; Sun, N.; Shi, B.J. Random Forest Feature Selection and Back Propagation Neural Network to Detect Fire Using Video. J. Sens. 2022, 2022, 5160050.

36. Dai Duong, H.; Tinh, D.T. A new approach to vision-based fire detection using statistical features and bayes classifier. In Proceedings of the Asia-Pacific Conference on Simulated Evolution and Learning, Hanoi, Vietnam, 16–19 December 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 331–340.

37. Gao, Y.; Xie, L.; Zhang, Z.; Fan, Q. Twin support vector machine based on improved artificial fish swarm algorithm with application to flame recognition. Appl. Intell. 2020, 50, 2312–2327.

38. Li, M.; Zhang, Y.; Mu, L.; Xin, J.; Xue, X.; Jiao, S.; Liu, H.; Xie, G.; Yi, Y. A Real-Time Forest Fire Recognition Method Based on R-ShuffleNetv2. In Proceedings of the 2022 5th International Symposium on Autonomous Systems (ISAS), Hangzhou, China, 8–10 April 2022; pp. 1–5.

39. Namburu, A.; Selvaraj, P.; Mohan, S.; Ragavanantham, S.; Eldin, E.T. Forest Fire Identification in UAV Imagery Using X-MobileNet. Electronics 2023, 12, 733.

40. Barmpoutis, P.; Stathaki, T.; Dimitropoulos, K.; Grammalidis, N. Early fire detection based on aerial 360-degree sensors, deep convolution neural networks and exploitation of fire dynamic textures. Remote Sens. 2020, 12, 3177.

41. Hossain, F.A.; Zhang, Y. Development of new efficient transposed convolution techniques for flame segmentation from UAV-captured images. In Proceedings of the 2021 3rd International Conference on Industrial Artificial Intelligence (IAI), Shenyang, China, 8–11 November 2021; pp. 1–6.

42. Muksimova, S.; Mardieva, S.; Cho, Y.I. Deep Encoder–Decoder Network-Based Wildfire Segmentation Using Drone Images in Real-Time. Remote Sens. 2022, 14, 6302.

43. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

44. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

45. Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-unet: UNet-like pure transformer for medical image segmentation. arXiv 2021, arXiv:2105.05537.

46. Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. Transunet: Transformers make strong encoders for medical image segmentation. arXiv 2021, arXiv:2102.04306.

47. Cai, H.; Gan, C.; Han, S. Efficientvit: Enhanced linear attention for high-resolution low-computation visual recognition. arXiv 2022, arXiv:2205.14756.

48. Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. Shufflenet v2: Practical guidelines for efficient cnn architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.

49. Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

50. Mou, L.; Hua, Y.; Jin, P.; Zhu, X.X. Era: A data set and deep learning benchmark for event recognition in aerial videos [software and data sets]. IEEE Geosci. Remote Sens. Mag. 2020, 8, 125–133.

51. Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fulé, P.; Blasch, E. The FLAME Dataset: Aerial Imagery Pile Burn Detection Using Drones (UAVs); IEEE DataPort: New York, NY, USA, 2020.

52. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

53. Chefer, H.; Gur, S.; Wolf, L. Transformer interpretability beyond attention visualization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 782–791.

54. Ghali, R.; Akhloufi, M.A.; Mseddi, W.S. Deep learning and transformer approaches for UAV-based wildfire detection and segmentation. Sensors 2022, 22, 1977.

55. Shahid, M.; Virtusio, J.J.; Wu, Y.H.; Chen, Y.Y.; Tanveer, M.; Muhammad, K.; Hua, K.L. Spatio-Temporal Self-Attention Network for Fire Detection and Segmentation in Video Surveillance. IEEE Access 2021, 10, 1259–1275.

56. European Commission, Joint Research Centre, ‘Forest Fire in Corsica, France (2017-08-14)’, 2017 (Updated 2017-08-14). Available online: http://data.europa.eu/89h/6d4c9d62-b313-424d-85fa-e52aeddbca20 (accessed on 7 December 2022).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).