Analysis of the Effects of Different Nitrogen Application Levels on the Growth of Castanopsis hystrix from the Perspective of Three-Dimensional Reconstruction

Abstract

1. Introduction

2. Materials and Methods

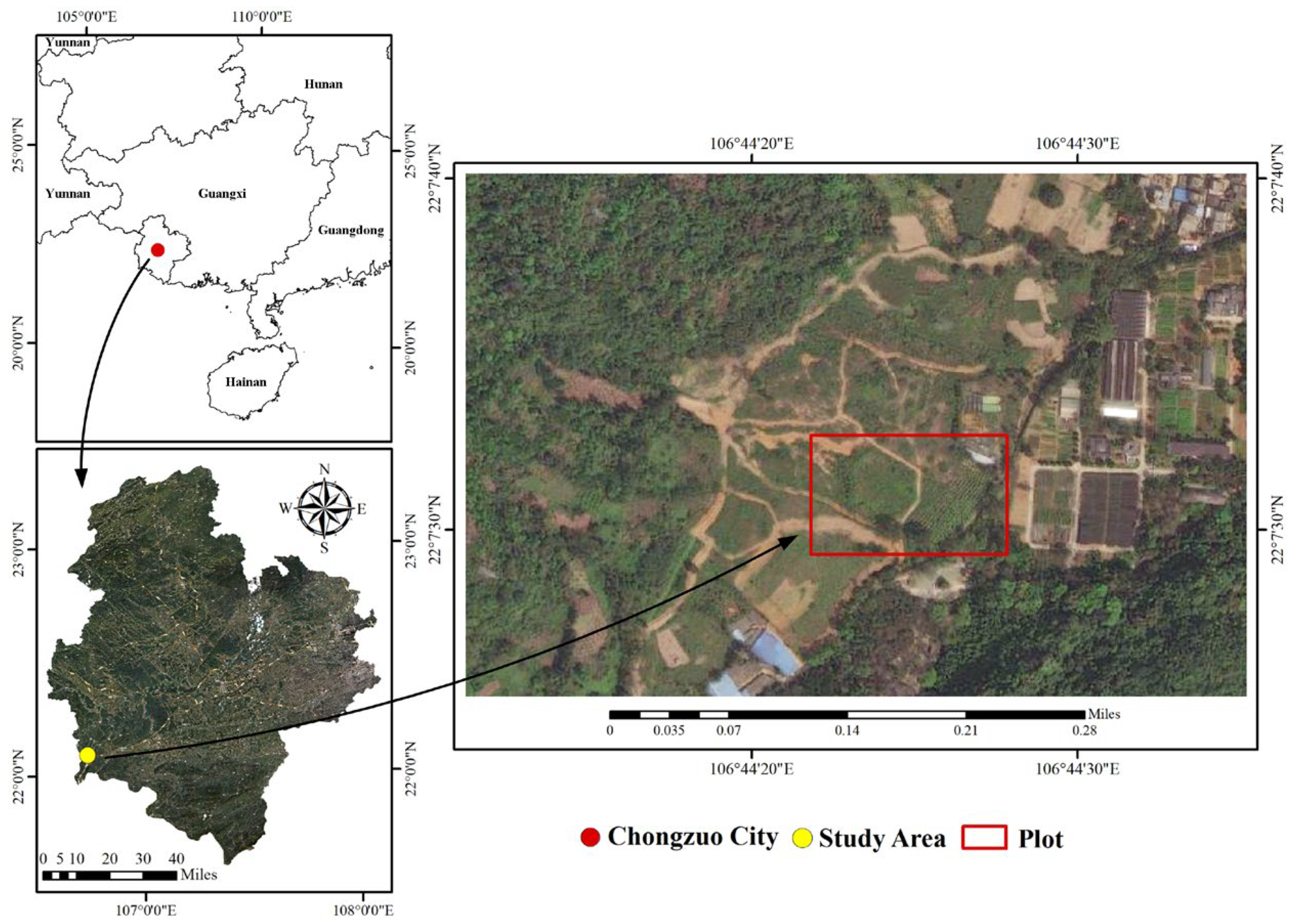

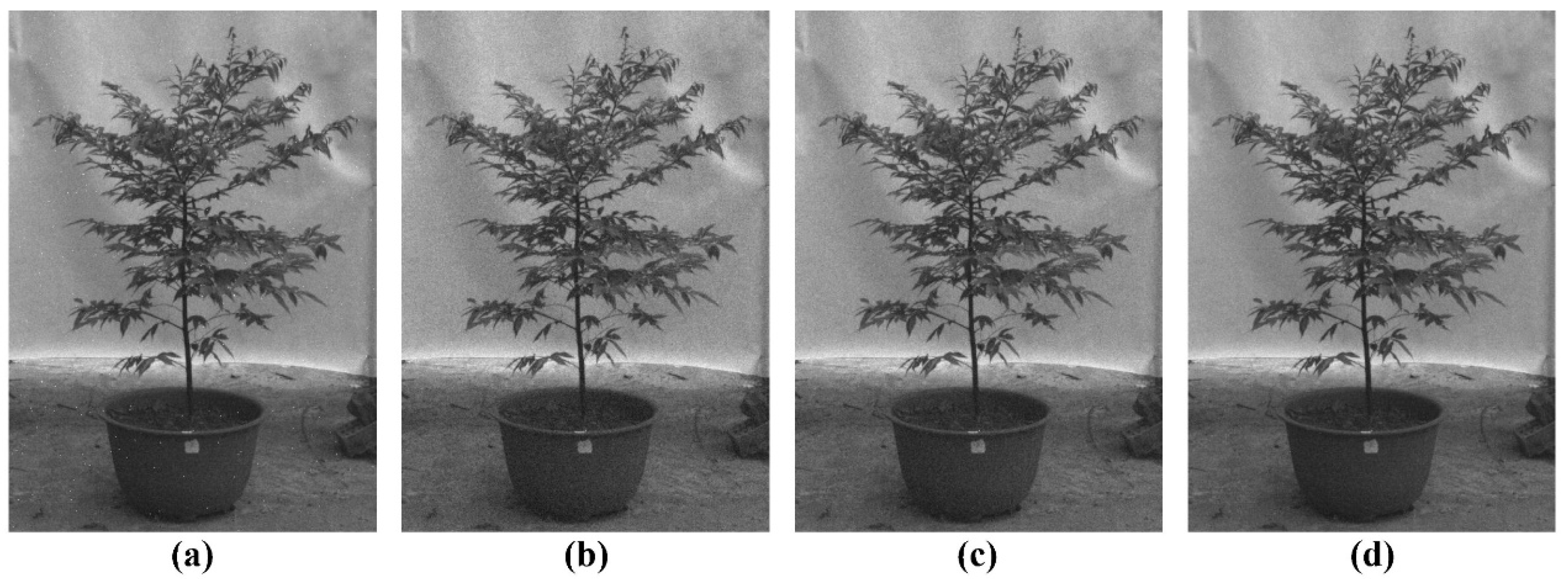

2.1. Experimental Design and Data Acquisition

2.2. Image Denoising

2.3. Camera Calibration

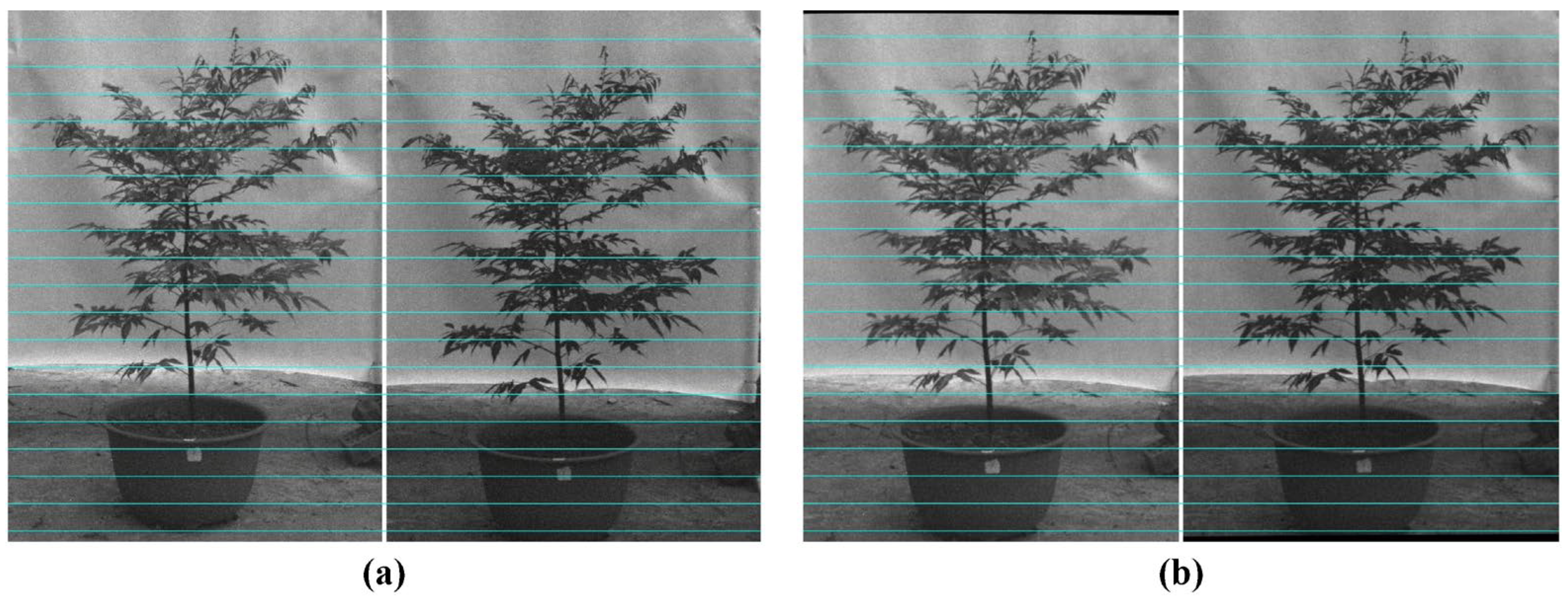

2.4. Epipolar Rectification

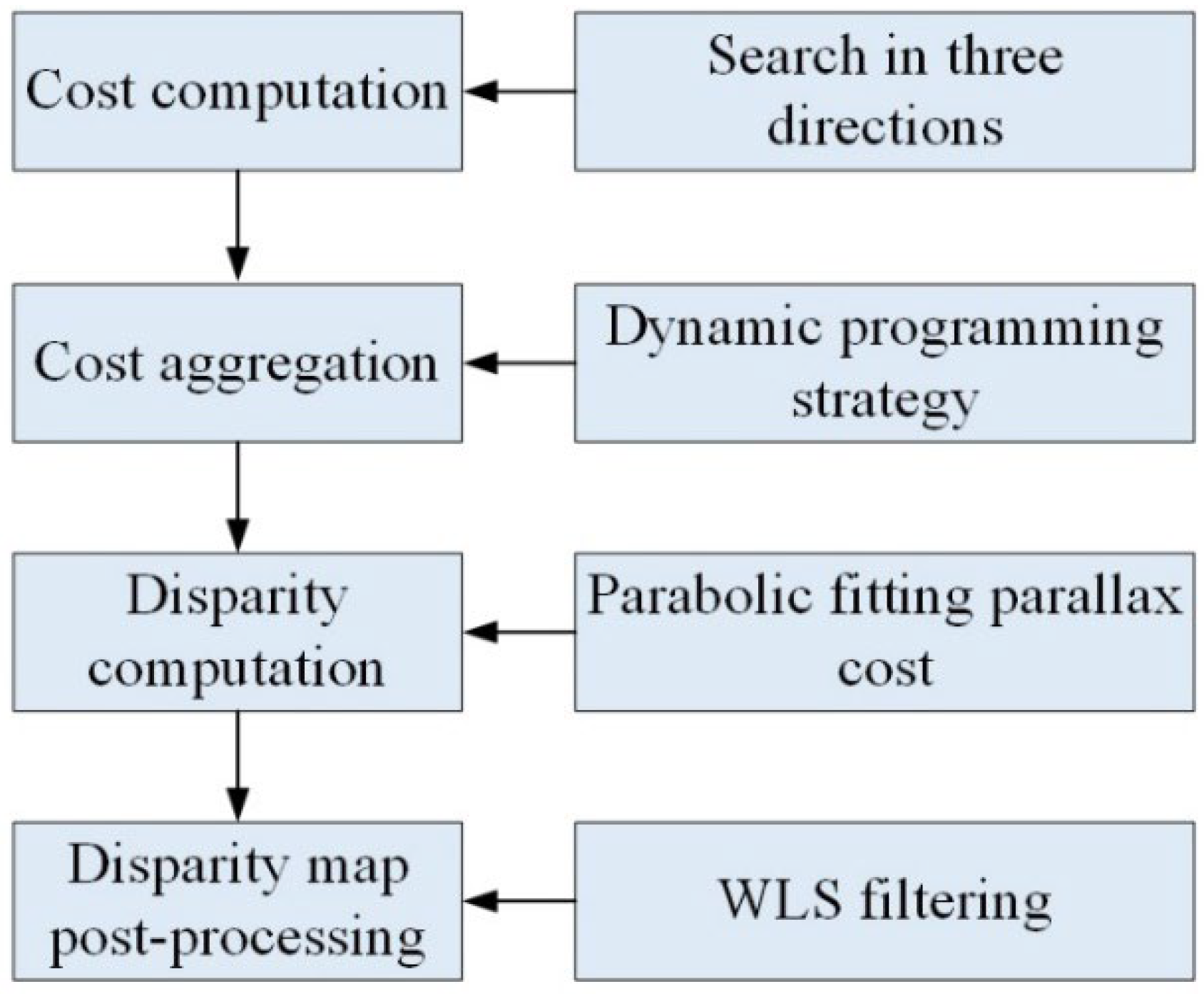

2.5. Stereo Matching

2.5.1. Cost Computation

2.5.2. Cost Aggregation
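
For reference, the cost-aggregation recursion of the baseline SGM algorithm (Hirschmuller, 2007), which MLC-SGM is compared against, can be sketched in NumPy for a single left-to-right path. This is a minimal illustration of standard SGM, not the MLC-SGM variant. The (H, W, D) cost-volume layout, the function names and the penalty values P1 < P2 are assumptions made for the example.

```python
import numpy as np


def aggregate_left_to_right(cost: np.ndarray, p1: float, p2: float) -> np.ndarray:
    """Standard SGM aggregation along the path r = (1, 0), i.e. left to right.

    cost[y, x, d] is the matching cost of pixel (x, y) at disparity d. Computes
    L_r(p, d) = C(p, d) + min(L_r(p - r, d),
                              L_r(p - r, d - 1) + P1,
                              L_r(p - r, d + 1) + P1,
                              min_k L_r(p - r, k) + P2) - min_k L_r(p - r, k)
    """
    h, w, n_disp = cost.shape
    agg = np.empty((h, w, n_disp), dtype=np.float64)
    agg[:, 0, :] = cost[:, 0, :]  # the path starts at the left image border
    for x in range(1, w):
        prev = agg[:, x - 1, :]                     # (H, D) costs of the previous pixel on the path
        prev_min = prev.min(axis=1, keepdims=True)  # (H, 1) min_k L_r(p - r, k)
        # A disparity change of +/-1 costs P1; missing neighbours at the borders are +inf.
        from_lower = np.full_like(prev, np.inf)
        from_lower[:, 1:] = prev[:, :-1] + p1
        from_upper = np.full_like(prev, np.inf)
        from_upper[:, :-1] = prev[:, 1:] + p1
        # Any larger disparity jump costs P2.
        best = np.minimum(np.minimum(prev, from_lower),
                          np.minimum(from_upper, prev_min + p2))
        # Subtracting prev_min keeps values bounded and does not change the argmin over d.
        agg[:, x, :] = cost[:, x, :] + best - prev_min
    return agg


def winner_takes_all(agg: np.ndarray) -> np.ndarray:
    """Disparity with the lowest aggregated cost at every pixel."""
    return agg.argmin(axis=2)
```

For example, with `cost = np.array([[[0, 4, 4], [2, 2, 2]]], dtype=float)`, `p1=1.0` and `p2=3.0`, the second pixel's aggregated costs are `[2, 3, 5]`, so its flat raw cost inherits disparity 0 from its left neighbour. Full SGM sums L_r over several path directions (typically 8 or 16) before the winner-takes-all selection of the disparity.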

2.5.3. Disparity Computation

2.5.4. Disparity Map Post-Processing

- Compute the horizontal and vertical gradients at each pixel of the disparity map;

- Set the smoothing weights according to the gradient magnitude;

- Update the pixel values by iteratively minimizing the weighted least squares (WLS) objective over the whole map.
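
The three post-processing steps above can be sketched as an edge-aware WLS smoother solved with Jacobi iterations. This is a minimal NumPy sketch, not necessarily the authors' exact scheme. The Gaussian edge weight exp(-(Δg/σ)²), the parameters `lam`, `sigma` and `iters`, and the use of Jacobi iteration as the solver are all assumptions made for illustration.

```python
import numpy as np


def wls_refine(disp: np.ndarray, lam: float = 5.0, sigma: float = 1.0,
               iters: int = 200) -> np.ndarray:
    """Edge-aware weighted-least-squares smoothing of a disparity map g.

    Minimises  sum_p (u_p - g_p)^2 + lam * sum_(p,q) w_pq (u_p - u_q)^2
    over 4-neighbour pairs (p, q), with assumed weights w_pq = exp(-((g_p - g_q) / sigma)^2).
    """
    g = disp.astype(np.float64)
    # Step 1: horizontal and vertical forward-difference gradients of the input map.
    gx = np.diff(g, axis=1)  # (H, W-1)
    gy = np.diff(g, axis=0)  # (H-1, W)
    # Step 2: weights (lambda folded in) fall towards 0 across strong gradients, preserving edges.
    wx = lam * np.exp(-(gx / sigma) ** 2)
    wy = lam * np.exp(-(gy / sigma) ** 2)
    # The diagonal of the normal equations: 1 + sum of the weights of all neighbours.
    den = np.ones_like(g)
    den[:, :-1] += wx
    den[:, 1:] += wx
    den[:-1, :] += wy
    den[1:, :] += wy
    # Step 3: Jacobi iterations for u_p * (1 + sum_q w_pq) = g_p + sum_q w_pq * u_q.
    u = g.copy()
    for _ in range(iters):
        num = g.copy()
        num[:, :-1] += wx * u[:, 1:]   # right neighbour
        num[:, 1:] += wx * u[:, :-1]   # left neighbour
        num[:-1, :] += wy * u[1:, :]   # lower neighbour
        num[1:, :] += wy * u[:-1, :]   # upper neighbour
        u = num / den
    return u
```

Because the system matrix is strictly diagonally dominant, the Jacobi iteration converges. A step edge in disparity gets a near-zero weight and survives, while small noisy variations within a surface are averaged out.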

2.6. Depth Estimation

3. Results

3.1. Comparison of Image Denoising Effect

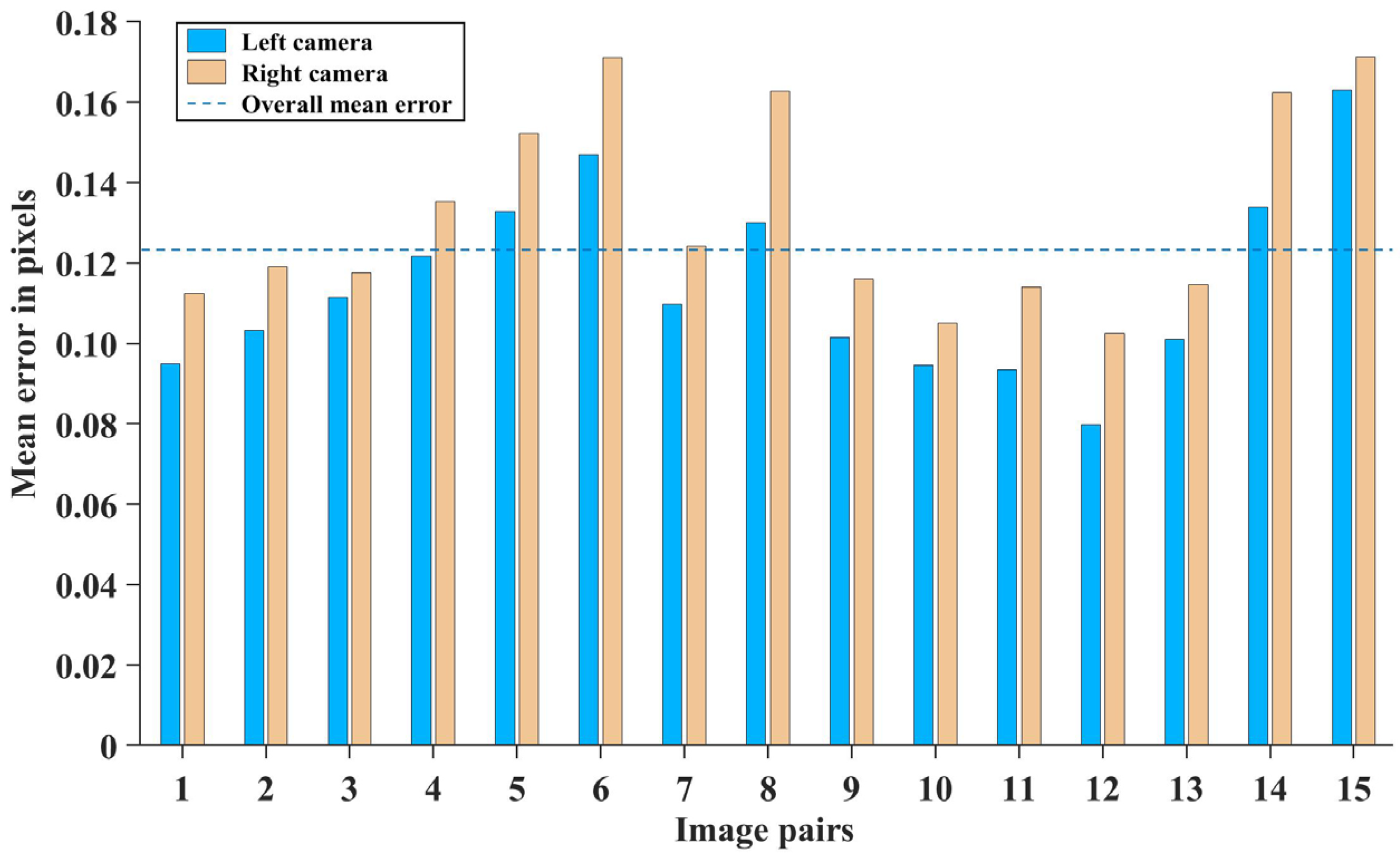

3.2. Camera Parameters

3.3. Correction Effect

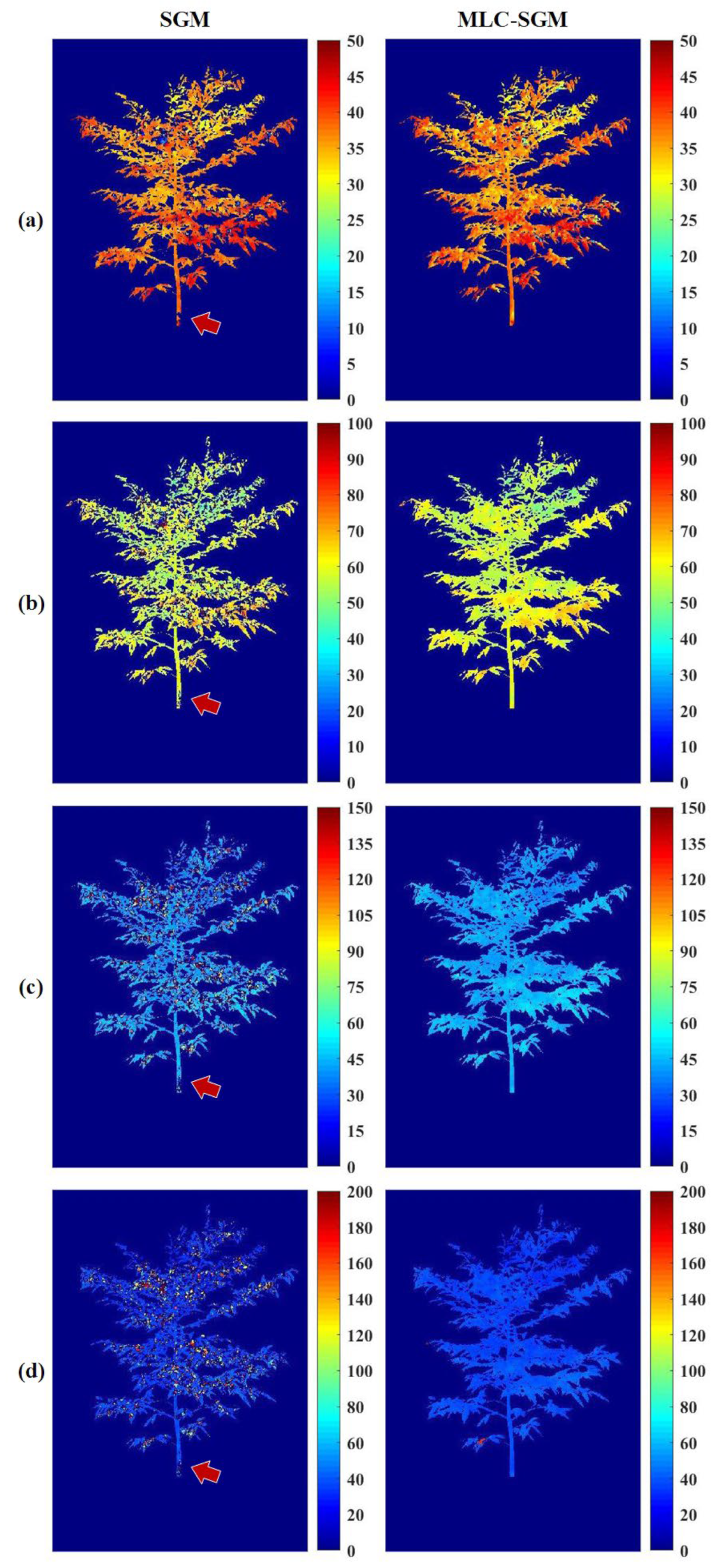

3.4. Performance of the MLC-SGM Algorithm

3.5. Growth Differences of C. hystrix at Different Nitrogen Levels

4. Discussion

4.1. Precise Matching of Plant Images

4.2. Optimization of Tree Fertilization Strategy

5. Conclusions

- The exponential decay threshold function effectively removes random noise from the images of Castanopsis hystrix and outperforms the hard- and soft-threshold functions, giving the highest PSNR with each of the three wavelet bases tested (db4, db8, and coif2).

- The MLC-SGM algorithm produces higher-quality disparity maps than the SGM algorithm and yields lower measurement errors for the growth factors of C. hystrix: across 64 trees, the average relative errors in height, canopy width, and ground diameter were 2.35%, 3.07%, and 2.93%, respectively, compared with 3.32%, 4.26%, and 4.34% for SGM.

- A medium nitrogen application level significantly promotes growth in height, canopy width, and ground diameter of C. hystrix, but this effect weakens under over-fertilization, and the decline is more pronounced for canopy width and ground diameter than for height.
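
The first conclusion compares threshold functions applied to wavelet detail coefficients. Hard and soft thresholding have standard definitions. The exponential decay function is not written out here, so the form below is a hypothetical example of that family: it matches soft thresholding at |w| = T and approaches hard thresholding for large |w|. The decay rate `beta` is an assumption.

```python
import numpy as np


def hard_threshold(w: np.ndarray, t: float) -> np.ndarray:
    """Keep coefficients whose magnitude exceeds t and zero the rest."""
    return np.where(np.abs(w) > t, w, 0.0)


def soft_threshold(w: np.ndarray, t: float) -> np.ndarray:
    """Zero small coefficients and shrink the rest towards 0 by t."""
    return np.sign(w) * np.maximum(np.abs(w) - t, 0.0)


def exp_decay_threshold(w: np.ndarray, t: float, beta: float = 2.0) -> np.ndarray:
    """Hypothetical exponential-decay threshold (illustrative form, not the authors').

    For |w| > t the shrinkage t * exp(-beta * (|w| - t)) starts at t (continuous at
    |w| = t, like soft thresholding) and decays to 0 (no bias on large coefficients,
    like hard thresholding).
    """
    excess = np.maximum(np.abs(w) - t, 0.0)  # clipping avoids overflow in exp for |w| < t
    shrunk = np.sign(w) * (np.abs(w) - t * np.exp(-beta * excess))
    return np.where(np.abs(w) > t, shrunk, 0.0)
```

With `w = np.array([-3.0, -0.5, 0.0, 0.5, 1.0, 3.0])` and `t = 1.0`, hard thresholding keeps ±3, soft thresholding gives ±2, and the exponential-decay form gives about ±2.98. In a denoising pipeline, such a function would be applied to the detail coefficients of the wavelet decomposition (for example db4, db8, or coif2) before reconstruction.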

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Method | db4 PSNR (dB) | db8 PSNR (dB) | coif2 PSNR (dB) |
|---|---|---|---|
| Hard threshold | 29.01 | 26.96 | 25.93 |
| Soft threshold | 29.69 | 27.92 | 26.88 |
| Exponential decay threshold | 30.56 | 29.85 | 28.16 |
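
The PSNR values above follow the usual definition PSNR = 10·log10(MAX² / MSE). Higher values mean the denoised image is closer to the reference. The minimal sketch below assumes 8-bit images (MAX = 255) and a noise-free reference image to compare against.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between a reference and a test image."""
    diff = reference.astype(np.float64) - test.astype(np.float64)  # avoid uint8 wrap-around
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

As a sanity check, an 8-bit image that is off by one grey level everywhere has MSE = 1, so its PSNR is 20·log10(255), about 48.13 dB.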

| Camera Parameters | Left Camera | Standard Error | Right Camera | Standard Error |
|---|---|---|---|---|
| Principal point coordinates | | | | |
| Focal length | | | | |
| Radial distortion coefficient | | | | |
| Tangential distortion coefficient | | | | |
| Rotation matrix | | | | |
| Translation vector | | | | |
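
Of the calibrated parameters, the focal length and the stereo baseline (the length of the translation between the two cameras) are what convert disparity into depth. For a rectified pinhole pair, Z = f·B/d, X = (u - c_x)·Z/f and Y = (v - c_y)·Z/f. The sketch assumes rectified, undistorted images, a focal length in pixels shared by both views, and the principal point (c_x, c_y) of the rectified left image. The numbers in the usage note are made up and are not the calibrated values of this study.

```python
import numpy as np


def disparity_to_points(disp: np.ndarray, f_px: float, baseline: float,
                        cx: float, cy: float) -> np.ndarray:
    """Back-project a rectified disparity map to an (H, W, 3) array of X, Y, Z.

    X, Y, Z are in the unit of the baseline. Pixels with disparity <= 0 get NaN.
    """
    h, w = disp.shape
    v, u = np.mgrid[0:h, 0:w].astype(np.float64)  # row (v) and column (u) indices
    z = np.full((h, w), np.nan)
    valid = disp > 0
    z[valid] = f_px * baseline / disp[valid]  # depth by triangulation
    x = (u - cx) * z / f_px
    y = (v - cy) * z / f_px
    return np.stack([x, y, z], axis=-1)
```

For instance, with f = 1000 px and B = 0.12 m, a disparity of 40 px corresponds to a depth of 3 m and 20 px to 6 m. Because depth varies as 1/d, a one-pixel disparity error costs more accuracy for distant trees than for near ones.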

| Growth Factor | SGM Relative Error Range (%) | SGM Average Relative Error (%) | MLC-SGM Relative Error Range (%) | MLC-SGM Average Relative Error (%) |
|---|---|---|---|---|
| Tree height | 2.51–4.84 | 3.32 | 1.85–3.91 | 2.35 |
| Canopy width | 3.65–6.98 | 4.26 | 1.89–4.64 | 3.07 |
| Ground diameter | 2.89–5.79 | 4.34 | 2.21–4.53 | 2.93 |
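
The range and average columns can be derived from per-tree errors. The minimal sketch below assumes that each tree's relative error is |measured - reference| / reference × 100, with a manually measured value as the reference. It also assumes that the range is the minimum and maximum over the trees and that the average is the arithmetic mean. The three-tree example is made up.

```python
import numpy as np


def relative_errors(measured, reference) -> np.ndarray:
    """Per-tree relative error in percent."""
    measured = np.asarray(measured, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    return np.abs(measured - reference) / reference * 100.0


def summarize(measured, reference) -> tuple[float, float, float]:
    """(minimum, maximum, mean) relative error in percent, mirroring the table columns."""
    err = relative_errors(measured, reference)
    return float(err.min()), float(err.max()), float(err.mean())
```

For example, measured heights of 102, 97, and 210 cm against reference values of 100, 100, and 200 cm give errors of 2%, 3%, and 5%. That is a range of 2–5% and an average of about 3.33%.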

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, P.; Wang, X.; Chen, X.; Shi, M. Analysis of the Effects of Different Nitrogen Application Levels on the Growth of Castanopsis hystrix from the Perspective of Three-Dimensional Reconstruction. Forests 2024, 15, 1558. https://doi.org/10.3390/f15091558

Wang P, Wang X, Chen X, Shi M. Analysis of the Effects of Different Nitrogen Application Levels on the Growth of Castanopsis hystrix from the Perspective of Three-Dimensional Reconstruction. Forests. 2024; 15(9):1558. https://doi.org/10.3390/f15091558

Chicago/Turabian Style: Wang, Peng, Xuefeng Wang, Xingjing Chen, and Mengmeng Shi. 2024. "Analysis of the Effects of Different Nitrogen Application Levels on the Growth of Castanopsis hystrix from the Perspective of Three-Dimensional Reconstruction" Forests 15, no. 9: 1558. https://doi.org/10.3390/f15091558

APA Style: Wang, P., Wang, X., Chen, X., & Shi, M. (2024). Analysis of the Effects of Different Nitrogen Application Levels on the Growth of Castanopsis hystrix from the Perspective of Three-Dimensional Reconstruction. Forests, 15(9), 1558. https://doi.org/10.3390/f15091558